Building a Node.js/TypeScript REST API, Part 2: Models, Middleware, and Services

The Express.js REST API journey continues! Move from models to full DAOs and DTOs, validate requests with middleware, separate services from controllers, and prepare for a real database.

Marcos has 17+ years in IT and development. His passions include REST architecture, Agile development methodology, and JS.

In the first article of our REST API series, we covered how to use npm to create a back end from scratch, add dependencies like TypeScript, use the debug module, build an Express.js project structure, and log runtime events flexibly with Winston. If you’re comfortable with those concepts already, simply clone the project repository, switch to the toptal-article-01 branch with git checkout, and read on.

REST API Services, Middleware, Controllers, and Models

As promised, we’ll now get into details about these modules:

- Services that make our code cleaner by encapsulating business logic operations into functions that middleware and controllers can call.

- Middleware that will validate prerequisite conditions before Express.js calls the appropriate controller function.

- Controllers that use services to process the request before finally sending a response to the requester.

- Models that describe our data and aid in compile-time checks.

We will also add a very rudimentary database that is in no way suitable for production. (Its only purpose is to make this tutorial easier to follow, paving the way for our next article to delve into database connection and integration with MongoDB and Mongoose.)

Hands-on: First Steps with DAOs, DTOs, and Our Temporary Database

For this part of our tutorial, our database won’t even use files. It will simply keep user data in an array, which means the data evaporates whenever we quit Node.js. It will support only the most basic create, read, update, and delete (CRUD) operations.

We are going to use two concepts here:

- Data access objects (DAOs)

- Data transfer objects (DTOs)

That one-letter difference between acronyms is essential: A DAO is responsible for connecting to a defined database and performing CRUD operations; a DTO is an object that holds the raw data that the DAO will send to—and receive from—the database.

In other words, DTOs are objects that conform to data model types, and DAOs are the services that use them.

While DTOs can get more complicated—representing nested database entities, for example—in this article, a single DTO instance will correspond to a specific action on a single database row.

Why DTOs?

Using DTOs to have our TypeScript objects conform to our data models helps maintain architectural consistency, as we’ll see in the section on services below. But there’s a crucial caveat: Neither DTOs nor TypeScript itself promise any sort of automatic user input validation, since that would have to occur at runtime. When our code receives user input at an endpoint in our API, that input may:

- Have extra fields

- Be missing required fields (i.e., those not suffixed with ?)

- Have fields in which the data is not the type we specified in our model using TypeScript

TypeScript (and the JavaScript it gets transpiled to) won’t magically check this for us, so it is important to not forget these validations, especially when opening your API to the public. Packages like ajv can help with this but normally work by defining models in a library-specific schema object rather than native TypeScript. (Mongoose, discussed in the next article, will play a similar role in this project.)
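To make the three failure modes above concrete, here is a minimal sketch of a hand-written runtime check. The SignupInput model, the allowedSignupKeys list, and the isSignupInput function are hypothetical names used only for illustration; they are not part of this project, and a schema library would normally replace this kind of code.

```typescript
// Hypothetical model for illustration; the article's real DTOs are defined in the next section.
interface SignupInput {
    email: string;
    password: string;
    firstName?: string;
}

const allowedSignupKeys = ['email', 'password', 'firstName'];

// Hand-written runtime check covering the three failure modes listed above.
function isSignupInput(input: unknown): input is SignupInput {
    if (typeof input !== 'object' || input === null) {
        return false;
    }
    const record = input as Record<string, unknown>;

    // 1. Reject extra fields.
    for (const key of Object.keys(record)) {
        if (allowedSignupKeys.indexOf(key) === -1) {
            return false;
        }
    }
    // 2. Required fields must be present, and 3. they must have the right type.
    if (typeof record['email'] !== 'string' || typeof record['password'] !== 'string') {
        return false;
    }
    // Optional fields may be absent, but must not have the wrong type.
    if (record['firstName'] !== undefined && typeof record['firstName'] !== 'string') {
        return false;
    }
    return true;
}
```

Because the function is a type guard, code that runs after a successful check can treat the value as SignupInput without a cast.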

You might be thinking, “Is it really best to use both DAOs and DTOs, instead of something simpler?” Enterprise developer Gunther Popp offers an answer; you’ll want to avoid DTOs in most smaller real-world Express.js/TypeScript projects unless you can reasonably expect to scale in the medium term.

But even if you aren’t about to use them in production, this example project is a worthwhile opportunity on the road to mastering TypeScript API architecture. It’s a great way to practice leveraging TypeScript types in additional ways and working with DTOs to see how they compare to a more basic approach when adding components and models.

Our User REST API Model at the TypeScript Level

First we will define three DTOs for our user. Let’s create a folder called dto inside the users folder, and create a file there called create.user.dto.ts containing the following:

    export interface CreateUserDto {
        id: string;
        email: string;
        password: string;
        firstName?: string;
        lastName?: string;
        permissionLevel?: number;
    }

We’re saying that every time we create a user, regardless of the database, it should have an ID, password, and email, and optionally a first name, last name, and permission level. These requirements can change based on the business requirements of a given project.

For PUT requests, we want to update the entire object, so our optional fields are now required. In the same folder, create a file called put.user.dto.ts with this code:

    export interface PutUserDto {
        id: string;
        email: string;
        password: string;
        firstName: string;
        lastName: string;
        permissionLevel: number;
    }

For PATCH requests, we can use the Partial feature from TypeScript, which creates a new type by copying another type and making all its fields optional. That way, the file patch.user.dto.ts need only contain the following code:

    import { PutUserDto } from './put.user.dto';

    export interface PatchUserDto extends Partial<PutUserDto> {}
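As a quick illustration of what Partial allows and still forbids, consider the sketch below. It repeats PutUserDto so that it runs on its own, uses a type alias that has the same shape as the interface above, and adds a hypothetical patchedFields helper purely for demonstration.

```typescript
interface PutUserDto {
    id: string;
    email: string;
    password: string;
    firstName: string;
    lastName: string;
    permissionLevel: number;
}

// Same shape as the PatchUserDto interface above: every field becomes optional.
type PatchUserDto = Partial<PutUserDto>;

// Both of these compile, because no field is required anymore.
const renameOnly: PatchUserDto = { lastName: 'Faraco' };
const emptyPatch: PatchUserDto = {};

// Field types are still enforced; uncommenting the next line is a compile-time error.
// const badPatch: PatchUserDto = { permissionLevel: 'eight' };

// Hypothetical helper: lists the fields a patch actually carries.
function patchedFields(patch: PatchUserDto): string[] {
    return Object.keys(patch);
}
```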

Now, let’s create the in-memory temporary database. Let’s create a folder called daos inside the users folder, and add a file named users.dao.ts.

First, we want to import the DTOs that we created:

    import { CreateUserDto } from '../dto/create.user.dto';
    import { PatchUserDto } from '../dto/patch.user.dto';
    import { PutUserDto } from '../dto/put.user.dto';

Now, to handle our user IDs, let’s add the shortid library (using the terminal):

    npm i shortid
    npm i --save-dev @types/shortid

Back in users.dao.ts, we’ll import shortid:

    import shortid from 'shortid';
    import debug from 'debug';

    const log: debug.IDebugger = debug('app:in-memory-dao');

We can now create a class called UsersDao, which will look like this:

    class UsersDao {
        users: Array<CreateUserDto> = [];

        constructor() {
            log('Created new instance of UsersDao');
        }
    }

    export default new UsersDao();

Using the singleton pattern, this class will always provide the same instance—and, critically, the same users array—when we import it in other files. That’s because Node.js caches this file wherever it’s imported, and all the imports happen on startup. That is, any file referring to users.dao.ts will be handed a reference to the same new UsersDao() that gets exported the first time Node.js processes this file.

We will see this working when we use this class further on in this article, and use this common TypeScript/Express.js pattern for most classes throughout the project.

Note: An oft-cited disadvantage to singletons is that they’re hard to write unit tests for. In the case of many of our classes, this disadvantage won’t apply, since there aren’t any class member variables that would need resetting. But for those where it would, we leave it as an exercise for the reader to consider approaching this problem with the use of dependency injection.
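For readers who want a starting point for that exercise, here is one possible sketch of constructor-based dependency injection. The names SketchUser, UserStore, InMemoryUserStore, InjectedUsersService, and makeTestService are all hypothetical and much smaller than the real classes in this project; the point is only that each test can get a service backed by a fresh, empty store.

```typescript
// Hypothetical, trimmed-down stand-ins for the article's DTO and DAO.
interface SketchUser {
    id: string;
    email: string;
}

interface UserStore {
    addUser(user: SketchUser): Promise<string>;
    getUsers(): Promise<SketchUser[]>;
}

class InMemoryUserStore implements UserStore {
    private users: SketchUser[] = [];

    async addUser(user: SketchUser) {
        this.users.push(user);
        return user.id;
    }

    async getUsers() {
        return this.users;
    }
}

// The service receives its store instead of importing a shared singleton.
class InjectedUsersService {
    constructor(private store: UserStore) {}

    async create(user: SketchUser) {
        return this.store.addUser(user);
    }

    async list() {
        return this.store.getUsers();
    }
}

// Each test can build a service around its own empty store.
function makeTestService() {
    return new InjectedUsersService(new InMemoryUserStore());
}
```

The trade-off is that something has to wire the pieces together at startup, which the exported singletons in this article do implicitly.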

Now we are going to add the basic CRUD operations to the class as functions. The create function will look like this:

    async addUser(user: CreateUserDto) {
        user.id = shortid.generate();
        this.users.push(user);
        return user.id;
    }

Read will come in two flavors, “read all resources” and “read one by ID.” They’re coded like this:

    async getUsers() {
        return this.users;
    }

    async getUserById(userId: string) {
        return this.users.find((user: { id: string }) => user.id === userId);
    }

Likewise, update will mean either overwriting the complete object (as a PUT) or just parts of the object (as a PATCH):

    async putUserById(userId: string, user: PutUserDto) {
        const objIndex = this.users.findIndex(
            (obj: { id: string }) => obj.id === userId
        );
        this.users.splice(objIndex, 1, user);
        return `${user.id} updated via put`;
    }

    async patchUserById(userId: string, user: PatchUserDto) {
        const objIndex = this.users.findIndex(
            (obj: { id: string }) => obj.id === userId
        );
        let currentUser = this.users[objIndex];
        const allowedPatchFields = [
            'password',
            'firstName',
            'lastName',
            'permissionLevel',
        ];
        for (let field of allowedPatchFields) {
            if (field in user) {
                // @ts-ignore
                currentUser[field] = user[field];
            }
        }
        this.users.splice(objIndex, 1, currentUser);
        return `${user.id} patched`;
    }

As mentioned earlier, despite the DTO types declared in these function signatures, TypeScript provides no runtime type checking. This means that:

- putUserById() has a bug. It will let API consumers store values for fields that are not part of the model defined by our DTO.

- patchUserById() depends on a duplicate list of field names that must be kept in sync with the model. Without this, it would have to use the object being updated for this list. That would mean it would silently ignore values for fields that are part of the DTO-defined model but hadn’t been saved to before for this particular object instance.

But both these scenarios will be handled correctly at the database level in the next article.
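In the meantime, one way to soften both issues in the in-memory version is to copy only known fields before storing anything. The sketch below is an assumption-laden illustration, not the approach this series takes: pickPatchableFields and patchableFields are hypothetical names, and the helper filters keys only, so value types would still need separate validation.

```typescript
interface PutUserDto {
    id: string;
    email: string;
    password: string;
    firstName: string;
    lastName: string;
    permissionLevel: number;
}

// Still a hand-maintained list, but the compiler now rejects names that are not in the model.
const patchableFields: Array<keyof PutUserDto> = [
    'password',
    'firstName',
    'lastName',
    'permissionLevel',
];

// Copies only the listed fields, so unknown or protected keys never reach storage.
// Note: this filters keys only; it does not check the types of the values.
function pickPatchableFields(input: Record<string, unknown>): Record<string, unknown> {
    const result: Record<string, unknown> = {};
    for (const field of patchableFields) {
        if (field in input) {
            result[field] = input[field];
        }
    }
    return result;
}
```

Typing the list as Array<keyof PutUserDto> means a typo such as 'lastname' fails at compile time, which removes part of the risk of the list drifting away from the model.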

The last operation, to delete a resource, will look like this:

    async removeUserById(userId: string) {
        const objIndex = this.users.findIndex(
            (obj: { id: string }) => obj.id === userId
        );
        this.users.splice(objIndex, 1);
        return `${userId} removed`;
    }
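One detail of this pattern is worth knowing: findIndex returns -1 when nothing matches, and splice(-1, 1) removes the last element of the array. The routes later in this article check that the user exists before the DAO is called, so the function above is safe in that flow. Still, a defensive variant could look like the following sketch, in which StoredUser and removeById are hypothetical names.

```typescript
interface StoredUser {
    id: string;
}

// Removes the matching user and reports whether anything was removed.
function removeById(users: StoredUser[], userId: string): boolean {
    const objIndex = users.findIndex((obj) => obj.id === userId);
    if (objIndex === -1) {
        // Without this guard, splice(-1, 1) would delete the last element.
        return false;
    }
    users.splice(objIndex, 1);
    return true;
}
```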

As a bonus, since a precondition for creating a user is checking that the email address isn’t already in use, let’s add a “get user by email” function now:

    async getUserByEmail(email: string) {
        const objIndex = this.users.findIndex(
            (obj: { email: string }) => obj.email === email
        );
        let currentUser = this.users[objIndex];
        if (currentUser) {
            return currentUser;
        } else {
            return null;
        }
    }

Note: In a real-world scenario, you will probably connect to a database using a preexisting library, such as Mongoose or Sequelize, which will abstract all the basic operations that you might need. Because of this, we are not going into the details of the functions implemented above.

Our REST API Services Layer

Now that we have a basic, in-memory DAO, we can create a service that will call the CRUD functions. Since CRUD functions are something that every service that will connect to a database will need to have, we are going to create a CRUD interface that contains the methods we want to implement every time we want to implement a new service.

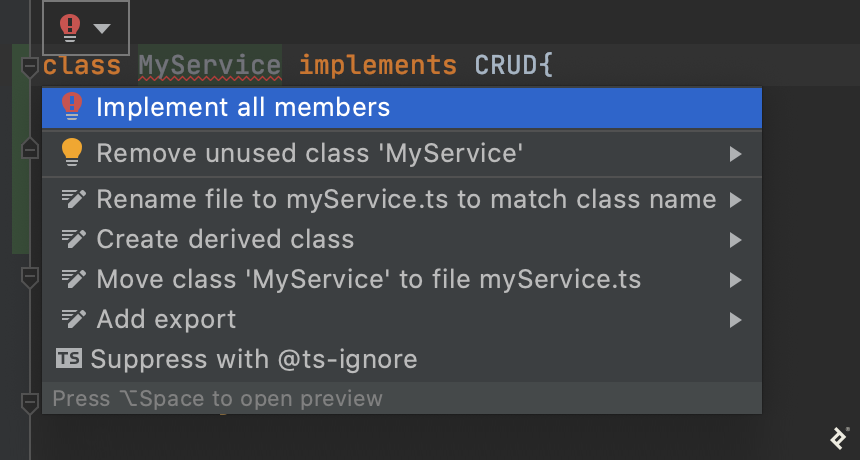

Nowadays, the IDEs that we work with have code generation features to add the functions we are implementing, reducing the amount of repetitive code we need to write.

A quick example using the WebStorm IDE: The IDE highlights the MyService class name and suggests several options. Among them, the “Implement all members” option instantly scaffolds the functions needed to conform to the CRUD interface.

That all said, let’s first create our TypeScript interface, called CRUD. In our common folder, let’s create a folder called interfaces and add crud.interface.ts with the following:

    export interface CRUD {
        list: (limit: number, page: number) => Promise<any>;
        create: (resource: any) => Promise<any>;
        putById: (id: string, resource: any) => Promise<string>;
        readById: (id: string) => Promise<any>;
        deleteById: (id: string) => Promise<string>;
        patchById: (id: string, resource: any) => Promise<string>;
    }

With that done, let’s create a services folder within the users folder and add the users.service.ts file there, containing:

    import UsersDao from '../daos/users.dao';
    import { CRUD } from '../../common/interfaces/crud.interface';
    import { CreateUserDto } from '../dto/create.user.dto';
    import { PutUserDto } from '../dto/put.user.dto';
    import { PatchUserDto } from '../dto/patch.user.dto';

    class UsersService implements CRUD {
        async create(resource: CreateUserDto) {
            return UsersDao.addUser(resource);
        }

        async deleteById(id: string) {
            return UsersDao.removeUserById(id);
        }

        async list(limit: number, page: number) {
            return UsersDao.getUsers();
        }

        async patchById(id: string, resource: PatchUserDto) {
            return UsersDao.patchUserById(id, resource);
        }

        async readById(id: string) {
            return UsersDao.getUserById(id);
        }

        async putById(id: string, resource: PutUserDto) {
            return UsersDao.putUserById(id, resource);
        }

        async getUserByEmail(email: string) {
            return UsersDao.getUserByEmail(email);
        }
    }

    export default new UsersService();

Having imported our in-memory DAO, our interface dependency, and the TypeScript type of each of our DTOs, we implement UsersService as a service singleton, the same pattern we used with our DAO.

All the CRUD functions now just call the respective functions of UsersDao. When it comes time to replace the DAO, we won’t have to make changes anywhere else in the project, except for some tweaks to this file where the DAO functions are called, as we’ll see in Part 3.

For example, we won’t have to track down every call to list() and check its context before replacing it. That’s the advantage of having this layer of separation, at the cost of the small amount of initial boilerplate you see above.
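Note that list() accepts limit and page, but the in-memory DAO ignores both for now. If you want to experiment before a real database arrives, a minimal sketch of how they could be applied to an array follows. The paginate function is a hypothetical helper, and zero-based page numbers are an assumption (the controller later in this article calls list(100, 0), which is at least consistent with it).

```typescript
// Returns one page of items; pages are assumed to be zero-based.
function paginate<T>(items: T[], limit: number, page: number): T[] {
    const start = page * limit;
    // slice() returns an empty array when start is past the end.
    return items.slice(start, start + limit);
}
```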

Async/Await and Node.js

Our use of async for the service functions may seem pointless. For now, it is: All of these functions just immediately return their values, without any internal use of Promises or await. This is solely to prepare our codebase for services that will use async. Likewise, below, you’ll see that all calls to these functions use await.

By the end of this article, you’ll again have a runnable project to experiment with. That will be an excellent moment to try adding various types of errors in different places in the codebase, and seeing what happens during compilation and testing. Errors in an async context in particular may not behave quite as you’d expect. It’s worth digging in and exploring various solutions, which are beyond the scope of this article.
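As one example of such a surprise: in Express 4, a promise rejected inside an async handler is not passed to the error-handling middleware automatically. A common workaround is a small wrapper that forwards the rejection to next(). The sketch below uses minimal stand-in types instead of the real Express ones so that it runs without dependencies; SketchNext, AsyncHandler, and forwardAsyncErrors are hypothetical names, and this is only one of several possible solutions.

```typescript
// Minimal stand-ins for the Express types, so the sketch runs without dependencies.
type SketchNext = (err?: unknown) => void;
type AsyncHandler<Req, Res> = (req: Req, res: Res, next: SketchNext) => Promise<void>;

// Wraps an async handler so that a rejected promise is forwarded to next(err)
// instead of becoming an unhandled rejection.
function forwardAsyncErrors<Req, Res>(handler: AsyncHandler<Req, Res>) {
    return (req: Req, res: Res, next: SketchNext): Promise<void> =>
        handler(req, res, next).catch((err: unknown) => next(err));
}
```

With real Express types, the same idea would wrap each controller or middleware function where the routes are configured.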

Now, having our DAO and services ready, let’s go back to the user controller.

Building Our REST API Controller

As we said above, the idea behind controllers is to separate the route configuration from the code that finally processes a route request. That means that all validations should be done before our request reaches the controller. The controller only needs to know what to do with the actual request because if the request made it that far, then we know it turned out to be valid. The controller will then call the respective service of each request that it will be handling.

Before we start, we’ll need to install a library for securely hashing the user password:

npm i argon2

Let’s start by creating a folder called controllers inside the users folder and creating a file called users.controller.ts in it:

    // we import express to add types to the request/response objects from our controller functions
    import express from 'express';

    // we import our newly created user services
    import usersService from '../services/users.service';

    // we import the argon2 library for password hashing
    import argon2 from 'argon2';

    // we use debug with a custom context as described in Part 1
    import debug from 'debug';

    const log: debug.IDebugger = debug('app:users-controller');

    class UsersController {
        async listUsers(req: express.Request, res: express.Response) {
            const users = await usersService.list(100, 0);
            res.status(200).send(users);
        }

        async getUserById(req: express.Request, res: express.Response) {
            const user = await usersService.readById(req.body.id);
            res.status(200).send(user);
        }

        async createUser(req: express.Request, res: express.Response) {
            req.body.password = await argon2.hash(req.body.password);
            const userId = await usersService.create(req.body);
            res.status(201).send({ id: userId });
        }

        async patch(req: express.Request, res: express.Response) {
            if (req.body.password) {
                req.body.password = await argon2.hash(req.body.password);
            }
            log(await usersService.patchById(req.body.id, req.body));
            res.status(204).send();
        }

        async put(req: express.Request, res: express.Response) {
            req.body.password = await argon2.hash(req.body.password);
            log(await usersService.putById(req.body.id, req.body));
            res.status(204).send();
        }

        async removeUser(req: express.Request, res: express.Response) {
            log(await usersService.deleteById(req.body.id));
            res.status(204).send();
        }
    }

    export default new UsersController();

Note: The lines above sending back nothing with an HTTP 204 No Content response are in line with RFC 7231 on the topic.

With our user controller singleton done, we’re ready to code the other module that depends on our example REST API object model and service: our user middleware.

Node.js REST Middleware with Express.js

What can we do with Express.js middleware? Validations are a great fit, for one. Let’s add some basic validations to act as gatekeepers for requests before they make it to our user controller:

- Ensure the presence of user fields such as email and password as required to create or update a user

- Ensure a given email isn’t in use already

- Check that we’re not changing the email field after creation (since we’re using that as the primary user-facing ID for simplicity)

- Validate whether a given user exists

To make these validations work with Express.js, we will need to translate them into functions that follow the Express.js pattern of flow control using next(), as described in the previous article. We’ll need a new file, users/middleware/users.middleware.ts:

    import express from 'express';
    import userService from '../services/users.service';
    import debug from 'debug';

    const log: debug.IDebugger = debug('app:users-middleware');

    class UsersMiddleware {

    }

    export default new UsersMiddleware();

With the familiar singleton boilerplate out of the way, let’s add some of our middleware functions to the class body:

    async validateRequiredUserBodyFields(
        req: express.Request,
        res: express.Response,
        next: express.NextFunction
    ) {
        if (req.body && req.body.email && req.body.password) {
            next();
        } else {
            res.status(400).send({
                error: `Missing required fields email and password`,
            });
        }
    }

    async validateSameEmailDoesntExist(
        req: express.Request,
        res: express.Response,
        next: express.NextFunction
    ) {
        const user = await userService.getUserByEmail(req.body.email);
        if (user) {
            res.status(400).send({ error: `User email already exists` });
        } else {
            next();
        }
    }

    async validateSameEmailBelongToSameUser(
        req: express.Request,
        res: express.Response,
        next: express.NextFunction
    ) {
        const user = await userService.getUserByEmail(req.body.email);
        if (user && user.id === req.params.userId) {
            next();
        } else {
            res.status(400).send({ error: `Invalid email` });
        }
    }

    // Here we need to use an arrow function to bind `this` correctly
    validatePatchEmail = async (
        req: express.Request,
        res: express.Response,
        next: express.NextFunction
    ) => {
        if (req.body.email) {
            log('Validating email', req.body.email);

            this.validateSameEmailBelongToSameUser(req, res, next);
        } else {
            next();
        }
    };

    async validateUserExists(
        req: express.Request,
        res: express.Response,
        next: express.NextFunction
    ) {
        const user = await userService.readById(req.params.userId);
        if (user) {
            next();
        } else {
            res.status(404).send({
                error: `User ${req.params.userId} not found`,
            });
        }
    }
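The comment on validatePatchEmail about binding `this` deserves a short illustration. When a method is passed as a callback, as happens when a route stores a reference to it, the function is detached from its instance. The following sketch uses a hypothetical BindingDemo class and callSafely helper to contrast a regular method with an arrow function property.

```typescript
class BindingDemo {
    private label = 'demo';

    // A regular method: `this` depends on how the function is called.
    regularMethod(): string {
        return this.label;
    }

    // An arrow function property: `this` is captured when the instance is created.
    arrowProperty = (): string => {
        return this.label;
    };
}

const demo = new BindingDemo();

// Storing the function references detaches them from the instance.
const detachedRegular = demo.regularMethod;
const detachedArrow = demo.arrowProperty;

// Calls fn and reports a marker string if it throws.
function callSafely(fn: () => string): string {
    try {
        return fn();
    } catch (err) {
        // In strict-mode code such as class bodies, `this` is undefined for a
        // detached regular method, so reading this.label throws a TypeError.
        return 'this was lost';
    }
}
```

Here callSafely(detachedArrow) still returns 'demo', while the detached regular method no longer reaches the instance. The other middleware functions don’t reference `this`, which is why they work as regular methods.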

To make it easy to handle further requests about a newly added user, we are going to add a helper function that will extract the userId from the request parameters (which come from the request URL itself) and add it to the request body, where the rest of the user data resides.

The idea here is to be able to simply use the full body request when we would like to update user information, without worrying about getting the ID from the parameters every time. Instead, it’s taken care of in just one spot, the middleware. The function will look like this:

    async extractUserId(
        req: express.Request,
        res: express.Response,
        next: express.NextFunction
    ) {
        req.body.id = req.params.userId;
        next();
    }

Besides the logic, the main difference between the middleware and the controller is that now we are using the next() function to pass control along a chain of configured functions until it arrives at the final destination, which in our case is the controller.
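To build intuition for how next() passes control along, here is a toy chain runner in plain TypeScript. It is not how Express.js is implemented internally, and every name in it (ChainRequest, ChainResponse, ChainHandler, runChain, and the two handlers) is hypothetical; it only mimics the observable behavior that a handler continues the chain by calling next() and ends it by not calling next().

```typescript
interface ChainRequest {
    body: { email?: string; password?: string };
}

interface ChainResponse {
    statusCode: number;
    payload?: unknown;
}

type ChainHandler = (req: ChainRequest, res: ChainResponse, next: () => void) => void;

// Calls each handler in order; a handler continues the chain only by calling next().
function runChain(handlers: ChainHandler[], req: ChainRequest, res: ChainResponse): void {
    let position = 0;
    const next = (): void => {
        const handler = handlers[position++];
        if (handler) {
            handler(req, res, next);
        }
    };
    next();
}

// Middleware-style handler: validates, then either continues or responds.
const requireEmailAndPassword: ChainHandler = (req, res, next) => {
    if (req.body.email && req.body.password) {
        next();
    } else {
        // Not calling next() ends the chain here.
        res.statusCode = 400;
        res.payload = { error: 'Missing required fields email and password' };
    }
};

// Controller-style handler: the final destination, so it never calls next().
const createUserController: ChainHandler = (_req, res) => {
    res.statusCode = 201;
    res.payload = { id: 'generated-id' };
};
```

Running the chain with an empty body stops at the first handler with a 400, while a body containing both fields reaches the controller-style handler.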

Putting it All Together: Refactoring Our Routes

Now that we have implemented all the new aspects of our project architecture, let’s go back to the users.routes.config.ts file we defined in the previous article. It will call our middleware and our controllers, both of which rely on our user service, which in turn requires our user model.

The final file will be as simple as this:

    import { CommonRoutesConfig } from '../common/common.routes.config';
    import UsersController from './controllers/users.controller';
    import UsersMiddleware from './middleware/users.middleware';
    import express from 'express';

    export class UsersRoutes extends CommonRoutesConfig {
        constructor(app: express.Application) {
            super(app, 'UsersRoutes');
        }

        configureRoutes(): express.Application {
            this.app
                .route(`/users`)
                .get(UsersController.listUsers)
                .post(
                    UsersMiddleware.validateRequiredUserBodyFields,
                    UsersMiddleware.validateSameEmailDoesntExist,
                    UsersController.createUser
                );

            this.app.param(`userId`, UsersMiddleware.extractUserId);
            this.app
                .route(`/users/:userId`)
                .all(UsersMiddleware.validateUserExists)
                .get(UsersController.getUserById)
                .delete(UsersController.removeUser);

            this.app.put(`/users/:userId`, [
                UsersMiddleware.validateRequiredUserBodyFields,
                UsersMiddleware.validateSameEmailBelongToSameUser,
                UsersController.put,
            ]);

            this.app.patch(`/users/:userId`, [
                UsersMiddleware.validatePatchEmail,
                UsersController.patch,
            ]);

            return this.app;
        }
    }

Here, we’ve redefined our routes by adding middleware to validate our business logic and the appropriate controller functions to process the request if everything is valid. We’ve also used the .param() function from Express.js to extract the userId.

At the .all() function, we are passing our validateUserExists function from UsersMiddleware to be called before any GET, PUT, PATCH, or DELETE can go through on the endpoint /users/:userId. This means validateUserExists doesn’t need to be in the additional function arrays we pass to .put() or .patch()—it will get called before the functions specified there.

We’ve leveraged the inherent reusability of middleware here in another way, too. By passing UsersMiddleware.validateRequiredUserBodyFields to be used in both POST and PUT contexts, we’re elegantly recombining it with other middleware functions.

Disclaimers: We only cover basic validations in this article. In a real-world project, you will need to think about and find all the restrictions you need to code. For the sake of simplicity, we are also assuming that a user cannot change their email.

Testing Our Express/TypeScript REST API

We can now compile and run our Node.js app. Once it’s running, we’re ready to test our API routes using a REST client such as Postman or cURL.

Let’s first try to get our users:

    curl --request GET 'localhost:3000/users' \
    --header 'Content-Type: application/json'

At this point, we will have an empty array as a response, which is accurate. Now we can try to create the first user resource with this:

    curl --request POST 'localhost:3000/users' \
    --header 'Content-Type: application/json'

Note that now our Node.js app will send back an error from our middleware:

    {
        "error": "Missing required fields email and password"
    }

To fix it, let’s send a valid request to post to /users resource:

    curl --request POST 'localhost:3000/users' \
    --header 'Content-Type: application/json' \
    --data-raw '{
        "email": "marcos.henrique@toptal.com",
        "password": "sup3rS3cr3tPassw0rd!23"
    }'

This time, we should see something like the following:

    {
        "id": "ksVnfnPVW"
    }

This id is the identifier of the newly created user and will be different on your machine. To make the remaining testing statements easier, you can run this command with the one you get (assuming you’re using a Linux-like environment):

REST_API_EXAMPLE_ID="put_your_id_here"

We can now see the response we get from making a GET request using the above variable:

    curl --request GET "localhost:3000/users/$REST_API_EXAMPLE_ID" \
    --header 'Content-Type: application/json'

We can now also update the entire resource with the following PUT request:

    curl --request PUT "localhost:3000/users/$REST_API_EXAMPLE_ID" \
    --header 'Content-Type: application/json' \
    --data-raw '{
        "email": "marcos.henrique@toptal.com",
        "password": "sup3rS3cr3tPassw0rd!23",
        "firstName": "Marcos",
        "lastName": "Silva",
        "permissionLevel": 8
    }'

We can also test that our validation works by changing the email address, which should result in an error.

Note that when using a PUT to a resource ID, we, as API consumers, need to send the entire object if we want to conform to the standard REST pattern. That means that if we want to update just the lastName field, but using our PUT endpoint, we will be forced to send the entire object to be updated. It would be easier to use a PATCH request since there it’s still within standard REST constraints to send just the lastName field:

    curl --request PATCH "localhost:3000/users/$REST_API_EXAMPLE_ID" \
    --header 'Content-Type: application/json' \
    --data-raw '{
        "lastName": "Faraco"
    }'

Recall that in our own codebase, it’s our route configuration that enforces this distinction between PUT and PATCH using the middleware functions we added in this article.
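The difference between the two verbs can also be expressed in a few lines of TypeScript. In this sketch, UserRecord, applyPut, and applyPatch are hypothetical names, and applyPatch shows only the simple merge-style PATCH semantics used in this article.

```typescript
interface UserRecord {
    id: string;
    email: string;
    firstName?: string;
    lastName?: string;
}

// PUT semantics: the stored record is replaced wholesale by the request body.
function applyPut(_current: UserRecord, replacement: UserRecord): UserRecord {
    return { ...replacement };
}

// PATCH semantics (merge style): only the supplied fields change.
function applyPatch(current: UserRecord, changes: Partial<UserRecord>): UserRecord {
    return { ...current, ...changes };
}
```

Patching a record with only a lastName keeps every other field, whereas a PUT body that omits firstName and lastName produces a record without them.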

PUT, PATCH, or Both?

It may sound like there’s not much reason to support PUT given the flexibility of PATCH, and some APIs will take that approach. Others may insist on supporting PUT to make the API “completely REST-conformant,” in which case, creating per-field PUT routes might be an appropriate tactic for common use cases.

In reality, these points are part of a much larger discussion ranging from real-life differences between the two to more flexible semantics for PATCH alone. We present PUT support here and widely-used PATCH semantics for simplicity, but encourage readers to delve into further research when they feel ready to do so.

Getting the user list again as we did above, we should see our created user with its fields updated:

    [
        {
            "id": "ksVnfnPVW",
            "email": "marcos.henrique@toptal.com",
            "password": "$argon2i$v=19$m=4096,t=3,p=1$ZWXdiTgb922OvkNAdh9acA$XUXsOHaRN4uVg5ltIwwO+SPLxvb9uhOKcxoLER1e/mM",
            "firstName": "Marcos",
            "lastName": "Faraco",
            "permissionLevel": 8
        }
    ]

Finally, we can test deleting the user with this:

    curl --request DELETE "localhost:3000/users/$REST_API_EXAMPLE_ID" \
    --header 'Content-Type: application/json'

Getting the user list again, we should see that the deleted user is no longer present.

With that, we have all the CRUD operations for the users resource working.

Node.js/TypeScript REST API

In this part of the series, we further explored key steps in building a REST API using Express.js. We split our code to support services, middleware, controllers, and models. Each of their functions has a specific role, whether it’s validation, logical operations, or processing valid requests and responding to them.

We also created a very simple way to store data, with the (pardon the pun) express purpose of allowing some testing at this point, then being replaced with something more practical in the next part of our series.

Besides building an API with simplicity in mind—using singleton classes, for example—there are several steps to take to make it easier to maintain, more scalable, and secure. In the last article in the series, we cover:

- Replacing the in-memory database with MongoDB, then using Mongoose to simplify the coding process

- Adding a security layer and control access in a stateless approach with JWT

- Configuring automated testing to allow our application to scale

You can browse the final code from this article here.

Understanding the basics

What is the difference between middleware and an API?

It depends. In the context of an Express.js back end, middleware is just one type of component in the internal architecture used to implement an API. However, in larger (e.g., distributed) contexts, sometimes APIs themselves may be considered “middleware.”

What is the role of middleware?

When building an API, middleware helps validate a request’s requirements before it reaches the controller, while keeping the code organized and easy to maintain.

Why is middleware needed?

Express.js middleware functions can manipulate a request and also end a request-response life cycle. When multiple routes need the same validation or manipulation, middleware can be reused among them, making it easier to maintain and extend the codebase.

How does middleware work?

Express.js middleware functions receive request and response objects and a “next” function. They might modify the request or use the response object to short-circuit the request-response life cycle (e.g., by sending a 400 error). The “next” function calls the next piece of middleware, chaining them all together.

What is a controller in a REST API?

Express.js REST API controllers can be defined as the last function that receives the request and response objects, with the purpose of processing the request before sending a response to the requester.

Is a REST API a web service?

Yes, a REST API can be considered a web service since REST APIs are typically served over HTTP, which allows machine-to-machine interaction over the web.

What is a data transfer object in MVC?

A data transfer object (DTO) is a design pattern created to define the way two different applications can transfer data to one another when they aren’t necessarily in the same environment.

Lecco, Province of Lecco, Italy

Member since February 25, 2017
