Rudolf Eremyan

Verified Expert in Engineering

Data Science Developer

Rudolf is a data scientist with eight years of experience in the field. He developed the first chatbot framework for the Georgian language, which was adopted by the largest bank in Georgia. Rudolf has designed big data processing pipelines and cloud-based analytics solutions for Fortune 500 companies. He has been invited to serve as a speaker and judge at international hackathons and conferences such as PyData, Google DevFest, and the NASA International Space Apps Challenge.

Preferred Environment

Amazon Web Services (AWS), Python, Big Data, PostgreSQL, SQL, PySpark, Data Modeling, Data Pipelines, Pandas, Data Scraping

The most amazing...

...thing I've developed is a chatbot framework for the Georgian language.

Work Experience

Data Engineer

Amgreat North America

- Developed scripts for parsing data from social media platforms, contributing to streamlined data analysis and information retrieval processes.

- Implemented a topic modeling solution to extract insights from complex datasets, improving the depth and efficiency of data analysis.

- Designed interactive dashboard prototypes using the Streamlit and Plotly libraries, improving data visualization and user engagement.

- Implemented and deployed automated data pipelines on AWS, optimizing data workflows for increased efficiency and scalability.

Data Scientist

Midea - Main

- Developed scripts for collecting data from eCommerce platforms.

- Used cloud service providers for computation and AI-based data analysis.

- Designed an advanced analytics dashboard with AWS QuickSight.

Data Engineer

Staude Capital

- Designed a data model based on customer-provided requirements and business needs.

- Developed an investor CRM system for managing hedge fund trades, orders, and other operations.

- Created automated reporting tools and deployed them on Amazon Web Services (AWS).

- Developed an internal communication and notification system.

Data Scientist

ATH Digital LLC

- Created data ingestion scripts for pulling data from ad platforms like Google Ads and Facebook Ads.

- Automated the upload of CSV and Excel file data into the database using AWS services.

- Set up the cloud streaming infrastructure for the marketing data processing pipeline.

- Designed a database model based on the data science team's requirements.

- Created a model for forecasting and visualizing the balance burn rate metric.

Senior Data Scientist

Zelos.AI

- Processed and analyzed over 100 million athletic performance records with PySpark running on AWS EMR.

- Designed a data model based on the company's business requirements.

- Built a batch data processing pipeline orchestrated by Apache Airflow.

- Created a data scraping tool for parsing dynamic and static web pages using Scrapy, Selenium, and lxml.

- Developed simulations of athletics competitions based on the Monte Carlo method.

Data Scientist

Windsor.AI

- Optimized existing SQL queries, reducing their complexity and improving their performance.

- Used SQL to gain insights and detect anomalies and problems in the collected data.

- Created a workflow for data migration between different database management systems.

- Developed scripts for ingesting data from different online advertising platforms.

- Designed new database tables according to the analytics team's requirements.

Data Scientist

Frontier Data Corporation

- Developed models for trend detection in the Twitter stream.

- Developed the architecture of an AI-based application.

- Integrated in-house ML models with cloud services such as IBM BlueMix and Google Cloud NLP.

- Worked with big datasets using Google BigQuery.

- Created custom modules for evaluating new ML models.

- Trained machine learning models for text classification.

- Created tests for existing applications.

Data Scientist

Pulsar AI

- Developed a chatbot framework for the Georgian language applying machine learning and natural language processing (NLP) techniques.

- Trained and deployed a machine learning model for automatically grouping news and articles from Georgian media websites.

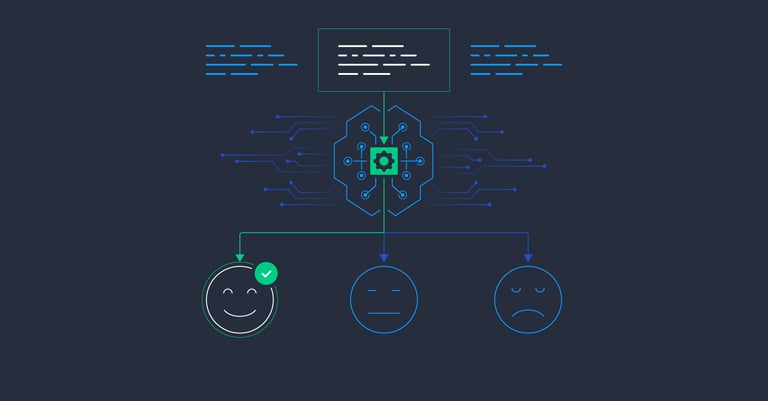

- Designed a tool for sentiment classification on texts from social networks.

- Analyzed a large volume of user conversation data using NLP and statistical methods, and presented precise results.

- Worked with time series to analyze and predict cryptocurrency prices.

- Managed a team of linguists who worked on the data collection and labeling.

Software Developer Intern

Virtuace Inc.

- Fixed bugs.

- Expanded the functionality of the existing application.

- Tested new modules.

Full-stack Software Engineer

Georgian Technical University

- Developed the front-end for managing and working with linguistic corpora.

- Created web services for working with linguistic corpus data.

- Designed the database structure for storing and manipulating the linguistic corpora.

- Analyzed documents using NLP tools and presented results in a clear manner.

Experience

Consumer Insights Analysis

Social Media Monitoring

Multi-asset Hedge Fund Management System

Trend Detection in Twitter Stream

Attribution Modeling for Marketing Optimization

I developed scripts for data migration and client notifications and implemented data integrity tests to ensure the completeness and accuracy of existing data. During this project, I collaborated effectively with an international team spread across different geographical locations.

Advanced News Filter

Trained machine learning models for text classification, which were used in a text filtering mechanism. Integrated cloud ML services such as IBM BlueMix and Google Cloud NLP with an existing application.

Chatbot Framework for Georgian Language

https://www.facebook.com/TBCTIbot/

Automated News Article Grouping Tool

Social Media Sentiment Analysis Tool

Spell Checker for Georgian Language

NLP Tool for Automatic Identification of Georgian Dialects

This project received the "Best Scientific Research of the Tbilisi State University 76th Student Conference" award.

Cryptocurrency Prices Monitoring Tool

Linguistic Corpus Management System

ETL Pipeline for Pharmaceutical Industry Data

Simulation of the Tokyo 2020 Olympic Games

Skills

Languages

Python, SQL, XML, JavaScript, Java, HTML, CSS, R, Bash, Excel VBA, GraphQL

Frameworks

Selenium, Flask, Scrapy, Spark

Libraries/APIs

Pandas, Beautiful Soup, REST APIs, XGBoost, SciPy, NumPy, SpaCy, Scikit-learn, Natural Language Toolkit (NLTK), Twitter API, PySpark, Google AdWords, Matplotlib, Google Cloud API, AdWords API, Facebook API, Google Analytics API, Node.js

Tools

Trello, Jupyter, GitHub, Gensim, Apache Airflow, pgAdmin, Bitbucket, Git, Cron, Plotly, Amazon Elastic MapReduce (EMR), Google Analytics, Docker Compose, Spark SQL

Paradigms

Data Science, ETL, Scrum, REST, Database Design, Anomaly Detection

Platforms

Jupyter Notebook, Docker, Amazon Web Services (AWS), Linux, Amazon EC2, Appsmith

Storage

PostgreSQL, MySQL, DB, MongoDB, Database Modeling, Amazon DynamoDB, Redshift, Data Lakes, Data Pipelines, Elasticsearch

Other

Data Scraping, Big Data, Data Engineering, Text Classification, Text Mining, Data Analysis, Data Analytics, Batch File Processing, Predictive Analytics, Apache Superset, Regular Expressions, Web Scraping, Clustering Algorithms, Topic Modeling, Web Services, Data Mining, Attribution Modeling, Data Visualization, Reporting, Trading, Natural Language Processing (NLP), Markov Chain Monte Carlo (MCMC) Algorithms, Markov Model, Code Architecture, Data Modeling, lxml, fastText, Linguistics, Time Series Analysis, SSH, Machine Learning, Computational Linguistics, Statistics, Data Structures, Algorithms, IBM Cloud, Amazon Kinesis, Hedge Funds, Generative Pre-trained Transformers (GPT), Sentiment Analysis, Agile Data Science, OpenAI, HubSpot CRM, Dash, Financial Data

Industry Expertise

Marketing, Healthcare

Education

Bachelor's Degree in Computer Science

Tbilisi State University of Ivane Javakhishvili - Tbilisi, Georgia

Certifications

Data Analysis Nanodegree

Udacity

AWS Certified Solutions Architect Associate 2020

CloudGuru

Marketing Analytics with R

Datacamp.com

Google Analytics Individual Qualification

Digital Academy for Ads

Deep Learning Summer School

University of Deusto

Deep Learning Nanodegree

Udacity

Machine Learning Online Course

Stanford University

Language and Modern Technologies

Goethe University Frankfurt/Main