10 Tips for Effective Legacy Data Migration

Nobody wants to leave valuable customer data behind. Unfortunately, though, the hardest part of data migration to a complex CRM system, such as Salesforce, is the handling of legacy data.

In this article, Toptal Software Engineer Marian Paul provides 10 tips for successful legacy data migration to Salesforce.

Nobody wants to leave valuable customer data behind. Unfortunately, though, the hardest part of data migration to a complex CRM system, such as Salesforce, is the handling of legacy data.

In this article, Toptal Software Engineer Marian Paul provides 10 tips for successful legacy data migration to Salesforce.

Marian is a senior developer, expert in data migrations and back-end solutions. He has Oracle SQL and Microsoft SQL expert certifications.

Expertise

PREVIOUSLY AT

Migrating legacy data is hard.

Many organizations have old and complex on-premise business CRM systems. Today, there are plenty of cloud SaaS alternatives, which come with many benefits; pay as you go and to pay only for what you use. Therefore, they decide to move to the new systems.

Nobody wants to leave valuable data about customers in the old system and start with the empty new system, so we need to migrate this data. Unfortunately, data migration is not an easy task, as around 50 percent of deployment effort is consumed by data migration activities. According to Gartner, Salesforce is the leader of cloud CRM solutions. Therefore, data migration is a major topic for Salesforce deployment.

while preserving all history.

So, how can we ensure a successful transition of legacy data into a shiny new system and ensure we will preserve all of its history? In this article, I provide 10 tips for successful data migration. The first five tips apply to any data migration, regardless of the technology used.

Data Migration in General

1. Make Migration a Separate Project

In the software deployment checklist, data migration is not an “export and import” item handled by a clever “push one button” data migration tool that has predefined mapping for target systems.

Data migration is a complex activity, deserving a separate project, plan, approach, budget, and team. An entity level scope and plan must be created at the project’s beginning, ensuring no surprises, such as “Oh, we forgot to load those clients’ visit reports, who will do that?” two weeks before the deadline.

The data migration approach defines whether we will load the data in one go (also known as the big bang), or whether we will load small batches every week.

This is not an easy decision, though. The approach must be agreed upon and communicated to all business and technical stakeholders so that everybody is aware of when and what data will appear in the new system. This applies to system outages too.

2. Estimate Realistically

Do not underestimate the complexity of the data migration. Many time-consuming tasks accompany this process, which may be invisible at the project’s beginning.

For example, loading specific data sets for training purposes with a bunch of realistic data, but with sensitive items obfuscated, so that training activities do not generate email notifications to clients.

The basic factor for estimation is the number of fields to be transferred from a source system to a target system.

Some amount of time is needed in different stages of the project for every field, including understanding the field, mapping the source field to the target field, configuring or building transformations, performing tests, measuring data quality for the field, and so on.

Using clever tools, such as Jitterbit, Informatica Cloud Data Wizard, Starfish ETL, Midas, and the like, can reduce this time, especially in the build phase.

In particular, understanding the source data – the most crucial task in any migration project – cannot be automated by tools, but requires analysts to take time going through the list of fields one by one.

The simplest estimate of the overall effort is one man-day for every field transferred from the legacy system.

An exception is data replication between the same source and target schemas without further transformation – sometimes known as 1:1 migration – where we can base the estimate on the number of tables to copy.

A detailed estimate is an art of its own.

3. Check Data Quality

Do not overestimate the quality of source data, even if no data quality issues are reported from the legacy systems.

New systems have new rules, which may be violated with legacy data. Here’s a simple example. Contact email can be mandatory in the new system, but a 20-year-old legacy system may have a different point of view.

There can be mines hidden in historical data that have not been touched for a long time but could activate when transferring to the new system. For example, old data using European currencies that do not exist anymore need to be converted to Euros, otherwise, currencies must be added to the new system.

Data quality significantly influences effort, and the simple rule is: The further we go in history, the bigger mess we will discover. Thus, it is vital to decide early on how much history we want to transfer into the new system.

4. Engage Business People

Business people are the only ones who truly understand the data and who can therefore decide what data can be thrown away and what data to keep.

It is important to have somebody from the business team involved during the mapping exercise, and for future backtracking, it is useful to record mapping decisions and the reasons for them.

Since a picture is worth more than a thousand words, load a test batch into the new system, and let the business team play with it.

Even if data migration mapping is reviewed and approved by the business team, surprises can appear once the data shows up in the new system’s UI.

“Oh, now I see, we have to change it a bit,” becomes a common phrase.

Failing to engage subject matter experts, who are usually very busy people, is the most common cause of problems after a new system goes live.

5. Aim for Automated Migration Solution

Data migration is often viewed as a one-time activity, and developers tend to end up with solutions full of manual actions hoping to execute them only once. But there are many reasons to avoid such an approach.

- If migration is split into multiple waves, we have to repeat the same actions multiple times.

- Typically, there are at least three migration runs for every wave: a dry run to test the performance and functionality of data migration, a full data validation load to test the entire data set and to perform business tests, and of course, production load. The number of runs increases with poor data quality. Improving data quality is an iterative process, so we need several iterations to reach the desired success ratio.

Thus, even if migration is one-time activity by nature, having manual actions can significantly slow down your operations.

Salesforce Data Migration

Next we will cover five tips for a successful Salesforce migration. Keep in mind, these tips are likely applicable to other cloud solutions as well.

6. Prepare for Lengthy Loads

Performance is one of, if not the biggest, tradeoff when moving from an on-premise to a cloud solution – Salesforce not excluded.

On-premise systems usually allow for direct data load into an underlying database, and with good hardware, we can easily reach millions of records per hour.

But, not in the cloud. In the cloud, we are heavily limited by several factors.

- Network latency – Data is transferred via the internet.

- Salesforce application layer – Data is moved through a thick API multitenancy layer until they land in their Oracle databases.

- Custom code in Salesforce – Custom validations, triggers, workflows, duplication detection rules, and so on – many of which disable parallel or bulk loads.

As a result, load performance can be thousands of accounts per hour.

It can be less, or it can be more, depending on things, such as the number of fields, validations and triggers. But it is several grades slower than a direct database load.

Performance degradation, which is dependent on the volume of the data in Salesforce, must also be considered.

It is caused by indexes in the underlying RDBMS (Oracle) used for checking foreign keys, unique fields, and evaluation of duplication rules. The basic formula is approximately 50 percent slowdown for every grade of 10, caused by O(logN) the time complexity portion in sort and B-tree operations.

Moreover, Salesforce has many resource usage limits.

One of them is the Bulk API limit set to 5,000 batches in 24-hour rolling windows, with the maximum of 10,000 records in each batch.

So, the theoretical maximum is 50 million records loaded in 24 hours.

In a real project, the maximum is much lower due to limited batch size when using, for example, custom triggers.

This has a strong impact on the data migration approach.

Even for medium-sized datasets (from 100,000 to 1 million accounts), the big bang approach is out of the question, so we must split data into smaller migration waves.

This, of course, impacts the entire deployment process and increases the migration complexity because we will be adding data increments into a system already populated by previous migrations and data entered by users.

We must also consider this existing data in the migration transformations and validations.

Further, lengthy loads can mean we cannot perform migrations during a system outage.

If all users are located in one country, we can leverage an eight-hour outage during the night.

But for a company, such as Coca-Cola, with operations all over the world, that is not possible. Once we have U.S., Japan, and Europe in the system, we span all time zones, so Saturday is the only outage option that doesn’t affect users.

And that may not be enough, so, we must load while online, when users are working with the system.

7. Respect Migration Needs in Application Development

Application components, such as validations and triggers, should be able to handle data migration activities. Hard disablement of validations at the time of the migration load is not an option if the system must be online. Instead, we have to implement different logic in validations for changes performed by a data migration user.

- Date fields should not be compared to the actual system date because that would disable the loading of historical data. For example, validation must allow entering a past account start date for migrated data.

- Mandatory fields, which may not be populated with historical data, must be implemented as non-mandatory, but with validation sensitive to the user, thus allowing empty values for data coming from the migration, but rejecting empty values coming from regular users via the GUI.

- Triggers, especially those sending new records to the integration, must be able to be switched on/off for the data migration user in order to prevent flooding the integration with migrated data.

Another trick is using field Legacy ID or Migration ID in every migrated object. There are two reasons for this. The first is obvious: To keep the ID from the old system for backtracking; after the data is in the new system, people may still want to search their accounts using the old IDs, found in places as emails, documents, and bug-tracking systems. Bad habit? Maybe. But users will thank you if you preserve their old IDs. The second reason is technical and comes from the fact Salesforce does not accept explicitly provided IDs for new records (unlike Microsoft Dynamics) but generates them during the load. The problem arises when we want to load child objects because we have to assign them IDs of the parent objects. Since we will know those IDs only after loading, this is a futile exercise.

Let’s use Accounts and their Contacts, for example:

- Generate data for Accounts.

- Load Accounts into Salesforce, and receive generated IDs.

- Incorporate new Account IDs in Contact data.

- Generate data for Contacts.

- Load Contacts in Salesforce.

We can do this more simply by loading Accounts with their Legacy IDs stored in a special external field. This field can be used as a parent reference, so when loading Contacts, we simply use the Account Legacy ID as a pointer to the parent Account:

- Generate data for Accounts, including Legacy ID.

- Generate data for Contacts, including Account Legacy ID.

- Load Accounts into Salesforce.

- Load Contacts in Salesforce, using Account Legacy ID as parent reference.

The nice thing here is that we have separated a generation and a loading phase, which allows for better parallelism, decrease outage time, and so on.

8. Be Aware of Salesforce Specific Features

Like any system, Salesforce has plenty of tricky parts of which we should be aware in order to avoid unpleasant surprises during data migration. Here are handful of examples:

- Some changes on active Users automatically generate email notifications to user emails. Thus, if we want to play with user data, we need to deactivate users first and activate after changes are completed. In test environments, we scramble user emails so that notifications are not fired at all. Since active users consume costly licenses, we are not able to have all users active in all test environments. We have to manage subsets of active users, for example, to activate just those in a training environment.

- Inactive users, for some standard objects such as Account or Case, can be assigned only after granting the system permission “Update Records with Inactive Owners,” but they can be assigned, for example, to Contacts and all custom objects.

- When Contact is deactivated, all opt out fields are silently turned on.

- When loading a duplicate Account Team Member or Account Share object, the existing record is silently overwritten. However, when loading a duplicate Opportunity Partner, the record is simply added resulting in a duplicate.

- System fields, such as

Created Date,Created By ID,Last Modified Date,Last Modified By ID, can be explicitly written only after granting a new system permission “Set Audit Fields upon Record Creation.” - History-of-field value changes cannot be migrated at all.

- Owners of knowledge articles cannot be specified during the load but can be updated later.

- The tricky part is the storing of content (documents, attachments) into Salesforce. There are multiple ways to do it (using Attachments, Files, Feed attachments, Documents), and each way has its pros and cons, including different file size limits.

- Picklist fields force users to select one of the allowed values, for example, a type of account. But when loading data using Salesforce API (or any tool built upon it, such as Apex Data Loader or Informatica Salesforce connector), any value will pass.

The list goes on, but the bottom line is: Get familiar with the system, and learn what it can do and what it cannot do before you make assumptions. Do not assume standard behavior, especially for core objects. Always research and test.

9. Do Not Use Salesforce as a Data Migration Platform

It is very tempting to use Salesforce as a platform for building a data migration solution, especially for Salesforce developers. It is the same technology for the data migration solution as for the Salesforce application customization, the same GUI, the same Apex programming language, the same infrastructure. Salesforce has objects which can act as tables, and a kind of SQL language, Salesforce Object Query Language (SOQL). However, please do not use it; it would be a fundamental architectural flaw.

Salesforce is an excellent SaaS application with a lot of nice features, such as advanced collaboration and rich customization, but mass processing of data is not one of them. The three most significant reasons are:

- Performance – Processing of data in Salesforce is several grades slower than in RDBMS.

- Lack of analytical features – Salesforce SOQL does not support complex queries and analytical functions that must be supported by Apex language, and would degrade performance even more.

- Architecture* – Putting a data migration platform inside a specific Salesforce environment is not very convenient. Usually, we have multiple environments for specific purposes, often created ad hoc so that we can put a lot of time on code synchronization. Plus, you would also be relying on connectivity and availability of that specific Salesforce environment.

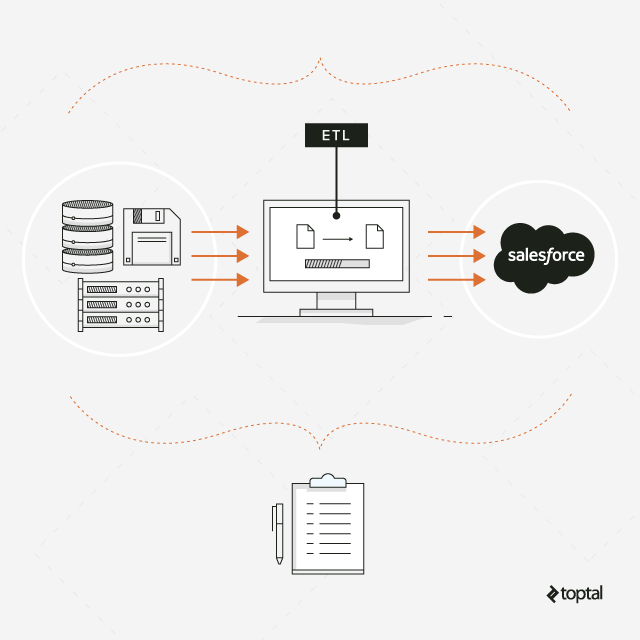

Instead, build a data migration solution in a separate instance (it could be a cloud or on-premise) using an RDBMS or ETL platform. Connect it with source systems and target the Salesforce environments you want, move the data you need into your staging area and process it there. This will allow you to:

- Leverage the full power and capabilities of the SQL language or ETL features.

- Have all code and data in one place so that you can run analyses across all systems.

- For example, you can combine the newest configuration from the most up-to-date test Salesforce environment with real data from the production Salesforce environment.

- You are not so dependent upon the technology of the source and target systems and you can reuse your solution for the next project.

10. Oversight Salesforce Metadata

At the project beginning, we usually grab a list of Salesforce fields and start the mapping exercise. During the project, it often happens that new fields are added by the application development team into Salesforce, or that some field properties are changed. We can ask the application team to notify the data migration team about every data model change, but doesn’t always work. To be safe, we need to have all data model changes under supervision.

A common way to do this is to download, on a regular basis, migration-relevant metadata from Salesforce into some metadata repository. Once we have this, we can not only detect changes in the data model, but we can also compare data models of two Salesforce environments.

What metadata to download:

- A list of objects with their labels and technical names and attributes such as

creatableorupdatable. - A list of fields with their attributes (better to get all of them).

- A list of picklist values for picklist fields. We will need them to map or validate input data for correct values.

- A list of validations, to make sure new validations are not creating problems for migrated data.

How to download metadata from Salesforce? Well, there is no standard metadata method, but there are multiple options:

-

Generate Enterprise WSDL – In the Salesforce web application navigate to the

Setup / APImenu and download strongly typed Enterprise WSDL, which describe all the objects and fields in Salesforce (but not picklist values nor validations). - Call Salesforce

describeSObjectsweb service, directly or by using Java or C# wrapper (consult Salesforce API). This way, you get what you need, and this the recommended way to export the metadata. - Use any of the numerous alternative tools available on the internet.

Prepare for the Next Data Migration

Cloud solutions, such as Salesforce, are ready instantly. If you are happy with the built-in functionalities, just log in and use it. However, Salesforce, like any other cloud CRM solution, brings specific problems to data migration topics to be aware of, in particular, regarding the performance and resources limits.

Moving legacy data into the new system is always a journey, sometimes a journey to history hidden in data from past years. In this article, based on a dozen migration projects, I’ve presented ten tips on how to migrate legacy data and successfully avoid the most pitfalls.

The key is to understand what the data reveals. So, before you start the data migration, make sure your Salesforce development team is well-prepared for the potential problems your data may hold.

Marian Paul

Pezinok, Bratislava Region, Slovakia

Member since June 18, 2020

About the author

Marian is a senior developer, expert in data migrations and back-end solutions. He has Oracle SQL and Microsoft SQL expert certifications.

Expertise

PREVIOUSLY AT