MIDI Tutorial: Creating Browser-Based Audio Applications Controlled by MIDI Hardware

Modern web browsers provide a rich set of APIs, some of which have been around for a long time and have been used to build powerful web applications.

The Web Audio API has been popular among HTML5 game developers; however, the Web MIDI API and its capabilities have yet to be widely utilized. In this article, Toptal engineer Stéphane P. Péricat guides you through the basics of the Web MIDI API, and shows you how to build a simple monosynth to play with your favorite MIDI device.

Stéphane is a front-end engineer with 7+ years of experience. He specializes in building performant JavaScript-based web applications.

While the Web Audio API is increasing in popularity, especially among HTML5 game developers, the Web MIDI API is still little known among frontend developers. A big part of this probably has to do with its current lack of support and accessible documentation; the Web MIDI API is currently only supported in Google Chrome, provided that you enable a special flag for it. Browser manufacturers currently put little emphasis on this API, as its W3C specification is still a work in progress.

Designed in the early 1980s by several music industry representatives, MIDI (short for Musical Instrument Digital Interface) is a standard communication protocol for electronic music devices. Even though other protocols, such as OSC, have been developed since then, MIDI is still the de facto communication protocol for audio hardware manufacturers thirty years later. You will be hard-pressed to find a modern music producer who does not own at least one MIDI device in their studio.

With the fast development and adoption of the Web Audio API, we can now start building browser-based applications that bridge the gap between the cloud and the physical world. Not only does the Web MIDI API allow us to build synthesizers and audio effects, but we can even start building browser-based DAWs (Digital Audio Workstations) similar in features and performance to their current Flash-based counterparts (check out Audiotool, for example).

In this MIDI tutorial, I will guide you through the basics of the Web MIDI API, and we will build a simple monosynth that you will be able to play with your favorite MIDI device. The full source code is available here, and you can test the live demo* directly. If you do not own a MIDI device, you can still follow this tutorial by checking out the ‘keyboard’ branch of the GitHub repository, which enables basic support for your computer keyboard, so you can play notes and change octaves. This is also the version that is available as the live demo. However, due to limitations of the computer hardware, velocity and detune are both disabled whenever you use your computer keyboard to control the synthesizer. Please refer to the readme file on GitHub to read about the key/note mapping.

* Note: After publication, Heroku stopped offering free hosting, and the demo is no longer available.

MIDI Tutorial Prerequisites

You will need the following for this MIDI tutorial:

- Google Chrome (version 38 or above) with the #enable-web-midi flag enabled

- (Optionally) A MIDI device that can trigger notes, connected to your computer

We will also be using Angular.js to bring a bit of structure to our application; therefore, basic knowledge of the framework is a prerequisite.

Getting Started

We will modularize our MIDI application from the ground up by separating it into 3 modules:

- WebMIDI: handling the various MIDI devices connected to your computer

- WebAudio: providing the audio source for our synth

- WebSynth: connecting the web interface to the audio engine

An App module will handle the user interaction with the web user interface. Our application structure could look a bit like this:

|- app

|-- js

|--- midi.js

|--- audio.js

|--- synth.js

|--- app.js

|- index.html

You should also install the following libraries to help you build up your application: Angular.js, Bootstrap, and jQuery. Probably the easiest way to install these is via Bower.

The WebMIDI Module: Connecting with the Real World

Let’s start figuring out how to use MIDI by connecting our MIDI devices to our application. To do so, we will create a simple factory returning a single method. To connect to our MIDI devices via the Web MIDI API, we need to call the navigator.requestMIDIAccess method:

angular

.module('WebMIDI', [])

.factory('Devices', ['$window', function($window) {

function _connect() {

if($window.navigator && 'function' === typeof $window.navigator.requestMIDIAccess) {

// return the promise so the caller can chain .then() on it

return $window.navigator.requestMIDIAccess();

} else {

throw 'No Web MIDI support';

}

}

return {

connect: _connect

};

}]);

And that’s pretty much it!

The requestMIDIAccess method returns a promise, so we can just return it directly and handle the result of the promise in our app’s controller:

angular

.module('DemoApp', ['WebMIDI'])

.controller('AppCtrl', ['$scope', 'Devices', function($scope, devices) {

$scope.devices = [];

devices

.connect()

.then(function(access) {

if('function' === typeof access.inputs) {

// deprecated

$scope.devices = access.inputs();

console.error('Update your Chrome version!');

} else {

if(access.inputs && access.inputs.size > 0) {

var inputs = access.inputs.values(),

input = null;

// iterate through the devices

for (input = inputs.next(); input && !input.done; input = inputs.next()) {

$scope.devices.push(input.value);

}

} else {

console.error('No devices detected!');

}

}

})

.catch(function(e) {

console.error(e);

});

}]);

As mentioned, the requestMIDIAccess method returns a promise, passing an object to the then method, with two properties: inputs and outputs.

In earlier versions of Chrome, these two properties were methods allowing you to retrieve an array of input and output devices directly. However, in the latest updates, these properties are now objects. This makes quite a difference, since we now need to call the values method on either the inputs or outputs object to retrieve the corresponding list of devices. This method returns an iterator, much like the values method of an ES6 Map, so the design is consistent with newer JavaScript collections even though it is not as straightforward as the original implementation.

Finally, we can retrieve the number of devices via the size property of the inputs object. If there is at least one device, we simply iterate over the result by calling the next method of the iterator object, and pushing each device to an array defined on the $scope. On the front-end, we can implement a simple select box which will list all the available input devices and let us choose which device we want to use as the active device to control the web synth:

<select ng-model="activeDevice" class="form-control" ng-options="device.manufacturer + ' ' + device.name for device in devices">

<option value="" disabled>Choose a MIDI device...</option>

</select>

We bound this select box to a $scope variable called activeDevice which we will later use to connect this active device to the synth.
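
The device enumeration described in this section can also be sketched outside of Angular. The listInputs helper and the fakeAccess object below are hypothetical names used only for illustration; the sketch assumes that access.inputs is Map-like (which is how the Web MIDI specification defines it), so forEach can replace the manual next() loop:

```javascript
// Collect MIDI inputs into a plain array.
// `access` is the object that requestMIDIAccess() resolves with.
function listInputs(access) {
  if (typeof access.inputs === 'function') {
    // legacy Chrome builds: inputs() returned an array directly
    return access.inputs();
  }
  var devices = [];
  // the inputs object is Map-like, so forEach visits every input
  access.inputs.forEach(function (input) {
    devices.push(input);
  });
  return devices;
}

// Stand-in for a real MIDIAccess object (made-up devices, illustration only)
var fakeAccess = {
  inputs: new Map([
    ['id-1', { manufacturer: 'Acme', name: 'Keys 25' }],
    ['id-2', { manufacturer: 'Acme', name: 'Pads 16' }]
  ])
};

console.log(listInputs(fakeAccess).map(function (d) { return d.name; }));
```

In the controller, the result of listInputs(access) could be assigned to $scope.devices in place of the loop shown earlier.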

The WebAudio Module: Making Noise

The WebAudio API allows us to not only play sound files, but also generate sounds by recreating the essential components of synthesizers such as oscillators, filters, and gain nodes amongst others.

Create an Oscillator

The role of an oscillator is to output a waveform that repeats at a given frequency. The WebAudio API supports four built-in waveform types (sine, square, triangle, and sawtooth), and it is also possible to define a custom wavetable if needed. Frequencies within a certain range (roughly 20 Hz to 20 kHz) are audible to human beings as sound. Oscillators running at lower frequencies can also be used to build LFOs (“low frequency oscillators”) that modulate our sounds, but that is beyond the scope of this tutorial.

The first thing we need to do to create some sound is to instantiate a new AudioContext:

function _createContext() {

self.ctx = new $window.AudioContext();

}

From there, we can instantiate any of the components made available by the WebAudio API. Since we might create multiple instances of each component, it makes sense to create services to be able to create new, unique instances of the components we need. Let’s start by creating the service to generate a new oscillator:

angular

.module('WebAudio', [])

.service('OSC', function() {

var self;

function Oscillator(ctx) {

self = this;

self.osc = ctx.createOscillator();

return self;

}

});

We can now instantiate new oscillators at will, passing as an argument the AudioContext instance we created earlier. To make things easier down the road, we will add some wrapper methods - mere syntactic sugar - and return the Oscillator function:

// The wrappers use `this` so that each Oscillator instance acts on its own node

Oscillator.prototype.setOscType = function(type) {

if(type) {

this.osc.type = type;

}

};

Oscillator.prototype.setFrequency = function(freq, time) {

// glide towards freq, starting now, with `time` as the time constant

this.osc.frequency.setTargetAtTime(freq, 0, time);

};

Oscillator.prototype.start = function(pos) {

this.osc.start(pos);

};

Oscillator.prototype.stop = function(pos) {

this.osc.stop(pos);

};

Oscillator.prototype.connect = function(i) {

this.osc.connect(i);

};

Oscillator.prototype.cancel = function() {

// drop any frequency changes that are still scheduled

this.osc.frequency.cancelScheduledValues(0);

};

return Oscillator;

Create a Multipass Filter and a Volume Control

We need two more components to complete our basic audio engine: a multipass filter, to give a bit of shape to our sound, and a gain node to control the volume of our sound and turn the volume on and off. To do so, we can proceed in the same way we did for the oscillator: create services returning a function with some wrapper methods. All we need to do is provide the AudioContext instance and call the appropriate method.

We create a filter by calling the createBiquadFilter method of the AudioContext instance:

ctx.createBiquadFilter();

Similarly, for a gain node, we call the createGain method:

ctx.createGain();
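
The tutorial does not list the gain wrapper itself, so here is a minimal sketch of what an Amp service could look like, mirroring the Oscillator wrappers. The method names (setVolume, cancel, connect, disconnect) and the gain property are taken from how Amp is used later in this article; the bodies are assumptions, not the author's actual implementation:

```javascript
// Hypothetical Amp wrapper around a GainNode.
function Amp(ctx) {
  // exposed as `gain` so other nodes can connect to it
  this.gain = ctx.createGain();
}

// Move the gain towards `volume`; `time` is the time constant of the transition
Amp.prototype.setVolume = function (volume, time) {
  this.gain.gain.setTargetAtTime(volume, 0, time);
};

// Drop any volume changes that are still scheduled
Amp.prototype.cancel = function () {
  this.gain.gain.cancelScheduledValues(0);
};

Amp.prototype.connect = function (node) {
  this.gain.connect(node);
};

Amp.prototype.disconnect = function () {
  this.gain.disconnect();
};
```

A filter wrapper could follow the same shape, with ctx.createBiquadFilter() in the constructor.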

The WebSynth Module: Wiring Things Up

Now we are almost ready to build our synth interface and connect MIDI devices to our audio source. First, we need to connect our audio engine together and get it ready to receive MIDI notes. To connect the audio engine, we simply create new instances of the components that we need, and then “connect” them together using the connect method available on each component instance. The connect method takes one argument, which is simply the component you want to connect the current instance to. It is possible to orchestrate a more elaborate chain of components, as a single node can be connected to multiple destinations (making it possible to implement things like cross-fading and more).

self.osc1 = new Oscillator(self.ctx);

self.osc1.setOscType('sine');

self.amp = new Amp(self.ctx);

self.osc1.connect(self.amp.gain);

self.amp.connect(self.ctx.destination);

self.amp.setVolume(0.0, 0); //mute the sound

self.filter1.disconnect();

self.amp.disconnect();

self.amp.connect(self.ctx.destination);


We just built the internal wiring of our audio engine. You can play around a bit and try different combinations of wiring, but remember to turn down the volume to protect your hearing. Now we can hook up the MIDI interface to our application and send MIDI messages to the audio engine. We will set up a watcher on the device select box to virtually “plug” it into our synth. We will then listen to MIDI messages coming from the device, and pass the information to the audio engine:

// in the app's controller

$scope.$watch('activeDevice', DSP.plug);

// in the synth module

function _onmidimessage(e) {

/**

* e.data is an array

* e.data[0] = on (144) / off (128) / detune (224)

* e.data[1] = midi note

* e.data[2] = velocity || detune

*/

switch(e.data[0]) {

case 144:

Engine.noteOn(e.data[1], e.data[2]);

break;

case 128:

Engine.noteOff(e.data[1]);

break;

}

}

function _plug(device) {

self.device = device;

self.device.onmidimessage = _onmidimessage;

}

Here, we are listening to MIDI events from the device, analysing the data from the MIDIMessageEvent object, and passing it to the appropriate method, either noteOn or noteOff, based on the event code (144 for noteOn, 128 for noteOff). We can now add the logic in the respective methods in the audio module to actually generate a sound:

function _noteOn(note, velocity) {

self.activeNotes.push(note);

self.osc1.cancel();

self.currentFreq = _mtof(note);

self.osc1.setFrequency(self.currentFreq, self.settings.portamento);

self.amp.cancel();

self.amp.setVolume(1.0, self.settings.attack);

}

function _noteOff(note) {

var position = self.activeNotes.indexOf(note);

if (position !== -1) {

self.activeNotes.splice(position, 1);

}

if (self.activeNotes.length === 0) {

// shut off the envelope

self.amp.cancel();

self.currentFreq = null;

self.amp.setVolume(0.0, self.settings.release);

} else {

// in case another note is pressed, we set that one as the new active note

self.osc1.cancel();

self.currentFreq = _mtof(self.activeNotes[self.activeNotes.length - 1]);

self.osc1.setFrequency(self.currentFreq, self.settings.portamento);

}

}

A few things are happening here. In the noteOn method, we first push the current note to an array of notes. Even though we are building a monosynth (meaning we can only play one note at a time), we can still have several fingers at once on the keyboard. So, we need to queue all these notes so that when we release one note, the next one is played. We then cancel any frequency changes still scheduled on the oscillator and assign the new frequency, which we convert from a MIDI note (scale from 0 to 127) to an actual frequency value with a bit of math:

function _mtof(note) {

return 440 * Math.pow(2, (note - 69) / 12);

}
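
To see what this conversion produces, here is a small worked example. MIDI note 69 is the A above middle C, tuned to 440 Hz, and each semitone multiplies the frequency by 2^(1/12), so twelve semitones double it. The standalone mtof function below simply repeats the formula above so the snippet can run on its own:

```javascript
// Equal-temperament conversion, same formula as the _mtof helper above
function mtof(note) {
  return 440 * Math.pow(2, (note - 69) / 12);
}

console.log(mtof(69));            // A4 -> 440
console.log(mtof(81));            // one octave up -> 880
console.log(mtof(57));            // one octave down -> 220
console.log(mtof(60).toFixed(2)); // middle C -> "261.63"
```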

In the noteOff method, we first start by finding the note in the array of active notes and removing it. Then, if it was the only note in the array, we simply turn off the volume.

The second argument of the setVolume method is the transition time, meaning how long it takes the gain to reach the new volume value. In musical terms, if the note is on, it would be the equivalent of the attack time, and if the note is off, it is the equivalent of the release time.
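
The note queue used by noteOn and noteOff can be sketched in isolation, which makes the "last note held wins" behavior easier to follow. NoteStack is a hypothetical name introduced for this illustration; the tutorial's engine keeps the same information in self.activeNotes:

```javascript
// Last-note priority: the most recently pressed key that is still held wins.
function NoteStack() {
  this.notes = [];
}

// Register a key press and return the note that should sound
NoteStack.prototype.press = function (note) {
  this.notes.push(note);
  return this.current();
};

// Register a key release and return the note that should sound afterwards
NoteStack.prototype.release = function (note) {
  var position = this.notes.indexOf(note);
  if (position !== -1) {
    this.notes.splice(position, 1);
  }
  return this.current();
};

// The note that should sound now, or null when no key is held
NoteStack.prototype.current = function () {
  return this.notes.length ? this.notes[this.notes.length - 1] : null;
};

var stack = new NoteStack();
stack.press(60);                // C is held
stack.press(64);                // E is held as well, so E sounds
console.log(stack.release(64)); // E released, falls back to 60
console.log(stack.release(60)); // nothing held any more: null
```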

The WebAnalyser Module: Visualising our Sound

Another interesting feature we can add to our synth is an analyser node, which allows us to display the frequency spectrum of our sound, using canvas to render it. Creating an analyser node is a bit more complicated than other AudioContext objects, as it also requires creating a scriptProcessor node to actually perform the analysis. We start by selecting the canvas element on the DOM:

function Analyser(canvas) {

self = this;

self.canvas = angular.element(canvas) || null;

self.view = self.canvas[0].getContext('2d') || null;

self.javascriptNode = null;

self.analyser = null;

return self;

}

Then, we add a connect method, in which we will create both the analyser and the script processor:

Analyser.prototype.connect = function(ctx, output) {

// setup a javascript node

self.javascriptNode = ctx.createScriptProcessor(2048, 1, 1);

// connect to destination, else it isn't called

self.javascriptNode.connect(ctx.destination);

// setup an analyzer

self.analyser = ctx.createAnalyser();

self.analyser.smoothingTimeConstant = 0.3;

self.analyser.fftSize = 512;

// connect the output to the destination for sound

output.connect(ctx.destination);

// connect the output to the analyser for processing

output.connect(self.analyser);

self.analyser.connect(self.javascriptNode);

// define the colors for the graph

var gradient = self.view.createLinearGradient(0, 0, 0, 200);

gradient.addColorStop(1, '#000000');

gradient.addColorStop(0.75, '#ff0000');

gradient.addColorStop(0.25, '#ffff00');

gradient.addColorStop(0, '#ffffff');

// when the audio process event is fired on the script processor

// we get the frequency data into an array

// and pass it to the drawSpectrum method to render it in the canvas

self.javascriptNode.onaudioprocess = function() {

// get the average for the first channel

var array = new Uint8Array(self.analyser.frequencyBinCount);

self.analyser.getByteFrequencyData(array);

// clear the current state

self.view.clearRect(0, 0, 1000, 325);

// set the fill style

self.view.fillStyle = gradient;

drawSpectrum(array);

}

};

First, we create a scriptProcessor object and connect it to the destination. Then, we create the analyser itself, which we feed with the audio output from the oscillator or filter. Notice how we still need to connect the audio output to the destination so we can hear it! We also need to define the gradient colors of our graph - this is done by calling the createLinearGradient method of the canvas's 2D rendering context.

Finally, the scriptProcessor will fire an ‘audioprocess’ event at regular intervals; when this event is fired, we read the frequency data captured by the analyser, clear the canvas, and redraw the new frequency graph by calling the drawSpectrum method:

function drawSpectrum(array) {

for (var i = 0; i < (array.length); i++) {

var v = array[i],

h = self.canvas.height();

self.view.fillRect(i * 2, h - (v - (h / 4)), 1, v + (h / 4));

}

}
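
To relate the bars drawn by drawSpectrum to actual frequencies, the following sketch shows the arithmetic behind the analyser's bins. The binFrequency helper is a hypothetical name, and the 44100 Hz sample rate is an assumption (a real application would read ctx.sampleRate); the fftSize of 512 matches the value set in the connect method:

```javascript
// Frequency (Hz) at which FFT bin `index` starts, for a given sample rate and fftSize
function binFrequency(index, sampleRate, fftSize) {
  return index * sampleRate / fftSize;
}

var fftSize = 512;          // same value as in the connect method
var binCount = fftSize / 2; // what analyser.frequencyBinCount reports
var sampleRate = 44100;     // assumed; use ctx.sampleRate in a real app

console.log(binCount);                                    // 256 bars to draw
console.log(binFrequency(1, sampleRate, fftSize));        // width of one bin in Hz
console.log(binFrequency(binCount, sampleRate, fftSize)); // upper limit: 22050
```

With these settings each bar covers a band of roughly 86 Hz, which is why low notes that are close together tend to light up the same bar.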

Last but not least, we will need to modify the wiring of our audio engine a bit to accommodate this new component:

// in the _connectFilter() method

if(self.analyser) {

self.analyser.connect(self.ctx, self.filter1);

} else {

self.filter1.connect(self.ctx.destination);

}

// in the _disconnectFilter() method

if(self.analyser) {

self.analyser.connect(self.ctx, self.amp);

} else {

self.amp.connect(self.ctx.destination);

}

We now have a nice visualiser which allows us to display the frequency spectrum of our synth in real time! This involves a bit of work to set up, but it’s very interesting and insightful, especially when using filters.

Building Up on our Synth: Adding Velocity & Detune

At this point in our MIDI tutorial we have a pretty cool synth - but it plays every note at the same volume. This is because instead of handling the velocity data properly, we simply set the volume to a fixed value of 1.0. Let’s start by fixing that, and then we will see how we can enable the detune wheel that you find on most common MIDI keyboards.

Enabling Velocity

If you are unfamiliar with it, the ‘velocity’ relates to how hard you hit the key on your keyboard. Based on this value, the sound created seems either softer or louder.

In our MIDI tutorial synth, we can emulate this behavior by simply playing with the volume of the gain node. To do so, we first need to do a bit of math to convert the MIDI data into a float value between 0.0 and 1.0 to pass to the gain node:

function _vtov (velocity) {

// toFixed returns a string, so convert the result back to a number

return parseFloat((velocity / 127).toFixed(2));

}

The velocity range of a MIDI device is from 0 to 127, so we simply divide that value by 127 and return a float value with two decimals. Then, we can update the _noteOn method to pass the calculated value to the gain node:

self.amp.setVolume(_vtov(velocity), self.settings.attack);

And that’s it! Now, when we play our synth, we will notice the volumes vary based on how hard we hit the keys on our keyboard.

Enabling the Detune Wheel on your MIDI Keyboard

Most MIDI keyboards feature a detune wheel (often labeled as the pitch bend wheel); the wheel allows you to slightly alter the frequency of the note currently being played, creating an interesting effect known as ‘detune’. This is fairly easy to implement, since the detune wheel also fires a MIDI message event with its own event code (224), which we can listen to and act upon by recalculating the frequency value and updating the oscillator.

First, we need to catch the event in our synth. To do so, we add an extra case to the switch statement we created in the _onmidimessage callback:

case 224:

// the detune value is the third element of the event's data array

Engine.detune(e.data[2]);

break;
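
The codes 144, 128, and 224 used in the switch statement are the status bytes for MIDI channel 1 only; a device sending on another channel uses 145, 129, and so on. As a hedged sketch (decodeMessage is a hypothetical helper, not part of the tutorial's source), the message type and channel can be separated by masking the status byte. The sketch also treats a note-on with velocity 0 as a note-off, which is a common MIDI convention:

```javascript
// Split a raw MIDI message (array of bytes) into its parts.
function decodeMessage(data) {
  var status = data[0] & 0xf0;        // upper nibble: message type
  var channel = (data[0] & 0x0f) + 1; // lower nibble: channel 1 to 16
  var type = 'other';
  if (status === 0x90 && data[2] > 0) {
    type = 'noteOn';
  } else if (status === 0x80 || status === 0x90) {
    // a note-on with velocity 0 is treated as a note-off
    type = 'noteOff';
  } else if (status === 0xe0) {
    type = 'pitchBend';
  }
  return { type: type, channel: channel, data1: data[1], data2: data[2] };
}

console.log(decodeMessage([144, 60, 100]).type); // noteOn
console.log(decodeMessage([145, 60, 0]));        // a note-off on channel 2
console.log(decodeMessage([224, 0, 64]).type);   // pitchBend
```

The switch in _onmidimessage could then branch on the decoded type rather than on the raw status byte.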

Then, we define the detune method on the audio engine:

function _detune(d) {

if(self.currentFreq) {

//64 = no detune

if(64 === d) {

self.osc1.setFrequency(self.currentFreq, self.settings.portamento);

self.detuneAmount = 0;

} else {

var detuneFreq = Math.pow(2, 1 / 12) * (d - 64);

self.osc1.setFrequency(self.currentFreq + detuneFreq, self.settings.portamento);

self.detuneAmount = detuneFreq;

}

}

}

The default detune value is 64, which means there is no detune applied, so in this case we simply pass the current frequency to the oscillator.

Finally, we also need to update the _noteOff method, to take the detune into consideration in case another note is queued:

self.osc1.setFrequency(self.currentFreq + self.detuneAmount, self.settings.portamento);
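
The _detune method above adds a fixed number of hertz per wheel step and only reads the third data byte. As an alternative sketch (not the approach used in the tutorial's source), the wheel position can be read at its full 14-bit resolution and applied as a musical interval, so the bend sounds the same across the keyboard. The helper names and the bend range passed as rangeInSemitones are assumptions made for illustration:

```javascript
// Combine the two 7-bit data bytes of a pitch-bend message into a value from -1 to just under 1
function bendAmount(lsb, msb) {
  var value = (msb << 7) | lsb; // 0..16383, 8192 = wheel centred
  return (value - 8192) / 8192;
}

// Shift `freq` by amount * rangeInSemitones semitones (may be fractional)
function bendFrequency(freq, amount, rangeInSemitones) {
  return freq * Math.pow(2, (amount * rangeInSemitones) / 12);
}

console.log(bendAmount(0, 64));           // 0, wheel centred
console.log(bendFrequency(440, 0, 2));    // 440, no bend
console.log(bendFrequency(440, -1, 12));  // 220, a full octave down
```

In the engine, the result of bendFrequency(self.currentFreq, amount, range) could be passed to setFrequency in place of the summed value.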

Creating the Interface

So far, we have only created a select box to choose our MIDI device and a visualiser, but we have no way to modify the sound directly by interacting with the web page. Let’s create a very simple interface using common form elements, and bind them to our audio engine.

Creating a Layout for the Interface

We will create various form elements to control the sound of our synth:

- A radio group to select the oscillator type

- A checkbox to enable / disable the filter

- A radio group to select the filter type

- Two ranges to control the filter’s frequency and resonance

- Two ranges to control the attack and release of the gain node

Creating an HTML document for our interface, we should end up with something like this:

<div class="synth container" ng-controller="WebSynthCtrl">

<h1>webaudio synth</h1>

<div class="form-group">

<select ng-model="activeDevice" class="form-control" ng-options="device.manufacturer + ' ' + device.name for device in devices">

<option value="" disabled>Choose a MIDI device...</option>

</select>

</div>

<div class="col-lg-6 col-md-6 col-sm-6">

<h2>Oscillator</h2>

<div class="form-group">

<h3>Oscillator Type</h3>

<label ng-repeat="t in oscTypes">

<input type="radio" name="oscType" ng-model="synth.oscType" value="{{t}}" ng-checked="'{{t}}' === synth.oscType" />

{{t}}

</label>

</div>

<h2>Filter</h2>

<div class="form-group">

<label>

<input type="checkbox" ng-model="synth.filterOn" />

enable filter

</label>

</div>

<div class="form-group">

<h3>Filter Type</h3>

<label ng-repeat="t in filterTypes">

<input type="radio" name="filterType" ng-model="synth.filterType" value="{{t}}" ng-disabled="!synth.filterOn" ng-checked="synth.filterOn && '{{t}}' === synth.filterType" />

{{t}}

</label>

</div>

<div class="form-group">

<!-- frequency -->

<label>filter frequency:</label>

<input type="range" class="form-control" min="50" max="10000" ng-model="synth.filterFreq" ng-disabled="!synth.filterOn" />

</div>

<div class="form-group">

<!-- resonance -->

<label>filter resonance:</label>

<input type="range" class="form-control" min="0" max="150" ng-model="synth.filterRes" ng-disabled="!synth.filterOn" />

</div>

</div>

<div class="col-lg-6 col-md-6 col-sm-6">

<div class="panel panel-default">

<div class="panel-heading">Analyser</div>

<div class="panel-body">

<!-- frequency analyser -->

<canvas id="analyser"></canvas>

</div>

</div>

<div class="form-group">

<!-- attack -->

<label>attack:</label>

<input type="range" class="form-control" min="50" max="2500" ng-model="synth.attack" />

</div>

<div class="form-group">

<!-- release -->

<label>release:</label>

<input type="range" class="form-control" min="50" max="1000" ng-model="synth.release" />

</div>

</div>

</div>

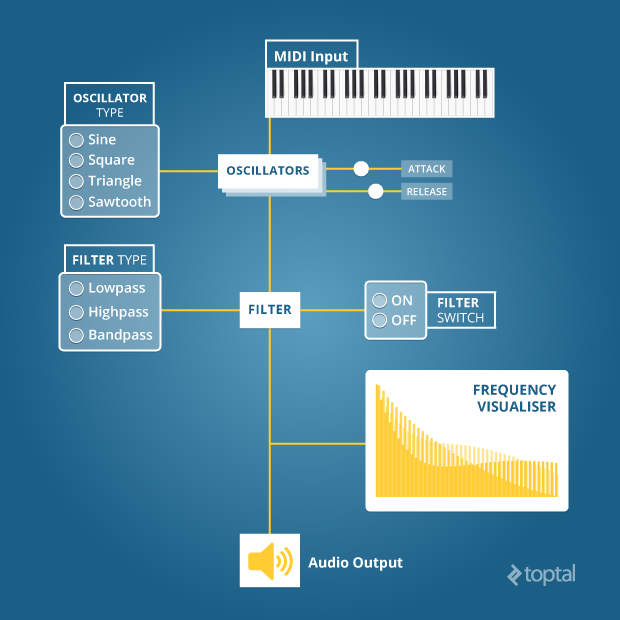

Decorating the user interface to look fancy is not something I will cover in this basic MIDI tutorial; instead we can save it as an exercise for later to polish the user interface, perhaps to look something like this:

Binding the Interface to the Audio Engine

We should define a few methods to bind these controls to our audio engine.

Controlling the Oscillator

For the oscillator, we only need a method allowing us to set the oscillator type:

Oscillator.prototype.setOscType = function(type) {

if(type) {

self.osc.type = type;

}

}

Controlling the Filter

For the filter, we need three controls: one for the filter type, one for the frequency and one for the resonance. We can also connect the _connectFilter and _disconnectFilter methods to the value of the checkbox.

Filter.prototype.setFilterType = function(type) {

if(type) {

self.filter.type = type;

}

}

Filter.prototype.setFilterFrequency = function(freq) {

if(freq) {

self.filter.frequency.value = freq;

}

}

Filter.prototype.setFilterResonance = function(res) {

if(res) {

self.filter.Q.value = res;

}

}

Controlling the Attack and Release

To shape our sound a bit, we can change the attack and release parameters of the gain node. We need two methods for this:

function _setAttack(a) {

if(a) {

self.settings.attack = a / 1000;

}

}

function _setRelease(r) {

if(r) {

self.settings.release = r / 1000;

}

}

Setting Up Watchers

Finally, in our app’s controller, we only need to set up a few watchers and bind them to the various methods we just created:

$scope.$watch('synth.oscType', DSP.setOscType);

$scope.$watch('synth.filterOn', DSP.enableFilter);

$scope.$watch('synth.filterType', DSP.setFilterType);

$scope.$watch('synth.filterFreq', DSP.setFilterFrequency);

$scope.$watch('synth.filterRes', DSP.setFilterResonance);

$scope.$watch('synth.attack', DSP.setAttack);

$scope.$watch('synth.release', DSP.setRelease);

Conclusion

A lot of concepts were covered in this MIDI tutorial; mostly, we discovered how to use the WebMIDI API, which is fairly undocumented apart from the official specification from the W3C. The Google Chrome implementation is pretty straightforward, although the switch to an iterator object for the input and output devices requires a bit of refactoring for legacy code using the old implementation.

As for the WebAudio API, this is a very rich API, and we only covered a few of its capabilities in this tutorial. Unlike the WebMIDI API, the WebAudio API is very well documented, in particular on the Mozilla Developer Network. The Mozilla Developer Network contains a plethora of code examples and detailed lists of the various arguments and events for each component, which will help you implement your own custom browser-based audio applications.

As both APIs continue to grow, they will open some very interesting possibilities for JavaScript developers, allowing us to develop fully-featured, browser-based DAWs that will be able to compete with their Flash equivalents. And for desktop developers, you can also start creating your own cross-platform applications using tools such as node-webkit. Hopefully, this will spawn a new generation of music tools for audiophiles that will empower users by bridging the gap between the physical world and the cloud.

Stéphane P. Péricat

Chicago, IL, United States

Member since October 11, 2014
