Scaling Play! to Thousands of Concurrent Requests

Web developers often fail to consider the consequences of thousands of users accessing our applications at the same time. Perhaps it’s because we love to rapidly prototype; perhaps it’s because testing such scenarios is simply hard.

Regardless, I’m going to argue that ignoring scalability is not as bad as it sounds—if you use the proper set of tools and follow good development practices. In this case: the Play! framework and the Scala language.

Paulo’s 19+ years in software development have seen him switch from being a Java senior to a Scala powerhouse. He also has a BCS degree.

Lojinha and the Play! Framework

Some time ago, I started a project called Lojinha (which translates to “small store” in Portuguese), my attempt to build an auction site. (By the way, this project is open source.) My motivations were as follows:

- I really wanted to sell some old stuff that I don’t use anymore.

- I don’t like traditional auction sites, especially those that we have down here in Brazil.

- I wanted to “play” with the Play! Framework 2 (pun intended).

So obviously, as mentioned above, I decided to use the Play! Framework. I don’t have an exact count of how long it took to build, but it certainly wasn’t long before I had my site up and running with the simple system deployed at http://lojinha.jcranky.com. Actually, I spent at least half of the development time on the design, which uses Twitter Bootstrap (remember: I’m no designer…).

The paragraph above should make at least one thing clear: I did not worry much about performance, if at all, when creating Lojinha.

And that is exactly my point: there’s power in using the right tools—tools that keep you on the right track, tools that encourage you to follow best development practices by their very construction.

In this case, those tools are the Play! Framework and the Scala language, with Akka making some “guest appearances”.

Let me show you what I mean.

Immutability and Caching

It’s generally agreed that minimizing mutability is good practice. Briefly, mutability makes it harder to reason about your code, especially when you try to introduce any parallelism or concurrency.

The Play! Scala framework makes you use immutability a good portion of the time, and so does the Scala language itself. For instance, the result generated by a controller is immutable. Sometimes you might consider this immutability “bothersome” or “annoying”, but these “good practices” are “good” for a reason.

In this case, the immutability of the controller’s result was absolutely crucial when I finally decided to run some performance tests: I discovered a bottleneck and, to fix it, simply cached this immutable response.

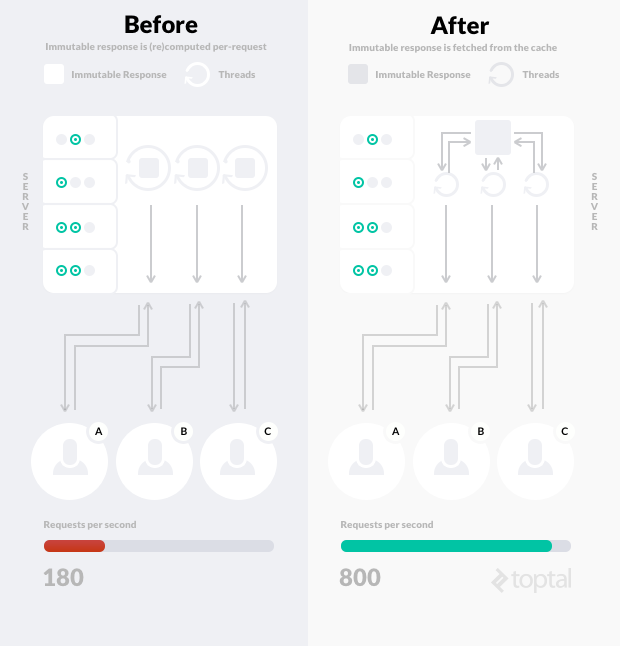

By caching, I mean saving the response object and serving an identical instance, as is, to any new clients. This frees the server from having to recalculate the result all over again. It wouldn’t be possible to serve the same response to multiple clients if this result were mutable.

The downside: for a brief period (the cache expiration time), clients can receive outdated information. This is only a problem in scenarios where you absolutely need the client to access the most recent data, with no tolerance for delay.

For reference, here is the Scala code for loading the start page with a list of products, without caching:

```scala
// Start page: the item list is rebuilt on every request.
def index = Action { implicit request =>
  Ok(html.index(body = html.body(Items.itemsHigherBids(itemDAO.all(false))), menu = mainMenu))
}
```

Now, adding the cache:

```scala
// Same action, with the whole response cached under the key "index" for 5 seconds.
def index = Cached("index", 5) {
  Action { implicit request =>
    Ok(html.index(body = html.body(Items.itemsHigherBids(itemDAO.all(false))), menu = mainMenu))
  }
}
```

Quite simple, isn’t it? Here, “index” is the key to be used in the cache system and 5 is the expiration time, in seconds.

To test the effect of this change, I ran some JMeter tests (included in the GitHub repo) locally. Before adding the cache, I achieved a throughput of approximately 180 requests per second. After caching, the throughput went up to 800 requests per second. That’s an improvement of more than 4x for about two lines of code.
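To make the mechanics concrete, here is a minimal, framework-free sketch of a time-based cache over immutable values. It is not how Play! implements `Cached`; the names `Response` and `TtlCache` and the injectable clock are assumptions made purely for illustration.

```scala
import scala.collection.concurrent.TrieMap

// Hypothetical stand-in for an immutable controller result.
final case class Response(status: Int, body: String)

// A tiny time-based cache. The clock is injectable so that expiry is easy to test.
final class TtlCache[A](ttlMillis: Long, now: () => Long = () => System.currentTimeMillis()) {
  private case class Entry(value: A, expiresAt: Long)
  private val entries = TrieMap.empty[String, Entry]

  // Returns the cached value while it is fresh; otherwise recomputes and stores it.
  // Two threads that miss at the same moment may both compute; the last write wins.
  def getOrElseUpdate(key: String)(compute: => A): A = {
    val t = now()
    entries.get(key) match {
      case Some(Entry(value, expiresAt)) if t < expiresAt => value
      case _ =>
        val value = compute
        entries.put(key, Entry(value, t + ttlMillis))
        value
    }
  }
}
```

With a 5-second lifetime, every request that arrives inside the window receives the very same `Response` instance. Sharing it between clients is only safe because the value cannot be modified after it is built.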

Memory Consumption

Another area where the right Scala tools can make a big difference is in memory consumption. Here, again, Play! pushes you in the right (scalable) direction. In the Java world, for a “normal” web application written with the servlet API (i.e., almost any Java or Scala framework out there), it’s very tempting to put lots of junk in the user session because the API offers easy-to-call methods that allow you to do so:

```java
session.setAttribute("attrName", attrValue);
```

Because it’s so easy to add information to the user session, it is often abused. As a consequence, the risk of using up too much memory for possibly no good reason is equally high.

With the Play! framework, this is not an option: the framework simply doesn’t have a server-side session space. The Play! framework user session is kept in a browser cookie, and you have to live with it. This means that the session space is limited in size and type: you can only store strings. If you need to store objects, you’ll have to use the caching mechanism we discussed before. For example, you might want to store the current user’s e-mail address or username in the session, but you will have to use the cache if you need to store an entire user object from your domain model.
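The split between a string-only session and a server-side cache can be sketched in plain Scala. This is an illustration under stated assumptions, not Play!'s API: the session is modeled as a `Map[String, String]`, and `User`, `SessionSketch`, `login`, `currentUser`, and `evict` are hypothetical names.

```scala
import scala.collection.concurrent.TrieMap

// Hypothetical domain object; too rich for a string-only cookie session.
final case class User(email: String, name: String, roles: Set[String])

object SessionSketch {
  // The cookie session modeled as what it is: a small map of strings.
  type Session = Map[String, String]

  // Server-side cache keyed by e-mail; a stand-in for the framework's cache.
  private val userCache = TrieMap.empty[String, User]

  // On login: only the e-mail goes into the session, the full object into the cache.
  def login(user: User, session: Session): Session = {
    userCache.put(user.email, user)
    session + ("email" -> user.email)
  }

  // On each request: resolve the user from the session key,
  // reloading through `loadUser` (e.g. a database lookup) on a cache miss.
  def currentUser(session: Session)(loadUser: String => Option[User]): Option[User] =
    session.get("email").flatMap { email =>
      userCache.get(email).orElse {
        val loaded = loadUser(email)
        loaded.foreach(u => userCache.put(email, u))
        loaded
      }
    }

  // Simulates a cache entry expiring or being dropped.
  def evict(email: String): Unit = {
    userCache.remove(email)
    ()
  }
}
```

Because the cache entry may disappear at any time, the lookup always has a fallback. The cookie carries only the key, so any node that can reach the data store can serve the request.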

Again, this might seem like a pain at first, but in truth, Play! keeps you on the right track, forcing you to carefully consider your memory usage, which produces first-pass code that is practically cluster ready—especially given that there is no server-side session that would have to be propagated throughout your cluster, making life infinitely easier.

Async Support

Next in this Play! framework review, we will examine how Play! also shines in async(hronous) support. And beyond its native features, Play! allows you to embed Akka, a powerful tool for async processing.

Although Lojinha does not yet take full advantage of Akka, its simple integration with Play! made it really easy to:

- Schedule an asynchronous e-mail service.

- Process offers for various products concurrently.

Briefly, Akka is an implementation of the Actor Model made famous by Erlang. If you are not familiar with the Actor Model, just imagine an actor as a small unit that only communicates through messages.

To send an e-mail asynchronously, I first create the proper message and actor. Then, all I need to do is something like:

```scala
EMail.actor ! BidToppedMessage(item.name, itemUrl, bidderEmail)
```

The e-mail sending logic is implemented inside the actor, and the message tells the actor which e-mail we would like to send. This is done in a fire-and-forget scheme, meaning that the line above sends the request and then continues to execute whatever we have after that (i.e., it does not block).
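For readers who have never used actors, the fire-and-forget idea can be approximated with the JDK alone. The sketch below is not Akka and not Lojinha's code: `MiniActor` is a hypothetical class that queues messages on a single worker thread, and the field names and types of `BidToppedMessage` are assumed.

```scala
import java.util.concurrent.{Executors, TimeUnit}

// Same message name as in the line above; fields are assumed to be strings.
final case class BidToppedMessage(itemName: String, itemUrl: String, bidderEmail: String)

// A minimal actor-like unit: messages are queued and handled
// one at a time, in order, on a single worker thread.
final class MiniActor[M](handle: M => Unit) {
  private val worker = Executors.newSingleThreadExecutor()

  // Fire-and-forget: enqueue the message and return immediately.
  def !(message: M): Unit =
    worker.execute(new Runnable { def run(): Unit = handle(message) })

  // Stops accepting messages and waits for the queued ones to finish.
  // Returns true if everything was processed before the timeout.
  def shutdown(timeoutSeconds: Long): Boolean = {
    worker.shutdown()
    worker.awaitTermination(timeoutSeconds, TimeUnit.SECONDS)
  }
}
```

The caller of `!` never waits for the handler, which is why a slow mail server does not slow down the request that triggered the e-mail. A real actor system adds supervision, addressing, and distribution on top of this basic mailbox idea.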

For more information about Play!’s native Async, take a look at the official documentation.

Conclusion

In summary: I rapidly developed a small application, Lojinha, capable of scaling up and out very well. When I ran into problems or discovered bottlenecks, the fixes were fast and easy, with much credit due to the tools I used (Play!, Scala, Akka, and so forth), which pushed me to follow best practices in terms of efficiency and scalability. With little concern for performance, I was able to scale to thousands of concurrent requests.

When developing your next application, consider your tools carefully.