5 Pillars of Responsible Generative AI: A Code of Ethics for the Future

Generative AI advances raise new questions around data ownership, content integrity, algorithmic bias, and more. Here, three experts at the forefront of NLP present recommendations for developing ethical generative AI solutions.


Madelyn Douglas

Madelyn is the Lead Editor of Engineering at Toptal and a former software engineer at Meta. She has more than six years of experience researching, writing, and editing for engineering publications, specializing in emerging technologies and AI. She previously served as an editor at USC’s Viterbi School of Engineering and her research on engineering ethics was published at IEEE’s NER 2021 conference.


Generative AI is everywhere. With the ability to produce text, images, video, and more, it is considered the most impactful emerging technology of the next three to five years by 77% of executives. Though generative AI has been researched since the 1960s, its capabilities have expanded in recent years due to unprecedented amounts of training data and the emergence of foundation models in 2021. These factors made technologies like ChatGPT and DALL-E possible and ushered in the widespread adoption of generative AI.

However, its rapid influence and growth also yield a myriad of ethical concerns, says Surbhi Gupta, a GPT and AI engineer at Toptal who has worked on cutting-edge natural language processing (NLP) projects ranging from chatbots and marketing-related content generation tools to code interpreters. Gupta has witnessed challenges like hallucinations, bias, and misalignment firsthand. For example, she noticed that one generative AI chatbot intended to identify users’ brand purpose struggled to ask personalized questions (relying on general industry trends instead) and failed to respond to unexpected, high-stakes situations. “For a cosmetics business, it would ask questions about the importance of natural ingredients even if the user-defined unique selling point was using custom formulas for different skin types. And when we tested edge cases such as prompting the chatbot with self-harming thoughts or a biased brand idea, it sometimes moved on to the next question without reacting to or handling the problem.”

Indeed, in the past year alone, generative AI has spread incorrect financial data, hallucinated fake court cases, produced biased images, and raised a slew of copyright concerns. Though Microsoft, Google, and the EU have put forth best practices for the development of responsible AI, the experts we spoke to say the ever-growing wave of new generative AI tech necessitates additional guidelines due to its unchecked growth and influence.

Why Generative AI Ethics Are Important—and Urgent

AI ethics and regulations have been debated among lawmakers, governments, and technologists around the globe for years. But the recent surge in generative AI makes such mandates more urgent and heightens the risks, intensifying existing AI concerns around misinformation and biased training data. It also introduces new challenges, such as ensuring authenticity, transparency, and clear data ownership guidelines, says Toptal AI expert Heiko Hotz. With more than 20 years of experience in the technology sector, Hotz currently consults for global companies on generative AI topics as a senior solutions architect for AI and machine learning at AWS.

| Existing Concern | Before Wide Adoption of Generative AI | After Wide Adoption of Generative AI |
| --- | --- | --- |
| Misinformation | The main risk was blanket misinformation (e.g., on social media). Intelligent content manipulation through programs like Photoshop could be easily detected by provenance or digital forensics, says Hotz. | Generative AI can accelerate misinformation due to the low cost of creating fake yet realistic text, images, and audio. The ability to create personalized content based on an individual’s data opens new doors for manipulation (e.g., AI voice-cloning scams) and difficulties in detecting fakes persist. |
| Bias | Bias has always been a big concern for AI algorithms as it perpetuates existing inequalities in major social systems such as healthcare and recruiting. The Algorithmic Accountability Act was introduced in the US in 2019, reflecting the problem of increased discrimination. | Generative AI training data sets amplify biases on an unprecedented scale. “Models pick up on deeply ingrained societal bias in massive unstructured data (e.g., text corpora), making it hard to inspect their source,” Hotz says. He also points to the risk of feedback loops from biased generative model outputs creating new training data (e.g., when new models are trained on AI-written articles). |

In particular, the potential inability to determine whether something is AI- or human-generated has far-reaching consequences. With deepfake videos, realistic AI art, and conversational chatbots that can mimic empathy, humor, and other emotional responses, generative AI deception is a top concern, Hotz asserts.

Also pertinent is the question of data ownership—and the corresponding legalities around intellectual property and data privacy. Large training data sets make it difficult to gain consent from, attribute, or credit the original sources, and advanced personalization abilities mimicking the work of specific musicians or artists create new copyright concerns. In addition, research has shown that LLMs can reveal sensitive or personal information from their training data, and an estimated 15% of employees are already putting business data at risk by regularly inputting company information into ChatGPT.

5 Pillars of Building Responsible Generative AI

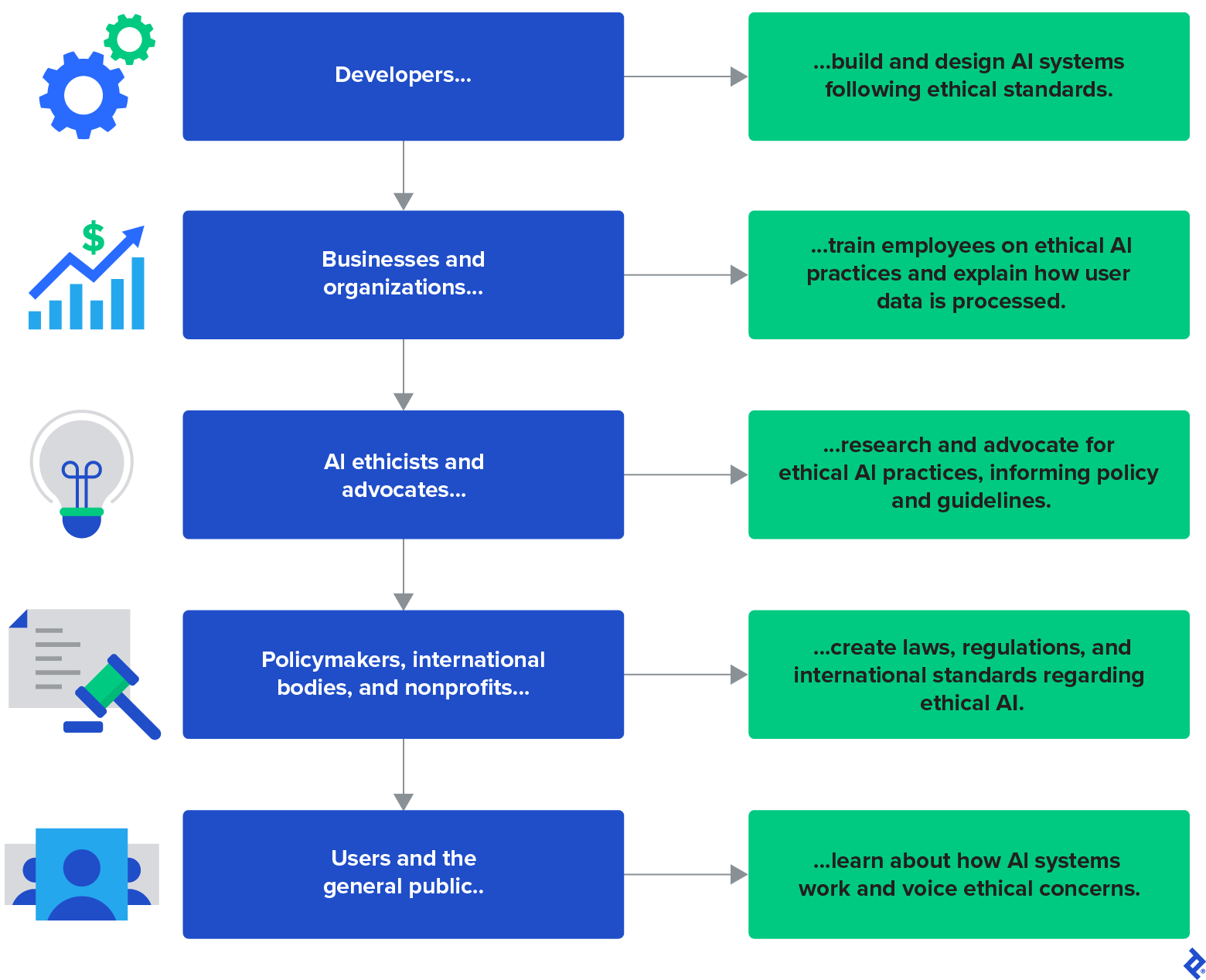

To combat these wide-reaching risks, guidelines for developing responsible generative AI should be rapidly defined and implemented, says Toptal developer Ismail Karchi. He has worked on a variety of AI and data science projects, including systems for Jumia Group impacting millions of users. “Ethical generative AI is a shared responsibility that involves stakeholders at all levels. Everyone has a role to play in ensuring that AI is used in a way that respects human rights, promotes fairness, and benefits society as a whole,” Karchi says. But he notes that developers play an especially important role in creating ethical AI systems. They choose these systems’ data, design their structure, and interpret their outputs, so their actions can ripple out to affect society at large. Ethical engineering practices are foundational to the multidisciplinary and collaborative responsibility to build ethical generative AI.

To achieve responsible generative AI, Karchi recommends embedding ethics into the practice of engineering on both educational and organizational levels: “Much like medical professionals who are guided by a code of ethics from the very start of their education, the training of engineers should also incorporate fundamental principles of ethics.”

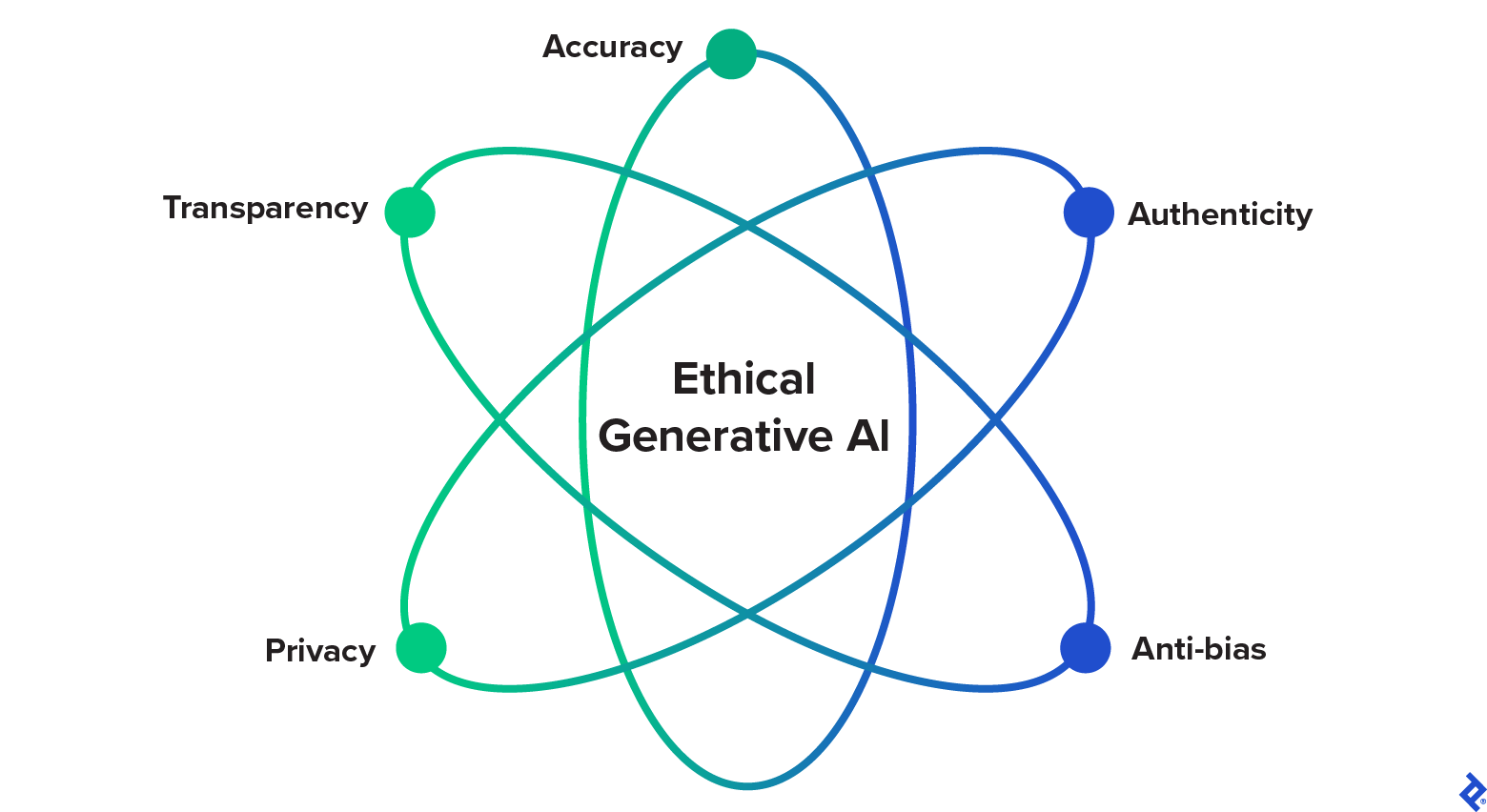

Here, Gupta, Hotz, and Karchi propose just such a generative AI code of ethics for engineers, defining five ethical pillars to enforce while developing generative AI solutions. These pillars draw inspiration from other expert opinions, leading responsible AI guidelines, and additional generative-AI-focused guidance and are specifically geared toward engineers building generative AI.

1. Accuracy

With the existing generative AI concerns around misinformation, engineers should prioritize accuracy and truthfulness when designing solutions. Methods like verifying data quality and remedying models after failure can help achieve accuracy. Retrieval-augmented generation (RAG) is a leading technique to promote accuracy and truthfulness in LLMs, explains Hotz.

He has found these RAG methods particularly effective:

- Using high-quality data sets vetted for accuracy and lack of bias

- Filtering out data from low-credibility sources

- Implementing fact-checking APIs and classifiers to detect harmful inaccuracies

- Retraining models on new data that resolves knowledge gaps or biases after errors

- Building in safety measures such as avoiding text generation when text accuracy is low or adding a UI for user feedback

For applications like chatbots, developers might also build ways for users to access sources and double-check responses independently to help combat automation bias.
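To make two of these ideas concrete (declining to answer when evidence is weak, and exposing sources so users can double-check), here is a minimal sketch. It is not Hotz's implementation: the keyword-overlap retriever stands in for a real vector search, and `Passage`, `retrieve`, `answer`, the `min_overlap` threshold, and the `generate` callable (a placeholder for whatever LLM call an application uses) are all hypothetical names chosen for illustration.

```python
from dataclasses import dataclass

# Tiny illustrative stopword list; a real system would use a proper retriever.
STOPWORDS = {"the", "is", "a", "of", "are", "do", "for", "what", "how"}


@dataclass
class Passage:
    source: str  # where the vetted text came from
    text: str


def tokenize(text: str) -> set:
    words = {w.strip(".,!?").lower() for w in text.split()}
    return words - STOPWORDS - {""}


def retrieve(question: str, passages: list, min_overlap: int = 2) -> list:
    """Return passages sharing at least `min_overlap` keywords with the question."""
    q = tokenize(question)
    scored = [(len(q & tokenize(p.text)), p) for p in passages]
    scored = [(score, p) for score, p in scored if score >= min_overlap]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [p for _, p in scored]


def answer(question: str, passages: list, generate) -> dict:
    """Generate only when vetted evidence exists; always report the sources used."""
    hits = retrieve(question, passages)
    if not hits:
        # Safety measure: avoid text generation when support is too weak.
        return {"answer": None, "sources": [],
                "note": "Not enough vetted evidence to answer."}
    context = "\n".join(p.text for p in hits)
    return {"answer": generate(question, context),
            "sources": [p.source for p in hits]}
```

Returning the source list alongside the answer is what lets a chatbot UI link back to the underlying documents, which is one way to counter automation bias.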

2. Authenticity

Generative AI has ushered in a new age of uncertainty regarding the authenticity of content like text, images, and videos, making it increasingly important to build solutions that can help determine whether or not content is human-generated and genuine. As mentioned previously, these fakes can amplify misinformation and deceive humans. For example, they might influence elections, enable identity theft or degrade digital security, and cause instances of harassment or defamation.

“Addressing these risks requires a multifaceted approach since they bring up legal and ethical concerns—but an urgent first step is to build technological solutions for deepfake detection,” says Karchi. He points to various solutions:

- Deepfake detection algorithms: “Deepfake detection algorithms can spot subtle differences that may not be noticeable to the human eye,” Karchi says. For example, certain algorithms may catch inconsistent behavior in videos (e.g., abnormal blinking or unusual movements) or check for the plausibility of biological signals (e.g., vocal tract values or blood flow indicators).

- Blockchain technology: Blockchain’s immutability strengthens the power of cryptographic and hashing algorithms; in other words, “it can provide a means of verifying the authenticity of a digital asset and tracking changes to the original file,” says Karchi. Showing an asset’s time of origin or verifying that it hasn’t been changed over time can help expose deepfakes.

- Digital watermarking: Visible, metadata, or pixel-level stamps may help label audio and visual content created by AI, and many digital text watermarking techniques are under development too. However, digital watermarking isn’t a blanket fix: Malicious hackers could still use open-source solutions to create fakes, and there are ways to remove many watermarks.
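The hashing idea behind the blockchain item above can be illustrated with a small sketch. This is an in-memory hash chain, not an actual blockchain: there is no distributed consensus, and the `ProvenanceLog` class and its method names are hypothetical. It only shows how a cryptographic fingerprint can reveal that a file differs from its registered original, and how chaining entries makes edits to the log itself detectable.

```python
import hashlib
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def fingerprint(data: bytes) -> str:
    """SHA-256 digest of the raw bytes, as a hex string."""
    return hashlib.sha256(data).hexdigest()


def _entry_hash(prev: str, asset_id: str, asset_hash: str, timestamp: str) -> str:
    return fingerprint(f"{prev}|{asset_id}|{asset_hash}|{timestamp}".encode())


class ProvenanceLog:
    """Append-only log in which each entry's hash covers the previous entry."""

    def __init__(self):
        self.entries = []

    def register(self, asset_id: str, data: bytes) -> dict:
        prev = self.entries[-1]["entry_hash"] if self.entries else GENESIS
        timestamp = datetime.now(timezone.utc).isoformat()
        asset_hash = fingerprint(data)
        entry = {
            "asset_id": asset_id,
            "asset_hash": asset_hash,
            "timestamp": timestamp,  # records the asset's time of origin
            "prev_hash": prev,
            "entry_hash": _entry_hash(prev, asset_id, asset_hash, timestamp),
        }
        self.entries.append(entry)
        return entry

    def matches_original(self, asset_id: str, data: bytes) -> bool:
        """True only if `data` is byte-identical to the registered asset."""
        for entry in self.entries:
            if entry["asset_id"] == asset_id:
                return entry["asset_hash"] == fingerprint(data)
        return False

    def chain_is_intact(self) -> bool:
        """Recompute every entry hash; any edit to history breaks the chain."""
        prev = GENESIS
        for e in self.entries:
            expected = _entry_hash(prev, e["asset_id"], e["asset_hash"], e["timestamp"])
            if e["prev_hash"] != prev or e["entry_hash"] != expected:
                return False
            prev = e["entry_hash"]
        return True
```

A check like `matches_original` can only confirm that a file is unchanged since registration; it says nothing about whether the registered original was itself genuine, which is why it complements rather than replaces detection algorithms.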

It is important to note that generative AI fakes are improving rapidly—and detection methods must catch up. “This is a continuously evolving field where detection and generation technologies are often stuck in a cat-and-mouse game,” says Karchi.

3. Anti-bias

Biased systems can compromise fairness, accuracy, trustworthiness, and human rights—and have serious legal ramifications. Generative AI projects should be engineered to mitigate bias from the start of their design, says Karchi.

He has found two techniques especially helpful while working on data science and software projects:

- Diverse data collection: “The data used to train AI models should be representative of the diverse scenarios and populations that these models will encounter in the real world,” Karchi says. Promoting diverse data reduces the likelihood of biased results and improves model accuracy for various populations (for example, certain trained LLMs can better respond to different accents and dialects).

- Bias detection and mitigation algorithms: Data should undergo bias mitigation techniques both before and during training (e.g., adversarial debiasing has a model learn parameters that don’t infer sensitive features). Later, algorithms like fairness through awareness can be used to evaluate model performance with fairness metrics and adjust the model accordingly.
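As a simple illustration of evaluating a model with a fairness metric, the sketch below computes the demographic parity gap: the difference in positive-outcome rates between groups. This metric is swapped in here because it is easy to show in a few lines; it is not the fairness-through-awareness or adversarial debiasing approach named above, and the function names are hypothetical.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Share of positive (1) predictions per group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Largest difference in selection rate between any two groups.

    0.0 means every group receives positive outcomes at the same rate.
    """
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())
```

For example, if a screening model approves three of four applicants in one group but only one of four in another, the gap is 0.5. What counts as an acceptable gap is a product and legal decision, not something the metric decides.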

He also notes the importance of incorporating user feedback into the product development cycle, which can provide valuable insights into perceived biases and unfair outcomes. Finally, hiring a diverse technical workforce will ensure different perspectives are considered and help curb algorithmic and AI bias.

4. Privacy

Though there are many generative AI concerns about privacy regarding data consent and copyrights, here we focus on preserving user data privacy since this can be achieved during the software development life cycle. Generative AI makes data vulnerable in multiple ways: It can leak sensitive user information used as training data, and it can reveal user-inputted information to third-party providers, as happened when Samsung company secrets were exposed.

Hotz has worked with clients wanting to access sensitive or proprietary information from a document chatbot and has protected user-inputted data with a standard template that uses a few key components:

- An open-source LLM hosted either on premises or in a private cloud account (i.e., a VPC)

- A document upload mechanism or store with the private information in the same location (e.g., the same VPC)

- A chatbot interface that implements a memory component (e.g., via LangChain)

“This method makes it possible to achieve a ChatGPT-like user experience in a private manner,” says Hotz. Engineers might apply similar approaches and employ creative problem-solving tactics to design generative AI solutions with privacy as a top priority—though generative AI training data still poses significant privacy challenges since it is sourced from internet crawling.
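The shape of that template can be sketched in plain Python. This is an assumption-laden illustration, not Hotz's template and not LangChain's API: `PrivateDocumentChat` is a hypothetical class, `local_llm` stands for any callable that sends a prompt to a model hosted inside the private environment, and the documents are held in a simple dictionary rather than a real document store.

```python
from collections import deque
from typing import Callable, Dict


class PrivateDocumentChat:
    """Chat over private documents with a bounded conversation memory.

    Nothing here calls an external service: the only model access is the
    `local_llm` callable supplied by the caller (hypothetical, e.g. a
    wrapper around an open-source model running in the same VPC).
    """

    def __init__(self, local_llm: Callable[[str], str],
                 documents: Dict[str, str], max_turns: int = 5):
        self.local_llm = local_llm
        self.documents = documents
        # Memory component: keep only the most recent question/answer pairs.
        self.memory = deque(maxlen=max_turns)

    def build_prompt(self, question: str) -> str:
        docs = "\n".join(f"[{name}] {text}" for name, text in self.documents.items())
        history = "\n".join(f"User: {q}\nAssistant: {a}" for q, a in self.memory)
        return (f"Documents:\n{docs}\n\n"
                f"Conversation so far:\n{history}\n\n"
                f"User: {question}\nAssistant:")

    def ask(self, question: str) -> str:
        reply = self.local_llm(self.build_prompt(question))
        self.memory.append((question, reply))
        return reply
```

The privacy property comes from where the pieces run, not from the code itself: the prompt contains the private documents and chat history, so it must only ever be sent to a model inside the same trusted boundary.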

5. Transparency

Transparency means making generative AI results as understandable and explainable as possible. Without it, users can’t fact-check and evaluate AI-produced content effectively. While we may not be able to solve AI’s black box problem anytime soon, developers can take a few measures to boost transparency in generative AI solutions.

Gupta promoted transparency in a range of features while working on 1nb.ai, a data meta-analysis platform that is helping to bridge the gap between data scientists and business leaders. Using automatic code interpretation, 1nb.ai creates documentation and provides data insights through a chat interface that team members can query.

“For our generative AI feature allowing users to get answers to natural language questions, we provided them with the original reference from which the answer was retrieved (e.g., a data science notebook from their repository).” 1nb.ai also clearly specifies which features on the platform use generative AI, so users have agency and are aware of the risks.

Developers working on chatbots can make similar efforts to reveal sources and indicate when and how AI is used in applications—if they can convince stakeholders to agree to these terms.
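One lightweight way to do both is to never pass a bare string from the model to the UI, and instead wrap every answer in a structure that carries its sources and an AI-use label. The sketch below is a hypothetical illustration of that idea (the `LabeledAnswer` class and its wording are assumptions, not how 1nb.ai implements it).

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class LabeledAnswer:
    """An answer that always travels with its provenance."""
    text: str
    sources: List[str] = field(default_factory=list)
    ai_generated: bool = True

    def render(self) -> str:
        lines = [self.text]
        if self.ai_generated:
            # Tell users when generative AI produced the content.
            lines.append("Note: this answer was generated by AI.")
        if self.sources:
            lines.append("Sources: " + ", ".join(self.sources))
        else:
            # Make missing evidence visible instead of silently omitting it.
            lines.append("Sources: none available")
        return "\n".join(lines)
```

Because the label and source list are part of the data structure, the UI layer cannot forget to display them without an explicit decision to do so.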

Recommendations for Generative AI’s Future in Business

Generative AI ethics are not only important and urgent; they will likely also be profitable. The implementation of ethical business practices such as ESG initiatives is linked to higher revenue. In terms of AI specifically, a survey by The Economist Intelligence Unit found that 75% of executives oppose working with AI service providers whose products lack responsible design.

When we expand the discussion of generative AI ethics from individual projects to entire organizations, many new considerations arise beyond the five pillars of ethical development outlined above. Generative AI will affect society at large, and businesses should start addressing potential dilemmas to stay ahead of the curve. Toptal AI experts suggest that companies might proactively mitigate risks in several ways:

- Set sustainability targets and reduce energy consumption: Gupta points out that the energy cost of training a single LLM like GPT-3 is huge (approximately equal to the yearly electricity consumption of more than 1,000 US households) and that the energy cost of daily GPT queries is even greater. Businesses should invest in initiatives to minimize these negative impacts on the environment.

- Promote diversity in recruiting and hiring processes: “Diverse perspectives will lead to more thoughtful systems,” Hotz explains. Diversity is linked to increased innovation and profitability; by hiring for diversity in the generative AI industry, companies reduce the risk of biased or discriminatory algorithms.

- Create systems for LLM quality monitoring: The performance of LLMs is highly variable, and research has shown significant performance and behavior changes in both GPT-4 and GPT-3.5 from March to June of 2023, Gupta notes. “Developers lack a stable environment to build upon when creating generative AI applications, and companies relying on these models will need to continuously monitor LLM drift to consistently meet product benchmarks.”

- Establish public forums to communicate with generative AI users: Karchi believes that improving (the somewhat lacking) public awareness of generative AI use cases, risks, and detection is essential. Companies should transparently and accessibly communicate their data practices and offer AI training; this empowers users to advocate against unethical practices and helps reduce rising inequalities caused by technological advancements.

- Implement oversight processes and review systems: Digital leaders such as Meta, Google, and Microsoft have all instituted AI review boards, and generative AI will make checks and balances for these systems more important than ever, says Hotz. They play an essential role at various product stages, considering unintended consequences before a project’s start, adding project requirements to mitigate harm, and monitoring and remedying harms after release.
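The LLM quality monitoring recommendation above can be sketched as a small regression check: run a fixed set of benchmark prompts on a schedule and flag the model when its pass rate falls below a recorded baseline. This is a minimal illustration under stated assumptions; `model` is any callable wrapping the LLM in use, and `pass_rate`, `detect_drift`, and the `tolerance` value are hypothetical.

```python
from typing import Callable, List, Tuple

# Each benchmark case pairs a prompt with a function that checks the output.
Case = Tuple[str, Callable[[str], bool]]


def pass_rate(model: Callable[[str], str], cases: List[Case]) -> float:
    """Fraction of benchmark cases whose output passes its check."""
    passed = sum(1 for prompt, check in cases if check(model(prompt)))
    return passed / len(cases)


def detect_drift(model: Callable[[str], str], cases: List[Case],
                 baseline_rate: float, tolerance: float = 0.05) -> dict:
    """Flag drift when the pass rate drops more than `tolerance` below baseline."""
    current = pass_rate(model, cases)
    return {
        "baseline": baseline_rate,
        "current": current,
        "drifted": (baseline_rate - current) > tolerance,
    }
```

In practice the benchmark cases would mirror the product's own acceptance criteria, and the result would feed an alert or dashboard rather than be inspected by hand.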

As the need for responsible business practices expands and the profits of such methods gain visibility, new roles—and even entire business departments—will undoubtedly emerge. At AWS, Hotz has identified FMOps/LLMOps as an evolving discipline of growing importance, with significant overlap with generative AI ethics. FMOps (foundation model operations) includes bringing generative AI applications into production and monitoring them afterward, he explains. “Because FMOps consists of tasks like monitoring data and models, taking corrective actions, conducting audits and risk assessments, and establishing processes for continued model improvement, there is great potential for generative AI ethics to be implemented in this pipeline.”

Regardless of where and how ethical systems are incorporated in each company, it is clear that generative AI’s future will see businesses and engineers alike investing in ethical practices and responsible development. Generative AI has the power to shape the world’s technological landscape, and clear ethical standards are vital to ensuring that its benefits outweigh its risks.

Further Reading on the Toptal Blog:

- Advantages of AI: Using GPT and Diffusion Models for Image Generation

- Ask an AI Engineer: Trending Questions About Artificial Intelligence

- AI in Design: Experts Discuss Practical Applications, Ethics, and What’s Coming Next

- Ask an NLP Engineer: From GPT Models to the Ethics of AI

- Machines and Trust: How to Mitigate AI Bias

Understanding the basics

What is the future impact of generative AI?

Generative AI will likely revolutionize content creation and task automation, transforming industries such as entertainment, advertising, and gaming. Robust ethical and security measures will be necessary to prevent misuse and ensure authenticity.

What is the future of generative AI models?

Future generative AI models have the potential for improved realism and creativity. As these models evolve, they may better mimic human creativity, enhance personalization, and streamline content generation. Managing their ethical use will be a critical challenge.

How close are we to advanced generative AI?

We are currently in the early stages of generative AI technology, with models such as GPT-3.5 and GPT-4 creating realistic text. But achieving complete realism (understanding context in all scenarios) remains challenging.

What are the ethical concerns of generative AI?

Generative AI raises ethical concerns about the creation of deepfakes and misinformation, invasion of privacy, and the lack of transparency in both AI decision-making and accountability for AI-generated content.

Why is generative AI controversial?

Generative AI is controversial due to its potential adverse effects (e.g., misuse in creating deceptive content) and the concerns it brings up regarding job displacement, authorship, and intellectual property ownership.

What are the negative effects of generative AI?

The adverse effects of generative AI include the potential for spreading misinformation, the risk of job displacement in creative industries, the difficulty in identifying AI-generated content, and concerns about content authenticity.

Is generative AI biased?

Yes, generative AI can exhibit bias, as it learns from data that can contain human biases. For this reason, it is important to use diverse, representative data sets and conduct careful model training.

What are the limitations of generative models?

The limitations of generative models include difficulty in handling multi-modal data, the need for large data sets, the potential for generating inappropriate content, and a lack of control over generated content.