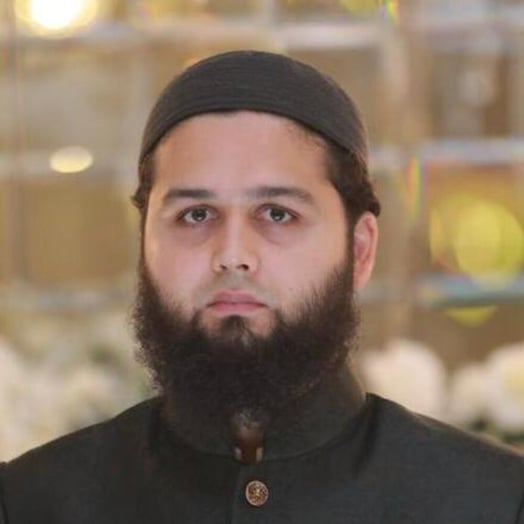

Abdul Samad

Verified Expert in Engineering

Data Engineer and Developer

Abdul is a seasoned software and data engineer who has helped Fortune 500 companies harness the power of their data by building robust pipelines for ingesting and processing vast amounts of data. Skilled in several technologies, programming languages, and frameworks, he is proficient in using OSS and Google Cloud services. Abdul believes that no matter the company, data is indispensable to business, and he is committed to enhancing its power and preserving its integrity.

Preferred Environment

Linux

The most amazing project I've worked on is a disaster recovery mechanism for on-premise clusters that deploys the app to another data center in case of a data-center crash.

Work Experience

Principal Data Engineer

Confiz

- Developed data ingestion and processing pipelines with Storm, Spark, and Flume that read data from Apache Kafka and write it to Hadoop (HDFS), Cosmos DB, and BigQuery.

- Created and managed on-premise clusters for Storm and Flume.

- Implemented auto-scaling for on-premise Storm clusters.

- Used BigQuery and Looker to build analytical dashboards and reports for business personnel and other project members.

- Monitored the health of deployed data pipelines using a Spring Boot scheduler.

- Set up and maintained CI/CD pipelines using Jenkins and Concord for seamless deployment of our data pipelines.

- Halved the cost of the Storm clusters by moving to low-end machines without compromising performance.

- Built a disaster recovery mechanism using Kafka's active-active configuration to avoid downtime if a data center goes down.

- Implemented data observability around the real-time pipeline.

Data Engineer

Pace LLC

- Gathered delivery riders' data and processed it through data pipelines into a data lake on BigQuery.

- Built an analytics platform for business teams using tools like Python, Pandas, and Apache Superset on the Google Cloud Platform.

- Created a mechanism to incentivize riders based on their deliveries.

Experience

Upfront | A Project for Walmart

PACE

Education

Bachelor's Degree in Computer Engineering

National University of Computer and Emerging Sciences - Lahore, Pakistan

Skills

Libraries/APIs

Pandas, PySpark

Tools

Apache Storm, Looker, Kafka Streams, Google Cloud Dataproc, Tableau, Jenkins, Splunk, Apache Beam, Apache Airflow

Frameworks

Apache Spark, Hadoop, Spring

Languages

Java, SQL, Scala, Python

Platforms

Apache Kafka, Google Cloud Platform (GCP), Kubernetes, Jupyter Notebook, Docker, Linux

Paradigms

ETL

Storage

Apache Hive, MySQL, MariaDB, Azure Cosmos DB, PostgreSQL, Cloud Firestore

Other

Google BigQuery, Data Engineering, Big Data, Computer Engineering, Apache Flume, Cloud Storage, Apache Superset
