Demand for Hadoop Developers Continues to Expand

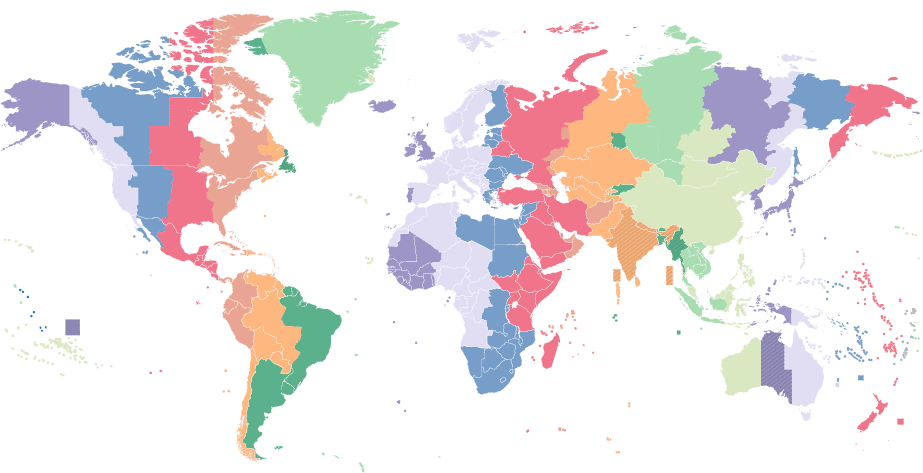

The demand for skilled Apache Hadoop developers is growing rapidly, making it a challenge for hiring managers and CTOs to find the right candidates. As businesses increasingly rely on big data to drive decision-making, the need for robust data processing frameworks like Hadoop has surged. The global Hadoop market, valued at $39.09 billion in 2024, is projected to reach $1,091.01 billion by 2034, highlighting the expanding role of the Hadoop framework in the datasphere.

Finding top talent in Hadoop development is not just about technical expertise. It requires individuals who understand the complexities of big data processing, distributed computing, and the specific needs of your organization. The skill set for an ideal Hadoop programmer includes proficiency in Hadoop’s core components (e.g., HDFS, YARN, MapReduce), familiarity with associated tools (e.g., Hive, Pig, Spark), and experience with data management and analytics. Additionally, candidates should possess problem-solving skills, the ability to work with large datasets, and an understanding of data security practices.

This guide provides detailed insights into the process of identifying, evaluating, and hiring a great Hadoop developer—whether you’re looking for a part-time freelancer or a full-time dedicated developer. We’ll cover the essential skills and qualifications to look for, key Hadoop interview questions to ask, and strategies to assess a candidate’s fit for your organization. By the end of this guide, you’ll be equipped with the knowledge to identify and hire top-tier Hadoop talent who can drive your data initiatives forward.

What Attributes Distinguish Quality Hadoop Developers From Others?

Hiring a quality developer proficient in this open-source framework can transform how your organization handles and leverages big data. An accomplished Hadoop engineer can design, implement, and optimize data processing workflows, ensuring your data operations are efficient and scalable. The following core skills list identifies attributes that set top candidates apart from their peers.

Core Hadoop Developer Skills

Programming and Performance Optimization: Proficiency in core software engineering concepts and programming languages such as Java, Python, and Scala is essential. Expert developers can write clean, efficient code and are familiar with best practices for coding within the Hadoop ecosystem. A top-tier Hadoop software engineer also excels in identifying and solving complex data processing challenges. They can troubleshoot performance bottlenecks, optimize workflows, and provide innovative solutions to improve data processing efficiency. Experts know how to fine-tune Hadoop configurations and optimize MapReduce jobs for better performance. They are familiar with techniques to reduce job execution time and resource usage, ensuring that data processing tasks run smoothly.

Proficiency in Hadoop Ecosystem Components: An expert developer understands the intricacies of HDFS (Hadoop Distributed File System), including its architecture, file management, and how to optimize data storage and retrieval. In addition, proficiency in writing and optimizing MapReduce programs is crucial: A top developer can break down complex data processing tasks into manageable units and write efficient MapReduce jobs. Likewise, a skilled developer can efficiently manage and monitor cluster resources using YARN to ensure optimal performance and job scheduling.
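To illustrate how HDFS storage works, the following Python sketch computes how a file is split into blocks and how much raw cluster capacity it uses once replicated. It assumes the Hadoop defaults of a 128 MB block size (`dfs.blocksize`) and a replication factor of 3 (`dfs.replication`). The helper names are hypothetical, and no Hadoop API is called.

```python
# Minimal sketch: how one file maps onto HDFS blocks and raw disk usage.
# Assumptions: Hadoop's default 128 MB block size and replication factor 3;
# plan_blocks and raw_storage are hypothetical helpers, not Hadoop APIs.

MB = 1024 * 1024
BLOCK_SIZE = 128 * MB  # default value of dfs.blocksize
REPLICATION = 3        # default value of dfs.replication


def plan_blocks(file_size: int, block_size: int = BLOCK_SIZE) -> list[int]:
    """Return the size in bytes of each block the file would occupy."""
    if file_size < 0:
        raise ValueError("file_size must be non-negative")
    full_blocks, remainder = divmod(file_size, block_size)
    sizes = [block_size] * full_blocks
    if remainder:
        # The final block holds only the leftover bytes; it is not padded.
        sizes.append(remainder)
    return sizes


def raw_storage(file_size: int, replication: int = REPLICATION) -> int:
    """Bytes consumed across the cluster once every block is replicated."""
    return file_size * replication


if __name__ == "__main__":
    size = 1024 * MB + 10 * MB  # a 1 GB file plus 10 MB
    blocks = plan_blocks(size)
    print(len(blocks))              # 9: eight full blocks and a 10 MB tail
    print(blocks[-1] // MB)         # 10
    print(raw_storage(size) // MB)  # 3102
```

The same arithmetic gives a quick sanity check when sizing a cluster. At the default replication factor, every terabyte of data needs roughly three terabytes of raw disk, before counting temporary and intermediate files.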

Familiarity With Hadoop-related Tools: Experts often have extensive experience with high-level tools in the Hadoop ecosystem. They may have comprehensive knowledge of systems such as Apache Hive (for SQL-like queries) and Pig (for data-flow scripting). They can write complex queries and scripts to process and analyze data efficiently. In addition, understanding how to use HBase, the nonrelational Hadoop database, for real-time read/write access to large datasets is critical for applications that require quick data retrieval and updates.

Data Management and Security: Handling large volumes of data is a core requirement. Experienced developers are adept at managing and processing massive datasets, ensuring operations remain efficient and scalable even as data volumes grow. A top developer has expertise in data ingestion tools (e.g., Apache Flume, Sqoop) and ETL processes, designing and implementing efficient pipelines to extract, transform, and load data. In addition, in-depth knowledge of Hadoop security issues and mechanisms, including Kerberos authentication, HDFS file permissions, and encryption, protects data against unauthorized access and breaches.
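The file-permission part of this can be sketched in a few lines. HDFS uses a POSIX-like model: each file has an owner, a group, and read/write/execute bits for the owner, the group, and everyone else. This Python sketch models that check under simplifying assumptions. It ignores ACLs and Kerberos, and it assumes a superuser named `hdfs`, which is only a common convention. The `FileMeta` and `can_access` names are hypothetical.

```python
# Minimal sketch of the POSIX-style permission check HDFS applies to files.
# Assumptions: no ACLs or sticky bit, superuser named "hdfs" (a common
# convention only); FileMeta and can_access are hypothetical names.
from dataclasses import dataclass

READ, WRITE, EXECUTE = 4, 2, 1  # bit values within one rwx triad


@dataclass(frozen=True)
class FileMeta:
    owner: str
    group: str
    mode: int  # octal permission bits, e.g. 0o640


def can_access(meta: FileMeta, user: str, groups: set[str], wanted: int,
               superuser: str = "hdfs") -> bool:
    """Return True if `user` (a member of `groups`) may perform `wanted`."""
    if user == superuser:
        return True  # superuser permission checks never fail
    if user == meta.owner:
        triad = (meta.mode >> 6) & 0o7  # owner bits are checked first
    elif meta.group in groups:
        triad = (meta.mode >> 3) & 0o7  # then group bits
    else:
        triad = meta.mode & 0o7         # otherwise "other" bits
    return (triad & wanted) == wanted


if __name__ == "__main__":
    report = FileMeta(owner="alice", group="analysts", mode=0o640)
    print(can_access(report, "bob", {"analysts"}, READ))   # True
    print(can_access(report, "bob", {"analysts"}, WRITE))  # False
    print(can_access(report, "eve", {"guests"}, READ))     # False
```

The owner's bits are checked first. If the owner lacks a permission, membership in the file's group does not grant it, which often causes confusion when debugging access errors.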

Machine Learning and Data Analysis: A standout candidate often has experience with data analysis tools and frameworks. They may have extensive knowledge of machine learning libraries (e.g., Mahout, MLlib) and can implement data analysis and predictive modeling workflows within Hadoop.

Communication and Continuous Learning: Effective communication and teamwork are vital. Expert developers can work closely with data scientists, analysts, and other stakeholders to facilitate project discussions, understand business objectives, and deliver solutions that turn business ideas into actionable outcomes. In addition, the big data landscape is constantly evolving. Top developers stay updated with the latest trends, tools, and best practices in the Hadoop ecosystem. They are proactive in learning and adopting new technologies to enhance their skill set.

A quality candidate brings a combination of technical expertise, project management experience, soft skills, and the ability to handle large-scale data processing tasks. They stand out through their proficiency in the Hadoop ecosystem, their experience with related tools, and their continuous drive to optimize and innovate. By focusing on these attributes, you can find developers who will significantly contribute to your organization’s data-driven initiatives.

Complementary Skills for Hadoop Developers

When hiring a Hadoop developer, look for a wide range of related technology skills that enhance their capabilities and benefit the business in various ways. The following complementary skills can help you identify outstanding candidates:

Java: Java is integral to Hadoop development, because Hadoop itself, including core components such as HDFS and MapReduce, is written in Java. A Hadoop developer proficient in Java can optimize and extend Hadoop’s functionality, ensuring efficient data processing and scalability.

SQL: SQL skills are crucial for Hadoop software developers to interact with data stored in Hadoop using tools like Hive and Impala. Proficiency in SQL enables efficient data querying and analysis, supporting business intelligence and reporting needs.

Hive: Hive provides SQL-like querying capabilities over Hadoop datasets. A Hadoop developer proficient in Hive can optimize queries, improve data processing efficiency, and facilitate data warehousing and ad-hoc analysis, enabling faster decision-making.

Databases: Understanding databases (both relational and NoSQL) is essential for integrating Hadoop with existing data systems. A Hadoop developer with database skills can design robust data pipelines and ensure seamless data integration across platforms, enhancing data consistency and accessibility.

Big data: Understanding the principles and challenges of big data is critical, as is possessing technical data management skills. A Hadoop developer well-versed in big data concepts can design scalable architectures, implement data governance strategies, and leverage emerging technologies to extract actionable insights from large datasets, driving business growth and innovation.

JavaScript: JavaScript is useful for building web-based front ends and interactive visualizations on top of Hadoop data. A Hadoop developer skilled in JavaScript can enhance user interfaces and integrate Hadoop-powered data analytics into web applications, improving data accessibility and user experience.

Node.js: Node.js runs JavaScript on the server side, where it can act as a lightweight API layer between web applications and Hadoop services (for example, through HDFS’s WebHDFS REST API). This can make data-driven applications more responsive without requiring front-end teams to work directly with the cluster.

Cloud Computing: Many organizations deploy Hadoop on cloud platforms like Amazon Web Services (AWS) or Microsoft Azure, leveraging services like Amazon EMR, S3, Glue, and Azure HDInsight for scalable and cost-effective big data processing. A candidate with cloud expertise can optimize data workflows, integrate with cloud storage solutions, and enhance infrastructure efficiency in hybrid and multi-cloud environments.

By incorporating these complementary technology skills into your Hadoop development team, you empower developers to maximize the value of big data, streamline data operations, and deliver scalable solutions that effectively meet evolving business goals.

How Can You Identify the Ideal Hadoop Developer for You?

Identifying the right Hadoop developer for your organization requires a structured approach. Start by clearly defining your organization’s skills gap or problem statement. Determine the specific challenges you face with your current data infrastructure and what you aim to achieve with Hadoop.

- Are you looking to improve data processing speed and efficiency?

- Do you need to handle larger datasets than your current system allows?

- Are there specific use cases, like real-time analytics or machine learning, that Hadoop will address?

Answering these questions can help you narrow down the precise technical skills and development experience required, making it easier to attract suitable candidates while recruiting. Regardless of your use case, look for practical experience in handling and processing large datasets, which is fundamental to Hadoop work. Strong interpersonal skills are also vital for working with cross-functional teams, understanding project requirements, and delivering effective data solutions.

Hadoop Developer Expertise Levels

The talent pool for Hadoop developers varies widely, with a mix of junior candidates entering the field and experienced professionals specializing in advanced data architectures. Differentiating between them can help you make the right hiring decision:

Junior Hadoop developers typically have a basic understanding of Hadoop components and familiarity with common data processing tools. They usually have one or two years of experience, often in supporting roles. They are suited to tasks that require less complexity, such as writing basic MapReduce jobs, assisting in data pipeline development, or performing routine data processing tasks. If your data processing needs are straightforward (e.g., basic ETL tasks), junior talent can handle these effectively.

Mid-level Hadoop developers are proficient in core Hadoop components, have a firm grasp of related tools like Hive and Spark, and possess solid programming skills. They usually have three to five years of hands-on experience that includes complex projects. Mid-level developers are ideal for managing and optimizing complex data workflows, developing more advanced data processing solutions, and providing intermediate-level support for big data initiatives.

Senior Hadoop developers are experts in the Hadoop ecosystem and possess extensive experience with advanced tools, strong programming abilities, and excellent problem-solving skills. They typically have more than five years of experience, often including leadership roles and major development project contributions. Senior developers are best suited for strategic roles, such as designing scalable data architectures, leading big data projects, and optimizing entire data ecosystems. They are also the most qualified to handle sophisticated data processing tasks like real-time analytics, machine learning integration, and critical performance issues.

Whether you need to invest in expert talent or hire junior talent depends on your specific needs. Junior developers have lower salary expectations, making them ideal for organizations with limited budgets or less critical data processing needs. With proper training and mentorship, they can grow with your company and may become mid-level or senior developers. Mid-level developers come with moderate salary expectations, making them a good fit for organizations looking for a balance between expertise and budget. Senior developers have the highest salary expectations, but their significant impact on your organization’s data capabilities can still make them the most cost-effective choice. If you require someone to lead a team, mentor junior staff, and make high-level technical decisions, a senior Hadoop developer is the best choice.

Common Use Cases for Hadoop Developers

Finding the ideal Hadoop developer requires a clear understanding of your organization’s business needs, your broader industry, and how to match the right talent to the right tasks. By focusing on your particular use case, you can find Hadoop developers who will drive your data initiatives and help your organization harness the full potential of big data.

- Real-time Data Processing: For real-time data processing tasks, look for proficiency in Apache Spark, Kafka, and Flink, as these technologies extend Hadoop’s capabilities for low-latency event streaming and analytics. Candidates should have experience building real-time data pipelines and integrating them with batch processing frameworks like Hadoop. This is particularly valuable in industries like finance, telecommunications, and logistics, where real-time fraud detection, network traffic monitoring, and supply chain optimization depend on continuous data streams and high performance.

- Data Warehousing and Business Intelligence (BI): For data warehouse and analytics applications, look for expertise in Apache Hive, Pig, and SQL-based querying within Hadoop. Candidates should have experience designing scalable data warehouses, optimizing query performance, and integrating structured datasets for analytical insights. This is crucial for industries like retail, healthcare, and government, where large-scale reporting, customer insights, and regulatory compliance analysis require efficient data storage and retrieval.

- Machine Learning and Data Science: For machine learning and AI-driven data processing, look for skills in Mahout, MLlib, and deep learning frameworks integrated with Hadoop. Candidates should have experience training large-scale machine learning models, processing high-volume unstructured data, and optimizing predictive analytics workflows. Data science is widely used in e-commerce, healthcare, and financial services, where recommendation algorithms, disease prediction models, and risk assessments rely on massive datasets and scalable machine learning pipelines.

- Data Security and Compliance: For organizations handling sensitive or regulated data, seek expertise in Kerberos authentication, HDFS file permissions, encryption, and governance frameworks. Candidates should have experience implementing secure Hadoop clusters, ensuring data integrity, and maintaining compliance with industry regulations. This is essential in sectors like banking, legal, and healthcare, where financial transactions, legal records, and patient data privacy require robust security measures.

- Scalable Data Processing for Startups: Startups building cost-effective, scalable big data architectures should look for candidates with experience in cloud-based Hadoop deployments, API integrations, and serverless data workflows. Developers should be proficient in AWS (Amazon EMR, S3, Glue) and Azure HDInsight, enabling flexible and efficient data pipelines without heavy infrastructure investment. Startups in fintech, AI, and cybersecurity use Hadoop to support real-time fraud detection, automated analytics, and AI-driven decision-making while maintaining scalability as their data needs grow.
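Real-time fraud detection and traffic monitoring usually come down to aggregating an event stream over time windows. Engines such as Spark Structured Streaming, Flink, and Kafka Streams provide this natively. The following is only a minimal, engine-independent Python sketch of a tumbling-window count, which can help frame a technical conversation with candidates. The event format and the `tumbling_counts` function are hypothetical.

```python
# Minimal sketch of a tumbling-window count, a common building block of
# streaming jobs (e.g. transactions per card per minute for fraud checks).
# Assumptions: events are (timestamp_seconds, key) tuples; late data and
# watermarks are ignored; tumbling_counts is hypothetical, not an engine API.
from collections import Counter, defaultdict
from typing import Iterable


def tumbling_counts(events: Iterable[tuple[int, str]],
                    window_seconds: int = 60) -> dict[int, Counter]:
    """Assign each event to a fixed, non-overlapping window and count keys."""
    windows: dict[int, Counter] = defaultdict(Counter)
    for ts, key in events:
        window_start = ts - (ts % window_seconds)  # align to window boundary
        windows[window_start][key] += 1
    return dict(windows)


if __name__ == "__main__":
    stream = [(5, "card-1"), (30, "card-1"), (59, "card-2"), (61, "card-1")]
    for start, counts in sorted(tumbling_counts(stream).items()):
        print(start, dict(counts))
    # 0 {'card-1': 2, 'card-2': 1}
    # 60 {'card-1': 1}
```

Production engines add watermarks to handle late or out-of-order events. This sketch ignores them, and that handling is a useful topic to probe in interviews.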

Hadoop vs. Apache Spark Developers

You may wonder, “What are the benefits of Hadoop compared to Spark when it comes to large data environments?” To determine whether a Hadoop expert or a Spark developer is best suited for your project, you’ll first need to understand the differences.

When comparing Hadoop and Spark in large data environments, Hadoop offers distinct benefits, particularly in fault tolerance and availability. Hadoop’s ecosystem, built around HDFS and MapReduce, provides high fault tolerance by replicating data across nodes and maintaining availability even when hardware fails. Unlike traditional systems that rely heavily on hardware redundancy for high availability, Hadoop’s design addresses failures at the application layer, minimizing downtime and data-loss risks. Spark excels at in-memory processing and real-time analytics, but it is a processing engine rather than a storage system. It recovers lost in-memory partitions by recomputing them from their lineage, it may need additional configuration (such as checkpointing) for robust fault tolerance, and it typically relies on an underlying storage layer, often HDFS itself, for durable, replicated data.
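The difference between the two recovery strategies can be shown with a toy model. This minimal Python sketch contrasts them. An HDFS-style store keeps extra copies of each block and reads any surviving one. A Spark-style dataset keeps its source plus the chain of transformations (its lineage) and recomputes a lost partition. All names are hypothetical; this is neither a Spark nor a Hadoop API.

```python
# Toy model contrasting two recovery strategies (all names are hypothetical):
#  - HDFS-style replication: keep several copies and read any surviving one;
#  - Spark-style lineage: keep the source plus the transformations, and recompute.
from typing import Callable

Transform = Callable[[list[int]], list[int]]


def read_replicated(replicas: dict[str, bytes], failed: set[str]) -> bytes:
    """Return the block from the first replica whose node is still alive."""
    for node, data in replicas.items():
        if node not in failed:
            return data
    raise OSError("all replicas lost")


def recompute_partition(source: list[int], lineage: list[Transform]) -> list[int]:
    """Rebuild a lost partition by re-applying each recorded transformation."""
    data = source
    for step in lineage:
        data = step(data)
    return data


if __name__ == "__main__":
    block = {"node-a": b"rows", "node-b": b"rows", "node-c": b"rows"}
    print(read_replicated(block, failed={"node-a", "node-b"}))  # b'rows'

    lineage = [lambda xs: [x * 2 for x in xs],       # map: double each value
               lambda xs: [x for x in xs if x > 4]]  # filter: keep values > 4
    print(recompute_partition([1, 2, 3, 4], lineage))  # [6, 8]
```

The trade-off is storage versus compute. Replication costs extra disk but turns recovery into a simple read. Lineage avoids extra copies but pays for recovery with recomputation, which is why long Spark lineages are often cut short by checkpointing to reliable storage such as HDFS.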

For a hiring manager, understanding these distinctions is crucial in making informed decisions. A Hadoop developer’s expertise in building and optimizing fault-tolerant data pipelines using HDFS can ensure robust data processing capabilities, which are critical for large-scale projects where reliability and scalability are paramount. This knowledge allows the hiring manager to assess candidates based on their proficiency in designing resilient data architectures, handling large datasets, and mitigating risks associated with data processing failures. Ultimately, hiring a Hadoop programmer equipped with these skills can significantly enhance an organization’s ability to manage and derive valuable insights from large data environments reliably and efficiently.

How to Write a Hadoop Developer Job Description for Your Project

Writing an effective job description for a Hadoop developer is crucial for attracting the right candidates. Start by clearly outlining your organization’s needs and the specific role the developer will play. Highlight the essential technical skills such as proficiency in Hadoop components (HDFS, YARN, MapReduce), familiarity with related tools (Hive, Pig, Spark, HBase), and strong back-end programming abilities in languages like Java, Python, or Scala. Emphasize experience with data ingestion, ETL processes, and data security.

Detail the problem-solving skills required, including optimizing Hadoop workflows and handling large datasets. Mention the importance of communication and collaboration skills, as the developer will work with cross-functional teams. Specify whether the role is junior, mid-level, or senior, based on the complexity of tasks and the level of expertise needed. Are you seeking a remote developer or one who will work on-site at a specific location? Remote postings may better suit experienced developers who need less support during onboarding, especially if there are time-zone differences.

Potential job openings for someone with this skill include titles like Hadoop Developer, Big Data Developer, Big Data Engineer, Data Architect, and Data Processing Specialist. Each role may focus on different aspects of Hadoop, from developing and managing data pipelines to designing scalable data architectures.

Finally, stating the role’s hourly rate or salary range and other company perks helps draw top candidates to your company. By clearly defining the role and required skills, you can attract qualified candidates who are well-suited to meet your organization’s big data needs.

What Are the Most Important Hadoop Developer Interview Questions?

When vetting candidates, it’s essential to ask Hadoop interview questions that assess their understanding of big data concepts, Hadoop ecosystem components, and their problem-solving abilities. Here are some key questions to consider, along with the desired responses and explanations of their importance:

Describe a challenging Hadoop project you worked on and how you overcame the challenges.

The candidate should provide a detailed explanation of a specific project, the challenges faced, and the steps taken to address those challenges. This question reveals the extent of the candidate’s hands-on experience, problem-solving skills, and ability to handle real-world challenges, indicating their readiness to contribute effectively to your projects.

Explain big data and list its characteristics.

This question is important because it reveals the candidate’s fundamental understanding of big data, a core concept that underpins Hadoop’s utility. A desirable response should highlight the five main characteristics of big data: Volume, Velocity, Variety, Veracity, and Value. Volume refers to the vast amount of data generated every second, while Velocity describes the speed at which this data is generated and processed. Variety encompasses the different types of data (structured, unstructured, semi-structured) from various sources. Veracity is about the quality and accuracy of the data, and Value pertains to the usefulness of data in making decisions. A strong candidate can clearly articulate these characteristics and their relevance in big data environments.

What are the different features of HDFS?

The candidate should provide a comprehensive list of HDFS features, including fault tolerance, high availability, high reliability, replication, and scalability. They should explain that fault tolerance allows HDFS to recover data in case of hardware failure through data replication. High availability ensures data is accessible even if a node fails, while high reliability guarantees that data is stored reliably and can be accurately retrieved. Replication involves copying data across multiple nodes to ensure fault tolerance and reliability, and scalability means the system can scale horizontally by adding more nodes to handle increasing amounts of data. A candidate who can succinctly explain each feature demonstrates a solid understanding of HDFS. This question assesses the candidate’s technical knowledge of HDFS, which is crucial for ensuring reliable and scalable data storage in a Hadoop environment.
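A candidate’s explanation of replication can be checked against a toy model like the one below. In this minimal Python sketch, each block is placed on three distinct DataNodes. When one node fails, every affected block is copied to another live node until it is back at full replication, roughly what the NameNode schedules in a real cluster after it stops receiving heartbeats from a DataNode. Round-robin placement and all function names are simplifying assumptions, since real HDFS uses rack-aware placement.

```python
# Minimal sketch of HDFS-style block replication and recovery after a node failure.
# Assumptions: replication factor 3, round-robin placement (real HDFS is
# rack-aware), and hypothetical function names; no Hadoop API is used.

REPLICATION = 3


def place_blocks(block_ids: list[str], nodes: list[str],
                 replication: int = REPLICATION) -> dict[str, set[str]]:
    """Assign each block to `replication` distinct nodes, round-robin."""
    if replication > len(nodes):
        raise ValueError("not enough nodes for the requested replication")
    return {
        block: {nodes[(i + r) % len(nodes)] for r in range(replication)}
        for i, block in enumerate(block_ids)
    }


def handle_node_failure(placement: dict[str, set[str]], failed: str,
                        live_nodes: list[str],
                        replication: int = REPLICATION) -> dict[str, set[str]]:
    """Drop the failed node and copy under-replicated blocks to other live nodes."""
    healed = {}
    for block, holders in placement.items():
        survivors = holders - {failed}
        if not survivors:
            raise OSError(f"block {block} lost: no surviving replica to copy from")
        for node in live_nodes:
            if len(survivors) >= replication:
                break
            if node != failed and node not in survivors:
                survivors.add(node)  # new replica copied from a survivor
        healed[block] = survivors
    return healed


if __name__ == "__main__":
    nodes = ["dn1", "dn2", "dn3", "dn4"]
    placement = place_blocks(["b0", "b1"], nodes)
    healed = handle_node_failure(placement, "dn2", ["dn1", "dn3", "dn4"])
    for block in sorted(healed):
        print(block, sorted(healed[block]))
    # b0 ['dn1', 'dn3', 'dn4']
    # b1 ['dn1', 'dn3', 'dn4']
```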

What is shuffling in MapReduce?

This question is essential to understand the candidate’s grasp of the MapReduce process, which is central to Hadoop’s data processing capability. MapReduce consists of mapping and reducing stages, with shuffling and sorting stages occurring in between them. A strong response will detail that shuffling is the process of transferring data from the mapping phase to the reducing phase, involving sorting and grouping the output of the Mapper tasks based on keys. The purpose of shuffling is to ensure that all data corresponding to a particular key is sent to the same Reducer, which is crucial for aggregating and processing the data correctly. The candidate should explain this process clearly and emphasize its importance in the MapReduce framework.
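The map, shuffle, and reduce stages can be simulated in memory to show where shuffling fits. This Python sketch runs a word count. The mapper emits `(word, 1)` pairs. The shuffle routes each key to a single reducer by hashing it, then groups and sorts the values by key. The reducer sums them. It is only a model under stated assumptions: `zlib.crc32` stands in for Hadoop’s default hash partitioning, and the function names are hypothetical rather than Hadoop’s `Mapper`/`Reducer` API.

```python
# Minimal in-memory model of the MapReduce flow: map -> shuffle/sort -> reduce.
# Assumptions: a word-count job; zlib.crc32 stands in for Hadoop's default hash
# partitioner; all names are hypothetical, not Hadoop's Mapper/Reducer API.
import zlib
from collections import defaultdict
from typing import Iterable, Iterator


def mapper(line: str) -> Iterator[tuple[str, int]]:
    """Emit (word, 1) for every word in an input line."""
    for word in line.lower().split():
        yield word, 1


def partition(key: str, num_reducers: int) -> int:
    """Choose the reducer for a key; the same key always maps to the same reducer."""
    return zlib.crc32(key.encode("utf-8")) % num_reducers


def shuffle(pairs: Iterable[tuple[str, int]],
            num_reducers: int) -> list[list[tuple[str, list[int]]]]:
    """Route pairs to reducers, then group values and sort by key per reducer."""
    buckets: list[dict[str, list[int]]] = [defaultdict(list) for _ in range(num_reducers)]
    for key, value in pairs:
        buckets[partition(key, num_reducers)][key].append(value)
    return [sorted(bucket.items()) for bucket in buckets]


def reducer(key: str, values: list[int]) -> tuple[str, int]:
    """Aggregate every value that was routed to this key."""
    return key, sum(values)


def run_job(lines: list[str], num_reducers: int = 2) -> dict[str, int]:
    mapped = (pair for line in lines for pair in mapper(line))
    results: dict[str, int] = {}
    for reducer_input in shuffle(mapped, num_reducers):
        for key, values in reducer_input:
            word, total = reducer(key, values)
            results[word] = total
    return results


if __name__ == "__main__":
    counts = run_job(["big data big", "data lake"])
    print(sorted(counts.items()))  # [('big', 2), ('data', 2), ('lake', 1)]
```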

How would you optimize the performance of a Hadoop job?

In answering this question, the candidate should discuss techniques such as configuring job parameters, using combiners, tuning the number of Mappers and Reducers, and optimizing data locality. These strategies are crucial for enhancing the efficiency and speed of Hadoop jobs. This question helps determine the candidate’s practical skills in performance optimization, which can significantly impact the efficiency of Hadoop operations.
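A small model shows why combiners matter. This Python sketch counts how many intermediate records leave two map tasks with and without local pre-aggregation. The input data and function names are hypothetical. In a real Java job, the combiner is registered with `Job.setCombinerClass(...)`.

```python
# Minimal sketch: how a combiner shrinks the data that must be shuffled.
# Assumptions: a word-count style job with two map tasks; names are hypothetical.
# A combiner is only safe when the reduce logic is associative and commutative
# (e.g. sum, max), because the framework may run it zero, one, or several times.
from collections import Counter


def map_output(split: list[str]) -> list[tuple[str, int]]:
    """Raw mapper output for one input split: one (word, 1) record per word."""
    return [(word, 1) for line in split for word in line.split()]


def combine(records: list[tuple[str, int]]) -> list[tuple[str, int]]:
    """Map-side pre-aggregation, applied before records cross the network."""
    totals: Counter = Counter()
    for word, count in records:
        totals[word] += count
    return list(totals.items())


if __name__ == "__main__":
    splits = [["error warn error", "error"], ["warn warn info"]]
    raw = [map_output(split) for split in splits]
    combined = [combine(records) for records in raw]
    print(sum(len(r) for r in raw))       # 7 records shuffled without a combiner
    print(sum(len(c) for c in combined))  # 4 records shuffled with a combiner
```

The final totals are identical either way. Only the volume of shuffled data changes, which is why a strong candidate will mention combiners alongside data locality.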

Can you explain the role of YARN in Hadoop?

This question is important because it assesses the candidate’s understanding of resource management in Hadoop, which is vital for keeping Hadoop clusters running efficiently. The ideal response will describe YARN (Yet Another Resource Negotiator) as the resource management layer in Hadoop. It manages and schedules resources for various applications running in a Hadoop cluster, allowing for efficient resource utilization and scalability.
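It helps to know roughly what scheduling involves when judging a candidate’s answer. In YARN, each application’s ApplicationMaster requests containers (bundles of memory and CPU vcores) from the ResourceManager, which grants them on NodeManagers with spare capacity. The following Python sketch models only that last step with a naive first-fit policy. The real ResourceManager delegates this to pluggable schedulers such as the Capacity Scheduler or the Fair Scheduler, and all names here are hypothetical.

```python
# Minimal sketch of YARN-style container allocation with a first-fit policy.
# Assumptions: one queue, memory and vcores as the only resources, hypothetical
# names; the real ResourceManager uses pluggable schedulers instead.
from dataclasses import dataclass
from typing import Optional


@dataclass
class NodeManager:
    name: str
    free_mb: int
    free_vcores: int


@dataclass
class ContainerRequest:
    app: str
    mb: int
    vcores: int


def allocate(requests: list[ContainerRequest],
             nodes: list[NodeManager]) -> list[tuple[ContainerRequest, Optional[str]]]:
    """Grant each request on the first node with enough free memory and vcores."""
    grants: list[tuple[ContainerRequest, Optional[str]]] = []
    for req in requests:
        granted_on = None  # stays None (pending) if no node has room
        for node in nodes:
            if node.free_mb >= req.mb and node.free_vcores >= req.vcores:
                node.free_mb -= req.mb
                node.free_vcores -= req.vcores
                granted_on = node.name
                break
        grants.append((req, granted_on))
    return grants


if __name__ == "__main__":
    cluster = [NodeManager("nm1", 4096, 4), NodeManager("nm2", 2048, 2)]
    asks = [ContainerRequest("etl", 3072, 2),
            ContainerRequest("etl", 2048, 2),
            ContainerRequest("adhoc", 2048, 1)]
    for req, node in allocate(asks, cluster):
        print(req.app, req.mb, node)
    # etl 3072 nm1
    # etl 2048 nm2
    # adhoc 2048 None
```

First-fit is deliberately simplistic. Asking a candidate how the Capacity or Fair Scheduler would treat the pending request is a good way to move the conversation toward queues, fairness, and preemption.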

Focusing on these key questions can effectively gauge a candidate’s expertise in Hadoop, understanding of core concepts, and ability to apply knowledge to practical situations. This approach will help you identify candidates who are well-equipped to handle your organization’s big data needs.

Why Do Companies Hire Hadoop Developers?

In this hiring guide, we’ve covered the essential aspects of identifying, evaluating, and hiring Hadoop developers. But why hire a developer in the first place? Companies seek these experts due to the exponential growth of data and the need for efficient processing and analysis. Hadoop programmers possess specialized skills in handling big data through Hadoop’s robust ecosystem, including components like HDFS, YARN, and MapReduce, and tools such as Hive, Pig, and Spark.

Hadoop developers stand out for their ability to manage and optimize large-scale data workflows, ensuring data is processed quickly and accurately. They bring valuable expertise in designing scalable data architectures, improving data processing efficiency, and solving complex data-related problems. Their ability to leverage big data technologies translates into actionable insights, driving better decision-making and strategic business initiatives.

The business value of Hadoop developers lies in their capacity to turn vast amounts of raw data into valuable information, enabling companies to gain a competitive edge. They help organizations uncover patterns, predict trends, and make informed decisions, ultimately leading to improved operational efficiency, enhanced customer experiences, and increased profitability. Furthermore, their expertise in data security and compliance ensures that data is handled responsibly and in line with regulatory requirements.

In summary, hiring Hadoop developers is crucial for companies looking to harness the power of big data. Their specialized skills, problem-solving abilities, and strategic impact on data-driven initiatives make them indispensable assets in today’s data-centric business landscape. By effectively identifying and hiring the right Hadoop developers, companies can thrive in the era of big data and gain a significant competitive advantage.

Is Hiring a Hadoop Developer a Future-proof Solution for Long-term Projects?

Hiring a Hadoop developer can be a future-proof choice for long-term projects, particularly in big data and scalable data processing. One of Hadoop’s core strengths is HDFS, which stores data across the cluster so that processing frameworks can work on it in parallel. HDFS distributes data across multiple nodes in a cluster, enabling high throughput and allowing applications to work with large datasets effectively. This architecture not only supports massive scalability but also provides fault tolerance by replicating data across different nodes. When a node fails or a block is found to be corrupt (HDFS verifies data with checksums), HDFS restores the lost replicas from surviving copies, keeping data available without compromising its integrity.

These capabilities make Hadoop ideal for long-term projects that require handling vast amounts of data and performing complex analytics. As businesses continue to generate and utilize more data, the demand for efficient data storage and processing solutions like Hadoop remains strong. Moreover, Hadoop’s ecosystem, including tools like MapReduce, Hive, and Spark, provides comprehensive support for diverse data processing needs, from batch processing to real-time data analytics and machine learning.