Flexible A/B Testing with AWS Lambda@Edge

One of the new possibilities offered by Lambda@Edge is the ability to implement server-side A/B testing using Lambdas on CloudFront’s edge servers.

In this article, Toptal Full-stack Developer Georgios Boutsioukis guides you through the process and outlines the pros and cons of A/B testing with Lambda@Edge.

Georgios is a full-stack developer with more than eight years of experience. He worked at CERN and as a member of the mobile API team at Booking.com.

Content delivery networks (CDNs) like Amazon CloudFront were, until recently, a relatively simple part of web infrastructure. Traditionally, web applications were designed around them, treating them mostly as a passive cache instead of an active component.

Lambda@Edge and similar technologies have changed all that and opened up a whole world of possibilities by introducing a new layer of logic between a web application and its users. Available since mid-2017, Lambda@Edge is a new feature in AWS that introduces the concept of executing code in the form of Lambdas directly on CloudFront’s edge servers.

One of the new possibilities that Lambda@Edge offers is a clean solution to server-side A/B testing. A/B testing is a common method of testing the performance of multiple variations of a website by showing them at the same time to different segments of the website’s audience.

What Lambda@Edge Means for A/B Testing

The main technical challenge in A/B testing is to properly segment the incoming traffic without affecting either the quality of the experiment’s data or the website itself in any way.

There are two main routes for implementing it: client-side and server-side.

- Client-side involves running a bit of JavaScript code in the end-user’s browser that chooses which variant will be shown. There are a couple of significant downsides to this approach. Most notably, it can both slow down rendering and cause flickering or other rendering issues. This means websites that seek to optimize their loading time or have high standards for their UX will tend to avoid this approach.

- Server-side A/B testing does away with most of these issues, as the decision on which variant to return is taken entirely on the side of the host. The browser simply renders each variant normally as if it were the standard version of the website.

With that in mind, you might wonder why everyone doesn’t simply use server-side A/B testing. Unfortunately, the server-side approach is not as easy to implement as the client-side approach, and setting up an experiment often requires some form of intervention on the server-side code or the server configuration.

To complicate things even further, modern web applications like SPAs are often served as bundles of static code directly from an S3 bucket without even involving a web server. Even when a web server is involved, it’s often not feasible to change the server-side logic to set up an A/B test. The presence of a CDN poses yet another obstacle, as caching might affect segment sizes or, conversely, this kind of traffic segmentation can lower the CDN’s performance.

What Lambda@Edge offers is a way to route user requests across variants of an experiment before they even hit your servers. A basic example of this use case can be found directly in the AWS documentation. While useful as a proof of concept, a production environment with multiple concurrent experiments would probably need something more flexible and robust.

Moreover, after working a bit with Lambda@Edge, you will probably realize that there are some nuances to be aware of when building out your architecture.

For example, deploying the edge Lambdas takes time, and their logs are distributed across AWS regions. Be mindful of this if you need to debug your configuration to avoid 502 errors.
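To make the logging point concrete: Lambda@Edge writes its logs to CloudWatch log groups named /aws/lambda/us-east-1.<function name>, and each log group is created in the region closest to the edge location that ran the function. The sketch below only builds the names to look for. The helper names and the region list are assumptions made for illustration, not part of this tutorial's code.

```javascript
// Hypothetical helper: the CloudWatch log group name that Lambda@Edge
// uses for a replicated function.
const edgeLogGroupName = (functionName) =>
  `/aws/lambda/us-east-1.${functionName}`;

// Example region list (an assumption; use the regions your viewers hit)
const regionsToCheck = ['us-east-1', 'eu-west-1', 'eu-central-1'];

// One entry per region where the log group may exist
const logLocations = (functionName) =>
  regionsToCheck.map((region) => ({
    region,
    logGroup: edgeLogGroupName(functionName),
  }));

console.log(logLocations('abtesting-lambda-vreq'));
```

If a request fails with a 502, the relevant log entry will be in the region nearest to the viewer who made the request, which is not necessarily the region where the Lambda was created.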

This tutorial will introduce AWS developers to a way of implementing server-side A/B testing using Lambda@Edge in a manner that can be reused across experiments without modifying and redeploying the edge Lambdas. It builds on the approach of the example in the AWS documentation and other similar tutorials, but instead of hardcoding the traffic allocation rules in the Lambda itself, the rules are periodically retrieved from a configuration file on S3 that you can change at any time.

Overview of Our Lambda@Edge A/B Testing Approach

The basic idea behind this approach is to have the CDN assign each user to a segment and then route the user to the associated origin configuration. CloudFront allows a distribution to point to either S3 or custom origins, and in this approach, we support both.

The mapping of segments to experiment variants will be stored in a JSON file on S3. S3 is chosen here for simplicity, but this could also be retrieved from a database or any other form of storage that the edge Lambda can access.

Note: There are some limitations to fetching external data from an edge Lambda. See the article “Leveraging external data in Lambda@Edge” on the AWS Blog for more information.

Implementation

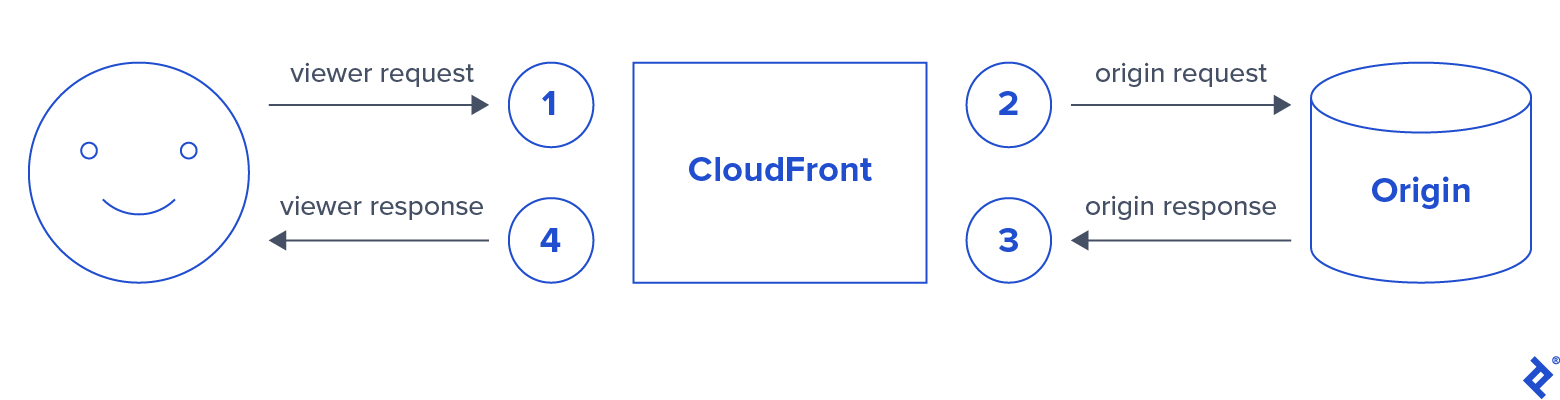

Lambda@Edge can be triggered by four different types of CloudFront events: viewer request, origin request, origin response, and viewer response.

In this case, we’ll be running a Lambda on each of the following three events:

- Viewer request

- Origin request

- Viewer response

Each event will implement a step in the following process:

- abtesting-lambda-vreq: Most of the logic is contained in this Lambda. First, a unique ID cookie is read or generated for the incoming request, and it is then hashed down to a value in the [0, 1) range. The traffic allocation map is then fetched from S3 and cached across executions. Finally, the hashed value is used to choose an origin configuration, which is passed as a JSON-encoded header to the next Lambda.

- abtesting-lambda-oreq: This reads the origin configuration from the previous Lambda and routes the request accordingly.

- abtesting-lambda-vres: This just adds the Set-Cookie header to save the unique ID cookie on the user’s browser.

Let’s also set up three S3 buckets, two of which will contain the contents of each of the experiment’s variants, while the third will contain the JSON file with the traffic allocation map.

For this tutorial, the buckets will look like this:

- abtesting-ttblog-a (public)
  - index.html

- abtesting-ttblog-b (public)
  - index.html

- abtesting-ttblog-map (private)
  - map.json

Source Code

First, let’s start with the traffic allocation map:

map.json

    {
      "segments": [
        {
          "weight": 0.7,
          "host": "abtesting-ttblog-a.s3.amazonaws.com",
          "origin": {
            "s3": {
              "authMethod": "none",
              "domainName": "abtesting-ttblog-a.s3.amazonaws.com",
              "path": "",
              "region": "eu-west-1"
            }
          }
        },
        {
          "weight": 0.3,
          "host": "abtesting-ttblog-b.s3.amazonaws.com",
          "origin": {
            "s3": {
              "authMethod": "none",
              "domainName": "abtesting-ttblog-b.s3.amazonaws.com",
              "path": "",
              "region": "eu-west-1"
            }
          }
        }
      ]
    }

Each segment has a traffic weight, which will be used to allocate a corresponding amount of traffic. We also include the origin configuration and host. The origin configuration format is described in the AWS documentation.
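Because the map can be edited at any time, a typo in it can silently skew the experiment. For example, weights that add up to less than 1 leave some users without a segment. The following is a minimal sketch of a check you could run before uploading a new map. The function validateSegmentMap is a hypothetical helper, not part of this tutorial's Lambdas, and the rules it enforces are assumptions about what a usable map looks like.

```javascript
// Hypothetical helper: returns a list of problems found in a segment map.
// An empty list means the map looks usable.
const validateSegmentMap = (map) => {
  const segments = map && Array.isArray(map.segments) ? map.segments : null;
  if (!segments || segments.length === 0) {
    return ['map must contain a non-empty "segments" array'];
  }

  const problems = [];
  let total = 0;
  segments.forEach((segment, i) => {
    if (typeof segment.weight !== 'number' || !(segment.weight > 0)) {
      problems.push(`segment ${i}: weight must be a positive number`);
    } else {
      total += segment.weight;
    }
    if (typeof segment.host !== 'string' || segment.host === '') {
      problems.push(`segment ${i}: host is missing`);
    }
    const origin = segment.origin || {};
    if (!origin.s3 && !origin.custom) {
      problems.push(`segment ${i}: origin needs an "s3" or "custom" entry`);
    }
  });

  // Allow for floating-point rounding when summing the weights
  if (Math.abs(total - 1) > 1e-9) {
    problems.push(`weights add up to ${total}, expected 1`);
  }
  return problems;
};

// Abbreviated version of the map shown above
const exampleMap = {
  segments: [
    {
      weight: 0.7,
      host: 'abtesting-ttblog-a.s3.amazonaws.com',
      origin: { s3: { domainName: 'abtesting-ttblog-a.s3.amazonaws.com' } },
    },
    {
      weight: 0.3,
      host: 'abtesting-ttblog-b.s3.amazonaws.com',
      origin: { s3: { domainName: 'abtesting-ttblog-b.s3.amazonaws.com' } },
    },
  ],
};

console.log(validateSegmentMap(exampleMap));
```

A check like this fits naturally into whatever script or pipeline uploads map.json, so that a broken map never reaches the bucket.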

abtesting-lambda-vreq

    'use strict';

    const aws = require('aws-sdk');

    const COOKIE_KEY = 'abtesting-unique-id';
    const HEADER_KEY = 'x-abtesting-segment-origin';

    const s3 = new aws.S3({ region: 'eu-west-1' });
    const s3Params = {
      Bucket: 'abtesting-ttblog-map',
      Key: 'map.json',
    };

    const SEGMENT_MAP_TTL = 3600000; // TTL of 1 hour, in milliseconds

    const fetchSegmentMapFromS3 = async () => {
      const response = await s3.getObject(s3Params).promise();
      return JSON.parse(response.Body.toString('utf-8'));
    };

    // Cache the segment map across Lambda invocations
    let _segmentMap;
    let _lastFetchedSegmentMap = 0;

    const fetchSegmentMap = async () => {
      if (!_segmentMap || (Date.now() - _lastFetchedSegmentMap) > SEGMENT_MAP_TTL) {
        _segmentMap = await fetchSegmentMapFromS3();
        _lastFetchedSegmentMap = Date.now();
      }
      return _segmentMap;
    };

    // Just generate a random UUID
    const getRandomId = () => {
      return 'xxxxxxxx-xxxx-4xxx-yxxx-xxxxxxxxxxxx'.replace(/[xy]/g, (c) => {
        const r = Math.random() * 16 | 0;
        const v = c === 'x' ? r : (r & 0x3 | 0x8);
        return v.toString(16);
      });
    };

    // This function will hash any string (our random UUID in this case)
    // to a [0, 1) range, in steps of 0.01
    const hashToInterval = (s) => {
      let hash = 0;
      let i = 0;
      while (i < s.length) {
        // "<< 0" keeps the running hash a signed 32-bit integer
        hash = ((hash << 5) - hash + s.charCodeAt(i++)) << 0;
      }
      // Shift the signed hash into [0, 2^32) so the result is never negative
      return (hash + 2147483648) % 100 / 100;
    };

    const getCookie = (headers, cookieKey) => {
      if (headers.cookie) {
        for (const cookieHeader of headers.cookie) {
          const cookies = cookieHeader.value.split(';');
          for (const cookie of cookies) {
            // Cookies are separated by "; ", so trim before comparing keys
            const [key, val] = cookie.trim().split('=');
            if (key === cookieKey) {
              return val;
            }
          }
        }
      }
      return null;
    };

    // Walk the cumulative weights and return the first segment that covers p
    const getSegment = async (p) => {
      const segmentMap = await fetchSegmentMap();
      let weight = 0;
      for (const segment of segmentMap.segments) {
        weight += segment.weight;
        if (p < weight) {
          return segment;
        }
      }
      console.error(`No segment for value ${p}. Check the segment map.`);
      return null;
    };

    exports.handler = async (event) => {
      const request = event.Records[0].cf.request;
      const headers = request.headers;

      let uniqueId = getCookie(headers, COOKIE_KEY);
      if (uniqueId === null) {
        // This is what happens on the first visit: we'll generate a new
        // unique ID, then leave it in the cookie header for the
        // viewer response Lambda to set permanently later
        uniqueId = getRandomId();
        const cookie = `${COOKIE_KEY}=${uniqueId}`;
        headers.cookie = headers.cookie || [];
        headers.cookie.push({ key: 'Cookie', value: cookie });
      }

      // Get a value between 0 and 1 and use it to
      // resolve the traffic segment
      const p = hashToInterval(uniqueId);
      const segment = await getSegment(p);
      if (!segment) {
        // No matching segment: drop any client-supplied routing header and
        // let the request go to the distribution's default origin
        delete headers[HEADER_KEY];
        return request;
      }

      // Pass the origin data as a header to the origin request Lambda
      // The header key below is whitelisted in CloudFront
      const headerValue = JSON.stringify({
        host: segment.host,
        origin: segment.origin
      });
      headers[HEADER_KEY] = [{
        key: 'X-ABTesting-Segment-Origin',
        value: headerValue
      }];

      // The handler is async, so the request is returned
      // instead of being passed to a callback
      return request;
    };

Here, we explicitly generate a unique ID for this tutorial, but it’s pretty common for most websites to have some other client ID lying around that could be used instead. This would also eliminate the need for the viewer response Lambda.

For performance reasons, the traffic allocation rules are cached across Lambda invocations instead of being fetched from S3 on every request. In this example, we set up a cache TTL of 1 hour.
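One thing the simple cache does not cover is a failed refresh: if S3 is unreachable when the TTL expires, the viewer request Lambda throws and CloudFront returns an error. A possible variation, sketched below, keeps serving the last good map when a refresh fails. The factory createCachedLoader and its injectable clock are hypothetical additions, not part of the code above.

```javascript
// Hypothetical factory: wraps an async loader in a TTL cache that keeps
// serving the last good value if a refresh fails.
const createCachedLoader = (loader, ttlMs, now = Date.now) => {
  let value;
  let hasValue = false;
  let fetchedAt = 0;

  return async () => {
    if (hasValue && now() - fetchedAt <= ttlMs) {
      return value; // still fresh
    }
    try {
      value = await loader();
      hasValue = true;
      fetchedAt = now();
    } catch (err) {
      if (!hasValue) {
        throw err; // nothing cached yet, so the caller has to handle it
      }
      // fetchedAt is left unchanged, so the next call retries the refresh
      console.error('Refresh failed, serving stale value:', err.message);
    }
    return value;
  };
};

// Possible usage in the viewer request Lambda (names from the code above):
// const fetchSegmentMap = createCachedLoader(fetchSegmentMapFromS3, SEGMENT_MAP_TTL);
```

The trade-off is that, while S3 stays unavailable, every invocation retries the fetch and pays its latency. Whether that is acceptable depends on your traffic, so treat this as a starting point rather than a drop-in replacement.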

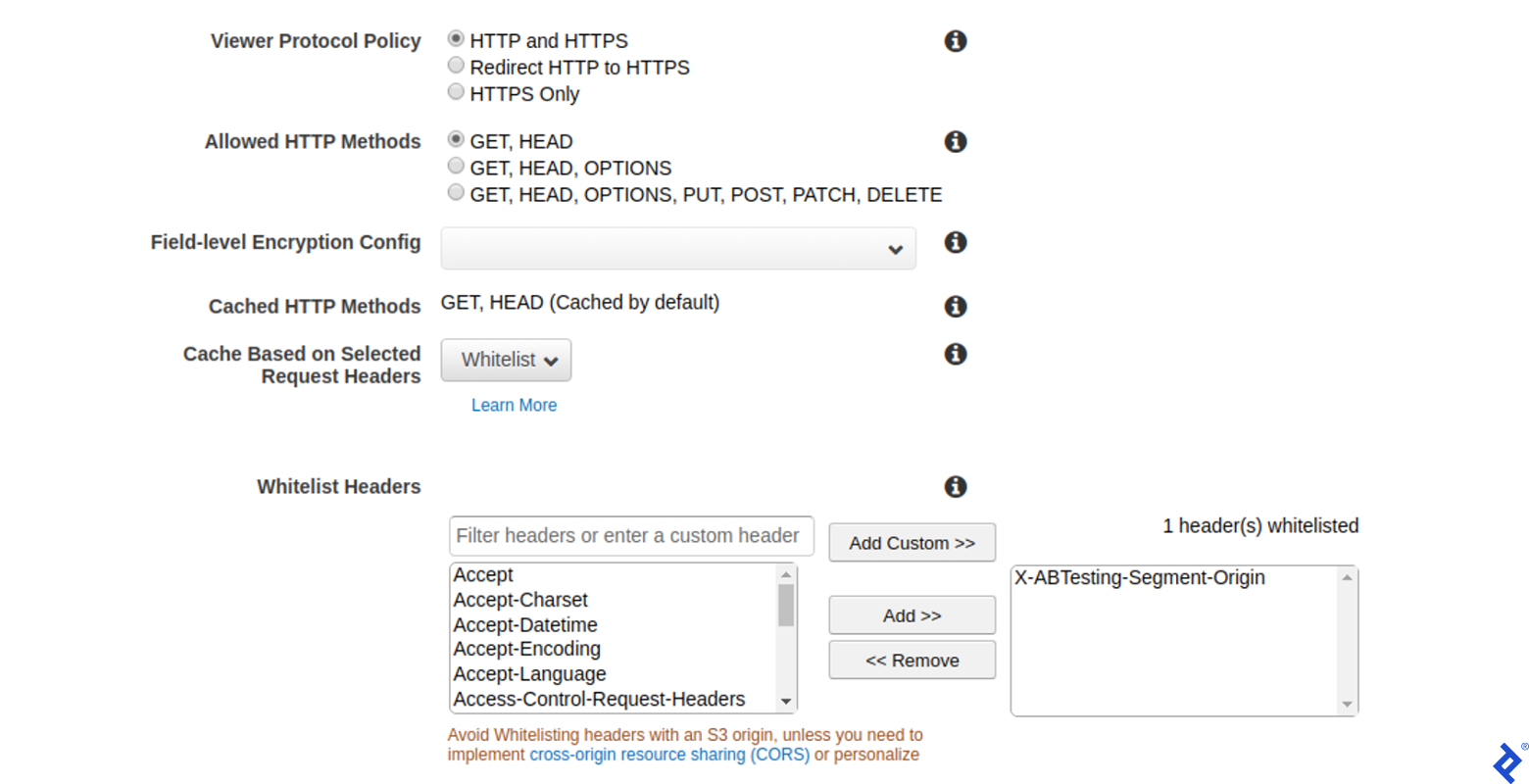

Note that the X-ABTesting-Segment-Origin header needs to be whitelisted in CloudFront; otherwise, it will be wiped from the request before it reaches the origin request Lambda.

abtesting-lambda-oreq

    'use strict';

    const HEADER_KEY = 'x-abtesting-segment-origin';

    // Origin Request handler
    exports.handler = (event, context, callback) => {
      const request = event.Records[0].cf.request;
      const headers = request.headers;

      const headerValue = headers[HEADER_KEY]
        && headers[HEADER_KEY][0]
        && headers[HEADER_KEY][0].value;

      if (headerValue) {
        const segment = JSON.parse(headerValue);
        headers['host'] = [{ key: 'host', value: segment.host }];
        request.origin = segment.origin;
      }

      callback(null, request);
    };

The origin request Lambda is pretty straightforward. The origin configuration and host are read from the X-ABTesting-Segment-Origin header that was generated in the previous step and injected into the request. This instructs CloudFront to route the request to the corresponding origin in case of a cache miss.
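The example map only uses S3 origins, but since the origin request Lambda copies segment.origin into the request unchanged, a segment can also describe a custom origin. Here is a sketch of what such a segment might look like. The field names follow the custom origin structure of the CloudFront origin request event, while the helper customOriginSegment, the placeholder domain, and the timeout and TLS values are assumptions chosen for illustration.

```javascript
// Hypothetical helper: build a map.json segment that points at a custom
// (non-S3) origin. The values below are illustrative defaults.
const customOriginSegment = (weight, domainName) => ({
  weight,
  host: domainName,
  origin: {
    custom: {
      domainName,
      port: 443,
      protocol: 'https',
      path: '',
      sslProtocols: ['TLSv1.2'],
      readTimeout: 30,
      keepaliveTimeout: 5,
      customHeaders: {},
    },
  },
});

// "variant-b.example.com" is a placeholder domain
const segment = customOriginSegment(0.3, 'variant-b.example.com');
console.log(JSON.stringify(segment, null, 2));
```

The printed JSON can be pasted into the segments array of map.json in place of one of the S3 entries.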

abtesting-lambda-vres

    'use strict';

    const COOKIE_KEY = 'abtesting-unique-id';

    const getCookie = (headers, cookieKey) => {
      if (headers.cookie) {
        for (const cookieHeader of headers.cookie) {
          const cookies = cookieHeader.value.split(';');
          for (const cookie of cookies) {
            // Cookies are separated by "; ", so trim before comparing keys
            const [key, val] = cookie.trim().split('=');
            if (key === cookieKey) {
              return val;
            }
          }
        }
      }
      return null;
    };

    const setCookie = (response, cookie) => {
      console.log(`Setting cookie ${cookie}`);
      // Append instead of overwriting, so cookies set by the origin are kept
      response.headers['set-cookie'] = response.headers['set-cookie'] || [];
      response.headers['set-cookie'].push({
        key: 'Set-Cookie',
        value: cookie
      });
    };

    exports.handler = (event, context, callback) => {
      const request = event.Records[0].cf.request;
      const headers = request.headers;
      const response = event.Records[0].cf.response;

      const cookieVal = getCookie(headers, COOKIE_KEY);
      if (cookieVal != null) {
        setCookie(response, `${COOKIE_KEY}=${cookieVal}`);
      } else {
        console.log(`no ${COOKIE_KEY} cookie`);
      }

      callback(null, response);
    };

Finally, the viewer response Lambda is responsible for returning the generated unique ID cookie in the Set-Cookie header. As mentioned above, if a unique client ID is already used, this Lambda can be completely omitted.

In fact, even in this case, the cookie can be set with a redirect by the viewer request Lambda. However, this could add some latency, so in this case, we prefer to do it in a single request-response cycle.
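For completeness, the redirect alternative could look roughly like the sketch below: when the cookie is missing, the viewer request Lambda returns a generated 302 response instead of the request. The helper buildCookieRedirect is hypothetical, and the Path=/ attribute is an assumption about how you would want the cookie scoped.

```javascript
// Hypothetical helper: build a generated 302 response that sets the unique
// ID cookie and sends the browser back to the URL it asked for.
const buildCookieRedirect = (request, cookieKey, uniqueId) => {
  const location = request.querystring
    ? `${request.uri}?${request.querystring}`
    : request.uri;

  return {
    status: '302',
    statusDescription: 'Found',
    headers: {
      location: [{ key: 'Location', value: location }],
      'set-cookie': [{
        key: 'Set-Cookie',
        value: `${cookieKey}=${uniqueId}; Path=/`,
      }],
    },
  };
};

// Minimal stand-in for event.Records[0].cf.request
const exampleRequest = { uri: '/index.html', querystring: 'utm=x', headers: {} };
console.log(buildCookieRedirect(exampleRequest, 'abtesting-unique-id', '1234'));
```

Note that a client that rejects cookies would be redirected forever with this approach, so a real implementation would need a guard against loops, for example a marker in the query string.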

The code can also be found on GitHub.

Lambda Permissions

As with any edge Lambda, you can use the CloudFront blueprint when creating the Lambda. Otherwise, you will need to create a custom role and attach the “Basic Lambda@Edge Permissions” policy template.

For the viewer request Lambda, you will also need to allow access to the S3 bucket that contains the traffic allocation file.

Deploying the Lambdas

Setting up edge Lambdas is somewhat different from the standard Lambda workflow. On the Lambda’s configuration page, click “Add trigger” and select CloudFront. This will open up a small dialog that allows you to associate this Lambda with a CloudFront distribution.

Select the appropriate event for each of the three Lambdas and press “Deploy.” This will start the process of deploying the function code to CloudFront’s edge servers.

Note: If you need to modify an edge Lambda and redeploy it, you need to manually publish a new version first.

CloudFront Settings

In order for a CloudFront distribution to be able to route traffic to an origin, you will need to set up each one separately in the origins panel.

The only configuration setting you’ll need to change is to whitelist the X-ABTesting-Segment-Origin header. On the CloudFront console, choose your distribution and then press edit to change the distribution’s settings.

On the Edit Behavior page, select Whitelist from the dropdown menu on the Cache Based on Selected Request Headers option and add a custom X-ABTesting-Segment-Origin header to the list.

If you deployed the edge Lambdas as described in the previous section, they should already be associated with your distribution and listed in the last section of the Edit Behavior page.

A Good Solution with Minor Caveats

Server-side A/B testing can be challenging to implement properly for high-traffic websites that are deployed behind CDN services like CloudFront. In this article, we demonstrated how Lambda@Edge can be employed as a novel solution to this problem by hiding the implementation details in the CDN itself, while also offering a clean and reliable way to run A/B experiments.

However, Lambda@Edge has a few drawbacks. Most importantly, these additional Lambda invocations between CloudFront events can add up in terms of both latency and cost, so their impact on a CloudFront distribution should be carefully measured first.

Moreover, Lambda@Edge is a relatively recent and still evolving feature of AWS, so naturally, it still feels a bit rough around the edges. More conservative users might still want to wait some time before placing it at such a critical point of their infrastructure.

That being said, the unique solutions that it offers make it an indispensable feature of CDNs, so it’s not unreasonable to expect it to become much more widely adopted in the future.

Understanding the basics

What is Lambda@Edge?

Lambda@Edge is a feature of CloudFront that allows you to run custom code in response to CloudFront events in the form of AWS Lambda functions, which are executed close to CloudFront’s edge servers. These functions can be used to modify CloudFront’s behavior at any point of the request-response cycle.

How does Lambda@Edge work?

Lambda@Edge uses standard AWS Lambda functions that are attached to CloudFront event triggers, which correspond to the four possible stages that CloudFront can enter when responding to an HTTP request. During each triggered execution, the Lambda can read and modify the request or response object before passing it to the next stage.

When should I use AWS Lambda@Edge?

AWS Lambda@Edge can be used to make decisions on how requests should be handled before they reach a CloudFront origin. A popular use case is origin selection for A/B testing, but it can also be used in a similar manner to provide custom content that is optimized for a particular device type or user region. Other use cases include user authentication through CloudFront (e.g., verifying user credentials when serving static assets) and some forms of fine-grained cache optimization.

Is something similar available outside AWS?

At the time of writing, there’s nothing similar to Lambda@Edge on either Microsoft Azure or Google Cloud Platform, although this might soon change, as both cloud services might start offering something similar in the future. A current competing alternative is Cloudflare’s Workers feature, which in principle should make it possible to implement a similar solution for A/B testing.

Would this increase CloudFront costs?

As CloudFront is charged based on volume, any form of A/B testing that is properly implemented shouldn’t have a significant impact on CDN traffic. Lambda@Edge invocations are, however, charged separately in the same manner as AWS Lambda functions, i.e., based on the number of invocations and the total duration of each Lambda’s execution.
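The arithmetic behind that charge can be sketched as follows. The function estimateEdgeCost is a hypothetical helper, and every number passed to it below is a placeholder rather than real pricing or traffic data. Look up the current Lambda@Edge prices before relying on the result. The sketch also ignores any rounding that AWS applies to billed duration.

```javascript
// Hypothetical estimator. The prices are inputs, not facts: pass in the
// current Lambda@Edge pricing for your account.
const estimateEdgeCost = ({
  invocations,
  avgDurationMs,
  memoryMb,
  pricePerMillionRequests,
  pricePerGbSecond,
}) => {
  const requestCost = (invocations / 1e6) * pricePerMillionRequests;
  const gbSeconds = invocations * (avgDurationMs / 1000) * (memoryMb / 1024);
  const durationCost = gbSeconds * pricePerGbSecond;
  return { requestCost, durationCost, total: requestCost + durationCost };
};

// Placeholder numbers, for illustration only
const estimate = estimateEdgeCost({
  invocations: 10e6,
  avgDurationMs: 5,
  memoryMb: 128,
  pricePerMillionRequests: 0.6,
  pricePerGbSecond: 0.00005,
});

console.log(estimate);
```

When applying this to the setup in this article, keep in mind that the viewer request and viewer response Lambdas run on every request, while the origin request Lambda only runs on cache misses, so each function should be estimated with its own invocation count.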

Georgios Boutsioukis

Athens, Central Athens, Greece

Member since January 17, 2018
