Within the vast and complex world of contemporary IT, quality assurance engineering occupies a unique place. Regardless of the specific technology being developed or the size and scope of the project at hand, QA engineering is nearly always a vital element of the endeavor.

This is true throughout the development lifecycle. QA helps an organization ensure the successful and efficient design, completion, and ongoing maintenance of its tech products at each stage of the process. For companies looking to strengthen this capability, knowing how to hire QA developers who can meet both technical and collaborative requirements is essential.

So how does a firm go about assessing high-quality candidates? Interviewers must keep in mind that candidates need to act as both an intuitive technical detective and a skilled engineer. They must also take into account that, because quality assurance tasks have such broad real-world applications, many key terms and related concepts in the industry are constantly debated among engineers themselves.

While assessing a potential candidate, remember that their purpose will be to seek out and discover any and all negative issues with complicated technical products through intricate deductive investigations. They will relentlessly attempt to break and to critically review the systems that other engineers spend long hours designing and developing to be as error-free as possible.

Meanwhile, the candidate must communicate proactively with cross-functional departments and personnel from start to finish. They will need to turn otherwise negative criticisms into helpful and positive feedback. They will also need to plan and organize the processes themselves, as well as the specific test plans and test results. In addition, they must produce clear and organized documentation relating to each of these elements.

The overall goal of the IT organization, after all, is to ensure a positive and smooth experience for the end users of its products. Engineers, therefore, must advocate for the user experience with insight, intuition, and accuracy. They have to do this in a collaborative manner with all of the connected teams involved in QA and relevant development processes, whether they are working on a full-time, part-time, on-staff, or freelance basis.

The Essentials of the QA Engineer’s Role

Before diving into skills and evaluation, it’s useful to understand the meaning of quality assurance in modern software teams. A QA engineer is responsible for identifying and addressing defects, improving product quality, and supporting seamless user experiences. So, what do QA developers do on a day-to-day basis? They build and execute test plans, coordinate across teams, and act as advocates for the end user throughout the development process.

This guide will focus on three complementary areas of strengths and skills that a successful candidate should have:

- An intuitive and confident ability to perform intensive technical detective work.

- Technical expertise, professional experience, and career goals.

- Superior verbal communication, documentation, and reporting skills.

Before we begin, it is helpful to note a couple of central points regarding the interview process.

Interrelationship of Skills

The three areas of assessment outlined above are closely interrelated, and they overlap in various ways. This is beneficial during the interview: While focusing on one category of skills, we are able to learn more about the other two at the same time.

Being Put on the Spot

During the interview, if the candidate encounters a topic they do not fully understand, or must ask for additional information in order to proceed, great!

This will present a distinct opportunity for the interviewer and the candidate alike. It is the ideal moment for the candidate to demonstrate their confidence and communication approach in the face of a potentially negative, pressuring, and embarrassing situation.

Are they unafraid to candidly recognize a lack of knowledge or skill, and to boldly and proactively engage in relevant questions so they can address the matter? Does the manner in which they formulate questions and use their answers indicate an ability to intuitively deduce on the fly, communicate fearlessly, and logically and intelligently incorporate freshly acquired data in a real-time process?

For example, if a candidate admits they have never heard of a particular term they were just quizzed about, talk it through, because this may be a good sign. It could well be that they are quite familiar with the concept, but simply hadn’t yet seen it under that name.

The Interview as Its Own QA Exercise

By the end of the interview, you should have a well-rounded, detailed perspective of the candidate’s technical skill sets, relevant personality traits, and professional experience. Remember that the interview itself may be considered to be an exercise in quality assurance: It’s an opportunity to test the tester and explore the extent of the candidate’s abilities.

So, let’s get started.

Assessment Category #1: Technical Sherlock Holmes

The first area candidates should show strength in is an intuitive and confident ability to perform thorough, technical detective work. The candidate will need to infer, discover, categorize, and interconnect intricate details, behavioral characteristics, and performance metrics of complex systems.

This area represents more of a set of personality traits, rather than specific technical skills. Potential engineers need to show you they have a solid capacity for performing granular and self-assured technical investigations. These traits include excellent attention to detail, tenacious commitment and follow-through, and keen logical and intuitive deductive reasoning as well as confidence and proficiency in communicating and asking relevant questions.

Many within the QA discipline consider this area to be the most important when assessing a candidate’s abilities, although certainly to be complemented with competent technical knowledge and experience. An eager and persistent investigator will likely excel irrespective of which particular platform or technology a given organization is using with its IT products, or which specific tools the QA team uses.

But what’s the best approach to evaluating a candidate’s investigative strengths? We recommend you jump right into the interview process by putting the candidate on the spot with a couple of practical hands-on exercises, followed by a few simple question-and-answer rounds addressing related qualities and skill sets.

First Written Exercise: Basic Parts of a Test Plan

Begin by asking your test engineer candidate to jot down on paper the basic parts of a test plan, along with a quick summary of each part. If they’ve successfully completed testing cycles before, they should be familiar with producing, or at least referencing, test plan documentation, which is a central part of the process. Below is a list of standard parts of a test plan, according to ISO/IEC/IEEE 29119-3:2013. (This standard supersedes the IEEE 829 Standard Test Plan Template, but the latter remains more convenient to read.) Note that there are different variations of test plan documentation for different levels:

- Master test plans cover multiple levels

- Phase test plans cover single phases and may include:

  - Testing-level-specific test plans (e.g., integration)

  - Testing-type-specific test plans (e.g., performance testing)

The IEEE 829 template included here generally applies to master and phase test plans (which are sometimes combined into one test plan document).

- Test Plan Identifier: A unique way of filing and referring to each test plan document.

- References: List of documents that support the test plan.

- Introduction: Brief introduction about the project and test plan document (references and glossary may sometimes be included in this section).

- Test Items: List of software items that are to be tested.

- Software Risk Issues: Critical software areas to test and inherent risks, such as complexity.

- Features to Be Tested: List of features to be tested.

- Features Not to Be Tested: Features not to be tested and reasons for not including them.

- Approach or Approach/Techniques: Overall approach and relevant techniques for testing.

- Item Pass/Fail Criteria: Completion criteria for elements to be tested. (This is sometimes called simply Pass/Fail or Features Pass/Fail, in which case it may also include criteria for plan-level elements.)

- Suspension Criteria and Resumption Requirements: Conditions under which testing will be suspended and subsequent conditions under which testing will be resumed.

- Test Deliverables: Documents and tools to be created as a part of the testing process.

- Test Tasks: Tasks for planning and executing the testing.

- Environmental Needs: Environmental requirements such as hardware, software, OS, network configuration, tools, etc.

- Staffing and Training Needs: Staffing, skills, and training requirements.

- Responsibilities: Relevant roles and responsibilities of team members.

- Schedule: Important project delivery dates and key milestones.

- Risks and Contingencies [Mitigation]: High-level project risks and assumptions and a contingency/mitigation plan for each risk.

- Approvals: Each approver of the document, related sections, and sign-off dates.

- Glossary: Definitions of specific and/or unusual terms and acronyms used in the document.

Note: A candidate doesn’t need to list all possible parts of the test plan template, but they should be familiar with several of the more common items, such as the test items, features to be tested, approach, pass/fail criteria, and schedule. There are many variations to this general outline used in actual projects, and some organizations use proprietary test plans, so it is important to remain open to variant responses.

Test plans outline the key guidelines that will be applied throughout the testing procedures for a given software project or perhaps a more specific module or component within a software project.

Sometimes, a candidate might not have had direct experience with writing test plans, since that responsibility is often left to other members of QA teams, such as test leads or test managers. In that case, ask whether they are at least familiar enough with referencing and using test plans to write down a few of the central parts. If they can’t, they should be able to explain why; ask them instead, in an open-ended fashion, to write down a summary of the basic phases of the software testing lifecycle (STLC).

Asking the candidate to write down the stages of the STLC with which they are familiar might uncover additional terms and processes relevant to QA. These points of discussion can then provide further insight into their level of success and type of experience in the industry. The central steps in the STLC include:

- Requirements/Design Review: Testable software requirements and design elements are organized and reviewed.

- Test Planning: Testing strategies are planned and defined.

- Test Designing/Test Case Development: Test cases, test data, and automation scripts are prepared.

- Environment Setup: Test environment is set up to replicate the end-user environment.

- Test Execution: Test cases/scripts are run and results are logged and tracked, etc.

- Test Reporting and Test Cycle Closure: Reports are prepared/reviewed and the test cycle is closed.

In any scenario, follow up this portion of the exercise with a verbal review of the candidate’s written responses. As we mentioned, doing this in the early stages provides the interviewer with a chance to see how the candidate communicates and responds while under a sudden spotlight. This quiz helps to discover their knowledge and experience while simultaneously testing their behavioral response and related personality traits.

It’s certainly okay to ask for both STLC and test plan outlines up front; however, it isn’t particularly necessary because if a candidate knows the test plan elements well either in part or in whole, they will most likely also be familiar with STLC phase summaries. (Note: The STLC is addressed in more detail in later interview segments.)

Note: One of the hallmark behavioral traits of a proficient engineer is that they will tend to spontaneously ask relevant questions throughout the interview process. For example, when beginning to write the basic parts of a test plan, an experienced candidate might inquire if a master plan, a testing-level plan, or perhaps a testing-type plan is to be specifically considered for the exercise. This could be a good indicator of a skilled engineer, and the answer to such a question might be just to consider a general phase-level test plan template, or perhaps to choose any specific type of test plan the candidate prefers.

Second Written Exercise: Test Cases for a Simple GUI

Next, move right into another written exercise by giving the candidate a basic diagram of a graphical user interface (GUI). Then ask them to write down as many test cases as possible relating to that GUI in a fixed amount of time, perhaps 10 to 15 minutes.

This is a very common method of quizzing applicants. It’s highly effective for observing real-world technical investigative skills, past experience, and comfort with the core QA task of intuitive and deductive testing-related analysis. It also serves yet again as a way to observe relevant behavioral and personality traits.

It’s important to use a GUI design that’s simple and clear. You need very few (but clearly labeled) form fields, command buttons, check boxes, and links. Perhaps two to five fields, and four to eight related elements would do it. That will provide sufficient logical material to generate dozens of possible test cases.

Many interviewers use a standard online login form for this type of exercise.

Such a login screen yields plenty of test cases. But a quick search will reveal a variety of sites listing several dozen test cases for common login forms. Many will even be specifically about QA engineer interviews.

That’s why we recommend coming up with a unique design of a simple GUI for this activity, considering that many applicants might have prepared in advance for a login screen test case quiz.

This applies to the sample GUI we’re about to cover below, too. So if it’s possible to use a simple proprietary user interface that is currently in use within your organization—or perhaps undergoing design review or testing—even better. Then it will be not only unique but also highly relevant.

It may also be viable to use a deliberately reduced version of a particular GUI, or a specific subsection of an interface that can stand on its own and be clearly understood when being reviewed for test cases. For example, the following fictional diagram demonstrates a simple proprietary interface to be used for this activity. This is a straightforward GUI representing the header section of an invoice initiation and generation screen.

Note: Whatever GUI diagram is used, keep it standalone, simple, and clearly understandable. Too many fields or elements, or potentially unclear or confusing aspects of a given interface design, might complicate the exercise and require lengthy explanations before beginning the activity, or multiply the potential number of test cases beyond a reasonable extent. Remember what we mentioned earlier: Only two fields and a handful of buttons, links, and checkboxes on a very simple login window will already provide several dozen test cases.

Before the candidate begins writing out test cases, allow for a short review of the purpose of the interface and the high-level context of how the GUI is used. Either verbally or in writing, outline that this interface is used in order to:

- Create a new invoice ID number by entering the customer name, issue and due dates, and subject/description, then clicking on the “Generate Invoice” button.

- Auto-fill the customer name field by linking the new invoice to a preexisting invoice via the “Link to Existing Invoice” button.

- Enable an “Invoice Items/Details” section at the bottom of the screen (not pictured), which appears automatically once the header information is filled in and the new invoice ID is generated, so that invoice items can be entered.

Give just enough information to cover the overall purpose of the interface, but do not go into specific details of particular rules, requirements, or testing pre-conditions related to individual elements within the GUI. (Also clarify that they shouldn’t create test cases for the “Invoice Items/Details” section at the bottom.)

Then, just before commencing with the written portion of the activity, encourage the candidate to take the lead in asking for any clarifications about the design elements of the GUI that might be relevant when formulating test case scenarios. For example, they might ask about precise software rules and requirements, including intended behaviors of specific functionalities, or process flows relating to test case pre-conditions.

This is an important part of the exercise. Be sure to allow the candidate sufficient time for this before beginning the timed written portion of the activity. This will let you observe how well the candidate can interpret the design of an interface and effectively investigate relevant details before applying them to test cases.

The invoice initiation GUI might prompt questions like these:

- What is the intended tab order of the fields, command buttons, and hyperlink? Should some or all of the command buttons and hyperlink receive the focus while tabbing through the interface?

- Should the customer drop-down box allow or prohibit new customer entries directly into that field? What is the intended behavior if that is allowed vs. prohibited? Should a pop-up warning message appear if disallowed, or a pop-up data entry window appear if allowed?

- What should occur when clicking the “Search Customer” button? Should a separate search window open up, or perhaps an additional section within the same screen expand, or should the customer field’s list simply drop down to scroll through customer names?

- Are there validation rules for the issue date and due date values? E.g., does the due date have to fall after the issue date, or are there any other date validations? If so, what is the intended behavior if such rules are broken?

- Does the subject/description field have a character limit, and if so, how many characters are allowed? Is there an intended behavior if the character limit is exceeded?

- Is the subject/description field Unicode-compatible?

- What should happen when the user clicks on the “Link to Existing Invoice” button? Should a separate invoice search window appear, or should a drop-down selection list expand?

- Which data fields are required in order to create a new invoice ID number? What is the intended behavior if one or more required fields are missing when clicking on the “Generate Invoice” button?

- What is the intended behavior when clicking on the “Close and Save” and “Cancel and Exit” command buttons, and what are the related pre-conditions for those test scenarios? E.g., what should clicking “Close and Save” do if the invoice ID hasn’t been generated yet, and what should “Cancel and Exit” do when partial or full data has already been entered?

- What should occur when clicking on the “Invoicing Rules & Guidelines” button? Should a pop-up help window appear, or perhaps a reference page should open in the user’s external browser?
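
To illustrate how answers to questions like these feed directly into test cases, here is a minimal Python sketch. It assumes the interviewer answered that the customer is required, that the due date may not precede the issue date, and that the subject/description is capped at 100 characters. These rules, the `InvoiceHeader` type, and the `validate_header` function are all hypothetical and exist only for illustration; a real interface would have its own rules.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date

# Assumed answer to the character-limit question; not taken from any real spec.
MAX_SUBJECT_LENGTH = 100


@dataclass
class InvoiceHeader:
    """Hypothetical model of the invoice header fields."""
    customer: str
    issue_date: date
    due_date: date
    subject: str


def validate_header(header: InvoiceHeader) -> list[str]:
    """Return the list of rule violations; an empty list means the header is valid."""
    errors = []
    if not header.customer.strip():
        errors.append("customer is required")
    if header.due_date < header.issue_date:
        errors.append("due date precedes issue date")
    if len(header.subject) > MAX_SUBJECT_LENGTH:
        errors.append("subject exceeds character limit")
    return errors


# One positive case, one negative case, and two boundary cases.
valid = InvoiceHeader("Acme Ltd", date(2024, 1, 10), date(2024, 2, 10), "Consulting")
late = InvoiceHeader("Acme Ltd", date(2024, 2, 10), date(2024, 1, 10), "Consulting")
at_limit = InvoiceHeader("Acme Ltd", date(2024, 1, 10), date(2024, 1, 10),
                         "x" * MAX_SUBJECT_LENGTH)
over_limit = InvoiceHeader("Acme Ltd", date(2024, 1, 10), date(2024, 1, 10),
                           "x" * (MAX_SUBJECT_LENGTH + 1))

for name, header in [("valid", valid), ("late", late),
                     ("at_limit", at_limit), ("over_limit", over_limit)]:
    print(name, validate_header(header))
```

Each clarified rule suggests at least one positive case and one negative case, plus a boundary case wherever a limit applies. A candidate who asks about the rules up front can produce this kind of coverage quickly.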

Note: This part of the activity is another reason why it is a good idea to use a unique, perhaps proprietary, user interface as opposed to a typical login page. An essentially universal login page design, with which we are all very familiar, leaves little opportunity for inquiry regarding rules, intended behaviors, and test conditions. A unique and original, albeit simple, user interface design will instead give the candidate plenty of room to analyze the GUI for a variety of relevant rules, workflows, conditions, etc.

This is another opportunity to observe the applicant’s communication styles and relevant skills. For example: Are they intuitive and deductive while analyzing the GUI and any potential test-case-related issues? Are they clear and efficient in the manner in which they communicate? Do they meticulously write down the answers to their questions, or do they commit various points to memory? If using their memory, are they successful in recalling those points when generating the corresponding test cases?

And again, pay attention to whether candidates tend to ask spontaneous questions. For example, during this exercise, a high-quality candidate might ask if they should use a specific format—assuming the interviewer doesn’t provide one already.

They might ask if they should use a table, and if so, whether to include or exclude columns for test case ID number, positive vs. negative case and test type classification, preconditions, input test data, or test case steps.
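
As a rough sketch of what such a table might look like in practice, the Python snippet below models one test case as a row with the columns mentioned above. The `CaseRow` class, its field names, and the `to_table` helper are assumptions made for illustration, not a standard format.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class CaseRow:
    """One row of a test case table (hypothetical column set)."""
    case_id: str
    title: str
    polarity: str                   # "positive" or "negative"
    test_type: str = "functional"   # e.g. functional, usability
    preconditions: list[str] = field(default_factory=list)
    input_data: dict[str, str] = field(default_factory=dict)
    steps: list[str] = field(default_factory=list)


def to_table(cases: list[CaseRow], columns: list[str]) -> str:
    """Render only the requested columns as a plain-text table."""
    header = " | ".join(columns)
    rows = [" | ".join(str(getattr(case, col)) for col in columns) for case in cases]
    return "\n".join([header, *rows])


cases = [
    CaseRow("TC-01", "Generate invoice with all fields filled", "positive",
            steps=["Fill in every header field", "Click Generate Invoice"]),
    CaseRow("TC-02", "Generate invoice with no customer selected", "negative",
            preconditions=["Customer field is empty"],
            steps=["Click Generate Invoice"]),
]

# A short column list keeps the timed exercise quick; more columns add detail.
print(to_table(cases, ["case_id", "polarity", "test_type", "title"]))
```

Choosing the columns at render time mirrors the trade-off in the exercise itself: the same cases can be shown as a bare list of titles or as a fuller table, depending on how much time the candidate is given.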

If the interviewer wants to specify the format, here are three approaches they might have the candidate use:

The list format of the first approach will give a clear enough picture of the candidate’s ability to logically infer and deduce test cases.

The second approach wouldn’t take too much extra time, and would help to shed more light on the applicant’s comfort and expertise with test case formulation and documentation.

But if the table is to have more than four columns—perhaps those your organization actually uses for test case creation—be sure to allow the candidate extra time. Also beware that specifying this up front might be overkill, detracting from the main point of the exercise. Only make this a requirement for the most eager experts.

After the applicant’s questions and their timed test-case writing, follow up with a verbal review of the results.

Be sure to have prepared a thorough internal version of a complete list of test cases for the interface, and use that master copy as a reference while looking over the candidate’s version of the test cases list. Engage the applicant in an open-ended discussion of the cases they have written down, as well as any relevant observations regarding the documentation style and layout they may have used to organize the list.

Putting the applicant on the spot right at the start of the interview process with these hands-on, interactive written and verbal exercises will yield a great deal of insight. Both of these initial written activities also begin to shed light on how the candidate tends to organize testing-related documentation. You’ll also learn a lot about the candidate’s personality, experience, confidence, and communication style, and how well they conduct intuitive, deductive technical investigations.

Question and Answer Round: Thorough and Intuitive Technical Investigation

It’s a good idea to follow up the preceding two exercises with some traditional interview question-and-answer rounds, choosing topics that relate to their personality traits and skills at performing intricate technical investigations.

Get the candidate’s perspective on their own experience, proficiency, and success levels concerning follow-through, attention to detail, and deductive reasoning.

The following are some select interview questions, along with potential answers, that serve well to kick off question-and-answer segments and help to give a better idea of the applicant’s technical investigative prowess.

Note: Experienced candidates will have plenty of ideas to illustrate and tales to tell about technical subjects and on-the-job scenarios—always ask for examples!

Q: Why do you like working in software quality assurance? Can you elaborate on one or two specific on-the-job examples that highlight what you most enjoy about this field?

Beginning with this question allows the interviewer to get an idea of the applicant’s perspective on the QA environment. Considering the core activity in quality assurance is deductive technical investigation and critiquing via test results, this is a good opportunity to check for red flags with the candidate’s general attitude.

For example, if they say they enjoy how it feels to be able to turn software problems into positives via the software processes—and provided they can cite specific, meaningful examples of such scenarios—that would indicate a high-quality and team-oriented candidate.

But if they say that they enjoy the opportunity to criticize other people’s work and point out the bugs in what other engineers have designed and developed, perhaps with a hint of condescension, this is definitely a counterproductive disposition. Even if they are good at finding bugs, it would be better to hire someone who can not only find them efficiently but also report them in a professional way. Otherwise, you’ll be spending extra time dealing with drained morale, if not sorting out outright conflicts.

Q: Is there anything you find difficult, or perhaps even unpleasant, about working in quality assurance? Can you describe any specific examples of on-the-job situations in the past that were very challenging, and how you dealt with those?

Follow up the first question by flipping the script. Here you let the applicant address any negative feelings and perspectives on QA in an open-ended fashion. Find out if there is anything they deem difficult or even displeasing about the industry. This is also a way to test the applicant’s ability to communicate fearlessly, recognize and address potentially negative topics, and maintain a positive outlook and vantage point toward potentially unpleasant subjects.

Maybe they say that sometimes architects and developers have taken thorough defect lists personally. If they follow by saying that reaching out to such collaborating engineers with specifically positive notes and feedback regarding the same QA analyses helps greatly to alleviate negative vibes, great! That suggests the applicant is good at both investigating software and working as part of a team.

But maybe they explain that constantly having to review and re-review specification documents gives them unbearable headaches and interferes with their personal lives. If that’s the end of that thread, that would be cause for concern.

Or maybe they say they can’t stand explaining the logic behind test methods and results to other engineers, or hate figuring out when to stop testing overly buggy modules, or wish they could do away with all the paperwork. Such answers—without appropriate solutions—are also red flags.

Q: How do you familiarize yourself with a new software product and learn everything you need to know in order to test it thoroughly? What is your approach if documentation is unavailable, limited, or outdated and inaccurate?

Good answers to look for would include:

- Use it! Good software testers are good software users. They’ll want to jump right in as an everyday user, making notes of relevant observations and potential questions as they go.

- In-house and external consultation. Quality assurance engineers often go over the software with other team members, such as architects, developers, and other testers. In addition, experienced candidates might mention the idea of speaking with sales and marketing team members for a product. These colleagues often have a distinct perspective on the system, which is a helpful addition once an engineer has finished their initial exploration.

  - Sales is often focused on specific features and characteristics related to performance/capacity, real-world scalability, specific usability points, future enhancement plans, and useful product comparisons with other similar systems on the market. Furthermore, the features they’re pitching make sense to prioritize when testing and can allow for earlier demo availability.

  - If the product is already being used by clientele/end-users, advanced engineers will want to investigate input and feedback from those avenues as well, including potential public or market-level reviews of the system, if applicable.

- Version and code review. Skilled engineers will want to look over previous versions of the application, if available, as well as investigate pertinent source code managing relevant application logic. They may also mention specifically that it’s important to review SQL/data-tier/back-end/mid-tier layers or other potentially less visible parts of a system’s architecture.

- Documentation. Engineers will want to review documentation relating to the design, rules and requirements, coding, prior testing cycles, and both historical and current roadmaps. But what if they can’t?

  - Review any and all existing document resources. No matter how scarce, outdated, or inaccurate previous documentation may be, usually at least something exists. Perhaps there are a few handmade pre-development sketches of rough interface layouts or wireframes. Or perhaps there’s a small group of quick-and-dirty lists of high-level requirements or use cases. These may still offer valuable insights regarding actual, or at least intended, current and past states and characteristics of a system.

  - Learn the system and infer corresponding reference materials. Even when documents are not present, software still embodies the logical design specifications, rules, and requirements that guided its creation. Using the methods listed above (end-user mock usage of the product, version and code review, and pertinent consultations), an engineer can become as familiar with the system as possible and, in the process, infer as many rules and requirements as they can, while limiting the margin for error by mitigating potentially unclear or complex assumptions.

  - Create it now. An eager and expert candidate will simply push to fill in the gap by writing (or collaborating on) accurate documentation while performing the activities outlined in the previous two steps!

Q: Do you consider yourself detail-oriented, or perhaps even somewhat of a perfectionist? If so, what kind of impact does that have on your work in QA? Can you cite examples from past projects of both positive and negative effects of these or similar character traits you feel you may have?

Some believe that engineers in quality assurance generally have perfectionistic tendencies, and perhaps even that they should, at least on some level. However, this comes with an understanding that there is a point at which testing needs to stop, and too much obsessing about details and defects can turn into a negative aspect.

Hit this topic head-on and ask directly about the potentially adverse dimensions of the issue. Whether they respond that they are a perfectionist, that they aren’t, or that they fall somewhere in between, they will in any case go on to elaborate on whether they consider themselves tenacious and detail-oriented. Let this conversation naturally lead into describing how, when, where, and why the applicant exhibits dedication, follow-through, and a pursuit of detailed success in the investigative environment.

Do they relate these traits directly with topics of performing intricate deductive processes? What kinds of examples do they use to illustrate real-world situations that reflect these qualities?

It is important that they address any negative angles in their history on this subject. However, if their response turns into complaining that they have a hard time sleeping at night due to obsessing over one or two test cases they may have missed among many dozens of already thoroughly defined scenarios, that’s cause for concern.

If, on the other hand, they explain that amid the constant pursuit, and resulting onslaught, of detailed specifications, rules, test cases, test results, subsequent analyses, documentation, and so on, they are able to strike a healthy balance by keeping sight of the overall project-level picture and rest confident in knowing when the job is sufficiently done, that would suggest the applicant is committed, and maybe even a perfectionist of sorts, but also knows how best to manage it.

A great on-the-job example relating to this area would be if an applicant points out the fact that the QA processes and documentation are deliberately designed to help with just such pitfalls: This is specifically why test plans and strategies [should] contain complete and detailed information on test items, features to include/exclude, approaches, pass/fail criteria, suspension criteria, test tasks, etc. It’s also why every step should be planned in advance and effectively monitored along the way. This structure should make for a stress-free, pleasant, and rewarding environment in quality assurance. This is because even for perfectionists, it places a precise limit on the amount of detail needed.

If a candidate openly admits they have a problem with obsessive-compulsive traits caused by a truly perfectionistic personality profile, but they’ve learned how to leverage this rather than having it overwhelm them—and they have the track record on their CV to back up that claim—they would likely be an ideal software tester.

Q: What is error guessing? Can you give a few examples of the types of test cases and defects this might address?

Error guessing refers to a methodology that infers otherwise obscure test cases based on testing results found with similar applications from prior projects. This technique is used by more seasoned engineers, combining their experience and intuition.

Former developers are often adept at error guessing in certain areas of an application, because these kinds of bugs tend to stem from behind-the-scenes aspects of the code, the SQL/data layer, and the system architecture and design:

- Data errors resulting from entering data fields and/or clicking on data-related command buttons in the wrong order in a system that processes data records—e.g. clicking an “Update Record” button when no new data for the record, or partially insufficient data, has been registered.

- Errors related to leading spaces, trailing spaces, or entirely blank spaces in a system that edits/stores text.

- “Null attachment” or “attachment limit exceeded” errors in a system that processes attachments.

- Divide-by-zero and numeric overflow errors in a system that performs calculations.

- Resource allocation and deallocation errors in a system that allocates resources.

- Invalid/missing SQL parameter errors in a system that uses parameterized SQL APIs.
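Guesses like the ones listed above translate naturally into a small table of suspicious inputs. The following is only a minimal sketch: `clean_name` and `average_order_value` are hypothetical functions standing in for the system under test, and the rule that zero orders yields an average of zero is an assumption made for illustration.

```python
from typing import Optional


def clean_name(raw: Optional[str]) -> str:
    """Hypothetical function under test: normalizes a text field."""
    if raw is None:
        raise ValueError("name is required")
    name = raw.strip()
    if not name:
        raise ValueError("name must not be blank")
    return name


def average_order_value(total: float, order_count: int) -> float:
    """Hypothetical calculation that could divide by zero."""
    if order_count == 0:
        return 0.0  # assumed business rule: no orders means an average of zero
    return total / order_count


# Error-guessed inputs: each pairs a suspicious value with the expected outcome.
NAME_GUESSES = [
    ("  Ada", "Ada"),     # leading spaces
    ("Ada  ", "Ada"),     # trailing spaces
    ("   ", ValueError),  # entirely blank
    ("", ValueError),     # empty string
    (None, ValueError),   # missing value
]


def run_name_guesses() -> list:
    """Return (input, passed) pairs for every guessed case."""
    results = []
    for raw, expected in NAME_GUESSES:
        try:
            passed = clean_name(raw) == expected
        except ValueError:
            passed = expected is ValueError
        results.append((raw, passed))
    return results
```

A candidate who works this way will usually keep such a list from project to project and extend it whenever a new class of defect turns up.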

Q: Describe how you go about finding and defining potential edge cases and corner cases. When is it a good idea to stop? When is it a good idea to ignore cases that have already been identified vs. finding a workaround or implementing full resolutions? Can you give any examples from past projects, or hypothetical illustrations?

Edge cases and corner cases are basic concepts in QA relating to intensive deductive and intuitive logic, and every engineer should be familiar with them. They arise when a system is used, or operates, at or beyond its normal operating boundaries or conditions. The conditions may be technical in nature, such as when system specifications are exceeded, or they may be logical, such as when real-world operations fall outside of typical or intended process flows. Often, the resulting errors occur only rarely or intermittently.

A technical example might be when a row size limit is exceeded upon insertion of a new record in a SQL table, and defining what the system should do when that happens.

For an example of a logical edge case, consider a production line system where once in a while, output levels are increased. In response, the system flags a need for additional parts orders, the orders are then placed, but the output level flag is then thought to be in error, so cancellation requests are registered on those orders, and then the flag is again thought to be correct; meanwhile, the status of the orders is subsequently pending/unknown. Again, it’s important to define how the system should manage such a scenario.

Edge cases generally involve a single extreme condition like in the two examples above. Corner cases are when multiple edge cases happen at the same time. Suppose the above example of the SQL row insertion limit were reached together with simultaneous SQL table indexing and locking edge case issues. Or suppose the parts orders edge case coincided with an edge case involving incorrect parts inventory levels. Do these scenarios leave the system in a broken state?
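The distinction can be made concrete with a small case generator. This is only a sketch: the parameter names and limits used in the usage note (`row_bytes`, `concurrent_writers`, 8000, 32) are hypothetical and not taken from any particular database. Edge cases push one parameter to a boundary while the others stay nominal; corner cases push every parameter to an extreme at once.

```python
from itertools import product


def boundary_values(low: int, high: int) -> list:
    """Values at and just beyond each end of an inclusive valid range."""
    return [low - 1, low, low + 1, high - 1, high, high + 1]


def edge_cases(ranges: dict, nominal: dict) -> list:
    """Vary one parameter at a time to a boundary value; keep the rest nominal."""
    cases = []
    for name, (low, high) in ranges.items():
        for value in boundary_values(low, high):
            case = dict(nominal)
            case[name] = value
            cases.append(case)
    return cases


def corner_cases(ranges: dict) -> list:
    """Put every parameter at one of its extremes at the same time."""
    names = list(ranges)
    extremes = [ranges[name] for name in names]
    return [dict(zip(names, combo)) for combo in product(*extremes)]
```

For example, with `ranges = {"row_bytes": (1, 8000), "concurrent_writers": (0, 32)}` and a nominal case in the middle of both ranges, `edge_cases` yields twelve single-boundary cases and `corner_cases` yields the four combinations of extremes. The number of corner cases doubles with each added parameter, which is one reason the question of when to stop matters.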

Some such cases can be expected based on well-understood and commonly considered technical and logical limits and boundaries of a system and procedural workflows. But there are always unexpected cases which are not readily apparent from software designs, requirements, and process flows alone.

However, be on the lookout for responses to this question where the candidate leans too heavily on this being about “expecting the unexpected.” While that sentiment is important, advanced applicants know that an effective approach is a bit more concrete than that—i.e., it’s important to:

- Use the software specifications, rules, and design elements (such as GUI layouts, process flows, etc.).

- Investigate system limitations and technical characteristics.

- Pay attention to other more obvious and easily defined test and use cases.

- Apply a lot of purely imaginative thought.

- Ask lots and lots (and lots) of practical as well as hypothetical questions about all the different possible ways the system can be used and also affected by external factors.

It’s essential to contemplate situations and events that other engineers might rarely (if ever) consider, including corner case scenarios as we defined above.

This is where testers try hard to break the application. It’s also where a top-notch candidate knows how far to go, and that edge/corner cases may not be fully resolved but instead worked around or even left alone. It’s all about the severity of the risk involved.

For example, when working on financial/banking and security sensitive systems, it is obviously imperative to rule out all potential edge and corner cases that may affect monetary transactions and security issues and to resolve every possible technical and logical use and test case, no matter how rare or improbable the scenario may be. However, when testing a system that stores consumer survey data, it may be OK to tie off a rare and unlikely data entry corner case by simply adding a quick error message like, “Something went wrong, please enter that name and address again.”

Top-notch applicants will try to pay attention to hidden assumptions and obscure system characteristics that others might take for granted or not notice, or not even be aware of in the first place. They may also try to imagine they are end users who may:

- Know little or nothing about technology or software engineering

- Be unaware of various rules and intended usages of the system

- Misunderstand the system in various ways

These approaches—which apply more generally to QA test brainstorming, as well—may help them conjure up use cases that software designers might not have assumed would ever occur.

Other QA Engineering Interview Question Ideas

We offer here some more suggestions for when interviewers wish to delve further into the applicant’s behavioral traits and logical skills:

- Do you engage in any activities off the job related to quality assurance, such as blog reading or writing, or participation in IT or QA forums and organizations?

- What is meant by test effectiveness and test efficiency, and can you provide past or hypothetical examples?

- Test effectiveness generally refers to the relative quantity of valid and resolvable defects found, while test efficiency means gauging the time and resources spent along the way. Depending on the candidate’s particular experience, a response to this question might be a quick summary or might develop into a dissertation on test-metric tools and analyses. The point here is to explore the applicant’s understanding and experience with formally assessing quality assurance efforts.

- Which is more important, a focus on testing or on quality?

- It is important to keep in mind the end goal is quality, and testing is a key means to achieving that.

- How do you classify priority vs. severity with defect reports? Can you give past or hypothetical examples of defects that have high severity but low priority, and vice versa?

- An otherwise basic and simple question, but a logical exercise all the same, so as to observe the agility with which the applicant formulates their response.

- Have you ever come across a system or a GUI that was very difficult to generate test cases for? If so, why, and how did you go about completing the cycle? Conversely, have you ever seen a system or GUI that was extremely easy to write test cases for, and why? Elaborate on one or two of the most difficult defects you’ve ever found, how you found them, and why they were challenging to uncover.

- This topic can be valuable to provide more insight into how the candidate’s logical, intuitive, and deductive mental processes function as well as to further illustrate related skills.
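For the test effectiveness and test efficiency question above, it can help to have one concrete pair of formulas in mind when listening to the answer. Teams define these metrics in different ways, so the sketch below shows just one possible interpretation; the `CycleStats` fields and both formulas are assumptions for illustration, not a standard.

```python
from dataclasses import dataclass


@dataclass
class CycleStats:
    """Hypothetical summary of one test cycle."""
    defects_found_in_test: int  # valid defects found before release
    defects_escaped: int        # defects first reported after release
    tester_hours: float         # effort spent on the cycle


def effectiveness_pct(stats: CycleStats) -> float:
    """Share of all known defects that testing caught, as a percentage."""
    total = stats.defects_found_in_test + stats.defects_escaped
    if total == 0:
        raise ValueError("no defects recorded; effectiveness is undefined")
    return 100.0 * stats.defects_found_in_test / total


def defects_per_hour(stats: CycleStats) -> float:
    """One simple efficiency measure: valid defects found per hour of effort."""
    if stats.tester_hours <= 0:
        raise ValueError("tester_hours must be positive")
    return stats.defects_found_in_test / stats.tester_hours
```

A cycle that found 45 defects, let 5 escape, and took 90 hours would score 90 percent effectiveness and 0.5 defects per hour under these definitions. A strong candidate may well propose different formulas and explain why.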

Having looked deeply into a candidate’s investigative skills, it’s time to switch gears and take a broader look at their CV.

Assessment Category #2: Technical Expertise, Professional Experience, and Career Goals

The second major area to cover is technical skills and proficiencies, including the demonstration of solid professional experience. This includes both general technical and software development topics as well as QA-specific methodologies and testing tools.

It is therefore helpful if you’re able to get an idea of the candidate’s understanding of some core development and QA terminology. As mentioned at the beginning of this guide, many key terms and related concepts in the quality assurance sphere are under constant debate. All the better! This gives the interviewer insight into the candidate’s particular point of view on the concepts and dynamics behind the terms.

It’s definitely worth it to review skills relevant to the specific platforms, technologies, methodologies, and tools that are to be used in the organization’s IT projects. However, even if the candidate lacks experience here, a solid engineer will almost certainly be able to learn and adapt to new tools and technologies—prior experience with yours is mostly a bonus.

Interviewers will also want to get an idea of the applicant’s expectations and aspirations when it comes to career goals. Which roles, if any, do they prefer to assume (or avoid) in the team, and why? What are they looking for from potential employment with your organization? How do they want to see their career develop and advance within the near future as well as further down the road? What do they plan on doing in order to realize their goals?

Technical Proficiencies, Concepts, and Terms

Let’s continue on with a more extended question-and-answer segment, covering various areas relating to technical talent. Explore the applicant’s knowledge, understanding, and proficiency with general software development and concepts, methodologies, and terms.

Note: Speaking of terminology, the International Software Testing Qualifications Board (ISTQB) publishes a helpful and comprehensive resource listing hundreds of terms and corresponding IEEE/ISO sections, cross-references, and synonyms.

Find out if they have potential cross-functional skill sets in areas that interact closely with teams and processes. These might include prior employment as a software architect or developer, documentation specialist, project manager, or DevOps engineer; or, perhaps even more distantly related experience in a non-IT field, such as in manufacturing quality control.

Also, make sure to include questions regarding scripting as well as SQL/data-tier-related issues, considering that coding and back-end technologies are common and important elements throughout software and testing projects.

Here is a list of questions to customize according to the candidate’s profiles and the flow of the interview so far:

Q: Explain the stages in the software development life cycle (SDLC) and give a quick summary of the different development process models with which you are familiar, such as Waterfall, Agile, and Agile sub-types like Scrum.

Discover the applicant’s understanding and familiarity with the SDLC and development process models. QA mirrors the SDLC along the path of software production, usually playing a part at every step along the way. QA not only helps to ensure defect-free products, but simultaneously assists in optimizing development processes.

Q: Describe the software testing life cycle (STLC) phases and how these interrelate with the SDLC. At which stage of development efforts should QA start to get involved in projects, and what factors should be considered when deciding that?

Whether the STLC was previously addressed in the first written interview segment or not, this will help you get a more detailed perspective on STLC stages and the applicant’s understanding of how these interconnect with the SDLC.

Generally, quality assurance should optimize for joining early, and most times that means right from the start of the SDLC. For example, a development cycle in concept stages for a large, complex, and original consumer software product would certainly benefit from fully integrating the testing cycle with development up front.

However, there may be less need for QA input at the very first stages of development with a smaller in-house project where internal user personnel directly explain thorough specs to fellow in-house developers who are highly skilled at routinely designing and coding the architecture that the application will use.

Q: Do you have experience writing test strategy documentation, and can you outline what a test strategy document might contain? What do you think might be an example of an effective test strategy?

Test strategy documents outline overall testing standards and methods to be used in order to achieve testing-related goals. They may apply at a high organizational level, or at more specific phase and test plan levels. Various potential sections may be included.

Determining what testing strategy may be optimal for a project or phase depends on complex factors and considerations. There is no right or wrong answer to this question.

Mini-quiz: Central QA Terms and Concepts

These questions are shorter but still worth asking. Quiz the candidate regarding a few central terminologies and concepts:

Discussion: Testing Types

The subject of testing types is broad, complex, and hard to organize. There are more than a hundred identifiable terms for different types of testing. This is why many guides simply ask about a handful of types or testing-type-related issues and leave it at that—but we think the topic deserves better attention.

Testing types reflect the numerous real-world approaches that must be taken to cover overlapping factors, such as project/app type, bug types, the current STLC stage, and who will perform the testing. Here are some useful approaches to take when organizing testing types:

High-level categorizations:

- Q: What are the different testing levels?

- A: Unit, integration, system, and acceptance testing.

- Q: What are some of the major testing types?

- A: E.g., smoke, regression, and acceptance testing.

Common characteristics:

- At what stage during the development and QA life cycles might a given testing type be used?

- Which testing types might be used with different project profiles, such as with web applications or with mobile apps?

- Which testing types may relate to manual testing vs. automated testing?

- Which testing types are likely to be performed by people in different roles—e.g., developers, testers, end-users, project managers, internal sales associates, etc.?

Asking the candidate to review common and major testing types and basic corresponding characteristics in this open-ended manner is an excellent way to observe their level of familiarity and skill with testing in general, their knowledge and expertise with those central types of testing, and an extra bonus: their communicative ability to organize and list them on the fly.

It’s also valuable—either as an alternative or an addition to the above free-form questions—to ask questions tailored to more specific testing types or their related dynamics. Three of the most central specifics to cover are:

- What is regression testing, what are some of the benefits and drawbacks of this type of testing, and what are some different subtypes of regression testing?

- What is smoke testing and when is it a good idea to automate this testing type? Compare and contrast smoke testing with sanity testing.

- What kinds of testing types are used when testing web-based applications, what kinds of specific tests would you run with a web-based app, and what are some useful tools for running them?

Testing-type-related terms may cross-reference with others or be synonymous in different ways. What’s important is that a candidate cover a solid cross-section of testing types along both of those dimensions, along with any your organization specifically emphasizes.

Regardless of approach, candidates should demonstrate a clear grasp of what QA engineering is: not just a role, but a mindset focused on prevention, collaboration, and continuous improvement.

Q: Which of the SDLC process models do you have more experience with and are there any that you prefer, or do not prefer, to work with regarding QA endeavors and the STLC? Why?

This will let you get an idea of the applicant’s insight and experience with how SDLC process models such as Scrum or Waterfall affect and interrelate with QA endeavors and the STLC. Engineers should generally be comfortable with and adaptable to different development approaches and philosophies. However, tying in with the previous questions regarding SDLC, STLC, test strategies, and testing types, find out if they have opinions and insights regarding which methodologies might be better choices depending on project, development, and QA related factors, and why. Encourage the candidate to explain any notable preferences they may have on the topic.

Q: Highlight the technologies, platforms, architectures, programming languages, and tools that you have most worked with, if there are any that you particularly prefer, and why.

Again, no wrong answers here, but a chance to see if the applicant knows what they’re talking about, and can also defend their opinions professionally, with facts.

Q: What experience and proficiency level do you have with specific automation scripting languages? Do you enjoy writing code-based scripts—why or why not? Do you consider yourself a quick study when it comes to learning new coding and scripting languages?

Be sure to explicitly cover automation scripting and coding capabilities, including any specific languages that relate to your organization’s projects.

Q: What is your general knowledge and experience with back-end data-tier and SQL structures, languages, and principles, and what type of experience do you have with back-end- and SQL-related testing?

Data technology is an integral part of many system architectures, and plays key roles in system stability, scalability, performance, functionality, and maintainability. Therefore, back-end testing is an important part of many testing cycles, either indirectly via a GUI or directly upon the data tiers themselves.

Some testing cycles may involve no data-related skills, others perhaps advanced SQL skills, but most projects will call for at least a moderate understanding of, and comfort with, back-end data and SQL technology.

Therefore, most applicants don’t need to be experts in data-related areas, but ideally, they should be familiar with at least the SQL language, the basics of relational database management system (RDBMS) principles and schemas (including stored procedures), and the concept of SQL vs. NoSQL databases. It is useful and relevant to explore the applicant’s potential knowledge of:

- Data error and optimization testing issues, such as data integrity and referential integrity (RI), key violations, deadlocks, data corruption, data loss and data breach, and connection issues as well as relational database normalization (which is a central issue regarding RDBMS performance, stability and scalability)

- Data schema and coding concepts such as tables, fields, records (rows), indexes, foreign keys (FKs), constraints, SQL data types, stored procedures, triggers, functions, views, and FK cascade update/delete

- Advanced concepts like business intelligence (BI), extract, transform, and load (ETL), data warehouse and data mart, OLAP and CUBE, and proprietary SQL languages like PL/SQL (Oracle), T-SQL (Microsoft), and UNQL variants for NoSQL databases

Find out what experience the candidate has had in past projects pertaining to testing and using RDBMSes, SQL, and NoSQL data technologies.
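To ground the discussion, it can be useful to ask the candidate to talk through a small direct test against the data tier. The sketch below uses Python's built-in `sqlite3` module with an in-memory database; the `customers` and `orders` tables are hypothetical. It checks two of the concepts listed above: referential integrity (an orphan row is rejected) and FK cascade delete.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Build a tiny in-memory schema with a foreign key and cascade delete."""
    conn = sqlite3.connect(":memory:")
    # SQLite leaves foreign key enforcement off unless it is enabled per connection.
    conn.execute("PRAGMA foreign_keys = ON")
    conn.execute(
        "CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT NOT NULL)"
    )
    conn.execute(
        "CREATE TABLE orders ("
        " id INTEGER PRIMARY KEY,"
        " customer_id INTEGER NOT NULL"
        " REFERENCES customers(id) ON DELETE CASCADE)"
    )
    return conn


def orphan_insert_is_rejected(conn: sqlite3.Connection) -> bool:
    """Referential integrity: an order for a missing customer must fail."""
    try:
        conn.execute("INSERT INTO orders (id, customer_id) VALUES (1, 999)")
    except sqlite3.IntegrityError:
        return True
    return False


def cascade_delete_removes_orders(conn: sqlite3.Connection) -> bool:
    """FK cascade: deleting a customer should also delete that customer's orders."""
    conn.execute("INSERT INTO customers (id, name) VALUES (1, 'Ada')")
    conn.execute("INSERT INTO orders (id, customer_id) VALUES (10, 1)")
    conn.execute("DELETE FROM customers WHERE id = 1")
    remaining = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
    return remaining == 0
```

Other database engines enforce and configure foreign keys differently, so a candidate's answer may reasonably differ in the details depending on the RDBMS they know best.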

Q: When completing test execution, how do you generally decide when it is time to stop testing? Describe an occasion when it was difficult to know when testing should be concluded, and why, and how that issue was resolved.

The test plan is intended to define benchmarks and criteria to use in order to know when testing should stop. But applications, projects and processes are complex, and it is not always an easy task to identify the precise time that testing has been successfully completed.

Encourage the applicant to outline criteria for making such determinations, and to describe a situation in which it was a challenging matter and how they solved the issue.

A summary of several of the typical considerations would include:

- Budget, deadlines, and stakeholder consensus

- Test coverage and test case pass rate are high/above benchmarks

- Coverage of code/functionality/requirements is satisfactory

- Defect discovery/density are low/below benchmarks

- High priority and critical defects and test cases are satisfactorily resolved
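The measurable items in this list can be expressed as an explicit exit-criteria check. The sketch below is a hypothetical illustration: the field names and threshold values are assumptions, and real benchmarks would come from the test plan. Budget, deadlines, and stakeholder consensus are deliberately left out because they are judgment calls rather than computed values.

```python
from dataclasses import dataclass


@dataclass
class ExitCriteria:
    """Hypothetical benchmarks that a test plan might define."""
    min_pass_rate: float = 0.95
    min_requirement_coverage: float = 0.90
    max_open_critical_defects: int = 0
    max_new_defects_per_day: float = 2.0


@dataclass
class CycleStatus:
    """Hypothetical snapshot of the current test cycle."""
    passed: int
    executed: int
    requirements_covered: int
    requirements_total: int
    open_critical_defects: int
    new_defects_per_day: float


def unmet_criteria(status: CycleStatus, criteria: ExitCriteria) -> list:
    """Return the names of the criteria that are not yet satisfied."""
    pass_rate = status.passed / status.executed if status.executed else 0.0
    coverage = (
        status.requirements_covered / status.requirements_total
        if status.requirements_total
        else 0.0
    )
    unmet = []
    if pass_rate < criteria.min_pass_rate:
        unmet.append("pass rate")
    if coverage < criteria.min_requirement_coverage:
        unmet.append("requirement coverage")
    if status.open_critical_defects > criteria.max_open_critical_defects:
        unmet.append("open critical defects")
    if status.new_defects_per_day > criteria.max_new_defects_per_day:
        unmet.append("defect discovery rate")
    return unmet
```

An empty result means the measurable benchmarks are met; whether testing actually stops still depends on the non-measurable considerations in the list.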

More Questions

There’s a host of additional questions that may be asked of a prospective candidate, such as the following:

At this point, an interviewer should have a good idea of their candidate’s skill level. What about their specific experiences?

Resume Review

By this point, various aspects of the candidate’s professional history will already have been discussed, including years of experience, how they fit your organization’s job description, as well as tactical issues such as time zones and their compatibility with the rest of your development team.

Now it’s time to go over the applicant’s resume and tie their complete professional history together with the preceding activities and question-answer rounds, comparing their written CV with what has been learned thus far.

There may be yet more specific domains, industry niches, skills, and roles in the candidate’s history, as well as potential markers relating to personality and behavioral traits that will help to shed more light on their professional and personality profiles.

QA Tools Portfolio

Next, follow up the resume review with a focused inspection of the tools with which they have experience, and the level of proficiency with each one. Get the applicant’s perspective and opinion on them as you go.

QA activities are highly tool-oriented, if not tool-dependent, and there are hundreds of products available for use across all functions and aspects within the industry. Therefore, we won’t list them here, but it’s easy to find detailed, categorized lists of tool-based resources.

As mentioned before, it is not important for the candidate to know every particular tool. Rather, this serves as a reference to an additional part of the candidate’s overall profile. It may shed a little more light on which capacities the applicant has typically performed, or even which they prefer, depending on the types of tools they are most proficient with or which ones they may express enthusiasm over. This will also provide insight as to potential training requirements, or absence thereof, for new additions to the team.

Who knows, a prospective hire may already be proficient in a specific tool that your organization was considering switching over to, and provide additional insight into that decision process, or even be able to spearhead training the department on it—that would be a nice win!

Career Path Aspirations and Preferences, and Your Organization’s Projects

Engineers often wear many different types of hats throughout their career. Technical platforms, system architectures, and coding languages may change from one project to the next; tools, testing methodologies, and team structures will vary across their employment path with each organization; they might collaborate with test user groups in the morning, coordinate with development leads and DevOps engineers mid-day, and go over deadlines and deliverables with management in the afternoon.

Candidates also sometimes change career genres, going from software development or project management into QA, or vice versa; or, perhaps from IT into a non-IT discipline, then back into IT. And, although it is certainly a cornerstone characteristic within the industry to remain open for anything and readily adaptable to new and different prospects, either technical or otherwise, an applicant may have general or specific work environment preferences and career path goals in mind that may be important to address.

So, learn what they might be looking for, or not looking for, concerning their immediate and future on-the-job settings and career development aspirations, and why.

Address the following potential inquiries, according to what has been learned about the applicant’s history and expertise thus far in the interview:

- Are there any kinds of software project profiles that you prefer to work with, or that you prefer to avoid, such as larger vs. smaller systems, larger vs. smaller team sizes, web architectures vs. mobile or desktop, or other relevant project characteristics? Why?

- Are there any roles and activities within quality assurance that you prefer, or do not prefer, such as planning and strategizing, team leading and managing, cross-functional collaboration, or different types of testing, analyses, and metrics? Why?

- What are your career goals and aspirations for the immediate future, and mid- or longer-term, and what do you plan to do to successfully achieve them?

- How do you see yourself growing in the role, and what have you learned about being a QA engineer in dynamic environments?

- What do you feel you could bring to our organization and add to our development projects?

- What do you expect from our organization, and do you have any questions about our organization’s philosophies, methodologies, and projects?

Then, follow up these questions with any relevant discussions about the natures of projects at hand in your organization.

Assessment Category #3: Communicating, Coordinating, Documenting, and Reporting

Interviewers should now have a nearly complete picture of their candidates’ professional QA profiles. The third area in which a quality candidate should display superior performance and ability is communication and coordination, including written documentation and reports.

Good communication skills are no doubt a desirable characteristic in any team member in any given capacity or role within an organization, and the previous two sections will have already provided ample opportunity to assess this area. That said, there are a few additional aspects worth checking.

It is essential for the candidate to be able to translate abstract, hard-to-explain ideas into understandable verbal communications, as well as clear written text. Also, they should be confident, positive, and effective when coordinating and interacting with cross-functional departments, e.g.:

- Working with team leads and managers to plan and organize high level processes and protocols

- Bug tracking: Reporting the bugs and errors that have been found in other teams’ design and development work

- Collaborating with test users and groups

It’s inevitable that the engineer will have to explain and defend complicated and abstract logic and reasoning behind bug, performance, and usability reports.

There will be times when other members in the organization will disagree with interpretations of test results, or perhaps with the test results themselves, and the candidate should be able to resolve such scenarios with positivity and clarity. Testers will also need to calmly and confidently address defect escape rates regarding bugs that will invariably slip through even the most stringent testing procedures all the way to production.

It’s imperative the candidate engage all of this in a highly cooperative way.

Considering that the end goal of the IT endeavor is to assure a positive and error-free experience for end users of the organization’s products, communication, documentation and reports are key elements in the engineer’s role. They must effectively advocate for users by clearly conveying accurate interpretations and relevant concepts and dynamics relating to QA findings. This includes when the end users are in-house management and personnel, such as with internal applications that manage corporate processes and play key parts in maintaining a healthy organization.

There are three subsections to review in this assessment category:

- General communication styles and approaches, including interaction with fellow team members.

- Cross-functional coordination and collaboration.

- Documentation and reporting skills, including portfolio demos.

Communication and QA In-team Collaboration Styles and Approaches

Move into the last section of the interview process with an open-ended review and discussion about how the applicant sees themself as a communicator, including their levels of self-confidence in varying interactive situations.

Ask them to tie in their own perspective regarding their communication styles and approaches with how they like to work together with fellow engineers. It is just as important to interact and coordinate positively and effectively with other personnel with whom they will have more regular contact, as it is to do so with development, management and other non-QA roles in the organization. And there is just as much possibility for disagreement and disparate points of view within the team as there is with cross-departmental collaborators.

There doesn’t need to be a particular structure to this portion, and the preceding parts of the interview should help set this up for a natural progression through topics and questions such as these:

- What do they see as their stronger points, and weaker points, regarding their general communication styles, and how does that directly or indirectly relate to interaction with fellow team members?

- Does the candidate consider themselves to be introverted, extroverted, both/neither, or does it depend on the situation? How does their on-the-job communication style change if they are under stress, such as with deadlines or other pressures?

- When and why do they feel more confident, or more apprehensive, about engaging others in topics that relate to potential disagreements?

- How do they tend to approach and handle communication in the workplace when there is some level of disagreement? Can they cite examples with past team members or other coworkers?

- How would the candidate say past co-workers might describe their confidence level and communication styles regarding both positive topics as well as potentially negative issues or disagreements?

- What kinds of attitudes and communication styles do they prefer to see in fellow team members or other coworkers, and why?

Make sure to ask for real-world examples when and where possible relating to their comments and answers.

Cross-functional Coordination and Reporting

Next, move on to address the type of outlook and experience the candidate has had when working together with software developers and architects, project managers, DevOps engineers, and those from other departments who collaborate regularly with QA. Also get an idea of what kind of contact and interaction they may have had with test users and test groups.

These interactions are typically more formalized and intermittent, and relate to key areas of processes such as planning and strategizing, reviewing and reporting on processes and productivity, and explaining and summarizing test results and methodologies with other departments. We suggest selecting from the following questions:

- Considering that the essential role of quality assurance is not only to critique the organization’s software products, but also to review and give feedback on the related development processes, strategies, etc., is there anything that you try to do in order to inject positive elements into the endeavor while working with programmers and other departments?

- Can you give any examples of previous experiences that stand out as either very positive or very negative while working closely with leads, managers, and team members from other departments? What happened, and how did you handle those situations, and how were they ultimately resolved?

- How do you tend to approach a situation in which you must account for an error or oversight on your part that affects other departments? For example, a potentially important defect slips past a testing procedure that you were responsible for performing, ends up in a production release, and requires effort and resources to remedy, perhaps with customer impact. Can you think of an example of such an incident from past projects?

- How do you handle and approach pushback from non-QA personnel regarding testing methods or testing results that others disagree with or question? Can you describe specific examples from previous projects?

- How do you approach a situation where you feel strongly about a certain point of feedback that you have not yet delivered to developers or other departments, but you believe that they will probably disagree with your findings, react negatively, or both?

- What has been your experience working with test users and test user groups, and is there anything particularly positive or negative that stands out regarding such interactions, and why?

Documentation Styles, Approaches, and Portfolio Samples

Documentation and reporting are essential to every stage and process in QA. Every candidate should demonstrate familiarity and comfort with skills in this area.

Before the interview, ask them to bring one or two portfolio samples of past documentation and/or reports that would help illustrate their paper-based proficiencies. NDAs or other factors may prevent this; in that case, request that they prepare a couple of fictitious examples of documentation or reporting with which they have experience instead. Other kinds of non-QA portfolio samples might be available depending on the applicant’s history, such as those relating to software design and development, project management, DevOps, or data analysis; at minimum, technical writing of any kind should be helpful. The interviewer might also request ahead of time a specific kind of sample for this segment.

Begin by looking over the documents the applicant has supplied and discuss any relevant points. If no samples are available, either move on to the following verbal segment, or perhaps have a short conversation as to why a portfolio of documents and reports was not an option.

Next, pose some questions to the candidate to get a better idea of specific skills in this arena, such as the following:

- In what ways do you feel documentation and reporting are important or unimportant in the QA field, and why?

- What roles have documentation and reporting played for you in your work history?

- Is there anything that you enjoy or do not enjoy about documentation and reporting, and why?

- What is your favorite, and your least favorite, type of documentation and reporting (or a related task), and why?

- Can you give a quick summary of the various kinds of deliverables, artifacts, and reporting in QA?

- Do you have any experience in other types of documentation and reporting outside of QA, and if so, how do those relate to your paper-based efforts within quality assurance?

As always, encourage the applicant to provide meaningful examples.

Where to Hire QA Engineers

QA engineering teams can be built in many different ways, including in-house hiring, outsourcing, or via on-demand, vetted talent platforms.

In-house Hiring

Larger companies typically hire at least a small number of full-time, in-house QA engineers to build and/or maintain their core quality assurance processes. Hiring is typically accomplished through technology recruiters or by placing job ads on sites like Indeed, Glassdoor, or LinkedIn.

Outsourcing

With outsourcing, a firm hires external individuals or companies—sometimes located in different countries—to handle QA engineering tasks. This approach allows businesses to access specialized skills, reduce costs, and speed up project delivery by leveraging global talent and resources. Typically, the client and the outsourced quality assurance developer establish a clear agreement outlining project expectations and timelines before work begins.

On-demand, Vetted Talent Platforms

Sourcing QA developers through a vetted, on-demand developer platform is a good option for startups, SMBs, and enterprise-level companies. In this model, the marketplace verifies the skill set of each local or offshore QA expert through testing and interviews. Some platforms also offer:

- Matching services in which a talent specialist matches companies looking to hire a QA team with candidates who offer the preferred experience, communication skills, industry specialization, and price

- Full-time dedicated QA specialists for your project, as well as part-time or hourly QA developers who may be working on multiple projects

- The ability to outsource work to remote, offshore QA engineers, QA development teams, or individual QA consultants who will be managed by your in-house product owner or engineering lead

How Much Does It Cost to Hire a QA Engineer?

The cost to hire a QA engineer depends on several factors, including project complexity, qualifications, and location. Companies looking to hire agile QA developers for test automation, performance testing, or other specialized tasks may encounter higher rates.

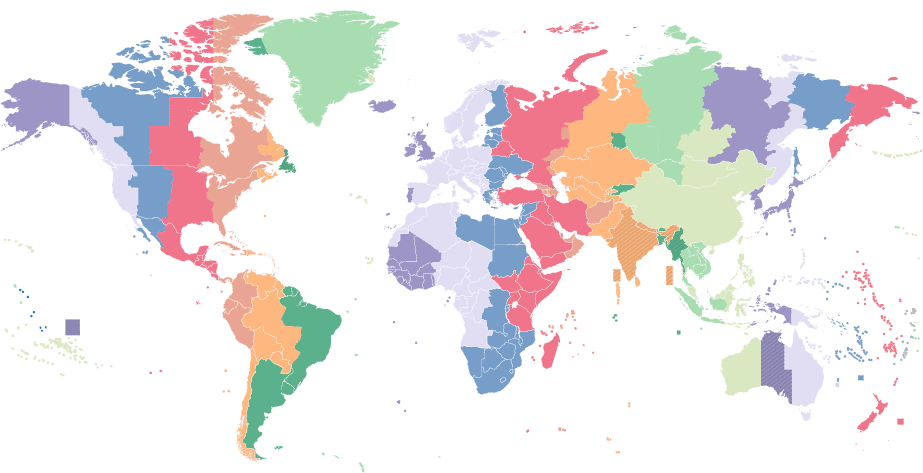

We’ve compiled information on the average salaries of QA engineers from several countries via Glassdoor, and have reported them below in US dollars. Please note that actual pricing can vary greatly, with experienced QA experts able to demand higher pay.

| Country | Median total salary per year, USD |
| --- | --- |
| United States | $101,000 |
| Canada | $54,000 |
| Mexico | $22,000 |
| Brazil | $17,000 |
| United Kingdom | $57,000 |
| Germany | $69,000 |
| Romania | $19,000 |
| China | $31,000 |
| India | $8,000 |
| Australia | $53,000 |

Source: Glassdoor, July 2025

These numbers represent the median incomes as reported by Glassdoor’s proprietary Total Pay Estimate model based on salaries collected from the platform users. Wages in many areas consist of a base salary and additional pay, which may include cash bonuses, commissions, tips, and profit sharing. Glassdoor information and the currency exchange rates were accurate as of July 2025.

Why Do Companies Hire QA Engineers?

In today’s fast-paced development environments, are QA engineers in demand? Absolutely. Businesses across industries rely on them to maintain reliability, usability, and speed to market. QA engineers play a vital role in ensuring product quality by identifying and fixing errors before product launch, thereby improving the reliability, security, and usability of the software. Their proactive approach to detecting problems early in the development cycle prevents costly fixes later in the process by addressing issues when they’re easier to resolve.

What’s more, these professionals assess software from the user’s point of view, a valuable insight that helps uncover usability concerns that developers might otherwise overlook, ultimately leading to a better user experience and a more successful product.

In this hiring guide, we’ve delved thoroughly into the quality assurance process. In so doing, we’ve drawn a clear picture of everything interviewers need to know to create the best possible hiring process.

It’s inherently difficult to gauge several important soft skills and traits of a QA engineer. These include a solid level of self-confidence, deductive and intuitive capacities for logical reasoning and technical investigation, and fearless-yet-positive and team-oriented communication styles.

But our approach here allows interviewers to cover these difficult points “for free,” as it were, during more typical-looking interview segments. Through a couple of simple (but somewhat intensive) exercises and a complete review of the candidate and their experience, technical skill sets, documentation approaches, and portfolio samples, interviewers can turn potential assessment hurdles into golden opportunities.

We hope this guide helps you gain accurate and valuable insights about your candidates so that, ultimately, you can hire not only a great QA/test engineer, but also the right one for your team.