10 Essential QA Interview Questions

Toptal sourced essential questions that the best QA engineers can answer. The questions are drawn from our community, and we encourage experts to submit questions and offer feedback.


What is the difference between software quality assurance and software quality control?

Software quality assurance (SQA) is process-oriented. It comprises all the activities and procedures focused on the whole software development process. Its goal is to minimize the risk of defects and failures in the final product before it is released. This is done by designing, implementing, and maintaining procedures that help developers and software testers do their jobs as efficiently as possible.

Software quality control (SQC), unlike SQA, is completely product-oriented. The goal of SQC is to perform testing activities on the final product to validate that it meets the needs and expectations of the customer.

You have an input field that accepts an integer. The valid input is a positive two-digit integer. Specify test cases according to equivalence partitioning and boundary value analysis testing techniques.

Quite often in QA, it is not feasible to test all the possible test cases for all scenarios. In the case of valid input being a positive two-digit integer, there are already 90 valid test cases, and there are many more invalid test cases. In such situations, we need a better way of choosing test cases, while making sure that all the scenarios are covered.

Equivalence partitioning is a software testing technique that divides the input data into partitions. The system under test behaves the same way for every input within a partition, so testing one representative value per partition is enough. For the example in question, we can create four partitions:

- Any positive two-digit integer: a number greater than 9 and smaller than 100 (valid input)

- Any single-digit integer: a number greater than -10 and smaller than 10 (positive, negative, or zero) (invalid input)

- Any negative integer with two or more digits: a number smaller than -9 (invalid input)

- Any positive integer with three or more digits: a number greater than 99 (invalid input)

Boundary value analysis testing is a software testing technique that uses the values of extremes or boundaries between partitions as inputs for test cases.

| | Partition 1 | Partition 2 | Partition 3 | Partition 4 |
|---|---|---|---|---|
| Range | ≤ -10 | -9 to 9 | 10 to 99 | ≥ 100 |
| Input | invalid | invalid | valid | invalid |

From those four partitions and the boundaries between partitions, we can devise the following test cases:

- Test cases for equivalence partitioning are: 42 (valid); -15, 2, and 107 (invalid)

- Test cases for boundary value analysis are: 10 and 99 (valid); -10, -9, 9, and 100 (invalid)
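These test cases can be written as a small, table-driven check. The sketch below assumes a hypothetical validator named `is_valid_input` that implements the field's rule. The test values are taken directly from the two lists above.

```python
def is_valid_input(value: int) -> bool:
    """Hypothetical validator for the field: accepts positive two-digit integers."""
    return 10 <= value <= 99


# One representative value per equivalence partition (values from the answer).
EP_CASES = {42: True, -15: False, 2: False, 107: False}

# Values on each side of the partition boundaries (values from the answer).
BVA_CASES = {-10: False, -9: False, 9: False, 10: True, 99: True, 100: False}


def failing_cases(cases):
    """Return the inputs whose actual result differs from the expected one."""
    return [value for value, expected in cases.items()
            if is_valid_input(value) != expected]


if __name__ == "__main__":
    for name, cases in (("Equivalence partitioning", EP_CASES),
                        ("Boundary value analysis", BVA_CASES)):
        failures = failing_cases(cases)
        print(f"{name}: {'all passed' if not failures else failures}")
```

The two techniques complement each other. Suppose the validator had an off-by-one error such as `10 < value <= 99`. Every equivalence-partitioning case would still pass, and only the boundary case 10 would fail.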

In test automation, we use both assert and verify commands. What is the difference between them and when are they used?

The essential part of testing is the validation of results. In test automation, we make validation checks throughout each test case to ensure that we are getting the right results.

To perform those checks in the Selenium [https://www.toptal.com/selenium/interview-questions] framework, for example, we use the Assert* and Verify* families of commands.

Both assert and verify commands check whether the given conditions are true. The difference is what happens when a condition is false and the check fails. When an assert command fails, the test breaks immediately and the code following it is not executed. When a verify command fails, the failure is recorded and execution continues regardless.

We use assert commands when the code following them depends on their success. For example, we may want to perform actions on a page only if we are logged in as an admin user. In that case, we assert that the currently logged-in user is the admin user, and the rest of the test executes only if that condition is met. Otherwise there’s no point in continuing, because we know we’re testing in the wrong environment.

We use verify commands when the code after them can run whether or not the condition was met. For example, when checking a web page title, we just want to make sure the title is as expected and log the outcome of the verify command. An incorrect title alone shouldn’t affect the rest of what’s being tested.
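The difference in control flow can be shown without a browser. This is a minimal Python sketch, not Selenium's API. `assert_equal`, `Verifier`, `admin_page_test`, and the expected title "Admin Panel" are all hypothetical names chosen to mirror the admin-user and page-title examples above.

```python
class Verifier:
    """Hypothetical soft-check helper (not a Selenium API): records failures
    instead of stopping the test, the way verify commands behave."""

    def __init__(self):
        self.failures = []

    def verify_equal(self, actual, expected, label):
        if actual != expected:
            self.failures.append(f"{label}: expected {expected!r}, got {actual!r}")


def assert_equal(actual, expected, label):
    """Hard check: raise immediately, so nothing after it runs."""
    if actual != expected:
        raise AssertionError(f"{label}: expected {expected!r}, got {actual!r}")


def admin_page_test(current_user, page_title):
    """Return the steps that ran and any soft failures that were logged."""
    steps = []
    # Assert: acting on the page is pointless unless we are the admin user.
    assert_equal(current_user, "admin", "logged-in user")
    steps.append("admin actions")
    verifier = Verifier()
    # Verify: a wrong title is recorded, but the test keeps going.
    verifier.verify_equal(page_title, "Admin Panel", "page title")
    steps.append("remaining checks")
    return steps, verifier.failures
```

Calling `admin_page_test("admin", "Admn Panel")` runs both steps and returns one logged title failure. Calling `admin_page_test("guest", "Admin Panel")` raises `AssertionError` before any admin action runs.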


What is the difference between verification and validation?

Verification and validation are two fundamental terms in quality assurance that are often mistakenly used interchangeably. When it comes to building the right solution correctly, we can ask ourselves two questions:

- Are we building the system properly? (verification)

- Are we building the proper system? (validation)

First, we make sure that the system we are building works and has all the necessary parts according to the specified requirements. This process is called verification. Then, toward the end of the software development process, we focus on ensuring that the system satisfies the needs and expectations of the end user. This process is called validation.

Both verification and validation are vital in the QA process, because each one catches defects in a different way. Verification identifies defects in the specification documentation (for example, through reviews), while validation finds defects in the implemented software.

What is the difference between severity and priority? Give examples of issues having high severity and low priority versus low severity and high priority.

Issue severity and issue priority are both important for proper issue management. Severity describes how seriously the issue affects the system and its users. Priority describes how urgently the issue should be resolved.

Severity is a relatively objective characteristic because it is based on how the issue affects end users. If end users can interact with the application normally and its normal use is not obstructed, the severity is low. If end users encounter crashes or similar problems while using the application, the severity is high.

Priority, on the other hand, is set by the judgment of a responsible person in accordance with the specified requirements. Usually, the more visible and reachable an issue is to end users, the higher its priority.

High severity but low priority issues include scenarios like:

- Application crashes when an obscure button is clicked on a legacy page which users rarely interact with

- Unpublished posts (draft versions) in admin can be accessed without a valid login if the exact address is typed in manually (e.g. https://www.private.domain/admin/panel/posts/drafts/19380108/edit)

- Clicking on a submit button 50 times causes the application to crash

Low severity but high priority issues include these types of scenarios:

- Incorrect company logo on the landing page

- Typo in the page title on the landing page

- Wrong product photo is being displayed
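Because severity and priority are independent fields, a triage queue ordered by priority can put a low-severity issue ahead of a high-severity one. The Python sketch below illustrates this under stated assumptions. The ordering rule (priority first, severity only as a tie-breaker), the `Level` scale, and the level assigned to each example issue are hypothetical, not a standard.

```python
from dataclasses import dataclass
from enum import IntEnum


class Level(IntEnum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


@dataclass
class Issue:
    title: str
    severity: Level  # impact on end users
    priority: Level  # urgency of the fix (a judgment call)


def work_queue(issues):
    """Hypothetical triage rule: priority first, severity as a tie-breaker.

    sorted() is stable even with reverse=True, so issues with equal keys
    keep their original relative order.
    """
    return sorted(issues, key=lambda i: (i.priority, i.severity), reverse=True)


ISSUES = [
    Issue("Application crash on a rarely used legacy page", Level.HIGH, Level.LOW),
    Issue("Incorrect company logo on the landing page", Level.LOW, Level.HIGH),
    Issue("Typo in the page title on the landing page", Level.LOW, Level.HIGH),
]

if __name__ == "__main__":
    for issue in work_queue(ISSUES):
        print(issue.priority.name, issue.severity.name, issue.title)
```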

In which scenarios is manual testing a better option than test automation?

Test automation has many advantages, and test cases should be automated when possible and appropriate. However, in certain scenarios automation is not preferable and manual testing is the better option:

- When the validation depends on the person performing the test (UI/UX, usability, look-and-feel)

- When the feature is being developed with constant changes and automating the test cases would mean a waste of resources

- When the test cases have extreme complexity and automating them would be a waste of resources

- When the requirement is for testers to perform manual sessions in order to gain deeper insight into the system

What is a requirements traceability matrix (RTM), and what is it used for?

A requirements traceability matrix (RTM) is a document that shows the relationship between test cases (written by the QA engineer) and the business/technical requirements (specified by the client or the development team). The principal idea of an RTM is to ensure that every requirement is covered by test cases, so that no functionality is left untested.

Using an RTM, we can confirm whether we have 100 percent test coverage of the business and technical requirements. It also gives a clear overview of defects and execution status, and it highlights missing requirements and discrepancies in the documentation.

RTMs also give deeper insight into QA work, including the effort QA engineers spend going through test cases and reworking them.

For example, say we have the following requirements:

- R.01: A user can log in to the system

- R.02: A user can open the profile page

- R.03: A user can send messages to other users

- R.04: A user can have a profile picture

- R.05: A user can edit sent messages

Then we can design the following test cases:

- T.01: Verify that a user is able to log in

- T.02: Verify that a user can open the profile page and edit the profile picture

- T.03: Verify that a user can send and edit messages

This will give the following RTM showing the relationship between the requirements and test cases:

| Test case / Requirement | R.01 | R.02 | R.03 | R.04 | R.05 |
|---|---|---|---|---|---|
| T.01 | X | | | | |
| T.02 | | X | | X | |
| T.03 | | | X | | X |
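An RTM is essentially a mapping from test cases to the requirements they exercise, so gaps can be found mechanically. In this minimal Python sketch, the mapping is transcribed from the example above. The helper names `build_rtm`, `uncovered`, and `render` are hypothetical.

```python
REQUIREMENTS = ["R.01", "R.02", "R.03", "R.04", "R.05"]

# Which requirements each test case exercises (from the example above).
TEST_CASES = {
    "T.01": {"R.01"},
    "T.02": {"R.02", "R.04"},
    "T.03": {"R.03", "R.05"},
}


def build_rtm(requirements, test_cases):
    """Return {test_case: [covers requirement?, ...]} in requirement order."""
    return {tc: [req in covered for req in requirements]
            for tc, covered in test_cases.items()}


def uncovered(requirements, test_cases):
    """Requirements that no test case exercises."""
    covered = set().union(*test_cases.values())
    return [req for req in requirements if req not in covered]


def render(requirements, rtm):
    """Plain-text matrix with an X wherever a test covers a requirement."""
    header = "Test case | " + " | ".join(requirements)
    rows = [f"{tc} | " + " | ".join("X" if hit else " " for hit in marks)
            for tc, marks in rtm.items()]
    return "\n".join([header, *rows])


if __name__ == "__main__":
    print(render(REQUIREMENTS, build_rtm(REQUIREMENTS, TEST_CASES)))
    print("Uncovered:", uncovered(REQUIREMENTS, TEST_CASES))
```

If T.03 were dropped from the mapping, `uncovered` would report R.03 and R.05. That is the kind of gap an RTM is meant to expose.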

What are exit criteria, and why are they needed?

For some products, exhaustive testing would take enormous amounts of resources, or would be outright impossible or impractical. A proper QA process lets us conclude with reasonable confidence that the product is ready for users. To have one, we need to be able to tell when we are done with testing.

This is where an exit criteria document comes in. It lists the conditions that have to be met before the product is released. Exit criteria are defined in the test planning phase. They allow QA managers and test engineers to build an effective, efficient QA process that conforms to those preset conditions, ensuring that the system meets its requirements and is delivered on time.

Exit criteria can include test case coverage, remaining issues by priority and/or severity, feature coverage, deadlines, business requirements, and so on.

For example, a brief exit criteria list could be:

- All test cases have been executed

- 95 percent of tests are passing

- No high-priority and no high-severity issues are remaining

- Any changes to user stories are documented

As the example shows, exit criteria need to be strict yet reasonable. It may not be realistic to expect 100 percent of tests to pass all the time. We must, however, ensure that there are no critical failures that would cause the system to malfunction or prevent users from using it as expected.
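The example criteria can be checked mechanically at the end of a test cycle. This is a minimal Python sketch under stated assumptions. The `TestRunSummary` fields and the `exit_criteria_met` helper are hypothetical. The 95 percent threshold comes from the example list, and the pass rate is measured against all planned test cases.

```python
from dataclasses import dataclass


@dataclass
class TestRunSummary:
    """Hypothetical summary of a test cycle."""
    total: int                       # planned test cases
    executed: int                    # test cases actually run
    passed: int                      # test cases that passed
    open_high_priority: int          # unresolved high-priority issues
    open_high_severity: int          # unresolved high-severity issues
    undocumented_story_changes: int  # user story changes not yet documented


def exit_criteria_met(s: TestRunSummary, pass_threshold: float = 0.95):
    """Return (met, reasons) for the example exit criteria."""
    reasons = []
    if s.executed < s.total:
        reasons.append(f"{s.total - s.executed} test cases not executed")
    pass_rate = s.passed / s.total if s.total else 0.0
    if pass_rate < pass_threshold:
        reasons.append(f"pass rate {pass_rate:.0%} is below {pass_threshold:.0%}")
    if s.open_high_priority or s.open_high_severity:
        reasons.append("high-priority or high-severity issues remain open")
    if s.undocumented_story_changes:
        reasons.append("user story changes are not documented")
    return not reasons, reasons
```

Returning the list of unmet conditions, and not just a boolean, lets the team see exactly what blocks the release.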

Define the minimum number of test cases required for full statement, branch, and path coverage for the following code snippet:

```
Read X
Read Y
IF X > 0 THEN
    IF Y > 0 THEN
        Print "Positive"
    ENDIF
ENDIF
IF X < 0 THEN
    Print "Negative"
ENDIF
```

First, we draw the flowchart representation of the code:

To find the number of test cases needed for full (100 percent) statement coverage, we look for the smallest set of paths that together visit every node. The path 1-A-2-C-3-E-4-F-G-5-I-6-J would visit all the nodes (1-2-3-4-5-6) and so cover every statement with a single test case. However, that path is infeasible. It requires both X > 0 (at node 2) and X < 0 (at node 5), and those conditions are mutually exclusive. We therefore need two test cases to cover all statements:

- 1-A-2-C-3-E-4-F-G-5-H

- 1-A-2-B-G-5-I-6-J

Full (100 percent) branch coverage requires the minimum number of paths that cover all the edges, i.e., both the true and the false outcome of every decision. The two paths used for statement coverage already give almost full branch coverage. The only edge they miss is “D” (X > 0 but Y ≤ 0), so we need one additional path. Our three tests would therefore cover these paths:

- 1-A-2-C-3-E-4-F-G-5-H

- 1-A-2-B-G-5-I-6-J

- 1-A-2-C-3-D-G-5-H

Path coverage ensures that every possible path through the code is exercised. Because X > 0 and X < 0 cannot both be true, these are all the feasible paths, so full path coverage requires four test cases:

- 1-A-2-C-3-E-4-F-G-5-H

- 1-A-2-C-3-D-G-5-H

- 1-A-2-B-G-5-I-6-J

- 1-A-2-B-G-5-H (when X = 0)
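To check the counts above, here is a minimal Python sketch. It translates the pseudocode and records the path each input takes through the flowchart. The flowchart itself is not reproduced here, so the mapping of labels to branches is an assumption inferred from the paths listed above:

- B/C: X > 0 false/true
- D/E: Y > 0 false/true
- H/I: X < 0 false/true
- A, F, G, and J: unconditional edges

The names `run`, `covered`, `NODES`, and `EDGES` are illustrative.

```python
def run(x, y):
    """Execute the snippet's logic and return the flowchart path it takes.

    Edge labels are inferred from the paths listed in the answer.
    """
    path = ["1", "A", "2"]           # Read X, Read Y; then decision X > 0
    if x > 0:
        path += ["C", "3"]           # decision Y > 0
        if y > 0:
            path += ["E", "4", "F"]  # node 4: Print "Positive"
        else:
            path += ["D"]
    else:
        path += ["B"]
    path += ["G", "5"]               # decision X < 0
    if x < 0:
        path += ["I", "6", "J"]      # node 6: Print "Negative"
    else:
        path += ["H"]
    return "-".join(path)


NODES = set("123456")      # statements / decisions
EDGES = set("ABCDEFGHIJ")  # flowchart edges


def covered(paths, universe):
    """True if the given paths together visit every element of `universe`."""
    seen = {step for p in paths for step in p.split("-")}
    return universe <= seen
```

Two inputs such as (1, 1) and (-1, 0) visit every node but miss edge D. Adding (1, -1) covers every edge. Sampling x and y from {-1, 0, 1} yields exactly four distinct paths, matching the path-coverage count.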

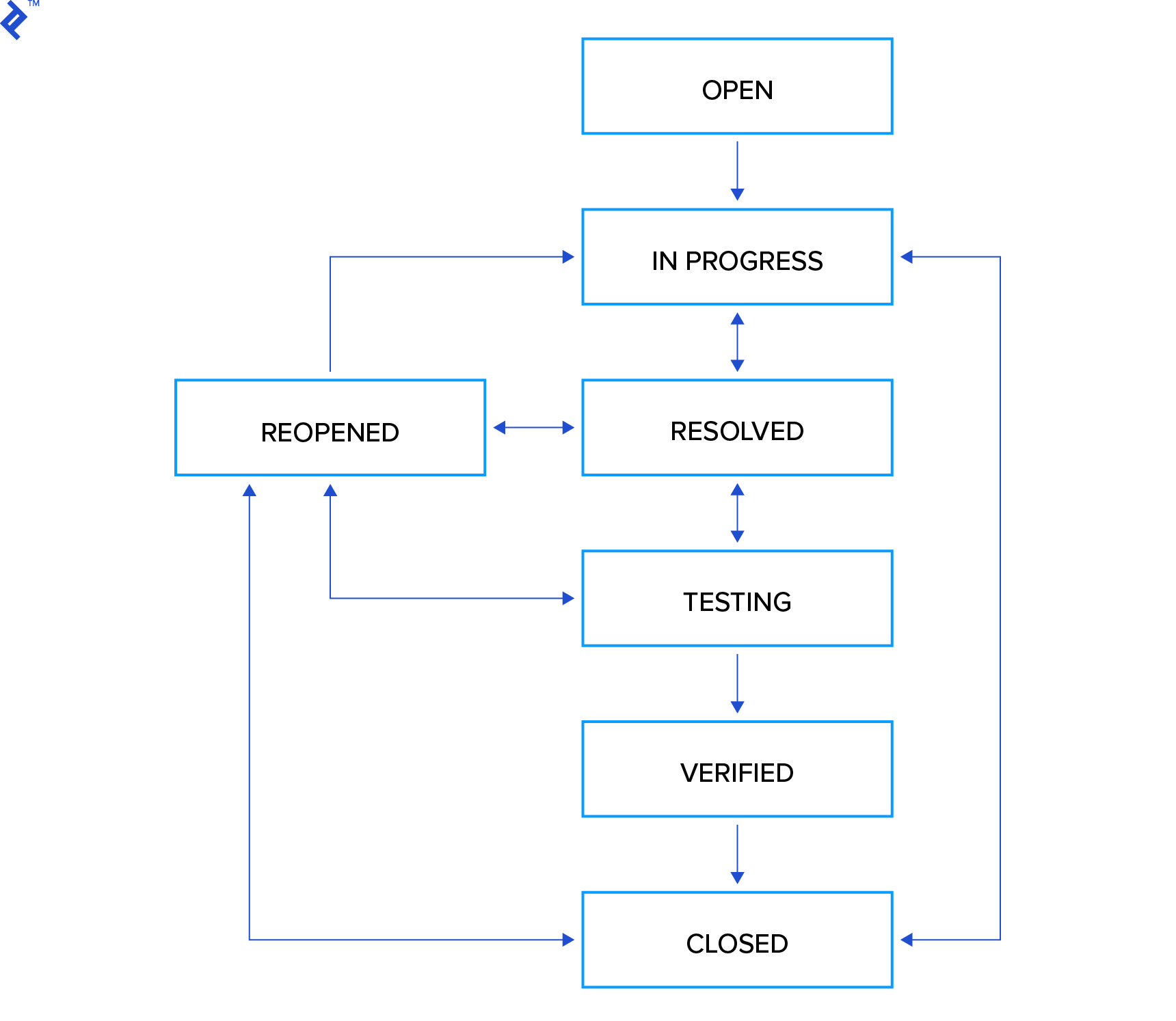

When a QA engineer creates a bug ticket, this ticket follows the sequence of states shown in the diagram from being created until it is verified and closed. If the resolution is not correct, the ticket may be moved back to an earlier state or reopened to prevent closure.

There are certain restrictions:

- If the ticket in state Reopened has been Closed, it can only be restored to the same state Reopened.

- If the ticket in state In progress has been Closed, it can only be restored to the same state In progress.

Starting from Reopened, what is the number of 0-switch transitions and what is the number of allowed 1-switch transitions?

State transition testing is a black-box testing technique in which test cases are based on the state changes that inputs or events cause in the application under test. The number of 0-switch transitions from a state equals the number of transitions of length 1 starting from that state. From the diagram, we can see that the possible transitions from Reopened are:

Reopened -> In Progress

Reopened -> Resolved

Reopened -> Testing

Reopened -> Closed

That brings us to a total of four 0-switch transitions.

The number of 1-switch transitions from a state equals the number of transition sequences of length 2 starting from that state. Using the 0-switch transitions and the diagram, we can list the possible 1-switch transitions:

Reopened -> In Progress -> Closed

Reopened -> In Progress -> Resolved

Reopened -> Resolved -> In Progress

Reopened -> Resolved -> Reopened

Reopened -> Resolved -> Testing

Reopened -> Testing -> Resolved

Reopened -> Testing -> Reopened

Reopened -> Testing -> Verified

Reopened -> Closed -> Reopened

Reopened -> Closed -> In Progress

This gives 10 possible 1-switch transitions starting from the Reopened state. However, the specification states that a ticket closed from the Reopened state can only be restored to Reopened. The last sequence (Reopened -> Closed -> In Progress) is therefore invalid, which leaves a final count of nine 1-switch transitions.
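A small Python sketch can reproduce these counts mechanically. The adjacency lists below are an assumption. They are transcribed only from the transitions named in this answer, so any other transitions in the diagram (for example, out of Verified) are omitted. The two restore restrictions are encoded as a check on the state that preceded Closed, and the function names are illustrative.

```python
# Outgoing transitions transcribed from the lists in this answer; the full
# diagram may contain more (e.g. out of Verified), which are omitted here.
TRANSITIONS = {
    "Reopened": ["In Progress", "Resolved", "Testing", "Closed"],
    "In Progress": ["Closed", "Resolved"],
    "Resolved": ["In Progress", "Reopened", "Testing"],
    "Testing": ["Resolved", "Reopened", "Verified"],
    "Closed": ["Reopened", "In Progress"],
}

# Specification restriction: a ticket closed from one of these states can
# only be restored to that same state.
RESTORE_ONLY_TO_SELF = {"Reopened", "In Progress"}


def zero_switch(start):
    """All transitions of length 1 from `start`."""
    return [(start, nxt) for nxt in TRANSITIONS.get(start, [])]


def one_switch(start):
    """All valid sequences of two consecutive transitions from `start`."""
    sequences = []
    for _, middle in zero_switch(start):
        for nxt in TRANSITIONS.get(middle, []):
            # Closed may only be left toward the state it was closed from.
            if middle == "Closed" and start in RESTORE_ONLY_TO_SELF and nxt != start:
                continue
            sequences.append((start, middle, nxt))
    return sequences


if __name__ == "__main__":
    print(len(zero_switch("Reopened")), len(one_switch("Reopened")))
```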

There is more to interviewing than tricky technical questions, so these are intended merely as a guide. Not every “A” candidate worth hiring will be able to answer them all, nor does answering them all guarantee an “A” candidate. At the end of the day, hiring remains an art, a science — and a lot of work.


Submitted questions and answers are subject to review and editing, and may or may not be selected for posting, at the sole discretion of Toptal, LLC.

Looking for QA Engineers?

Check out Toptal’s QA engineers.

Gareth Leonard

Gareth is an expert in quality engineering with a proven history in architecting test automation frameworks. He has held positions with Cisco, Wells Fargo, and Republic Services, where his expertise has been key in developing and testing critical software systems. Gareth is a natural leader with an aptitude for assembling and developing highly functional quality engineering teams.

Ali Mesbah

Ali is an expert in software quality and dependability. With more than a decade of R&D experience in the field of software testing and analysis, he has a proven track record in quality assurance, code quality assessment, test design, test effectiveness and adequacy, test automation, root cause analysis, and program repair.

Peter Marton

Peter has nearly a decade of experience as a software engineer in test. He's designed and developed test automation solutions while maintaining daily contact with clients. Peter has proven expertise in designing test frameworks, scripting, leading teams, and mentoring others as well.
