How to Avoid the Curse of Premature Optimization

Premature optimization hurts experts and novices alike. How can you know when and how to optimize without shooting your project in the foot?

This week, Toptal Freelance Software Engineer Kevin Bloch walks project managers through some time-tested optimization strategies and when to use them.

Kevin has 20+ years of experience across full-stack, desktop, and indie game development. Lately, he specializes in PostgreSQL, JavaScript, Perl, and Haxe.

It’s almost guarantee-worthy, really. From novices to experts, from architecture down to ASM, and optimizing anything from machine performance to developer performance, chances are quite good that you and your team are short-circuiting your own goals.

What? Me? My team?

That’s a pretty hefty accusation to level. Let me explain.

Optimization is not the holy grail, but it can be just as difficult to obtain. I want to share with you a few simple tips (and a mountain of pitfalls) to help transform your team’s experience from one of self-sabotage to one of harmony, fulfillment, balance, and, eventually, optimization.

What Is Premature Optimization?

Premature optimization is attempting to optimize performance:

- When first coding an algorithm

- Before benchmarks confirm you need to

- Before profiling pinpoints where it makes sense to bother optimizing

- At a lower level than your project currently dictates

Now, I’m an optimist, Optimus.

At least, I’m going to pretend to be an optimist while I write this article. For your part, you can pretend your name is Optimus, so this will speak more directly to you.

As someone in tech, you probably sometimes wonder just how it could possibly be $year and yet, despite all our advancement, it’s somehow an acceptable standard for $task to be so annoyingly time-consuming. You want to be lean. Efficient. Awesome. Someone like the Rockstar Programmers whom those job postings are clamoring for, but with leader chops. So when your team writes code, you encourage them to do it right the first time (even if “right” is a highly relative term, here). They know that’s the way of the Clever Coder, and also the way of Those Who Don’t Need to Waste Time Refactoring Later.

I feel that. The force of perfectionism is sometimes strong within me, too. You want your team to spend a little time now to save a lot of time later, because everyone’s slogged through their share of “Shitty Code Other People Wrote (What the Hell Were They Thinking?).” That’s SCOPWWHWTT for short, because I know you like unpronounceable acronyms.

I also know you don’t want your team’s code to be that for themselves or anyone else down the line.

So let’s see what can be done to guide your team in the right direction.

What to Optimize: Welcome to This Being an Art

First of all, when we think of program optimization, often we immediately assume we’re talking about performance. Even that is already more vague than it may seem (speed? memory usage? etc.) so let’s stop right there.

Let’s make it even more ambiguous! Just at first.

My cobwebby brain likes to create order where possible, so it will take every ounce of optimism for me to consider what I’m about to say to be a good thing.

There’s a simple rule of (performance) optimization out there that goes Don’t do it. This sounds quite easy to follow rigidly but not everybody agrees with it. I also don’t agree with it entirely. Some people will simply write better code out of the gate than others. Hopefully, for any given person, the quality of the code they would write in a brand new project will generally improve over time. But I know that, for many programmers, this will not be the case, because the more they know, the more ways they will be tempted to prematurely optimize.

So this Don’t do it cannot be an exact science but is only meant to counteract the typical techie’s inner urge to solve the puzzle. This, after all, is what attracts many programmers to the craft in the first place. I get that. But ask them to save it, to resist the temptation. If one needs a puzzle-solving outlet right now, one can always dabble in the Sunday paper’s Sudoku, or pick up a Mensa book, or go code golfing with some artificial problem. But leave it out of the repo until the proper time. Almost always this is a wiser path than pre-optimization.

Remember, this practice is notorious enough that people ask whether premature optimization is the root of all evil. (I wouldn’t go quite that far, but I agree with the sentiment.)

I’m not saying we should pick the most brain-dead way we can think of at every level of design. Of course not. But instead of picking the most clever-looking, we can consider other values:

- The easiest to explain to your new hire

- The most likely to pass a code review by your most seasoned developer

- The most maintainable

- The quickest to write

- The easiest to test

- The most portable

- etc.

But here is where the problem shows itself to be difficult. It’s not just about avoiding optimizing for speed, code size, memory footprint, flexibility, or future-proofed-ness. It’s about balance and about whether what you’re doing is actually in line with your values and goals. It’s entirely contextual, and sometimes even impossible to measure objectively.

Why is this a good thing? Because life is like this. It’s messy. Our programming-oriented brains sometimes want to create order in the chaos so badly that we end up ironically multiplying the chaos. It’s like the paradox of trying to force someone to love you. If you think you’ve succeeded at that, it’s no longer love; meanwhile, you’re charged with hostage-taking, you probably need more love than ever, and this metaphor has got to be one of the most awkward I could have picked.

Anyway, if you think you’ve found the perfect system for something, well…enjoy the illusion while it lasts, I guess. It’s okay, failures are wonderful opportunities to learn.

Keep the UX in Mind

Let’s explore how user experience fits in among these potential priorities. After all, even wanting something to perform well is, at some level, about UX.

If you’re working on a UI, no matter what framework or language the code uses, there will be a certain amount of boilerplate and repetition. It can definitely be valuable in terms of programmer time and code clarity to try to reduce that. To help with the art of balancing priorities, I want to share a couple of stories.

At one job, the company I worked for used a closed-source enterprise system that was based on an opinionated tech stack. In fact, it was so opinionated that the vendor who sold it to us refused to make UI customizations that didn’t fit the stack’s opinions, because it was so painful for their developers. I’ve never used their stack, so I don’t condemn them for this, but the fact was that this “good for the programmer, bad for the user” trade-off was so cumbersome for my coworkers in certain contexts that I ended up writing a third-party add-on re-implementing this part of the system’s UI. (It was a huge productivity booster. My coworkers loved it! Over a decade later, it’s still saving everyone there time and frustration.)

I’m not saying that opinionation is a problem in itself; just that too much of it became a problem in our case. As a counterexample, one of the big draws of Ruby on Rails is precisely that it is opinionated, in a web development ecosystem where one easily gets vertigo from having too many options. (Give me something with opinions until I can figure out my own!)

In contrast, you may be tempted to crown UX the King of Everything in your project. A worthy goal, but let me tell my second story.

A few years after the success of the above project, one of my coworkers came to me to ask me to optimize the UX by automating a certain messy real-life scenario that sometimes arose, so that it could be solved with a single click. I started analyzing whether it was even possible to design an algorithm that wouldn’t have any false positives or negatives because of the many and strange edge cases of the scenario. The more I talked with my coworker about it, the more I realized that the requirements were simply not going to pay off. The scenario only came up once in a while—monthly, let’s say—and currently took one person a few minutes to solve. Even if we could successfully automate it, without any bugs, it would take centuries for the required development and maintenance time to be paid off in terms of time saved by my coworkers. The people-pleaser in me had a difficult moment saying “no,” but I had to cut the conversation short.
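The break-even reasoning in that story can be made concrete with a back-of-the-envelope calculation. This is only a sketch: the function name and every number in it (200 development hours, five minutes saved per occurrence) are illustrative assumptions, not figures from the actual project.

```python
def break_even_years(dev_hours, upkeep_hours_per_year,
                     minutes_saved_per_use, uses_per_year):
    """Years until an automation pays for itself, or None if it never does."""
    saved_hours_per_year = minutes_saved_per_use * uses_per_year / 60
    net_hours_per_year = saved_hours_per_year - upkeep_hours_per_year
    if net_hours_per_year <= 0:
        return None  # upkeep alone costs more than the automation saves
    return dev_hours / net_hours_per_year


# Hypothetical numbers: a monthly task that takes five minutes by hand,
# versus 200 hours of development and (optimistically) zero maintenance.
print(break_even_years(dev_hours=200, upkeep_hours_per_year=0,
                       minutes_saved_per_use=5, uses_per_year=12))  # 200.0
```

With even two hours of maintenance per year in this made-up scenario, the function returns `None`: the feature would never pay for itself.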

So let the computer do what it can to help the user, but only to a sane extent. How do you know what extent that is, though?

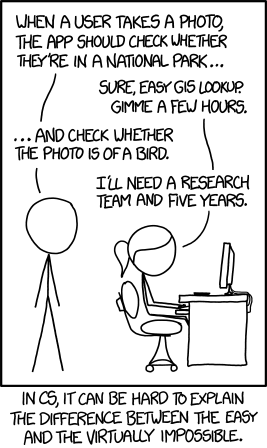

An approach I like to take is to profile the UX like your developers profile their code. Find out from your users where they spend the most time clicking or typing the same thing over and over again, and see if you can optimize those interactions. Can your code make some educated guesses as to what they’re most likely going to input, and make that a no-input default? Aside from certain prohibited contexts (no-click EULA confirmation?) this can really make a difference to your users’ productivity and happiness. Do some hallway usability testing if you can. Sometimes, you may have trouble explaining what’s easy for computers to help with and what isn’t… but overall, this value is likely to be of pretty high importance to your users.
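As a rough illustration of the "educated guess" idea, here is a minimal sketch that proposes a default only when one past value clearly dominates. The function name, the 60% threshold, and the sample data are all assumptions made up for this example; real usage data would come from your own logs or from watching your users.

```python
from collections import Counter


def suggest_default(past_inputs, min_share=0.6):
    """Return the most common past value if it dominates, else None."""
    if not past_inputs:
        return None
    value, count = Counter(past_inputs).most_common(1)[0]
    # Only pre-fill when the guess is right most of the time; a wrong
    # default that users must correct can be worse than an empty field.
    return value if count / len(past_inputs) >= min_share else None


# Hypothetical history of what users typed into a "currency" field.
print(suggest_default(["EUR", "EUR", "USD", "EUR"]))  # EUR
print(suggest_default(["EUR", "USD", "CAD"]))         # None
```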

Avoiding Premature Optimization: When and How to Optimize

Our exploration of other contexts notwithstanding, let’s now explicitly assume that we’re optimizing some aspect of raw machine performance for the rest of this article. My suggested approach applies to other targets too, like flexibility, but each target will have its own gotchas; the main point is that prematurely optimizing for anything will probably fail.

So, in terms of performance, what optimization methods are there to actually follow? Let’s dig in.

This Isn’t a Grassroots Initiative, It’s Triple-Eh

The TL;DR is: Work down from the top. Higher-level optimizations can be made earlier in the project, and lower-level ones should be left for later. That’s all you need to get most of the meaning of the phrase “premature optimization”; doing things out of this order has a high probability of wasting your team’s time and being counter-effective. After all, you don’t write the entire project in machine code from the get-go, do you? So our AAA modus operandi is to optimize in this order:

- Architecture

- Algorithms

- Assembly

Common wisdom has it that algorithms and data structures are often the most effective places to optimize, at least where performance is concerned. Keep in mind, though, that architecture sometimes determines which algorithms and data structures can be used at all.

I once discovered a piece of software doing a financial report by querying an SQL database multiple times for every single financial transaction, then doing a very basic calculation on the client side. It took the small business using the software only a few months of use before even their relatively small amount of financial data meant that, with brand new desktops and a fairly beefy server, the report generation time was already up to several minutes, and this was one they needed to use fairly frequently. I ended up writing a straightforward SQL statement that contained the summing logic—thwarting their architecture by moving the work to the server to avoid all the duplication and network round-trips—and even several years’ worth of data later, my version could generate the same report in mere milliseconds on the same old hardware.
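To make the difference tangible, here is a small sketch of both approaches using Python’s built-in `sqlite3` module and an in-memory database. The table name, columns, and sample rows are hypothetical stand-ins; the real system used a different schema and a networked SQL server, where every extra query also costs a round-trip.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE transactions ("
    "id INTEGER PRIMARY KEY, account TEXT, amount_cents INTEGER)"
)
conn.executemany(
    "INSERT INTO transactions (account, amount_cents) VALUES (?, ?)",
    [("rent", -120000), ("sales", 250000), ("sales", 99900), ("rent", -120000)],
)


def totals_row_by_row(conn):
    """Slow pattern: one extra query per transaction, summing on the client."""
    totals = {}
    ids = [row[0] for row in conn.execute("SELECT id FROM transactions")]
    for tx_id in ids:
        account, amount = conn.execute(
            "SELECT account, amount_cents FROM transactions WHERE id = ?",
            (tx_id,),
        ).fetchone()
        totals[account] = totals.get(account, 0) + amount
    return totals


def totals_in_sql(conn):
    """One query: the database does the summing and returns one row per account."""
    return dict(conn.execute(
        "SELECT account, SUM(amount_cents) FROM transactions GROUP BY account"
    ))


print(totals_in_sql(conn) == totals_row_by_row(conn))  # True
```

Both functions produce the same totals, but the first issues one query per transaction plus one, while the second always issues exactly one.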

Sometimes you don’t have influence over the architecture of a project because it’s too late in the project for an architecture change to be feasible. Sometimes your developers can skirt around it like I did in the example above. But if you are at the start of a project and have some say in its architecture, now is the time to optimize that.

Architecture

In a project, the architecture is the most expensive part to change after the fact, so this is a place where it can make sense to optimize at the beginning. If your app is to deliver data via ostriches, for example, you’ll want to structure it towards low-frequency, high-payload packets to avoid making a bad bottleneck even worse. In this case, you’d better have a full implementation of Tetris to entertain your users, because a loading spinner just isn’t going to cut it. (Kidding aside: Years ago I was installing my first Linux distribution, Corel Linux 2.0, and was delighted that the long-running installation process included just that. Having seen the Windows 95 installer’s infomercial screens so many times that I had memorized them, this was a breath of fresh air at the time.)

As an example of architectural change being expensive, the reason for the aforementioned SQL report being so highly unscalable in the first place is clear from its history. The app had evolved over time, from its roots in MS-DOS and a home-grown, custom database that wasn’t even originally multi-user. When the vendor finally made the switch to SQL, the schema and reporting code seem to have been ported one for one. This left them years’ worth of impressive 1,000%+ performance improvements to sprinkle throughout their updates, whenever they got around to completing the architecture switch by actually making use of SQL’s advantages for a given report. Good for business with locked-in clients like my then-employer, and clearly attempting to prioritize coding efficiency during the initial transition. But meeting clients’ needs, in some cases, about as effectively as a hammer turns a screw.

Architecture is partly about anticipating to what degree your project will need to be able to scale, and in what ways. Because architecture is so high-level, it’s difficult to get concrete with our “dos and don’ts” without narrowing our focus to specific technologies and domains.

I Wouldn’t Call It That, but Everyone Else Does

Thankfully, the Internet is rife with collected wisdom about most every kind of architecture ever dreamt up. When you know it’s time to optimize your architecture, researching pitfalls pretty much boils down to figuring out the buzzword that describes your brilliant vision. Chances are someone has thought along the same lines as you, tried it, failed, iterated, and published about it in a blog or a book.

Buzzword identification can be tricky to accomplish just by searching, because for what you call a FLDSMDFR, someone else already coined the term SCOPWWHWTT, and they describe the same problem you’re solving, but using a completely different vocabulary than you would. Developer communities to the rescue! Hit up Stack Exchange or Hashnode with as thorough a description as you can, plus all the buzzwords that your architecture isn’t, so they know you did sufficient preliminary research. Someone will be glad to enlighten you.

Meanwhile, some general advice might be good food for thought.

Algorithms and Assembly

Given a conducive architecture, here is where the coders on your team will get the most T-bling for their time. The basic avoidance of premature optimization applies here too, but your programmers would do well to consider some of the specifics at this level. There’s so much to think about when it comes to implementation details that I wrote a separate article about code optimization geared toward front-line and senior coders.

But once you and your team have implemented something performance-wise unoptimized, do you really leave it at Don’t do it? You never optimize?

You’re right. The next rule is, for experts only, Don’t do it yet.

Time to Benchmark!

Your code works. Maybe it’s so dog-slow that you already know you will need to optimize, because it’s code that will run often. Maybe you aren’t sure, or you have an O(n) algorithm and figure it’s probably fine. No matter what the case, if this algorithm might ever be worth optimizing, my recommendation at this point is the same: Run a simple benchmark.

Why? Isn’t it clear that my O(n³) algorithm can’t possibly be better than any alternative? Well, for two reasons:

- You can add the benchmark to your test suite, as an objective measure of your performance goals, regardless of whether they are currently being met.

- Even experts can inadvertently make things slower. Even when it seems obvious. Really obvious.
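A “simple benchmark” really can be simple. The sketch below uses Python’s standard `timeit` module and wraps the measurement in a test function so it can live in a test suite. The function under test, the input size, and the one-second budget are all placeholders invented for this example; pick numbers that reflect your own performance goals.

```python
import timeit


def find_duplicates(items):
    """Deliberately naive O(n^2) duplicate finder (hypothetical code under test)."""
    dupes = []
    for i, a in enumerate(items):
        for b in items[i + 1:]:
            if a == b and a not in dupes:
                dupes.append(a)
    return dupes


def best_time(func, *args, repeat=5, number=3):
    """Best average time per call, in seconds, across several timing runs."""
    timer = timeit.Timer(lambda: func(*args))
    return min(timer.repeat(repeat=repeat, number=number)) / number


def test_find_duplicates_meets_budget():
    data = list(range(300)) + [7, 42]
    budget_seconds = 1.0  # assumed budget; set it from your real requirements
    assert best_time(find_duplicates, data) < budget_seconds
```

Taking the minimum across repeats is a common way to reduce noise from whatever else the machine is doing. Timing-based tests can still be flaky on shared CI hardware, so a generous budget is usually wiser than a tight one.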

Don’t believe me on that second point?

How to Get Better Results from $1,400 Hardware Than from $7,000 Hardware

Jeff Atwood of Stack Overflow fame once pointed out that it can sometimes (usually, in his opinion) be more cost-effective to just buy better hardware than to spend valuable programmer time on optimization. OK, so suppose you’ve reached a reasonably objective conclusion that your project would fit this scenario. Let’s further assume that what you’re trying to optimize is compilation time, because it’s a hefty Swift project you’re working on, and this has become a rather large developer bottleneck. Hardware shopping time!

What should you buy? Well, obviously, yen for yen, more expensive hardware tends to perform better than cheaper hardware. So obviously, a $7,000 Mac Pro should compile your software faster than some mid-range Mac Mini, right?

Wrong!

It turns out that sometimes more cores means more efficient compilation… and in this particular case, LinkedIn found out the hard way that the opposite is true for their stack.

But I have seen management go one mistake further: They didn’t even benchmark before and after, and then found that a hardware upgrade didn’t make their software “feel” faster. Without measurements, there was no way to know for sure; worse, they still had no idea where the bottleneck was, so they remained unhappy regarding performance, having used up the time and money they were willing to allocate to the problem.

OK, I’ve Benchmarked Already. Can I Actually Optimize Yet??

Yes, assuming you’ve decided you need to. But maybe that decision will wait until more/all of the other algorithms are implemented too, so you can see how the moving parts fit together and which are most important via profiling. This may be at the app level for a small app, or it may only apply to one subsystem. Either way, remember, a particular algorithm may seem important to the overall app, but even experts—especially experts—are prone to misdiagnosing this.
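For a sense of what profiling looks like in practice, here is a minimal sketch using Python’s standard `cProfile` and `pstats` modules. The three small functions are made-up stand-ins for your app’s moving parts; the point is only that the profiler, rather than intuition, reports where the time goes.

```python
import cProfile
import io
import pstats


def parse(n):
    """Hypothetical stage 1: build some data."""
    return [str(i) for i in range(n)]


def checksum(strings):
    """Hypothetical stage 2: process the data."""
    return sum(len(s) for s in strings)


def run_report():
    return checksum(parse(50_000))


def profile_call(func, top=5):
    """Run func under cProfile; return its result and a text summary."""
    profiler = cProfile.Profile()
    result = profiler.runcall(func)
    out = io.StringIO()
    stats = pstats.Stats(profiler, stream=out)
    stats.sort_stats("cumulative").print_stats(top)
    return result, out.getvalue()


result, summary = profile_call(run_report)
print(summary)  # lists the functions with the highest cumulative time
```

Sorting by cumulative time shows which top-level calls dominate; from there, your developers can drill down into the one subsystem that actually matters instead of guessing.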

Think before You Disrupt

“I don’t know about you people, but…”

As some final food for thought, consider how you can apply the idea of false optimization to a much broader view: your project or company itself, or even a sector of the economy.

I know, it’s tempting to think that technology will save the day, and that we can be the heroes who make it happen.

Plus, if we don’t do it, someone else will.

But remember that power corrupts, despite the best of intentions. I won’t link to any particular articles here, but if you haven’t wandered across any, it’s worth seeking some out about the wider impact of disrupting the economy, and who this sometimes ultimately serves. You may be surprised at some of the side-effects of trying to save the world through optimization.

Postscript

Did you notice something, Optimus? The only time I called you Optimus was in the beginning and now at the end. You weren’t called Optimus throughout the article. I’ll be honest, I forgot. I wrote the whole article without calling you Optimus. At the end when I realized I should go back and sprinkle your name throughout the text, a little voice inside me said, don’t do it.

Understanding the basics

What is premature optimization?

Trying to optimize when first coding. Performance optimization is best done from the highest level possible at any given moment. For greenfield projects, at the architecture stage. For legacy projects, after proper profiling to identify bottlenecks, rather than playing a costly guessing game.

Why is premature optimization bad?

It’s a hidden pitfall to assume that (supposedly) performance-optimized code is actually your first priority, above correctness, clarity, testability, and so on. Another pitfall is assuming that the code in question has enough impact on overall performance to be worth optimizing. Premature optimization hits both.

Bergerac, France

Member since January 31, 2017
