Software Entropy Explained: Causes, Effects, and Remedies

In this article, Toptal Freelance Developer Adam Wasserman explains what software entropy is, what causes it and how it manifests itself, and what can be done to prevent it or mitigate its effects.

Adam is particularly interested in multi-threaded programming and distributed computing and considers himself an excellent communicator.

This article is aimed at the software developer or project manager who is curious about the definition of entropy in software engineering, its practical effects on their work, and the underlying factors contributing to its growth.

The primary goal is to create an awareness of software entropy because it is a factor in all forms of software development. Furthermore, we explore a means by which software entropy can be assigned a concrete value. Only by quantifying software entropy and observing its growth over successive releases can we truly understand the risk it poses to our current objectives and future plans.

What Is Software Entropy?

Software entropy gains its name from the chief characteristic of entropy in the real world: It is a measure of chaos that either stays the same or increases over time. Stated another way, it is a measure of the inherent instability built into a software system with respect to altering it. There are several contexts for entropy in computer science, but the type of software entropy we will explore in this article has very practical implications for development teams and is rarely accorded the importance it deserves.

Entropy in software is given no consideration when pulling someone from a development team, prematurely starting a development cycle, or implementing “quick fixes”—moments when, in fact, it is most likely to grow.

Software development is an art and a trade whose core building blocks are ill-defined. Whereas builders work with cement and nails, the software developer works with logical assertions and a set of assumptions. These cannot be held in the hand and examined, nor can they be ordered by the eighth of an inch. Although the casual observer might be tempted to compare software development with construction, beyond a few superficial similarities it is counterproductive to do so.

Despite these difficulties, we can nonetheless lay out the guidelines that will enable us to understand the sources of software entropy, measure the extent of the risk it poses to our objectives, and (if necessary) determine what steps can be taken to limit its growth.

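The proposed definition of software entropy is as follows:

$$E = \frac{I' C}{S}$$

where

I´ is derived from the number of unexpected problems introduced during the last development iteration,

C is the perceived probability that implementing changes to the system now results in a new I´ > 0, and

S is the scope of the next development iteration.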

The concept of a development iteration is integral to our understanding of software entropy. These are cycles of activity that lead from one behavior of the system to another. The goals we set for ourselves during the software iteration are called its scope. In software development, such cycles involve modifying or expanding the software’s underlying code.

All modifications to code occur in a development iteration even if those carrying them out don’t think of them that way. That is, smaller organizations that pride themselves on building their systems using a “quick and dirty” methodology—with little or no gathering of requirements or analysis—still use development iterations to achieve their goals. They simply don’t plan them. Similarly, even if a modified system’s behavior diverges in some way from our intentions, it was still achieved by means of a development iteration.

In fact, the risk that this will happen is exactly what our awareness of software entropy is intended to explain and our desire to measure it intended to limit. In practical terms, then, we can define software entropy as follows.

Any given system has a finite set of known, open issues I0. At the end of the next development iteration, there will be a finite set of known, open issues I1. The system’s inherent entropy specifies how much our expectation of I1 is likely to differ from its actual value and how much the difference is likely to grow in subsequent iterations.

Effects of Software Entropy

Our practical experience of the effects of software entropy depends upon how we interact with the system in question.

Users have a static view of software; they are concerned with how it behaves now and do not care about a system’s internals. By performing a predefined action, the users assume that the software will respond with a corresponding predefined behavior. However, the users are the least prepared to deduce the level of entropy in the software they are using.

Software entropy is tied to the notion of change and has no meaning in a static system. If there is no intent to alter the system, we cannot speak of its entropy. Likewise, a system which does not yet exist (i.e., the next iteration is actually the first of its existence) has no entropy.

Software may be perceived as “buggy” from the standpoint of a user, but if during subsequent iterations software developers and managers are meeting their goals as planned, we must conclude that the entropy in the system is actually quite low! The experience of users, therefore, tells us very little, if anything, about software entropy.

Software developers have a fluid view of software. They are concerned with how a system currently functions only insofar as it must be changed, and they are concerned with the details of how it works to devise an appropriate strategy.

Managers have perhaps the most complicated view of software, both static and fluid. They must balance the exigencies of the short term with the demands of a business plan that extends further into the future.

People in both these roles have expectations. Software entropy manifests itself whenever those expectations are spoiled. That is, software developers and managers do their best to assess risks and plan for them, but—excluding external disruptions—if their expectations are nonetheless disappointed, only then can we speak of software entropy. It is the invisible hand that breaks component interactions that weren’t in scope, causes production servers to inexplicably crash, and withholds a timely and cost-effective hotfix.

Many systems with high levels of entropy rely on a specific individual, particularly if there are junior members on the development team. This person possesses knowledge such that the others cannot perform their tasks without their “valuable” input. That knowledge cannot be captured in standard UML diagrams or technical specifications because it consists of an amalgam of exceptions, hunches, and tips. It does not rest on a logically consistent framework and is therefore difficult, if not impossible, to transfer to anyone else.

Let us suppose that with great determination and effort, such an organization is able to turn itself around and stabilize its IT department. The situation seems to have improved because, when software development has ground to a halt, any progress whatsoever is encouraging. However, in reality, the expectations being met are low and the achievements uninteresting compared to the lofty goals that were within reach while entropy was still low.

When software entropy overwhelms a project, the project freezes.

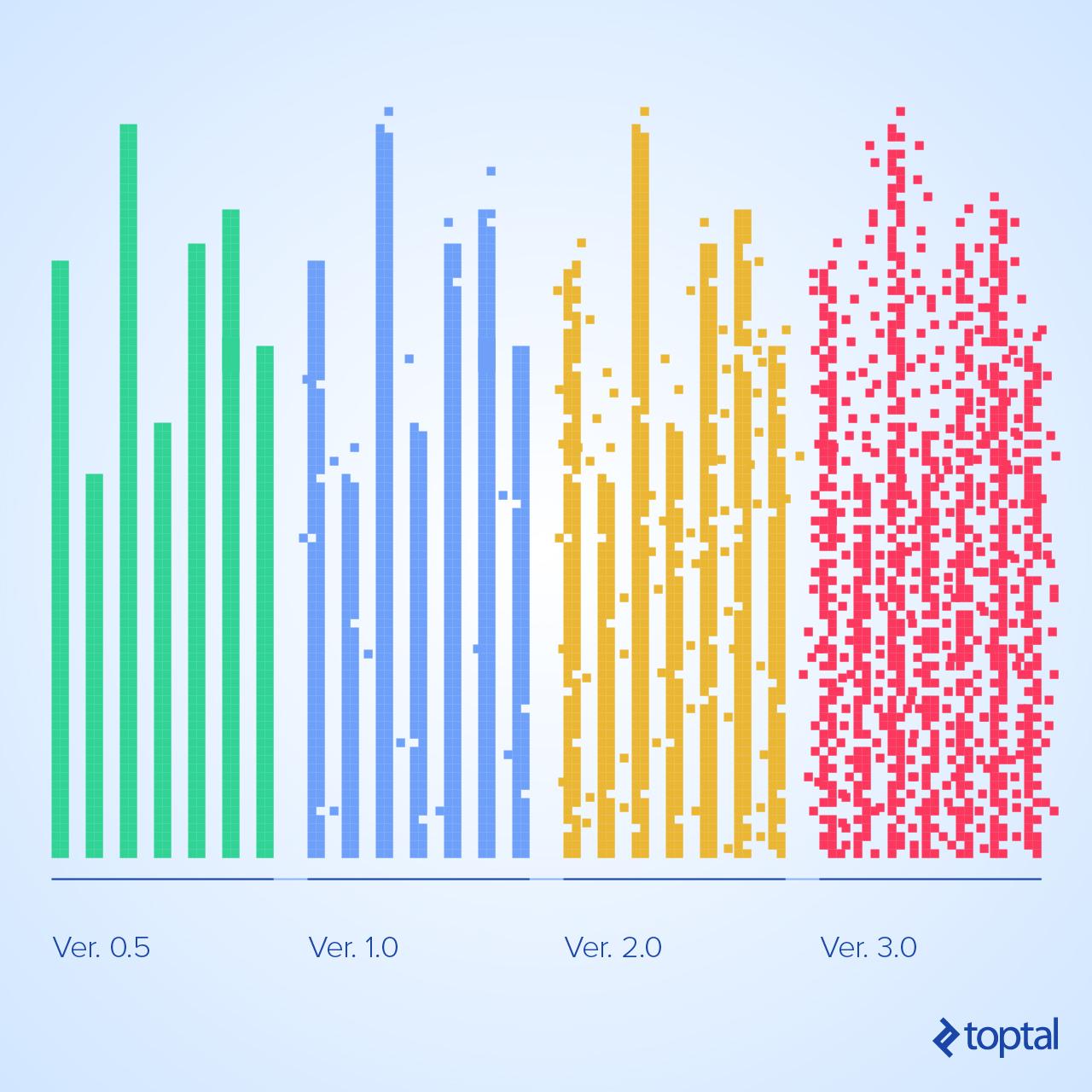

There can be no more development iterations. Fortunately, this situation does not arise instantaneously. The slow but inexorable march toward the edge of a cliff is something every system undergoes. No matter how well organized and efficient the first version may be, over successive development iterations its entropy (and therefore the risk that things will unexpectedly go wrong) will grow unless specific steps are taken to prevent it.

Software entropy cannot be reduced. Just like entropy in the real world, it only grows or remains the same. At first, its effects are negligible. When they begin to manifest, the symptoms are mild and can be masked or ignored. But if an organization only attempts to combat software entropy once it has become the dominant risk in a project, it will sadly find that its efforts are in vain. Awareness of software entropy is therefore most useful when its extent is low and the adverse effects minimal.

It does not follow that every organization should devote resources to limiting the growth of entropy in its products. Much of the software being developed today—consumer-oriented software in particular—has a relatively short expected lifetime, perhaps a few years. In this case, its stakeholders need not consider the effects of software entropy, as it will rarely become a serious obstacle before the entire system is discarded. There are, however, less obvious reasons to consider the effects of software entropy.

Consider the software that runs the internet’s routing infrastructure or transfers money from one bank account to another. At any given time, there are one or more versions of this software in production, and their collective behaviors can be documented with reasonably high accuracy.

The risk tolerance of a system is a measure of how much and what type of unexpected behavior we can comfortably permit from one development iteration to the next. For the types of systems just mentioned, the risk tolerance is far lower than that of, say, the software that routes phone calls. That software, in turn, has a lower risk tolerance than the software that drives the shopping cart of many commercial websites.

Risk tolerance and software entropy are related in that software entropy must be minimal to be certain that we will stay within a system’s risk tolerance during the next development iteration.

Sources of Software Entropy

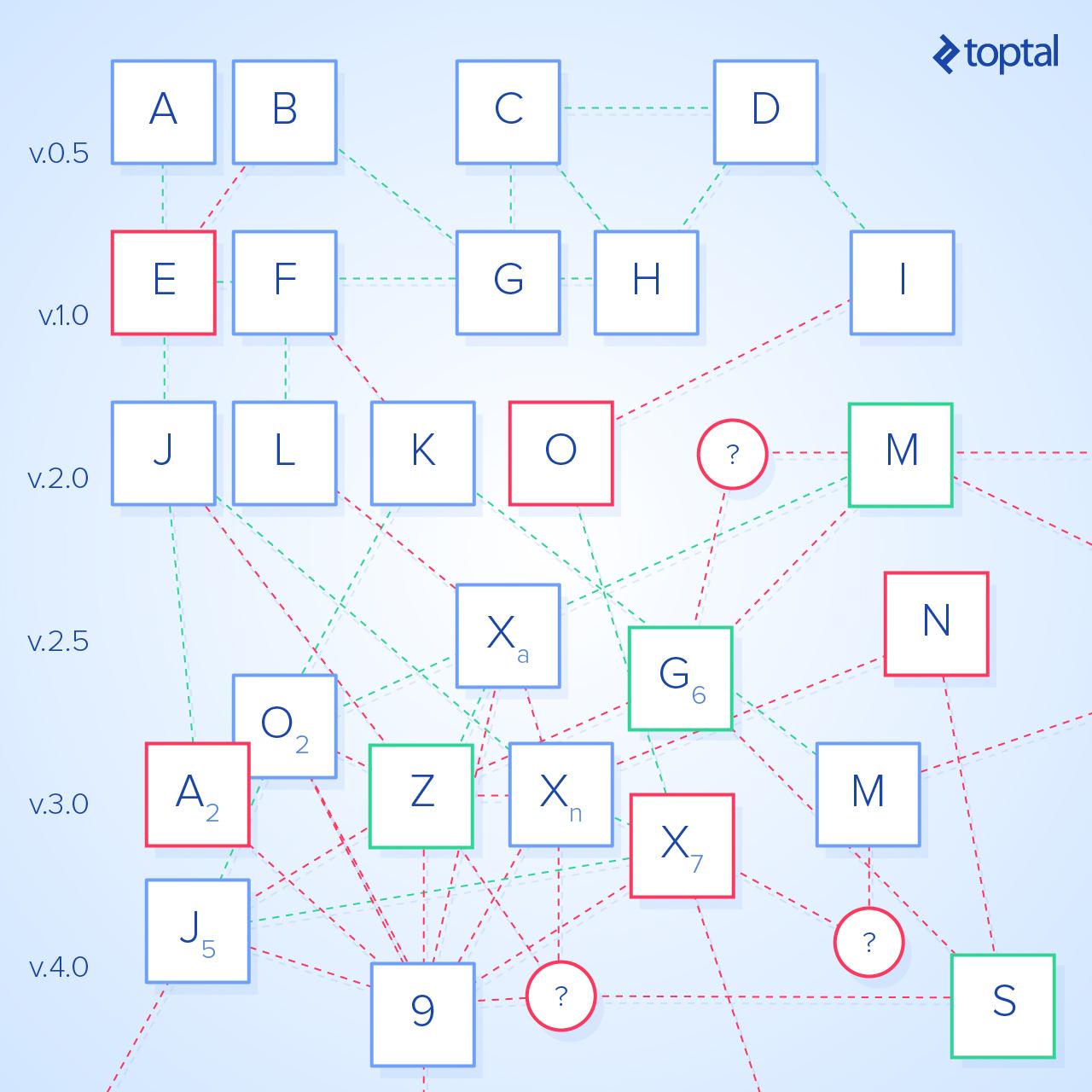

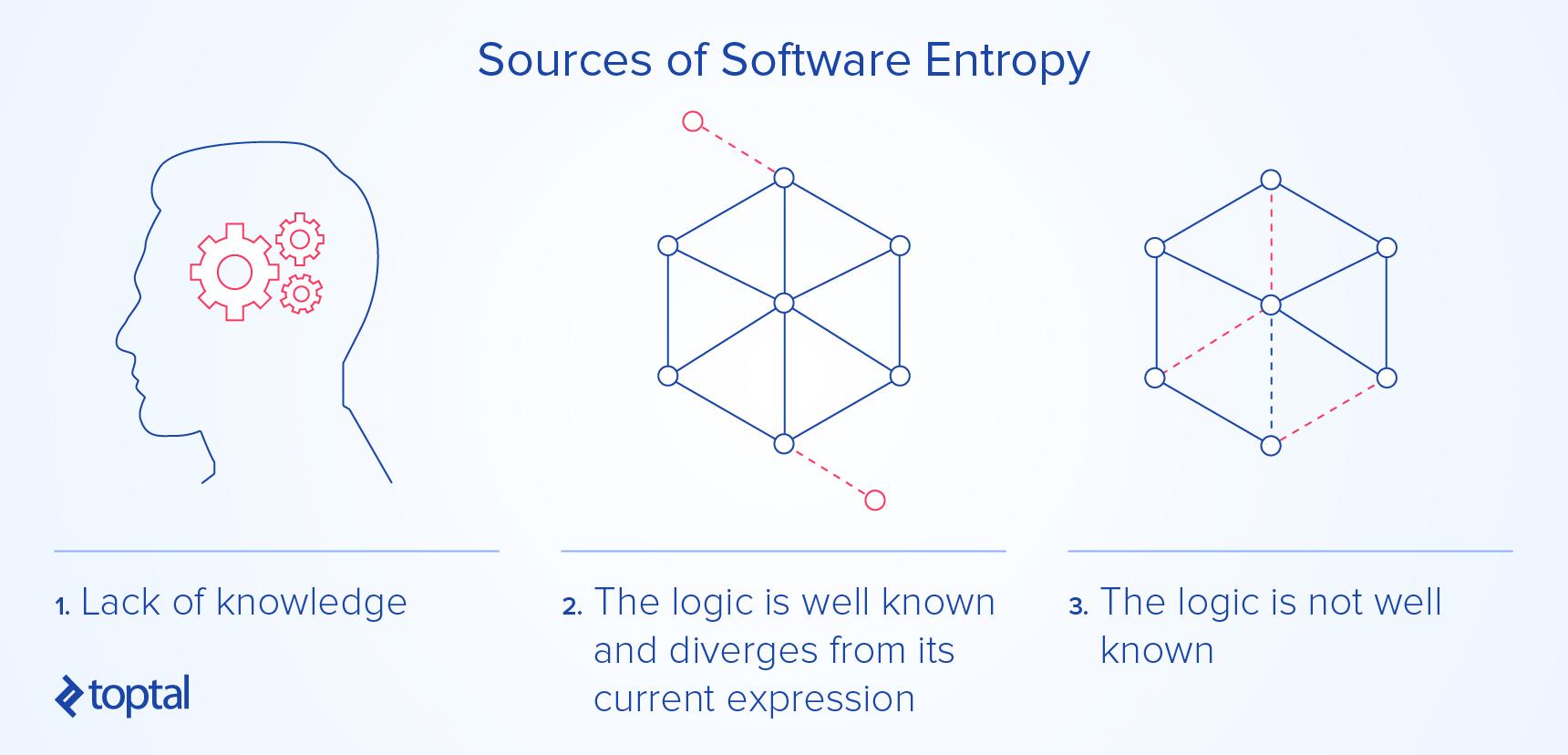

Software entropy arises from a lack of knowledge. It results from the divergence between our communal assumptions and the actual behavior of an existing system. This fact explains why software entropy only has meaning in the context of modifying an existing system; it is the only time our lack of understanding will have any practical effect. Software entropy finds most fertile ground in a system whose details cannot be understood by a single person but are instead spread around a development team. Time, too, is a powerful eraser of knowledge.

Code is the expression of a series of logical assertions. When the software’s behavior (and therefore its code) does not correspond to the logic it is intended to express, we can speak of its inherent code entropy. This situation can arise in three ways: The logic is well known and diverges from its expression, the logic is not well known, or there are multiple understandings of the logic the code is intended to express.

The first situation—the logic is well known and diverges from its current expression—is the easiest to address but also the least common. Only very small systems maintained by perhaps two participants, a developer and someone responsible for the business plan, will fall into this category.

The second situation—the logic is not well known—is rare. For all intents and purposes, the development iteration cannot even start. If at some point all stakeholders can agree, they are then likely to face the first situation.

The human mind interprets its experiences, effectively filtering and modifying them in an attempt to fit them into a personal framework. In the absence of effective development habits—based on a free exchange of ideas, mutual respect, and clear expectations—there may be as many interpretations of how a system presently functions as there are team members. The team’s goals for the current development iteration may themselves be in dispute.

Many developers protect themselves from this situation by reinforcing their own unique understandings of what is required of them and how the system “ought” to be organized. Managers and other stakeholders, on the other hand, might unwittingly seek to change the assumptions of other team members in a misguided attempt to make their own lives easier.

We have now arrived at the most common source of software entropy: There are multiple, incomplete interpretations of the logic the system is intended to express. Since there is only ever a single manifestation of this logic, the situation is by definition problematic.

How does this lack of knowledge arise? Incompetence is one way. And as we have already seen, a lack of agreement about the next development iteration’s goals is another. But there are other factors to consider. An organization may purport to employ a development methodology but then haphazardly abandon some or all of its aspects in day-to-day practice. Software developers are then tasked with completing vague assignments, often open to interpretation. Organizations implementing changes to their development methodology are particularly vulnerable to this phenomenon, although they are by no means the only ones. Personal conflicts that management is unaware of or otherwise unable to resolve can also lead to a dangerous divergence between expectations and results.

There is a second, more important way we lose technical knowledge about a product: in transfer. Capturing technical knowledge on paper is challenging even for the most proficient of writers. The reason is that what to transcribe is as important as how. Without the proper discipline, a technical writer may record too much information. Alternatively, they may make assumptions that cause them to omit essential points. After it is produced, technical documentation must then be meticulously kept up to date, a difficult proposition for many organizations that lose track of documents almost as soon as they are written. Because of these difficulties, technical knowledge is rarely transferred in documents alone but also in pairing or other forms of close, human-to-human interaction.

Software entropy grows by leaps and bounds whenever an active participant leaves a development team. They take a valuable chunk of know-how with them. Replicating that know-how is impossible, and approximating it requires considerable effort. With sufficient attention and resources, however, enough knowledge may be preserved that the growth of the system’s entropy can be minimized.

There are other sources of software entropy, but these are relatively minor. For example, software rot is the process by which a system is unexpectedly affected by changes to its environment. We can think of third-party software on which it relies (such as libraries), but there are other, more insidious causes of software rot, usually resulting from changes to the assumptions upon which the system was based. For example, if a system was designed with certain assumptions about the availability of memory, it may start to malfunction at unexpected moments if the memory available to it is reduced.

How to Calculate Entropy: Assigning Values to C, S, and I

I is the number of unresolved behavioral problems in a software system, including the absence of promised features. This is a known quantity that is often tracked in a system like JIRA. The value of I´ is derived directly from it.

C is the perceived probability that, when the work units in scope have been implemented, the actual number of open issues I1 in the next iteration will be greater than our current estimate of it. Its value lies in the range 0 <= C <= 1.

One might argue that the probability C is precisely what software entropy purports to measure. However, there are fundamental differences between this value and our measure of software entropy. The perceived probability that something will go wrong is exactly that: It is not a real value. However, it is useful in that the feelings of the people who are participating in a project are a valuable source of information about how stable it is.

The scope S takes into account the magnitude of the proposed changes and must be expressed in the same units as I´. A larger value of S decreases entropy because we are increasing the scope of the proposed changes. Although this may sound counterintuitive, we must keep in mind that entropy is a measure of how development of the system may not meet our expectations. It is not enough to say that entropy is a measure of the chance of introducing problems. We naturally try and anticipate risks and take them into account in our planning (and therefore in our estimation of I1, which may well increase over 0). Clearly, the more confident we are about taking on a large change scope, the less entropy can be said to be in the system—unless those planning the changes and/or carrying them out are incompetent. This possibility, however, is probably reflected in the current value of

Note that we need not attempt to specify the magnitude of the difference between the actual value of I1 and its expected value. If we assume that our plans are correct when we make them, it is not reasonable to also assume that we can predict the degree to which the result will not meet our expectations; we can only specify a perceived chance C that it won’t. However, the degree to which the actual value of I1 differs from its expected value is an important input into the calculation of entropy in the next iteration, in the form of the derived value I´.

Theoretically, it is possible for I´ to have a negative value. In practice, however, this situation will never occur; we do not usually solve problems accidentally. Negative values for

C is a subjective value. It exists in the minds of a development iteration’s participants and can be deduced by polling them and averaging the results. The question should be asked in a positive way. For example: “On a scale of 0 to 10 with 10 most likely, how would you estimate the team’s chances of achieving all of its goals this development iteration?”

Note that the question is being posed about the team’s objectives as a whole, not a subset. A response of 10 would indicate a value of 0 for C, whereas a response of 7 would indicate a value of 0.3. In larger teams, each response might be weighted depending upon relevant factors, such as how long an individual has been involved in the project and how much of their time is actually spent on it. Competence, however, should not be a factor in weighting responses. Before long, even a junior member of the team will have a sense of how effective it is in achieving its objectives. Sufficiently large teams might wish to discard the highest and lowest reported values before averaging the remainder.
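The polling procedure described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `perceived_risk`, its parameters, and the choice of a weighted mean are hypothetical and not part of the article's method beyond what the text says (scores from 0 to 10, optional weighting, optional discarding of the highest and lowest responses).

```python
def perceived_risk(responses, weights=None, trim=False):
    """Turn 0-10 confidence scores into C, the perceived chance of missing goals.

    responses: scores answering "how likely is the team to achieve all its goals?"
    weights:   optional per-response weights (e.g. time spent on the project);
               hypothetical, and never based on competence.
    trim:      if True, drop the single lowest and highest score first.
    """
    if not responses:
        raise ValueError("at least one response is required")
    if any(not 0 <= r <= 10 for r in responses):
        raise ValueError("responses must lie between 0 and 10")
    if weights is None:
        weights = [1.0] * len(responses)
    if len(weights) != len(responses):
        raise ValueError("one weight per response is required")

    # Sort by score so that trimming removes the extremes.
    pairs = sorted(zip(responses, weights))
    if trim and len(pairs) > 2:
        pairs = pairs[1:-1]

    total_weight = sum(w for _, w in pairs)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive value")

    average_score = sum(r * w for r, w in pairs) / total_weight
    # A score of 10 means full confidence, so C is the complement.
    return 1 - average_score / 10
```

With this sketch, a single response of 10 yields a C of 0 and a single response of 7 yields a C of roughly 0.3, matching the mapping given in the text.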

Asking professionals how likely their team is to fail is a sensitive and provocative proposition. However, this is exactly the question any organization wishing to quantify entropy needs to ask. To ensure honest answers, participants should give their estimation of C anonymously and without fear of repercussion, even if they report an abysmally high value.

S must be assigned a value in the same “work units” as I´.

Note that we do not consider hotfixes or other unscheduled releases to production as defining the extent of a development iteration, nor should we subtract any associated stories from

The difficulty with this approach is that a period of discovery and analysis must occur before bugs can subsequently be broken down into stories. The true value of I´ can therefore only be defined after a delay. In addition, polling for C naturally occurs at the start of each sprint. The results obtained must therefore once again be averaged over the span of the entire release. Despite these difficulties, any team employing aspects of an Agile methodology is likely to find that stories are the most accurate unit for quantifying S (and therefore I´).

There are other development methodologies in use today. Whatever the methodology employed, the principles for measuring software entropy remain the same: Software entropy should be measured between releases to production, S and I´ should be measured in the same “work units,” and estimates of C should be taken from direct participants in a non-threatening and preferably anonymous manner.
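As a rough sketch of how these principles might be applied, the following Python computes E = I´C/S (the formula summarized at the end of this article) once per release, averaging the per-sprint values of C as described above. The `Release` class, its field names, and the sample figures are hypothetical; the only things taken from the article are the formula, the shared work units for I´ and S, and the averaging of C over a release.

```python
from dataclasses import dataclass


@dataclass
class Release:
    name: str
    i_prime: float         # I´, in work units (e.g. stories)
    sprint_c: list[float]  # C polled at the start of each sprint, each in [0, 1]
    scope: float           # S, in the same work units as I´


def entropy(i_prime, c, scope):
    """Compute E = I´C/S for one development iteration."""
    if scope <= 0:
        raise ValueError("scope S must be positive")
    if not 0 <= c <= 1:
        raise ValueError("C must lie between 0 and 1")
    return i_prime * c / scope


def entropy_by_release(releases):
    """Return a mapping of release name to E, averaging C across sprints."""
    results = {}
    for release in releases:
        average_c = sum(release.sprint_c) / len(release.sprint_c)
        results[release.name] = entropy(release.i_prime, average_c, release.scope)
    return results


if __name__ == "__main__":
    # Hypothetical history of three releases, for illustration only.
    history = [
        Release("1.0", i_prime=2, sprint_c=[0.1, 0.2], scope=30),
        Release("1.1", i_prime=5, sprint_c=[0.2, 0.3], scope=25),
        Release("1.2", i_prime=9, sprint_c=[0.4, 0.5], scope=20),
    ]
    for name, value in entropy_by_release(history).items():
        print(f"release {name}: E = {value:.3f}")
```

Plotting or simply listing these values release by release is one way to observe whether E is growing, which is the trend the article argues is worth watching.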

Reducing the Growth of E

Once knowledge about a system is lost, it can never be regained. For this reason, software entropy cannot be reduced. All we can hope to do is restrain its growth.

The growth of entropy in software can be limited by adopting appropriate measures during software development. The pair-programming aspect of Agile development is particularly useful in this regard. Because more than one developer is involved at the time code is written, knowledge of essential details is distributed and the effects of departing team members mitigated.

Another useful practice is the production of well-structured and easily consumed documentation, especially within organizations employing a waterfall methodology. Such documentation must be backed by strict and well-defined principles understood by everyone. Organizations relying on documentation as a principal means of communication and safeguarding technical knowledge are well suited for this approach. It is only when there are no guidelines or training on how to write and consume internally written documents (as is often the case in younger organizations employing RAD or Agile methodologies) that their value becomes suspect.

There are two ways to reduce the growth of entropy in a system: execute changes intended to reduce I´, or take actions intended to reduce C.

The first involves refactoring. Actions intended to reduce I´ tend to be technical in nature and are probably already familiar to the reader. A development iteration must occur. The portion of this iteration that is intended to reduce the growth of entropy will consume time and resources without delivering any tangible business benefits. This fact often makes reducing the growth of entropy a hard sell within any organization.

Reducing the value of C is a more powerful strategy because the effect is longer-term. In addition, C acts as an important check on the growth of the ratio of I´ to S. Actions to reduce C tend to be focused on human behavior and thinking. Although these actions may not require a development iteration per se, they will slow subsequent iterations as participants accept and adjust to new procedures. The seemingly simple act of agreeing on what improvements ought to be made is a path fraught with dangers of its own, as the disparate goals of the project’s participants and stakeholders suddenly come to light.
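A small worked example, using purely hypothetical numbers, may help show how the two strategies enter the formula E = I´C/S. Suppose I´ = 8, C = 0.5, and S = 40 work units:

$$E = \frac{I' C}{S} = \frac{8 \times 0.5}{40} = 0.1$$

If refactoring halves I´ to 4 while C and S stay the same:

$$E = \frac{4 \times 0.5}{40} = 0.05$$

If, instead, changes in team practice halve C to 0.25 while I´ and S stay the same:

$$E = \frac{8 \times 0.25}{40} = 0.05$$

Within a single iteration the arithmetic is identical. The argument above for preferring a lower C rests on its longer-term effect across subsequent iterations, which this one-iteration calculation does not capture.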

Wrapping Up

Software entropy is the risk that changing existing software will result in unexpected problems, unmet objectives, or both.

Although negligible when software is first created, software entropy grows with each development iteration. If allowed to proceed unchecked, software entropy will eventually bring further development to a halt.

However, not every project should reckon with the effects of software entropy. Many systems will be taken out of production before entropy can exert noticeable and deleterious effects. For those whose lifetimes are long enough that entropy poses a credible risk, creating an awareness of it and measuring its extent while it is still low provide a means to ensure that development is not cut off prematurely.

Once a system has been completely overwhelmed by the effects of software entropy, no more changes can be made and development has essentially come to an end.

Understanding the basics

What’s the definition of entropy?

A measure of chaos in information systems that either stays the same or increases over time.

What is software entropy?

Software entropy is the risk that changing existing software will result in unexpected problems, unmet objectives, or both. Although negligible when software is first created, software entropy grows with each development iteration.

How do I calculate entropy?

E = I´C/S, where I´ is derived from the number of unexpected problems introduced during the last development iteration, C is the perceived probability that implementing changes to the system now results in a new I´ > 0, and S is the scope of the next development iteration.

Adam Wasserman

Providence, RI, United States

Member since May 25, 2017
