WebVR and the Browser Edge Computing Revolution

Virtual Reality (VR) is making inroads into various industries but is not mainstream yet. WebVR and edge computing have the potential to boost adoption and bring VR to a wider audience.

In this series of articles, Toptal Full-stack Developer Michael Cole introduces you to the basics of WebVR and edge computing, complete with elaborate examples.

Michael is an expert full-stack web engineer, speaker, and consultant with over two decades of experience and a degree in computer science.

A huge technology wave has arrived: Virtual Reality (VR). Whatever you felt the first time you held a smartphone, your first time in VR is a more substantial emotional experience. It’s only been 12 years since the first iPhone. As a concept, VR has been around even longer, but the technology needed to bring VR to average users was not available until recently.

The Oculus Quest is Facebook’s consumer gaming platform for VR. Its main feature is that it doesn’t need a PC: it provides a wireless, mobile VR experience. You can hand someone a VR headset in a coffee shop to share a 3D model with about the same awkwardness as googling something mid-conversation, yet the payoff of the shared experience is far more compelling.

VR will change the way we work, shop, enjoy content, and much more.

In this series, we will explore the current browser technologies that enable WebVR and browser edge computing. This first post highlights those technologies and an architecture for our simulation.

In the following articles, we’ll highlight some unique challenges and opportunities in code. To explore this tech, I made a Canvas and WebVR demo and published the code on GitHub.

What VR Means for UX

As a Toptal Developer, I help businesses get projects from idea to a beta test with users. So how is VR relevant to business web applications?

Entertainment content will lead the uptake of Virtual Reality (like it did on mobile). However, once VR becomes mainstream like mobile, “VR-first design” will be the expected experience (similar to “mobile-first”).

“Mobile-first” was a paradigm shift, “Offline-first” is a current paradigm shift, and “VR-first” is on the horizon. This is a very exciting time to be a designer and developer, because VR is a completely different design paradigm (we will explore this in the last article of the series). You are not a VR designer if you can’t grip.

VR started in the Personal Computing (PC) revolution but is arriving as the next step in the mobile revolution. Facebook’s Oculus Quest builds on Google Cardboard: it uses Qualcomm’s Snapdragon 835 System-on-Chip (SoC), tracks the headset with mobile cameras, and runs on Android, all packaged to comfortably mount on the tender sense organs of your face.

The $400 Oculus Quest holds amazing experiences I can share with my friends. A new $1,000 iPhone doesn’t impress anyone anymore. Humanity is not going to spit the VR hook.

VR Industry Applications

VR is starting to make its presence felt in numerous industries and fields of computing. Aside from content consumption and gaming, which tend to get a lot of press coverage, VR is slowly changing industries ranging from architecture to healthcare.

- Architecture and Real Estate create value in physical reality at extraordinary cost (compared to digital), so it’s a natural fit for architects and real estate agents to walk clients through a virtual reality to show the experience. VR offers a “beta test” of your $200m stadium, or a virtual walkthrough over the phone.

- Learning and Education in VR convey experiences that would otherwise be impossible to replicate with images or video.

- Automotive companies benefit from VR, from design and safety to training and marketing.

- Healthcare professionals at Stanford’s Lucile Packard Children’s Hospital have been using VR to plan heart surgeries, allowing them to understand a patient’s anatomy before making a single incision. VR is also being explored as an alternative to pharmaceuticals for pain relief.

- Retail, Marketing, and Hospitality already offer virtual tours of products and places. As retailers begin to understand how compelling their shopping experience could be, retail innovators will put the final nail in the brick and mortar shopping coffin.

As technology advances, we are going to see increased adoption across various industries. The question now is how fast this shift is going to occur and which industries will be affected the most.

What VR Means for the Web and Edge Computing

“Edge Computing” moves computing out of your main application server cluster and closer to your end user. It’s got marketing buzz because hosting companies can’t wait to rent you a low-latency server in every city.

A B2C edge computing example is Google’s Stadia service, which runs CPU/GPU-intensive gaming workloads on Google’s servers, then streams the game to a device the way Netflix streams video. Any underpowered Chromebook that can play Netflix can suddenly play games like a high-end gaming computer. This also creates new architecture options for tightly integrated, monolithic multiplayer games.

A B2B edge computing example is Nvidia’s GRID, which provides Nvidia GPU-enabled virtual remote workstations to cheap, Netflix-class devices.

Question: Why not move edge computing out of the data center into the browser?

A use case for browser edge computing is an “animation render farm” of computers that render a 3D movie by breaking the day-long process into chunks that thousands of computers can process in a few minutes.
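The core of a render farm is the splitting step: one long job becomes many small, independent pieces. Here is a minimal sketch of just that step. It assumes the job is a numbered sequence of frames. The `chunkFrames` name and the `{ start, end }` chunk shape (end exclusive) are hypothetical and do not come from any real render-farm API.

```javascript
// Hypothetical helper (not from the demo): split frames [0, totalFrames)
// into chunks of at most chunkSize frames. Each chunk is { start, end } with
// `end` exclusive, so whoever claims a chunk renders frames start..end-1.
function chunkFrames(totalFrames, chunkSize) {
  if (!Number.isInteger(totalFrames) || totalFrames < 0) {
    throw new RangeError("totalFrames must be a non-negative integer");
  }
  if (!Number.isInteger(chunkSize) || chunkSize <= 0) {
    throw new RangeError("chunkSize must be a positive integer");
  }
  const chunks = [];
  for (let start = 0; start < totalFrames; start += chunkSize) {
    chunks.push({ start, end: Math.min(start + chunkSize, totalFrames) });
  }
  return chunks;
}

// Usage: a 10-frame job split into chunks of 4 frames.
console.log(JSON.stringify(chunkFrames(10, 4)));
// [{"start":0,"end":4},{"start":4,"end":8},{"start":8,"end":10}]
```

A real browser render farm would also have to hand chunks out to visitors, collect results, and reassign chunks from visitors who leave mid-render. This sketch covers only the splitting.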

Technologies like Electron and NW.js brought web programming to desktop applications. New browser technologies (like PWAs) are bringing the web application distribution model (SaaS is about distribution) back to desktop computing. Distributed computing projects like SETI@Home, Folding@Home (protein folding), or various render farms could benefit: instead of downloading an installer, it is now possible to join the compute farm just by visiting the website.

Question: Is WebVR a “real thing” or will VR content be bustled into “app stores” and walled gardens?

As a Toptal freelancer and technologist, it’s my job to know. So I built a tech prototype to answer my own questions. The answers I found are very exciting, and I wrote this blog series to share them with you.

Spoiler: Yes, WebVR is a real thing. And yes, browser edge computing can use the same APIs to access the compute power that enables WebVR.

Very Fun To Write! Let’s Build a Proof of Concept

To test this out, we’ll make an astrophysics simulation of the n-body problem.

Astronavigators can use equations to predict how two objects move under each other’s gravity. However, there is no general closed-form solution for systems with three or more bodies, which is inconveniently every system in the known universe. Science!

While the n-body problem has no general analytical solution (equations), it does have a computational solution (algorithms): a direct simulation step is O(n²). O(n²) scales poorly, but it’s how to get what we want, and it’s kind of why Big O notation was invented in the first place.

Figure 2: “Up and to the right? Well I’m no engineer, but performance looks good to me!”

If you’re dusting off your Big O skills, remember that Big O notation measures how an algorithm’s work “scales” based on the size of the data it’s operating on.

Our collection is all the bodies in our simulation. Adding a new body means adding a new two-body gravity calculation for every existing body in the set.
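Each of those two-body calculations is Newton’s law of gravitation: the force has magnitude F = G * m1 * m2 / r^2 and points along the line between the two bodies. The sketch below shows one such pair calculation in plain JavaScript. The `pairForce` name and the `{ x, y, z, m }` body shape are illustrative assumptions; the demo itself does this math in WebAssembly.

```javascript
// Newton's gravitational constant in SI units (m^3 kg^-1 s^-2), the same
// value quoted in the demo's main.js comments.
const G = 6.674e-11;

// Hypothetical helper: the gravitational force that body `b` exerts on body
// `a`. Bodies are plain objects { x, y, z, m }. The returned vector points
// from a toward b; the force on b is the same vector negated (Newton's third law).
function pairForce(a, b, g = G) {
  const dx = b.x - a.x;
  const dy = b.y - a.y;
  const dz = b.z - a.z;
  const distSq = dx * dx + dy * dy + dz * dz;
  if (distSq === 0) {
    return { fx: 0, fy: 0, fz: 0 }; // same position: no defined direction
  }
  const dist = Math.sqrt(distSq);
  const magnitude = (g * a.m * b.m) / distSq; // |F| = G * m1 * m2 / r^2
  // Scale the unit vector (dx, dy, dz) / dist by the magnitude.
  return {
    fx: (magnitude * dx) / dist,
    fy: (magnitude * dy) / dist,
    fz: (magnitude * dz) / dist,
  };
}

// Usage: two 1e10 kg bodies one unit apart on the x axis.
const f = pairForce({ x: 0, y: 0, z: 0, m: 1e10 }, { x: 1, y: 0, z: 0, m: 1e10 });
console.log(f.fx); // about 6.674e9, pointing toward +x
```

Because the force on b is just the negation of the force on a, one calculation per pair is enough. That is why the loop below pairs body i only with bodies j > i.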

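Our inner loop runs fewer than n times, but on average it still runs about n/2 times. That is O(n), not O(log n), so n outer iterations times O(n) inner iterations makes the whole algorithm O(n²). Those are the breaks, no extra credit.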

```typescript
// AssemblyScript (i32 is a 32-bit integer type)
for (let i: i32 = 0; i < numBodies; i++) { // n
  // Given body i: pair with every body[j] where j > i
  for (let j: i32 = i + 1; j < numBodies; j++) { // ~n/2 on average: O(n), not O(log n)
    // Calculate the force the bodies apply to one another
    // (force calculation elided)
  }
}
```
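To see where O(n²) comes from, count how many times the inner loop body runs. Body i is paired with n - 1 - i later bodies, so the total is (n - 1) + (n - 2) + ... + 0 = n(n - 1)/2. The sketch below is a plain-JavaScript stand-in for the loop above, with a hypothetical `countPairs` name. It counts those iterations and compares them with the formula.

```javascript
// Count how many times the inner loop body runs for numBodies bodies,
// using the same "pair i with every j > i" structure as the loop above.
function countPairs(numBodies) {
  let pairs = 0;
  for (let i = 0; i < numBodies; i++) {
    for (let j = i + 1; j < numBodies; j++) {
      pairs++; // one two-body force calculation per (i, j) pair
    }
  }
  return pairs;
}

for (const n of [10, 100, 1000]) {
  // Closed form: n(n - 1) / 2, which grows like n^2 / 2.
  console.log(n, countPairs(n), (n * (n - 1)) / 2);
}
// 10 45 45
// 100 4950 4950
// 1000 499500 499500
```

Ten times more bodies means roughly a hundred times more work. That growth is what makes this loop the simulation’s bottleneck.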

The n-body solution also puts us right in the world of physics/game engines and an exploration of the tech needed for WebVR.

For our prototype, once we’ve built the simulation, we’ll make a 2D visualization.

Finally, we’ll swap out the Canvas visualization for a WebVR version.

If you’re impatient, you can jump right to the project’s code.

Web Workers, WebAssembly, AssemblyScript, Canvas, Rollup, WebVR, Aframe

Buckle up for an action-packed, fun-filled romp through a cluster of new technologies that have already arrived in your modern mobile browser (sorry, not you, Safari):

- We’ll use Web Workers to move the simulation into its own CPU thread, improving perceived and actual performance.

- We’ll use WebAssembly to run the O(n²) algorithm in high-performance (C/C++/Rust/AssemblyScript/etc.) compiled code in that new thread.

- We’ll use Canvas to visualize our simulation in 2D.

- We’ll use Rollup and Gulp as a lightweight alternative to Webpack.

- Finally, we’ll use WebVR and Aframe to create a Virtual Reality for your phone.

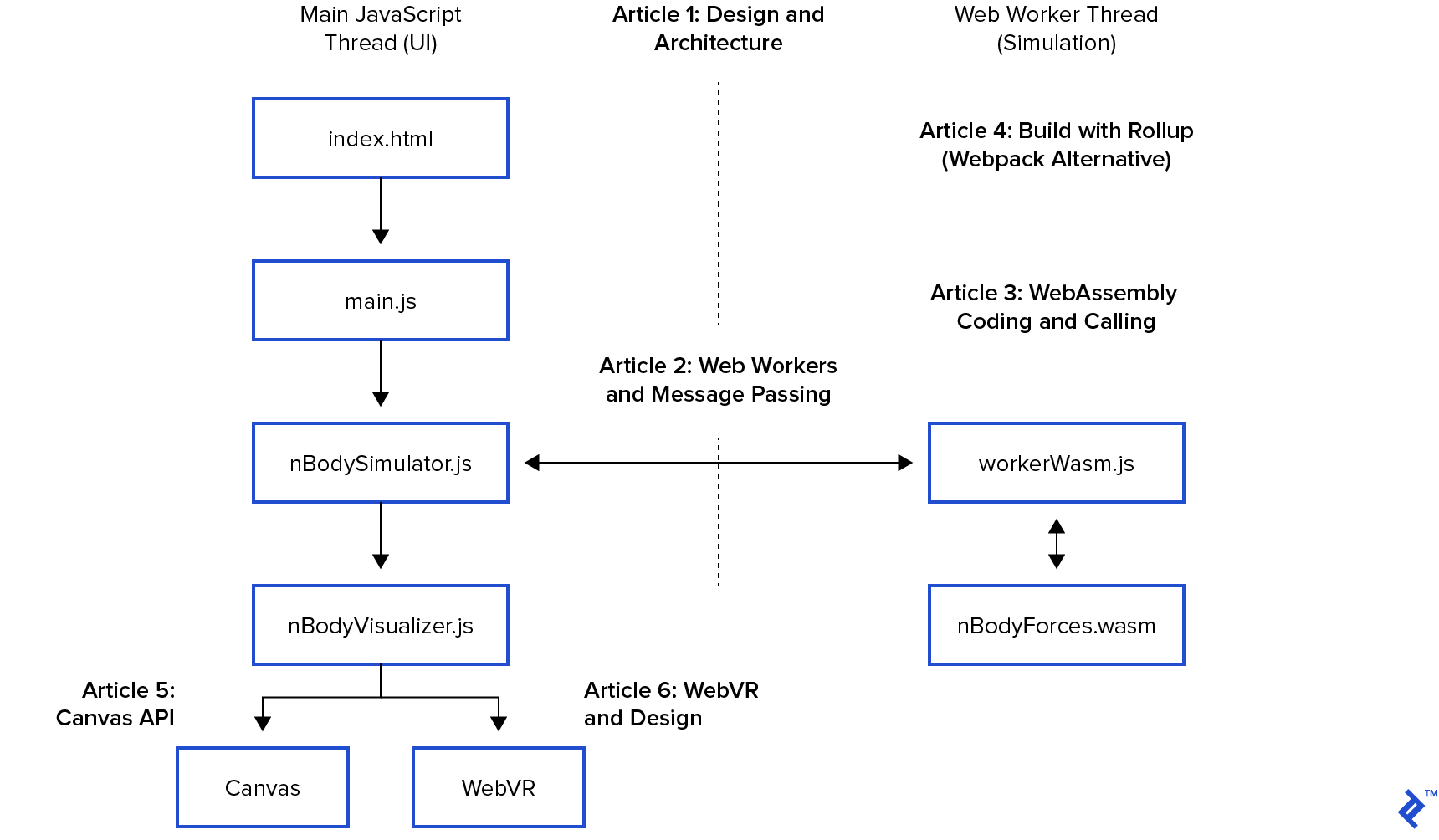

Back-of-the-napkin Architecture Before Diving into Code

We’ll start with a Canvas version because you’re probably reading this at work.

In the first few posts, we’ll use existing browser APIs to access the computing resources required to create a CPU-intensive simulation without degrading the user experience.

Then, we’ll visualize this in the browser using Canvas, finally swapping our Canvas visualization for a WebVR version built with Aframe.

API Design and Simulation Loop

Our n-body simulation predicts the position of celestial objects using the forces of gravity. We can calculate the exact force between any two objects with an equation, but to predict how three or more objects move, we need to break the simulation up into small time segments and iterate. Our goal is 30 frames/second, or ~33 ms/frame.
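“Break the simulation into small time segments and iterate” can be sketched in plain JavaScript. This is not the demo’s WebAssembly code. The `stepBodies` name, the body fields, and semi-implicit (symplectic) Euler as the integration method are all assumptions made for illustration. Each call advances the system by one time step `dt`. A 30 fps loop would make one such call per ~33 ms frame (1000 ms / 30 ≈ 33.3 ms).

```javascript
// Newton's gravitational constant (SI units), the value quoted in main.js.
const G = 6.674e-11;

// Hypothetical sketch: advance bodies { x, y, z, vx, vy, vz, m } by one time
// step dt using semi-implicit (symplectic) Euler integration.
function stepBodies(bodies, dt, g = G) {
  // 1. Accelerations from every pair: the O(n^2) part.
  const acc = bodies.map(() => ({ ax: 0, ay: 0, az: 0 }));
  for (let i = 0; i < bodies.length; i++) {
    for (let j = i + 1; j < bodies.length; j++) {
      const a = bodies[i];
      const b = bodies[j];
      const dx = b.x - a.x;
      const dy = b.y - a.y;
      const dz = b.z - a.z;
      const distSq = dx * dx + dy * dy + dz * dz;
      if (distSq === 0) continue; // coincident bodies: skip this pair
      const distCubed = distSq * Math.sqrt(distSq);
      // |acceleration| on a is G * m_b / r^2, directed along (dx, dy, dz) / r.
      const sA = (g * b.m) / distCubed;
      const sB = (g * a.m) / distCubed;
      acc[i].ax += sA * dx;
      acc[i].ay += sA * dy;
      acc[i].az += sA * dz;
      acc[j].ax -= sB * dx;
      acc[j].ay -= sB * dy;
      acc[j].az -= sB * dz;
    }
  }
  // 2. Update velocities, then positions using the new velocities.
  bodies.forEach((body, k) => {
    body.vx += acc[k].ax * dt;
    body.vy += acc[k].ay * dt;
    body.vz += acc[k].az * dt;
    body.x += body.vx * dt;
    body.y += body.vy * dt;
    body.z += body.vz * dt;
  });
}

// Usage: two equal masses at rest, one unit apart, start falling together.
const pair = [
  { x: 0, y: 0, z: 0, vx: 0, vy: 0, vz: 0, m: 1e10 },
  { x: 1, y: 0, z: 0, vx: 0, vy: 0, vz: 0, m: 1e10 },
];
stepBodies(pair, 0.01);
console.log(pair[0].vx > 0, pair[1].vx < 0); // true true
```

Semi-implicit Euler is chosen here because it is about as simple as plain Euler but tends to keep orbits stable over many steps. Smaller `dt` values trade speed for accuracy.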

To get oriented, here’s a quick overview of the code:

- The browser GETs index.html

- Which runs main.js, shown below. The imports are handled with Rollup, an alternative to Webpack.

- Which creates a new nBodySimulator()

- Which has an external API:
  - sim.addVisualization()
  - sim.addBody()
  - sim.start()

```javascript
// src/main.js
import { nBodyVisPrettyPrint, nBodyVisCanvas } from "./nBodyVisualizer"
import { Body, nBodySimulator } from "./nBodySimulator"

window.onload = function() {
  // Create a Simulation
  const sim = new nBodySimulator()

  // Add some visualizers
  sim.addVisualization(new nBodyVisPrettyPrint(document.getElementById("visPrettyPrint")))
  sim.addVisualization(new nBodyVisCanvas(document.getElementById("visCanvas")))

  // This is a simulation, using opinionated G = 6.674e-11
  // So boring values are allowed and create systems that collapse over billions of years.
  // For spinny, where distance=1, masses of 1e10 are fun.
  // Set Z coords to 1 for best visualization in overhead 2D Canvas.
  // lol, making up stable universes is hard
  // name color x y z m vx vy vz
  sim.addBody(new Body("star", "yellow", 0, 0, 0, 1e9))
  sim.addBody(new Body("hot jupiter", "red", -1, -1, 0, 1e4, .24, -0.05, 0))
  sim.addBody(new Body("cold jupiter", "purple", 4, 4, -.1, 1e4, -.07, 0.04, 0))

  // A couple far-out asteroids to pin the canvas visualization in place.
  sim.addBody(new Body("asteroid", "black", -15, -15, 0, 0))
  sim.addBody(new Body("asteroid", "black", 15, 15, 0, 0))

  // Start simulation
  sim.start()

  // Add another
  sim.addBody(new Body("saturn", "blue", -8, -8, .1, 1e3, .07, -.035, 0))

  // That is the extent of my effort to handcraft a stable solar system.
  // We can now play in that system by throwing debris around (inner planets).
  // Because that debris will have significantly smaller mass, it won't disturb our stable system (hopefully :-)
  // This requires we remove bodies that fly out of bounds past our 30x30 space created by the asteroids.
  // See sim.trimDebris(). It's a bit hacky, but my client (me) doesn't want to pay for it and wants the WebVR version.
  function rando(scale) {
    return (Math.random()-.5) * scale
  }

  document.getElementById("mayhem").addEventListener('click', () => {
    for (let x=0; x<10; x++) {
      sim.addBody(new Body("debris", "white", rando(10), rando(10), rando(10), 1, rando(.1), rando(.1), rando(.1)))
    }
  })
}
```

The two asteroids have zero mass, so they are not affected by gravity in this simulation. They keep our 2D visualization zoomed out to at least 30x30. The last bit of code is our “mayhem” button, which adds 10 small inner planets for some spinny fun!

Next is our “simulation loop”: every 33ms, we re-calculate and repaint. If you’re having fun, we could call it a “game loop.” The simplest thing that could possibly work to implement our loop is setInterval(), and that fulfilled my purpose. An alternative could be requestAnimationFrame().
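The zero-mass asteroids set the visible bounds, so mayhem debris has to be removed once it escapes them. The demo does this in sim.trimDebris(), whose code isn’t shown here. Below is a hypothetical stand-in. It assumes bodies carry `name`, `x`, and `y` fields and that only bodies named "debris" should ever be removed. It checks only x and y, matching the overhead 2D Canvas view.

```javascript
// Hypothetical stand-in for sim.trimDebris(): keep every body except debris
// that has left the square from -limit to +limit on the x and y axes.
// limit = 15 matches the 30x30 space pinned by the asteroids at (-15, -15) and (15, 15).
function trimOutOfBounds(bodies, limit = 15) {
  return bodies.filter((body) => {
    if (body.name !== "debris") return true; // never trim planets or asteroids
    return Math.abs(body.x) <= limit && Math.abs(body.y) <= limit;
  });
}

// Usage: the far-away piece of debris is dropped, everything else stays.
const bodies = [
  { name: "star", x: 0, y: 0 },
  { name: "asteroid", x: 15, y: 15 },
  { name: "debris", x: 3, y: -2 },
  { name: "debris", x: 40, y: 1 },
];
console.log(trimOutOfBounds(bodies).map((b) => b.name).join(", "));
// star, asteroid, debris
```

Filtering into a new array keeps the function free of side effects. A simulator that owns its body list would then replace the list with the result.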

sim.start() starts the action by calling sim.step() every 33ms (about 30 frames per second).

```javascript
// Methods from class nBodySimulator

// The simulation loop
start() {
  // This is the simulation loop. step() calls visualize()
  const step = this.step.bind(this)
  setInterval(step, this.simulationSpeed)
}

// A step in the simulation loop.
async step() {
  // Skip calculation if worker not ready. Runs every 33ms (30fps), so expect skips.
  if (this.ready()) {
    await this.calculateForces()
  } else {
    console.log(`Skipping: ${this.workerReady}, ${this.workerCalculating}`)
  }
  // Remove any "debris" that has traveled out of bounds - this is for the button
  this.trimDebris()
  // Now Update forces. Reuse old forces if we skipped calculateForces() above
  this.applyForces()
  // Ta-dah!
  this.visualize()
}
```
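If you drive the loop with requestAnimationFrame() instead of setInterval(), keep in mind that it fires at the display’s refresh rate, not every 33 ms. A common pattern is a fixed-timestep accumulator: add up the elapsed time and run as many 33 ms simulation steps as have become due. The sketch below is not from the demo. `createFixedStepper` is a hypothetical helper, and the five-step catch-up cap is an arbitrary choice.

```javascript
// Hypothetical helper: turn requestAnimationFrame timestamps (in ms) into a
// fixed number of simulation steps of stepMs each. Leftover time carries over
// to the next frame; catch-up is capped at maxSteps per frame so a long pause
// (for example, a background tab) doesn't trigger a burst of steps.
function createFixedStepper(stepMs, step, maxSteps = 5) {
  let lastTime = null;
  let accumulator = 0;
  return function onFrame(now) {
    if (lastTime !== null) {
      accumulator = Math.min(accumulator + (now - lastTime), stepMs * maxSteps);
    }
    lastTime = now;
    let steps = 0;
    while (accumulator >= stepMs) {
      step();
      accumulator -= stepMs;
      steps++;
    }
    return steps; // how many simulation steps ran this frame
  };
}

// Usage with a plain counter standing in for a simulation step:
let count = 0;
const onFrame = createFixedStepper(1000 / 30, () => count++);
onFrame(0);    // first frame only records the start time
onFrame(16.7); // 16.7 ms elapsed: not yet one 33.3 ms step
onFrame(50);   // 50 ms elapsed in total: one step, ~16.7 ms carried over
console.log(count); // 1
```

In a browser, you would call the returned function from a requestAnimationFrame callback, pass it the timestamp the callback receives, and request the next frame at the end.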

Hurray! We are moving past design to implementation. We will implement the physics calculations in WebAssembly and run them in a separate Web Worker thread.

nBodySimulator wraps that implementation complexity and splits it into several parts:

- calculateForces() promises to calculate the forces to apply.
  - These are mostly floating-point operations, done in WebAssembly.
  - These calculations are O(n²) and our performance bottleneck.
  - We use a Web Worker to move them out of the main thread for better perceived and actual performance.

- trimDebris() removes any debris that’s no longer interesting (and would zoom out our visualization). O(n)

- applyForces() applies the calculated forces to the bodies. O(n)
  - We reuse old forces if we skipped calculateForces() because the worker was already busy. This improves perceived performance (removing jitter) at the cost of accuracy.
  - The main UI thread can keep painting with the old forces even if the calculation takes longer than 33ms.

- visualize() passes the array of bodies to each visualizer to paint. O(n)
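The skip-and-reuse behavior of calculateForces() and applyForces() can be illustrated with a small sketch. This is not the demo’s implementation. `ForceCache`, its methods, and the Promise-returning `calculate` callback are hypothetical, and `calculate` stands in for the Web Worker round trip.

```javascript
// Hypothetical sketch of "skip the calculation if the worker is busy and
// reuse the last forces". `calculate` must return a Promise of a forces array.
class ForceCache {
  constructor(calculate) {
    this.calculate = calculate;
    this.busy = false;
    this.forces = []; // last completed result, reused while busy
  }

  // Start a new calculation unless one is already in flight.
  // Returns true if a calculation was started, false if it was skipped.
  request(bodies) {
    if (this.busy) return false;
    this.busy = true;
    this.calculate(bodies)
      .then((forces) => { this.forces = forces; })
      .catch((err) => console.error(err))
      .finally(() => { this.busy = false; });
    return true;
  }

  // Always answers immediately with the most recent forces, even if stale.
  current() {
    return this.forces;
  }
}
```

In a loop like step(), the main thread would call request(bodies) every tick and then apply current(). Whenever the calculation is still running, it keeps painting with the last known forces instead of waiting.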

And it all has to happen within 33ms! Could we improve this design? Yes. If you’re looking for an advanced, modern design and implementation, check out the open-source Matter.js.

Blastoff!

I had so much fun creating this and I’m excited to share it with you. See you after the jump!

- Intro - this page

- Web Workers - accessing multiple threads

- WebAssembly - browser computing without JavaScript

- Rollup and Gulp - an alternative to Webpack

- Canvas - Drawing to the Canvas API and the “sim loop”

- WebVR - Swapping our Canvas visualizer for WebVR and Aframe

Entertainment will lead the way in Virtual Reality (like mobile), but once VR is normal (like mobile), it will be the expected consumer and productivity experience (like mobile).

We have never been more empowered to create human experiences. There has never been a more exciting time to be a designer and developer. Forget web pages - we will build worlds.

Our journey begins with the humble Web Worker, so stay tuned for the next part of our WebVR series.

Understanding the basics

How does VR work?

VR happens when a person straps a screen to their face, creating an immersive experience that gives their “make-believe” permission to pretend they are really there.

What is Web-based VR?

Web-based VR uses the bizarre reality of ads, SEO rankings, and cat memes (colloquially “the web”) to deliver virtual realities that are more engaging to the human psyche, but outside the rigid “information is a page in a book” paradigm. WebVR is the VR commons.

What browser supports VR?

Scuba diving without scuba gear is called drowning or snorkeling. Like scuba, VR is also a “gear sport” and all the gear supports WebVR. Luddites can use any modern web browser on their $1500 desktop or $700 mobile phone to “2D snorkel” VR before purchasing a quality wireless VR headset ($400).

What is meant by edge computing?

“Edge computing” is harnessing the computing power of all the edge devices running your app before renting new ones.

Michael Cole

Dallas, United States

Member since September 10, 2014

About the author
