A Deep Look At JSON vs. XML, Part 2: The Strengths and Weaknesses of Both

Nearly all computer applications rely on either JSON or XML. Today, JSON has overtaken XML, but is it better? In part 1 of this series on JSON vs. XML, we take a closer look at the history of the web to uncover the original purpose of XML and JSON and analyze how JSON became the popular choice.

Seva is a veteran of both enterprise and startups with 20 years of industry experience and a UC Berkeley graduate in EECS and MSE.

In Part 1 of this article, we took a closer look at the evolution of Web 1.0 to 2.0, and how its growing pains gave rise to XML and JSON. With JavaScript’s influence on software trends in the last decade, JSON continues to receive more attention than any other data interchange format. Countless blog articles compare the two standards, and together they amount to a growing bias that praises JSON’s simplicity and criticizes XML’s verbosity. Is JSON simply better than XML? A deeper look at data interchange will reveal the strengths and weaknesses of JSON and XML, as well as the suitability of each standard for common applications versus the enterprise.

In Part 2 of this article, we will:

- Evaluate the fundamental differences between JSON and XML, and relate the suitability of each standard for simple applications and the complex.

- Explore how JSON and XML affect the software risk for common applications versus the enterprise, and explore possible methods for its mitigation with each standard.

The software landscape is broad and wide, differentiated by a variety of platforms, languages, business contexts, and scale. The term “scale” is often associated with user interaction, but is also used to refer to qualities of software that tell apart the simple from the intricate: “scale of development,” “scale of investment,” and “scale of complexity.” Though all developers, both individuals and teams, aspire to mitigate the software risk of their applications, the rigor in compliance with requirements and specification distinguishes common applications from the enterprise. With regard to JSON and XML, the consideration of this rigor brings to light the strengths and weaknesses, and fundamental differences, of the two standards.

JSON for Common Applications Versus the Enterprise

In order to evaluate the suitability of JSON and XML for common applications and the enterprise, let’s define data interchange, and present a simple use-case to help elucidate the differences between the two standards.

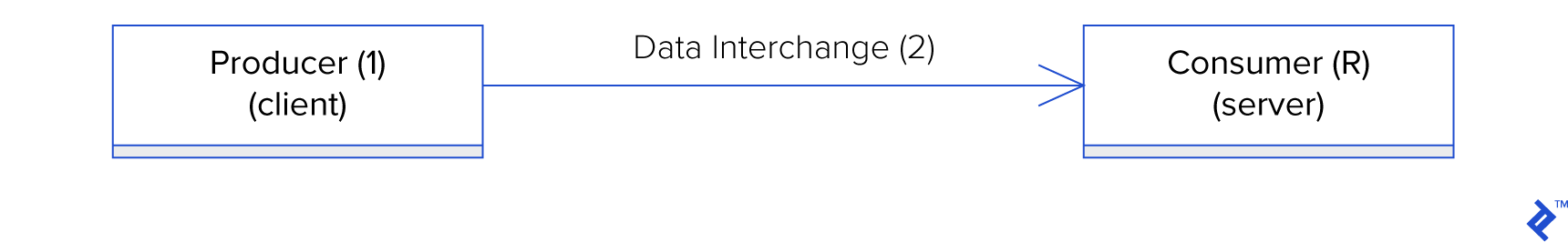

Data interchange concerns two endpoints, distinguished as two actors: a producer and a consumer. The simplest form of data interchange is one-directional, which involves a sequence of 3 general phases: data is produced by the producer (1), data is interchanged (2), and data is consumed by the consumer (3).

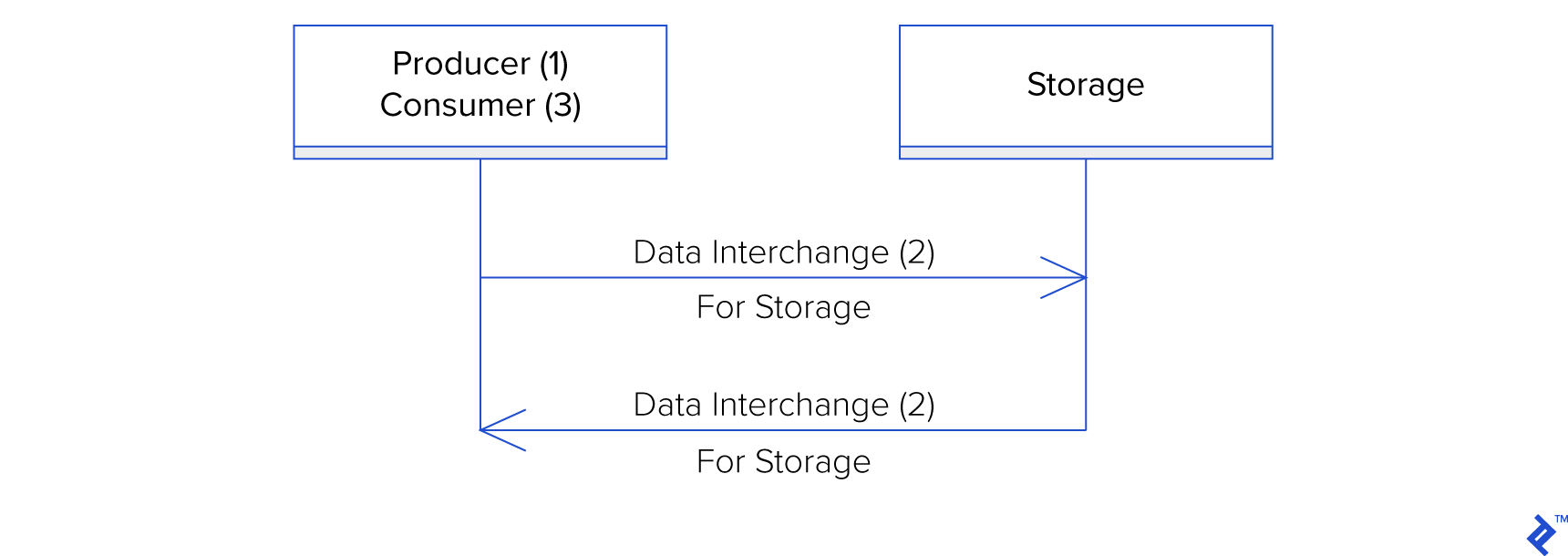

The one-directional data interchange use-case could only be simplified further if the producer and consumer are the same logical actor, such as when data interchange is used for disk storage by an application producing the data at time $t_1$, and consuming it at time $t_2$.

Irrespective of this simplification, the point of the use-case is to identify the problem that data interchange is solving. Sitting between data production (1) and data consumption (3), the data interchange (2) provides a format for the interchange of data between a producer and consumer. It does not get simpler than that!
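
The three phases can be sketched in a few lines of JavaScript. This is only an illustration under stated assumptions: the function names produce, interchange, and consume are hypothetical, and the in-memory hand-off stands in for whatever transport (network, file, queue) would carry the text in practice.

```javascript
// (1) The producer turns an in-memory object into JSON text.
function produce(data) {
  return JSON.stringify(data);
}

// (2) The interchange carries the text unchanged from one actor to the other.
// Hypothetical stand-in: a real system would use a socket, file, or queue here.
function interchange(text) {
  return text;
}

// (3) The consumer parses the text back into an object it can work with.
function consume(text) {
  return JSON.parse(text);
}

const received = consume(interchange(produce({ code: "CTBAAU2S" })));
console.log(received.code);
```

Nothing in this round trip checks what the object contains; the format only guarantees that the text can be carried and parsed.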

JSON as Data Interchange

Crockford himself claimed that an enormous advantage of JSON is that it was designed as a data interchange format.1 It was meant to carry structured information in a concise manner between programs from the very beginning. Crockford defined JSON in a succinct nine-page RFC document, describing a format with terse semantics for specifically what it was designed for: data interchange.2

JSON fulfills the immediate requirements of interchanging data between two actors, efficiently and effectively, but in the real world of software development, immediate requirements are often just the first layer in a sandwich of other requirements. For simple applications, developed to satisfy a simple specification, a simple data interchange is all that is necessary. For most common applications, the simplicity of requirements surrounding data interchange suggests JSON as the optimal solution. However, as the requirements surrounding data interchange become complex, the deficiencies of JSON are revealed.

Complex requirements surrounding data interchange refer to higher considerations defining the business relationship between the producer and consumers. For instance, the evolutionary path of a software system may not be known at the time of its architecture and reference implementation. Objects or properties in a message format may have to be added or removed in the future. If the producer or consumer involves a large number of parties, it may also be necessary for the system to support current and prior versions of the message format. A common problem for service providers is how to evolve the contract between the producer and consumer, an arrangement referred to as consumer-driven contracts (CDC).3

Consumer-Driven Contracts

Let’s expand our use-case to include a consumer-driven contract between a European bank and a retailer that communicates customers’ bank information for a purchase.

SWIFT is one of the earliest standards for electronic communication of bank transactions.4 Stripped of its purchase information, a bare SWIFT message includes a SWIFT code that identifies an account.

    {
      "code": "CTBAAU2S"
    }

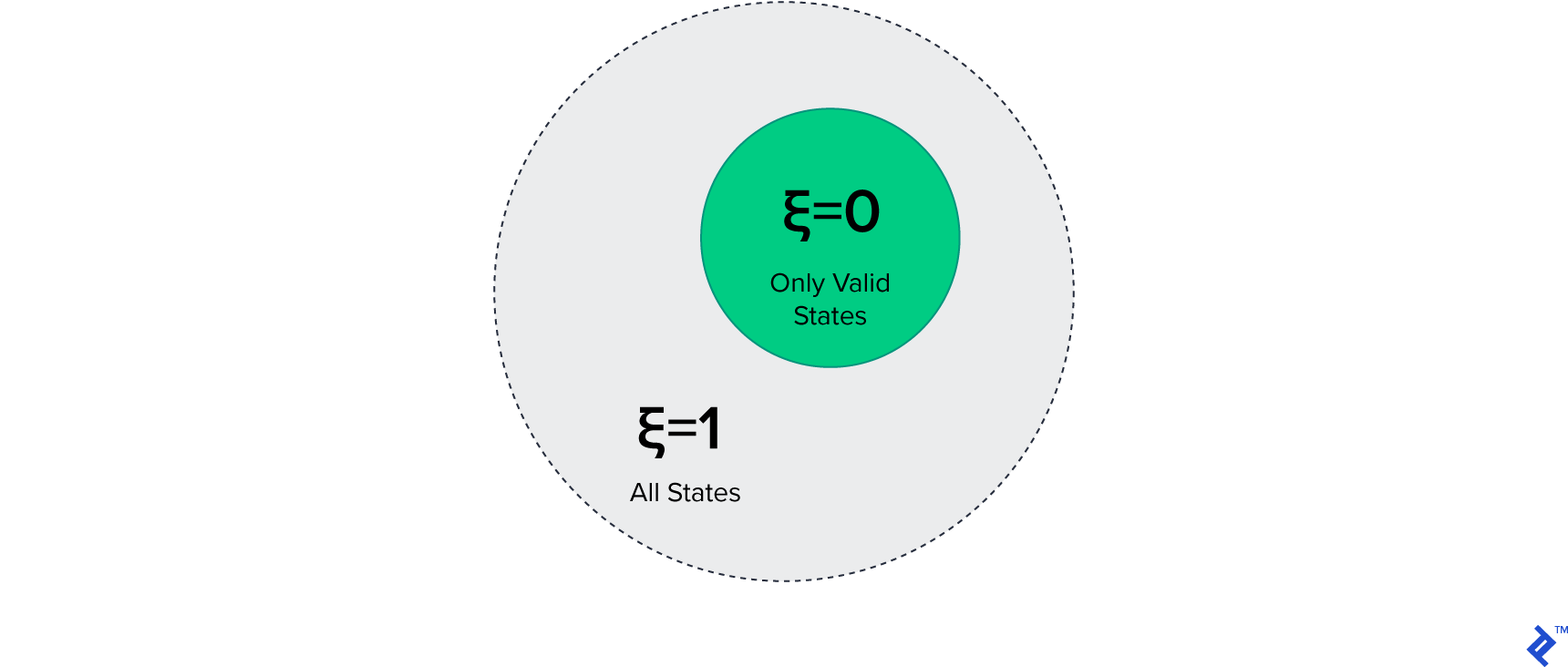

This message, with the property code, has a certain value that represents its state space. The state space of the message is defined as the complete space of all states that can be expressed. By differentiating between states that are valid and invalid, we can isolate the error part of the state space, and refer to it as the error space. Let $\xi$ represent the error space of the message, whereby $\xi = 1$ represents the space of all valid and invalid states (i.e., all states of the state space), and $\xi = 0$ the space of only valid states (i.e., the valid states of the state space).

The data interchange layer is the entry point to the consumer, and its error space $\xi_0$ (subscript 0 is used to represent the entry point layer) is determined by the proportion of all possible inputs—valid and invalid—versus those that are valid. The validity of an input message is defined in two layers: technical and logical. For JSON, technical correctness is determined by syntactical compliance of the message to JSON semantics as specified in Crockford’s RFC document.2 Logical correctness, however, involves the determination of the validity of account identifiers, as defined by the SWIFT standard: “a length of 8 or 11 alphanumeric characters and a structure that conforms to ISO 9362.”5
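
The two layers of validity can be told apart in code. The sketch below is a simplified illustration, not the bank's actual logic: the helper names are hypothetical, and the pattern is the SWIFT expression given later in this article, anchored so that the whole value must match.

```javascript
// SWIFT pattern from this article, anchored to the whole string.
const SWIFT_PATTERN = /^[A-Z]{6}[A-Z0-9]{2}([A-Z0-9]{3})?$/;

// Technical correctness: is the text well-formed JSON at all?
function isTechnicallyValid(text) {
  try {
    JSON.parse(text);
    return true;
  } catch (e) {
    return false;
  }
}

// Logical correctness: does the parsed message carry a plausible SWIFT code?
function isLogicallyValid(text) {
  if (!isTechnicallyValid(text)) {
    return false;
  }
  const message = JSON.parse(text);
  return message !== null &&
    typeof message === "object" &&
    typeof message.code === "string" &&
    SWIFT_PATTERN.test(message.code);
}

const wellFormedButWrong = '{"code": "not-a-swift-code"}';
console.log(isTechnicallyValid(wellFormedButWrong));   // true
console.log(isLogicallyValid(wellFormedButWrong));     // false
console.log(isLogicallyValid('{"code": "CTBAAU2S"}')); // true
console.log(isTechnicallyValid('{"code": '));          // false
```

A message can pass the first check and still fail the second, which is why technical correctness alone does not shrink the error space.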

After years of successful operation, the European bank and retailers are required to amend their consumer-driven contract to include support for a new account identification standard: IBAN. The JSON message is modified to support a new property denoting type, to distinguish identifiers as SWIFT or IBAN.

    {
      "type": "IBAN",
      "code": "DE91 1000 0000 0123 4567 89"
    }

Thereafter, the European bank decides to enter the USA market and forms a consumer-driven contract with retailers in the USA. The bank must now support the ACH account identification system, expanding the message to support "type": "ACH".

    {
      "type": "ACH",
      "code": "379272957729384",
      "routing": "021000021"
    }

Each extension to the JSON message format results in an increase in the systematic risk of the application. At the time the bank integrated SWIFT, it was not known that support for IBAN and ACH would follow. With a large number of retailers exchanging data with the bank, the risk for error is accentuated, prompting the bank to devise a solution to mitigate this risk.
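
One place this risk shows up is in messages that predate an extension. The sketch below assumes, purely for illustration, that the bank chooses to keep accepting the original messages that carry no type property and to treat them as SWIFT; the normalize helper is hypothetical and not part of any standard.

```javascript
// Hypothetical helper: upgrades any supported message variant to the newest shape.
// Assumption: messages without a "type" predate the IBAN extension and carry a SWIFT code.
function normalize(message) {
  if (!message || typeof message !== "object") {
    throw new TypeError("message must be an object");
  }
  if (message.type === undefined) {
    return { type: "SWIFT", code: message.code };
  }
  return message;
}

const legacy = normalize({ code: "CTBAAU2S" });
const current = normalize({ type: "ACH", code: "379272957729384", routing: "021000021" });
console.log(legacy.type);  // SWIFT
console.log(current.type); // ACH
```

Every rule of this kind lives in application code, so each new variant adds another branch that every party has to get right.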

The European bank is an enterprise solution, and is adamant about ensuring error-free operation with retailers. To mitigate the software risk for errors, the bank strives to reduce the error space value $\xi_0$ from 1 to 0. To help banking systems reduce software risk, organizations governing the SWIFT, IBAN, and ACH code standards define simple test functions to determine the logical correctness of identifiers. The test functions for each standard can be expressed in regular expression form:

$v_{\text{SWIFT}}(id) = \text{regex}_{id}$( "[A-Z]{6}[A-Z0-9]{2}([A-Z0-9]{3})?" )

$v_{\text{IBAN}}(id) = \text{regex}_{id}$( "[A-Z]{2}\d{2} ?\d{4} ?\d{4} ?\d{4} ?\d{4} ?\d{0,2}" )

$v_{\text{ACH}}(id, rt) = \text{regex}_{id}$( "\w{1,17}" ) $\times$ $\text{regex}_{rt}$( "\d{9}" )

To bring $\xi_0$ closer to 0, the bank’s system must test each input message with enough scrutiny to ensure it detects as many errors as could be encountered. Since JSON is only capable of data interchange, the logic for validation would have to be implemented in a layer within the system itself. An example of such logic would be:

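    // Checks a parsed message against the identifier rules of its declared type.
    function isValid(message) {
      if (!message || !message.type) {
        return false;
      }

      // Patterns are anchored (^...$) so the entire value must match.
      if (message.type === "SWIFT") {
        return typeof message.code === "string" &&
          /^[A-Z]{6}[A-Z0-9]{2}([A-Z0-9]{3})?$/.test(message.code);
      }

      if (message.type === "IBAN") {
        return typeof message.code === "string" &&
          /^[A-Z]{2}\d{2} ?\d{4} ?\d{4} ?\d{4} ?\d{4} ?\d{0,2}$/.test(message.code);
      }

      if (message.type === "ACH") {
        return typeof message.code === "string" &&
          /^\w{1,17}$/.test(message.code) &&
          typeof message.routing === "string" &&
          /^\d{9}$/.test(message.routing);
      }

      // Unknown types are rejected.
      return false;
    }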

The scale of the validation logic is proportional to the complexity of message variants being validated. The stripped-down message format of the European bank is simple, and thus the scale of its validation logic is proportionally simple. For messages with more properties, and especially those with nested objects, the validation footprint can grow tremendously, in both line-count and logical complexity. With JSON as data interchange, the validation layer is implemented as a coupled component to the system. Since the validation layer consumes input messages, it incurs the $\xi_0$ of the data interchange layer.

Every layer in a software system has a value $\xi$. The validation layer immediately above data interchange has its own space of potential error states $\xi_1$ resultant from its own complexity. For the European Bank, this complexity is the isValid(message) function. Since the validation layer consumes input from the data interchange layer, its $\xi_1$ becomes a function of $\xi_0$. Abstract extrapolation of this relationship indicates that $\xi$ of a layer absorbs $\xi$ of the previous layer, which absorbs $\xi$ of the previous layer, and so on. This relationship can be represented with the following expression:

$\xi_n = \alpha_n \times \xi_{n - 1}$

Here, $\xi_n$ represents the error space of layer $n$ as a function of $\xi_{n-1}$ (i.e., the error space of the previous layer), multiplied by $\alpha_n$, which represents the error component resulting from the software complexity of layer $n$ itself (i.e., the compounding of error due to the code complexity in layer $n$).
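
Unrolling the recurrence makes the compounding explicit. The expansion below follows from the expression above by repeated substitution down to the entry point layer; it adds no assumption beyond the recurrence itself.

$\xi_N = \alpha_N \times \xi_{N - 1} = \alpha_N \times \alpha_{N - 1} \times \xi_{N - 2} = \left( \prod_{n = 1}^{N} \alpha_n \right) \times \xi_0$

Within this model, $\xi_0$ appears as a factor in the error space of every layer above it, so any reduction achieved at the entry point carries through to the top of the stack.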

A resilient system is one that mitigates its software risk by reducing the space of complexity of its inputs, its internal processing logic, and its outputs.

The Software Risk of Complex Requirements

What we refer to as the “software risk of a system” is equivalent to $\xi_N$, where $N$ represents the highest layer in the system. When architecting a new application, it may be challenging to evaluate $\xi_N$ due to the limited visibility of the implementation. However, the overall system complexity can be estimated by analyzing the complexity of the requirements.

Software risk is proportional to the complexity of the requirements. As the complexity of the requirements increases, so does the space for bugs. The more complex the message format, the more error cases have to be considered by the system. In particular, for service providers involving disparate systems, the service provider (consumer) must adopt a defensive policy with regard to messages from its clients (producers). A service provider cannot assume the data interchanged from its clients will be free of error. To shield itself from erroneous input data, the consumer validates the content of each message.

For applications with complex requirements, and enterprise systems in particular, the mitigation of software risk is a prime consideration.

To mitigate the risk of logical complexity, the programming principle of “encapsulation” helps developers organize code into layers with logical boundaries. At each encapsulated layer, the inputs are verified, processed, and delivered to the next layer. This principle provides a methodical approach for the progressive reduction of the “error space” $\xi$ at each layer $n$, which further insulates the higher business logic from bugs due to erroneous inputs. Proper encapsulation results in higher cohesion, whereby the responsibility of a particular encapsulated module is clearly defined and is not coupled to auxiliary responsibilities. With a clear separation of ideas, the “specific risk” $\xi_0$ of message variability is reduced, as well as the “general risk” $\xi_n$ of each layer above data interchange, as well as the “total risk” $\xi_N$ of the application in whole.
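
A minimal sketch of this layering might look as follows. The function names are hypothetical and the validation rule is deliberately simplified; the point is only that the business logic contains no format checks of its own.

```javascript
// Layer 1: validation. Hypothetical, simplified check that only knows about message shape.
function validate(message) {
  if (!message || typeof message.code !== "string" || message.code.length === 0) {
    throw new Error("invalid message");
  }
  return message;
}

// Layer 2: business logic. It assumes validated input and contains no format checks.
function describePayment(message) {
  return "charging account " + message.code;
}

// Each layer verifies or processes its input, then delivers the result to the next.
function handle(message) {
  return describePayment(validate(message));
}

console.log(handle({ code: "CTBAAU2S" }));
```

Because describePayment is only ever reached through validate, a change to the message format touches one layer rather than both.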

JSON as Data Interchange for Complex Requirements

With JSON as the data interchange format, complex requirements of a communication protocol must be dealt with in layers outside of JSON. Though JSON is great due to its simple semantics, complexities surrounding the data interchange inevitably end up spilling into other layers of the application. To hedge the risk of errors due to input complexity, systems rely on encapsulation to separate message validation from the business logic. JSON’s close integration with JavaScript, however, leads developers to integrate this logic in layers much higher in the application stack, often intertwined with the business logic itself. Unfortunately, such approaches are encouraged by the overly simple nature of JSON—an intermediary layer that encapsulates the logic for translation of different variants of a message format is the risk-reduced solution, but such patterns are often seen as excessive, not popular, and are thus rarely used. The logic to check whether an account identifier is valid can be implemented in:

- A validation layer just above data interchange, resulting in the encapsulation of responsibility and logical cohesion.

- Amidst the business logic, resulting in the merging of responsibility and logical coupling.

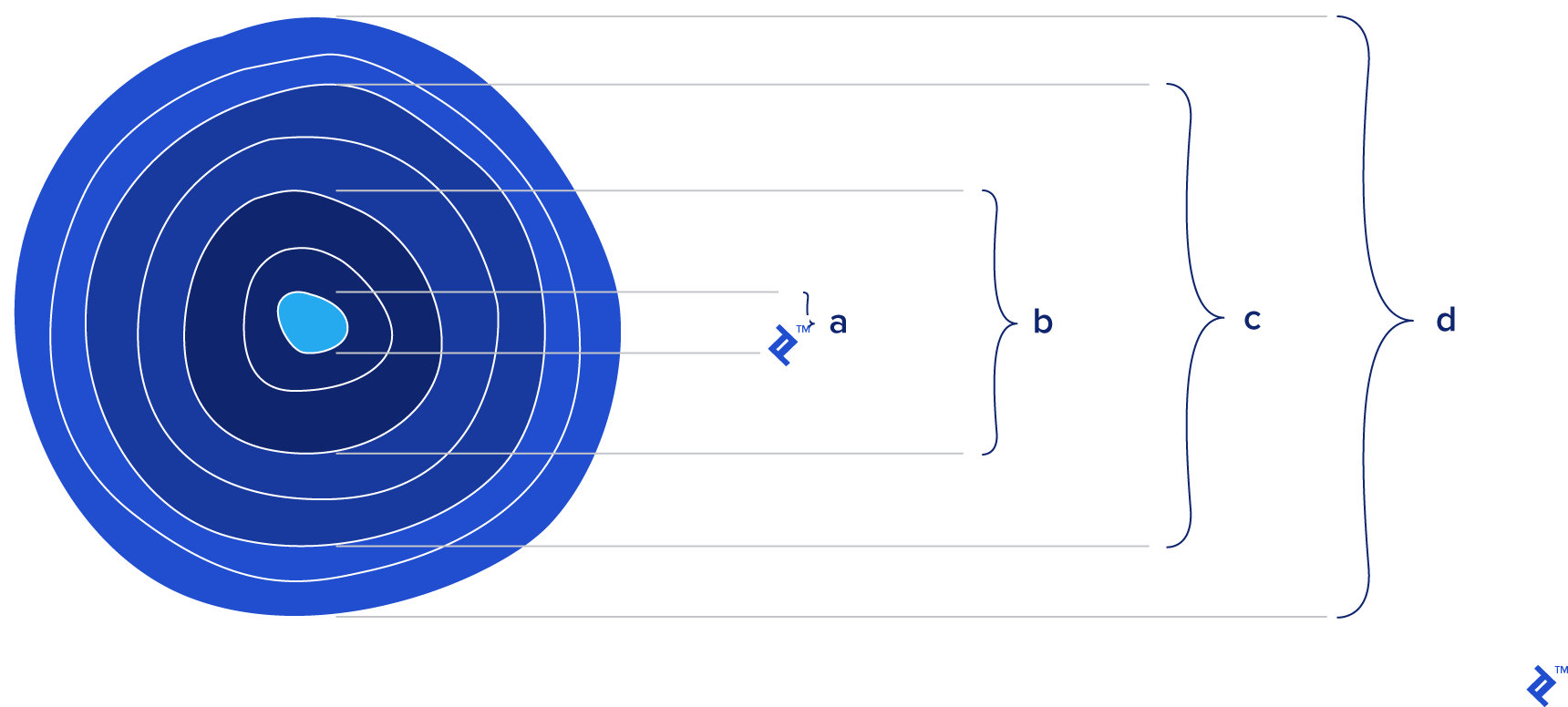

JSON as data interchange necessitates validation logic to be absorbed in the application code. Implementing the isValid(message) function within the application results in a coupling between the logical meaning of the message format—a format that is decided upon in negotiation between the producer and consumer parties of the consumer-driven contract protocol—and the functional implementation of the processing of messages. With consumer-driven contracts, the scope of data interchange expands to include validation and the processing code supporting the message variants. When seen from this perspective, JSON is the small center of an onion, whereby the onion’s layers represent the additional logic required for validation, processing, business logic, and so on.

When choosing the best data interchange format for an application, is it only the center of the onion we care about, or is it the onion as a whole?

Custom validation logic implemented in the consumer is not usable by the producer, or vice versa. Especially if systems are implemented on disparate platforms, it is not reasonable to expect that validation functions can be shared between the parties of a communication protocol. Only if two systems share the same platform, and the validation function is properly encapsulated and uncoupled from the application, could the code be shared. This unique use-case of perfect conditions is, however, unrealistic. As validation logic gets more complex, its direct integration within an application can lead to unintended (or intended) coupling, thus rendering the function unshareable. The European bank, for instance, would like to further reduce its software risk by requiring the retailers to validate all messages on their ends as well. To achieve this, the bank and the retailers would be required to implement their own versions of isValid(message). Since each party would be responsible for their own implementations, this would:

- Leave room for discrepancies in each party’s implementation.

- For each party’s system, couple the logical meaning of the message format to the technical implementation for its processing.
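
The first of these risks is easy to reproduce. In the sketch below, the bank's check uses the IBAN pattern from this article, while the retailer's check is a hypothetical independent implementation that overlooks the optional spaces; both function names are invented for illustration.

```javascript
// Bank's check: the IBAN pattern from this article, anchored to the whole string.
function bankAcceptsIban(code) {
  return /^[A-Z]{2}\d{2} ?\d{4} ?\d{4} ?\d{4} ?\d{4} ?\d{0,2}$/.test(code);
}

// Retailer's check (hypothetical): written independently, it omits the optional spaces.
function retailerAcceptsIban(code) {
  return /^[A-Z]{2}\d{2}\d{4}\d{4}\d{4}\d{4}\d{0,2}$/.test(code);
}

const spaced = "DE91 1000 0000 0123 4567 89";
const compact = spaced.replace(/ /g, "");

console.log(bankAcceptsIban(spaced), retailerAcceptsIban(spaced));   // true false
console.log(bankAcceptsIban(compact), retailerAcceptsIban(compact)); // true true
```

Both parties believe they enforce the same contract, yet the same identifier is accepted by one and rejected by the other.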

The dotted line represents the lack of a normative standard for the expression of a consumer-driven contract to functionally bind the producer and consumer. To enforce the consumer-driven contract, each party is responsible for its own implementation.

Open-source Libraries to Bridge the Gap

JSON stands out as the simple and efficient data interchange standard, but it does not provide standard patterns to help developers with the outer layers of the onion. To address the intricacies of data interchange, developers choose between two general architectural approaches:

- Implement custom code such as isValid(message) above, at the cost of functional and logical coupling.

- Integrate 3rd-party libraries implementing common solutions matching the problem, at the cost of incurring the software risk of the 3rd-party libraries.

Many open-source solutions have been developed to address common intricacies surrounding data interchange. In general, these solutions are specific, designed to address one part of the problem, but not another. OpenAPI, for instance, is an open-source specification that defines an interface description format for REST APIs.6 OpenAPI solves the discovery and specification of REST services, but the normative enforcement of consumer-driven contracts is outside its scope. GraphQL is another popular solution, a query language developed by Facebook for fulfilling queries with existing data.7 GraphQL provides a standardized way for consumers to access data from producers by exposing APIs in the data layer directly to the producer’s data interchange. For simple applications with simple data, interconnecting the data layer directly to the transport layer can save the developer a lot of boilerplate code. For enterprise systems intent on reducing the software risk in their applications, however, the GraphQL approach leads to a reduction in encapsulation, a reduction in cohesion, and an increase in coupling.

With JSON as the data interchange format, companies and individual developers are left on their own to piece together a puzzle of a collection of libraries and frameworks that fulfill the complex requirements. With each solution intended to address one part of the problem, but not another, it becomes ever so difficult for developers to find solutions that:

- Solve the complexities surrounding data interchange to satisfy the system’s requirements and specification.

- Keep encapsulation and cohesion high, and coupling low, to reduce the total software risk $\xi_N$.

Due to the non-standard nature of these solutions, projects absorb the software risk of each constituent piece of the puzzle.

Applications that rely on more external libraries carry a higher software risk than those that rely on fewer. In order to mitigate the software risk of a complex application, developers and software architects often choose to rely on standards rather than custom solutions. An application does not need to be concerned that a standard will change, break, or even cease to exist. With custom solutions, however, this is not the case. Custom solutions that integrate a mixed bag of open-source projects, for instance, absorb the risk of each constituent dependency.

The Strengths and Weaknesses of JSON

Compared to other data interchange standards, JSON stands out as the simplest and most compact human-readable format. For simple applications, developed to satisfy a simple specification, a simple data interchange is all that is necessary. However, as the requirements surrounding data interchange become more complex, the deficiencies of JSON are revealed.

Like the “divergence catastrophe” for HTML that led to the invention of XML, a similar effect is realized in complex codebases that rely on JSON for data interchange. The JSON specification does not encapsulate the immediate functionalities surrounding data interchange which can lead to fragmentation of logic in the higher layers of the application. With JSON as data interchange, the higher layers of the application are left on their own to implement the functionalities that are missing from JSON itself. By containing the complexity surrounding data interchange on the surface layers of the application, the ability to catch data-related errors is also pushed to the surface of the application. This architectural side-effect can result in the expression of data-related errors directly to the users of the application, which for the enterprise is unacceptable.

The fundamental deficiency of JSON is that it lacks a general standard for the normative description of the logical constituents of its documents.

For enterprise applications that strive to assert error-free operation, the use of JSON as data interchange leads to patterns that promote higher software risk and result in unintended obstacles that detract from the objective of high code quality, stability, and resilience to future unknowns.

JSON offers terse semantics and base simplicity for data interchange, but it has the potential to result in a system-wide reduction in encapsulation. The scope of consumer-driven contracts, or other complex requirements surrounding data interchange such as protocol versioning and content validation, must be addressed outside of JSON. With proper encapsulation, the complex requirements could be implemented in a cohesive and non-coupling manner, but this would require a dedicated architectural focus at the very beginning. To save themselves from the excessive effort required for proper encapsulation, developers often implement solutions that blur the lines between the layers, whereby the complexities surrounding the data interchange diffuse into other layers of the application and result in a progressive increase in potential error.

Developers can also fill the gap with a collection of libraries and frameworks that fulfill the higher requirements. By relying on a mixed bag of external libraries, however, applications absorb the risk of each constituent dependency. Since these solutions are specific, designed to address one part of the problem but not another, this further leads to a reduction in encapsulation, a reduction in cohesion, and an increase in coupling.

JSON is a convenient format that is great for simple applications, but its use with complex requirements surrounding data interchange invites higher software risk. JSON may seem appealing due to its base simplicity, but for enterprise systems, in particular, its use may result in undesired patterns that hamper the system’s code quality, stability, and the resilience to future unknowns.

In Part 3 of this article, we will explore XML as data interchange and evaluate its strengths and weaknesses compared to JSON. With a deeper understanding of XML’s capabilities, we will explore the future of data interchange as well as the current projects that bring the power of XML to JSON.

References

1. Douglas Crockford: The JSON Saga (Yahoo!, July 2009)

2. RFC 4627 (Crockford, July 2006)

3. Consumer-Driven Contracts: A Service Evolution Pattern (MartinFowler.com, June 2006)

4. Society for Worldwide Interbank Financial Telecommunication (Wikipedia)

Understanding the basics

What is XML and why is it important?

XML provides a way for the industry to specify, with strict semantics, custom markup languages for any application. Considered the holy grail of computing, XML remains the world’s most widely-used format for representing and exchanging information.

What is XML and its advantages?

XML is a language to specify custom markup languages and defines a schema standard to assert the integrity of data of XML documents. The XML Schema is detached from the application, allowing for the logical meaning of the message format and the functional implementation of its processing to be decoupled.

Why is JSON so popular?

JSON’s semantics map directly to JavaScript. With JavaScript’s proliferation in the last decade, JSON continues to receive increasingly more attention than any other data interchange format. Due to its ease-of-use and short learning curve, JSON has become the most accessible format for developers of every caliber.

Where is JSON used?

JSON is the native format for data interchange in JavaScript applications and is used to carry structured information in a concise manner between programs on any platform.

What is software risk, and is it avoidable?

Software risk represents the inverse of an application’s resilience to bugs and its malleability to future unknowns. All software risk is a result of coupling, either functional or logical. Each application is coupled to the context of its purpose, making software risk an unavoidable certainty.

How do you identify risks in software projects?

Software risk is proportional to the complexity of the requirements. When architecting a new application, it may be challenging to evaluate the software risk due to the limited visibility of the implementation. However, the overall system complexity can be estimated by analyzing the complexity of the requirements.

Seva Safris

Bangkok, Thailand

Member since July 17, 2014
