Automatically Update Elastic Stack with Ansible Playbooks

The Elastic Stack is constantly releasing new and amazing features, and often delivers two new releases every month. However, even though the Elastic website maintains clear and detailed documentation, every upgrade involves a series of tedious steps. That is why one engineer decided to automate the whole process.

In this article, Toptal Freelance Linux Developer Renato Araujo walks us through a series of Ansible Playbooks he developed to auto-upgrade his Elastic Stack installation.

Renato is a Linux and network system administrator with 20+ years of experience designing, deploying, and automating systems.

Log analysis for a network composed of thousands of devices used to be a complex, time-consuming, and boring task before I decided to use the Elastic Stack as the centralized logging solution. It proved to be a very wise decision. Not only do I have a single place to search for all my logs, but I get almost instantaneous results on my searches, powerful visualizations that are incredibly helpful for analysis and troubleshooting, and beautiful dashboards that give me a useful overview of the network.

The Elastic Stack keeps a very active pace of development and is constantly releasing new and amazing features, often delivering two new releases every month. I like to keep my environment up to date so that I can take advantage of the new features and improvements, and to keep it free from bugs and security issues. But this requires me to be constantly updating the environment.

Even though the Elastic website maintains clear and detailed documentation, including on the upgrade process of its products, upgrading manually is a complex task, especially for the Elasticsearch cluster. There are a lot of steps involved, and they must be followed in a very specific order. That is why I decided, long ago, to automate the whole process using Ansible Playbooks.

In this Ansible tutorial, I’ll walk you through a series of Ansible Playbooks that I developed to auto-upgrade my Elastic Stack installation.

What is the Elastic Stack

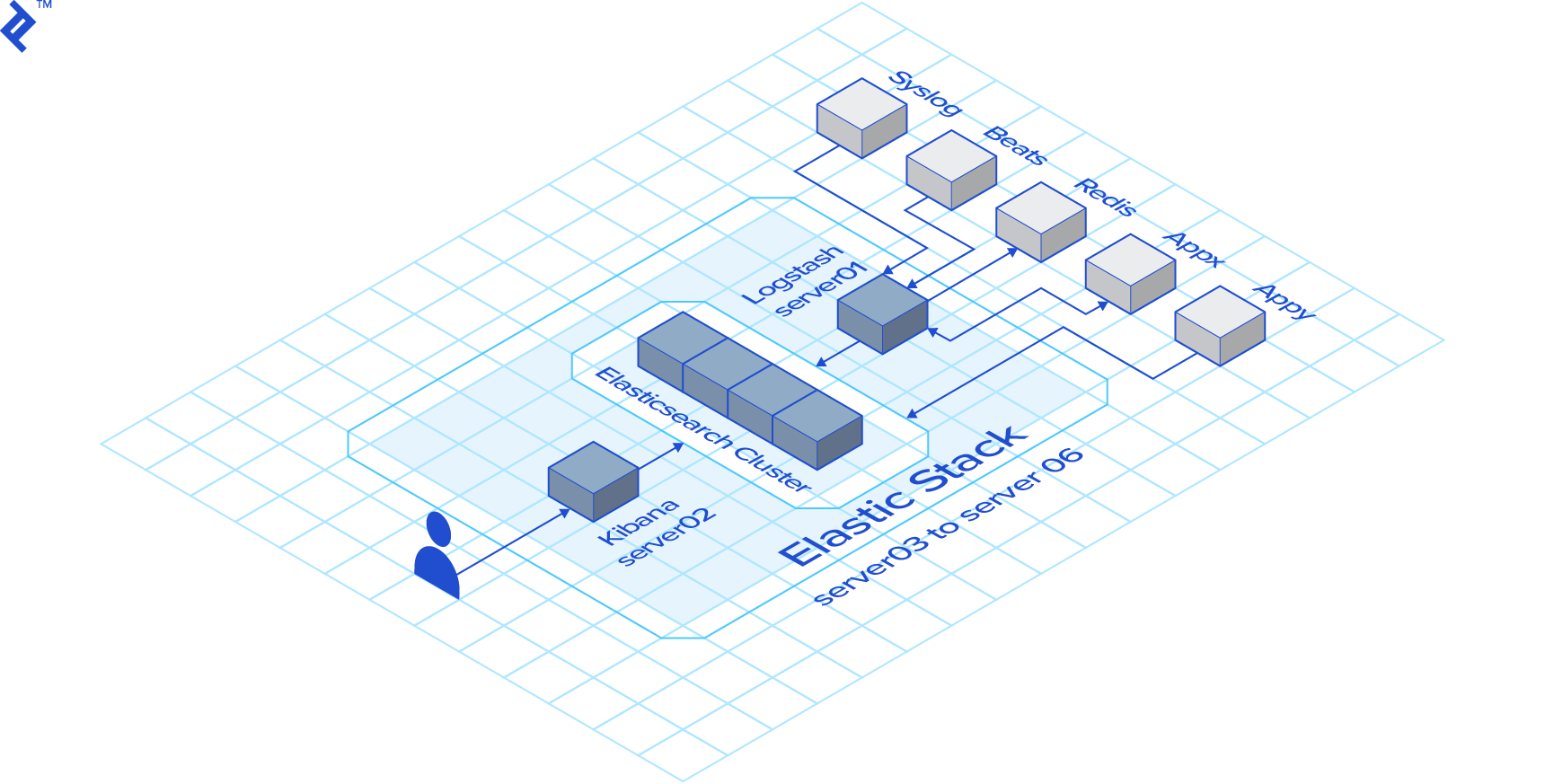

The Elastic Stack, formerly known as the ELK stack, is composed of Elasticsearch, Logstash, and Kibana, from the open-source company Elastic, which together provide a powerful platform for indexing, searching, and analyzing your data. It can be used for a broad range of applications, from logging and security analysis to application performance management and site search.

- Elasticsearch is the core of the stack. It is a distributed search and analytics engine capable of delivering near real-time search results even against a huge volume of stored data.

- Logstash is a processing pipeline that gets or receives data from many different sources (50 official input plugins as of this writing), parses, filters, and transforms it, and sends it to one or more of the possible outputs. In our case, we are interested in the Elasticsearch output plugin.

- Kibana is your user and operation frontend. It lets you visualize, search, and navigate your data and create dashboards that give you amazing insights about it.

What is Ansible

Ansible is an IT automation platform that can be used to configure systems, deploy or upgrade software and orchestrate complex IT tasks. Its main goals are simplicity and ease of use. My favorite feature of Ansible is that it is agentless, meaning that I do not need to install and manage any extra software on the hosts and devices I want to manage. We’ll be using the power of Ansible automation to auto upgrade our Elastic Stack.

Disclaimer and a Word of Caution

The playbooks that I’ll share here are based on the steps described in the official product documentation. They are meant to be used only for upgrades within the same major version, for example 5.x → 5.y or 6.x → 6.y, where x < y. Upgrades between major versions often require extra steps, and these playbooks will not work in those cases.

Regardless, always read the release notes, especially the breaking changes section, before using the playbooks to upgrade. Make sure that you understand the tasks executed in the playbooks, and always check the upgrade instructions to make sure that nothing important has changed.

Having said that, I’ve been using these playbooks (or earlier versions of them) since version 2.2 of Elasticsearch without any issues. At the time I had completely separate playbooks for each product, as they did not share the same version number as they do now.

Still, I am not responsible in any way whatsoever for your use of the information contained in this article.

Our Fictitious Environment

The environment against which our playbooks will run will consist of 6 CentOS 7 servers:

- 1 x Logstash Server

- 1 x Kibana Server

- 4 x Elasticsearch nodes

It does not matter if your environment has a different number of servers. You can simply reflect it in the inventory file and the playbooks should run without a problem. If, however, you are not using a RHEL-based distribution, I’ll leave it as an exercise for you to change the few tasks that are distribution specific (mainly the package manager tasks).

The Inventory

Ansible needs an inventory to know against which hosts it should run the playbooks. For our imaginary scenario we are going to use the following inventory file:

    [logstash]
    server01 ansible_host=10.0.0.1

    [kibana]
    server02 ansible_host=10.0.0.2

    [elasticsearch]
    server03 ansible_host=10.0.0.3
    server04 ansible_host=10.0.0.4
    server05 ansible_host=10.0.0.5
    server06 ansible_host=10.0.0.6

In an Ansible inventory file, any [section] represents a group of hosts. Our inventory has 3 groups of hosts: logstash, kibana, and elasticsearch. You will notice that I only use the group names in the playbooks. That means the number of hosts in the inventory does not matter; as long as the groups are correct, the playbooks will run.
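
For readers who prefer YAML, the same three groups can also be expressed in Ansible's YAML inventory format. This is only an equivalent sketch of the INI inventory above; the file name inventory.yml is an assumption, and the playbooks do not care which format is used.

```yaml
# inventory.yml (hypothetical file name)
# Same hosts and groups as the INI inventory, in YAML inventory format.
all:
  children:
    logstash:
      hosts:
        server01:
          ansible_host: 10.0.0.1
    kibana:
      hosts:
        server02:
          ansible_host: 10.0.0.2
    elasticsearch:
      hosts:
        server03:
          ansible_host: 10.0.0.3
        server04:
          ansible_host: 10.0.0.4
        server05:
          ansible_host: 10.0.0.5
        server06:
          ansible_host: 10.0.0.6
```

It would be passed to ansible-playbook the same way, with -i inventory.yml.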

The Upgrade Process

The upgrade process will consist of the following steps:

1) Pre-download the packages

2) Logstash Upgrade

3) Rolling Upgrade of the Elasticsearch cluster

4) Kibana Upgrade

The main goal is to minimize downtime. Most of the time the user will not even notice. Sometimes Kibana might be unavailable for a few seconds. That is acceptable to me.

Main Ansible Playbook

The upgrade process consists of a set of different playbooks. I’ll be using the import_playbook feature of Ansible to organize all the playbooks in one main playbook file that can be called to take care of the whole process.

    - name: pre-download
      import_playbook: pre-download.yml

    - name: logstash-upgrade
      import_playbook: logstash-upgrade.yml

    - name: elasticsearch-rolling-upgrade
      import_playbook: elasticsearch-rolling-upgrade.yml

    - name: kibana-upgrade
      import_playbook: kibana-upgrade.yml

Fairly simple. It is just a way to organize the execution of the playbooks in one specific order.

Now, let’s consider how we would use the Ansible playbook example above. I’ll explain how we implement it later, but this is the command I would execute to upgrade to version 6.5.4:

    $ ansible-playbook -i inventory -e elk_version=6.5.4 main.yml

Pre-download the Packages

This first step is actually optional. The reason I use it is that I consider it generally good practice to stop a running service before upgrading it. Now, if you have a fast Internet connection, the time your package manager takes to download the package might be negligible. But that is not always the case, and I want to minimize the amount of time that any service is down. That is why my first playbook uses yum to pre-download all the packages. That way, when the upgrade time comes, the download step has already been taken care of.

    - hosts: logstash
      gather_facts: no
      tasks:
        - name: Validate logstash Version
          fail: msg="Invalid ELK Version"
          when: elk_version is undefined or not elk_version is match("\d+\.\d+\.\d+")

        - name: Get logstash current version
          command: rpm -q logstash --qf %{VERSION}
          args:
            warn: no
          changed_when: False
          register: version_found

        - name: Pre-download logstash install package
          yum:
            name: logstash-{{ elk_version }}
            download_only: yes
          when: version_found.stdout is version_compare(elk_version, '<')

The first line indicates that this play will only apply to the logstash group. The second line tells Ansible not to bother collecting facts about the hosts. This will speed up the play, but make sure that none of the tasks in the play will need any facts about the host.

The first task in the play validates the elk_version variable. This variable represents the version of the Elastic Stack we are upgrading to, and it is passed when you invoke the ansible-playbook command. If the variable is not passed or is not in a valid format, the play will bail out immediately. This task is the first task in all the plays, so that each play can be executed in isolation if desired or necessary.

The second task uses the rpm command to get the current version of Logstash and registers it in the variable version_found. That information is used in the next task. The lines args:, warn: no and changed_when: False are there to make ansible-lint happy, but are not strictly necessary.

The final task executes the command that actually pre-downloads the package, but only if the installed version of Logstash is older than the target version. There is no point in downloading an older or identical version if it will not be used.
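
As a variation on the validation task, the assert module can express the same check with one condition per line. This is a sketch rather than what the playbooks in this article use. It assumes Ansible 2.7 or later for fail_msg, and it anchors the pattern with $ so that a value with trailing characters (for example 6.5.4-rc1) is rejected; the match test only anchors at the start of the string, so the unanchored pattern would accept it.

```yaml
- name: Validate ELK version
  assert:
    that:
      # Each condition must be true, otherwise the play stops here
      - elk_version is defined
      - elk_version is match('^\d+\.\d+\.\d+$')
    fail_msg: "Invalid ELK version, expected something like 6.5.4"
```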

The other two plays are essentially the same, except that instead of Logstash they will pre-download Elasticsearch and Kibana:

    - hosts: elasticsearch
      gather_facts: no
      tasks:
        - name: Validate elasticsearch Version
          fail: msg="Invalid ELK Version"
          when: elk_version is undefined or not elk_version is match("\d+\.\d+\.\d+")

        - name: Get elasticsearch current version
          command: rpm -q elasticsearch --qf %{VERSION}
          args:
            warn: no
          changed_when: False
          register: version_found

        - name: Pre-download elasticsearch install package
          yum:
            name: elasticsearch-{{ elk_version }}
            download_only: yes
          when: version_found.stdout is version_compare(elk_version, '<')

    - hosts: kibana
      gather_facts: no
      tasks:
        - name: Validate kibana Version
          fail: msg="Invalid ELK Version"
          when: elk_version is undefined or not elk_version is match("\d+\.\d+\.\d+")

        - name: Get kibana current version
          command: rpm -q kibana --qf %{VERSION}
          args:
            warn: no
          changed_when: False
          register: version_found

        - name: Pre-download kibana install package
          yum:
            name: kibana-{{ elk_version }}
            download_only: yes
          when: version_found.stdout is version_compare(elk_version, '<')
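
Since the three pre-download plays differ only in the package name, they could in principle be folded into a single play. The sketch below is an alternative, not one of the playbooks from this article. It assumes every host belongs to exactly one of the three groups, so that group_names[0] is also the name of the package to download. The variable elk_package is hypothetical and introduced only for illustration.

```yaml
- hosts: logstash:elasticsearch:kibana
  gather_facts: no
  vars:
    # Assumption: each host is in exactly one group, named after its package
    elk_package: "{{ group_names[0] }}"
  tasks:
    - name: Validate ELK version
      fail: msg="Invalid ELK Version"
      when: elk_version is undefined or not elk_version is match("\d+\.\d+\.\d+")

    - name: Get current package version
      command: rpm -q {{ elk_package }} --qf %{VERSION}
      changed_when: False
      register: version_found

    - name: Pre-download install package
      yum:
        name: "{{ elk_package }}-{{ elk_version }}"
        download_only: yes
      # Only download when the installed version is older than the target
      when: version_found.stdout is version_compare(elk_version, '<')
```

The trade-off is that a host placed in more than one group would break the package lookup, which the three explicit plays avoid.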

Logstash Upgrade

Logstash should be the first component to be upgraded. That is because Logstash is guaranteed to work with an older version of Elasticsearch.

The first tasks of the play are identical to the pre-download counterpart:

    - name: Upgrade logstash
      hosts: logstash
      gather_facts: no
      tasks:
        - name: Validate ELK Version
          fail: msg="Invalid ELK Version"
          when: elk_version is undefined or not elk_version is match("\d+\.\d+\.\d+")

        - name: Get logstash current version
          command: rpm -q logstash --qf %{VERSION}
          changed_when: False
          register: version_found

The two final tasks are contained in a block:

        - block:
            - name: Update logstash
              yum:
                name: logstash-{{ elk_version }}
                state: present

            - name: Restart logstash
              systemd:
                name: logstash
                state: restarted
                enabled: yes
                daemon_reload: yes
          when: version_found.stdout is version_compare(elk_version, '<')

The conditional when guarantees that the tasks in the block will only be executed if the target version is newer than the current version. The first task inside the block performs the Logstash upgrade and the second task restarts the service.
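
To see why a version-aware test is used here instead of a plain string comparison, a throwaway play like the following can be run against localhost. It is a minimal sketch: the two version strings are made up for illustration, and version is the newer name (Ansible 2.5 and later) for the version_compare test used in this article.

```yaml
- hosts: localhost
  gather_facts: no
  tasks:
    - name: Version-aware comparison (10 is greater than 5)
      debug:
        msg: "{{ '6.5.4' is version('6.10.0', '<') }}"

    - name: Plain string comparison of the same pair
      debug:
        msg: "{{ '6.5.4' < '6.10.0' }}"
```

The first expression evaluates to true, while the second evaluates to false because the strings are compared character by character and "5" sorts after "1".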

Elasticsearch Cluster Rolling Upgrade

To make sure that there will be no downtime to the Elasticsearch cluster we must perform a rolling upgrade. This means that we will upgrade one node at a time, only starting the upgrade of any node after we make sure that the cluster is in a green state (fully healthy).

From the start of the play you will notice something different:

    - name: Elasticsearch rolling upgrade
      hosts: elasticsearch
      gather_facts: no
      serial: 1

Here we have the line serial: 1. The default behavior of Ansible is to execute the play against multiple hosts in parallel, with the number of simultaneous hosts defined in the configuration. This line makes sure that the play will be executed against only one host at a time.
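
The effect of serial can be observed with a harmless play before trusting it with a real upgrade. The following is a sketch that only prints messages; it assumes the elasticsearch group from the inventory in this article and uses the ansible_play_batch variable, which lists the hosts in the batch currently being processed.

```yaml
- name: Show the effect of serial
  hosts: elasticsearch
  gather_facts: no
  serial: 1
  tasks:
    - name: Report the host handled in this batch
      debug:
        msg: "Now processing {{ inventory_hostname }}; hosts in this batch: {{ ansible_play_batch }}"
```

With serial: 1, each batch should contain a single host, and the whole task list finishes on that host before the next one starts.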

Next, we define a few variables to be used along the play:

      vars:
        es_disable_allocation: '{"transient":{"cluster.routing.allocation.enable":"none"}}'
        es_enable_allocation: '{"transient":{"cluster.routing.allocation.enable": "all","cluster.routing.allocation.node_concurrent_recoveries": 5,"indices.recovery.max_bytes_per_sec": "500mb"}}'
        es_http_port: 9200
        es_transport_port: 9300

The meaning of each variable will become clear as it appears in the play.

As always the first task is to validate the target version:

      tasks:
        - name: Validate ELK Version
          fail: msg="Invalid ELK Version"
          when: elk_version is undefined or not elk_version is match("\d+\.\d+\.\d+")

Many of the following tasks consist of executing REST calls against the Elasticsearch cluster. A call can be executed against any of the nodes. You could simply execute it against the current host in the play, but some of the commands will be executed while the Elasticsearch service is down on the current host. So, in the next tasks, we make sure to select a different host to run the REST calls against. For this, we will use the set_fact module and the groups variable from the Ansible inventory.

        - name: Set the es_host for the first host
          set_fact:
            es_host: "{{ groups.elasticsearch[1] }}"
          when: "inventory_hostname == groups.elasticsearch[0]"

        - name: Set the es_host for the remaining hosts
          set_fact:
            es_host: "{{ groups.elasticsearch[0] }}"
          when: "inventory_hostname != groups.elasticsearch[0]"
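
The same selection can also be written as a single task. This is a sketch of an alternative, not the form used in the playbooks: it removes the current host from the group with the difference filter and takes the first remaining node. Like the two tasks above, it assumes the cluster has at least two nodes.

```yaml
- name: Pick another cluster node for the REST calls
  set_fact:
    # Any node other than the one currently being upgraded will do
    es_host: "{{ groups.elasticsearch | difference([inventory_hostname]) | first }}"
```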

Next, we make sure that the service is up in the current node before continuing:

        - name: Ensure elasticsearch service is running
          systemd:
            name: elasticsearch
            enabled: yes
            state: started
          register: response

        - name: Wait for elasticsearch node to come back up if it was stopped
          wait_for:
            port: "{{ es_transport_port }}"
            delay: 45
          when: response.changed == true

As in the previous plays, we will check the current version. This time, however, we will use the Elasticsearch REST API instead of running rpm. We could also have used the rpm command, but I want to show this alternative.

        - name: Check current version
          uri:
            url: http://localhost:{{ es_http_port }}
            method: GET
          register: version_found
          retries: 10
          delay: 10

The remaining tasks are inside a block that will only be executed if the current version is older than the target version:

        - block:
            - name: Enable shard allocation for the cluster
              uri:
                url: http://localhost:{{ es_http_port }}/_cluster/settings
                method: PUT
                body_format: json
                body: "{{ es_enable_allocation }}"

Now, if you followed my advice and read the documentation, you will have noticed that this step should be the opposite: to disable shard allocation. I like to put this task here first in case shard allocation was disabled earlier for some reason. This is important because the next task will wait for the cluster to become green. If shard allocation is disabled, the cluster will stay yellow and the task will hang until it times out.

So, after making sure that shard allocation is enabled we make sure that the cluster is in a green state:

            - name: Wait for cluster health to return to green
              uri:
                url: http://localhost:{{ es_http_port }}/_cluster/health
                method: GET
              register: response
              until: "response.json.status == 'green'"
              retries: 500
              delay: 15

After a node service restart, the cluster can take a long time to return to green. That’s the reason for the lines retries: 500 and delay: 15. It means we will wait 125 minutes (500 x 15 seconds) for the cluster to return to green. You might need to adjust that if your nodes hold a really huge amount of data. For the majority of cases, it is way more than enough.
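
If the timeout needs tuning per environment, the two numbers could be lifted into variables so that they can be overridden with -e on the command line. The names es_health_retries and es_health_delay are hypothetical and not part of the original playbooks. With the defaults shown, the maximum wait stays at 500 x 15 seconds = 7,500 seconds, or 125 minutes.

```yaml
- name: Health check with a configurable timeout (sketch)
  hosts: elasticsearch
  gather_facts: no
  serial: 1
  vars:
    es_http_port: 9200
    es_health_retries: 500   # hypothetical: number of polls before giving up
    es_health_delay: 15      # hypothetical: seconds between polls
  tasks:
    - name: Wait for cluster health to return to green
      uri:
        url: http://localhost:{{ es_http_port }}/_cluster/health
        method: GET
      register: response
      until: "response.json.status == 'green'"
      retries: "{{ es_health_retries }}"
      delay: "{{ es_health_delay }}"
```

For example, passing -e es_health_retries=1000 would double the maximum wait without editing the playbook.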

Now we can disable the shard allocation:

            - name: Disable shard allocation for the cluster
              uri:
                url: http://localhost:{{ es_http_port }}/_cluster/settings
                method: PUT
                body_format: json
                body: "{{ es_disable_allocation }}"

And before shutting down the service, we execute the optional, yet recommended, sync flush. It is not uncommon to get a 409 error for some of the indices when we perform a sync flush. Since this is safe to ignore I added 409 to the list of success status codes.

            - name: Perform a synced flush
              uri:
                url: http://localhost:{{ es_http_port }}/_flush/synced
                method: POST
                status_code: "200, 409"
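
The uri module also accepts status_code as a YAML list, which some readers may find clearer than the comma-separated string. A sketch of the same task in that form, with no change in behavior intended:

```yaml
- name: Perform a synced flush
  uri:
    url: http://localhost:{{ es_http_port }}/_flush/synced
    method: POST
    # 409 is tolerated because some indices may reject the synced flush
    status_code: [200, 409]
```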

Now, this node is ready to be upgraded:

            - name: Shutdown elasticsearch node
              systemd:
                name: elasticsearch
                state: stopped

            - name: Update elasticsearch
              yum:
                name: elasticsearch-{{ elk_version }}
                state: present

With the service stopped we wait for all shards to be allocated before starting the node again:

            - name: Wait for all shards to be reallocated
              uri: url=http://{{ es_host }}:{{ es_http_port }}/_cluster/health method=GET
              register: response
              until: "response.json.relocating_shards == 0"
              retries: 20
              delay: 15

After the shards are reallocated we restart the Elasticsearch service and wait for it to be completely ready:

            - name: Start elasticsearch
              systemd:
                name: elasticsearch
                state: restarted
                enabled: yes
                daemon_reload: yes

            - name: Wait for elasticsearch node to come back up
              wait_for:
                port: "{{ es_transport_port }}"
                delay: 35

            - name: Wait for elasticsearch http to come back up
              wait_for:
                port: "{{ es_http_port }}"
                delay: 5

Now we make sure that the cluster is yellow or green before reenabling shard allocation:

            - name: Wait for cluster health to return to yellow or green
              uri:
                url: http://localhost:{{ es_http_port }}/_cluster/health
                method: GET
              register: response
              until: "response.json.status == 'yellow' or response.json.status == 'green'"
              retries: 500
              delay: 15

            - name: Enable shard allocation for the cluster
              uri:
                url: http://localhost:{{ es_http_port }}/_cluster/settings
                method: PUT
                body_format: json
                body: "{{ es_enable_allocation }}"
              register: response
              until: "response.json.acknowledged == true"
              retries: 10
              delay: 15

And we wait for the node to fully recover before processing the next one:

            - name: Wait for the node to recover
              uri:
                url: http://localhost:{{ es_http_port }}/_cat/health
                method: GET
                return_content: yes
              register: response
              until: "'green' in response.content"
              retries: 500
              delay: 15

Of course, as I said before, this block should only be executed if we are really upgrading the version:

          when: version_found.json.version.number is version_compare(elk_version, '<')

Kibana Upgrade

The last component to be upgraded is Kibana.

As you might expect, the first tasks are not different from the Logstash upgrade or the pre-download plays. Except for the definition of one variable:

    - name: Upgrade kibana
      hosts: kibana
      gather_facts: no
      vars:
        set_default_index: '{"changes":{"defaultIndex":"syslog"}}'
      tasks:
        - name: Validate ELK Version
          fail: msg="Invalid ELK Version"
          when: elk_version is undefined or not elk_version is match("\d+\.\d+\.\d+")

        - name: Get kibana current version
          command: rpm -q kibana --qf %{VERSION}
          args:
            warn: no
          changed_when: False
          register: version_found

I’ll explain the set_default_index variable when we get to the task that uses it.

The remaining tasks are inside a block that will only execute if the installed version of Kibana is older than the target version. The first two tasks update and restart Kibana:

            - name: Update kibana
              yum:
                name: kibana-{{ elk_version }}
                state: present

            - name: Restart kibana
              systemd:
                name: kibana
                state: restarted
                enabled: yes
                daemon_reload: yes

And for Kibana that should have been enough. Unfortunately, for some reason, Kibana loses the reference to its default index pattern after the upgrade. This causes it to ask the first user who accesses it after the upgrade to define the default index pattern, which may cause confusion. To avoid this, make sure to include a task to reset the default index pattern. In the example below, it is syslog, but you should change it to whatever you use. Before setting the index, though, we have to make sure that Kibana is up and ready to serve requests:

            - name: Wait for kibana to start listening
              wait_for:
                port: 5601
                delay: 5

            - name: Wait for kibana to be ready
              uri:
                url: http://localhost:5601/api/kibana/settings
                method: GET
              register: response
              until: "'kbn_name' in response and response.status == 200"
              retries: 30
              delay: 5

            - name: Set Default Index
              uri:
                url: http://localhost:5601/api/kibana/settings
                method: POST
                body_format: json
                body: "{{ set_default_index }}"
                headers:
                  "kbn-version": "{{ elk_version }}"

Conclusion

The Elastic Stack is a valuable tool and I definitely recommend that you take a look if you don’t use it yet. It is great as it is and is constantly improving, so much so that it might be hard to keep up with the constant upgrades. I hope that these Ansible Playbooks will be as useful for you as they are for me.

I made them available on GitHub at https://github.com/orgito/elk-upgrade. I recommend that you test them in a non-production environment.

If you’re a Ruby on Rails developer looking to incorporate Elasticsearch in your app, check out Elasticsearch for Ruby on Rails: A Tutorial to the Chewy Gem by Core Toptal Software Engineer Arkadiy Zabazhanov.

Understanding the basics

What is Elastic Stack?

The Elastic Stack is a set of products from the open-source company Elastic. The main components of the stack are Logstash, Kibana, and Elasticsearch. Together they provide a complete and powerful solution for collecting, parsing, visualizing, and analyzing huge amounts of data in real-time.

What is Elasticsearch?

Elasticsearch is the flagship product from Elastic and the core of the Elastic Stack. It is a powerful search and analytics engine that stores your data and provides almost instantaneous search results.

What is Kibana?

Kibana is the front end of the Elastic Stack. It is a data visualization web app that provides easy access to the powerful search capabilities of Elasticsearch and allows you to create beautiful and useful dashboards.

What is Ansible?

Ansible is an IT automation tool that’s used for provisioning, automation management, deployments, and pretty much any complex and repetitive IT task. It is agentless, requiring only SSH access and Python to be present in the nodes it manages.

Renato Araujo

Serra - ES, Brazil

Member since November 7, 2018
