Building a Data Warehouse Data Quality Process

Data quality is a crucial element of any successful data warehouse solution. As the complexity of data warehouses increases, so does the need for data quality processes.

In this article, Toptal Data Quality Developer Alexander Hauskrecht outlines how you can ensure a high degree of data quality and why this process is so important.

Alexander has more than 20 years of experience in data warehousing in various roles and environments. He has extensive experience in insurance and banking.

Data quality in data warehouse systems is getting more and more important. Increasing regulatory requirements, but also the growing complexity of data warehouse solutions, force companies to intensify (or start) a data quality (DQ) initiative.

This article’s main focus will be on “traditional” data warehousing, but data quality is also an issue in more “modern” concepts such as data lakes. It will show some main points to consider and some common pitfalls to avoid when implementing a data quality strategy. It does not cover how to choose the right technology/tool to build a DQ framework.

One of the most obstructive problems of a DQ project is the fact that at first sight, it creates a lot of work for the business units without providing any extra functionality. A data quality initiative usually only has strong proponents if:

- There are data quality issues with a severe impact on the business.

- Regulatory bodies enforce data quality standards (e.g., BCBS 239 in the finance industry).

DQ’s treatment is similar to that of testing in software development—if a project runs out of time and/or budget, this part tends to be reduced first.

This, of course, is not the whole truth. A good data quality system helps detect errors early, thus speeding up the process of delivering data of “good enough” quality to the users.

Definition of Terms

Before discussing the topic, a common understanding of the terms used is important.

Data Warehouse (DWH)

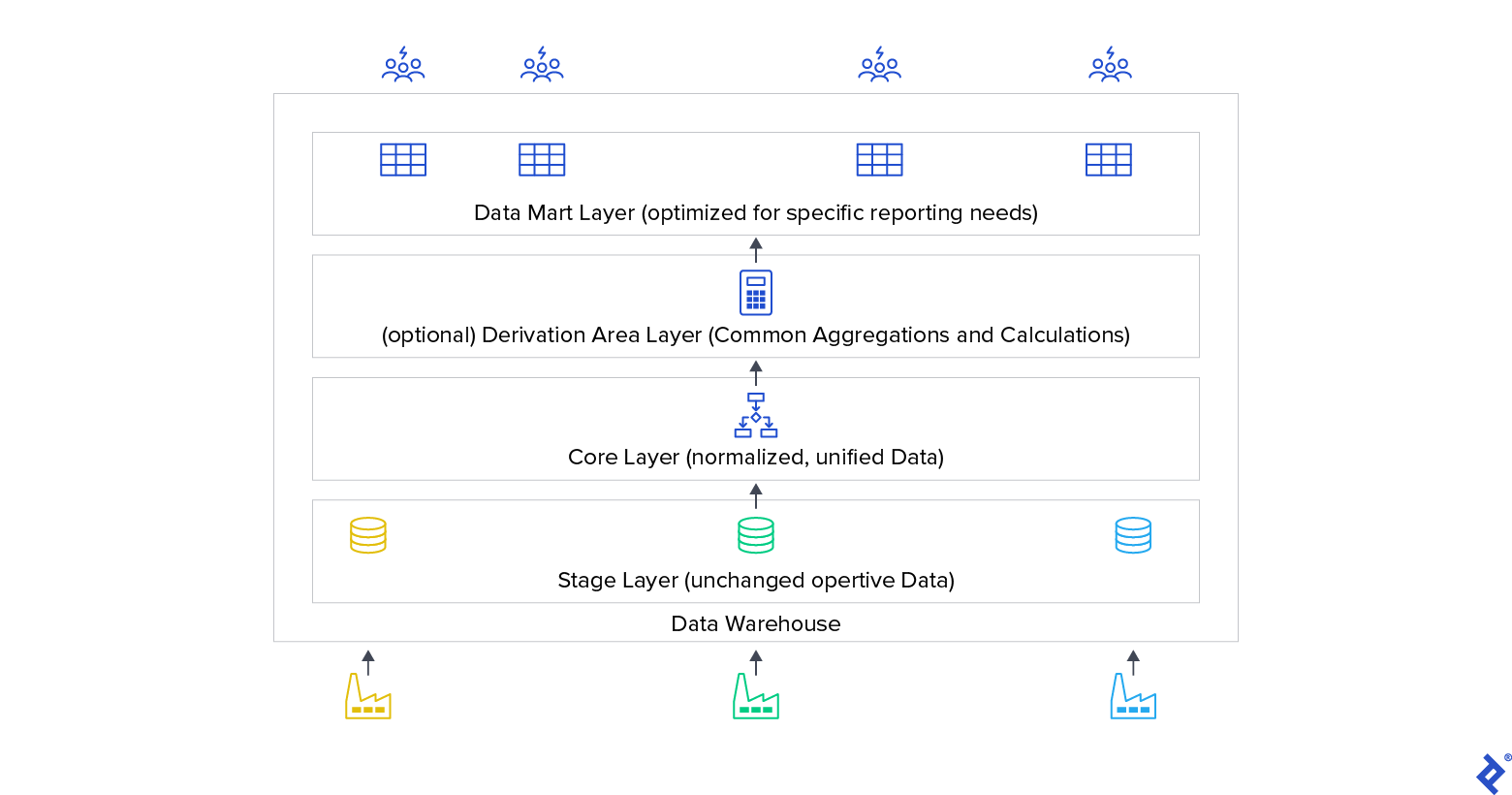

A data warehouse (DWH) is a non-operational system mainly used for decision support. It consolidates the data of the operational systems (all of them or a smaller subset) and provides query-optimized data for the users of the DWH system. The data warehouse should provide “a single version of truth” within the enterprise. A data warehouse is usually built of stages/layers:

The operational data is stored mostly unchanged into a staging layer. The core layer contains consolidated and unified data. The next optional stage is a derivation area, providing derived data (for example, a customer score for sales) and aggregations. The data mart layer contains data optimized for a given group of users. Data marts often contain aggregations and lots of derived metrics. Data warehouse users often work only with the data mart layer.

Between each stage, some kind of data transformation takes place. Usually, a data warehouse is periodically loaded with delta extractions of the operational data and contains algorithms to keep historical data.

Data Quality

Data quality is usually defined as a metric on how well a product meets user requirements. Different users might have different requirements for a product so the data quality implementation depends on the user’s perspective, and it is important to identify these needs.

Data quality does not mean the data has to be completely or almost error-free; it depends on the users’ requirements. A “good enough” approach is a good choice to start with. Nowadays, bigger companies have “a data (or information) governance policy,” and data quality is a part of it. A data governance policy should describe how your company deals with data and how it makes sure that data has the right quality and that data privacy rules are not violated.

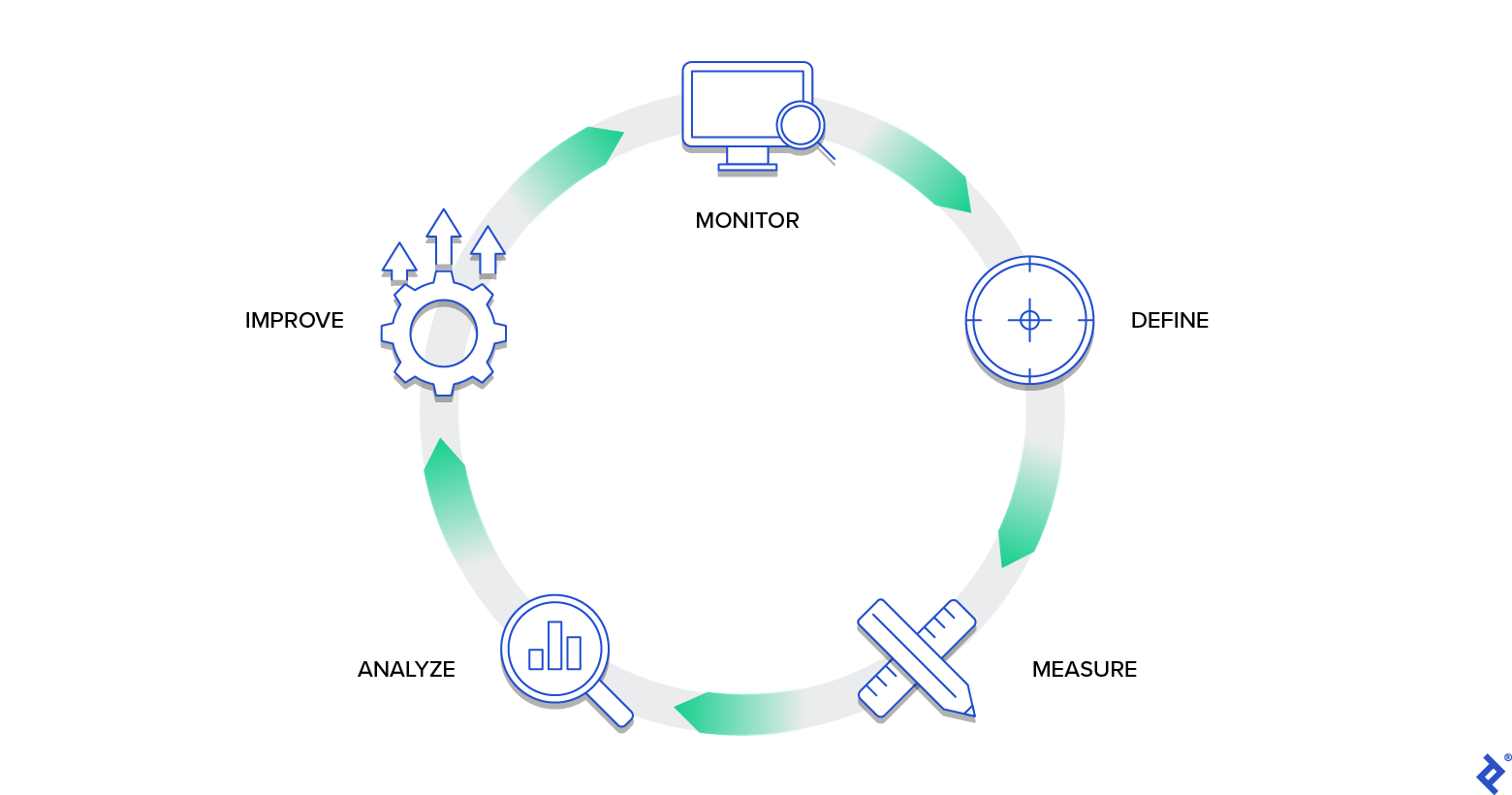

Data quality is an ongoing topic. A DQ circuit loop has to be implemented (see next chapter). Regulatory requirements and compliance rules also affect the level of data quality needed: privacy rules such as TCPA (US Telephone Consumer Protection Act) or GDPR in Europe, but also industry-specific rules like Solvency II for insurers in the EU, BCBS 239 and others for banking, and so on.

Data Quality Circuit Loop

As with all quality topics, DQ is an ongoing activity designed to maintain satisfactory quality. As a result of a DQ project, a circuit loop similar to the one below has to be implemented:

The steps within this loop will be described in the next chapters.

Data Quality Roles

Next, we will see how to improve data quality in data warehouse contexts as well as how to implement a successful DQ initiative. To do this, the following roles are needed:

- Data Owner. A data owner is responsible for data quality, but also for data privacy protection. The data owner “owns” a data domain, controls access, and is responsible for assuring data quality and taking action to fix findings. In larger organizations, it’s common to find several data owners. Data domains could be, for example, marketing data, controlling data, etc. If more than one data owner exists in a company, there should be one person (a data owner or someone else) responsible for the overall data quality process. A data owner should have a strong authority to enforce data quality and support the DQ process; therefore, data owners are often senior stakeholders. A good understanding of the business domain along with good communication skills are important.

- Data Steward. A data steward helps implement data quality within an enterprise, supporting data users on questions about how to interpret data/the data model, data quality issues, etc. Data stewards are often the data owner’s staff or can be organized in a data quality competence center or a DQ team. Data stewards can have an IT or business background but should know both sides. Analytical skills along with a good understanding of the business domain they support, combined with strong communication skills, are chief prerequisites for a successful data steward.

- Data User. These are data warehouse users working with data. Data users typically work with the data mart layer and are responsible for work results with the data. Data users make sure there are adequate data quality checks for the quality level they need. Data users need a strong understanding of their data, business domain, and the required analytical skills to interpret data. It is reasonable to find a few people among data users in every business unit who will be responsible for data quality issues.

To ensure success, it is important to have these roles clearly defined and widely accepted within your organization in the early stages of your DQ project. It is equally important to find competent data specialists for these roles who support the project.

Defining DQ Rules in Data Warehousing

The data quality/data warehouse relationship is quite strong as defining the rules of the former requires a good understanding of the latter.

How to Find DQ Rules?

As discussed earlier, data users (and the data owner) are responsible for data use and therefore also for the needed level of data quality. Data users should have a good understanding of their data so they can give the best input for useful data quality rules.

They are also the ones who analyze the results of the data quality rules, so it is always a good idea to let them define their own rules. This further enhances the acceptance to check and rate the result of the DQ rules assigned to a data user unit (see “Analyze” chapter).

The drawback of this approach is that data users normally only know the data mart layer, not the earlier layers of the data warehouse. If data was corrupted in the “lower” stages, this won’t be detected by checking just the “top” layer of your data warehouse.

Tackling Errors

What kind of known errors might occur in a data warehouse?

- Wrong transformation logic in the data warehouse. The more complex your IT landscape, the more complex the transformation logic tends to be. These are the most common DQ problems, and the effect of such errors can be “lost” data, duplicates, incorrect values, etc.

- Unstable load process or wrong handling of loads. The load of a data warehouse can be a complex process that might include errors in the definition of the job orchestration (jobs starting too early or too late, jobs not executed, etc.). Errors due to manual intervention (e.g., some jobs are skipped, some jobs are started with the wrong due date or with the data files of yesterday) happen often when the load process is run out of band due to some disruption.

- Wrong data transfer of data sources. Data transfer is often implemented as a task of the source system. Anomalies or disruption in the job flows might cause the delivery of empty or incomplete data.

- Wrong operational data. The data in the operational system contains errors not recognized so far. It may sound strange, but it is a platitude in data warehouse projects that the quality of the operational data is often not seen until the data is included in a DWH.

- Misinterpretation of data. The data is correct, but users don’t know how to interpret it right. This is a very common “error” that is not strictly a data quality issue but something that has to do with data governance and is a task for the data stewards.

These problems are often caused by people lacking the appropriate know-how and skills to define, implement, run, and work with a data warehouse solution.

Data Quality Dimensions

DQ dimensions are a common way to identify and cluster DQ checks. There are many definitions, and the number of dimensions varies considerably: You might find 16, or even more dimensions. From a practical perspective, it is less confusing to start with a few dimensions and find a general understanding of them among your users.

- Completeness: Is all the data required available and accessible? Are all sources needed available and loaded? Was data lost between stages?

- Consistency: Is there erroneous/conflicting/inconsistent data? For example, the termination date of a contract in a “Terminated” state must contain a valid date that is on or after the start date of the contract.

- Uniqueness: Are there any duplicates?

- Integrity: Is all data linked correctly? For example, are there orders linking to nonexistent customer IDs (a classic referential integrity problem)?

- Timeliness: Is the data current? For example, in a data warehouse with daily updates, I would expect yesterday’s data available today.
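As an illustration of how some of these dimensions can translate into executable checks, here is a minimal sketch using Python’s built-in sqlite3 module. The tables, columns, and sample rows are invented for this example and are not taken from any real warehouse. Each query counts the rows (or keys) that violate one rule. Completeness is left out here because it is usually checked by comparing layers.

```python
import sqlite3

# Hypothetical demo schema; all table and column names are assumptions.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customer (customer_id INTEGER, name TEXT);
    CREATE TABLE contract (
        contract_id INTEGER, customer_id INTEGER, status TEXT,
        start_date TEXT, termination_date TEXT, load_date TEXT
    );
    INSERT INTO customer VALUES (1, 'Ada'), (2, 'Bob');
    INSERT INTO contract VALUES
        (10, 1, 'Active',     '2023-01-01', NULL,         '2024-05-01'),
        (11, 2, 'Terminated', '2023-03-01', '2023-02-01', '2024-05-01'),
        (11, 2, 'Terminated', '2023-03-01', '2023-02-01', '2024-05-01'),
        (12, 9, 'Active',     '2023-06-01', NULL,         '2024-05-01');
""")

# One query per dimension; each returns the number of violations.
CHECKS = {
    # Terminated contracts need a termination date on or after the start date.
    "consistency": """
        SELECT COUNT(*) FROM contract
        WHERE status = 'Terminated'
          AND (termination_date IS NULL OR termination_date < start_date)
    """,
    # Number of contract IDs that occur more than once.
    "uniqueness": """
        SELECT COUNT(*) FROM (
            SELECT contract_id FROM contract
            GROUP BY contract_id HAVING COUNT(*) > 1
        ) AS dup
    """,
    # Contracts pointing to a customer ID that does not exist.
    "integrity": """
        SELECT COUNT(*) FROM contract c
        LEFT JOIN customer cu ON cu.customer_id = c.customer_id
        WHERE cu.customer_id IS NULL
    """,
    # 1 if the newest load is older than expected (or missing), else 0.
    "timeliness": """
        SELECT COUNT(*) FROM (
            SELECT MAX(load_date) AS last_load FROM contract
        ) AS t
        WHERE t.last_load IS NULL OR t.last_load < :expected_load_date
    """,
}


def run_checks(connection, expected_load_date):
    """Run every check and return {dimension: number of violations}."""
    results = {}
    for name, sql in CHECKS.items():
        # Only pass the parameter to queries that actually use it.
        params = (
            {"expected_load_date": expected_load_date}
            if ":expected_load_date" in sql
            else {}
        )
        results[name] = connection.execute(sql, params).fetchone()[0]
    return results


print(run_checks(conn, "2024-05-02"))
```

Dates are stored as ISO 8601 strings here, so plain string comparison orders them correctly; a real warehouse would normally use native date types.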

Data generated by the data warehouse load process can be helpful as well.

- Tables with discarded data. Your data warehouse might have processes to skip/delay data that can’t be loaded due to technical issues (e.g., format conversion, missing mandatory values, etc.).

- Logging information. Noticeable problems might be written into logging tables or log files.

- Bill of delivery. Some systems use “bills of delivery” for data provided by operational systems (e.g., number of records, number of distinct keys, sums of values). These can be used for reconciliation checks (see below) between the data warehouse and the operational systems.
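A bill of delivery can be compared with the rows that actually arrived in staging. The sketch below assumes a bill carrying the three control totals mentioned above (record count, distinct keys, sum of values). The class name, field names, and row layout are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BillOfDelivery:
    """Control totals sent by the source system (hypothetical structure)."""
    record_count: int
    distinct_keys: int
    amount_sum_cents: int  # integer cents avoid floating-point rounding


def summarize(rows):
    """Compute the same control totals from the rows loaded into staging."""
    return BillOfDelivery(
        record_count=len(rows),
        distinct_keys=len({row["key"] for row in rows}),
        amount_sum_cents=sum(row["amount_cents"] for row in rows),
    )


def reconcile(bill, rows):
    """Return {measure: (expected, actual)} for every total that differs."""
    actual = summarize(rows)
    return {
        name: (getattr(bill, name), getattr(actual, name))
        for name in ("record_count", "distinct_keys", "amount_sum_cents")
        if getattr(bill, name) != getattr(actual, name)
    }


staged = [
    {"key": "A1", "amount_cents": 1000},
    {"key": "A2", "amount_cents": -250},
]
bill = BillOfDelivery(record_count=3, distinct_keys=3, amount_sum_cents=1250)
differences = reconcile(bill, staged)
print(differences)
```

An empty result means the delivery matches its bill; any entry points to lost, duplicated, or altered records.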

Keep in mind that when a data quality check finds errors, at least one data user has to analyze the result (see “Analyze” chapter), so every implemented check needs someone responsible and available to look after it.

Within a complex data warehouse, you might end up with many (sometimes thousands) DQ rules. The process to execute data quality rules should be robust and fast enough to handle this.

Don’t check facts that are guaranteed by technical implementation. For example, if the data is stored in a relational DBMS, it is not necessary to check if:

- Columns defined as mandatory contain NULL values.

- The primary key field(s) values are unique in a table.

- Foreign keys point to nonexistent rows in a table with referential integrity checks enabled.

That said, always keep in mind that a data warehouse is in constant change and that the data definition of fields and tables might change over time.

Housekeeping is very important. Rules defined by different data user units might overlap and should be consolidated. The more complex your organization, the more housekeeping will be needed. Data owners should implement a process of rules consolidation as a kind of “data quality for data quality rules.” Also, data quality checks might become useless if the data is no longer used or if its definition has changed.

Classes of Data Quality Rules

Data quality rules can be classified based on the type of test.

- Data quality check. The “normal” case, checking data within one data warehouse layer (see Figure 1) either within one table or a set of tables.

- Reconciliation. Rules that check if data was transported correctly between data warehouse layers (see Figure 1). These rules are mostly used to check the DQ dimension of “Completeness.” Reconciliation can use a single row or a summarized approach. Checking single rows is much more granular, but you’ll have to reproduce the transformation steps (data filtering, changes in field values, denormalization, joins, etc.) between the compared layers. The more layers you skip, the more complex the transformation logic you must implement. Therefore, it is a good choice to do reconciliation between each layer and its predecessor instead of comparing the staging to the data mart layer. If transformations have to be implemented in reconciliation rules, use the specification, not the data warehouse code! For summarized reconciliation, find meaningful fields (e.g., summarization, count of distinct values, etc.).

- Monitoring. A data warehouse usually contains historical data and is loaded with delta extracts of operational data. There is the danger of a slowly increasing gap between the data warehouse and the operational data. Building summarized time series of data helps identify issues like this (e.g., comparing last month’s data with the data of the current month). Data users with a good knowledge of their data can provide useful measures and thresholds for monitoring rules.
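A monitoring rule can be as simple as comparing each period of a summarized time series with the previous one. The following sketch flags periods whose value changes by more than a threshold. The function name, the 5% threshold, and the sample figures are assumptions chosen for illustration; in practice, data users would supply the measure and the threshold.

```python
def monitor_series(series, max_relative_change):
    """Flag periods that deviate too much from the previous period.

    series: list of (period, value) tuples in chronological order.
    Returns a list of (period, previous, current, relative_change).
    """
    findings = []
    for (_, previous), (period, current) in zip(series, series[1:]):
        if previous == 0:
            # Relative change is undefined; flag any move away from zero.
            if current != 0:
                findings.append((period, previous, current, None))
            continue
        change = (current - previous) / abs(previous)
        if abs(change) > max_relative_change:
            findings.append((period, previous, current, change))
    return findings


monthly_contract_counts = [
    ("2024-01", 10_000),
    ("2024-02", 10_150),
    ("2024-03", 10_240),
    ("2024-04", 8_900),  # sudden drop, e.g., after a skipped delta load
]
findings = monitor_series(monthly_contract_counts, max_relative_change=0.05)
print(findings)
```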

How to Quantify a Data Quality Issue

Once you have defined what to check, you’ll have to specify how to quantify the identified issues. Information such as “five data rows violate the DQ rule with ID 15” makes little sense for data quality.

The following parts are missing:

- How to quantify/count the detected errors. You might count “number of rows,” but you also might use a monetary scale (for example, exposure). Keep in mind that monetary values might have different signs, so you’ll have to investigate how to meaningfully summarize them. You might consider using both quantification units (count of rows and summarization) for a data quality rule.

- Population. What is the number of units checked by the data quality rule? “Five data rows out of five” has a different quality from “five out of 5 million.” The population should be measured using the same quantification(s) as for the errors. It is common to show the result of a data quality rule as a percentage. The population need not be identical to the number of rows in a table. If a DQ rule checks only a subset of the data (e.g., only terminated contracts in the contracts table), the same filter should be applied to measure the population.

- Definition of the result. Even if a data quality check finds issues, this does not always have to cause an error. For data quality, a traffic light system (red, yellow, green) using threshold values to rate findings is very helpful. For example, green: 0-2%, yellow: 2-5%, red: above 5%. Keep in mind that if data user units share the same rules, they might have very different thresholds for a given rule. A marketing business unit might not mind a loss of a few orders, whereas an accounting unit might mind even cents. It should be possible to define thresholds on percentage or on absolute figures.

- Collect sample error rows. It helps if a data quality rule provides a sample of the detected errors—normally, the (business!) keys and the checked data values are sufficient to help examine the error. It is a good idea to limit the number of written error rows for a data quality rule.

- Sometimes, you might find “known errors” in the data that won’t be fixed but are found by useful data quality checks. For these cases, the use of whitelists (keys of records that should be skipped by a data quality check) is recommended.
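The points above can be combined into one evaluation step. This is a minimal sketch, not a reference implementation: the function and field names are hypothetical, errors are counted as rows only, and the thresholds use the example values from the text, with the boundaries assumed as green up to 2%, yellow above 2% and up to 5%, and red above 5%.

```python
from dataclasses import dataclass, field


@dataclass
class RuleResult:
    rule_id: int
    error_count: int
    population: int
    error_rate: float
    status: str
    sample_keys: list = field(default_factory=list)


def evaluate_rule(rule_id, rows, in_scope, is_violation, key_of,
                  whitelist=frozenset(), yellow_from=0.02, red_from=0.05,
                  max_samples=10):
    """Quantify a rule: errors, population, rate, traffic light, samples."""
    # The population uses the same filter as the rule itself.
    population = [row for row in rows if in_scope(row)]
    # Whitelisted keys are known errors that should not be reported.
    errors = [row for row in population
              if is_violation(row) and key_of(row) not in whitelist]
    rate = len(errors) / len(population) if population else 0.0
    if rate > red_from:
        status = "red"
    elif rate > yellow_from:
        status = "yellow"
    else:
        status = "green"
    # Keep only a limited number of business keys as sample error rows.
    samples = [key_of(row) for row in errors[:max_samples]]
    return RuleResult(rule_id, len(errors), len(population), rate, status,
                      samples)


contracts = (
    [{"id": f"T{i}", "status": "Terminated", "termination_date": "2024-01-31"}
     for i in range(96)]
    + [{"id": f"X{i}", "status": "Terminated", "termination_date": None}
       for i in range(4)]
    + [{"id": f"A{i}", "status": "Active", "termination_date": None}
       for i in range(50)]
)
result = evaluate_rule(
    rule_id=15,
    rows=contracts,
    in_scope=lambda r: r["status"] == "Terminated",
    is_violation=lambda r: r["termination_date"] is None,
    key_of=lambda r: r["id"],
    whitelist={"X0"},
    max_samples=2,
)
print(result)
```

Here the 50 active contracts are excluded from the population, one known error is whitelisted, and the remaining three errors out of 100 terminated contracts give a yellow result. Per-unit thresholds could be supported by calling the function once per data user unit with different `yellow_from` and `red_from` values.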

Other Metadata

Metadata is important for routing findings in the “Analyze” and “Monitor” phases of the data quality control loop.

- Checked items. It helps to assign the checked table(s) and field(s) to a data quality rule. If you have an enhanced metadata system, this might help to automatically assign data users and a data owner to this rule. For regulatory reasons (such as BCBS 239), it is also necessary to prove how the data is checked by DQ. However, assigning rules automatically to data users/data owners via data lineage (*) might be a double-edged sword (see below).

- Data user. Every DQ rule must have at least one data user/data user unit assigned to check the result during the “Analyze” phase and decide if and how a finding influences their work with the data.

- Data owner. Every DQ rule must have a data owner assigned.

(*) Data lineage shows the flow of data between two points. With data lineage, you can find all data elements influencing a given target field within your warehouse.

Using data lineage to assign users to rules can be problematic. As mentioned before, business users usually know only the data mart layer (and the operational systems), but not the lower levels of the data warehouse. By mapping via data lineage, data users will be assigned rules they’re not familiar with. For the lower levels, IT staff may be needed to evaluate a data quality finding. In many cases, a manual mapping or a mixed approach (mapping via data lineage only within the data mart) can help.
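The rule metadata described in this section could be held in a small record and validated before a rule goes live. The structure below is a sketch with hypothetical field names, using a manual mapping of rules to data user units rather than data lineage.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RuleMetadata:
    rule_id: int
    checked_items: tuple    # (table, field) pairs covered by the rule
    data_owner: str
    data_user_units: tuple  # units that rate the result in "Analyze"


def validate_metadata(meta):
    """Return a list of problems; an empty list means the rule is routable."""
    problems = []
    if not meta.checked_items:
        problems.append("no checked table/field assigned")
    if not meta.data_owner:
        problems.append("no data owner assigned")
    if not meta.data_user_units:
        problems.append("no data user unit assigned")
    return problems


def rules_for_unit(rules, unit):
    """IDs of all rules a given data user unit has to look after."""
    return [rule.rule_id for rule in rules if unit in rule.data_user_units]


rules = [
    RuleMetadata(15, (("contract", "termination_date"),), "owner-sales",
                 ("marketing", "accounting")),
    RuleMetadata(16, (("orders", "customer_id"),), "owner-sales", ()),
]
print(validate_metadata(rules[1]))
print(rules_for_unit(rules, "accounting"))
```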

Measuring Data Quality

Measuring data quality means executing the available data quality rules, which should be done automatically, triggered by the load processes of the data warehouse. As we’ve seen before, there might be a remarkable number of data quality rules, so the checks will be time-consuming.

In a perfect world, a data warehouse would be loaded only if all data is error-free. In the real world, this is seldom the case (realistically, it is almost never the case). Depending on the overall loading strategy, your data warehouse data quality process should or should not (the latter is far more likely) control the load process. It is a good design to run data quality processes (job networks) in parallel with, and linked to, the “regular” data warehouse load processes.

If there are defined service-level agreements, make sure the data quality checks do not delay the data warehouse loads. Errors/abends in data quality processes should not stop the regular load process. Unexpected errors within the data quality processes should be reported and surfaced for the “Analyze” phase (see next chapter).

Keep in mind that a data quality rule might crash because of unexpected errors (maybe the rule itself was wrongly implemented, or the underlying data structure changed over time). It would help if your data quality system provided a mechanism to deactivate such rules, especially if your company has few releases per year.

DQ processes should be executed and reported as early as possible—ideally, right after the data checked was loaded. This helps detect errors as early as possible during the load of the data warehouse (some complex warehouse system loads have a duration of several days).
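The requirements in this section (a crashing rule must not stop the load, abends must be reported, and rules can be deactivated) can be sketched as a small runner. The rule IDs and the shape of the outcome records are assumptions for illustration; each rule is modeled as a function that returns its error count.

```python
def run_rules(rules, deactivated=frozenset()):
    """Execute each rule without ever raising, so the load is not blocked.

    rules: mapping of rule ID to a zero-argument function returning the
    number of errors found.
    """
    outcomes = {}
    for rule_id, check in rules.items():
        if rule_id in deactivated:
            outcomes[rule_id] = {"state": "skipped", "detail": "deactivated"}
            continue
        try:
            outcomes[rule_id] = {"state": "executed", "errors": check()}
        except Exception as exc:  # a defective rule must not stop the others
            outcomes[rule_id] = {"state": "abended", "detail": repr(exc)}
    return outcomes


def broken_rule():
    # Simulates a rule that fails, e.g., because a column was renamed.
    raise KeyError("termination_date")


outcomes = run_rules(
    {"DQ-1": lambda: 0, "DQ-2": broken_rule, "DQ-3": lambda: 7},
    deactivated={"DQ-3"},
)
print(outcomes)
```

Skipped and abended rules stay in the result, so they can be shown in the “Analyze” phase instead of silently disappearing.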

Analyze

In this context, “analyze” means reacting to data quality findings. This is a task for the assigned data users and the data owner.

The way to react should be clearly defined by your data quality project. Data users should be obligated to comment on a rule with findings (at least rules with a red light), explaining what measures are being taken to handle the finding. The data owner needs to be informed and should decide together with the data user(s).

The following actions are possible:

- Serious problem: The problem has to be fixed and the data load repeated.

- Problem is acceptable: Try to fix it for future data loads and handle the problem within the data warehouse or the reporting.

- Defective DQ rule: Fix the problematic DQ rule.

In a perfect world, every data quality problem would be fixed. However, lack of resources and/or time often results in workarounds.

To be able to react in time, the DQ system must inform the data users about “their” rules with findings. Using a data quality dashboard (possibly combined with notifications when something comes up) is a good idea. The earlier the users are informed about findings, the better.

The data quality dashboard should contain:

- All rules assigned to a given role

- The rules’ results (traffic light, measures, and example rows) with the ability to filter rules by result and data domain

- A mandatory comment that data users have to enter for findings

- A feature to optionally “overrule” the result (if the data quality rule reports errors due to a defective implementation, for example). If more than one business unit has the same data quality rule assigned, “overruling” is only valid for the data user’s business unit (not the whole company).

- Rules that were not executed or that abended

The dashboard should also show the current status of the recent data warehouse load process, giving the users a 360-degree view of the data warehouse load process.

The data owner is responsible for making sure that every finding was commented on and the status of the data quality (original or overruled) is at least yellow for all data users.
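The data owner’s check could be supported by a small helper that lists what is still open. This sketch assumes one finding record per rule and business unit, with an optional overruled status that applies to that unit only. All field names are hypothetical.

```python
SEVERITY = {"green": 0, "yellow": 1, "red": 2}


def effective_status(finding):
    """An overruled status, if present, replaces the original for that unit."""
    return finding.get("overruled_status") or finding["status"]


def open_items(findings):
    """List (rule_id, unit, reason) entries the data owner has to follow up."""
    items = []
    for f in findings:
        has_finding = f["status"] != "green"
        # Every finding needs a comment, even if it was overruled.
        if has_finding and not (f.get("comment") or "").strip():
            items.append((f["rule_id"], f["unit"], "missing comment"))
        # The effective status must be yellow or better.
        if SEVERITY[effective_status(f)] > SEVERITY["yellow"]:
            items.append((f["rule_id"], f["unit"], "status still red"))
    return items


findings = [
    {"rule_id": 15, "unit": "marketing", "status": "red",
     "overruled_status": "yellow",
     "comment": "Known rule defect, fix scheduled"},
    {"rule_id": 15, "unit": "accounting", "status": "red", "comment": ""},
    {"rule_id": 16, "unit": "sales", "status": "yellow",
     "comment": "Checked, acceptable"},
    {"rule_id": 17, "unit": "sales", "status": "green"},
]
todo = open_items(findings)
print(todo)
```

Rule 15 is shared by two units: marketing has overruled and commented on it, so only the accounting entries remain open.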

For a quick overview, it helps to build a few simple KPIs (key performance indicators) for data users and the data owner. Having an overall traffic light for all associated rules’ results is quite easy if each rule is given the same weight.

Personally, I think computing an overall value of data quality for a given data domain is rather complex and tends to be cabalistic, but you could at least show the number of overall rules grouped by result for a data domain (e.g., “100 DQ rules with 90% green, 5% yellow, and 5% red results”).
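A grouped count like the one in this example takes only a few lines. The sketch below also derives an overall traffic light by taking the worst status among equally weighted rules; that choice is an assumption made for this example, not something the approach prescribes.

```python
from collections import Counter

COLORS = ("green", "yellow", "red")


def summarize_domain(statuses):
    """Summarize rule results of one data domain for a simple KPI view."""
    counts = Counter(statuses)
    total = sum(counts[color] for color in COLORS)
    shares = {
        color: (100 * counts[color] / total if total else 0.0)
        for color in COLORS
    }
    # Assumption: with equal weights, the worst status present wins.
    overall = next(
        (color for color in reversed(COLORS) if counts[color]), "green"
    )
    return {"total": total, "shares": shares, "overall": overall}


statuses = ["green"] * 90 + ["yellow"] * 5 + ["red"] * 5
summary = summarize_domain(statuses)
print(summary)
```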

It is the data owner’s task to ensure that the findings will be fixed and data quality improved.

Improving Processes

As the data warehouse processes often change, the data quality mechanism also needs maintenance.

A data owner should always take care of the following points:

- Keep it up to date. Changes in the data warehouse need to be caught in the data quality system.

- Enhance. Implement new rules for errors that are not covered by data quality rules yet.

- Streamline. Disable data quality rules that are no longer needed. Consolidate overlapping rules.

Monitoring Data Quality Processes

Monitoring the entire data quality process helps to improve it over time.

Things worth watching would be:

- The coverage of your data with data quality rules

- The percentage of data quality findings within the active rules over time

- The number of active data quality rules (Keep an eye on it—I have seen data users solving their findings by simply disabling more and more data quality rules.)

- The time needed within a data load to have all findings rated and fixed

Final Data Warehouse Data Quality Process Tips

Many of the following points are important in any kind of project.

Anticipate resistance. As we have seen, if there is no urgent quality issue, data quality is often viewed as an additional burden without offering new functionality. Keep in mind that it might create additional workload for the data users. In many cases, compliance and regulatory demands can help you to convince the users to see it as an unavoidable requirement.

Find a sponsor. As noted above, DQ is not a fast-selling item, so a powerful sponsor/stakeholder is needed—the higher in the management, the better.

Find allies. As with the sponsor, anyone who shares the idea of strong data quality would be most helpful. The DQ circuit loop is an ongoing process and needs people to keep the circuit loop alive.

Start small. If there’s been no DQ strategy so far, look for a business unit that needs better data quality. Build a prototype to show them the benefit of better data. If your task is to improve or even replace a given data quality strategy, look at things working well/being accepted in the organization, and keep them.

Don’t lose sight of the whole picture. Although starting small, keep in mind that some points, especially the roles, are prerequisites for a successful DQ strategy.

Once implemented, don’t let go. The data quality process needs to be part of data warehouse use. Over time, focus on data quality tends to get a bit lost, and it’s up to you to maintain it.

Understanding the basics

How do you determine data quality?

Data quality is determined based on data quality rules, which are defined by people who know and work with the data. Data quality checks should be defined for every relevant data object.

What is good data quality?

Good data quality means the data stored in the data warehouse is of sufficient quality for the user’s needs and applicable regulatory requirements.

Why is data quality important?

Erroneous data causes false estimations and bad business decisions. In addition, a lack of data quality can result in serious regulatory compliance issues.

What is a data warehouse?

A data warehouse is a non-operational system mainly used for decision support. It consolidates the data of the operational systems (all of them or a smaller subset) and provides query-optimized data for the users of the data warehouse system.

Stuttgart, Baden-Württemberg, Germany

Member since March 4, 2020
