Three Principles of Data Warehouse Development

Data warehouses aren’t exactly a new concept, but industry demand for data science services, coupled with the rise of AI and machine learning, is making them more relevant than ever.

In this post, Toptal Data Warehouse Developer Chamitha Wanaguru outlines three basic principles you need to keep in mind when developing a new data warehouse.

Chamitha is an IT veteran specializing in data warehouse system architecture, data engineering, business analysis, and project management.

Gartner estimates that 70 to 80 percent of newly initiated business intelligence projects fail. The reasons are many, from bad tool choice to a lack of communication between IT and business stakeholders. Having successfully implemented BI projects across industries, I hope to share my experiences in this blog post and highlight key reasons why business intelligence projects fail. This article will present counter-measures to failure based on three principles that should govern how data warehouses are built. Following these data warehouse concepts should help you, as a data warehouse developer, to navigate the development journey while avoiding the common potholes or even sinkholes of BI implementations.

Business Intelligence Data Warehouse Implementation

While the criteria for a successful business intelligence data warehouse would vary by project, certain minimums are expected and required across all projects. Here’s a list of the main attributes usually found in a successful business intelligence data warehouse:

- Value: Business intelligence projects can span the course of many months or even years. However, it is important to show the benefits of a data warehouse to your business stakeholders very early on in the project to ensure continued funding and interest. Ideally, stakeholders should be shown some meaningful business value out of the new system within the first three weeks of a project.

- Self-service BI: The days of waiting on IT to fulfill data requests or carry out data analyses are over. The success of any BI project is now measured by how well it empowers the business users to extract value out of the system themselves.

- Cost: BI projects generally have relatively high up-front implementation costs. To offset the high initial cost, it is important to design warehouses with low maintenance costs. If the client requires a full-fledged team of BI developers to diagnose data quality issues, make routine changes to the data models, or handle ETL failures, the system will be expensive to run and at risk of being turned off after some time.

- Adaptability: The ability to adapt to evolving business demands is crucial. Countless BI tools are available on the market, and they evolve quickly to add functionality and features. Because businesses also evolve continuously, requirements for the warehouse will change. Adaptability therefore requires that a data warehouse be designed so that alternative tools, such as different back-ends or visualization tools, can be adopted in the future, and so that it can absorb often unforeseen changes in requirements.

Through my experience building successful solutions, and perhaps even more importantly, being involved in failed projects, I have come to the conclusion that three key principles are paramount in increasing the likelihood of a successful business intelligence system implementation. However, before covering them in detail, let’s start with some context.

What Is a Data Warehouse?

Before delving into different data warehouse concepts, it is important to understand what a data warehouse actually is.

Data warehouses are often thought of as business intelligence systems created to help with the day-to-day reporting needs of a business entity. They don’t have the same real-time performance requirements (in standard implementations) as OLTP data systems, and whereas OLTP systems will only contain the data relating to one small subset of the business, data warehouses look to encompass all data relating to the business.

Data warehouse models offer benefits to a business only when the warehouse is regarded as the central hub of “all things data” and not just a tool through which your operational reports are produced. All operational systems should have two-way communication with the data warehouse to feed data in and to receive feedback on how to improve operational efficiency. Any business change, such as an increase in prices or a reduction of supply/inventory, should first be prototyped and forecasted within your data warehouse environment so that your business can reliably predict and quantify the outcome. In this context, all data science and data analytics functions would be centered around the data warehouse.

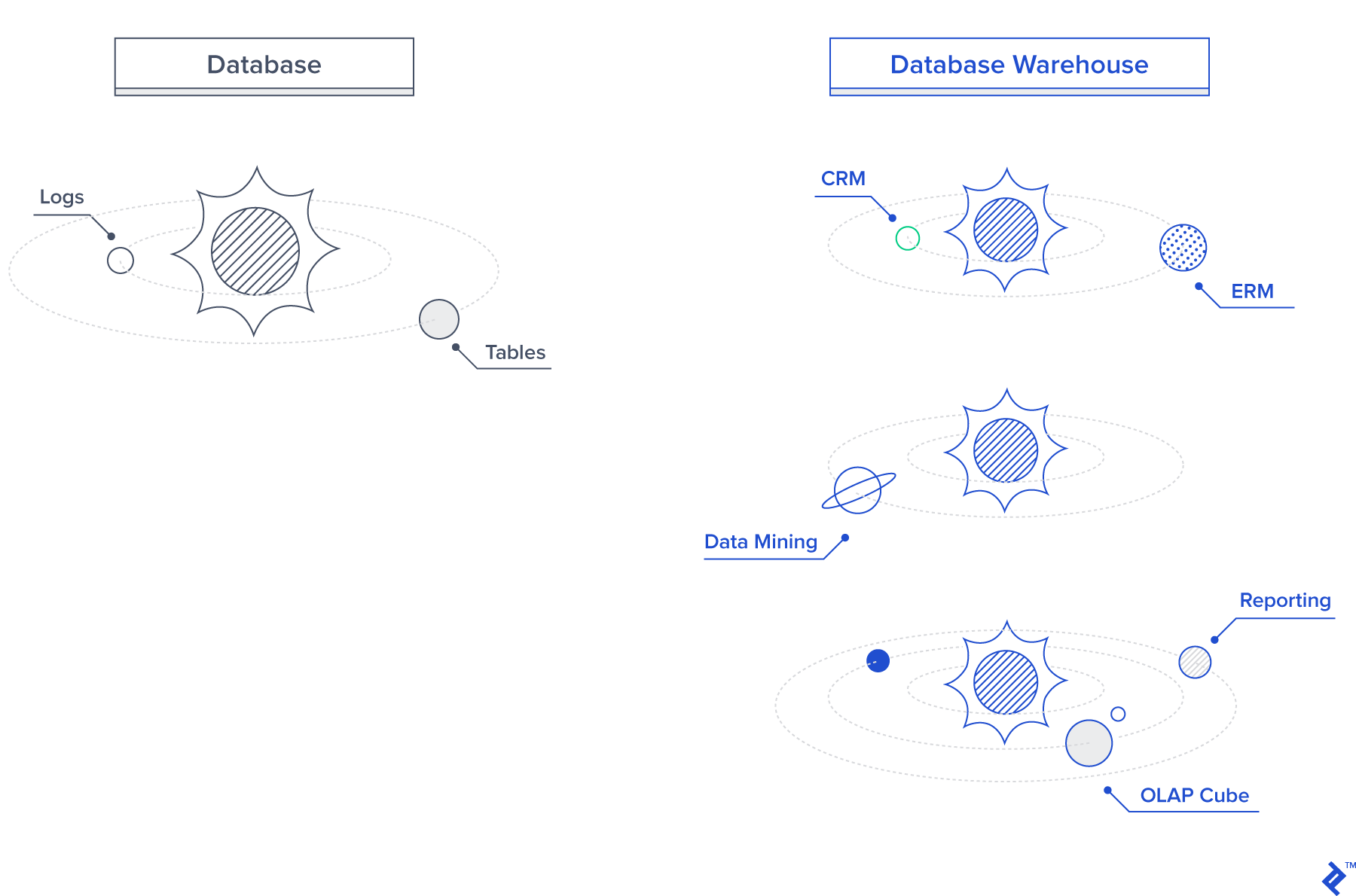

There are many components of a data warehouse, and it is not simply a database:

- A database is a medium through which you store your data.

- A data warehouse goes beyond that to include tools and components necessary to extract business value out of your data and can include components such as integration pipelines, data quality frameworks, visualization tools, and even machine learning plugins.

Here is a more visual representation of the difference between a database and a data warehouse structure. Databases or new logical data meta stores such as Hive form the central star of a data warehouse’s stellar system, with all other components as its revolving planets. However, unlike a star system, a data warehouse can have one or more databases, and these databases should be interchangeable with new technologies, as we will discuss later on in the article.

First Data Warehouse Principle: Data Quality Reigns Supreme

Data warehouses are only useful and valuable to the extent that the data within is trusted by the business stakeholders. To ensure this, frameworks that automatically capture and correct (where possible) data quality issues have to be built. Data cleansing should be part of the data integration process, with regular data audits or data profiling conducted to identify any data issues. While these proactive measures are implemented, you also need to consider reactive measures for when bad data slips through these gates and is reported by the user.
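As a rough illustration of what an automated check inside the integration step might look like, the sketch below applies a couple of rules to incoming rows, corrects what it safely can, and quarantines the rest with a reason. The field names (`customer_id`, `country`, `amount`), the `QualityReport` structure, and the rules themselves are hypothetical and not taken from any particular platform.

```python
from dataclasses import dataclass, field


@dataclass
class QualityReport:
    """Outcome of one cleansing pass (hypothetical structure)."""
    clean: list = field(default_factory=list)
    rejected: list = field(default_factory=list)  # (row, reason) pairs


def cleanse(rows):
    """Correct what can be fixed safely; quarantine everything else."""
    report = QualityReport()
    for row in rows:
        fixed = dict(row)
        # Correctable issue: stray whitespace and inconsistent casing.
        fixed["country"] = str(fixed.get("country") or "").strip().upper()

        # Non-correctable issues: capture them for audit instead of loading.
        if not fixed.get("customer_id"):
            report.rejected.append((row, "missing customer_id"))
        elif not isinstance(fixed.get("amount"), (int, float)) or fixed["amount"] < 0:
            report.rejected.append((row, "invalid amount"))
        else:
            report.clean.append(fixed)
    return report


# Illustrative input only.
incoming = [
    {"customer_id": "C1", "country": " lk ", "amount": 120.0},
    {"customer_id": None, "country": "US", "amount": 40.0},
    {"customer_id": "C3", "country": "gb", "amount": -5},
]
report = cleanse(incoming)
```

Keeping the rejected rows together with a reason, rather than silently dropping them, gives support staff something concrete to investigate when a user reports bad data.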

To ensure user confidence in the data warehouse system, any bad data highlighted by business users should be investigated as a priority. To help with these efforts, data lineage and data control frameworks should be built into the platform to ensure that any data issues can be identified and remediated quickly by the support staff. Most data integration platforms integrate some degree of data quality solutions, such as DQS in MS SQL Server or IDQ in Informatica.

Take advantage of these built-in platforms if you are using a commercial tool in your data integration pipelines, but additionally or otherwise, ensure you build out the mechanisms that would help you to maintain the quality of your data. For example, most data integration tools lack good functionality to track data lineage. To overcome this limitation, a custom batch control framework can be built using a series of control tables to track every data flow that occurs within the system.
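A minimal sketch of such a batch control table follows, using Python's built-in `sqlite3` module as a stand-in for the warehouse database. The table layout, the column names, the `load_with_lineage` helper, and the `orders_2024.csv` source name are all assumptions made for illustration; a real framework would track far more detail.

```python
import sqlite3
from datetime import datetime, timezone

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE batch_control (
        batch_id     INTEGER PRIMARY KEY AUTOINCREMENT,
        source_name  TEXT NOT NULL,
        target_table TEXT NOT NULL,
        started_at   TEXT NOT NULL,
        finished_at  TEXT,
        rows_loaded  INTEGER,
        status       TEXT NOT NULL
    )
""")
conn.execute("CREATE TABLE stg_orders (order_id TEXT, amount REAL, batch_id INTEGER)")


def now():
    return datetime.now(timezone.utc).isoformat()


def load_with_lineage(source_name, target_table, rows):
    """Register the flow, stamp each row with its batch_id, record the outcome."""
    cur = conn.execute(
        "INSERT INTO batch_control (source_name, target_table, started_at, status) "
        "VALUES (?, ?, ?, 'RUNNING')",
        (source_name, target_table, now()),
    )
    batch_id = cur.lastrowid
    conn.commit()  # keep the control row even if the load itself is rolled back
    try:
        # target_table is assumed to come from trusted configuration.
        conn.executemany(
            f"INSERT INTO {target_table} VALUES (?, ?, ?)",
            [(order_id, amount, batch_id) for order_id, amount in rows],
        )
        status, loaded = "SUCCESS", len(rows)
    except sqlite3.Error:
        conn.rollback()
        status, loaded = "FAILED", 0
    conn.execute(
        "UPDATE batch_control SET finished_at = ?, rows_loaded = ?, status = ? "
        "WHERE batch_id = ?",
        (now(), loaded, status, batch_id),
    )
    conn.commit()
    return batch_id


batch_id = load_with_lineage("orders_2024.csv", "stg_orders", [("O1", 10.0), ("O2", 25.5)])
```

Because every staged row carries its `batch_id`, a suspicious value in a report can be traced back to the flow that loaded it by joining to `batch_control`.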

It is very difficult to regain the trust of your business stakeholders if they encounter bad quality within your platform, so the up-front investment in data quality frameworks should be well worth the cost.

Second Data Warehouse Principle: Flip the Triangle

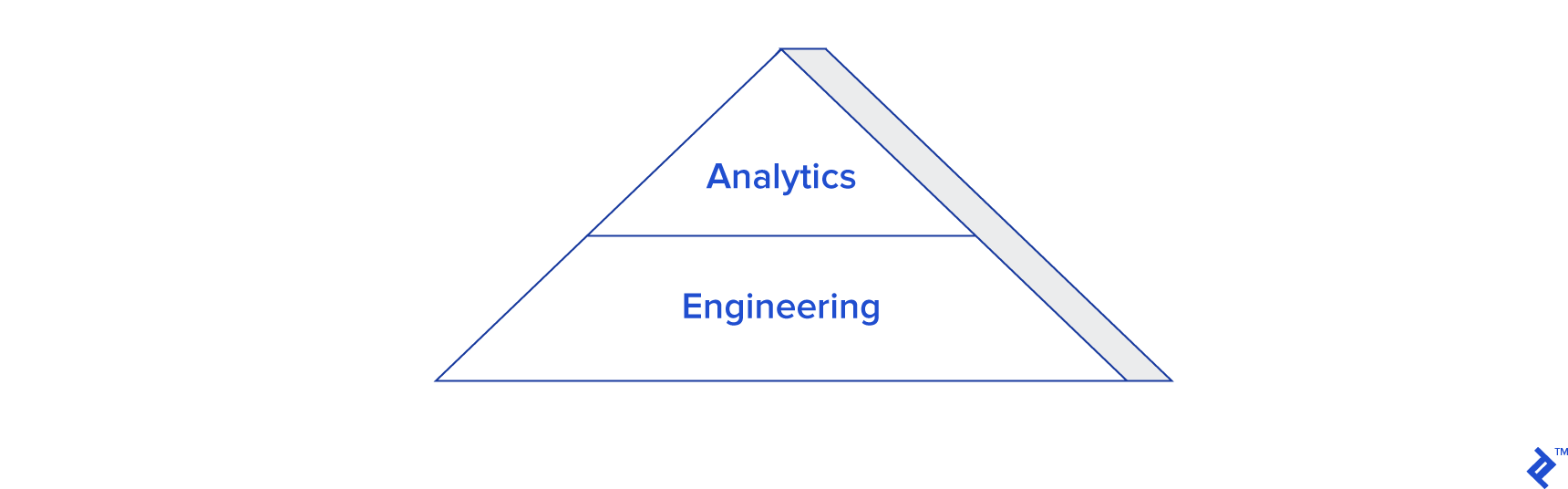

This figure illustrates the division of effort in the implementation and usage of most data warehouses.

Most effort is invested in building and maintaining the warehouse while the value-add of having a warehouse for business analytics is a much smaller portion of the effort. This is another reason why business intelligence projects often fail. Sometimes, it takes too long in the project cycle to show any meaningful value to the client, and when the system is finally in place, it still requires a lot of IT effort to get any business value out of it. As we said in the introduction, designing and deploying business intelligence systems can be an expensive and lengthy process. Therefore, stakeholders will rightfully expect to quickly start reaping the value added by their business intelligence and data warehousing efforts. If no added value materializes, or if the results are simply too late to be of any real value, there’s not much stopping them from pulling the plug.

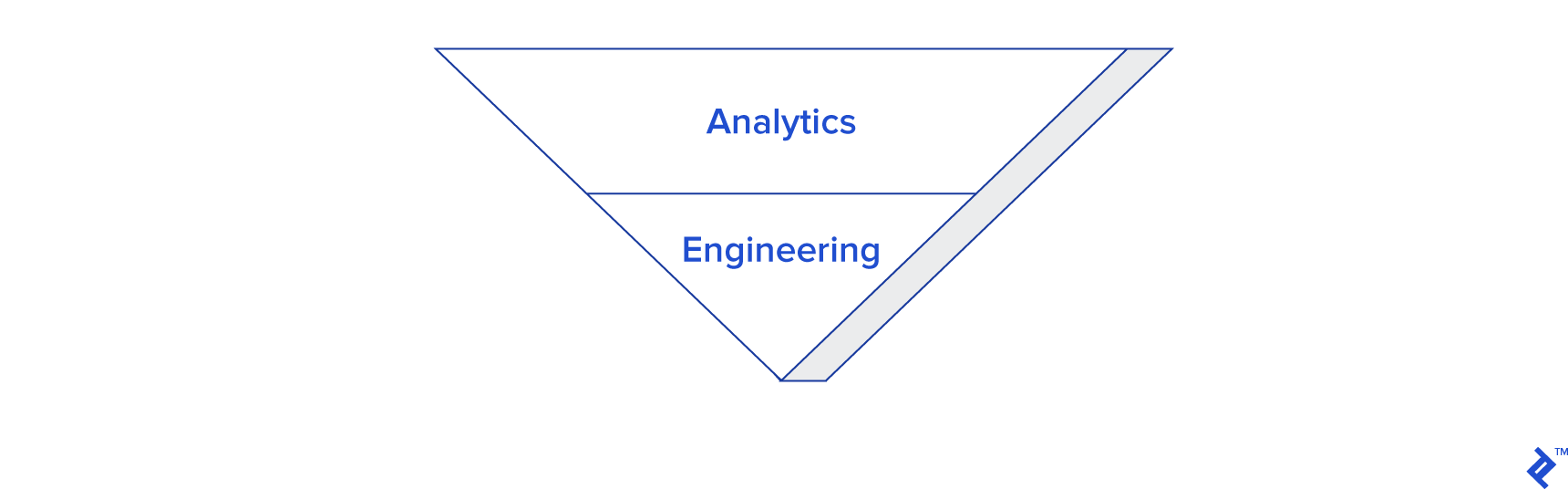

The second principle of data warehouse development is to flip the triangle as illustrated here.

Your choice of business intelligence tools and the frameworks you put in place need to ensure that a larger portion of the effort going into the warehouse is to extract business value than to build and maintain it. This will ensure high levels of engagement from your business stakeholders because they will immediately see the value of investing in the project. More importantly, you enable the business to be self-sufficient in extracting value without having such a strong dependency on IT.

You can adhere to this principle by following incremental development methodologies when building the warehouse to ensure you deliver production functionality as quickly as possible. Following Kimball’s data mart strategy or Linstedt’s Data Vault data warehouse design methodologies will help you develop systems that build incrementally whilst accounting for change smoothly. Use a semantic layer in your platform, such as an MS SSAS cube or even a Business Objects Universe, to provide an easy-to-understand business interface to your data. In the case of the former, you will also be providing an easy mechanism for users to query data from Excel, which is still the most popular data analytics tool.

Incorporating BI tools that champion self-service BI, such as Tableau or Power BI, will only help improve user engagement, as the interface to query data is now drastically simplified as opposed to writing SQL.

Storing source data in a data lake before populating a database will help to expose the source data to users very early in the on-boarding process. At least advanced users such as business quants will now be able to digest the source data (through the raw files) by connecting tools such as Hive/Impala on top of the files. This will help reduce the time required for the business to analyze a new data point from weeks to days or even hours.

Third Data Warehouse Principle: Plug and Play

Data is on the verge of becoming the digital equivalent of oil. In recent years, we’ve witnessed an explosion in the number of tools that can be used as part of a data warehouse platform and in the rate of innovation. Leading the charge are the myriad visualization tools available right now, with advanced options for back-ends close behind. Given this environment and the propensity for business requirements to constantly change, it is important to keep in mind that you will need to swap out components of your technology stack or even introduce/remove others with time, as business and technology changes dictate.

Based on personal experience, it would be fortunate if a platform could last 12 months without some sort of significant change. A reasonable amount of effort is unavoidable in these situations; however, it should always be possible to change technologies or design, and your platform should be designed to cater to this eventual need. If the migration cost of a warehouse is too high, the business could simply decide the cost is not justified and abandon what you built instead of looking to migrate the existing solution to new tools.

Building a system that would cater to all imaginable future needs is impossible. Therefore, when building data warehouses, you need to accept that whatever you design and build now could be replaced in time. To this end, I would advocate the use of generic tools and designs where possible rather than tightly coupling your platform to the tools it’s running on. Of course, this needs to be done after careful planning and consideration, as the power of many tools, especially databases, lies in their individual features and in how closely they complement one another.

For example, ETL performance is dramatically improved when using stored procedures in a database to create new business analytics data as opposed to extracting and processing the data outside the database using Python or SSIS. With regard to the reporting layer, visualization tools offer certain functionalities that aren’t readily available in others; for example, Power BI supports custom MDX queries, but Tableau doesn’t. My point isn’t to advocate the desertion of stored procedures or the avoidance of SSAS cubes or Tableau in your systems. My intention is merely to promote the importance of being mindful in justifying any decisions to tightly couple your platform to its tools.
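One possible way to keep such coupling deliberate is to confine the tool-specific work behind a small interface, so that the rest of the platform depends on the interface rather than on the engine. The sketch below is only an illustration of that idea: the `Warehouse` protocol, the `SqliteWarehouse` class, and the `orders` and `daily_sales` tables are hypothetical, and SQLite stands in for whatever database you actually run.

```python
import sqlite3
from typing import Protocol


class Warehouse(Protocol):
    """The only surface the rest of the platform is allowed to depend on."""

    def refresh_daily_sales(self) -> int: ...


class SqliteWarehouse:
    """One interchangeable back-end; the engine-specific SQL lives only here."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn

    def refresh_daily_sales(self) -> int:
        # In-database processing, standing in for a stored procedure call.
        self.conn.execute("DELETE FROM daily_sales")
        cur = self.conn.execute(
            "INSERT INTO daily_sales "
            "SELECT order_date, SUM(amount) FROM orders GROUP BY order_date"
        )
        self.conn.commit()
        return cur.rowcount


def nightly_job(warehouse: Warehouse) -> int:
    """Orchestration code sees the interface, never the engine."""
    return warehouse.refresh_daily_sales()


# Illustrative data only.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_date TEXT, amount REAL)")
conn.execute("CREATE TABLE daily_sales (order_date TEXT, total REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("2024-01-01", 10.0), ("2024-01-01", 5.0), ("2024-01-02", 7.5)],
)
rows_written = nightly_job(SqliteWarehouse(conn))
```

The heavy lifting still happens inside the database, so the performance benefit is retained, while a future migration only has to supply another class with the same method.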

Another potential sinkhole is in the integration layer. It’s very easy to use a tool like SSIS for your data integration because of its debugging capabilities or ease of use with the SQL Server platform. However, migrating hundreds of SSIS packages to another tool would become a very expensive project. In cases where you are mostly doing “EL,” look to use a generic tool to do your processing. Using a programming language like Python or Java to write one generic loader to load your staging layer will help to cut down on the individual SSIS packages you would otherwise have required. This approach not only helps reduce maintenance and future migration costs but also helps automate more aspects of the data onboarding process, because you no longer have to write new individual packages (tying in with Principle 2).
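A generic loader of the kind described above might look something like the following sketch, which uses only Python's standard library and an in-memory SQLite database as a stand-in staging area. The `load_to_staging` function, the table names, and the sample feeds are hypothetical; a production loader would also need type handling, error capture, and configuration-driven source discovery.

```python
import csv
import io
import sqlite3


def load_to_staging(conn, table, source):
    """Load any delimited source with a header row into a staging table.

    Columns are taken from the header and stored as TEXT; typing is left to
    later transformation steps. `table` and the header names are assumed to
    come from trusted configuration, since they are interpolated into SQL.
    """
    reader = csv.reader(source)
    header = next(reader)
    columns = ", ".join(f'"{name}" TEXT' for name in header)
    placeholders = ", ".join("?" for _ in header)
    conn.execute(f'CREATE TABLE IF NOT EXISTS "{table}" ({columns})')
    cur = conn.executemany(f'INSERT INTO "{table}" VALUES ({placeholders})', reader)
    conn.commit()
    return cur.rowcount


# Two differently shaped feeds handled by the same function.
conn = sqlite3.connect(":memory:")
feeds = {
    "stg_customers": io.StringIO("customer_id,country\nC1,LK\nC2,US\n"),
    "stg_orders": io.StringIO("order_id,customer_id,amount\nO1,C1,120.00\n"),
}
loaded = {table: load_to_staging(conn, table, source) for table, source in feeds.items()}
```

Onboarding a new source then becomes a matter of adding an entry to the feed configuration rather than building another package.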

In all these instances, you need to decide on a practical compromise between the immediate benefits and the future migration costs to ensure the warehouse doesn’t get scrapped because it can’t handle change, or because the change would have necessitated too much time, effort, or investment.

Wrapping Up

There are many reasons why a certain business intelligence system may fail, and there are also some common oversights that can lead to eventual failure. The ever-changing technology landscape, limited budgets for data systems (which are often mistakenly treated as secondary to operational systems), and the sheer complexity and difficulty of working with data mean that you need to consider not only immediate goals but also future plans when designing and building the components of a data warehouse.

The data warehousing fundamentals outlined in this article are intended to help guide you when making these important considerations. Of course, taking into account these principles does not guarantee success, but they will certainly go a long way toward helping you avoid failure.

Understanding the basics

What does a data warehouse developer do?

Data warehouse developers, now more commonly referred to as data engineers, are responsible for the overall development and maintenance of the data warehouse. It is up to them to decide on the technology stack as well as any custom frameworks and processing, and to make data ready for consumers.

What is a data warehouse example?

The use of various technologies means that most data warehouses are very different from one another. A basic example would consist of a SQL Server database, with SSIS forming the data integration layer, and Power BI and SSRS sitting on top of the database to fulfill visualization and reporting requirements.

How does a data warehouse work?

A data warehouse is formed by myriad tools and frameworks working holistically together to make data ready for deriving insights. At the heart of a data warehouse is a database or a logical meta store of data with a data integration framework making up the backbone.

What are the benefits of a data warehouse?

Data warehouses provide the mechanism for an organization to store and model all of its data from different departments into one cohesive structure. From this, various consumers of your company’s data can be served, both internal and external. A data warehouse is capable of being the one single source of truth.

Chamitha Wanaguru

Colombo, Sri Lanka

Member since June 18, 2020
