OpenCV Tutorial: Real-time Object Detection Using MSER in iOS

Detecting objects of interest in images has always been an interesting challenge in the realm of computer vision, and many approaches have been developed over recent years. As mobile platforms are becoming increasingly powerful, now is the perfect opportunity to develop interesting mobile applications that take advantage of these algorithms. This article walks through the process of building a simple iOS application for detecting objects in images.


With a master’s degree in AI and 6+ years of professional experience, Altaibayar does full-stack and mobile development with a focus on AR.


Over the last few years, the average mobile phone performance has increased significantly. Be it for sheer CPU horsepower or RAM capacity, it is now easier to do computation-heavy tasks on mobile hardware. Although these mobile technologies are headed in the right direction, there is still a lot to be done on mobile platforms, especially with the advent of augmented reality, virtual reality, and artificial intelligence.

A major challenge in computer vision is to detect objects of interest in images. The human eye and brain do an exceptional job at this, and replicating it in machines is still a dream. Over recent decades, approaches have been developed to mimic this ability in machines, and they keep getting better.

In this tutorial, we will explore an algorithm used for detecting blobs in images. We will also use the implementation of this algorithm from the open source library OpenCV to build a prototype iPhone application that uses the rear camera to acquire images and detect objects in them.

OpenCV Tutorial

OpenCV is an open source library that provides implementations of major computer vision and machine learning algorithms. If you want to implement an application to detect faces, playing cards on a poker table, or even a simple application for adding effects to an arbitrary image, then OpenCV is a great choice.

OpenCV is written in C/C++, and has wrapper libraries for all major platforms. This makes it especially easy to use within the iOS environment. To use it within an Objective-C iOS application, download the OpenCV iOS Framework from the official website. Please make sure that you are using version 2.4.11 of OpenCV for iOS (which this article assumes you are using), as the latest version, 3.0, has some compatibility-breaking changes in how the header files are organized. Detailed installation instructions are documented on the OpenCV website.

MSER

MSER, short for Maximally Stable Extremal Regions, is one of the many methods available for blob detection within images. Put simply, the algorithm identifies connected sets of pixels whose outer boundary pixels are all brighter (by a given threshold) than the pixels on the inner boundary of the region. Such a region is said to be maximally stable if its area changes very little as that intensity threshold is varied over a range of values.
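To make the notion of stability concrete, here is a minimal sketch in plain C++ with no OpenCV dependency. It is not how OpenCV implements MSER (the real algorithm processes all thresholds and all regions at once); it only grows one dark region from a seed pixel at a few thresholds and measures how much its area changes. The names `regionArea`, `stability`, and `sampleImage`, the toy image, and the choice of `delta` are all assumptions made for illustration.

```cpp
#include <cassert>
#include <limits>
#include <utility>
#include <vector>

using Image = std::vector<std::vector<int>>;

// Area of the 4-connected region of pixels with intensity <= threshold that
// contains the seed pixel. Returns 0 if the seed itself is above the threshold.
int regionArea(const Image& img, int seedRow, int seedCol, int threshold) {
    if (img[seedRow][seedCol] > threshold) return 0;
    const int rows = static_cast<int>(img.size());
    const int cols = static_cast<int>(img[0].size());
    std::vector<std::vector<bool>> seen(rows, std::vector<bool>(cols, false));
    std::vector<std::pair<int, int>> stack;
    stack.push_back(std::make_pair(seedRow, seedCol));
    seen[seedRow][seedCol] = true;
    const int dr[] = {1, -1, 0, 0};
    const int dc[] = {0, 0, 1, -1};
    int area = 0;
    while (!stack.empty()) {
        const std::pair<int, int> cell = stack.back();
        stack.pop_back();
        ++area;
        for (int k = 0; k < 4; ++k) {
            const int nr = cell.first + dr[k];
            const int nc = cell.second + dc[k];
            if (nr < 0 || nr >= rows || nc < 0 || nc >= cols) continue;
            if (seen[nr][nc] || img[nr][nc] > threshold) continue;
            seen[nr][nc] = true;
            stack.push_back(std::make_pair(nr, nc));
        }
    }
    return area;
}

// Relative change in area when the threshold moves by +/- delta.
// Values near 0 mean the region is stable around this threshold.
double stability(const Image& img, int seedRow, int seedCol,
                 int threshold, int delta) {
    const int area = regionArea(img, seedRow, seedCol, threshold);
    if (area == 0) return std::numeric_limits<double>::infinity();
    const int grown = regionArea(img, seedRow, seedCol, threshold + delta);
    const int shrunk = regionArea(img, seedRow, seedCol, threshold - delta);
    return static_cast<double>(grown - shrunk) / area;
}

// Hypothetical 5x5 test image: a dark 3x3 blob on a bright background.
Image sampleImage() {
    return {
        {200, 200, 200, 200, 200},
        {200,  10,  12,  10, 200},
        {200,  12,  10,  12, 200},
        {200,  10,  12,  10, 200},
        {200, 200, 200, 200, 200},
    };
}
```

With the seed at the center of the blob, the region keeps the same nine pixels for any threshold between the blob and background intensities, so `stability` is zero there. Near the background intensity the region suddenly swallows the whole image and the value jumps, which is exactly the kind of threshold MSER rejects.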

Although a number of other blob detection algorithms exist, MSER was chosen here because it has a fairly light run-time complexity of O(n log(log(n))) where n is the total number of pixels on the image. The algorithm is also robust to blur and scale, which is advantageous when it comes to processing images acquired through real-time sources, such as the camera of a mobile phone.

For the purpose of this tutorial, we will design the application to detect the logo of Toptal. The symbol has sharp corners, which may lead one to consider how effective corner detection algorithms would be at detecting Toptal’s logo. After all, such an algorithm is simple both to use and to understand. Although corner-based methods may have a high success rate when it comes to detecting objects that are distinctly separate from the background (such as black objects on white backgrounds), it would be difficult to achieve real-time detection of Toptal’s logo in real-world images, where the algorithm would be constantly detecting hundreds of corners.

Strategy

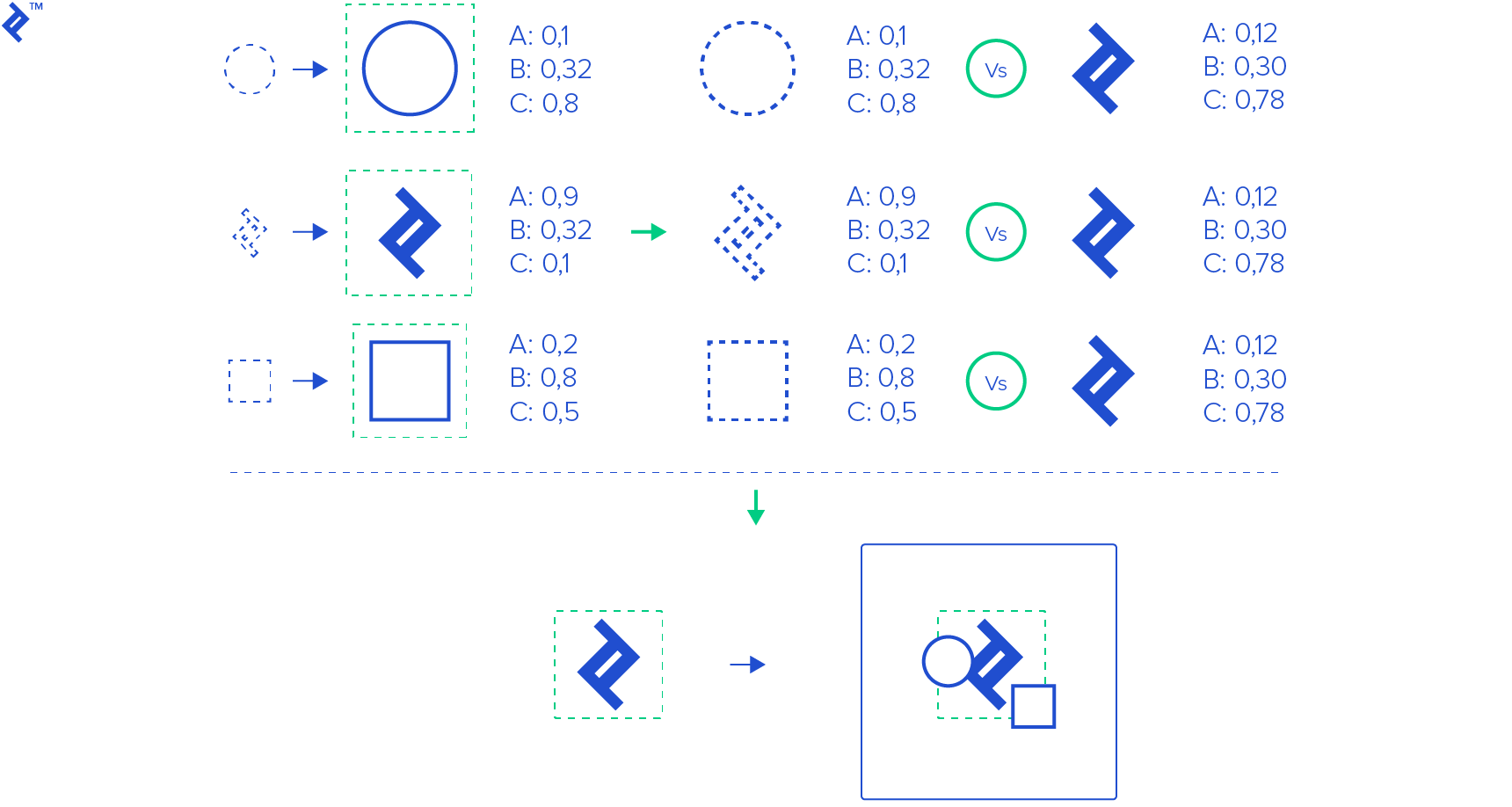

Each frame the application acquires through the camera is first converted to grayscale. A grayscale image has only one channel, but the logo remains visible in it. Working on a single channel makes the image easier for the algorithm to handle and significantly reduces the amount of data it has to process, while the discarded color information would add little to no benefit.
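As a rough illustration of what the grayscale step does, the sketch below converts an interleaved BGRA byte buffer into a single-channel buffer using the standard luminance weights (0.299 R + 0.587 G + 0.114 B), which are the weights OpenCV documents for its color-to-gray conversions. The function names `grayFromBGR` and `grayFromBGRA` are hypothetical, and OpenCV's own fixed-point arithmetic may round individual values slightly differently; in the application itself this work is done by `cvtColor`.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Weighted luminance of one pixel, rounded to the nearest integer.
std::uint8_t grayFromBGR(std::uint8_t b, std::uint8_t g, std::uint8_t r) {
    const double y = 0.299 * r + 0.587 * g + 0.114 * b;
    return static_cast<std::uint8_t>(y + 0.5);
}

// Converts an interleaved BGRA buffer (4 bytes per pixel) into a
// single-channel buffer (1 byte per pixel). The alpha byte is ignored.
std::vector<std::uint8_t> grayFromBGRA(const std::vector<std::uint8_t>& bgra) {
    std::vector<std::uint8_t> gray;
    gray.reserve(bgra.size() / 4);
    for (std::size_t i = 0; i + 3 < bgra.size(); i += 4) {
        gray.push_back(grayFromBGR(bgra[i], bgra[i + 1], bgra[i + 2]));
    }
    return gray;
}
```

The output buffer is a quarter of the size of the input, which is where the reduction in data comes from.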

Next, we use OpenCV’s implementation of the algorithm to extract all MSERs. Each MSER is then normalized by transforming its minimum bounding rectangle into a square. This step is important because the logo may be captured from different angles and distances, and normalizing increases tolerance to perspective distortion.
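The idea behind the normalization can be sketched without OpenCV. The application itself builds a perspective transformation matrix (see `GeometryUtil` later in the article); the simplified version below instead assumes the four corners form a true, non-degenerate rectangle listed in order around its perimeter, in which case projecting a point onto the two edges that meet at the first corner is enough. `Vec2`, `Rect4`, `mapToSquare`, and `sampleRectangle` are hypothetical names used only for this illustration.

```cpp
#include <array>
#include <cassert>

struct Vec2 {
    double x;
    double y;
};

using Rect4 = std::array<Vec2, 4>;

// Maps the point (x, y) from the coordinate frame of a rotated rectangle to
// a square with origin (0, 0) and the given side length. corners[0] maps to
// (0, 0), corners[1] to (side, 0), and corners[3] to (0, side).
// Assumes the corners describe a non-degenerate rectangle.
Vec2 mapToSquare(const Rect4& corners, double x, double y, double side) {
    const Vec2& origin = corners[0];
    const Vec2 edgeU = {corners[1].x - origin.x, corners[1].y - origin.y};
    const Vec2 edgeV = {corners[3].x - origin.x, corners[3].y - origin.y};
    const Vec2 rel = {x - origin.x, y - origin.y};
    // Fraction of the way along each edge, from 0 to 1 inside the rectangle.
    const double u = (rel.x * edgeU.x + rel.y * edgeU.y) /
                     (edgeU.x * edgeU.x + edgeU.y * edgeU.y);
    const double v = (rel.x * edgeV.x + rel.y * edgeV.y) /
                     (edgeV.x * edgeV.x + edgeV.y * edgeV.y);
    const Vec2 mapped = {u * side, v * side};
    return mapped;
}

// Hypothetical 4-by-2 rectangle, rotated by 90 degrees.
Rect4 sampleRectangle() {
    const Rect4 rect = {{{1.0, 1.0}, {1.0, 5.0}, {-1.0, 5.0}, {-1.0, 1.0}}};
    return rect;
}
```

Because both edges are rescaled to the same length, a logo seen in a stretched 4-by-2 box and one seen in a 2-by-4 box end up in the same square frame, which is what makes their features comparable.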

Furthermore, a number of properties are computed for each MSER:

- Number of holes

- Ratio of the area of MSER to the area of its convex hull

- Ratio of the area of MSER to the area of its minimum-area rectangle

- Ratio of the length of MSER skeleton to area of the MSER

- Ratio of the area of MSER to the area of its biggest contour
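As an example of how one of these properties can be computed, the following sketch calculates the ratio of a region's area to the area of its convex hull for a region described by its outline polygon. It is a self-contained approximation that uses the shoelace formula and Andrew's monotone chain algorithm; OpenCV offers `cv::contourArea` and `cv::convexHull` for the same purpose, and the article's own implementation may differ. The names `Pt`, `polygonArea`, `convexHull`, `solidity`, and `lShape` are assumptions for this illustration.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Pt {
    double x;
    double y;
};

// Cross product of vectors (a - o) and (b - o); positive for a left turn.
double cross(const Pt& o, const Pt& a, const Pt& b) {
    return (a.x - o.x) * (b.y - o.y) - (a.y - o.y) * (b.x - o.x);
}

// Area of a simple polygon given by its ordered vertices (shoelace formula).
double polygonArea(const std::vector<Pt>& poly) {
    double twice = 0.0;
    for (std::size_t i = 0; i < poly.size(); ++i) {
        const Pt& a = poly[i];
        const Pt& b = poly[(i + 1) % poly.size()];
        twice += a.x * b.y - b.x * a.y;
    }
    return std::fabs(twice) / 2.0;
}

// Convex hull via Andrew's monotone chain, in counter-clockwise order.
std::vector<Pt> convexHull(std::vector<Pt> pts) {
    std::sort(pts.begin(), pts.end(), [](const Pt& a, const Pt& b) {
        return a.x < b.x || (a.x == b.x && a.y < b.y);
    });
    if (pts.size() < 3) return pts;
    std::vector<Pt> hull(2 * pts.size());
    std::size_t k = 0;
    for (std::size_t i = 0; i < pts.size(); ++i) {  // lower hull
        while (k >= 2 && cross(hull[k - 2], hull[k - 1], pts[i]) <= 0) --k;
        hull[k++] = pts[i];
    }
    for (std::size_t i = pts.size() - 1, t = k + 1; i > 0; --i) {  // upper hull
        while (k >= t && cross(hull[k - 2], hull[k - 1], pts[i - 1]) <= 0) --k;
        hull[k++] = pts[i - 1];
    }
    hull.resize(k - 1);  // the last point repeats the first one
    return hull;
}

// Ratio of the region's area to the area of its convex hull (0 to 1).
double solidity(const std::vector<Pt>& contour) {
    const double hullArea = polygonArea(convexHull(contour));
    return hullArea > 0.0 ? polygonArea(contour) / hullArea : 0.0;
}

// Hypothetical L-shaped outline used as a test region.
std::vector<Pt> lShape() {
    return {{0, 0}, {2, 0}, {2, 1}, {1, 1}, {1, 2}, {0, 2}};
}
```

A convex shape such as a square has a ratio of exactly 1, while shapes with notches or concave corners score lower, so this single number already separates many candidate regions from the logo.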

To detect Toptal’s logo in an image, the properties of all the MSERs are compared to the properties already learned from the Toptal logo template. For the purpose of this tutorial, the maximum allowed difference for each property was chosen empirically.

Finally, the most similar region is chosen as the result.
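The matching step described above can be sketched as follows: reject any candidate whose properties differ from the template by more than the allowed maximum, then keep the candidate with the smallest summed difference. This is a simplified stand-in for the article's `MLManager`, not its actual code. The struct layout, the function names, and every numeric value in the `sample...` helpers are hypothetical and chosen only to make the example concrete.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdlib>
#include <vector>

struct Feature {
    int holes;             // number of holes
    double hullRatio;      // area / convex hull area
    double rectRatio;      // area / minimum-area rectangle area
    double skeletonRatio;  // skeleton length / area
    double contourRatio;   // area / biggest contour area
};

Feature makeFeature(int holes, double hull, double rect,
                    double skeleton, double contour) {
    const Feature f = {holes, hull, rect, skeleton, contour};
    return f;
}

// True if every property is within the allowed difference of the template.
bool isSimilar(const Feature& c, const Feature& logo, const Feature& maxDiff) {
    return std::abs(c.holes - logo.holes) <= maxDiff.holes &&
           std::fabs(c.hullRatio - logo.hullRatio) <= maxDiff.hullRatio &&
           std::fabs(c.rectRatio - logo.rectRatio) <= maxDiff.rectRatio &&
           std::fabs(c.skeletonRatio - logo.skeletonRatio) <= maxDiff.skeletonRatio &&
           std::fabs(c.contourRatio - logo.contourRatio) <= maxDiff.contourRatio;
}

// Sum of the absolute differences between a candidate and the template.
double distance(const Feature& c, const Feature& logo) {
    return std::abs(c.holes - logo.holes) +
           std::fabs(c.hullRatio - logo.hullRatio) +
           std::fabs(c.rectRatio - logo.rectRatio) +
           std::fabs(c.skeletonRatio - logo.skeletonRatio) +
           std::fabs(c.contourRatio - logo.contourRatio);
}

// Index of the most similar accepted candidate, or -1 if none is accepted.
int bestMatch(const Feature& logo, const Feature& maxDiff,
              const std::vector<Feature>& candidates) {
    int best = -1;
    double bestDistance = 0.0;
    for (std::size_t i = 0; i < candidates.size(); ++i) {
        if (!isSimilar(candidates[i], logo, maxDiff)) continue;
        const double d = distance(candidates[i], logo);
        if (best < 0 || d < bestDistance) {
            best = static_cast<int>(i);
            bestDistance = d;
        }
    }
    return best;
}

// Hypothetical template, tolerances, and candidates (values are made up).
Feature sampleTemplate() { return makeFeature(0, 0.70, 0.60, 0.05, 0.90); }
Feature sampleTolerance() { return makeFeature(0, 0.10, 0.10, 0.02, 0.10); }
std::vector<Feature> sampleCandidates() {
    std::vector<Feature> c;
    c.push_back(makeFeature(2, 0.70, 0.60, 0.05, 0.90));  // wrong hole count
    c.push_back(makeFeature(0, 0.72, 0.58, 0.05, 0.88));  // close match
    c.push_back(makeFeature(0, 0.78, 0.65, 0.06, 0.95));  // accepted, farther
    return c;
}
```

Splitting the decision into a hard accept/reject test and a separate distance keeps obviously wrong regions out of the ranking, so a frame with no plausible candidate yields no detection rather than a poor one.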

iOS Application

Using OpenCV from iOS is easy. If you haven’t done it yet, here is a quick outline of the steps involved in setting up Xcode to create an iOS application and use OpenCV in it:

- Create a new project named “SuperCool Logo Detector.” As the language, leave Objective-C selected.

- Add a new Prefix Header (.pch) file and name it PrefixHeader.pch.

- Go into the “SuperCool Logo Detector” project’s build target and, in the Build Settings tab, find the “Prefix Headers” setting. You can find it in the LLVM Language section, or use the search feature.

- Add “PrefixHeader.pch” to the Prefix Headers setting.

- At this point, if you haven’t installed OpenCV for iOS 2.4.11, do it now.

- Drag-and-drop the downloaded framework into the project. Check “Linked Frameworks and Libraries” in your Target Settings. (It should be added automatically, but better to be safe.)

- Additionally, link the following frameworks:

  - AVFoundation

  - AssetsLibrary

  - CoreMedia

- Open “PrefixHeader.pch” and add the following 3 lines:

      #ifdef __cplusplus
      #include <opencv2/opencv.hpp>
      #endif

- Change the extensions of the automatically created code files from “.m” to “.mm”. OpenCV is written in C++, and the *.mm extension tells Xcode that the file contains Objective-C++.

- Import “opencv2/highgui/cap_ios.h” in ViewController.h and change ViewController to conform to the protocol CvVideoCameraDelegate:

      #import <opencv2/highgui/cap_ios.h>

- Open Main.storyboard and put a UIImageView on the initial view controller.

- Make an outlet to ViewController.mm named “imageView”.

- Create a variable “CvVideoCamera *camera;” in ViewController.h or ViewController.mm, and initialize it with a reference to the rear camera:

      camera = [[CvVideoCamera alloc] initWithParentView: _imageView];
      camera.defaultAVCaptureDevicePosition = AVCaptureDevicePositionBack;
      camera.defaultAVCaptureSessionPreset = AVCaptureSessionPreset640x480;
      camera.defaultAVCaptureVideoOrientation = AVCaptureVideoOrientationPortrait;
      camera.defaultFPS = 30;
      camera.grayscaleMode = NO;
      camera.delegate = self;

- If you build the project now, Xcode will warn you that you didn’t implement the “processImage” method from CvVideoCameraDelegate. For now, and for the sake of simplicity, we will just acquire the images from the camera and overlay them with some simple text.

- Add a single line to “viewDidAppear”:

      [camera start];

- Now, if you run the application, it will ask you for permission to access the camera. You should then see video from the camera.

- In the “processImage” method, add the following two lines:

      const char* str = [@"Toptal" cStringUsingEncoding: NSUTF8StringEncoding];
      cv::putText(image, str, cv::Point(100, 100), CV_FONT_HERSHEY_PLAIN, 2.0, cv::Scalar(0, 0, 255));

That is pretty much it. Now you have a very simple application that draws the text “Toptal” on images from the camera. We can now build our target logo-detecting application on top of this simpler one. For brevity, in this article we will discuss only a handful of code segments that are critical to understanding how the application works overall. The code on GitHub has a fair amount of comments to explain what each segment does.

Since the application has only one purpose, to detect Toptal’s logo, as soon as it is launched, MSER features are extracted from the given template image and the values are stored in memory:

    // Convert the template image to a cv::Mat
    cv::Mat logo = [ImageUtils cvMatFromUIImage: templateImage];

    // Get the grayscale image
    cv::Mat gray;
    cvtColor(logo, gray, CV_BGRA2GRAY);

    // Find the MSER with the maximum area
    std::vector<cv::Point> maxMser = [ImageUtils maxMser: &gray];

    // Get the 4 vertices of the minimum-area rectangle around that MSER
    cv::RotatedRect rect = cv::minAreaRect(maxMser);
    cv::Point2f points[4];
    rect.points(points);

    // Normalize the image
    cv::Mat M = [GeometryUtil getPerspectiveMatrix: points toSize: rect.size];
    cv::Mat normalizedImage = [GeometryUtil normalizeImage: &gray withTranformationMatrix: &M withSize: rect.size.width];

    // Get the largest MSER from the normalized image
    std::vector<cv::Point> normalizedMser = [ImageUtils maxMser: &normalizedImage];

    // Remember the template
    self.logoTemplate = [[MSERManager sharedInstance] extractFeature: &normalizedMser];

    // Store the feature
    [self storeTemplate];

The application has only one screen with a Start/Stop button, and all necessary information, such as the FPS and the number of detected MSERs, is drawn automatically on the image. As long as the application is not stopped, the following processImage method is invoked for every frame coming from the camera:

-(void)processImage:(cv::Mat &)image

{

cv::Mat gray;

cvtColor(image, gray, CV_BGRA2GRAY);

std::vector<std::vector<cv::Point>> msers;

[[MSERManager sharedInstance] detectRegions: gray intoVector: msers];

if (msers.size() == 0) { return; };

std::vector<cv::Point> *bestMser = nil;

double bestPoint = 10.0;

std::for_each(msers.begin(), msers.end(), [&] (std::vector<cv::Point> &mser)

{

MSERFeature *feature = [[MSERManager sharedInstance] extractFeature: &mser];

if(feature != nil)

{

if([[MLManager sharedInstance] isToptalLogo: feature] )

{

double tmp = [[MLManager sharedInstance] distance: feature ];

if ( bestPoint > tmp ) {

bestPoint = tmp;

bestMser = &mser;

}

}

}

});

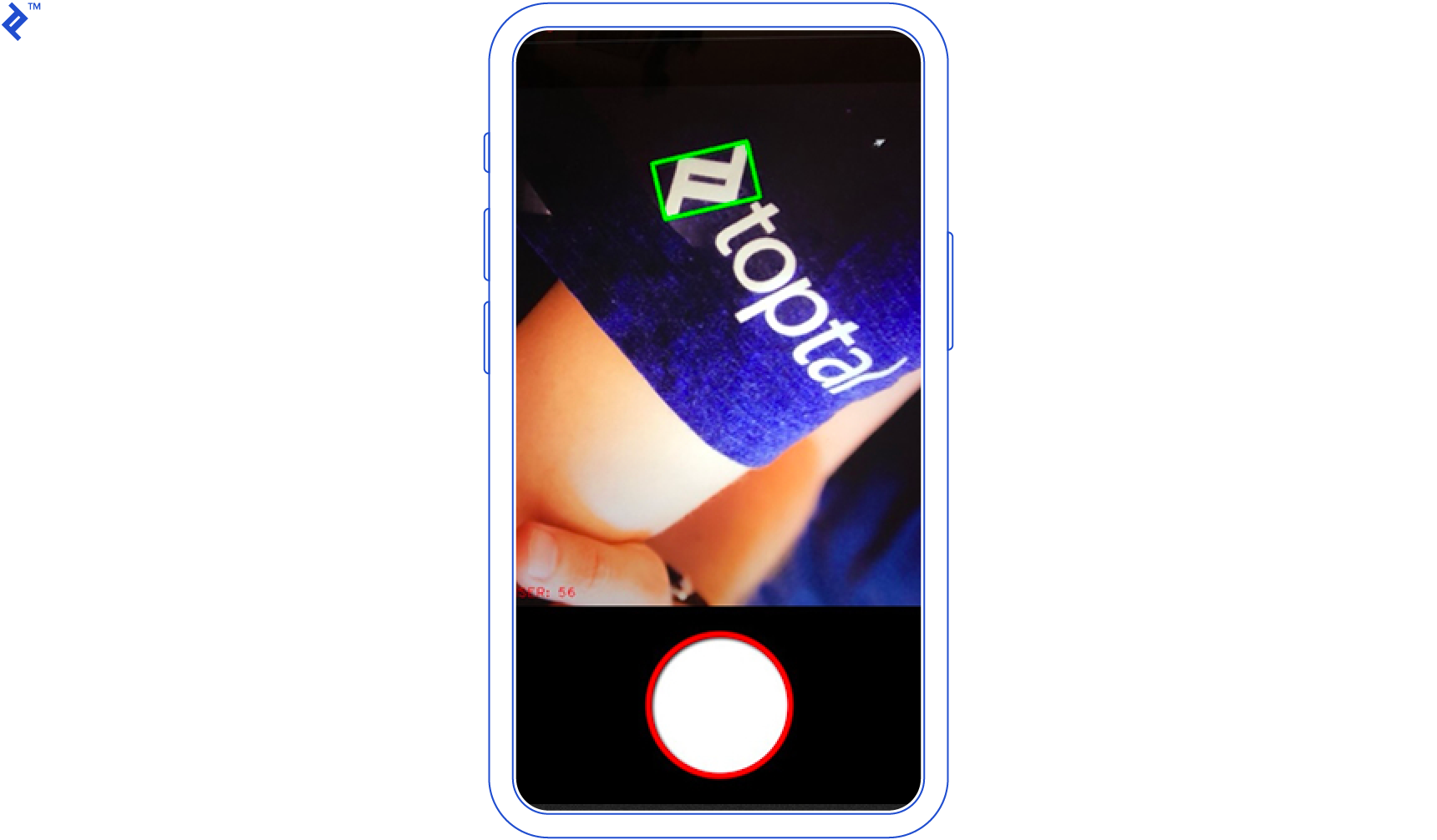

if (bestMser)

{

NSLog(@"minDist: %f", bestPoint);

cv::Rect bound = cv::boundingRect(*bestMser);

cv::rectangle(image, bound, GREEN, 3);

}

else

{

cv::rectangle(image, cv::Rect(0, 0, W, H), RED, 3);

}

// Omitted debug code

[FPS draw: image];

}

This method, in essence, creates a grayscale copy of the original image. It identifies all MSERs and extracts their relevant features, scores each MSER for similarity with the template and picks the best one. Finally, it draws a green boundary around the best MSER and overlays the image with meta information.

Below are the definitions of a few important classes, and their methods, in this application. Their purposes are described within comments.

GeometryUtil.h

    /*
     This static class provides perspective transformation functions
     */
    @interface GeometryUtil : NSObject

    /*
     Returns the perspective transformation matrix that maps the given points
     to a square with origin [0,0] and size (size.width, size.width)
     */
    + (cv::Mat) getPerspectiveMatrix: (cv::Point2f[]) points toSize: (cv::Size2f) size;

    /*
     Returns a new, perspectively transformed image with the given size
     */
    + (cv::Mat) normalizeImage: (cv::Mat *) image withTranformationMatrix: (cv::Mat *) M withSize: (float) size;

    @end

MSERManager.h

    /*
     Singleton class providing functions related to MSERs
     */
    @interface MSERManager : NSObject

    + (MSERManager *) sharedInstance;

    /*
     Extracts all MSERs into the provided vector
     */
    - (void) detectRegions: (cv::Mat &) gray intoVector: (std::vector<std::vector<cv::Point>> &) vector;

    /*
     Extracts the feature from the MSER. For some MSERs the feature can be nil!
     */
    - (MSERFeature *) extractFeature: (std::vector<cv::Point> *) mser;

    @end

MLManager.h

    /*
     This singleton class wraps the object recognition functions
     */
    @interface MLManager : NSObject

    + (MLManager *) sharedInstance;

    /*
     Stores the feature from the biggest MSER in the templateImage
     */
    - (void) learn: (UIImage *) templateImage;

    /*
     Sum of the differences between the logo feature and the given feature
     */
    - (double) distance: (MSERFeature *) feature;

    /*
     Returns true if the given feature is similar to the one learned from the template
     */
    - (BOOL) isToptalLogo: (MSERFeature *) feature;

    @end

After everything is wired together, with this application, you should be able to use the camera of your iOS device to detect Toptal’s logo from different angles and orientations.

Conclusion

In this article we have shown how easy it is to detect simple objects in an image using OpenCV. The entire code is available on GitHub. Feel free to fork it and send pull requests, as contributions are welcome.

As is true for any machine learning problem, the success rate of the logo detection in this application may be increased by using a different set of features and a different method for object classification. However, I hope that this article will help you get started with object detection using MSER and with applications of computer vision techniques in general.

Further Reading

- Matas, J.; Chum, O.; Urban, M.; Pajdla, T. (2002). “Robust wide baseline stereo from maximally stable extremal regions.”

- Neumann, Lukas; Matas, Jiri (2011). “A Method for Text Localization and Recognition in Real-World Images.”
