Advancing AI Image Labeling and Semantic Metadata Collection

Image labeling can be a tedious, time-consuming task, compounded by the sheer volume of data needed to train deep neural networks. This article breaks down large data set processing and explains how a new SaaS product can help automate image labeling.

Neven Pičuljan

Ask an AI Engineer: Trending Questions About Artificial Intelligence

In this ask-me-anything-style Q&A, leading Toptal AI developer Joao Diogo de Oliveira fields questions from fellow engineers about resources for pivoting to ML, approaches to large language models, and the most critical future applications of AI.

Joao Diogo de Oliveira

Ask an NLP Engineer: From GPT Models to the Ethics of AI

Want to expand your skills amid the current surge of revolutionary language models like GPT-4? In this ask-me-anything-style tutorial, Toptal data scientist and AI engineer Daniel Pérez Rubio fields questions from fellow programmers on a wide range of machine learning, natural language processing, and artificial intelligence topics.

Daniel Pérez Rubio

Identifying the Unknown With Clustering Metrics

Clustering in machine learning has a variety of applications, but how do you know which algorithm is best suited to your data? Here’s how to amplify your data insights with comparison metrics, including the F-measure.

Surbhi Gupta

Ensemble Methods: The Kaggle Machine Learning Champion

Two heads are better than one. This proverb describes the concept behind ensemble methods in machine learning. Let’s examine why ensembles dominate ML competitions and what makes them so powerful.

Juan Manuel Ortiz de Zarate

Machine Learning Number Recognition: From Zero to Application

Harnessing the potential of machine learning for computer vision is not a new concept, but recent advances and the availability of new tools and datasets have made it more accessible to developers.

In this article, Toptal Software Developer Teimur Gasanov demonstrates how you can create an app capable of identifying handwritten digits in under 30 minutes, including the API and UI.

Teimur Gasanov

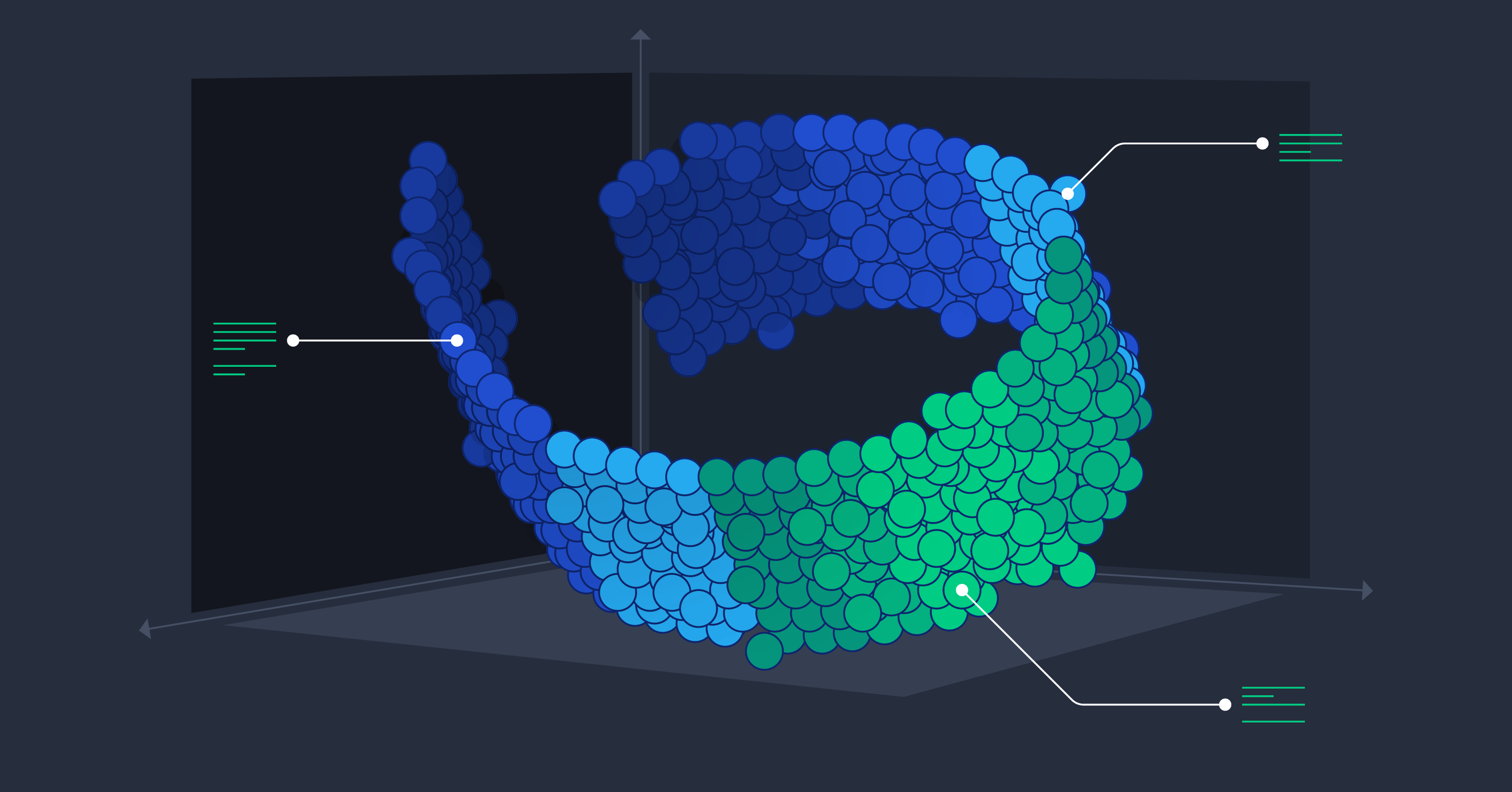

Embeddings in Machine Learning: Making Complex Data Simple

Working with non-numerical data can be challenging, even for seasoned data scientists. To make good use of such data, it needs to be transformed. But how?

In this article, Toptal Data Scientist Yaroslav Kopotilov will introduce you to embeddings and demonstrate how they can be used to visualize complex data and make it usable.

Yaroslav Kopotilov

The Many Applications of Gradient Descent in TensorFlow

TensorFlow is one of the leading tools for training deep learning models. Outside that space, it may seem intimidating and unnecessary, but it has many creative uses—like producing highly effective adversarial input for black-box AI systems.

Alan Reiner

Getting the Most Out of Pre-trained Models

Pre-trained models are making waves in the deep learning world. Using massive pre-training datasets, these NLP models bring previously unheard-of feats of AI within the reach of app developers.

Nauman Mustafa
