Benchmarking A Node.js Promise

You can just write sequentially executed code in JavaScript, but should you?

In this article, Toptal Freelance JavaScript Developer Omar Waleed tests the widespread Node.js belief that synchronous code degrades performance and is, in a sense, just plain evil. Is this really true?

Omar is a full-stack developer and architect with over 4 years of experience working with companies of varying sizes.

We are living in a brave new world. A world filled with JavaScript. In recent years, JavaScript has dominated the web, taking the whole industry by storm. After the introduction of Node.js, the JavaScript community was able to use the simplicity and dynamism of the language to make it the one language for everything: server side, client side, and even, boldly, machine learning. But JavaScript has changed drastically as a language over the past few years. New concepts have been introduced that were never there before, like arrow functions and promises.

Ah, promises. The whole concept of a promise and callback didn’t make much sense to me when I first started learning Node.js. I was used to the procedural way of executing code, but in time I understood why it was important.

This brings us to the question: why were callbacks and promises introduced anyway? Why can’t we just write sequentially executed code in JavaScript?

Well, technically you can. But should you?

In this article, I will give a brief introduction to JavaScript and its runtime and, more importantly, test the widespread belief in the JavaScript community that synchronous code is sub-par in performance, is in a sense just plain evil, and should never be used. Is this myth really true?

This article assumes you’re already familiar with promises in JavaScript. If you aren’t, or need a refresher, please see JavaScript Promises: A Tutorial with Examples.

N.B. The code in this article was tested in a Node.js environment, not a plain JavaScript one, running Node.js version 10.14.2. All benchmarks and syntax rely heavily on Node.js. Tests were run on a 2018 MacBook Pro with an 8th-generation quad-core Intel i5 processor at a base clock speed of 2.3 GHz.

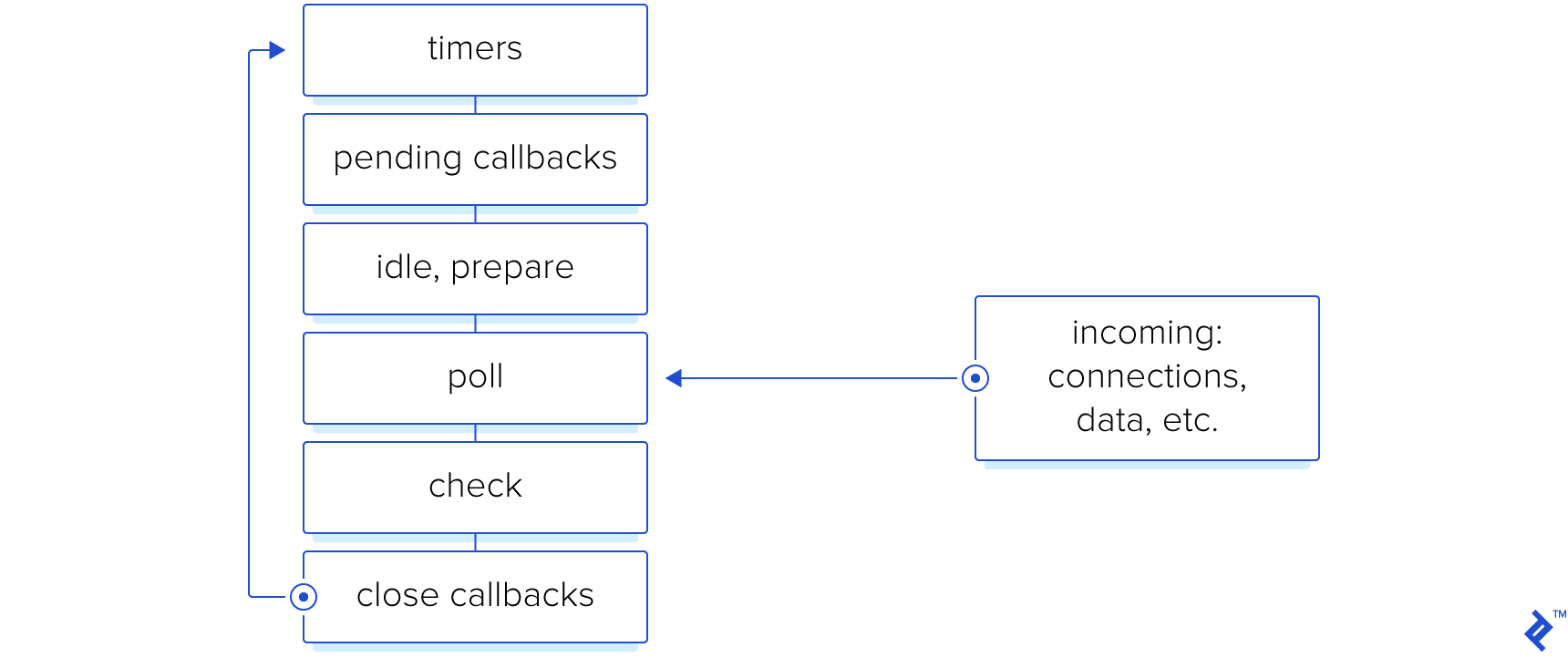

The Event Loop

The problem with writing JavaScript is that the language itself is single-threaded. This means that you can’t execute more than one procedure at a time, unlike other languages such as Go or Ruby, which can spawn threads and execute multiple procedures at the same time, either on kernel threads or on process threads.

To execute code, JavaScript relies on a mechanism called the event loop, which is composed of multiple phases. The JavaScript process goes through each phase and, at the end, starts all over again. You can read more about the details in the official Node.js guide to the event loop.
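To see the ordering in practice, here is a small sketch that queues callbacks of different kinds and records when each one runs. The `order` array and the 20 ms wait are illustrative choices and are not part of the benchmarks below. Synchronous code always finishes first. Node.js then drains the `process.nextTick()` and promise (microtask) queues. Timer and `setImmediate()` callbacks run in later phases of the loop, and when both are scheduled from the main module, their relative order is not guaranteed.

```javascript
// Records the order in which different kinds of queued callbacks run.
const order = [];

setTimeout(() => order.push('timeout'), 0);          // timers phase
setImmediate(() => order.push('immediate'));         // check phase
Promise.resolve().then(() => order.push('promise')); // promise microtask queue
process.nextTick(() => order.push('nextTick'));      // next-tick queue
order.push('sync');                                  // runs right now

// Give the loop time to run every callback above, then report the order.
const finished = new Promise((resolve) => setTimeout(() => resolve(order), 20));
finished.then((result) => console.log(result.join(' -> ')));
```

In a CommonJS script like the ones in this article, `nextTick` callbacks run before promise callbacks. The last two entries may appear in either order.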

But JavaScript has something up its sleeve to fight the blocking problem: I/O callbacks.

In most real-life cases where we would create a thread, we are requesting an action that the language itself is not responsible for, such as fetching some data from a database. In multithreaded languages, the thread that created the request simply hangs, waiting for the response from the database. This is a waste of resources. It also puts the burden of choosing the right number of threads for a thread pool on the developer, to prevent memory leaks and the allocation of too many resources when the app is under high demand.

JavaScript excels at one thing more than any other: handling I/O operations. JavaScript allows you to start an I/O operation such as requesting data from a database, reading a file into memory, writing a file to disk, or executing a shell command. When the operation is complete, a callback is executed. Or, in the case of promises, the promise is resolved with the result or rejected with an error.
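Both styles can be sketched with Node’s `fs` module. `fs.readFile` takes a callback, while `fs.promises.readFile` returns a promise. The temporary file name below is a hypothetical choice made for this example. To make the two results easy to compare, the callback version is also wrapped in a promise by hand.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical sample file, written to the OS temp directory for this sketch.
const samplePath = path.join(os.tmpdir(), 'promise-demo.txt');
fs.writeFileSync(samplePath, 'hello from disk');

// Callback style: Node.js calls the function once the read has finished,
// passing an error (or null) first and the data second.
const viaCallback = new Promise((resolve, reject) => {
  fs.readFile(samplePath, 'utf8', (err, data) => {
    if (err) {
      reject(err);
      return;
    }
    console.log('callback got:', data);
    resolve(data);
  });
});

// Promise style: resolves with the file contents or rejects with an error.
const viaPromise = fs.promises.readFile(samplePath, 'utf8').then((data) => {
  console.log('promise got:', data);
  return data;
});
```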

The JavaScript community always advises us to never, ever use synchronous code when doing I/O operations. The well-known reason is that we do NOT want to block our code from running other tasks. Since JavaScript is single-threaded, if we have a piece of code that reads a file synchronously, it will block the whole process until the read is complete. If we rely on asynchronous code instead, we can start multiple I/O operations and handle the response of each one individually when it completes. No blocking whatsoever.
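One quick way to observe this blocking is to schedule a 0 ms timer and then keep the thread busy. In this sketch, a busy-wait loop stands in for a long synchronous operation such as a large `readFileSync` call. The 50 ms duration is an arbitrary choice.

```javascript
const start = Date.now();

// Asked to run "as soon as possible" (0 ms); resolves with the real delay.
const timerDelay = new Promise((resolve) => {
  setTimeout(() => resolve(Date.now() - start), 0);
});

// Stand-in for a long synchronous operation: a busy loop that keeps the
// single JavaScript thread occupied for about 50 ms.
while (Date.now() - start < 50) {
  // spin
}

timerDelay.then((ms) => console.log(`0 ms timer actually fired after ${ms} ms`));
```

The timer cannot fire until the loop returns control to the event loop, so the reported delay is at least 50 ms.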

But surely, in an environment where we don’t care at all about handling a lot of processes, choosing synchronous or asynchronous code makes no difference at all, right?

Benchmark

The test we’re going to run aims to measure how fast sync and async code run, and whether there is a difference in performance.

I decided to choose reading a file as the I/O operation to test.

First, I wrote a snippet that writes a file filled with random bytes, generated with the Node.js crypto module and encoded as Base64.

```javascript
const fs = require('fs');
const crypto = require('crypto');

// Write 2048 random bytes, Base64-encoded, to ./test.txt
fs.writeFileSync("./test.txt", crypto.randomBytes(2048).toString('base64'));
```
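The file on disk is larger than 2048 bytes. Base64 turns every 3 input bytes into 4 output characters and pads the final group, so the length is ⌈2048 / 3⌉ × 4 = 683 × 4 = 2732 characters. The `base64Length` helper below is ours, added for illustration:

```javascript
const crypto = require('crypto');

// Base64 encodes each group of 3 bytes as 4 characters, padding the last group.
function base64Length(byteCount) {
  return Math.ceil(byteCount / 3) * 4;
}

const encoded = crypto.randomBytes(2048).toString('base64');
console.log(encoded.length);     // 2732
console.log(base64Length(2048)); // 2732
```

So each test file holds 2,732 bytes of ASCII text, regardless of which random bytes were drawn.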

This file acts as a constant input for our next step, which is reading it. Here’s the code:

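```javascript
const fs = require('fs');

// Log any promise rejection that nobody handled.
process.on('unhandledRejection', (err) => {
  console.error(err);
});

// Read the file synchronously: the thread waits until the read completes.
function synchronous() {
  console.time("sync");
  fs.readFileSync("./test.txt");
  console.timeEnd("sync");
}

// Read the same file through the promise-based fs API.
async function asynchronous() {
  console.time("async");
  let p0 = fs.promises.readFile("./test.txt");
  await Promise.all([p0]);
  console.timeEnd("async");
}

synchronous();
asynchronous();
```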

Running this code produced the following results:

| Run # | Sync | Async | Async/Sync Ratio |
|---|---|---|---|
| 1 | 0.278ms | 3.829ms | 13.773 |
| 2 | 0.335ms | 3.801ms | 11.346 |
| 3 | 0.403ms | 4.498ms | 11.161 |

This was unexpected. My initial expectation was that they would take the same time. Well, how about we add another file and read 2 files instead of 1?

I duplicated the generated file test.txt and named the copy test2.txt. Here’s the updated code:

```javascript
// Updated versions of the two functions; the rest of the script is unchanged.
function synchronous() {
  console.time("sync");
  fs.readFileSync("./test.txt");
  fs.readFileSync("./test2.txt");
  console.timeEnd("sync");
}

async function asynchronous() {
  console.time("async");
  // Both reads are started before either one is awaited.
  let p0 = fs.promises.readFile("./test.txt");
  let p1 = fs.promises.readFile("./test2.txt");
  await Promise.all([p0, p1]);
  console.timeEnd("async");
}
```

I simply added another read to each function. In the async version, both reads are started before they are awaited together, so they should run in parallel. These were the results:

| Run # | Sync | Async | Async/Sync Ratio |
|---|---|---|---|
| 1 | 1.659ms | 6.895ms | 4.156 |
| 2 | 0.323ms | 4.048ms | 12.533 |
| 3 | 0.324ms | 4.017ms | 12.398 |
| 4 | 0.333ms | 4.271ms | 12.826 |

The first run has completely different values from the 3 runs that follow. My guess is that this is related to the JavaScript JIT compiler, which optimizes the code as it runs.
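One way to reduce the influence of one-time costs is to run a few untimed warm-up iterations and then average many timed runs. Such costs include JIT warm-up and, for the async path, libuv’s thread pool, which is started lazily on first use. The sketch below does this with `process.hrtime.bigint()`, which is available from Node.js 10.7. The temp-file path and the iteration counts are arbitrary choices. The numbers it prints depend entirely on your machine, so none are claimed here.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');
const crypto = require('crypto');

// Hypothetical test file, generated the same way as ./test.txt above.
const file = path.join(os.tmpdir(), 'bench-test.txt');
fs.writeFileSync(file, crypto.randomBytes(2048).toString('base64'));

// Mean duration of `runs` sequential calls to `fn`, in milliseconds.
// If `fn` returns a promise, the timing waits for it to settle.
async function meanMs(fn, runs) {
  let total = 0n;
  for (let i = 0; i < runs; i++) {
    const t0 = process.hrtime.bigint();
    const result = fn();
    if (result instanceof Promise) {
      await result;
    }
    total += process.hrtime.bigint() - t0;
  }
  return Number(total / BigInt(runs)) / 1e6;
}

async function main() {
  const readSync = () => fs.readFileSync(file);
  const readAsync = () => fs.promises.readFile(file);

  // Untimed warm-up: pays one-time costs outside the measurement.
  await meanMs(readSync, 20);
  await meanMs(readAsync, 20);

  const syncMs = await meanMs(readSync, 1000);
  const asyncMs = await meanMs(readAsync, 1000);
  console.log(`sync: ${syncMs.toFixed(4)} ms, async: ${asyncMs.toFixed(4)} ms`);
  return { syncMs, asyncMs };
}

const results = main();
```

Timing the sync call without an `await` keeps the extra microtask tick out of the synchronous measurement.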

So, things aren’t looking so good for async functions. Maybe if we make things more dynamic and stress the app a bit more, we will get a different result.

So my next test involves writing 100 different files and then reading them all.

First, I modified the code to write 100 files before running the test. The files are different on each run, although they keep the same size, so we clear out the old files before each run.

Here’s the updated code:

```javascript
const fs = require('fs');
const crypto = require('crypto');

let filePaths = [];

// Write one file with a random name and 2048 random bytes (Base64-encoded).
function writeFile() {
  let filePath = `./files/${crypto.randomBytes(6).toString('hex')}.txt`;
  fs.writeFileSync(filePath, crypto.randomBytes(2048).toString('base64'));
  filePaths.push(filePath);
}

// Read every file, one after another, blocking until each read finishes.
function synchronous() {
  console.time("sync");
  filePaths.forEach((filePath) => {
    fs.readFileSync(filePath);
  });
  console.timeEnd("sync");
}

// Start every read at once, then wait for all of them to complete.
async function asynchronous() {
  console.time("async");
  let promiseArray = [];
  filePaths.forEach((filePath) => {
    promiseArray.push(fs.promises.readFile(filePath));
  });
  await Promise.all(promiseArray);
  console.timeEnd("async");
}
```

And for cleanup and execution:

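```javascript
// Make sure the directory exists before listing it; readdirSync throws otherwise.
if (!fs.existsSync("./files")) {
  fs.mkdirSync("./files");
}

// Remove the files left over from the previous run.
let oldFiles = fs.readdirSync("./files");
oldFiles.forEach((file) => {
  fs.unlinkSync("./files/" + file);
});

// Generate 100 fresh files, then run both benchmarks.
for (let index = 0; index < 100; index++) {
  writeFile();
}

synchronous();
asynchronous();
```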

Let’s run it. Here are the results:

| Run # | Sync | Async | Async/Sync Ratio |
|---|---|---|---|
| 1 | 4.999ms | 12.890ms | 2.579 |
| 2 | 5.077ms | 16.267ms | 3.204 |
| 3 | 5.241ms | 14.571ms | 2.780 |
| 4 | 5.086ms | 16.334ms | 3.213 |

These results start to point toward a conclusion: as demand or concurrency increases, the overhead of promises starts to make sense. For example, suppose we are running a web server that is supposed to handle hundreds or even thousands of requests per second. In that case, performing I/O operations synchronously will lose its advantage quite fast.
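To make the concurrency point concrete, here is a sketch that counts how many times the event loop runs a `setImmediate()` "heartbeat" while 100 files are read. The heartbeat stands in for other requests a server could be serving. The `heartbeatsDuring` helper and the scratch directory are hypothetical. With synchronous reads the count is always zero, because nothing else can run until the `readFileSync` loop returns. With `fs.promises`, the heartbeat keeps running while the reads are in flight.

```javascript
const fs = require('fs');
const os = require('os');
const path = require('path');
const crypto = require('crypto');

// Hypothetical scratch directory holding 100 random files, as in the test above.
const dir = fs.mkdtempSync(path.join(os.tmpdir(), 'heartbeat-'));
const filePaths = [];
for (let i = 0; i < 100; i++) {
  const filePath = path.join(dir, `${i}.txt`);
  fs.writeFileSync(filePath, crypto.randomBytes(2048).toString('base64'));
  filePaths.push(filePath);
}

// Counts setImmediate callbacks that get to run while `work` is in progress.
async function heartbeatsDuring(work) {
  let beats = 0;
  let running = true;
  const beat = () => {
    if (!running) return;
    beats++;
    setImmediate(beat);
  };
  setImmediate(beat);
  await work();
  running = false;
  return beats;
}

async function main() {
  const syncBeats = await heartbeatsDuring(() => {
    filePaths.forEach((p) => fs.readFileSync(p));
  });
  const asyncBeats = await heartbeatsDuring(() =>
    Promise.all(filePaths.map((p) => fs.promises.readFile(p)))
  );
  console.log(`heartbeats during sync reads: ${syncBeats}`);
  console.log(`heartbeats during async reads: ${asyncBeats}`);
  return { syncBeats, asyncBeats };
}

const beatCounts = main();
```

The async version takes longer in total, as the tables show. However, the thread stays available for other work during the reads.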

Just for the sake of experimentation, let’s see whether the problem lies with promises themselves or with something else. For that, I wrote two functions. The first measures the time to resolve a single promise that does absolutely nothing. The second measures the time to resolve 100 empty promises.

Here’s the code:

```javascript
// Time how long a single, immediately resolved promise takes to settle.
function promiseRun() {
  console.time("promise run");
  return new Promise((resolve) => resolve())
    .then(() => console.timeEnd("promise run"));
}

// Time how long 100 immediately resolved promises take to settle together.
function hundredPromiseRuns() {
  let promiseArray = [];
  console.time("100 promises");
  for (let i = 0; i < 100; i++) {
    promiseArray.push(new Promise((resolve) => resolve()));
  }
  return Promise.all(promiseArray).then(() => console.timeEnd("100 promises"));
}

promiseRun();
hundredPromiseRuns();
```

| Run # | Single Promise | 100 Promises |
|---|---|---|
| 1 | 1.651ms | 3.293ms |
| 2 | 0.758ms | 2.575ms |
| 3 | 0.814ms | 3.127ms |
| 4 | 0.788ms | 2.623ms |
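One caveat about this measurement: as written, the "promise run" timer keeps ticking while the second function creates its 100 promises. This happens because the `.then()` callback of the first promise cannot run until the current script has finished. The sketch below isolates the cost of a resolved promise by averaging over many iterations. The iteration count is an arbitrary choice.

```javascript
// Average cost of creating and awaiting one already-resolved promise, in nanoseconds.
async function averagePromiseCostNs(iterations) {
  const start = process.hrtime.bigint();
  for (let i = 0; i < iterations; i++) {
    await new Promise((resolve) => resolve());
  }
  return Number(process.hrtime.bigint() - start) / iterations;
}

const promiseCost = averagePromiseCostNs(100000).then((ns) => {
  console.log(`about ${ns.toFixed(0)} ns per resolved promise`);
  return ns;
});
```

If the per-promise figure on your machine is small compared with the timings in the tables, that points the same way as the conclusion below.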

Interesting. It appears that promises are not the main cause of the delay. This makes me guess that the source of the delay is the threads doing the actual reading (Node.js hands file-system operations off to libuv’s thread pool). It would take more experimentation to reach a decisive conclusion about the main reason behind the delay.
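One possible follow-up experiment, sketched under assumptions, builds on how Node.js handles file reads. It runs file-system work on libuv’s thread pool, whose size is read from the `UV_THREADPOOL_SIZE` environment variable (default 4). This sketch launches the 100-file async read in child processes with different pool sizes, to check whether the number of reading threads affects the delay. The child script, the pool sizes, and the temp directories are illustrative choices, and no results are implied. A single run per size is noisy, so repeat it several times.

```javascript
const { spawnSync } = require('child_process');

// Script run in each child: write 100 random files, then time reading them all
// with fs.promises and print the elapsed milliseconds on stdout.
const childScript = `
  const fs = require('fs');
  const os = require('os');
  const path = require('path');
  const crypto = require('crypto');
  const dir = fs.mkdtempSync(path.join(os.tmpdir(), 'pool-'));
  const files = [];
  for (let i = 0; i < 100; i++) {
    const file = path.join(dir, i + '.txt');
    fs.writeFileSync(file, crypto.randomBytes(2048).toString('base64'));
    files.push(file);
  }
  const t0 = process.hrtime.bigint();
  Promise.all(files.map((f) => fs.promises.readFile(f))).then(() => {
    console.log(Number(process.hrtime.bigint() - t0) / 1e6);
  });
`;

// Pool sizes to compare (arbitrary picks; 4 is libuv's default).
const poolSizes = [1, 4, 16];

const timings = poolSizes.map((size) => {
  const child = spawnSync(process.execPath, ['-e', childScript], {
    env: Object.assign({}, process.env, { UV_THREADPOOL_SIZE: String(size) }),
    encoding: 'utf8',
  });
  const ms = parseFloat(child.stdout);
  console.log(`UV_THREADPOOL_SIZE=${size}: ${ms.toFixed(3)} ms`);
  return { size, ms };
});
```

Setting the variable through the child’s environment, rather than assigning `process.env` mid-script, ensures it is in place before the pool starts.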

A Final Word

So should you use promises or not? My opinion would be the following:

If you’re writing a script that will run on a single machine with a specific flow, triggered by a pipeline or a single user, then go with sync code. If you’re writing a web server that will be responsible for handling a lot of traffic and requests, then async execution will be worth its overhead and will outperform sync code.

You can find the code for all the functions in this article in the repository.

The logical next step on your JavaScript Developer journey, from promises, is the async/await syntax. If you’d like to learn more about it, and how we got here, see Asynchronous JavaScript: From Callback Hell to Async and Await.

Understanding the basics

What is a Node.js promise?

A promise may at first glance look like syntactic sugar for a callback, but it isn’t. A promise can serve the same purpose as a callback, but underneath it works quite differently. You can read more about this at https://www.promisejs.org/
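One of those differences fits in a few lines. A promise settles exactly once, and later calls to `resolve` or `reject` are ignored. A handler attached after the promise has settled still receives its value. A plain callback carries no such guarantees: it is simply a function that whoever holds it may call any number of times.

```javascript
// A promise settles once; subsequent resolve/reject calls have no effect.
const settled = new Promise((resolve, reject) => {
  resolve('first');
  resolve('second');             // ignored
  reject(new Error('too late')); // ignored
});

const seen = [];

// Handlers attached right away and 10 ms later both receive the same value.
settled.then((value) => seen.push(`early: ${value}`));
const late = new Promise((done) => {
  setTimeout(() => {
    settled.then((value) => {
      seen.push(`late: ${value}`);
      done(seen);
    });
  }, 10);
});

late.then((result) => console.log(result)); // [ 'early: first', 'late: first' ]
```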

What is a promise in JavaScript?

It’s exactly the same thing as in Node.js. Node.js is just JavaScript running outside the browser, in an environment using V8, Google Chrome’s very own JavaScript engine.

What is the use of a callback function in JavaScript?

JavaScript is a single-threaded language. Since it can’t spawn more threads, waiting for an I/O operation to finish would block the execution of the main (and only) thread. That is why it has what is called a callback: a function passed to an I/O operation that gets called when that operation has completed.

Is callback a keyword in JavaScript?

No. A callback is a concept.

What is an async function?

An async function is a function that returns a promise. What makes this type of function special is that you can use the `await` keyword inside it. `await` pauses the execution of the function until a promise settles, without having to call `then` to retrieve the value from the promise.
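The sketch below has two parts. First, it writes the same logic with `.then()` and with `await`. Second, it contrasts awaiting promises one at a time with starting them together and awaiting them with `Promise.all`, as the two-file benchmark in this article does. The `delay` helper is hypothetical and stands in for any asynchronous operation. The timings are arbitrary.

```javascript
// Hypothetical stand-in for an async operation: resolves with `value` after `ms`.
function delay(ms, value) {
  return new Promise((resolve) => setTimeout(() => resolve(value), ms));
}

// Promise-chain version.
function doubleWithThen() {
  return delay(10, 21).then((n) => n * 2);
}

// async/await version: same result, read top to bottom.
async function doubleWithAwait() {
  const n = await delay(10, 21);
  return n * 2;
}

// Each await waits for the previous one, so the delays add up (~100 ms).
async function oneAtATime() {
  const start = Date.now();
  await delay(50);
  await delay(50);
  return Date.now() - start;
}

// Both operations start first and run concurrently (~50 ms in total).
async function together() {
  const start = Date.now();
  await Promise.all([delay(50), delay(50)]);
  return Date.now() - start;
}

const checks = (async () => {
  const viaThen = await doubleWithThen();
  const viaAwait = await doubleWithAwait();
  const sequentialMs = await oneAtATime();
  const concurrentMs = await together();
  console.log(viaThen, viaAwait, sequentialMs, concurrentMs);
  return { viaThen, viaAwait, sequentialMs, concurrentMs };
})();
```

Both `doubleWith...` functions resolve to 42. Only the way the code reads differs.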

Cairo, Cairo Governorate, Egypt

Member since September 30, 2018
