Python Machine Learning Prediction With a Flask REST API

Using Python to make machine learning predictions can be a daunting task, especially if your goal is to create a real-time solution. However, TensorFlow and scikit-learn can significantly speed up implementation.

In this article, Toptal Python Developer Guillaume Ferry outlines a simple architecture that should help you progress from a basic proof of concept to a minimal viable product without much hassle.

Guillaume is a Kaggle expert specialized in ML and AI. He’s experienced in tackling large projects and exploring new solutions for scaling.

This article is about using Python in a machine learning or artificial intelligence (AI) system to make real-time predictions through a Flask REST API. The architecture presented here can be seen as a way to go from proof of concept (PoC) to minimal viable product (MVP) for machine learning applications.

Python is not the first choice that comes to mind when designing a real-time solution. But because TensorFlow and scikit-learn, two of the most widely used machine learning libraries, are Python libraries, it is the convenient choice for many Jupyter Notebook PoCs.

What makes this solution workable is that training takes a lot of time compared to predicting. Think of training as watching a movie and of predicting as answering questions about it: it is far more efficient not to re-watch the movie after each new question.

Training produces a sort of compressed view of that “movie,” and predicting retrieves information from that compressed view. It should be really fast, no matter how complex or long the movie was.

Let’s implement that with a quick Flask example in Python!

Generic Machine Learning Architecture

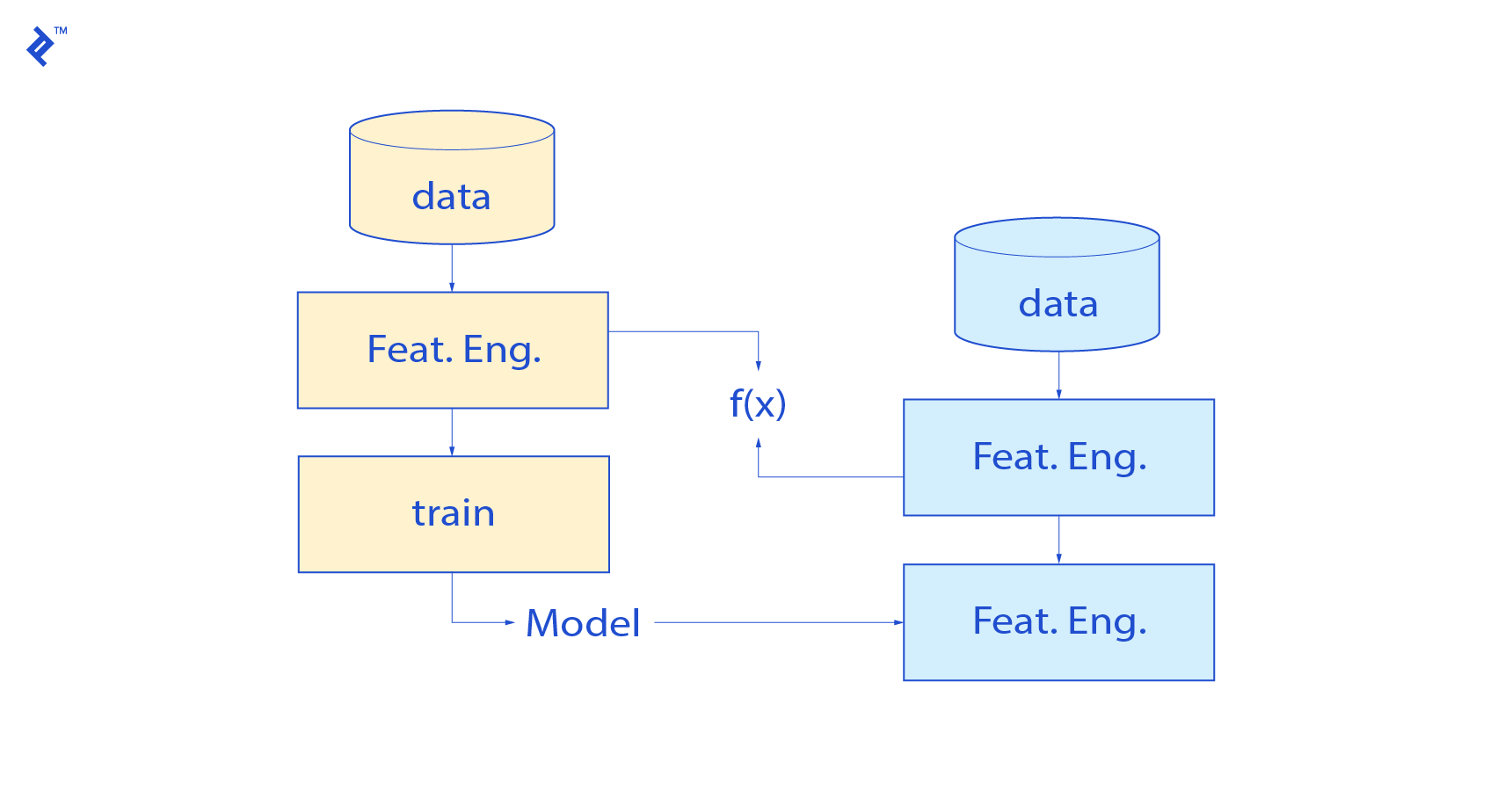

Let’s start by outlining a generic training and prediction architecture flow:

First, a training pipeline is created to learn from past data according to an objective function.

This should output two key elements:

- Feature engineering functions: the transformations used at training time should be reused at prediction time.

- Model parameters: the algorithm and hyperparameters finally selected should be saved, so they can be reused at prediction time.

Note that the feature engineering done at training time should be carefully saved so that it can be applied at prediction time. One common problem, among many that can emerge along the way, is feature scaling, which many algorithms require.

If feature X1 ranges from 1 to 1000 in the training set and is rescaled to the [0,1] range with a function f(x) = x/max(X1), what would happen if the prediction set has a value of 2000?

Such cases should be planned for in advance so that the mapping function returns consistent outputs at prediction time.
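One possible way to keep the mapping consistent is sketched below, under stated assumptions: the scaling bounds are learned once from the training data, saved, and reused at prediction time, with out-of-range values clipped to [0, 1]. The function names `fit_scaler` and `scale`, the min-max formula, and the clipping policy are illustrative choices rather than something the article prescribes; other policies may suit some models better.

```python
import json


def fit_scaler(train_values):
    """Learn the scaling bounds from the training data only."""
    return {"min": min(train_values), "max": max(train_values)}


def scale(values, params):
    """Apply the training-time mapping, clipping the result to [0, 1]."""
    span = (params["max"] - params["min"]) or 1.0  # guard against a constant feature
    return [min(1.0, max(0.0, (v - params["min"]) / span)) for v in values]


# Training time: learn the bounds and serialize them alongside the model.
saved = json.dumps(fit_scaler([1, 250, 1000]))

# Prediction time: reload the bounds and reuse the exact same mapping.
params = json.loads(saved)
scaled = scale([1, 2000], params)
print(scaled)  # the unseen value 2000 is clipped to 1.0
```

Clipping keeps the output inside the range the model saw during training, at the cost of treating 2000 the same as 1000. Whether that trade-off is acceptable depends on the algorithm and the feature.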

Machine Learning Training vs Predicting

There is a major question to be addressed here. Why are we separating training and prediction to begin with?

It is true that in machine learning examples and courses, where all the data is known in advance (including the data to be predicted, usually called the test set), a very simple way to build the predictor is to stack the training and test data together.

Feature engineering is then applied to both at once, and the model is trained on the “training set” and predicts on the “test set,” all within a single pipeline.

However, in real life systems, you usually have training data, and the data to be predicted comes in just as it is being processed. In other words, you watch the movie at one time and you have some questions about it later on, which means answers should be easy and fast.

Moreover, it is usually not necessary to re-train the entire model each time new data comes in: training takes time (possibly weeks for some image sets), and the model should remain stable enough over time.

That is why training and predicting can be, or even should be, clearly separated in many systems. This also better reflects how an intelligent system (artificial or not) learns.

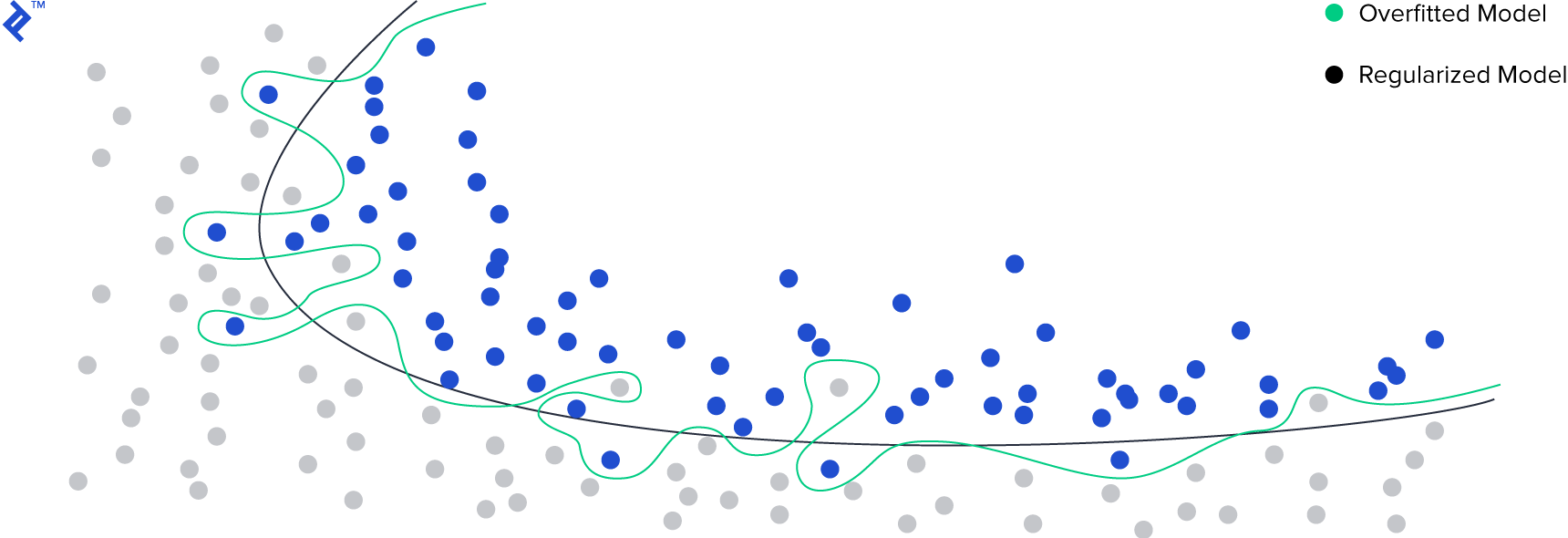

The Connection With Overfitting

The separation of training and prediction is also a good way to address the overfitting problem.

In statistics, overfitting is “the production of an analysis that corresponds too closely or exactly to a particular set of data, and may, therefore, fail to fit additional data or predict future observations reliably”.

Overfitting is particularly common in datasets with many features or with limited training data. In both cases, the data contains more information than the predictor can validate, and some of it might not even be linked to the predicted variable. In this case, noise itself could be interpreted as signal.

A good way of controlling overfitting is to train on part of the data and predict on another part on which we have the ground truth. Therefore the expected error on new data is roughly the measured error on that dataset, provided the data we train on is representative of the reality of the system and its future states.

So if we design a proper training and prediction pipeline together with a correct split of the data, we not only address the overfitting problem but can also reuse that architecture to predict on new data.
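The split described above can be sketched as follows. This is a minimal illustration that assumes synthetic data, an 80/20 split, and a one-feature least-squares fit; none of these come from the article, and the helper names `fit_line` and `mean_abs_error` are hypothetical. The point is only that the error measured on held-out examples serves as the estimate of the error to expect on new data.

```python
import random

random.seed(0)

# Synthetic data (an assumption for illustration): y = 2x + 1 plus noise.
xs = [random.uniform(0, 10) for _ in range(200)]
data = [(x, 2 * x + 1 + random.gauss(0, 1)) for x in xs]

# Hold out 20% of the examples; the model never sees them during training.
random.shuffle(data)
split = int(0.8 * len(data))
train, holdout = data[:split], data[split:]


def fit_line(points):
    """Ordinary least squares for a single feature."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in points)
    var = sum((x - mean_x) ** 2 for x, _ in points)
    slope = cov / var
    return slope, mean_y - slope * mean_x


def mean_abs_error(points, slope, intercept):
    """Average absolute gap between the ground truth and the fitted line."""
    return sum(abs(y - (slope * x + intercept)) for x, y in points) / len(points)


slope, intercept = fit_line(train)
train_error = mean_abs_error(train, slope, intercept)
holdout_error = mean_abs_error(holdout, slope, intercept)
print(f"train MAE={train_error:.3f}, holdout MAE={holdout_error:.3f}")
```

A holdout error far above the training error would be a sign of overfitting. The same fitted parameters can then be reused unchanged to predict on genuinely new data.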

The last step would be checking that the error on new data matches the expected one. There is usually a shift (in practice, the actual error tends to be higher than the expected one), and one should determine what an acceptable shift is, but that’s not the topic of this article.

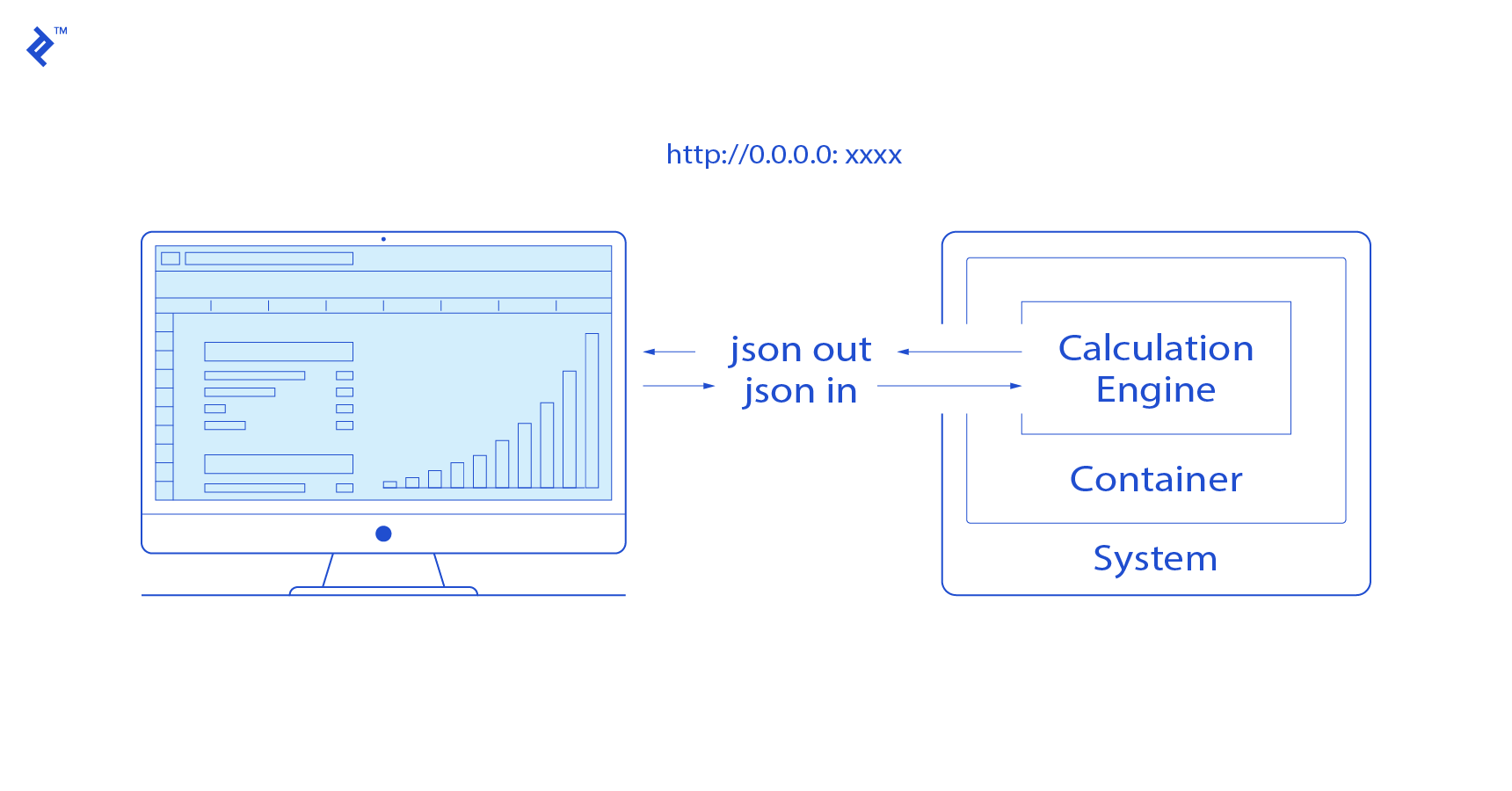

A REST API for Predicting

That’s where clearly separating training and prediction comes in handy. If we have saved our feature engineering methods and our model parameters, we can build a simple REST API with these elements.

The key here is to load the model and parameters when the API is launched. Once they are loaded and stored in memory, each API call triggers the feature engineering calculation and the “predict” method of the ML algorithm. Both are usually fast enough to ensure a real-time response.

The API can be designed to accept a single example to be predicted, or several at once (batch predictions).

Here is the minimal Python/Flask code that implements this principle, with JSON in and JSON out (question in, answer out):

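    import pickle

    from flask import Flask, request, jsonify

    # Feature engineering saved from training (module shown later in this article)
    from features_calculation import doTheCalculation

    app = Flask(__name__)

    @app.route('/api/makecalc/', methods=['POST'])
    def makecalc():
        """
        Function run at each API call
        No need to re-load the model
        """
        # reads the received json
        jsonfile = request.get_json()
        res = dict()
        for key in jsonfile.keys():
            # calculates and predicts from the payload stored under each key;
            # tolist() assumes predict returns a NumPy array, which jsonify cannot serialize
            res[key] = model.predict(doTheCalculation(jsonfile[key])).tolist()
        # returns a json file
        return jsonify(res)

    if __name__ == '__main__':
        # Model is loaded when the API is launched
        with open('modelfile', 'rb') as f:
            model = pickle.load(f)
        app.run(debug=True)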

Note that the API can be used for predicting from new data, but I don’t recommend using it for training the model. It could be done, but it complicates the model training code and could be more demanding in terms of memory.

Implementation Example - Bike Sharing

Let’s take a Kaggle dataset, bike sharing, as an example. Say we are a bike sharing company that wants to forecast the number of bike rentals each day in order to better manage bike maintenance, logistics, and other aspects of the business.

Rentals mainly depend on the weather conditions, so with the weather forecast, the company could get a better idea of when rentals will peak and try to avoid maintenance on those days.

First, we train a model and save it as a pickle object, as can be seen in the Jupyter notebook.

Model training and performance are not dealt with here; this is just an example for understanding the full process.
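The notebook itself is not reproduced here. As a rough sketch of the save-and-reload step only, the snippet below "trains" a toy model (the mean rental count per weather situation), pickles the learned parameters, and loads them back. The numbers are made up, the temporary file location is an assumption, and a plain dictionary stands in for the model; with scikit-learn, one would typically pickle the fitted estimator object in the same way.

```python
import os
import pickle
import tempfile

# Toy "training": learn the mean rental count per weather situation.
# The numbers below are made up for illustration, not taken from the dataset.
history = [(1, 1500), (1, 1700), (2, 1000), (2, 1100), (3, 400)]
totals = {}
for weathersit, count in history:
    totals.setdefault(weathersit, []).append(count)
model_params = {k: sum(v) / len(v) for k, v in totals.items()}

# Save the learned parameters once, at the end of training.
path = os.path.join(tempfile.mkdtemp(), "modelfile.pickle")
with open(path, "wb") as f:
    pickle.dump(model_params, f)

# Later (for example at API launch), load them back without retraining.
with open(path, "rb") as f:
    loaded = pickle.load(f)

print(loaded[2])  # mean count learned for weathersit == 2
```

Keep in mind that unpickling executes code from the file, so a pickle should only be loaded if it comes from a trusted source.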

Then we write the data transformation that will be done at each API call:

    # features_calculation.py
    import numpy as np

    def doTheCalculation(data):
        # zero-based day of year (January 1 -> 0); expects a datetime 'dteday' column
        data['dayofyear'] = data['dteday'].dt.dayofyear - 1
        # select the model's input columns, in the order used at training time
        X = np.array(data[['instant', 'season', 'yr', 'holiday', 'weekday', 'workingday',
                           'weathersit', 'temp', 'atemp', 'hum', 'windspeed', 'dayofyear']])
        return X

This simply computes one new variable (day of year), which captures both the month and the precise day. It also selects the columns to keep and fixes their order.
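To make the day-of-year feature concrete: it is the number of days elapsed since January 1 of the same year. The standalone helper below, whose name `day_of_year` is hypothetical, shows the arithmetic using only the standard library.

```python
from datetime import date


def day_of_year(d):
    """Zero-based day of the year: January 1 maps to 0."""
    return (d - date(d.year, 1, 1)).days


print(day_of_year(date(2011, 1, 3)))    # 2
print(day_of_year(date(2011, 12, 31)))  # 364
```

Whatever convention is chosen (zero-based here), it has to be the same at training time and at prediction time.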

Next, we write the REST API with Flask:

    import json
    import pickle
    from io import StringIO

    import numpy as np
    import pandas as pd
    from flask import Flask, request, jsonify

    from features_calculation import doTheCalculation

    app = Flask(__name__)

    @app.route('/api/makecalc/', methods=['POST'])
    def makecalc():
        """
        Function run at each API call
        """
        jsonfile = request.get_json()
        # rebuild a DataFrame with one row per key of the received JSON
        # (StringIO because recent pandas versions deprecate passing a literal JSON string)
        data = pd.read_json(StringIO(json.dumps(jsonfile)), orient='index', convert_dates=['dteday'])
        print(data)
        ypred = model.predict(doTheCalculation(data))
        # tolist() converts NumPy scalars to native Python numbers for jsonify
        res = dict(enumerate(np.asarray(ypred).tolist()))
        return jsonify(res)

    if __name__ == '__main__':
        modelfile = 'modelfile.pickle'
        # the model is loaded once, when the API is launched
        with open(modelfile, 'rb') as f:
            model = pickle.load(f)
        print("loaded OK")
        app.run(debug=True)

Run this program; it will serve the API on port 5000 by default.

We can then test a request locally, still with Python:

    import json
    import requests

    url = 'http://127.0.0.1:5000/api/makecalc/'

    text = json.dumps({"0":{"instant":1,"dteday":"2011-01-01T00:00:00.000Z","season":1,"yr":0,"mnth":1,"holiday":0,"weekday":6,"workingday":0,"weathersit":2,"temp":0.344167,"atemp":0.363625,"hum":0.805833,"windspeed":0.160446},
                       "1":{"instant":2,"dteday":"2011-01-02T00:00:00.000Z","season":1,"yr":0,"mnth":1,"holiday":0,"weekday":3,"workingday":0,"weathersit":2,"temp":0.363478,"atemp":0.353739,"hum":0.696087,"windspeed":0.248539},
                       "2":{"instant":3,"dteday":"2011-01-03T00:00:00.000Z","season":1,"yr":0,"mnth":1,"holiday":0,"weekday":1,"workingday":1,"weathersit":1,"temp":0.196364,"atemp":0.189405,"hum":0.437273,"windspeed":0.248309}})

The request contains all the information that was fed to the model. Therefore, our model will respond with a forecast of bike rentals for the specified dates (here we have three of them).

    headers = {'content-type': 'application/json', 'Accept-Charset': 'UTF-8'}
    r = requests.post(url, data=text, headers=headers)
    print(r, r.text)

This prints:

    <Response [200]> {
      "0": 1063,
      "1": 1028,
      "2": 1399
    }

That’s it! This service could be used in any company’s application easily, for maintenance planning or for users to be aware of bike traffic, demand, and the availability of rental bikes.

Putting it all Together

The major flaw of many machine learning systems, and especially PoCs, is that they mix training and prediction.

If the two are carefully separated, real-time predictions can be performed quite easily for an MVP, at a low development cost and effort with Python/Flask, especially if the PoC was initially developed with scikit-learn, TensorFlow, or any other Python machine learning library.

However, this might not be feasible for all applications, especially applications where feature engineering is heavy, or applications retrieving the closest match that need to have the latest data available at each call.

In any case, do you need to watch movies over and over to answer questions about them? The same rule applies to machine learning!

Understanding the basics

What is a REST API?

In the context of web services, RESTful APIs are defined with the following aspects: a URL, a media type, and an HTTP method (GET, POST, etc.). They can be used as a unified way of exchanging information between applications.

What is machine learning?

Machine learning is a subset of artificial intelligence in the field of computer science that often uses statistical techniques to give computers the ability to “learn” (i.e., progressively improve performance on a specific task) with data, without being explicitly programmed.

What is TensorFlow?

TensorFlow is an open-source software library for dataflow programming across a range of tasks. It is a symbolic math library and is also used for machine learning applications such as neural networks.

What is Scikit-Learn?

Scikit-learn, also sklearn, is a free software machine learning library for the Python programming language.

What is feature engineering?

Feature engineering is the process of using domain knowledge of the data to create features that make machine learning algorithms work. It augments the available data with additional information relevant to the predicted target.

What is Jupyter?

Jupyter Notebook (formerly IPython Notebooks) is a web-based interactive computational environment supporting the Python programming language.

What is a PoC?

A PoC, or proof of concept, is an early-stage program that demonstrates the feasibility of a project.

Guillaume Ferry

Paris, France

Member since August 22, 2017
