Introduction to HTTP Live Streaming: HLS on Android and More

Despite its age, HTTP Live Streaming (HLS) remains a widely used standard in adaptive bitrate video and a de-facto Apple alternative to MPEG-DASH.

In this tutorial, Toptal Java Developer Tomo Krajina explains what makes HLS tick and demonstrates how to create an HLS player in Android.


Tomo is a Java, Android, and Golang developer with 13+ years of experience. He has worked on telecom and banking systems.


Video streaming is an integral part of the modern internet experience. It’s everywhere: on mobile phones, desktop computers, TVs, and even wearables. It needs to work flawlessly on every device and network type, be it on slow mobile connections, WiFi, behind firewalls, etc. Apple’s HTTP Live Streaming (HLS) was created exactly with these challenges in mind.

Almost all modern devices come with hardware that’s fast enough to play video, so network speed and reliability emerge as the biggest problems. Why is that? Up until a few years ago, the canonical way of storing and publishing video was through UDP-based protocols like RTP. This proved problematic in many ways, to list just a few:

- You need a server (daemon) service to stream content.

- Most firewalls are configured to allow only standard ports and network traffic types, such as HTTP, email, etc.

- If your audience is global, you need a copy of your streaming daemon service running in all major regions.

Of course, you may think all these problems are easy to solve. Just store video files (for example, mp4 files) on your HTTP server and use your favourite CDN service to serve them anywhere in the world.

Where Legacy Video Streaming Falls Short

This is far from the best solution for a few reasons, efficiency being one of them. If you store original video files in full resolution, users in rural areas or parts of the world with poor connectivity will have a hard time enjoying them. Their video players will struggle to download enough data to play the videos in real time.

Therefore, you need a special version of the file so that the amount of video downloaded in a given time is approximately the same as the amount that can be played in that time. For example, if the video resolution and quality are such that in five seconds the player can download another five seconds of video, that’s optimal. However, if it takes five seconds to download just three seconds’ worth of video, the player will stop and wait for the next chunk of the stream to download.
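The arithmetic behind this can be sketched in a few lines of Java. This is only an illustration: the class and method names are hypothetical, and real players also account for buffering and fluctuating bandwidth.

```java
/** Minimal sketch of the download-versus-playback arithmetic described above. */
final class PlaybackBudget {

    /** Seconds of video the player can fetch during one second of downloading. */
    static double secondsFetchedPerSecond(double bandwidthKbps, double streamBitrateKbps) {
        return bandwidthKbps / streamBitrateKbps;
    }

    /** True when the stream needs more data per second than the connection delivers. */
    static boolean willStall(double bandwidthKbps, double streamBitrateKbps) {
        return secondsFetchedPerSecond(bandwidthKbps, streamBitrateKbps) < 1.0;
    }
}
```

Assuming, for example, a 600 kbps connection and a 1,000 kbps stream, the ratio is 0.6, so five seconds of downloading yields only three seconds of video and playback will eventually stall.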

On the other hand, reducing quality and resolution any further would only degrade the user experience on faster connections, as you’d be saving bandwidth unnecessarily. However, there is a third way.

Adaptive Bitrate Streaming

While you could upload different versions of a video for different users, you’d then need to control their players and calculate the best stream for their connection and device. The player would also need to switch between streams when conditions change (for example, when a user moves from 3G to WiFi), and it would have to resume not from the start, but from somewhere in the middle of the video. So how do you calculate the byte range to request?

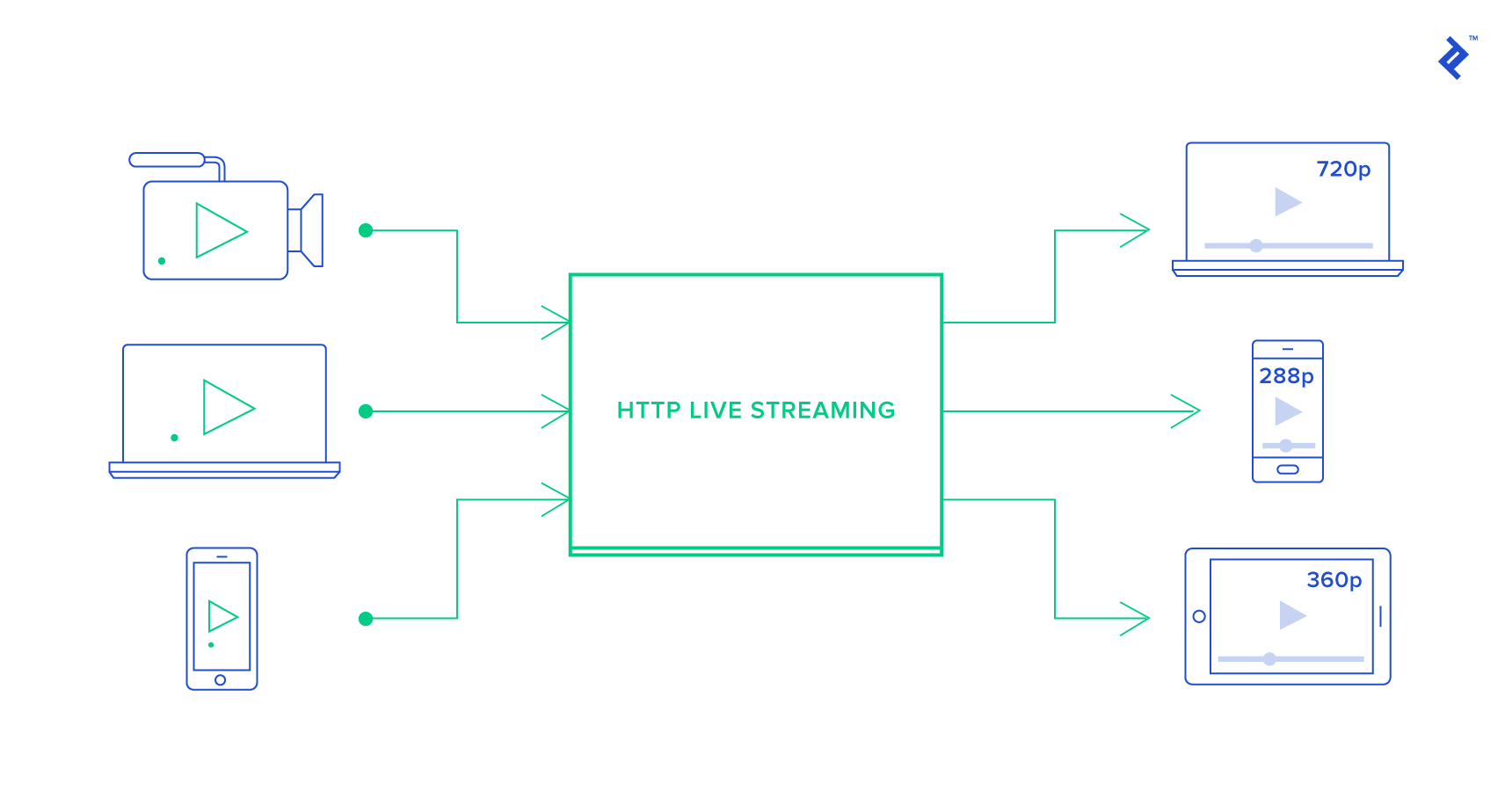

It would be ideal if video players could detect changes in network type and available bandwidth, and then switch transparently between different streams (of the same video prepared for different speeds) until they find the best one.

That’s exactly what adaptive bitrate streaming solves.

Note: This HLS tutorial will not cover encryption, synchronized playbacks and IMSC1.

What is HLS?

HTTP Live Streaming is an adaptive bitrate streaming protocol introduced by Apple in 2009. It uses m3u8 files to describe media streams and HTTP for the communication between the server and the client. It is the default media streaming protocol for all iOS devices, but it can also be used on Android and in web browsers.

The basic building blocks of an HLS stream are:

- M3U8 playlists

- Media files for various streams

M3U8 Playlists

Let’s start by answering a basic question: What are M3U8 files?

M3U (or M3U8) is a plain text file format originally created to organize collections of MP3 files. The format is extended for HLS, where it’s used to define media streams. In HLS there are two kinds of m3u8 files:

- Media playlist: contains URLs of the files needed for streaming (i.e. chunks of the original video to be played).

- Master playlist: contains URLs to media playlists which, in turn, contain variants of the same video prepared for different bandwidths.

A so-called M3U8 live stream URL is nothing more than a URL to an M3U8 file.

Sample M3U8 File for HLS Stream

An M3U8 file contains a list of URLs or local file paths with some additional metadata. Metadata lines start with #.

This example illustrates what an M3U8 file for a simple HLS stream looks like:

#EXTM3U

#EXT-X-VERSION:3

#EXT-X-MEDIA-SEQUENCE:0

#EXT-X-ALLOW-CACHE:YES

#EXT-X-TARGETDURATION:11

#EXTINF:5.215111,

00000.ts

#EXTINF:10.344822,

00001.ts

#EXTINF:10.344822,

00002.ts

#EXTINF:9.310344,

00003.ts

#EXTINF:10.344822,

00004.ts

...

#EXT-X-ENDLIST

- The lines before the first #EXTINF entry are global (header) metadata for this M3U8 playlist.

- The EXT-X-VERSION tag is the version of the M3U8 format (it must be at least 3 if we want to use floating-point EXTINF durations, as in this example).

- The EXT-X-TARGETDURATION tag contains the maximum duration of each video “chunk”. Typically, this value is around 10s.

- The rest of the document contains pairs of lines such as:

#EXTINF:10.344822,

00001.ts

This is a video “chunk.” This one represents the 00001.ts chunk which is exactly 10.344822 seconds long. When a client video player needs to start a video from a certain point in said video, it can easily calculate which .ts file it needs to request by adding up the durations of previously viewed chunks. The second line can be a local filename or a URL to that file.
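The lookup described above can be sketched as follows. This is a simplified illustration rather than how any particular player implements seeking: the class and method names are hypothetical, and the parser only understands #EXTINF lines and the URI line that follows each of them.

```java
import java.util.ArrayList;
import java.util.List;

/** Minimal sketch: locate the chunk that contains a given playback position. */
final class SegmentLocator {

    /** One entry of a media playlist: an #EXTINF duration plus the URI that follows it. */
    static final class Segment {
        final double durationSeconds;
        final String uri;

        Segment(double durationSeconds, String uri) {
            this.durationSeconds = durationSeconds;
            this.uri = uri;
        }
    }

    /** Collects (duration, URI) pairs from the text of a media playlist. */
    static List<Segment> parse(String playlist) {
        List<Segment> segments = new ArrayList<>();
        double pendingDuration = -1;
        for (String raw : playlist.split("\\r?\\n")) {
            String line = raw.trim();
            if (line.startsWith("#EXTINF:")) {
                // "#EXTINF:10.344822,optional title" -> 10.344822
                String value = line.substring("#EXTINF:".length());
                int comma = value.indexOf(',');
                if (comma >= 0) {
                    value = value.substring(0, comma);
                }
                pendingDuration = Double.parseDouble(value);
            } else if (!line.isEmpty() && !line.startsWith("#") && pendingDuration >= 0) {
                // First non-tag line after an #EXTINF is the chunk's file name or URL.
                segments.add(new Segment(pendingDuration, line));
                pendingDuration = -1;
            }
        }
        return segments;
    }

    /** Returns the index of the chunk containing positionSeconds, or -1 if it is past the end. */
    static int indexForPosition(List<Segment> segments, double positionSeconds) {
        double segmentStart = 0;
        for (int i = 0; i < segments.size(); i++) {
            double segmentEnd = segmentStart + segments.get(i).durationSeconds;
            if (positionSeconds < segmentEnd) {
                return i;
            }
            segmentStart = segmentEnd;
        }
        return -1;
    }
}
```

With the sample playlist above, a seek to the 20-second mark lands in 00002.ts, because the first two chunks together cover only about 15.56 seconds.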

The M3U8 file with its .ts files represents the simplest form of an HLS stream: a media playlist.

Please keep in mind that not every browser can play HLS streams by default.

Master Playlist or Index M3U8 File

The previous M3U8 example points to a series of .ts chunks. They are created from the original video file, which is resized, encoded, and split into chunks.

That means we still have the problem outlined in the introduction – what about clients on very slow (or unusually fast) networks? Or, clients on fast networks with very small screen sizes? It makes no sense to stream a file in maximum resolution if it can’t be shown in all its glory on your shiny new phone.

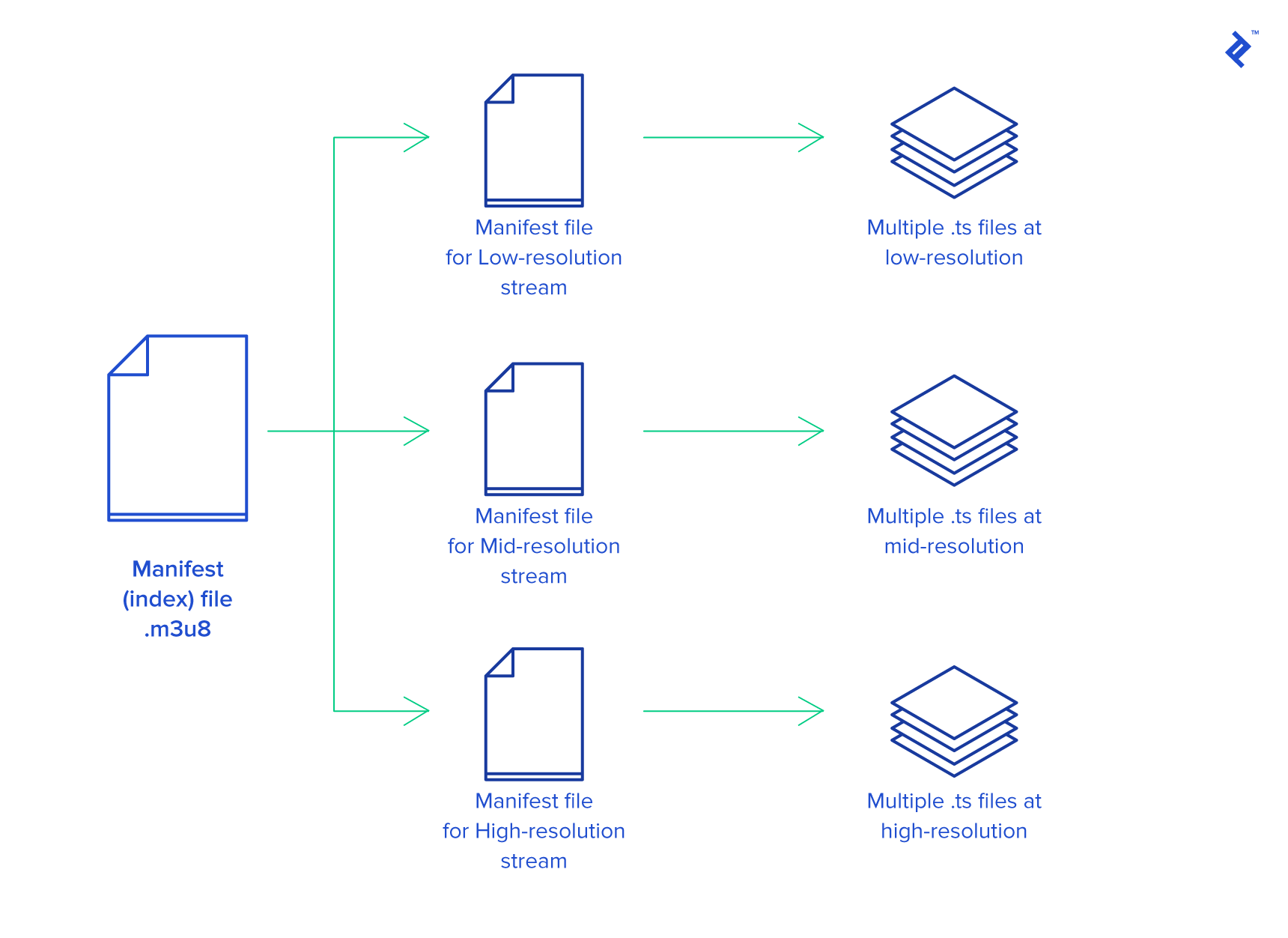

HLS solves this problem by introducing another “layer” of M3U8. This M3U8 file will not contain pointers to .ts files, but it has pointers to other M3U8 files which, in turn, contain video files prepared in advance for specific bitrates and resolutions.

Here is an example of such an M3U8 file:

#EXTM3U

#EXT-X-STREAM-INF:BANDWIDTH=1296000,RESOLUTION=640x360

https://.../640x360_1200.m3u8

#EXT-X-STREAM-INF:BANDWIDTH=264000,RESOLUTION=416x234

https://.../416x234_200.m3u8

#EXT-X-STREAM-INF:BANDWIDTH=464000,RESOLUTION=480x270

https://.../480x270_400.m3u8

#EXT-X-STREAM-INF:BANDWIDTH=1628000,RESOLUTION=960x540

https://.../960x540_1500.m3u8

#EXT-X-STREAM-INF:BANDWIDTH=2628000,RESOLUTION=1280x720

https://.../1280x720_2500.m3u8

The video player will pick pairs of lines such as:

#EXT-X-STREAM-INF:BANDWIDTH=1296000,RESOLUTION=640x360

https://.../640x360_1200.m3u8

These are called variants of the same video prepared for different network speeds and screen resolutions. This specific M3U8 file (640x360_1200.m3u8) contains the video file chunks of the video resized to 640x360 pixels and prepared for bitrates of 1296kbps (the BANDWIDTH attribute is expressed in bits per second). Note that the reported bitrate must take into account both the video and audio streams in the video.

The video player will usually start playing from the first stream variant (in the previous example this is 640x360_1200.m3u8). For that reason, you must take special care to decide which variant will be the first in the list. The order of the other variants isn’t important.

If the first .ts file takes too long to download (causing “buffering”, i.e. waiting for the next chunk), the video player will switch to a stream with a smaller bitrate. And, of course, if it’s loaded fast enough, the player can switch to a better quality variant, but only if it makes sense for the resolution of the display.

If the first stream in the index M3U8 list isn’t the best one, the client will need one or two cycles until it settles on the right variant.
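The selection logic described above can be approximated with a short sketch. It is deliberately naive and is not the algorithm of any specific player: real implementations also consider buffer levels and safety margins. The class, field, and parameter names are hypothetical, and bandwidth is assumed to be given in bits per second.

```java
import java.util.List;

/** Minimal sketch: choose a variant that fits both the network and the display. */
final class VariantPicker {

    /** One variant from a master playlist (bandwidth, resolution, and playlist URI). */
    static final class Variant {
        final long bandwidthBitsPerSecond;
        final int width;
        final int height;
        final String uri;

        Variant(long bandwidthBitsPerSecond, int width, int height, String uri) {
            this.bandwidthBitsPerSecond = bandwidthBitsPerSecond;
            this.width = width;
            this.height = height;
            this.uri = uri;
        }
    }

    /**
     * Returns the highest-bandwidth variant that does not exceed the measured throughput
     * and is not taller than the display. If nothing fits, falls back to the variant with
     * the lowest bandwidth. Returns null only when the list is empty.
     */
    static Variant pick(List<Variant> variants, long measuredBitsPerSecond, int displayHeight) {
        Variant best = null;
        Variant lowest = null;
        for (Variant v : variants) {
            if (lowest == null || v.bandwidthBitsPerSecond < lowest.bandwidthBitsPerSecond) {
                lowest = v;
            }
            boolean fitsNetwork = v.bandwidthBitsPerSecond <= measuredBitsPerSecond;
            boolean fitsDisplay = v.height <= displayHeight;
            if (fitsNetwork && fitsDisplay
                    && (best == null || v.bandwidthBitsPerSecond > best.bandwidthBitsPerSecond)) {
                best = v;
            }
        }
        return best != null ? best : lowest;
    }
}
```

Under these assumptions, a client measuring roughly 1.5 Mbps would settle on the 640x360 variant from the example, while a client on a very slow link would fall back to the 416x234 one.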

So, now we have three layers of HLS:

- Index M3U8 file (the master playlist) containing pointers (URLs) to variants.

- Variant M3U8 files (the media playlists) for different streams for different screen sizes and network speeds. They contain pointers (URLs) to .ts files.

- .ts files (chunks), which are binary files with parts of the video.

You can watch an example index M3U8 file here (again, it depends on your browser/OS).

Sometimes, you know in advance that the client is on a slow or fast network. In that case you can help the client choose the right variant by providing an index M3U8 file with a different first variant. There are two ways of doing this.

- The first is to have multiple index files prepared for different network types and prepare the client in advance to request the right one. The client will have to check the network type and then request, for example, http://.../index_wifi.m3u8 or http://.../index_mobile.m3u8.

- You can also make sure the client sends the network type as part of the HTTP request (for example, whether it’s connected to WiFi or mobile 2G/3G/…) and then have the index M3U8 file prepared dynamically for each request. Only the index M3U8 file needs a dynamic version; the single streams (variant M3U8 files) can still be stored as static files.
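The second approach could be sketched on the server side roughly like this. The names are hypothetical, and the mapping from the reported network type to an expected throughput (the expectedBitsPerSecond parameter) is an assumption left to the caller.

```java
import java.util.List;

/** Minimal sketch: render a master playlist with a chosen variant listed first. */
final class MasterPlaylistBuilder {

    /** One #EXT-X-STREAM-INF line together with the URI line that follows it. */
    static final class Entry {
        final long bandwidthBitsPerSecond;
        final String streamInfLine;
        final String uri;

        Entry(long bandwidthBitsPerSecond, String streamInfLine, String uri) {
            this.bandwidthBitsPerSecond = bandwidthBitsPerSecond;
            this.streamInfLine = streamInfLine;
            this.uri = uri;
        }
    }

    /**
     * Puts the highest-bandwidth variant that fits expectedBitsPerSecond first
     * (or the lowest-bandwidth one if none fits) and keeps the rest in their original order.
     */
    static String build(List<Entry> entries, long expectedBitsPerSecond) {
        Entry first = null;
        Entry lowest = null;
        for (Entry e : entries) {
            if (lowest == null || e.bandwidthBitsPerSecond < lowest.bandwidthBitsPerSecond) {
                lowest = e;
            }
            if (e.bandwidthBitsPerSecond <= expectedBitsPerSecond
                    && (first == null || e.bandwidthBitsPerSecond > first.bandwidthBitsPerSecond)) {
                first = e;
            }
        }
        if (first == null) {
            first = lowest;
        }

        StringBuilder out = new StringBuilder("#EXTM3U\n");
        if (first != null) {
            append(out, first);
        }
        for (Entry e : entries) {
            if (e != first) {
                append(out, e);
            }
        }
        return out.toString();
    }

    private static void append(StringBuilder out, Entry e) {
        out.append(e.streamInfLine).append('\n').append(e.uri).append('\n');
    }
}
```

Because only the order of the variant entries changes, the variant M3U8 files and the .ts chunks can stay on static storage or a CDN.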

Preparing Video Files for HLS

There are two important building blocks of Apple’s HTTP Live Streaming. One is how video files are stored (to be served via HTTP later) and the other is the M3U8 index file(s), which tell the player (the streaming client app) where to get which video file.

Let’s start with video files. The HLS protocol expects the video files to be stored in smaller chunks of equal length, typically 10 seconds each. Originally, those files had to be stored in MPEG-2 TS files (.ts) and encoded with the H.264 format with audio in MP3, HE-AAC, or AC-3.

That means that a video of 30 seconds will be split into 3 smaller .ts files, each approximately 10s long.

Note that the latest version of HLS allows for fragmented .mp4 files, too. Since this is still a new thing, and some video players still need to implement it, the examples in this article will use .ts files.

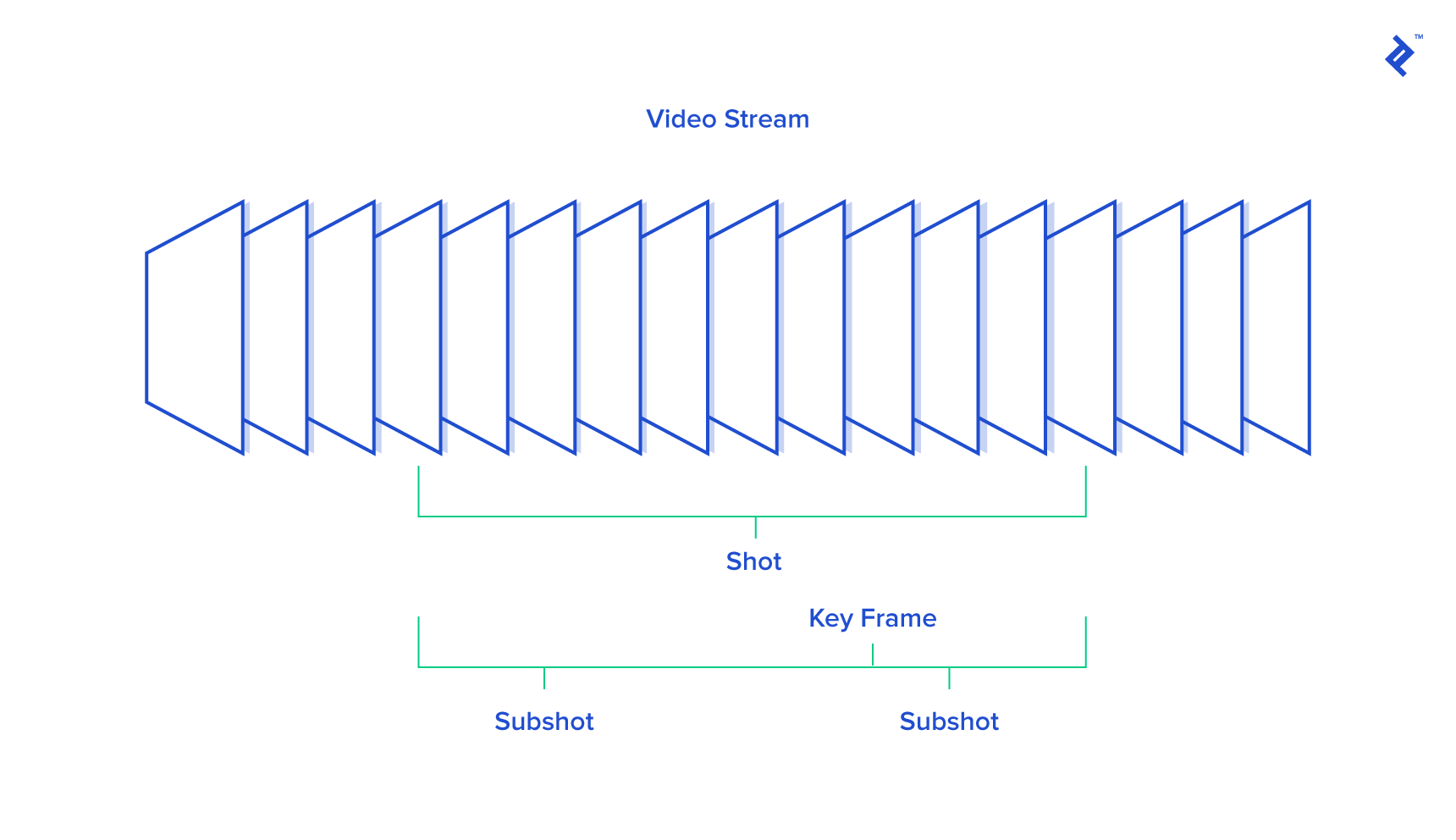

Keyframes

Chunks must be encoded with a keyframe at the start of each file. Each video contains frames. Frames are images, but video formats don’t store every frame as a complete image, as that would take too much disk space. Most frames encode just the difference from the previous frame, while keyframes store a complete image. When you jump to a middle point in the video, the player needs a “starting point” (a keyframe) from which to apply all those diffs in order to show the initial image, and then start playing the video.

That’s why .ts chunks must have a keyframe at the start. Sometimes players need to start in the middle of the chunk. The player can always calculate the current image by adding all the “diffs” from the first keyframe. But, if it starts 9 seconds from the start, it needs to calculate 9 seconds of “diffs.” To make that computation faster, it is best to create keyframes every few seconds (ideally about every 3s).

HLS Break Points

There are situations when you want multiple video clips played in succession. One way to do that is to merge the original video files, and then create the HLS streams with that file, but that’s problematic for multiple reasons. What if you want to show an ad before or after your video? Maybe you don’t want to do that for all users, and probably you want different ads for different users. And, of course, you don’t want to prepare HLS files with different ads in advance.

To fix that problem, there is a tag, #EXT-X-DISCONTINUITY, which can be used in the m3u8 playlist. This line tells the video player that from this point on, the .ts files may be created with a different configuration (for example, the resolution may change). The player will need to recalculate everything and possibly switch to another variant, so it needs to be prepared for such “discontinuity” points.
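As a rough illustration, a media playlist that plays an ad before the main video could be assembled like this. The class name, the method signature, and the idea of passing each clip as ready-made #EXTINF/URI lines are assumptions made for the sketch, not part of any HLS tool.

```java
import java.util.List;

/** Minimal sketch: join two separately encoded clips with a discontinuity marker. */
final class AdStitcher {

    /**
     * Builds a VOD media playlist that plays the ad chunks first and the content chunks after.
     * Each list holds alternating "#EXTINF:<duration>," and URI lines, exactly as they would
     * appear in a playlist. targetDurationSeconds must cover the longest chunk in either list.
     */
    static String stitch(List<String> adLines, List<String> contentLines, int targetDurationSeconds) {
        StringBuilder out = new StringBuilder();
        out.append("#EXTM3U\n");
        out.append("#EXT-X-VERSION:3\n");
        out.append("#EXT-X-TARGETDURATION:").append(targetDurationSeconds).append('\n');
        out.append("#EXT-X-MEDIA-SEQUENCE:0\n");
        for (String line : adLines) {
            out.append(line).append('\n');
        }
        // Tell the player that the chunks after this point may use a different encoding.
        out.append("#EXT-X-DISCONTINUITY\n");
        for (String line : contentLines) {
            out.append(line).append('\n');
        }
        out.append("#EXT-X-ENDLIST\n");
        return out.toString();
    }
}
```

Since the playlist is just text, a server can generate a different one per user (with a different ad, or none) while reusing the same pre-encoded .ts files.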

Live Streaming With HLS

There are basically two kinds of “video streaming”. One is Video On Demand (VOD), for videos recorded in advance and streamed to users whenever they choose. The other is live streaming. Even though HLS is an abbreviation for HTTP Live Streaming, everything explained so far has been centered around VOD, but there is a way to do live streaming with HLS, too.

There are a few changes in your M3U8 files. First, there must be a #EXT-X-MEDIA-SEQUENCE:1 tag in the variant M3U8 file. Then, the M3U8 file must not end with #EXT-X-ENDLIST (which otherwise must always be placed at the end).

While you record your stream you will constantly have new .ts files. You need to append them to the M3U8 playlist. In a typical live playlist, the oldest chunk is dropped from the top whenever a new one is added, and each time that happens the counter in #EXT-X-MEDIA-SEQUENCE:<counter> must be increased by 1, because the counter is the sequence number of the first chunk still listed.

The video player will reload the playlist and compare it with the last version it saw to find out whether there are new chunks to be downloaded and played. Make sure that the M3U8 file is served with no-cache headers, because clients will keep reloading M3U8 files while waiting for new chunks to play.
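A live playlist generator along these lines might look like the sketch below. It assumes a sliding window in which the oldest chunk is dropped whenever a new one is appended to a full playlist, so the counter advances by one per added chunk once the window is full. The class name, the window size, and the chunk names are hypothetical.

```java
import java.util.ArrayDeque;
import java.util.Deque;
import java.util.Locale;

/** Minimal sketch: a sliding-window live media playlist. */
final class LivePlaylist {

    private static final class Segment {
        final String uri;
        final double durationSeconds;

        Segment(String uri, double durationSeconds) {
            this.uri = uri;
            this.durationSeconds = durationSeconds;
        }
    }

    private final Deque<Segment> window = new ArrayDeque<>();
    private final int maxSegments;
    private long mediaSequence; // sequence number of the first chunk still listed

    LivePlaylist(int maxSegments, long firstSequence) {
        this.maxSegments = maxSegments;
        this.mediaSequence = firstSequence;
    }

    /** Appends a freshly recorded chunk and drops the oldest one if the window is full. */
    void addSegment(String uri, double durationSeconds) {
        window.addLast(new Segment(uri, durationSeconds));
        if (window.size() > maxSegments) {
            window.removeFirst();
            mediaSequence++;
        }
    }

    /** Renders the current playlist; note that there is no #EXT-X-ENDLIST while live. */
    String render() {
        double longest = 0;
        for (Segment s : window) {
            longest = Math.max(longest, s.durationSeconds);
        }
        StringBuilder out = new StringBuilder();
        out.append("#EXTM3U\n");
        out.append("#EXT-X-VERSION:3\n");
        out.append("#EXT-X-TARGETDURATION:").append((long) Math.ceil(longest)).append('\n');
        out.append("#EXT-X-MEDIA-SEQUENCE:").append(mediaSequence).append('\n');
        for (Segment s : window) {
            out.append(String.format(Locale.ROOT, "#EXTINF:%.3f,\n%s\n", s.durationSeconds, s.uri));
        }
        return out.toString();
    }
}
```

A recorder would call addSegment each time a new .ts file is finished and serve the output of render() (with no-cache headers) at the playlist URL.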

VTT

Another interesting feature of HLS streams is that you can embed Web Video Text Tracks (WebVTT, or simply VTT) files in them. VTT files can be used for various purposes. For example, for a web HLS player you can specify image snapshots for various parts of the video. When the user moves the mouse over the video timer area (below the video player), the player can show snapshots from that position in the video.

Another obvious use for VTT files is subtitles. The HLS stream can specify multiple subtitle tracks for multiple languages:

#EXT-X-MEDIA:TYPE=SUBTITLES,GROUP-ID="subs",NAME="English",DEFAULT=YES,AUTOSELECT=YES,FORCED=NO,LANGUAGE="en",CHARACTERISTICS="public.accessibility.transcribes-spoken-dialog, public.accessibility.describes-music-and-sound",URI="subtitles/eng/prog_index.m3u8"

Then, the prog_index.m3u8 looks like:

#EXTM3U

#EXT-X-TARGETDURATION:30

#EXT-X-VERSION:3

#EXT-X-MEDIA-SEQUENCE:0

#EXT-X-PLAYLIST-TYPE:VOD

#EXTINF:30,

0000.webvtt

#EXTINF:30,

0001.webvtt

...

The actual VTT (for example 0000.webvtt):

WEBVTT

X-TIMESTAMP-MAP=MPEGTS:900000,LOCAL:00:00:00.000

00:00:01.000 --> 00:00:03.000

Subtitle -Unforced- (00:00:01.000)

00:00:03.000 --> 00:00:05.000

<i>...text here... -Unforced- (00:00:03.000)</i>

<i>...text here...</i>

In addition to VTT files, Apple recently announced HLS will feature support for IMSC1, a new subtitle format optimized for streaming delivery. Its most important advantage is that it can be styled using CSS.

HTTP Live Streaming Tools and Potential Issues

Apple introduced a number of useful HLS tools, which are described in greater detail in the official HLS guide.

- For live streams, Apple prepared a tool named mediastreamsegmenter to create segment files on the fly from an ongoing video stream.

- Another important tool is mediastreamvalidator. It will check your M3U8 playlists, download the video files, and report various problems, for example, when the reported bitrate is not the same as the one calculated from the .ts files.

- Of course, when you must encode/decode/mux/demux/chunk/strip/merge/join/… video/audio files, there is ffmpeg. Be ready to compile your own custom version(s) of ffmpeg for specific use cases.

One of the most frequent problems encountered in video is audio synchronization. If you find that audio in some of your HLS streams is out of sync with the video (i.e. an actor opens their mouth, but you notice the voice is a few milliseconds early or late), it is possible that the original video file was filmed using a variable framerate. Make sure to convert it to a constant framerate.

If possible, it’s even better to make sure that your software is set to record video at a constant framerate.

HTTP Live Streaming Example

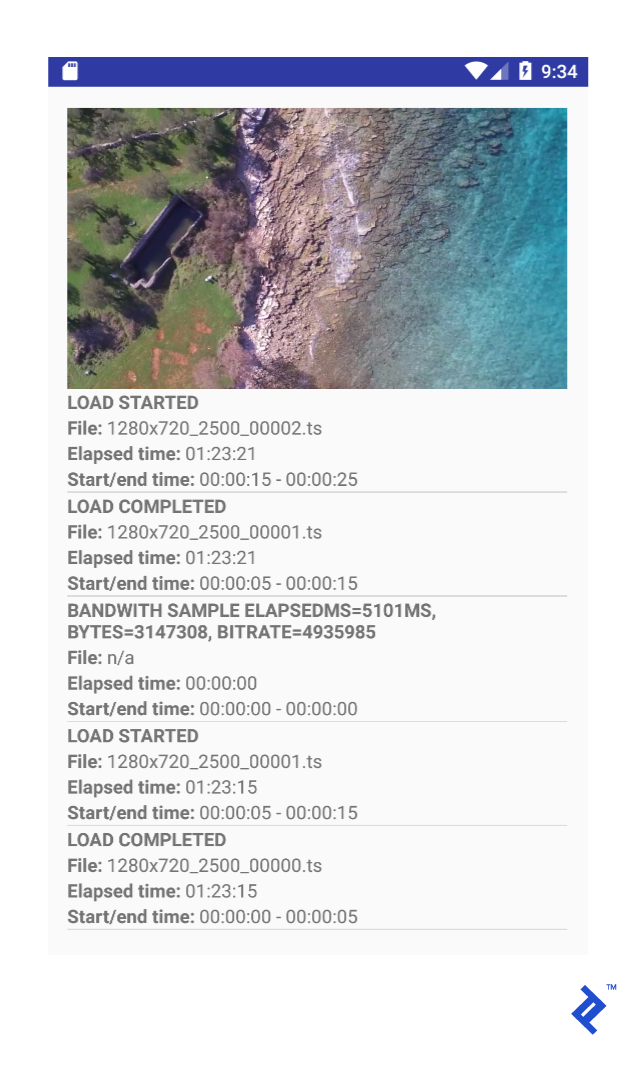

I prepared an HLS Android application which streams a predefined HLS stream using Google’s ExoPlayer player. It will show a video and a list of HLS “events” below it. Those events include every .ts file downloaded and each time the player decides to switch to a higher or lower bitrate stream.

Let’s go through the main parts of the viewer initialization. In the first step we’ll retrieve the device’s current connection type and use that information to decide which m3u8 file to retrieve.

// Default playlist; a slower one is chosen below for GPRS connections.

String m3u8File = "hls.m3u8";

ConnectivityManager connectivity = (ConnectivityManager) this.getSystemService(Context.CONNECTIVITY_SERVICE);

// Note: NetworkInfo is deprecated as of API level 29; this snippet targets older API levels.

NetworkInfo activeNetwork = connectivity.getActiveNetworkInfo();

if (activeNetwork != null && activeNetwork.isConnectedOrConnecting()) {

int type = activeNetwork.getType();

int subType = activeNetwork.getSubtype();

// On a slow GPRS mobile connection, request the index prepared for low bandwidth.

if (type == ConnectivityManager.TYPE_MOBILE && subType == TelephonyManager.NETWORK_TYPE_GPRS) {

m3u8File = "hls_gprs.m3u8";

}

}

String m3u8URL = "https://s3-us-west-2.amazonaws.com/hls-playground/" + m3u8File;

Note that this isn’t strictly necessary. The HLS player will always adjust to the right HLS variant after a few chunks, but that means that in the first 5-20 seconds the user might not watch the ideal variant of the stream.

Remember, the first variant in the m3u8 file is the one the viewer will start with. Since we’re on the client side and we can detect the connection type, we can at least try to avoid the player’s initial switching between variants by requesting the m3u8 file which is prepared in advance for this connection type.

In the next step, we initialize and start our HLS player:

// Events are delivered on the thread that creates this Handler.

Handler mainHandler = new Handler();

// Estimates the available bandwidth as data is downloaded.

DefaultBandwidthMeter bandwidthMeter = new DefaultBandwidthMeter.Builder()

.setEventListener(mainHandler, bandwidthMeterEventListener)

.build();

// Switches between variants based on the bandwidth estimate.

TrackSelection.Factory videoTrackSelectionFactory = new AdaptiveTrackSelection.Factory(bandwidthMeter);

TrackSelector trackSelector = new DefaultTrackSelector(videoTrackSelectionFactory);

LoadControl loadControl = new DefaultLoadControl();

SimpleExoPlayer player = ExoPlayerFactory.newSimpleInstance(this, trackSelector, loadControl);

Then we prepare the player and feed it with the right m3u8 for this network connection type:

DataSource.Factory dataSourceFactory = new DefaultDataSourceFactory(this, Util.getUserAgent(this, "example-hls-app"), bandwidthMeter);

// The third argument (5) is the minimum number of times a failed load is retried.

HlsMediaSource videoSource = new HlsMediaSource(Uri.parse(m3u8URL), dataSourceFactory, 5, mainHandler, eventListener);

player.prepare(videoSource);

And here is the result:

HLS Browser Compatibility, Future Developments

There is a requirement from Apple for video streaming apps on iOS that they must use HLS if the videos are longer than 10 minutes or bigger than 5MB. That in itself is a guarantee that HLS is here to stay. There were some worries about HLS and MPEG-DASH and which one would win in the web browser arena. HLS isn’t implemented in all modern browsers (you probably noticed that if you clicked the previous m3u8 URL examples). On Android, for example, in versions lower than 4.0 it won’t work at all. From 4.1 to 4.4 it works only partially (for example, the audio is missing, or the video is missing but the audio works).

But this “battle” got slightly simpler recently. Apple announced that the new HLS protocol will allow fragmented mp4 files (fMP4). Previously, if you wanted to have both HLS and MPEG-DASH support, you had to encode your videos twice. Now, you will be able to reuse the same video files, and repackage only the metadata files (.m3u8 for HLS and .mpd for MPEG-DASH).

Another recent announcement is support for High Efficiency Video Coding (HEVC). If used, it must be packaged in fragmented mp4 files. And that probably means that the future of HLS is fMP4.

The current situation in the world of browsers is that only some browser implementations of the <video> tag will play HLS out of the box. But there are open-source and commercial solutions which offer HLS compatibility. Most of them offer HLS by having a Flash fallback but there are a few implementations completely written in JavaScript.

Wrapping Up

This article focuses specifically on HTTP Live Streaming, but conceptually it can also be read as an explanation of how Adaptive Bitrate Streaming (ABS) works. In conclusion, we can say HLS is a technology that solves numerous important problems in video streaming:

- It simplifies storage of video files

- It can be delivered through ordinary HTTP servers and CDNs

- Client players handle different client bandwidths and switch between streams

- It supports subtitles, encryption, synchronized playbacks, and other features not covered in this article

Regardless of whether you end up using HLS or MPEG-DASH, both protocols should offer similar functionalities and, with the introduction of fragmented mp4 (fMP4) in HLS, you can use the same video files. That means that in most cases you’ll need to understand the basics of both protocols. Luckily, they seem to be moving in the same direction, which should make them easier to master.


Understanding the basics

What is an M3U8 file?

The M3U (or M3U8) is a plain text file format originally created to organize collections of MP3 files. The format is extended for HLS, where it’s used to define media streams.

What is HLS?

HTTP Live Streaming (HLS) is an adaptive bitrate streaming protocol introduced by Apple in 2009. It uses m3u8 files to describe media streams and HTTP for communication between the server and the client. It is the default media streaming protocol for all iOS devices.

What is MPEG-DASH?

MPEG-DASH is a widely used streaming solution, built around HTTP just like Apple HLS. DASH stands for Dynamic Adaptive Streaming over HTTP.

What browser supports HLS?

In recent years, HLS support has been added to most browsers. However, differences persist. For example, Chrome and Firefox feature only partial support on desktop platforms.

Tomo Krajina

Poreč, Croatia

Member since February 5, 2014
