How to Improve ASP.NET App Performance in Web Farm With Caching

Caching is a powerful technique for increasing performance, but the whole idea behind caching works only as long as the result we cached remains valid. And here we get to the hard part of the problem: How do we determine when a cached item has become invalid and needs to be recreated?

In this article, Toptal Freelance Software Engineer Daniel Ivanov provides an ASP.NET-based solution to replace invalid cached items and assure high throughput and performance of web applications designed to handle a high load.


Daniel has helped startups bring products to markets for more than a decade using best-of-breed approaches to HTML/CSS, JS, Python, and C#.


There are only two hard things in Computer Science: cache invalidation and naming things.

- Author: Phil Karlton

A Brief Introduction to Caching

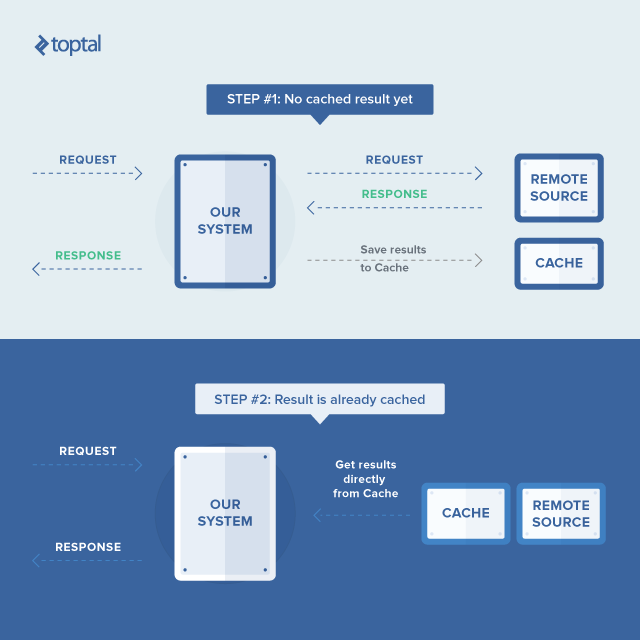

Caching is a powerful technique for increasing performance through a simple trick: Instead of doing expensive work (like a complicated calculation or complex database query) every time we need a result, the system can store – or cache – the result of that work and simply supply it the next time it is requested without needing to reperform that work (and can, therefore, respond tremendously faster).

Of course, the whole idea behind caching works only as long as the result we cached remains valid. And here we get to the actual hard part of the problem: How do we determine when a cached item has become invalid and needs to be recreated?


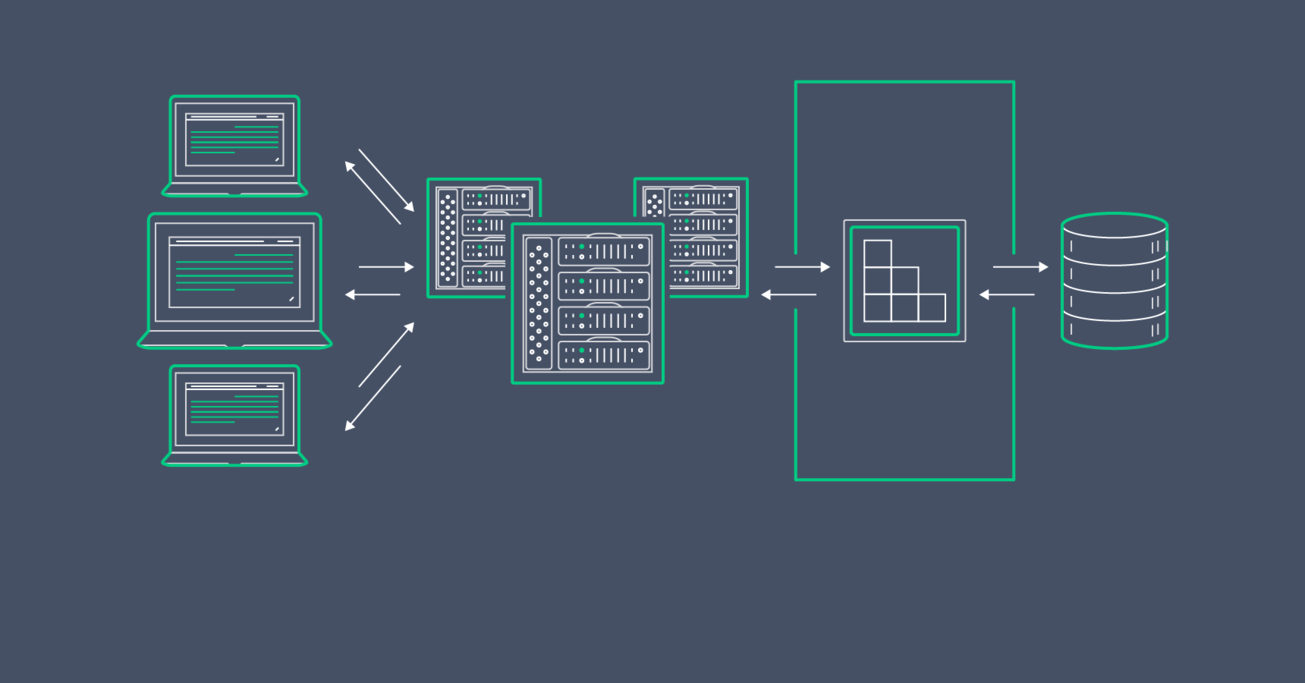

A typical web application has to deal with a much higher volume of read requests than write requests. In addition, a web application that is designed to handle a high load is usually architected to be scalable and distributed, deployed as a set of web tier nodes commonly called a farm. Both facts have an impact on the applicability of caching.

In this article, we focus on the role caching can play in assuring high throughput and performance of web applications designed to handle a high load, and I am going to use the experience from one of my projects and provide an ASP.NET-based solution as an illustration.

The Problem of Handling a High Load

The actual problem I had to solve wasn’t an original one. My task was to make a monolithic ASP.NET MVC web application prototype capable of handling a high load.

The necessary steps towards improving throughput capabilities of a monolithic web application are:

- Enable it to run multiple copies of the web application in parallel, behind a load balancer, and serve all concurrent requests effectively (i.e., make it scalable).

- Profile the application to reveal current performance bottlenecks and optimize them.

- Use caching to increase read request throughput, since read requests typically constitute a significant part of the application’s overall load.

Caching strategies often involve use of some middleware caching server, like Memcached or Redis, to store the cached values. Despite their high adoption and proven applicability, there are some downsides to these approaches, including:

- Network latencies introduced by accessing the separate cache servers can be comparable to the latencies of reaching the database itself.

- The web tier’s data structures can be unsuitable for serialization and deserialization out of the box. To use cache servers, those data structures should support serialization and deserialization, which requires ongoing additional development effort.

- Serialization and deserialization add runtime overhead with an adverse effect on performance.

All these issues were relevant in my case, so I had to explore alternative options.

The built-in ASP.NET in-memory cache (System.Web.Caching.Cache) is extremely fast and can be used without serialization and deserialization overhead, both during development and at runtime. However, the ASP.NET in-memory cache also has its own drawbacks:

- Each web tier node needs its own copy of cached values. This could result in higher database tier consumption upon node cold start or recycling.

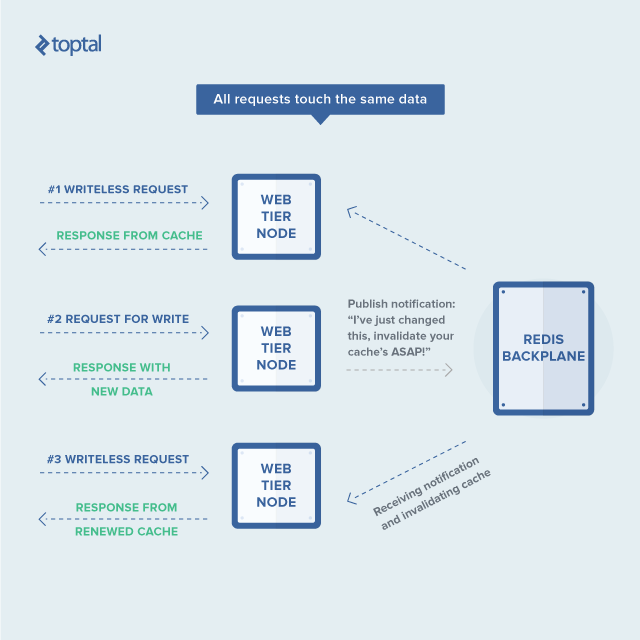

- Each web tier node should be notified when another node makes any portion of the cache invalid by writing updated values. Since every node holds its own copy of the cache, without proper synchronization most of the nodes will keep returning old values, which is typically unacceptable.

If the additional database tier load won’t lead to a bottleneck by itself, then implementing a properly distributed cache seems like an easy task to handle, right? Well, it’s not an easy task, but it is possible. In my case, benchmarks showed that the database tier shouldn’t be a problem, as most of the work happened in the web tier. So, I decided to go with the ASP.NET in-memory cache and focus on implementing the proper synchronization.

Introducing an ASP.NET-based Solution

As explained, my solution was to use the ASP.NET in-memory cache instead of the dedicated caching server. This entails each node of the web farm having its own cache, querying the database directly, performing any necessary calculations, and storing results in a cache. This way, all cache operations will be blazing fast thanks to the in-memory nature of the cache. Typically, cached items have a clear lifetime and become stale upon some change or writing of new data. So, from the web application logic, it is usually clear when the cache item should be invalidated.

The only problem left here is that when one of the nodes invalidates a cache item in its own cache, no other node will know about this update. So, subsequent requests serviced by other nodes will deliver stale results. To address this, each node should share its cache invalidations with the other nodes. Upon receiving such invalidation, other nodes could simply drop their cached value and get a new one at the next request.

Here, Redis can come into play. The power of Redis, compared to other solutions, comes from its Pub/Sub capabilities. Every client of a Redis server can create a channel and publish some data on it. Any other client is able to listen to that channel and receive the related data, very similar to any event-driven system. This functionality can be used to exchange cache invalidation messages between the nodes, so all nodes will be able to invalidate their cache when it is needed.
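To make the idea concrete, here is a minimal sketch of invalidation fan-out between nodes. It is not the project's code: `InMemoryBus` is a hypothetical in-process stand-in for a Redis Pub/Sub channel, and `Node` is a hypothetical model of one web farm node with its own local cache. In a real farm, the publish and subscribe calls would go through a Redis client instead.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical stand-in for a Redis Pub/Sub channel: every published
// message is delivered to every subscriber.
public sealed class InMemoryBus
{
    private readonly List<Action<string>> _subscribers = new List<Action<string>>();

    public void Subscribe(Action<string> handler) => _subscribers.Add(handler);

    public void Publish(string message)
    {
        foreach (var handler in _subscribers)
            handler(message);
    }
}

// Hypothetical model of one web farm node with its own local cache.
// Not thread-safe; it only illustrates the message flow.
public sealed class Node
{
    private readonly Dictionary<string, string> _cache = new Dictionary<string, string>();
    private readonly InMemoryBus _bus;

    public Node(InMemoryBus bus)
    {
        _bus = bus;
        // Every node, including the publishing one, drops the key when notified.
        _bus.Subscribe(key => _cache.Remove(key));
    }

    // Returns the cached value, or loads and caches it on a miss.
    public string Get(string key, Func<string> load)
    {
        if (!_cache.TryGetValue(key, out var value))
        {
            value = load();
            _cache[key] = value;
        }
        return value;
    }

    // Called after this node has written new data for the key.
    public void Invalidate(string key) => _bus.Publish(key);
}
```

After one node calls `Invalidate`, the next `Get` for that key on any node misses the local cache and reloads the value from the data source.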

ASP.NET’s in-memory cache is straightforward in some ways and complex in others. In particular, it is straightforward in that it works as a map of key/value pairs, yet there is a lot of complexity related to its invalidation strategies and dependencies.

Fortunately, typical use cases are simple enough, and it’s possible to use a default invalidation strategy for all the items, enabling each cache item to have only a single dependency at most. In my case, I ended up with the following ASP.NET code for the interface of the caching service. (Note that this is not the actual code, as I omitted some details for the sake of simplicity and because of the proprietary license.)

    public interface ICacheKey
    {
        string Value { get; }
    }

    public interface IDataCacheKey : ICacheKey { }

    public interface ITouchableCacheKey : ICacheKey { }

    public interface ICacheService
    {
        int ItemsCount { get; }

        T Get<T>(IDataCacheKey key, Func<T> valueGetter);

        T Get<T>(IDataCacheKey key, Func<T> valueGetter, ICacheKey dependencyKey);
    }

Here, the cache service basically allows two things. First, it enables storing the result of some value getter function in a thread-safe manner. Second, it ensures that the then-current value is always returned when it is requested. Once the cache item becomes stale or is explicitly evicted from the cache, the value getter is called again to retrieve a current value. The cache key was abstracted away by the ICacheKey interface, mainly to avoid hard-coding cache key strings all over the application.
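As an illustration of the "call the value getter once, then serve the stored result" behavior, here is a minimal sketch. It is not the original implementation (which sat on top of the ASP.NET in-memory cache): `SimpleCacheService`, `DataKey`, and the `Drop` method are hypothetical names, the store is a plain `ConcurrentDictionary`, and dependency keys are left out.

```csharp
using System;
using System.Collections.Concurrent;
using System.Threading;

public interface ICacheKey { string Value { get; } }
public interface IDataCacheKey : ICacheKey { }

// Hypothetical key type, used only for this sketch.
public sealed class DataKey : IDataCacheKey
{
    public DataKey(string value) { Value = value; }
    public string Value { get; }
}

// Hypothetical, simplified cache service without dependency keys.
public sealed class SimpleCacheService
{
    private readonly ConcurrentDictionary<string, Lazy<object>> _items =
        new ConcurrentDictionary<string, Lazy<object>>();

    public int ItemsCount => _items.Count;

    public T Get<T>(IDataCacheKey key, Func<T> valueGetter)
    {
        if (key == null) throw new ArgumentNullException(nameof(key));
        if (valueGetter == null) throw new ArgumentNullException(nameof(valueGetter));

        // Lazy<T> ensures the getter runs at most once per stored entry,
        // even if several threads miss the cache at the same time.
        // Caveat: in this mode a getter exception is cached with the entry.
        var lazy = _items.GetOrAdd(
            key.Value,
            _ => new Lazy<object>(() => valueGetter(),
                                  LazyThreadSafetyMode.ExecutionAndPublication));
        return (T)lazy.Value;
    }

    // Explicit eviction: the next Get calls the value getter again.
    public void Drop(IDataCacheKey key)
    {
        if (key == null) throw new ArgumentNullException(nameof(key));
        _items.TryRemove(key.Value, out _);
    }
}
```

Wrapping the getter in `Lazy<object>` is one common way to avoid running an expensive getter several times under concurrent misses; a lock per key would be an alternative.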

To invalidate cache items, I introduced a separate service, which looked like this:

    public interface ICacheInvalidator
    {
        bool IsSessionOpen { get; }

        void OpenSession();

        void CloseSession();

        void Drop(IDataCacheKey key);

        void Touch(ITouchableCacheKey key);

        void Purge();
    }

Besides the basic methods for dropping data items and for touching keys (keys that hold no data themselves and only have dependent data items), there are a few methods related to some kind of “session”.

Our web application used Autofac for dependency injection, which is an implementation of the inversion of control (IoC) design pattern for dependency management. This allows developers to create their classes without the need to worry about dependencies, as the IoC container manages that burden for them.

The cache service and cache invalidator have drastically different lifecycles regarding IoC. The cache service was registered as a singleton (one instance, shared between all clients), while the cache invalidator was registered as an instance per request (a separate instance was created for each incoming request). Why?

The answer has to do with an additional subtlety we needed to handle. The web application uses a Model-View-Controller (MVC) architecture, which mainly helps separate UI and logic concerns. In our application, a typical controller action was wrapped by a subclass of ActionFilterAttribute. In the ASP.NET MVC framework, such C# attributes are used to decorate the controller’s action logic in some way. That particular attribute was responsible for opening a new database connection and starting a transaction at the beginning of the action. At the end of the action, it was also responsible for committing the transaction in case of success and rolling it back in the event of failure.

If cache invalidation happened right in the middle of the transaction, there could be a race condition whereby the next request to that node would read the old value (still visible to other transactions until the commit) and successfully put it back into the cache. To avoid this, all invalidations are postponed until the transaction is committed. After that, cache items are safe to evict and, in the case of a transaction failure, there is no need to modify the cache at all.

That was the exact purpose of the “session”-related parts in the cache invalidator. Also, that is the purpose of its lifetime being bound to the request. The ASP.NET code looked like this:

    class HybridCacheInvalidator : ICacheInvalidator
    {
        ...

        public void Drop(IDataCacheKey key)
        {
            if (key == null)
                throw new ArgumentNullException("key");

            if (!IsSessionOpen)
                throw new InvalidOperationException("Session must be opened first.");

            _postponedRedisMessages.Add(new Tuple<string, string>("drop", key.Value));
        }

        ...

        public void CloseSession()
        {
            if (!IsSessionOpen)
                return;

            _postponedRedisMessages.ForEach(m => PublishRedisMessageSafe(m.Item1, m.Item2));

            _postponedRedisMessages = null;
        }

        ...
    }

The PublishRedisMessageSafe method here is responsible for sending the message (second argument) to a particular channel (first argument). In fact, there are separate channels for drop and touch, so the message handler for each of them knows exactly what to do: drop or touch the key equal to the received message payload.
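The interplay between the transaction and the invalidation session can be sketched as follows. This is a simplified illustration, not the original code: `PostponingInvalidator`, `RequestPipelineSketch`, and `DiscardSession` are hypothetical names, keys are plain strings, and the publish step is an injected delegate standing in for PublishRedisMessageSafe.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical, simplified invalidator: collects messages while a session
// is open and publishes them only when the session is closed.
public sealed class PostponingInvalidator
{
    private readonly Action<string, string> _publish; // (channel, key)
    private List<Tuple<string, string>> _postponed;   // null when no session is open

    public PostponingInvalidator(Action<string, string> publish)
    {
        _publish = publish ?? throw new ArgumentNullException(nameof(publish));
    }

    public bool IsSessionOpen => _postponed != null;

    public void OpenSession() => _postponed = new List<Tuple<string, string>>();

    public void Drop(string key)
    {
        if (key == null) throw new ArgumentNullException(nameof(key));
        if (!IsSessionOpen) throw new InvalidOperationException("Session must be opened first.");
        _postponed.Add(Tuple.Create("drop", key));
    }

    // Publishes everything collected during the session, then ends it.
    public void CloseSession()
    {
        if (!IsSessionOpen) return;
        foreach (var message in _postponed)
            _publish(message.Item1, message.Item2);
        _postponed = null;
    }

    // Assumption for this sketch: on rollback the messages are thrown away.
    public void DiscardSession() => _postponed = null;
}

// Hypothetical stand-in for the action filter that wraps a controller action.
public static class RequestPipelineSketch
{
    public static void Run(PostponingInvalidator invalidator,
                           Action work, Action commit, Action rollback)
    {
        invalidator.OpenSession();
        try
        {
            work();   // may call invalidator.Drop(...)
            commit(); // new values become visible to other transactions
        }
        catch
        {
            rollback();
            invalidator.DiscardSession(); // nothing changed, nothing to invalidate
            throw;
        }
        invalidator.CloseSession(); // publish only after a successful commit
    }
}
```

Because one instance accumulates messages for exactly one request, this design fits the per-request lifetime described above.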

One of the tricky parts was to manage the connection to the Redis server properly. If the server goes down for any reason, the application should continue to function correctly. When Redis is back online, the application should seamlessly resume using it and exchanging messages with other nodes. To achieve this, I used the StackExchange.Redis library, and the resulting connection management logic was implemented as follows:

    class HybridCacheService : ...
    {
        ...

        public void Initialize()
        {
            try
            {
                Multiplexer = ConnectionMultiplexer.Connect(_configService.Caching.BackendServerAddress);

                ...

                Multiplexer.ConnectionFailed += (sender, args) => UpdateConnectedState();
                Multiplexer.ConnectionRestored += (sender, args) => UpdateConnectedState();

                ...
            }
            catch (Exception ex)
            {
                ...
            }
        }

        private void UpdateConnectedState()
        {
            if (Multiplexer.IsConnected && _currentCacheService is NoCacheServiceStub) {
                _inProcCacheInvalidator.Purge();
                _currentCacheService = _inProcCacheService;
                _logger.Debug("Connection to remote Redis server restored, switched to in-proc mode.");
            } else if (!Multiplexer.IsConnected && _currentCacheService is InProcCacheService) {
                _currentCacheService = _noCacheStub;
                _logger.Debug("Connection to remote Redis server lost, switched to no-cache mode.");
            }
        }
    }

Here, ConnectionMultiplexer is a type from the StackExchange.Redis library that transparently manages the underlying Redis connection. The important part here is that, when a particular node loses its connection to Redis, it falls back to no-cache mode to make sure no request will receive stale data. After the connection is restored, the node purges its in-memory cache and starts to use it again.
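The switching behavior can be illustrated in isolation with the sketch below. All type names (`ISimpleCache`, `InProcCache`, `NoCacheStub`, `SwitchingCache`) are hypothetical, and the `OnConnectionChanged(bool)` method stands in for the connection events of the Redis client. The purge on reconnect reflects my reading of the snippet above: entries cached before the outage may have missed invalidation messages, so they cannot be trusted.

```csharp
using System;
using System.Collections.Concurrent;

// Hypothetical minimal cache abstraction for this sketch.
public interface ISimpleCache
{
    T Get<T>(string key, Func<T> valueGetter);
    void Purge();
}

// Stores values in process memory.
public sealed class InProcCache : ISimpleCache
{
    private readonly ConcurrentDictionary<string, object> _items =
        new ConcurrentDictionary<string, object>();

    public T Get<T>(string key, Func<T> valueGetter) =>
        (T)_items.GetOrAdd(key, _ => (object)valueGetter());

    public void Purge() => _items.Clear();
}

// Never stores anything: every call goes to the value getter.
public sealed class NoCacheStub : ISimpleCache
{
    public T Get<T>(string key, Func<T> valueGetter) => valueGetter();
    public void Purge() { }
}

// Delegates to the in-process cache while connected, to the stub otherwise.
public sealed class SwitchingCache : ISimpleCache
{
    private readonly InProcCache _inProc = new InProcCache();
    private readonly NoCacheStub _noCache = new NoCacheStub();
    private volatile ISimpleCache _current;

    public SwitchingCache() { _current = _inProc; }

    // Stand-in for the ConnectionFailed / ConnectionRestored handlers.
    public void OnConnectionChanged(bool isConnected)
    {
        if (isConnected && _current is NoCacheStub)
        {
            _inProc.Purge(); // old entries may have missed invalidations
            _current = _inProc;
        }
        else if (!isConnected && _current is InProcCache)
        {
            _current = _noCache;
        }
    }

    public T Get<T>(string key, Func<T> valueGetter) => _current.Get(key, valueGetter);

    public void Purge() => _current.Purge();
}
```

While disconnected, every request pays the full cost of the value getter, which trades throughput for correctness until the node can receive invalidations again.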

Here are examples of an action that does not use the cache service (SomeActionWithoutCaching) and an identical action that uses it (SomeActionUsingCache):

    class SomeController : Controller
    {
        public ISomeService SomeService { get; set; }

        public ICacheService CacheService { get; set; }

        ...

        public ActionResult SomeActionWithoutCaching()
        {
            // Calls the service on every request.
            return View(
                SomeService.GetModelData()
            );
        }

        ...

        public ActionResult SomeActionUsingCache()
        {
            // Calls the service only on a cache miss.
            return View(
                CacheService.Get(
                    /* Cache key creation omitted */,
                    () => SomeService.GetModelData()
                )
            );
        }
    }

A code snippet from an ISomeService implementation could look like this:

    class DefaultSomeService : ISomeService
    {
        public ICacheInvalidator _cacheInvalidator;

        ...

        public SomeModel GetModelData()
        {
            return /* Do something to get model data. */;
        }

        ...

        public void SetModelData(SomeModel model)
        {
            /* Do something to set model data. */

            _cacheInvalidator.Drop(/* Cache key creation omitted */);
        }
    }

Benchmarking and Results

After the caching ASP.NET code was all set, it was time to use it in the existing web application logic. Benchmarking helps decide where rewriting the code to use caching will pay off the most. It’s crucial to pick a few of the most common or most critical use cases to benchmark. After that, a tool like Apache JMeter can be used for two things:

- To benchmark these key use cases via HTTP requests.

- To simulate high load for the web node under test.

To get a performance profile, any profiler capable of attaching to the IIS worker process can be used. In my case, I used JetBrains dotTrace Performance. After some time spent experimenting to determine the correct JMeter parameters (such as concurrency and request count), it becomes possible to start collecting performance snapshots, which are very helpful in identifying hotspots and bottlenecks.

In my case, some use cases showed that about 15% to 45% of the overall code execution time was spent in database reads, which were the obvious bottlenecks. After I applied caching, performance nearly doubled for most of them.

Conclusion

As you can see, my case could seem like an example of what is usually called “reinventing the wheel”: Why bother to create something new when there are already best practices widely applied out there? Just set up Memcached or Redis, and let it go.

I definitely agree that usage of best practices is usually the best option. But before blindly applying any best practice, one should ask oneself: How applicable is this “best practice”? Does it fit my case well?

The way I see it, a proper analysis of options and tradeoffs is a must before making any significant decision, and that was the approach I chose because the problem was not an easy one. In my case, there were many factors to consider, and I did not want to take a one-size-fits-all solution when it might not be the right approach for the problem at hand.

In the end, with the proper caching in place, I got an almost 50% performance increase over the initial solution.


Daniel Ivanov

Moscow, Russia

Member since July 6, 2016
