CloudI: Bringing Erlang's Fault-Tolerance to Polyglot Development

Clouds must be efficient to provide useful fault-tolerance and scalability, but they also must be easy to use.

CloudI (pronounced “cloud-e” /klaʊdi/) is an open source cloud computing platform built in Erlang that is most closely related to the Platform as a Service (PaaS) clouds. CloudI differs in a few key ways, most importantly: software developers are not forced to use specific frameworks, slow hardware virtualization, or a particular operating system. By allowing cloud deployment to occur without virtualization, CloudI leaves the development process and runtime performance unimpeded, while quality of service can be controlled with clear accountability.

What makes a cloud a cloud?

The word “cloud” has become ubiquitous over the past few years, and its true meaning has become somewhat lost. In the most basic technological sense, these are the properties that a cloud computing platform must have:

- Fault-tolerance

- Scalability

And these are the properties that we’d like a cloud to have:

- Easy integration

- Simple deployment

My goal in building CloudI was to bring together these four attributes.

I began by looking at the Erlang programming language (on top of which CloudI is built). The Erlang virtual machine provides fault-tolerance features and a highly scalable architecture while the Erlang programming language keeps the required source code small and easy to follow.

It’s important to understand that few programming languages can provide real fault-tolerance with scalability. In fact, I’d say Erlang is roughly alone in this regard. Let me take a detour to explain why and how.

What is fault-tolerance in cloud computing?

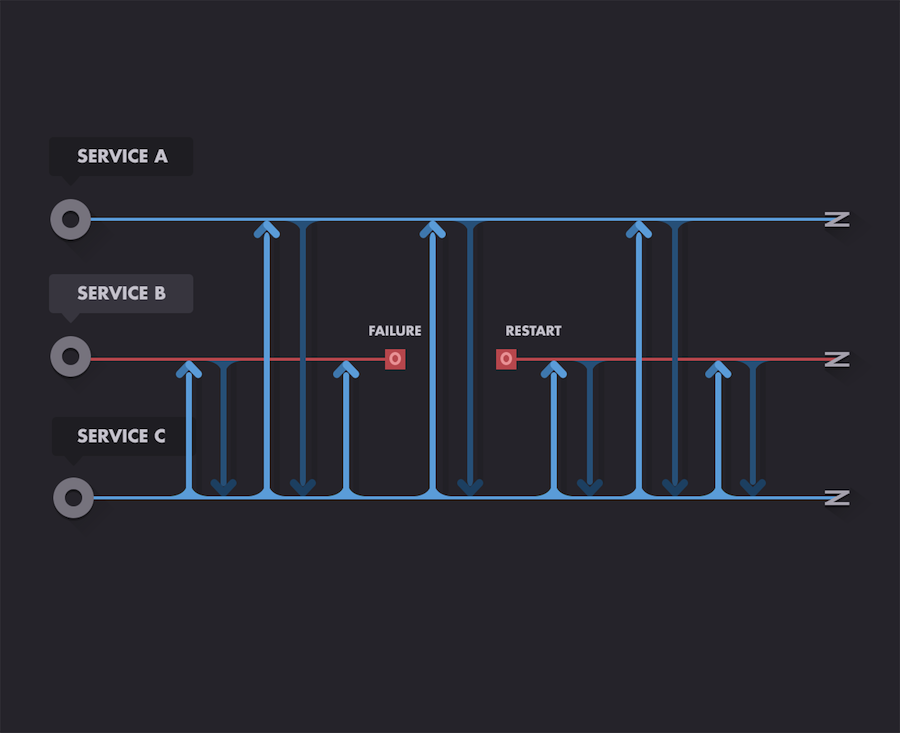

Fault-tolerance is robustness to error. That is, fault-tolerant systems are able to continue operating relatively normally even in the event of (hopefully isolated) errors.

Here, we see service C sending requests to services A and B. Although service B crashes temporarily, the rest of the system continues, relatively unimpeded.

Erlang tutorial for beginners

Erlang is known for achieving 9x9s of reliability (99.9999999% uptime, so less than 31.536 milliseconds of downtime per year) with real production systems (within the telecommunications industry). Normal web development techniques only achieve 5x9s reliability (99.999% uptime, so about 5.256 minutes of downtime per year), if they are lucky, due to slow update procedures and complete system failures. How does Erlang provide this advantage?
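The downtime figures above follow directly from the availability percentages. As a quick check, here is a small sketch that assumes a 365-day year (the function name is only illustrative):

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # 31,536,000 seconds in a non-leap year


def downtime_seconds_per_year(nines: int) -> float:
    """Downtime allowed per year for an availability with `nines` nines."""
    unavailability = 10 ** -nines  # e.g. 5 nines -> 0.00001
    return SECONDS_PER_YEAR * unavailability


# Nine nines, reported in milliseconds.
print(f"{downtime_seconds_per_year(9) * 1000:.3f} ms")  # 31.536 ms
# Five nines, reported in minutes.
print(f"{downtime_seconds_per_year(5) / 60:.3f} min")   # 5.256 min
```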

The Erlang virtual machine implements what is called an “Actor model”, a mathematical model for concurrent computation. Within the Actor model, Erlang’s lightweight processes are a concurrency primitive of the language itself. That is, within Erlang, we assume that everything is an actor. By definition, actors perform their actions concurrently; so if everything is an actor, we get inherent concurrency. (For more on Erlang’s Actor model, there’s a longer discussion here.)

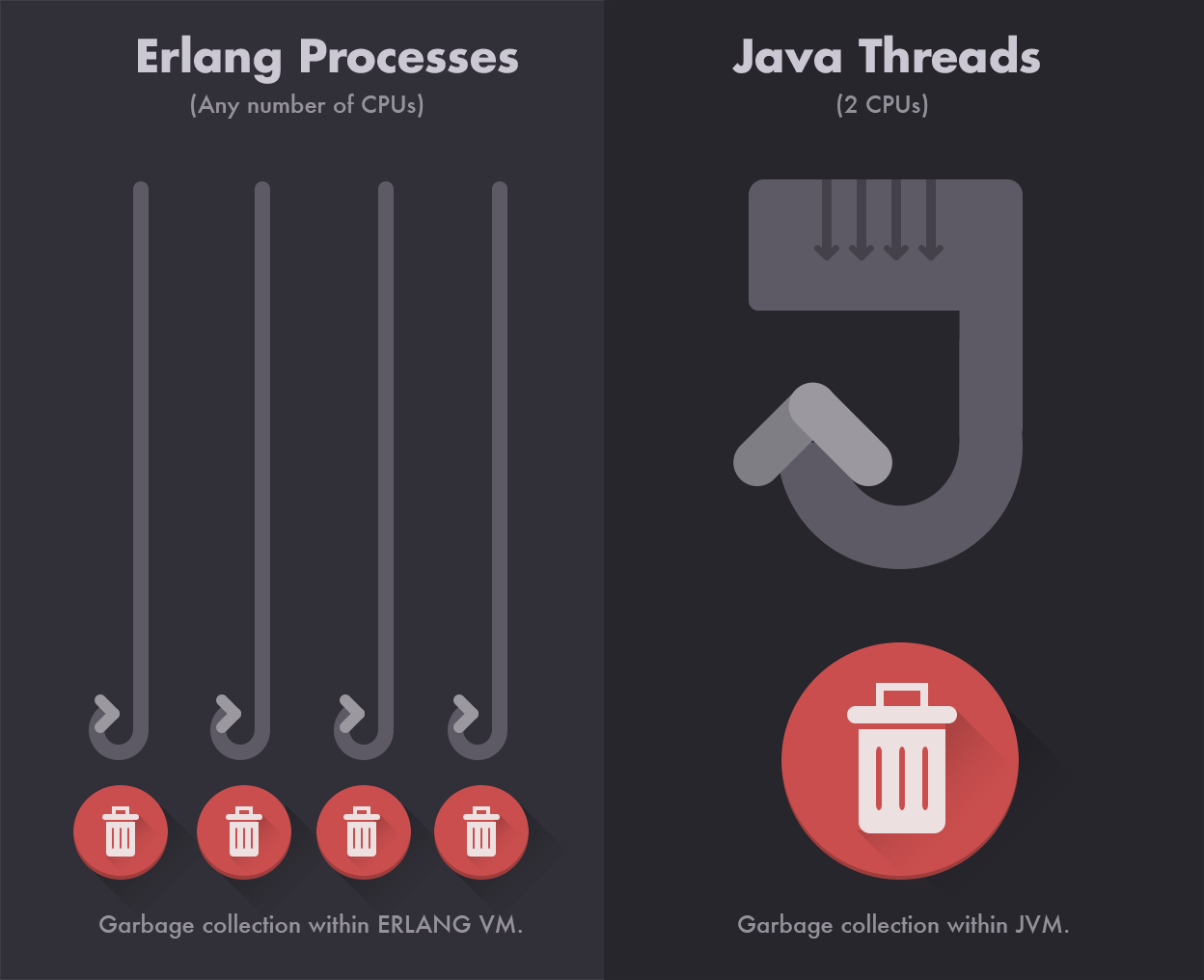

As a result, Erlang software is built with many lightweight processes that keep process state isolated while providing extreme scalability. When an Erlang process needs external state, a message is normally sent to another process, so that the message queuing can provide the Erlang processes with efficient scheduling. To keep the Erlang process state isolated, the Erlang virtual machine does garbage collection for each process individually so that other Erlang processes can continue running concurrently without being interrupted.
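To make the shape of this message passing concrete, here is a rough Python sketch of a single actor with a mailbox. It is only an analogy: Python threads share one heap and one garbage collector, so none of the isolation described above is actually provided, and the `Actor` class and `ask` helper are names invented for this illustration.

```python
import queue
import threading


class Actor(threading.Thread):
    """A toy actor: private state plus a mailbox, reached only by messages."""

    def __init__(self):
        super().__init__(daemon=True)
        self.mailbox = queue.Queue()
        self._count = 0  # private state, only touched by this actor's thread

    def run(self):
        while True:
            message, reply_to = self.mailbox.get()
            if message == "stop":
                break
            if message == "increment":
                self._count += 1
            elif message == "get":
                reply_to.put(self._count)


def ask(actor, message):
    """Send a message and wait for the reply on a private reply queue."""
    reply_to = queue.Queue()
    actor.mailbox.put((message, reply_to))
    return reply_to.get(timeout=1.0)


counter = Actor()
counter.start()
for _ in range(3):
    counter.mailbox.put(("increment", None))  # fire-and-forget messages
result = ask(counter, "get")  # mailbox is FIFO, so all increments come first
counter.mailbox.put(("stop", None))
counter.join(timeout=1.0)
```

No other thread reads or writes `_count` directly; the only way to observe it is to send a message and wait for the answer, which is the discipline Erlang enforces at the virtual machine level.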

Erlang virtual machine garbage collection differs in an important way from Java virtual machine garbage collection: Java depends on a single heap, which lacks the isolated state provided by Erlang. This difference means that Java is unable to provide basic fault-tolerance guarantees simply because of how the virtual machine collects garbage, even if libraries or language support were developed on top of the Java virtual machine. There have been attempts to develop fault-tolerance features in Java and other Java virtual machine based languages, but they continue to fail because of the Java virtual machine garbage collection.

Basically, building real-time fault-tolerance support on top of the JVM is by definition impossible, because the JVM itself is not fault-tolerant.

Erlang processes

At a low level, what happens when we get an error in an Erlang process? The language itself uses message passing between processes to ensure that any errors have a scope limited by a concurrent process. This works by storing data types as immutable objects; these objects are copied to limit the scope of the process state (large binaries are a special exception because they are reference counted to conserve memory).

In basic terms, that means that if we want to send variable X to another process P, we have to copy over X as its own immutable variable X’. We can’t modify X’ from our current process, and so even if we trigger some error, our second process P will be isolated from its effects. The end result is low-level control over the scope of any errors due to the isolation of state within Erlang processes. If we wanted to get even more technical, we’d mention that Erlang’s lack of mutability gives it referential transparency unlike, say, Java.
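The copy-on-send idea can be imitated in Python with an explicit deep copy. This is a sketch of the concept only: the `send` helper and the list standing in for process P's mailbox are hypothetical, and Python does nothing to stop code from sharing references if the copy is skipped.

```python
import copy


def send(mailbox: list, message):
    """Deliver a deep copy so the sender and receiver never share state."""
    mailbox.append(copy.deepcopy(message))


mailbox_p = []  # stands in for the mailbox of process P
x = {"account": "alice", "balance": [100]}
send(mailbox_p, x)

# The sender corrupts its own data after sending...
x["balance"].append(-999)

# ...but the receiver still sees the value as it was when it was sent.
received = mailbox_p[0]
```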

This type of fault-tolerance is deeper than just adding try-catch statements and exceptions. Here, fault-tolerance is about handling unexpected errors, and exceptions are expected. Here, you’re trying to keep your code running even when one of your variables unexpectedly explodes.

Erlang’s process scheduling provides extreme scalability for minimal source code, making the system simpler and easier to maintain. Other programming languages have been able to imitate the scalability found natively within Erlang by providing libraries with user-level threading (possibly combined with kernel-level threading) and data exchange (similar to message passing) to implement their own Actor model for concurrent computation. However, those efforts have been unable to replicate the fault-tolerance provided within the Erlang virtual machine.

This leaves Erlang alone amongst programming languages as being both scalable and fault-tolerant, making it an ideal development platform for a cloud.

Taking advantage of Erlang

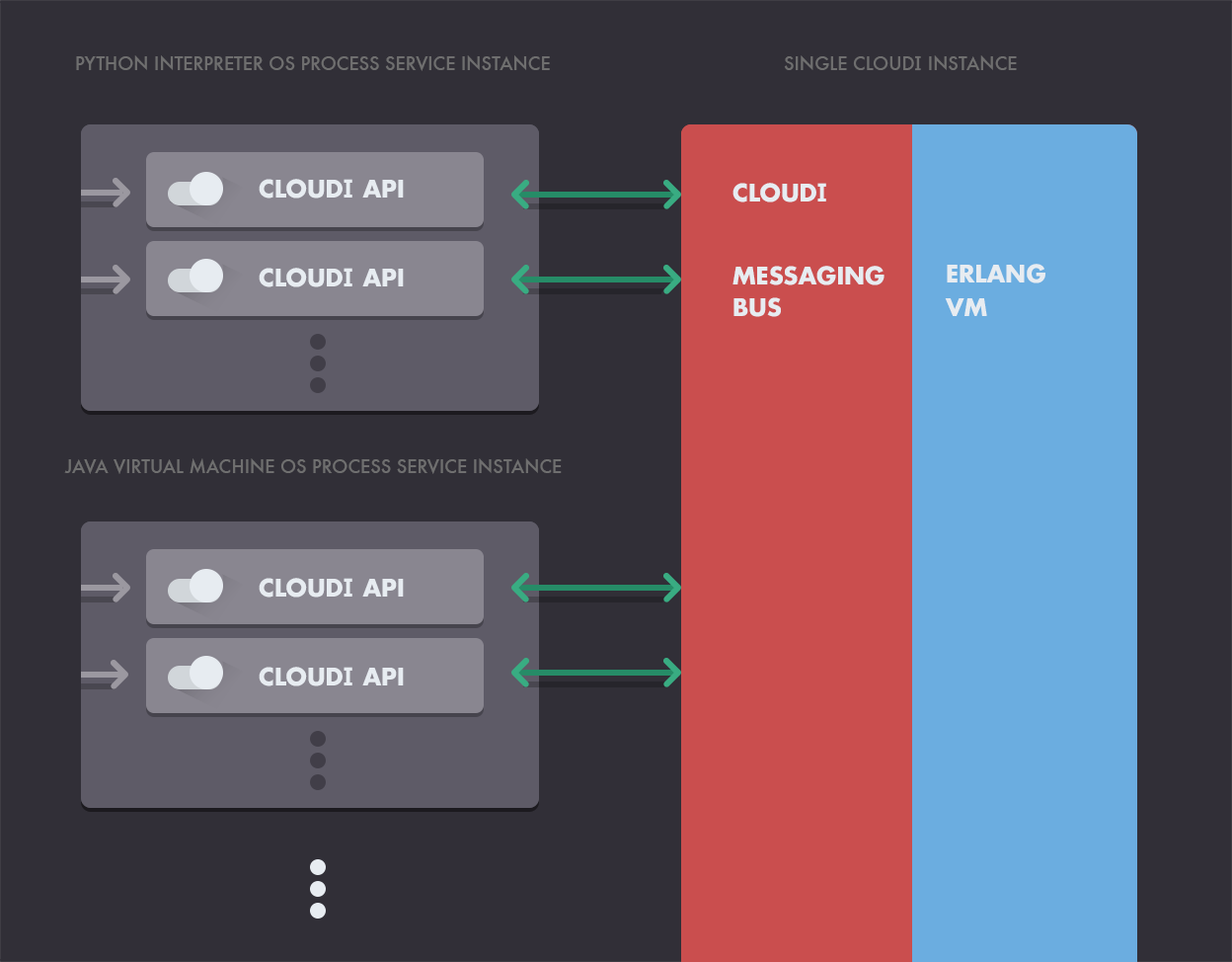

So, with all that said, I can make the claim that CloudI brings Erlang’s fault-tolerance and scalability to various other programming languages (currently C++/C, Erlang (of course), Java, Python, and Ruby), implementing services within a Service Oriented Architecture (SOA).

Every service executed within CloudI interacts with the CloudI API. All of the non-Erlang programming language services are handled using the same internal CloudI Erlang source code. Since the same minimal Erlang source code is used for all the non-Erlang programming languages, other programming language support can easily be added with an external programming language implementation of the CloudI API. Internally, the CloudI API is only doing basic serialization for requests and responses. This simplicity makes CloudI a flexible framework for polyglot software development, providing Erlang’s strengths without requiring the programmer to write or even understand a line of Erlang code.

The service configuration specifies startup parameters and fault-tolerance constraints so that service failures can occur in a controlled and isolated way. The startup parameters clearly define the executable and any arguments it needs, along with default timeouts used for service requests, the method used to find a service (called the “destination refresh method”), simple allow and deny access control lists (ACLs) to block outgoing service requests, and optional parameters that affect how service requests are handled. The fault-tolerance constraints are simply two integers (MaxR: maximum restarts, and MaxT: maximum time period in seconds) that control a service in the same way an Erlang supervisor behavior (an Erlang design pattern) controls Erlang processes. The service configuration provides explicit constraints for the lifetime of the service, which helps to make the service execution easy to understand, even when errors occur.
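One way to picture the MaxR and MaxT constraints is as a sliding window over restart times: a restart is allowed only while fewer than MaxR restarts have happened in the last MaxT seconds. The sketch below is an illustration of that idea, not CloudI's or Erlang's actual implementation; `RestartLimiter` is a made-up name, and timestamps are passed in explicitly to keep the example deterministic.

```python
from collections import deque


class RestartLimiter:
    """Allow at most max_r restarts within any window of max_t seconds."""

    def __init__(self, max_r: int, max_t: float):
        self.max_r = max_r
        self.max_t = max_t
        self._restarts = deque()  # timestamps of recent restarts

    def allow_restart(self, now: float) -> bool:
        # Forget restarts that have fallen out of the time window.
        while self._restarts and now - self._restarts[0] > self.max_t:
            self._restarts.popleft()
        if len(self._restarts) >= self.max_r:
            return False  # too many recent failures: do not restart
        self._restarts.append(now)
        return True


limiter = RestartLimiter(max_r=2, max_t=10.0)
decisions = [limiter.allow_restart(t) for t in (0.0, 1.0, 2.0, 20.0)]
# decisions == [True, True, False, True]
```

A real supervisor would stop the service for good at the first refusal rather than keep answering later; the final `True` only shows that old restarts age out of the window.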

To keep the service memory isolated during runtime, separate operating system processes are used for each non-Erlang service (referred to as “external” services) with an associated Erlang process (for each non-Erlang thread of execution) that is scheduled by the Erlang VM. The Erlang CloudI API creates “internal” services, which are also associated with an Erlang process, so both “external” services and “internal” services are processed in the same way within the Erlang VM.

In cloud computing, it’s also important that your fault-tolerance extends beyond a single computer (i.e., distributed system fault-tolerance). CloudI uses distributed Erlang communication to exchange service registration information, so that services can be utilized transparently on any instance of CloudI by specifying a single service name for a request made with the CloudI API. All service requests are load-balanced by the sending service and each service request is a distributed transaction, so the same service with separate instances on separate computers is able to provide system fault-tolerance within CloudI. If necessary, CloudI can be deployed within a virtualized operating system to provide the same system fault-tolerance while facilitating a stable development framework.
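As a simplified picture of sender-side load balancing, the sketch below keeps a table of service names and the instances registered for each, and lets the sender pick one. The `ServiceRegistry` class, the node and worker labels, and the random selection policy are all assumptions made for illustration; CloudI's real destination selection is governed by the configured destination refresh method.

```python
import random


class ServiceRegistry:
    """Map a service name to the instances that registered for it."""

    def __init__(self):
        self._instances = {}  # service name -> list of (node, worker) pairs

    def register(self, name: str, node: str, worker: str) -> None:
        self._instances.setdefault(name, []).append((node, worker))

    def unregister_node(self, node: str) -> None:
        # Drop every instance that lived on a node that went away.
        for name in list(self._instances):
            self._instances[name] = [
                instance for instance in self._instances[name]
                if instance[0] != node
            ]

    def pick(self, name: str, rng=random):
        instances = self._instances.get(name, [])
        if not instances:
            raise LookupError(f"no instance registered for {name!r}")
        return rng.choice(instances)  # the sender chooses the destination


registry = ServiceRegistry()
registry.register("/accounts/post", "node_a", "worker-1")
registry.register("/accounts/post", "node_b", "worker-1")

registry.unregister_node("node_a")         # simulate losing a computer
chosen = registry.pick("/accounts/post")   # the same name still resolves
```

The caller only ever names the service; which computer answers is decided at send time, so losing one node does not change the calling code.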

For example, if an HTTP request needs to store some account data in a database it could be made to the configured HTTP service (provided by CloudI) which would send the request to an account data service for processing (based on the HTTP URL which is used as the service name) and the account data would then be stored in a database. Every service request receives a Universally Unique IDentifier (UUID) upon creation, which can be used to track the completion of the service request, making each service request within CloudI a distributed transaction. So, in the account data service example, it is possible to make other service requests either synchronously or asynchronously and use the service request UUIDs to handle the response data before utilizing the database. Having the explicit tracking of each individual service request helps ensure that service requests are delivered within the timeout period of a request and also provides a way to uniquely identify the response data (the service request UUIDs are unique among all connected CloudI nodes).
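The bookkeeping behind UUID-tracked requests can be sketched as a table of pending requests keyed by transaction id. Everything here is hypothetical (the `PendingRequests` class, the service names, and the use of random version 4 UUIDs); it only shows how an id lets a sender match a response to its request and reject one that arrives after the deadline.

```python
import uuid


class PendingRequests:
    """Track outstanding service requests by transaction id."""

    def __init__(self):
        self._pending = {}  # trans_id -> (service name, deadline in ms)

    def send_async(self, name: str, timeout_ms: int, now_ms: int) -> uuid.UUID:
        trans_id = uuid.uuid4()  # assumption: any unique id works for the sketch
        self._pending[trans_id] = (name, now_ms + timeout_ms)
        return trans_id

    def receive(self, trans_id: uuid.UUID, response, now_ms: int):
        name, deadline = self._pending.pop(trans_id)
        if now_ms > deadline:
            return None  # the response arrived too late: treat it as a timeout
        return (name, response)


requests = PendingRequests()
id_a = requests.send_async("/accounts/validate", timeout_ms=5000, now_ms=0)
id_b = requests.send_async("/accounts/audit", timeout_ms=1000, now_ms=0)

on_time = requests.receive(id_a, "valid", now_ms=200)     # within 5000 ms
too_late = requests.receive(id_b, "logged", now_ms=1500)  # past 1000 ms
```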

With a typical deployment, each CloudI node could contain a configured instance of the account data service (which may utilize multiple operating system processes with multiple threads that each have a CloudI API object) and an instance of the HTTP service. An external load balancer would easily split the HTTP requests between the CloudI nodes and the HTTP service would route each request as a service request within CloudI, so that the account data service can easily scale within CloudI.

CloudI in action

CloudI lets you take unscalable legacy source code, wrap it with a thin CloudI service, and then execute the legacy source code with explicit fault-tolerance constraints. This particular development workflow is important for fully utilizing multicore machines while providing a distributed system that is fault-tolerant during the processing of real-time requests. Creating an “external” service in CloudI is simply instantiating the CloudI API object in all the threads that have been configured within the service configuration, so that each thread is able to handle CloudI requests concurrently. A simple service example can utilize the single main thread to create a single CloudI API object, like the following Python source code:

```python
import sys
sys.path.append('/usr/local/lib/cloudi-1.2.3/api/python/')
from cloudi_c import API

class Task(object):
    def __init__(self):
        self.__api = API(0) # first/only thread == 0

    def run(self):
        self.__api.subscribe('hello_world_python/get', self.__hello_world)
        self.__api.poll()

    def __hello_world(self, command, name, pattern, request_info, request,
                      timeout, priority, trans_id, pid):
        return 'Hello World!'

if __name__ == '__main__':
    assert API.thread_count() == 1 # simple example, without threads
    task = Task()
    task.run()
```

The example service returns “Hello World!” to an HTTP GET request by first subscribing with a service name and a callback function. When the service starts processing incoming CloudI service bus requests within the CloudI API poll function, incoming requests from the “internal” service that provides an HTTP server are routed to the example service based on the service name, because of the subscription. The service could also have returned no data as a response, if the request needed to behave like a publish message in a typical distributed messaging API that provides publish/subscribe functionality. The example service wants to provide a response so that the HTTP server can pass it on to the HTTP client, so the request is a typical request/reply transaction.

Because a service can respond with either data or no data, the service callback function controls the messaging paradigm used, instead of the calling service. A request is therefore able to route through as many services as necessary to produce a response, as long as it completes within the timeout specified for the request. Timeouts are very important for real-time event processing, so each request specifies an integer timeout which follows the request as it routes through any number of services. The end result is that real-time constraints are enforced alongside fault-tolerance constraints, to provide a dependable service in the presence of any number of software errors.
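A timeout that follows a request can be thought of as a budget that shrinks at every hop. The sketch below assumes each service subtracts the time it spent before forwarding; the `forward` function, the service names, and the elapsed times are invented for the example and do not describe how CloudI accounts for time internally.

```python
def forward(timeout_ms: int, elapsed_ms: int) -> int:
    """Return the timeout left for the next hop, or raise if it is used up."""
    remaining = timeout_ms - elapsed_ms
    if remaining <= 0:
        raise TimeoutError("request expired before it could be forwarded")
    return remaining


timeout = 5000  # milliseconds allowed for the whole request
hops = [("http", 20), ("account_data", 150), ("database", 300)]
for service, elapsed in hops:
    timeout = forward(timeout, elapsed)  # 4980, then 4830, then 4530
```

Because the same shrinking number travels with the request, a slow service deep in the chain cannot push the overall response past the deadline the original caller asked for.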

The presence of source code bugs in any software should be understood as a clear fact that is only mitigated with fault-tolerance constraints. Software development can reduce the presence of bugs as software is maintained, but it can also add bugs as software features are added. CloudI provides cloud computing which is able to address these important fault-tolerance concerns within real-time distributed systems software development. As I have demonstrated in this CloudI and Erlang tutorial, the cloud computing that CloudI provides is minimal so that efficiency is not sacrificed for the benefits of cloud computing.

Michael Truog

Seattle, WA, United States

Member since April 4, 2016

About the author

Michael is a distributed systems and fault tolerance expert, having worked with AT&T, E*Trade, Nokia and others.
