Zero to Hero: Flask Production Recipes

Flask is a great way to get up and running quickly with a Python application, but what if you wanted to make something a bit more robust?

In this article, Toptal Freelance Python Developer Ivan Poleschyuk shares some tips and useful recipes for building a complete production-ready Flask application.

Ivan is a passionate machine learning engineer and full-stack software developer with a master’s degree in computer science.

As a machine learning engineer and computer vision expert, I find myself creating APIs and even web apps with Flask surprisingly often. In this post, I want to share some tips and useful recipes for building a complete production-ready Flask application.

We will cover the following topics:

- Configuration management. Any real-life application has a lifecycle with specific stages: at the very least, development, testing, and deployment. At each stage, the application code runs in a slightly different environment, which requires a different set of settings, such as database connection strings, external API keys, and URLs.

- Self-hosting a Flask application with Gunicorn. Flask’s built-in web server is not suitable for production, so the app needs to be put behind a real web server able to communicate with Flask through the WSGI protocol. A common choice for that is Gunicorn, a Python WSGI HTTP server.

- Serving static files and proxying requests with Nginx. Although Gunicorn is an HTTP server, it is an application server that is not suited to face the web directly. That’s why we need Nginx as a reverse proxy and to serve static files. If we need to scale our application up to multiple servers, Nginx will take care of load balancing as well.

- Deploying an app inside Docker containers on a dedicated Linux server. Containerized deployment has been an essential part of software design for quite a long time now. Our application is no different and will be neatly packaged in its own container (multiple containers, in fact).

- Configuring and deploying a PostgreSQL database for the application. Database structure and migrations will be managed by Alembic with SQLAlchemy providing object-relational mapping.

- Setting up a Celery task queue to handle long-running tasks. Every application will eventually need this to offload time- or computation-intensive processes (mail sending, automatic database housekeeping, or processing of uploaded images) from web server threads to external workers.

Creating the Flask App

Let’s start by creating the application code and assets. Please note that I will not address proper Flask application structure in this post. The demo app consists of a minimal number of modules and packages for the sake of brevity and clarity.

First, create a directory structure and initialize an empty Git repository.

    mkdir flask-deploy
    cd flask-deploy

    # init Git repo
    git init

    # create folder structure (api holds the REST endpoints defined below)
    mkdir static tasks models config api

    # install required packages with pipenv, this will create a Pipfile
    # psycopg2-binary is the driver SQLAlchemy needs for postgresql:// URLs
    pipenv install flask flask-restful flask-sqlalchemy flask-migrate celery psycopg2-binary

    # create test static asset
    echo "Hello World!" > static/hello-world.txt

Next, we’ll add the code.

config/__init__.py

In the config module, we’ll define our tiny configuration management framework. The idea is to make the app behave according to the configuration preset selected by the APP_ENV environment variable and, if required, to allow any configuration setting to be overridden by an environment variable of the same name.

    import os
    import sys

    import config.settings

    # create settings object corresponding to specified env,
    # e.g. APP_ENV=Production selects ProductionConfig
    APP_ENV = os.environ.get('APP_ENV', 'Dev')
    _current = getattr(sys.modules['config.settings'], '{0}Config'.format(APP_ENV))()

    # copy attributes to the module for convenience
    for atr in [f for f in dir(_current) if '__' not in f]:
        # environment can override anything (overrides always arrive as strings)
        val = os.environ.get(atr, getattr(_current, atr))
        setattr(sys.modules[__name__], atr, val)


    def as_dict():
        """Return the module-level names of the config package as a dict."""
        res = {}
        for atr in [f for f in dir(config) if '__' not in f]:
            val = getattr(config, atr)
            res[atr] = val
        return res

config/settings.py

This is a set of configuration classes, one of which is selected by the APP_ENV variable. When the application runs, the code in __init__.py will instantiate one of these classes overriding the field values with specific environment variables, if they are present. We will use a final configuration object when initializing Flask and Celery configuration later.

    class BaseConfig():
        API_PREFIX = '/api'
        TESTING = False
        DEBUG = False


    class DevConfig(BaseConfig):
        FLASK_ENV = 'development'
        DEBUG = True
        SQLALCHEMY_DATABASE_URI = 'postgresql://db_user:db_password@db-postgres:5432/flask-deploy'
        CELERY_BROKER = 'pyamqp://rabbit_user:rabbit_password@broker-rabbitmq//'
        CELERY_RESULT_BACKEND = 'rpc://rabbit_user:rabbit_password@broker-rabbitmq//'


    class ProductionConfig(BaseConfig):
        FLASK_ENV = 'production'
        SQLALCHEMY_DATABASE_URI = 'postgresql://db_user:db_password@db-postgres:5432/flask-deploy'
        CELERY_BROKER = 'pyamqp://rabbit_user:rabbit_password@broker-rabbitmq//'
        CELERY_RESULT_BACKEND = 'rpc://rabbit_user:rabbit_password@broker-rabbitmq//'


    class TestConfig(BaseConfig):
        FLASK_ENV = 'development'
        TESTING = True
        DEBUG = True
        # make celery execute tasks synchronously in the same process
        CELERY_ALWAYS_EAGER = True
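One caveat of overriding settings from the environment is that environment variables are always strings, so an override such as DEBUG=False would be copied as the truthy string 'False'. The following is a minimal, self-contained sketch of the same preset-plus-override idea with type conversion added. The load_settings helper, the PRESETS mapping, and the list of "true" strings are assumptions made for illustration and are not part of the demo app.

```python
import os


class BaseConfig:
    API_PREFIX = '/api'
    TESTING = False
    DEBUG = False


class DevConfig(BaseConfig):
    DEBUG = True


class ProductionConfig(BaseConfig):
    pass


# Hypothetical lookup table; the article resolves the class by name instead.
PRESETS = {'Dev': DevConfig, 'Production': ProductionConfig}

# Hypothetical list of strings that count as "true" for boolean settings.
_TRUE_STRINGS = {'1', 'true', 'yes', 'on'}


def load_settings(environ=None):
    """Pick a preset by APP_ENV, then let environment variables override it."""
    environ = os.environ if environ is None else environ
    preset = PRESETS[environ.get('APP_ENV', 'Dev')]()
    settings = {}
    for name in dir(preset):
        if name.startswith('_'):
            continue
        default = getattr(preset, name)
        raw = environ.get(name)
        if raw is None:
            settings[name] = default
        elif isinstance(default, bool):
            # 'False' is a non-empty string and therefore truthy,
            # so convert it to a real boolean explicitly.
            settings[name] = raw.strip().lower() in _TRUE_STRINGS
        else:
            # Convert the override to the type of the preset value.
            settings[name] = type(default)(raw)
    return settings
```

Passing a plain dictionary instead of os.environ keeps the function easy to try out: load_settings({'APP_ENV': 'Production', 'DEBUG': 'False'}) yields a real False for DEBUG.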

tasks/__init__.py

The tasks package contains the Celery initialization code. The config package, which already has all settings copied to module level by the time it is imported, is used to update the Celery configuration object, in case we need Celery-specific settings in the future (for example, scheduled tasks and worker timeouts).

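    from celery import Celery

    import config


    def make_celery():
        # the broker URL comes from the active configuration preset
        celery = Celery(__name__, broker=config.CELERY_BROKER)
        # pass the remaining settings (for example, the result backend) to Celery
        celery.conf.update(config.as_dict())
        return celery


    celery = make_celery()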

tasks/celery_worker.py

This module is required to start and initialize a Celery worker, which will run in a separate Docker container. It initializes the Flask application context to have access to the same environment as the application. If that’s not required, these lines can be safely removed.

    from app import create_app

    # push an application context so tasks run in the same environment as the app
    app = create_app()
    app.app_context().push()

    from tasks import celery

api/__init__.py

Next goes the API package, which defines the REST API using the Flask-RESTful package. Our app is just a demo and will have only two endpoints:

- /process_data – Starts a dummy long operation on a Celery worker and returns the ID of a new task.

- /tasks/<task_id> – Returns the status of a task by task ID.

    import time

    from flask import jsonify
    from flask_restful import Api, Resource

    from tasks import celery
    import config

    api = Api(prefix=config.API_PREFIX)


    class TaskStatusAPI(Resource):
        def get(self, task_id):
            # look up the task by its ID and return whatever result it has so far
            task = celery.AsyncResult(task_id)
            return jsonify(task.result)


    class DataProcessingAPI(Resource):
        def post(self):
            # enqueue the task and return immediately with its ID
            task = process_data.delay()
            return {'task_id': task.id}, 200


    @celery.task()
    def process_data():
        # dummy long-running operation
        time.sleep(60)


    # data processing endpoint
    api.add_resource(DataProcessingAPI, '/process_data')

    # task status endpoint
    api.add_resource(TaskStatusAPI, '/tasks/<string:task_id>')
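To make the submit-then-poll pattern behind these two endpoints easier to see, here is a rough standard-library sketch that swaps Celery and RabbitMQ for an in-process queue and a worker thread. It is not how Celery is implemented. The submit, status, and worker functions are hypothetical names, and the state strings are borrowed from Celery’s task states purely for familiarity.

```python
import queue
import threading
import uuid

tasks = queue.Queue()
results = {}  # task_id -> {'state': ..., 'result': ...}
results_lock = threading.Lock()


def submit(func, *args):
    """Enqueue a job and return its ID right away, like process_data.delay()."""
    task_id = str(uuid.uuid4())
    with results_lock:
        results[task_id] = {'state': 'PENDING', 'result': None}
    tasks.put((task_id, func, args))
    return task_id


def status(task_id):
    """Look up a task by ID, like the /tasks/<task_id> endpoint."""
    with results_lock:
        return dict(results[task_id])


def worker():
    """Run queued jobs one by one until a None sentinel arrives."""
    while True:
        item = tasks.get()
        if item is None:
            break
        task_id, func, args = item
        try:
            outcome = {'state': 'SUCCESS', 'result': func(*args)}
        except Exception as exc:
            outcome = {'state': 'FAILURE', 'result': repr(exc)}
        with results_lock:
            results[task_id] = outcome
        tasks.task_done()
```

The caller gets an ID back immediately and the work happens elsewhere, which is the property that keeps web server threads free. With Celery, the queue lives in the broker and the worker runs in a separate container.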

models/__init__.py

Now we’ll add a SQLAlchemy model for the User object and the database engine initialization code. The User object won’t be used by our demo app in any meaningful way, but we’ll need it to make sure database migrations work and the Flask-SQLAlchemy integration is set up correctly.

    import uuid

    from flask_sqlalchemy import SQLAlchemy

    db = SQLAlchemy()


    class User(db.Model):
        id = db.Column(db.String(), primary_key=True, default=lambda: str(uuid.uuid4()))
        username = db.Column(db.String())
        email = db.Column(db.String(), unique=True)

Note how a UUID is generated automatically as the object ID by the default expression.
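The important detail is that the default is a callable, so a fresh value is produced for every object instead of one value being computed once when the class is defined. The sketch below shows the same idea without a database, using a dataclass as a hypothetical stand-in for the model. UserRecord is not part of the demo app, and SQLAlchemy invokes its default when the row is inserted rather than when the object is constructed.

```python
import uuid
from dataclasses import dataclass, field


@dataclass
class UserRecord:
    # Hypothetical stand-in for the User model; no database is involved.
    username: str
    email: str
    # default_factory is called once per new object, like a callable column default
    id: str = field(default_factory=lambda: str(uuid.uuid4()))


first = UserRecord('alice', 'alice@example.com')
second = UserRecord('bob', 'bob@example.com')
```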

app.py

Finally, let’s create a main Flask application file.

    import logging
    import os

    from flask import Flask
    from flask_migrate import Migrate

    import config
    from api import api
    from models import db

    logging.basicConfig(level=logging.DEBUG,
                        format='[%(asctime)s]: {} %(levelname)s %(message)s'.format(os.getpid()),
                        datefmt='%Y-%m-%d %H:%M:%S',
                        handlers=[logging.StreamHandler()])

    logger = logging.getLogger()
    # assumption: Flask-Migrate is registered here so that `flask db upgrade` is available
    migrate = Migrate()


    def create_app():
        logger.info(f'Starting app in {config.APP_ENV} environment')
        app = Flask(__name__)
        app.config.from_object('config')
        api.init_app(app)
        # initialize SQLAlchemy
        db.init_app(app)
        migrate.init_app(app, db)

        # define hello world page
        @app.route('/')
        def hello_world():
            return 'Hello, World!'

        return app


    if __name__ == "__main__":
        app = create_app()
        app.run(host='0.0.0.0', debug=True)

Here we are:

- Configuring basic logging in a proper format with time, level and process ID

- Defining the Flask app creation function with API initialization and “Hello, world!” page

- Defining an entry point to run the app during development time
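The logging setup in app.py inserts the process ID into the format string once, at configuration time. As a possible alternative, the standard logging module can resolve the PID for each record through the built-in %(process)d attribute, which may be preferable if logging is configured before worker processes are forked (for example, when Gunicorn is started with --preload). This is only a sketch: the logger name is hypothetical, and the output is captured in memory so the result can be inspected.

```python
import io
import logging
import os

stream = io.StringIO()  # capture output in memory instead of the terminal
handler = logging.StreamHandler(stream)
handler.setFormatter(logging.Formatter(
    '[%(asctime)s]: %(process)d %(levelname)s %(message)s',
    datefmt='%Y-%m-%d %H:%M:%S'))

demo_logger = logging.getLogger('format_demo')  # hypothetical logger name
demo_logger.setLevel(logging.DEBUG)
demo_logger.addHandler(handler)
demo_logger.propagate = False  # keep the demo output out of the root logger

demo_logger.info('Starting app in Dev environment')
line = stream.getvalue().strip()
pid_in_line = f' {os.getpid()} INFO ' in line
```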

wsgi.py

We will also need a separate module to run the Flask application with Gunicorn. It will have only two lines:

    from app import create_app

    app = create_app()
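The reason two lines are enough is that a Flask application object is itself a WSGI callable, and wsgi:app simply tells Gunicorn where to find it. For readers who have not seen the protocol, here is a bare-bones illustration using only the standard library. The application and call_app functions are hypothetical and stand in for the Flask app and for the server, respectively.

```python
from wsgiref.util import setup_testing_defaults


def application(environ, start_response):
    """A bare WSGI app, using the calling convention a WSGI server expects."""
    body = b'Hello, World!'
    start_response('200 OK', [('Content-Type', 'text/plain'),
                              ('Content-Length', str(len(body)))])
    return [body]


def call_app(app, path='/'):
    """Invoke a WSGI callable directly, roughly as a server would."""
    environ = {}
    setup_testing_defaults(environ)  # fill in the required WSGI keys
    environ['PATH_INFO'] = path
    captured = {}

    def start_response(status, headers, exc_info=None):
        captured['status'] = status
        captured['headers'] = dict(headers)

    body = b''.join(app(environ, start_response))
    return captured['status'], captured['headers'], body
```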

The application code is ready. Our next step is to create a Docker configuration.

Building Docker Containers

Our application will require multiple Docker containers to run:

- Application container to serve templated pages and expose API endpoints. It’s a good idea to split these two functions in production, but we don’t have any templated pages in our demo app. The container will run the Gunicorn web server, which will communicate with Flask through the WSGI protocol.

- Celery worker container to execute long tasks. This is the same application container, but with custom run command to launch Celery, instead of Gunicorn.

- Celery beat container—similar to above, but for tasks invoked on a regular schedule, such as removing accounts of users who never confirmed their email.

- RabbitMQ container. Celery requires a message broker to communicate between workers and the app, and to store task results. RabbitMQ is a common choice, but you can also use Redis or Kafka.

- Database container with PostgreSQL.

A natural way to manage multiple containers is to use Docker Compose. But first, we will need to create a Dockerfile to build a container image for our application. Let’s put it in the project directory.

    FROM python:3.7.2
    RUN pip install pipenv
    ADD . /flask-deploy
    WORKDIR /flask-deploy
    RUN pipenv install --system --skip-lock
    RUN pip install gunicorn[gevent]
    EXPOSE 5000
    CMD gunicorn --worker-class gevent --workers 8 --bind 0.0.0.0:5000 wsgi:app --max-requests 10000 --timeout 5 --keep-alive 5 --log-level info

This file instructs Docker to:

- Install all dependencies using Pipenv

- Add an application folder to the container

- Declare that the container listens on TCP port 5000 (the port is actually published to the host in docker-compose.yml)

- Set the container’s default startup command to a Gunicorn call

Let’s discuss in more detail what happens in the last line. It runs Gunicorn with gevent as the worker class. Gevent is a lightweight concurrency library for cooperative multitasking. It gives considerable performance gains on I/O-bound loads, providing better CPU utilization compared to OS preemptive multitasking for threads. The --workers parameter is the number of worker processes. It’s a good idea to set it equal to the number of cores on the server.
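Instead of hardcoding the worker count, it can be derived from the machine the container runs on. The helper below is a hypothetical sketch that builds the Gunicorn argument list with one worker per core, following the rule of thumb above. The Gunicorn documentation suggests (2 x cores) + 1 as another starting point, so treat the number as something to tune under real load.

```python
import os


def gunicorn_command(cores=None, bind='0.0.0.0:5000'):
    """Build the Gunicorn argument list with one gevent worker per core."""
    # os.cpu_count() can return None, so fall back to a single worker
    workers = cores or os.cpu_count() or 1
    return ['gunicorn', '--worker-class', 'gevent',
            '--workers', str(workers), '--bind', bind, 'wsgi:app']
```

The resulting list could be handed to subprocess.run or os.execvp from a small entry point script.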

Once we have a Dockerfile for application container, we can create a docker-compose.yml file, which will define all containers that the application will require to run.

    version: '3'

    services:
      broker-rabbitmq:
        image: "rabbitmq:3.7.14-management"
        environment:
          - RABBITMQ_DEFAULT_USER=rabbit_user
          - RABBITMQ_DEFAULT_PASS=rabbit_password

      db-postgres:
        image: "postgres:11.2"
        environment:
          - POSTGRES_USER=db_user
          - POSTGRES_PASSWORD=db_password
          # create the database named in SQLALCHEMY_DATABASE_URI
          # (the image defaults to a database named after POSTGRES_USER)
          - POSTGRES_DB=flask-deploy

      migration:
        build: .
        environment:
          - APP_ENV=${APP_ENV}
        command: flask db upgrade
        depends_on:
          - db-postgres

      api:
        build: .
        ports:
          - "5000:5000"
        environment:
          - APP_ENV=${APP_ENV}
        depends_on:
          - broker-rabbitmq
          - db-postgres
          - migration

      api-worker:
        build: .
        # global options such as -A go before the subcommand (required by Celery 5)
        command: celery -A tasks.celery --workdir=. worker --loglevel=info
        environment:
          - APP_ENV=${APP_ENV}
        depends_on:
          - broker-rabbitmq
          - db-postgres
          - migration

      api-beat:
        build: .
        command: celery -A tasks.celery beat --loglevel=info
        environment:
          - APP_ENV=${APP_ENV}
        depends_on:
          - broker-rabbitmq
          - db-postgres
          - migration

We defined the following services:

- broker-rabbitmq – A RabbitMQ message broker container. Connection credentials are defined by environment variables.

- db-postgres – A PostgreSQL container and its credentials.

- migration – An app container which will perform the database migration with Flask-Migrate and exit. API containers depend on it and will be started after it. Note that depends_on only controls start order; it does not wait for the migration to finish.

- api – The main application container.

- api-worker and api-beat – Containers running Celery workers for tasks received from the API and scheduled tasks.

Each app container will also receive the APP_ENV variable from the docker-compose up command.
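Because depends_on in this form only orders container startup, a dependent container can start before PostgreSQL or RabbitMQ is ready to accept connections. One common workaround is a small wait loop that runs before the real command. The following is a sketch of such a check; wait_for_port is a hypothetical helper that is not part of the demo app, and an open TCP port does not guarantee that the database is fully initialized.

```python
import socket
import time


def wait_for_port(host, port, timeout=30.0, interval=0.5):
    """Return True once a TCP connection succeeds, False if the timeout expires."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            # the connection is closed again immediately; we only probe the port
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            time.sleep(interval)
    return False
```

Inside the Compose network, a call such as wait_for_port('db-postgres', 5432) would use the service name as the host name.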

Once we have all application assets ready, let’s put them on GitHub, which will help us deploy the code on the server.

    git add *
    git commit -a -m 'Initial commit'
    git remote add origin git@github.com:your-name/flask-deploy.git
    git push -u origin master

Configuring the Server

Our code is on GitHub now, and all that’s left is to perform the initial server configuration and deploy the application. In my case, the server is an AWS instance running Amazon Linux. For other Linux flavors, instructions may differ slightly. I also assume that the server already has an external IP address, DNS is configured with an A record pointing to this IP, and SSL certificates are issued for the domain.

Security tip: Don’t forget to allow ports 80 and 443 for HTTP(S) traffic, port 22 for SSH in your hosting console (or using iptables) and close external access to all other ports! Be sure to do the same for the IPv6 protocol!

Installing Dependencies

First, we’ll need Nginx and Docker running on the server, plus Git to pull the code. Let’s log in via SSH and use a package manager to install them.

sudo yum install -y docker docker-compose nginx git

Configuring Nginx

The next step is to configure Nginx. The main nginx.conf configuration file is often good as-is. Still, be sure to check if it suits your needs. For our app, we’ll create a new configuration file in the conf.d folder. The top-level configuration has a directive to include all .conf files from it.

    cd /etc/nginx/conf.d
    sudo vim flask-deploy.conf

Here is a Flask site configuration file for Nginx, batteries included. It has the following features:

- SSL is configured. You should have valid certificates for your domain, e.g., a free Let’s Encrypt certificate.

- www.your-site.com requests are redirected to your-site.com.

- HTTP requests are redirected to the secure HTTPS port.

- Reverse proxy is configured to pass requests to local port 5000.

- Static files are served by Nginx from a local folder.

    server {
        listen 80;
        # the ssl parameter is required for the certificates below to be used
        listen 443 ssl;
        server_name www.your-site.com;

        # check your certificate path!
        ssl_certificate /etc/nginx/ssl/your-site.com/fullchain.crt;
        ssl_certificate_key /etc/nginx/ssl/your-site.com/server.key;
        # TLS 1.0 and 1.1 are deprecated; add TLSv1.3 if your Nginx and OpenSSL support it
        ssl_protocols TLSv1.2;
        ssl_ciphers HIGH:!aNULL:!MD5;

        # redirect to non-www domain
        return 301 https://your-site.com$request_uri;
    }

    # HTTP to HTTPS redirection
    server {
        listen 80;
        server_name your-site.com;
        return 301 https://your-site.com$request_uri;
    }

    server {
        listen 443 ssl;

        # check your certificate path!
        ssl_certificate /etc/nginx/ssl/your-site.com/fullchain.crt;
        ssl_certificate_key /etc/nginx/ssl/your-site.com/server.key;
        ssl_protocols TLSv1.2;
        ssl_ciphers HIGH:!aNULL:!MD5;

        # affects the size of files user can upload with HTTP POST
        client_max_body_size 10M;

        server_name your-site.com;

        location / {
            include /etc/nginx/mime.types;
            root /home/ec2-user/flask-deploy/static;

            # if static file not found - pass request to Flask
            try_files $uri @flask;
        }

        location @flask {
            add_header 'Access-Control-Allow-Origin' '*' always;
            add_header 'Access-Control-Allow-Methods' 'GET, POST, PUT, DELETE, OPTIONS';
            add_header 'Access-Control-Allow-Headers' 'DNT,User-Agent,X-Requested-With,If-Modified-Since,Cache-Control,Content-Type,Range,Authorization';
            add_header 'Access-Control-Expose-Headers' 'Content-Length,Content-Range';

            proxy_read_timeout 10;
            proxy_send_timeout 10;
            send_timeout 60;
            resolver_timeout 120;
            client_body_timeout 120;

            # set headers to pass request info to Flask
            proxy_set_header Host $http_host;
            proxy_set_header X-Forwarded-Proto $scheme;
            proxy_set_header X-Forwarded-For $remote_addr;
            proxy_redirect off;

            # no URI part here, so the original request URI
            # (including the query string) is passed on to Flask
            proxy_pass http://127.0.0.1:5000;
        }
    }

After editing the file, run sudo nginx -t to check the configuration for errors, then apply it with sudo nginx -s reload.
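The routing decision made by the try_files $uri @flask directive in the main server block can be hard to picture from the configuration alone. The sketch below mimics it in Python: if the request path maps to an existing file under the static root, that file is served, and otherwise the request goes to Flask. The route_request function is purely illustrative and simplifies what Nginx actually does.

```python
from pathlib import Path


def route_request(static_root, uri):
    """Mimic `try_files $uri @flask`: serve a static file if present, else proxy."""
    root = Path(static_root).resolve()
    candidate = (root / uri.lstrip('/')).resolve()
    # refuse paths that escape the static root, e.g. through '..' segments
    inside_root = candidate == root or root in candidate.parents
    if inside_root and candidate.is_file():
        return ('static', str(candidate))
    return ('flask', uri)
```

With the demo layout, /hello-world.txt resolves to the static file, while / and /api/process_data fall through to the Flask application.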

Setting Up GitHub Credentials

It is good practice to have a separate “deployment” VCS account for deploying the project and for the CI/CD system. This way you don’t risk exposing your own account’s credentials. To further protect the project repository, you can also limit the permissions of such an account to read-only access. For a GitHub repository, you’ll need an organization account to do that. To deploy our demo application, we’ll just create an SSH key pair on the server and register the public key on GitHub to get access to our project without entering credentials every time.

To create a new SSH key, run:

    cd ~/.ssh
    # -f names the private key file; the public key is written to id_rsa.pub
    ssh-keygen -b 2048 -t rsa -f id_rsa -q -N "" -C "deploy"

Then log in on GitHub and add your public key from ~/.ssh/id_rsa.pub in account settings.

Deploying an App

The final steps are pretty straightforward—we need to get application code from GitHub and start all containers with Docker Compose.

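    cd ~
    # clone over SSH so that the key registered on GitHub is used
    git clone git@github.com:your-name/flask-deploy.git
    # docker-compose must be run from the project directory
    cd flask-deploy
    git checkout master
    APP_ENV=Production docker-compose up -d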

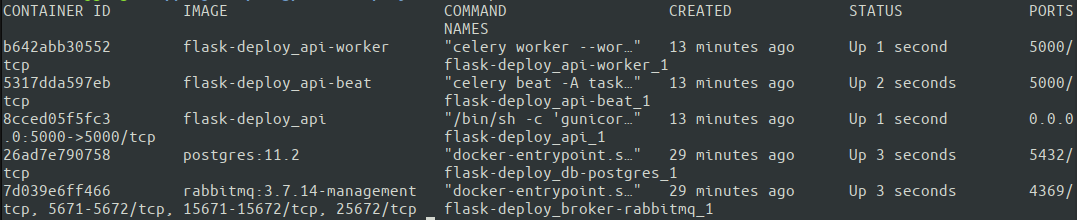

It might be a good idea to omit -d (which starts the container in detached mode) for a first run to see the output of each container right in the terminal and check for possible issues. Another option is to inspect each individual container with docker logs afterward. Let’s see if all of our containers are running with docker ps.

Great. All five containers are up and running. Docker Compose assigned container names automatically based on the service specified in docker-compose.yml. Now it’s time to finally test how the entire configuration works! It’s best to run the tests from an external machine to make sure the server has correct network settings.

    # test HTTP protocol, you should get a 301 response
    curl your-site.com

    # HTTPS request should return our Hello World message
    curl https://your-site.com

    # and nginx should correctly send test static file:
    curl https://your-site.com/hello-world.txt

That’s it. We have a minimalistic but fully production-ready configuration of our app running on an AWS instance. I hope it will help you start building a real-life application quickly and avoid some common mistakes! The complete code is available in a GitHub repository.

Conclusion

In this article, we discussed some of the best practices for structuring, configuring, packaging, and deploying a Flask application to production. This is a very big topic, impossible to fully cover in a single blog post. Here is a list of important topics we didn’t address:

- Continuous integration and continuous deployment

- Automatic testing

- Log shipping

- API monitoring

- Scaling up an application to multiple servers

- Protection of credentials in the source code

However, you can learn how to do that using some of the other great resources on this blog. For example, to explore logging, see Python Logging: An In-Depth Tutorial, or for a general overview on CI/CD and automated testing, see How to Build an Effective Initial Deployment Pipeline. I leave the implementation of these as an exercise to you, the reader.

Thanks for reading!

Understanding the basics

What is a Flask application?

A Flask application is a Python application built for the web with the Flask library.

Which is better, Flask or Django?

Both frameworks are suitable for a wide variety of web-related tasks. Flask is often used for building web services which are not full-fledged websites, and is known for its flexibility.

What is Gunicorn?

Gunicorn is a Python WSGI HTTP server. Its common use case is serving Flask or Django applications through the WSGI interface in production.

What is Celery and why use it with Flask?

Web applications, like those built with Flask, are not suited for running long tasks, such as video processing, inside a request. Celery is a task queue for handling such tasks in a convenient and asynchronous manner. Task data is stored in a supported back-end storage engine, like RabbitMQ or Redis.

Why use Docker Compose?

Docker Compose is a convenient tool for Docker that lets you work with containers at a higher level of abstraction by defining services. It also handles common scenarios of starting and stopping containers.

Why do we need an Nginx web server for Flask?

While Gunicorn is well-suited as an application server, it’s not a good idea to have it facing the internet because of security considerations and web request handling limitations. A common pattern is to use Nginx as a reverse-proxy in front of Gunicorn for routing requests between services.

When is PostgreSQL a good choice?

PostgreSQL is a mature, high-performance relational database engine with many plugins and libraries for all major programming languages. It’s a solid choice if your project, big or small, just needs a database. Plus, it’s open source and free.

Ivan Poleschyuk

Dubai, United Arab Emirates

Member since February 21, 2018
