Accelerate With BERT: NLP Optimization Models

Data collection and preparation slow down traditional NLP projects. However, transfer learning and BERT can reduce the amount of data required and change the way companies execute NLP projects.


Jesse is a chief data scientist, CTO, and founder who has launched four companies, including an NLP news-parsing solution that was acquired in 2018. He has consulted at top technology companies like Zalando and MariaDB and is currently the head of data science at THINKAlpha.


There are two primary difficulties when building deep learning natural language processing (NLP) classification models.

- Data collection (getting thousands or millions of classified data points)

- Deep learning architecture and training

Our ability to build complex deep learning models that are capable of understanding the complexity of language has typically required years of experience across these domains. The harder your problem and the more diverse your output, the more time you need to spend on each of these steps.

Data collection is burdensome, time-consuming, and expensive, and it is the number one limiting factor for successful NLP projects. Preparing data, building resilient pipelines, making choices amongst hundreds of potential preparation options, and getting “model ready” can easily take months of effort even with talented machine learning engineers. Finally, training and optimizing deep learning models require a combination of intuitive understanding, technical expertise, and an ability to stick with a problem.

In this article, we’ll cover:

- Trends in deep learning for NLP: How transfer learning is making world-class models open source

- Intro to BERT: An introduction to one of the more powerful NLP solutions available, Bidirectional Encoder Representations from Transformers (BERT)

- How BERT works and why it will change the way companies execute on NLP projects

Trends in Deep Learning

Naturally, the optimization of this process started with increasing accuracy. LSTM (long short-term memory) networks revolutionized many NLP tasks, but they were (and are) incredibly data-hungry. Optimizing and training those models can take days or weeks on large and expensive machines. Finally, deploying those large models in production is costly and cumbersome.

To reduce these complexity-creating factors, the field of computer vision has long made use of transfer learning. Transfer learning is the ability to use a model trained for a different but similar task to accelerate your solution on a new one. It takes far less effort to retrain a model that can already categorize trees than it does to train a new model to recognize bushes from scratch.

Imagine a scenario where someone had never seen a bush but had seen many trees in their lives. You would find it far easier to explain to them what a bush looks like in terms of what they know about trees rather than describing a bush from scratch. Transfer learning is a very human way to learn, so it makes intuitive sense that this would work in deep learning tasks.

BERT means you need less data, less training time, and you get more business value. The quality of NLP products that any business can build has become world-class.

In Comes BERT

BERT makes use of what are called transformers and is designed to produce sentence encodings. Essentially, BERT is a language model built on the transformer architecture. It’s purpose-built to give a contextual, numeric representation of a sentence or a string of sentences. That numeric representation is the input to a shallow and uncomplicated model. Not only that, but the results are generally superior and require a fraction of the input data, even for a task that has yet to be solved.

Imagine being able to spend a day collecting data instead of a year, and being able to build models around datasets that would otherwise never be large enough to train an LSTM model. The number of NLP tasks that this opens up for businesses that previously could not afford the development time and expertise is staggering.

How BERT Works

In traditional NLP, the starting point for model training is word vectors. Word vectors are a list of numbers [0.55, 0.24, 0.90, …] that attempt to numerically represent what that word means. With a numeric representation, we can use those words in training complex models, and with large word vectors, we can embed information about words into our models.
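To make the idea of a word vector concrete, here is a minimal sketch that compares words by the cosine similarity of their vectors. The three-dimensional vectors and the words chosen are invented purely for illustration; real embeddings are learned from data and typically have hundreds of dimensions.

```python
import math

# Hypothetical 3-dimensional word vectors, invented for illustration only.
word_vectors = {
    "tree": [0.55, 0.24, 0.90],
    "bush": [0.50, 0.30, 0.85],
    "invoice": [0.05, 0.95, 0.10],
}

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

tree_bush = cosine_similarity(word_vectors["tree"], word_vectors["bush"])
tree_invoice = cosine_similarity(word_vectors["tree"], word_vectors["invoice"])

# Words with related meanings should end up with a higher similarity.
print(f"tree vs. bush:    {tree_bush:.3f}")
print(f"tree vs. invoice: {tree_invoice:.3f}")
```

With these toy values, "tree" and "bush" point in nearly the same direction while "tree" and "invoice" do not, which is the property a downstream model exploits.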

BERT does something similar (in fact, its starting point is word vectors), but it creates a numeric representation of an entire input sentence (or sentences).

Compared to LSTM models, BERT does many things differently.

- It reads all the words at once rather than left-to-right or right-to-left

- 15% of the words are randomly selected for prediction during training time. Of these selected words, 80% are “masked” (literally replaced with the [MASK] token), and the remaining 20% are handled as follows:

- (a) 10% of the randomly selected words are left unchanged

- (b) 10% of the randomly selected words are replaced with random words

- (a) and (b) work together to stop the model from relying on the [MASK] token alone, forcing it to build a useful representation of every word in the sentence (models are lazy)

- BERT then attempts to predict the original words, and only the selected words contribute to the loss function, including the unchanged and randomly replaced words

- The model is also pre-trained on next-sentence prediction. In this step, the model tries to determine if a given sentence is the next sentence in the text
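The word-selection step described in the list above can be sketched in a few lines. This is an illustration rather than BERT’s actual implementation: it assumes the split used in the original BERT paper (of the selected words, 80% become [MASK], 10% become a random word, and 10% stay unchanged), it works on whole words instead of WordPiece tokens, and the function name `mask_tokens` and the tiny vocabulary are our own.

```python
import random

MASK_TOKEN = "[MASK]"

def mask_tokens(tokens, vocab, select_prob=0.15, rng=None):
    """Return (corrupted_tokens, targets) for masked-language-model training.

    targets maps each selected position to its original token; only these
    positions would contribute to the loss.
    """
    rng = rng or random.Random()
    corrupted = list(tokens)
    targets = {}
    for position, token in enumerate(tokens):
        if rng.random() >= select_prob:
            continue  # not selected: left as-is and ignored by the loss
        targets[position] = token
        roll = rng.random()
        if roll < 0.8:
            corrupted[position] = MASK_TOKEN         # 80%: replace with [MASK]
        elif roll < 0.9:
            corrupted[position] = rng.choice(vocab)  # 10%: replace with a random word
        # remaining 10%: keep the original word unchanged
    return corrupted, targets

sentence = "the mother boxes are separated and hidden in locations on the planet".split()
vocab = sorted(set(sentence))  # toy vocabulary, an assumption for this sketch
corrupted, targets = mask_tokens(sentence, vocab, rng=random.Random(7))
print(corrupted)
print(targets)
```

Because the model cannot tell which unmasked words were selected, it has to keep a meaningful representation of every position, not just the ones showing [MASK].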

Convergence is slow, and BERT takes a long time to train. However, it learns the contextual relationships in text far better. Word vectors are very shallow representations that limit the complexity that they can model—BERT does not have this limitation.

Many businesses made use of pre-trained LSTM models that utilized multiple GPUs and took days to train for their application. Compared to these older pre-trained models, BERT allows a team to accelerate solutions tenfold. One can move from identifying a business solution, to building a proof of concept, and finally to moving that concept into production in a fraction of the time. In addition, there are few cases where existing BERT models cannot be used in place or tuned to a specific use case.

Implementing BERT and Comparing Business Value

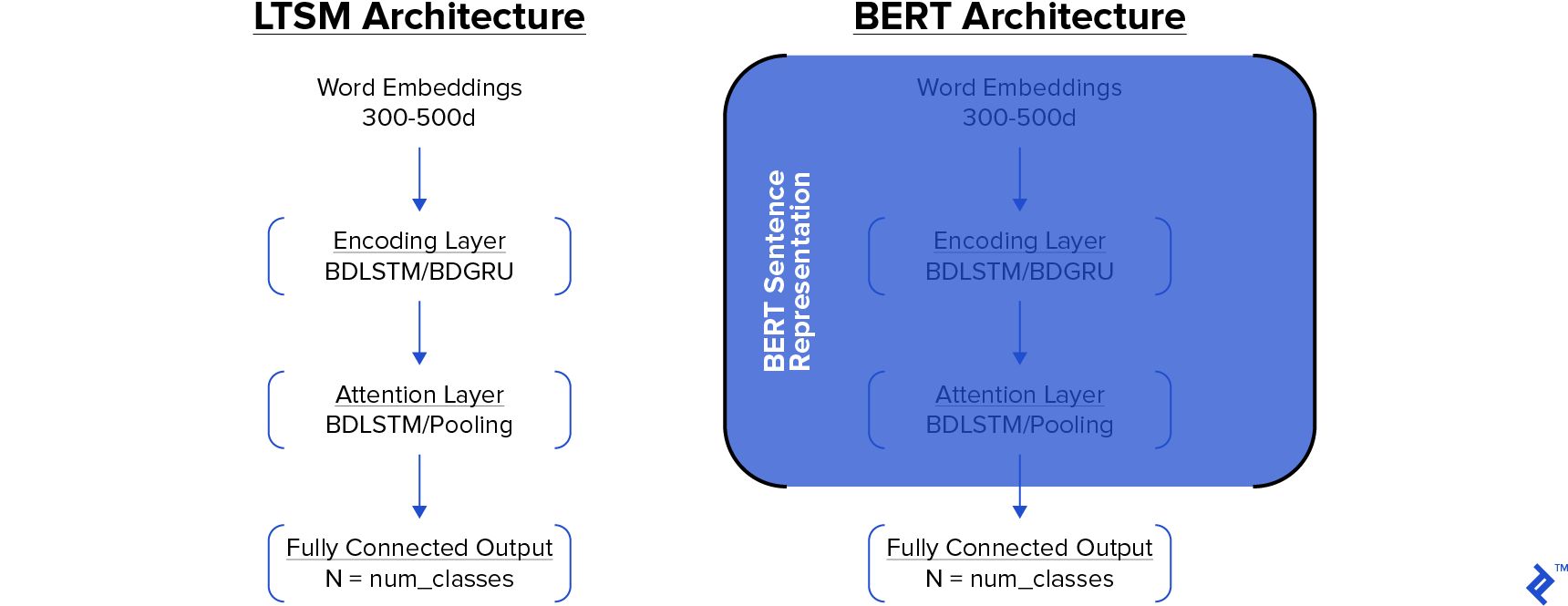

Since this article focuses on the business and engineering application of building a real product, we will create and train two models to better understand the comparative value.

- BERT: The most straightforward BERT pipeline. We process text in a standard way, we produce the BERT sentence encodings, and we feed those sentence encodings into a shallow neural network

- LSTM: The standard Embed - Encode - Attend - Predict architecture (pictured above)
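To show how little machinery the BERT pipeline needs once the encodings exist, here is a rough sketch of a single softmax layer trained on fixed sentence encodings. It is not the code behind this post: the four-dimensional vectors below are hand-made stand-ins for real BERT encodings (which have hundreds of dimensions), the three classes are arbitrary, and the function names and hyperparameters are assumptions chosen for illustration.

```python
import math
import random

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def class_scores(weights, biases, x):
    """Linear scores of one dense layer: one score per class."""
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, biases)]

def train_softmax_layer(encodings, labels, num_classes, epochs=200, lr=0.5, seed=0):
    """Train one dense softmax layer on fixed encodings with plain SGD."""
    rng = random.Random(seed)
    dim = len(encodings[0])
    weights = [[rng.uniform(-0.01, 0.01) for _ in range(dim)] for _ in range(num_classes)]
    biases = [0.0] * num_classes
    for _ in range(epochs):
        for x, y in zip(encodings, labels):
            probs = softmax(class_scores(weights, biases, x))
            for c in range(num_classes):
                # Gradient of cross-entropy loss with respect to score c.
                grad = probs[c] - (1.0 if c == y else 0.0)
                biases[c] -= lr * grad
                for j in range(dim):
                    weights[c][j] -= lr * grad * x[j]
    return weights, biases

def predict(weights, biases, x):
    """Index of the highest-scoring class."""
    scores = class_scores(weights, biases, x)
    return max(range(len(scores)), key=scores.__getitem__)

# Stand-in "sentence encodings": toy vectors, NOT real BERT output.
toy_encodings = [
    [0.9, 0.1, 0.0, 0.2], [0.8, 0.2, 0.1, 0.1],  # class 0
    [0.1, 0.9, 0.2, 0.0], [0.2, 0.8, 0.1, 0.1],  # class 1
    [0.0, 0.1, 0.9, 0.8], [0.1, 0.2, 0.8, 0.9],  # class 2
]
toy_labels = [0, 0, 1, 1, 2, 2]

weights, biases = train_softmax_layer(toy_encodings, toy_labels, num_classes=3)
predictions = [predict(weights, biases, x) for x in toy_encodings]
print(predictions)
```

In a real pipeline, the toy vectors would be replaced by encodings produced by a pre-trained BERT model, and the encoder itself would stay frozen while only this small layer is trained.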

The task? Predicting the origin of movies based on their plot from IMDB. Our dataset covers American, Australian, British, Canadian, Japanese, Chinese, South Korean, and Russian films in addition to sixteen other origins for a total of 24 origins. We have just under 35,000 total training examples.

Here is an example snippet from a plot.

Thousands of years ago, Steppenwolf and his legions of Parademons attempt to take over Earth with the combined energies of three Mother Boxes. They are foiled by a unified army that includes the Olympian Gods, Amazons, Atlanteans, mankind, and the Green Lantern Corps. After repelling Steppenwolf’s army, the Mother Boxes are separated and hidden in locations on the planet. In the present, mankind is in mourning over Superman, whose death triggers the Mother Boxes to reactivate and Steppenwolf’s return to Earth in an effort to regain favor with his master, Darkseid. Steppenwolf aims to gather the artifacts to form “The Unity”, which will destroy Earth’s ecology and terraform it in the image of…

If you had not guessed, this is the plot of Justice League, an American film.

The Results

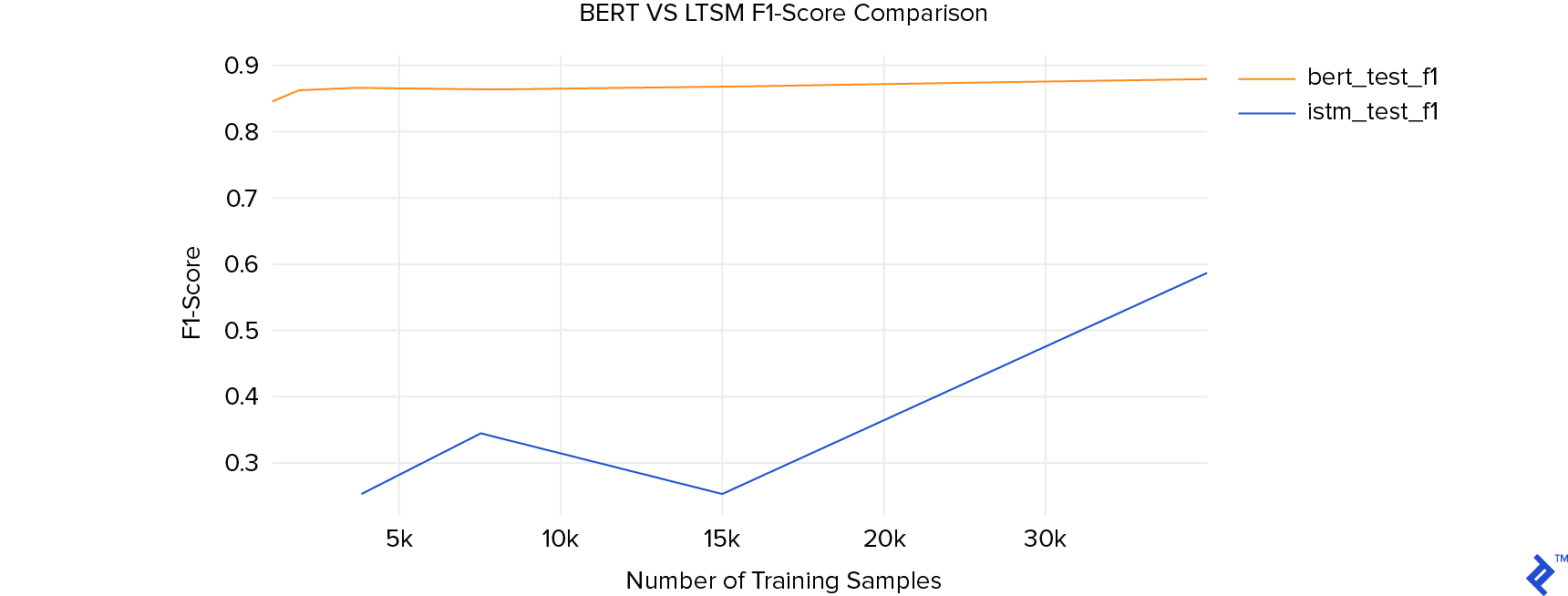

We trained models across a diverse set of parameters to understand how the results responded to varying amounts of data and model sizes. As we said, the most significant value add from BERT is the need for far less data.

For the LSTM model, we trained the largest model we could on our GPU and varied the size of the vocabulary and the word length to find the best performing model. For the BERT model, we used nothing more than a single layer on top of the sentence encodings.

We fixed our testing set across all these samples, so we are consistently scoring against the same test set.
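One way to set up this kind of experiment is sketched below: split once with a fixed seed, then draw training subsets of increasing size from the remaining pool so that every model is scored on identical test examples. The 20% test fraction, the seed, the subset sizes, and the function names are assumptions for illustration, not the values used in this post.

```python
import random

def fixed_split(examples, test_fraction=0.2, seed=42):
    """Shuffle once with a fixed seed, then split into (train_pool, test_set)."""
    shuffled = list(examples)
    random.Random(seed).shuffle(shuffled)
    test_size = int(len(shuffled) * test_fraction)
    return shuffled[test_size:], shuffled[:test_size]

def training_subsets(train_pool, sizes):
    """Yield (size, subset) pairs; each subset is a prefix of the same pool."""
    for size in sizes:
        yield size, train_pool[:size]

examples = list(range(100))  # placeholder for (plot, origin) pairs
train_pool, test_set = fixed_split(examples)

for size, subset in training_subsets(train_pool, sizes=[10, 20, 40, 80]):
    # A model would be trained on `subset` here and scored on `test_set`.
    print(f"train on {len(subset)} examples, score on {len(test_set)}")
```

Taking prefixes of one shuffled pool means each larger training set contains the smaller ones, so differences in score reflect the amount of data rather than a different draw.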

On this task, the model trained using BERT sentence encodings reaches an impressive F1-score of 0.84 after just 1,000 samples. The LSTM network never exceeds 0.60. Even more impressive, training the BERT models took on average 1/20th of the time required to train the LSTM models.
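For readers less familiar with the metric, here is a small sketch of how an F1-score can be computed for a multi-class task like this one. It uses macro averaging (the unweighted mean of the per-class F1-scores), which is an assumption on our part; the labels in the example are made up and are not results from the experiment.

```python
def macro_f1(true_labels, predicted_labels):
    """Unweighted mean of per-class F1 scores."""
    classes = sorted(set(true_labels) | set(predicted_labels))
    pairs = list(zip(true_labels, predicted_labels))
    f1_scores = []
    for cls in classes:
        true_pos = sum(1 for t, p in pairs if t == cls and p == cls)
        false_pos = sum(1 for t, p in pairs if t != cls and p == cls)
        false_neg = sum(1 for t, p in pairs if t == cls and p != cls)
        # F1 = 2TP / (2TP + FP + FN); defined as 0 when the class never appears.
        denominator = 2 * true_pos + false_pos + false_neg
        f1_scores.append(2 * true_pos / denominator if denominator else 0.0)
    return sum(f1_scores) / len(f1_scores)

# Made-up labels for illustration only.
true_origins = ["American", "American", "British", "Japanese"]
predicted_origins = ["American", "British", "British", "Japanese"]

score = macro_f1(true_origins, predicted_origins)
print(f"macro F1: {score:.3f}")
```

Macro averaging treats every origin equally regardless of how many films it has, which matters when some classes are much rarer than others.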

Conclusion

By any metric, these results point to a revolution in NLP. Using 100x less data and 20x less training time, we achieved world-class results. The ability to train high-quality models in seconds or minutes instead of hours or days opens up NLP in areas where it could not previously be afforded.

BERT has many more uses than the one in this post. There are multilingual models. It can be used to solve many different NLP tasks, either individually as in this post or simultaneously using multiple outputs. BERT sentence encodings are set to become a cornerstone of many NLP projects going forward.

The code behind this post is available on GitHub. I also encourage readers to check out Bert-as-a-service, which was a cornerstone of building the BERT sentence encodings for this post.


Understanding the basics

What is transfer learning?

Transfer learning is a machine learning method where a model developed and trained for one task is used as the starting point for a similar, related problem (e.g., NLP classification to named entity recognition).

What is BERT?

BERT is a powerful deep-learning model developed by Google based on the transformer architecture. BERT has shown state-of-the-art results on a number of the most common NLP tasks and can be used as a starting point for building NLP models in many domains.

How valuable is BERT in building production models?

BERT abstracts away some of the most complicated and time-consuming aspects of building an NLP model, and evidence has shown that BERT can be used to reduce the amount of data required to train a high-performing model by over 90%. BERT also reduces production complexity and development time while increasing accuracy.

Where do I start with BERT?

The best place to get started with BERT is by getting familiar with Bert-as-a-service. The code behind this post is available on GitHub and can also be used to jumpstart a new NLP project.

Calgary, AB, Canada

Member since June 4, 2019
