8 Automated Testing Best Practices for a Positive Testing Experience

Testing doesn’t have to be tedious. With these automated testing best practices and tips, software engineers can leverage automated tests to boost their productivity and make their work more enjoyable.


Lev is an accomplished C# and .NET developer who leverages test-driven development, static analysis, and in-depth knowledge of technologies to solve tasks with robust, clean code. At leading smart marine technology company Wärtsilä, he created an algorithm for 3D seafloor mapping that resolves conflicting data from thousands of nautical charts.

Previous Role

Senior Software Engineer

It’s no wonder many developers view testing as a necessary evil that saps time and energy: Testing can be tedious, unproductive, and entirely too complicated.

My first experience with testing was awful. I worked on a team that had strict code coverage requirements. The workflow was: implement a feature, debug it, and write tests to ensure full code coverage. The team didn’t have integration tests, only unit tests with tons of manually initialized mocks, and most unit tests tested trivial manual mappings while using a library to perform automatic mappings. Every test tried to assert every available property, so every change broke dozens of tests.

I disliked working with tests because they were perceived as a time-consuming burden. However, I didn’t give up. The confidence testing provides and the automation of checks after every small change piqued my interest. I started reading and practicing, and learned that tests, when done right, could be both helpful and enjoyable.

In this article, I share eight automated testing best practices I wish I had known from the beginning.

Why You Need an Automated Test Strategy

Automated testing is often focused on the future, but when you implement it correctly, you benefit immediately. Using tools that help you do your job better can save time and make your work more enjoyable.

Imagine you’re developing a system that retrieves purchase orders from the company’s ERP and places those orders with a vendor. You have the price of previously ordered items in the ERP, but the current prices may be different. You want to control whether to place an order at a lower or higher price. You have user preferences stored, and you’re writing code to handle price fluctuations.

How would you check that the code works as expected? You would probably:

- Create a dummy order in the developer’s instance of the ERP (assuming you set it up beforehand).

- Run your app.

- Select that order and start the order-placing process.

- Gather data from the ERP’s database.

- Request the current prices from the vendor’s API.

- Override prices in code to create specific conditions.

Once you stop at a breakpoint, you can step through the code to see what happens in one scenario, but there are many possible scenarios:

| Preference: allow higher price | Preference: allow lower price | ERP price | Vendor price | Should we place the order? |
|---|---|---|---|---|
| false | false | 10 | 10 | true |
| true | false | 10 | 11 | true |
| true | false | 10 | 9 | false |
| false | true | 10 | 11 | false |
| false | true | 10 | 9 | true |
| true | true | 10 | 11 | true |
| true | true | 10 | 9 | true |

(The first row stands for all four preference combinations. When the prices are equal, the other three combinations give the same result, so they are omitted.)

In case of a bug, the company may lose money, harm its reputation, or both. You need to check multiple scenarios and repeat the check loop several times. Doing so manually would be tedious. But tests are here to help!

Tests let you create any context without calls to unstable APIs. They eliminate the need for repetitive clicking through old and slow interfaces that are all too common in legacy ERP systems. All you have to do is define the context for the unit or subsystem and then any debugging, troubleshooting, or scenario exploring happens instantly—you run the test and you are back to your code. My preference is to set up a keybinding in my IDE that repeats my previous test run, giving immediate, automated feedback as I make changes.
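Here is a minimal Python sketch of that idea (the article's own stack is C#, but the principle carries over). It assumes the price rule implied by the scenario table and uses hypothetical names (`Preferences`, `should_place_order`). The vendor price arrives through a function parameter, so a test can supply the whole context with a one-line stub instead of calling the real API.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Preferences:
    # Hypothetical user preferences from the purchase-order scenario.
    allow_higher_price: bool
    allow_lower_price: bool


def should_place_order(prefs: Preferences, erp_price: float,
                       get_vendor_price: Callable[[], float]) -> bool:
    # Assumed rule, consistent with the scenario table: equal prices are always
    # fine; otherwise the matching preference decides.
    vendor_price = get_vendor_price()  # in production: a call to the vendor's API
    if vendor_price > erp_price:
        return prefs.allow_higher_price
    if vendor_price < erp_price:
        return prefs.allow_lower_price
    return True


def test_rejects_higher_price_when_not_allowed():
    prefs = Preferences(allow_higher_price=False, allow_lower_price=True)
    # The whole context is a stub: no ERP instance, no vendor API, no clicking.
    assert should_place_order(prefs, 10, lambda: 11) is False
```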

1. Maintain the Right Attitude

Compared to manual debugging and self-testing, automated tests are more productive from the very beginning, even before any testing code is committed. After you check that your code behaves as expected—by manually testing or perhaps, for a more complex module, by stepping through it with a debugger during testing—you can use assertions to define what you expect for any combination of input parameters.

With tests passing, you’re almost ready to commit, but not quite. Prepare to refactor your code because the first working version usually isn’t elegant. Would you perform that refactoring without tests? That’s questionable because you’d have to complete all the manual steps again, which could diminish your enthusiasm.

What about the future? While performing any refactoring, optimization, or feature addition, tests help ensure that a module still behaves as expected after you change it, thereby instilling lasting confidence and allowing developers to feel better equipped to tackle upcoming work.

It’s counterproductive to think about tests as a burden or as something that makes only code reviewers or leads happy. Tests are a tool that we as developers benefit from. We like it when our code works, and we don’t like spending time on repetitive actions or on fixing bugs.

Recently, I was refactoring my codebase and asked my IDE to clean up unused using directives. To my surprise, tests showed several failures in my email reporting system. The failures were legitimate: the cleanup had removed some using directives from the Razor (HTML + C#) code of an email template, and as a result, the template engine could not build valid HTML. I didn’t expect such a minor operation to break email reporting. Testing saved me from spending hours hunting bugs all over the app right before its release, at a point when I assumed that everything would work.

Of course, you have to know how to use these tools without cutting your proverbial fingers. It might seem that defining the context is tedious, even harder than running the app, and that tests require too much maintenance to keep them from becoming stale and useless. These are valid points, and we will address them.

2. Select the Right Type of Test

Developers often grow to dislike automated tests because they are trying to mock a dozen dependencies only to check if they’re called by the code. Alternatively, developers encounter a high-level test and try to reproduce every application state to check all variations in a small module. These patterns are unproductive and tedious, but we can avoid them by leveraging different test types as they were intended. (Tests should be practical and enjoyable, after all!)

Readers will need to know what unit tests are and how to write them, and be familiar with integration tests—if not, it’s worth pausing here to get up to speed.

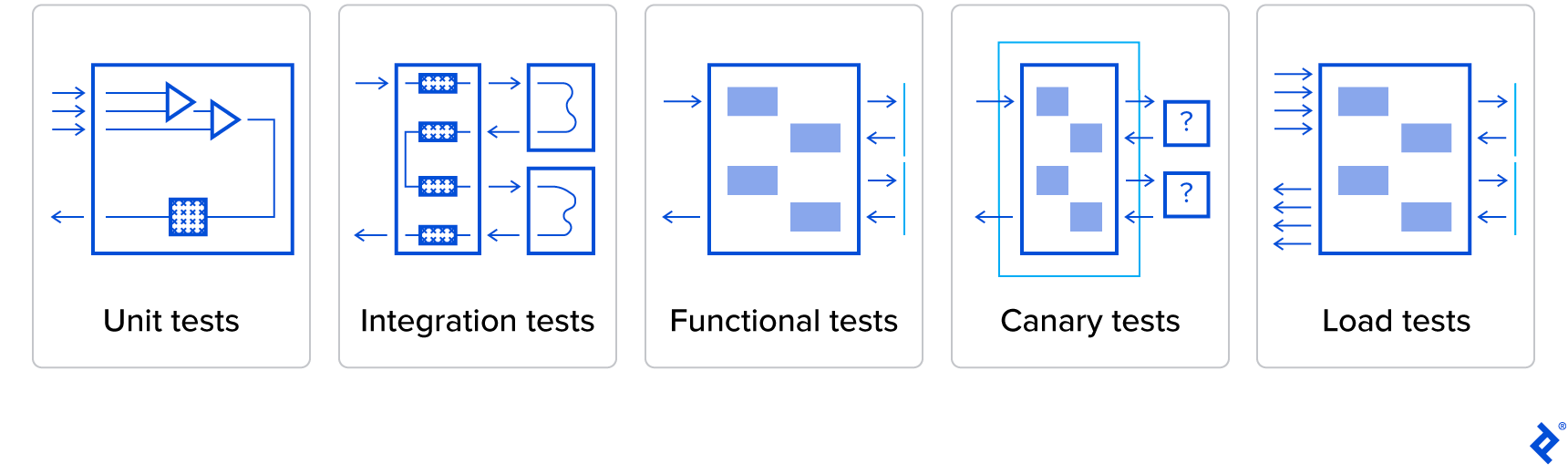

There are dozens of testing types, but these five common types make an extremely effective combination:

- Unit tests are used to test an isolated module by calling its methods directly. Dependencies are not under test, so they’re mocked.

- Integration tests are used to test subsystems. You still use direct calls to the module’s own methods, but here we care about dependencies, so don’t use mocked dependencies—only real (production) dependent modules. You can still use an in-memory database or mocked web server because these are mocks of infrastructure.

- Functional tests are tests for the whole application. You use no direct calls. Instead, all the interaction goes through the API or user interface—these are the tests from the end-user perspective. However, infrastructure is still mocked.

- Canary tests are similar to functional tests but with production infrastructure and a smaller set of actions. They’re used to ensure that newly deployed applications work.

- Load tests are similar to canary tests but with real staging infrastructure and an even smaller set of actions, which are repeated many times.

It’s not always necessary to work with all five testing types from the beginning. In most cases, you can go a long way with the first three types.

We’ll briefly examine the use cases of each type to help you select the right ones for your needs.

Unit Tests

Recall the example with different prices and handling preferences. It’s a good candidate for unit testing because we care only about what is happening inside the module, and the results have important business ramifications.

The module has many different combinations of input parameters, and we want to get a valid return value for every combination of valid arguments. Unit tests are good at ensuring validity because they provide direct access to the input parameters of the function or method, and you don’t have to write dozens of test methods to cover every combination. In many languages, you can avoid duplicating test methods by defining a single method that accepts the arguments your code needs along with the expected result. Then, you can use your test tooling to supply different sets of values and expectations to that parameterized method.
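As an illustration, the Python sketch below uses the standard `unittest` module with `subTest` to run one test method over every row of the scenario table. The `should_place_order` function and its rule are hypothetical reconstructions from that table, not code from a real system.

```python
import unittest


def should_place_order(allow_higher: bool, allow_lower: bool,
                       erp_price: float, vendor_price: float) -> bool:
    # Hypothetical rule reconstructed from the scenario table.
    if vendor_price > erp_price:
        return allow_higher
    if vendor_price < erp_price:
        return allow_lower
    return True


# One row per scenario: (allow_higher, allow_lower, erp_price, vendor_price, expected)
SCENARIOS = [
    (False, False, 10, 10, True),
    (True, False, 10, 11, True),
    (True, False, 10, 9, False),
    (False, True, 10, 11, False),
    (False, True, 10, 9, True),
    (True, True, 10, 11, True),
    (True, True, 10, 9, True),
]


class ShouldPlaceOrderTests(unittest.TestCase):
    def test_scenarios(self):
        # A single parameterized method; subTest reports each failing row separately.
        for allow_higher, allow_lower, erp, vendor, expected in SCENARIOS:
            with self.subTest(allow_higher=allow_higher, allow_lower=allow_lower,
                              erp=erp, vendor=vendor):
                self.assertEqual(
                    should_place_order(allow_higher, allow_lower, erp, vendor),
                    expected)
```

Run it with `python -m unittest`. In xUnit, the same idea is usually expressed with `[Theory]` and `[InlineData]`, and Jest offers `test.each`.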

Integration Tests

Integration tests are a good fit for cases when you are interested in how a module interacts with its dependencies, other modules, or the infrastructure. You still use direct method calls but there’s no access to submodules, so trying to test all scenarios for all input methods of all submodules is impractical.

Typically, I prefer to have one success scenario and one failure scenario per module.

I like to use integration tests to check if a dependency injection container is built successfully, whether a processing or calculation pipeline returns the expected result, or whether complex data was read and converted correctly from a database or third-party API.
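Here is a minimal Python sketch of such an integration test. It assumes a hypothetical `OrderRepository` that reads rows and converts them into `Order` objects. The repository and its SQL run for real, and only the infrastructure is replaced, here with an in-memory SQLite database. In keeping with the one-success, one-failure habit, there are two tests.

```python
import sqlite3
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class Order:
    order_id: str
    vendor: str
    amount: Decimal


class OrderRepository:
    """Hypothetical module: reads raw rows and converts them to Order objects."""

    def __init__(self, connection: sqlite3.Connection):
        self._conn = connection

    def orders_for_vendor(self, vendor: str) -> list:
        rows = self._conn.execute(
            "SELECT id, vendor, amount FROM orders WHERE vendor = ? ORDER BY id",
            (vendor,)).fetchall()
        return [Order(r[0], r[1], Decimal(r[2])) for r in rows]


def create_test_database() -> sqlite3.Connection:
    # In-memory SQLite stands in for the production database (an infrastructure mock).
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id TEXT PRIMARY KEY, vendor TEXT, amount TEXT)")
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", [
        ("PO-1", "acme", "10.50"),
        ("PO-2", "acme", "4.25"),
        ("PO-3", "globex", "7.00"),
    ])
    return conn


def test_reads_and_converts_orders():
    # Success scenario: real repository, real SQL, converted results.
    orders = OrderRepository(create_test_database()).orders_for_vendor("acme")
    assert [o.order_id for o in orders] == ["PO-1", "PO-2"]
    assert sum(o.amount for o in orders) == Decimal("14.75")


def test_fails_when_schema_is_missing():
    # Failure scenario: the database has no orders table, and the error surfaces.
    repo = OrderRepository(sqlite3.connect(":memory:"))
    try:
        repo.orders_for_vendor("acme")
    except sqlite3.OperationalError:
        return
    raise AssertionError("expected sqlite3.OperationalError")
```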

Functional Tests

These tests give you the most confidence that your app works because they verify that your app can at least start without a runtime error. It’s a little more work to start testing your code without direct access to its classes, but once you understand and write the first few tests, you’ll find it’s not too difficult.

Run the application by starting a process with command-line arguments, if needed, and then use the application as your prospective customer would: by calling API endpoints or pressing buttons. This is not difficult, even in the case of UI testing: Each major platform has a tool to find a visual element in a UI.
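Here is a minimal Python sketch of a functional test for a command-line app. The "app" is a hypothetical stand-in embedded as a string so that the example is self-contained; a real suite would launch your built executable instead. The tests interact with the app only through its arguments, output, and exit code, and never call its functions directly.

```python
import subprocess
import sys

# Hypothetical stand-in for your real application: a tiny CLI that greets a user.
APP_SOURCE = """
import sys
name = sys.argv[1] if len(sys.argv) > 1 else ""
if not name:
    print("error: name is required", file=sys.stderr)
    sys.exit(2)
print(f"Hello, {name}!")
"""


def run_app(*args: str) -> subprocess.CompletedProcess:
    # Start the app as a separate process, as a user would, and capture its output.
    return subprocess.run([sys.executable, "-c", APP_SOURCE, *args],
                          capture_output=True, text=True, timeout=30)


def test_greets_user():
    result = run_app("Ada")
    assert result.returncode == 0
    assert result.stdout.strip() == "Hello, Ada!"


def test_rejects_missing_name():
    result = run_app()
    assert result.returncode == 2
    assert "name is required" in result.stderr
```

For a web API, the same pattern applies: start the server process, then send HTTP requests (for example, with `urllib.request`) instead of reading standard output.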

Canary Tests

Functional tests let you know if your app works in a testing environment but what about a production environment? Suppose you’re working with several third-party APIs and you want to have a dashboard of their states or want to see how your application handles incoming requests. These are common use cases for canary tests.

They operate by briefly acting on the working system without causing side effects to third-party systems. For example, you can register a new user or check product availability without placing an order.

The purpose of canary tests is to be sure that all major components are working together in a production environment, not failing because of, for example, credential issues.
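A canary run can be as simple as a list of read-only probes that run against production, each one reporting success or failure. The Python sketch below assumes hypothetical probe functions. The placeholders stand in for real, side-effect-free calls, such as checking product availability, and one probe simulates a credential problem.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class ProbeResult:
    name: str
    ok: bool
    detail: str = ""


def run_canary(probes: dict) -> list:
    """Run each read-only probe once. A probe signals failure by raising."""
    results = []
    for name, probe in probes.items():
        try:
            probe()
            results.append(ProbeResult(name, True))
        except Exception as exc:  # report every failure instead of stopping at the first
            results.append(ProbeResult(name, False, f"{type(exc).__name__}: {exc}"))
    return results


# Hypothetical probes. Real ones would call production endpoints without side
# effects, e.g. check product availability but never place an order.
def check_vendor_availability() -> None:
    available = True  # placeholder for a real, read-only API call
    if not available:
        raise RuntimeError("product unavailable")


def check_erp_credentials() -> None:
    raise PermissionError("401 Unauthorized")  # simulates an expired credential
```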

Load Tests

Load tests reveal whether your application will continue to work when large numbers of people start using it. They’re similar to canary and functional tests but aren’t conducted in local or production environments. Usually, a special staging environment is used, which is similar to the production environment.

It’s important to note that these tests do not use real third-party services, which might be unhappy with external load testing of their production services and may charge extra as a result.
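At its core, a load test repeats a small set of actions concurrently and then looks at failures and latency. This Python sketch does that with a thread pool. The action is a hypothetical placeholder for a request to your staging environment, and in practice a dedicated load-testing tool would usually replace a hand-rolled script like this one.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from statistics import quantiles
from typing import Callable


def load_test(action: Callable[[], None], total_calls: int, concurrency: int) -> dict:
    """Repeat one action total_calls times across `concurrency` threads.

    Assumes total_calls >= 2, which statistics.quantiles needs.
    """
    def timed_call(_: int) -> tuple:
        start = time.perf_counter()
        try:
            action()
            ok = True
        except Exception:  # a failed request counts as an error, not a crash
            ok = False
        return ok, time.perf_counter() - start

    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        outcomes = list(pool.map(timed_call, range(total_calls)))

    latencies = [duration for _, duration in outcomes]
    return {
        "calls": total_calls,
        "failures": sum(1 for ok, _ in outcomes if not ok),
        # quantiles(..., n=20) returns 19 cut points; the last is the 95th percentile.
        "p95_seconds": quantiles(latencies, n=20)[-1],
    }


def fake_staging_request() -> None:
    # Hypothetical placeholder for a real request to the staging environment.
    time.sleep(0.001)
```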

3. Keep Testing Types Separate

When devising your automated test plan, keep each type of test separate so that it can run independently. This requires extra organization, but it is worthwhile because mixing tests can create problems.

These tests have different:

- Intentions and basic concepts (so separating them sets a good precedent for the next person looking at the code, including “future you”).

- Execution times (so running unit tests first allows for a quicker test cycle when a test fails).

- Dependencies (so it’s more efficient to load only those needed within a testing type).

- Required infrastructure.

- Programming languages (in certain cases).

- Positions in the continuous integration (CI) pipeline or outside it.

It’s important to note that with most languages and tech stacks, you can group, for example, all unit tests together with subfolders named after functional modules. This is convenient, reduces friction when creating new functional modules, is easier for automated builds, results in less clutter, and is one more way to simplify testing.

4. Run Your Tests Automatically

Imagine a situation in which you’ve written some tests, but after pulling your repo a few weeks later, you notice those tests are no longer passing.

This is an unpleasant reminder that tests are code and, like any other piece of code, they need to be maintained. The best time for this is right before the moment you think you’ve finished your work and want to see if everything still operates as intended. You have all the context needed and you can fix the code or change the failing tests more easily than your colleague working on a different subsystem. But this moment only exists in your mind, so the most common way to run tests is automatically after a push to the development branch or after creating a pull request.

This way, your main branch will always be in a valid state, or you will, at least, have a clear indication of its state. An automated building and testing pipeline—or a CI pipeline—helps:

- Ensure code is buildable.

- Eliminate potential “It works on my machine” problems.

- Provide runnable instructions on how to prepare a development environment.

Configuring this pipeline takes time, but the pipeline can reveal a range of issues before they reach users or clients, even when you’re the sole developer.

Once running, CI also reveals new issues before they have a chance to grow in scope. As such, I prefer to set it up right after writing the first test. You can host your code in a private repository on GitHub and set up GitHub Actions. If your repo is public, you have even more options than GitHub Actions. For instance, my automated test plan runs on AppVeyor, for a project with a database and three types of tests.

I prefer to structure my pipeline for production projects as follows:

- Compilation or transpilation

- Unit tests: they’re fast and don’t require dependencies

- Setup and initialization of the database or other services

- Integration tests: they have dependencies outside of your code, but they’re faster than functional tests

- Functional tests: when other steps have completed successfully, run the whole app

The pipeline includes no canary or load tests. Because of their specific nature and requirements, those should be initiated manually.
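The stage order above can be sketched as a small Python script that runs each stage in turn and stops at the first failure. The folder names (`tests/unit`, `tests/integration`, `tests/functional`), `src`, and `scripts/setup_db.py` are hypothetical. In a hosted CI service such as GitHub Actions or AppVeyor, each stage would usually become a separate pipeline step.

```python
import subprocess
import sys


def run_pipeline(stages: list) -> list:
    """Run (name, command) stages in order and stop at the first failure.

    Returns the names of the stages that passed.
    """
    passed = []
    for name, command in stages:
        print(f"==> {name}")
        if subprocess.run(command).returncode != 0:
            print(f"Stage failed: {name}", file=sys.stderr)
            break
        passed.append(name)
    return passed


# Hypothetical project layout: one folder per test type, so each type runs on its own.
PIPELINE = [
    ("compile", [sys.executable, "-m", "compileall", "-q", "src"]),
    ("unit tests", [sys.executable, "-m", "unittest", "discover", "-s", "tests/unit"]),
    ("database setup", [sys.executable, "scripts/setup_db.py"]),
    ("integration tests",
     [sys.executable, "-m", "unittest", "discover", "-s", "tests/integration"]),
    ("functional tests",
     [sys.executable, "-m", "unittest", "discover", "-s", "tests/functional"]),
]
```

Calling `run_pipeline(PIPELINE)` runs the fast unit tests first, so a failure there stops the run before the slower stages start.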

5. Write Only Necessary Tests

Writing unit tests for all code is a common strategy, but sometimes this wastes time and energy, and doesn’t give you any confidence. If you’re familiar with the “testing pyramid” concept, you may think that all of your code must be covered with unit tests, with only a subset covered by other, higher-level tests.

I don’t see any need to write a unit test that ensures that several mocked dependencies are called in the desired order. Doing that requires setting up several mocks and verifying all the calls, but it still would not give me the confidence that the module is working. Usually, I only write an integration test that uses real dependencies and checks only the result; that gives me some confidence that the pipeline in the tested module is working properly.
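The contrast can be sketched in Python with `unittest.mock`. `InvoicePipeline` and its `parse` and `total` dependencies are hypothetical. The first test checks the call order and would still pass if either dependency were broken. The second test uses the real dependencies and checks only the result.

```python
from unittest.mock import Mock, call


# Hypothetical dependencies: parse comma-separated amounts, then total them.
def parse(raw: str) -> list:
    return [float(part) for part in raw.split(",") if part.strip()]


def total(values: list) -> float:
    return sum(values)


class InvoicePipeline:
    def __init__(self, parser=parse, summer=total):
        self._parse = parser
        self._total = summer

    def run(self, raw: str) -> float:
        return self._total(self._parse(raw))


# Style 1: verify that mocks are called in order. Plenty of setup,
# yet the test passes even if the real parse() or total() is wrong.
def test_calls_dependencies_in_order():
    manager = Mock()
    manager.parser.return_value = [1.0]
    manager.summer.return_value = 1.0
    InvoicePipeline(manager.parser, manager.summer).run("1")
    assert manager.mock_calls == [call.parser("1"), call.summer([1.0])]


# Style 2: use the real dependencies and check only the result.
def test_totals_invoice_amounts():
    assert InvoicePipeline().run("1.5, 2.5,") == 4.0
```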

In general, I write tests that make my life easier while implementing functionality and supporting it later.

For most applications, aiming for 100% code coverage adds a great deal of tedious work and takes the joy out of working with tests and programming in general. As Martin Fowler puts it in his article “Test Coverage”:

Test coverage is a useful tool for finding untested parts of a codebase. Test coverage is of little use as a numeric statement of how good your tests are.

Thus, I recommend installing and running a coverage analyzer after writing some tests. The report, with its highlighted lines of code, will help you better understand your code’s execution paths and find uncovered places that should be covered. Also, looking at your getters, setters, and facades, you’ll see why 100% coverage is no fun.

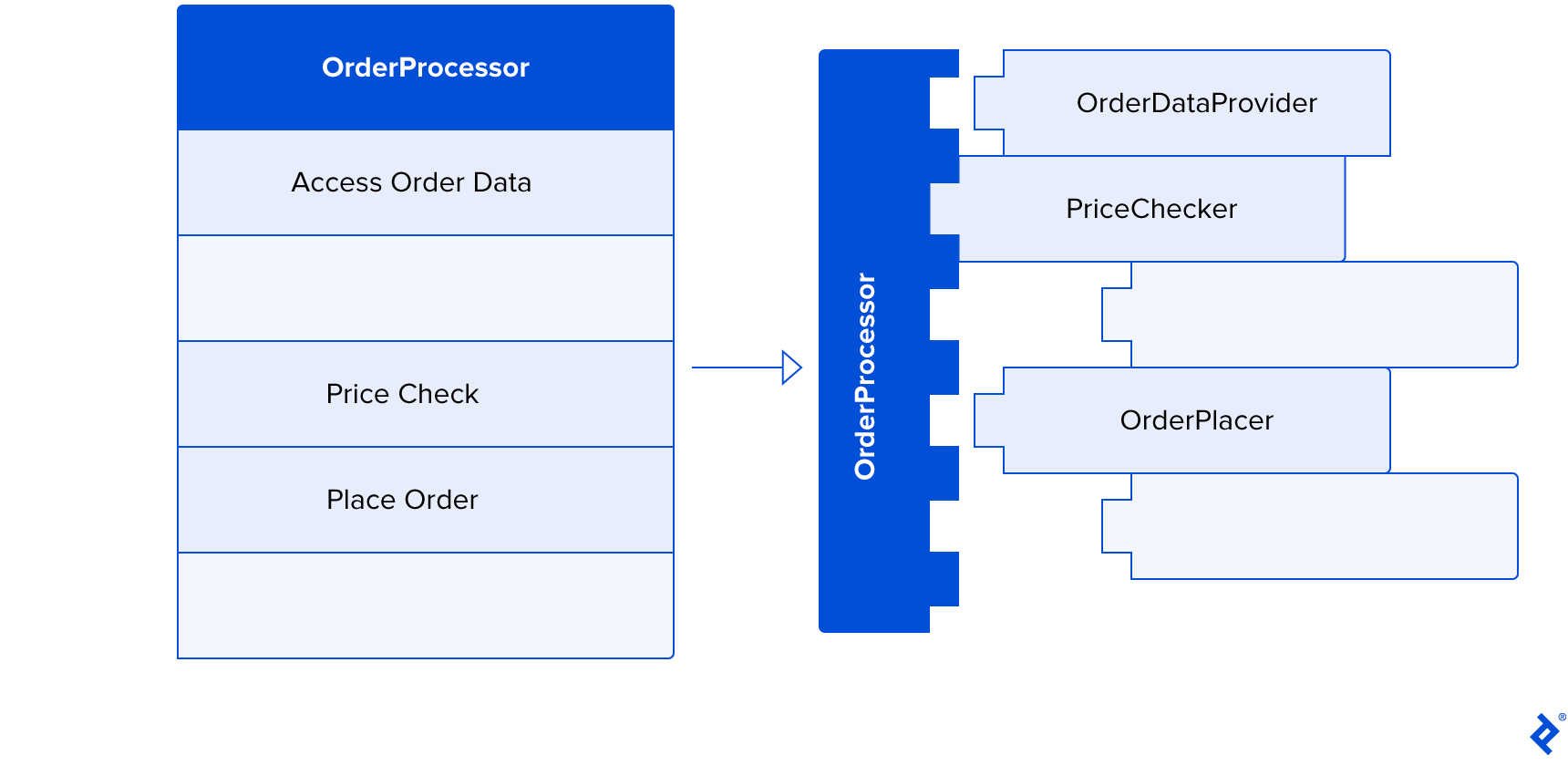

6. Play Lego

From time to time, I see questions like, “How can I test private methods?” You don’t. If you’ve asked that question, something has already gone wrong. Usually, it means you violated the Single Responsibility Principle and your module is doing more than one thing.

Refactor this module and pull the logic you think is important into a separate module. There’s no problem with increasing the number of files. Doing so leads to code structured like Lego bricks: very readable, maintainable, replaceable, and testable.
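Here is a minimal Python sketch of that refactoring. It uses a hypothetical, simplified price rule: reject a vendor price above the ERP price unless higher prices are allowed. Before the refactoring, the rule is hidden in a private helper. Afterward, it lives in its own `PricePolicy` brick that can be tested directly, and the order placer simply composes it.

```python
from dataclasses import dataclass


# Before: the interesting rule hides in a "private" helper that tests can't reach cleanly.
class OrderPlacerBefore:
    def place(self, erp_price: float, vendor_price: float, allow_higher: bool) -> str:
        if self._price_is_acceptable(erp_price, vendor_price, allow_higher):
            return "placed"  # a real class would also call the vendor API here
        return "skipped"

    def _price_is_acceptable(self, erp_price, vendor_price, allow_higher):
        return vendor_price <= erp_price or allow_higher


# After: the rule is its own small brick with a public, directly testable method...
@dataclass(frozen=True)
class PricePolicy:
    allow_higher: bool

    def accepts(self, erp_price: float, vendor_price: float) -> bool:
        return vendor_price <= erp_price or self.allow_higher


# ...and the placer only composes it, so its own tests can stay small.
class OrderPlacer:
    def __init__(self, policy: PricePolicy):
        self._policy = policy

    def place(self, erp_price: float, vendor_price: float) -> str:
        return "placed" if self._policy.accepts(erp_price, vendor_price) else "skipped"
```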

Properly structuring code is easier said than done. Here are two suggestions:

Functional Programming

It’s worth learning about the principles and ideas of functional programming. Most mainstream languages, like C, C++, C#, Java, Assembly, JavaScript, and Python, force you to write programs for machines. Functional programming is better suited to the human brain.

This may seem counterintuitive at first, but consider this: A computer will be fine if you put all of your code in a single method, use a shared memory chunk to store temporary values, and use a fair amount of jump instructions. Moreover, compilers in the optimization stage sometimes do this. However, the human brain doesn’t easily handle this approach.

Functional programming forces you to write pure functions without side effects, with strong types, in an expressive manner. That way it’s much easier to reason about a function because the only thing it produces is its return value. The Programming Throwdown podcast episode Functional Programming With Adam Gordon Bell will help you to gain a basic understanding, and you can continue with the Corecursive episodes God’s Programming Language With Philip Wadler and Category Theory With Bartosz Milewski. The last two greatly enriched my perception of programming.
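Python is not a functional language, but the core idea of a pure function still applies there. In this sketch of hypothetical discount functions, the impure version mutates its input and writes to shared state. The pure version has no effect other than its return value, so you can understand and test it from its inputs and output alone.

```python
# Impure: the result depends on and changes state outside the function.
discount_log: list = []


def apply_discount_impure(order: dict, rate: float) -> None:
    order["total"] *= (1 - rate)      # mutates the caller's object
    discount_log.append(order["id"])  # writes to shared, global state


# Pure: same input, same output, and no side effects.
def apply_discount(total: float, rate: float) -> float:
    return round(total * (1 - rate), 2)
```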

Test-driven Development

I recommend mastering TDD. The best way to learn is to practice, and the String Calculator Kata is a great code kata to start with. Mastering the kata will take time but will ultimately allow you to fully absorb the idea of TDD, which will help you create well-structured code that is a delight to work with and also testable.
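For reference, here is one possible state of the kata in Python after its first few steps. It follows the commonly published rules (empty input, any count of numbers, newline delimiters, custom delimiters, and rejecting negative numbers), though the exact step list may differ from the version you follow. In TDD, each branch would be added only after a failing test demanded it. The tests that drove the steps follow the implementation.

```python
import re


def add(numbers: str) -> int:
    """String Calculator kata after its first few steps (one possible solution)."""
    if not numbers:
        return 0                                  # empty input -> 0
    delimiters = [",", "\n"]                      # newlines also separate numbers
    if numbers.startswith("//"):                  # "//;\n1;2" declares ";" as a delimiter
        header, numbers = numbers.split("\n", 1)
        delimiters.append(header[2:])
    pattern = "|".join(re.escape(d) for d in delimiters)
    values = [int(token) for token in re.split(pattern, numbers)]  # any count of numbers
    negatives = [v for v in values if v < 0]
    if negatives:                                 # reject negatives, listing all of them
        raise ValueError("negatives not allowed: " + ", ".join(map(str, negatives)))
    return sum(values)


# The tests that drove each step, roughly in the order they were written.
def test_add():
    assert add("") == 0
    assert add("1") == 1
    assert add("1,2") == 3
    assert add("1\n2,3") == 6
    assert add("//;\n1;2") == 3
    try:
        add("1,-2,-3")
    except ValueError as error:
        assert str(error) == "negatives not allowed: -2, -3"
    else:
        raise AssertionError("expected ValueError for negative numbers")
```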

One note of caution: Sometimes you’ll see TDD purists claiming that TDD is the only right way to program. In my opinion, it is simply another useful tool in your toolbox, nothing more.

Sometimes, you need to see how to adjust modules and processes in relation to each other and don’t know what data and signatures to use. In such cases, write code until it compiles, and then write tests to troubleshoot and debug the functionality.

In other cases, you know the input and the output you want, but have no idea how to write the implementation properly because of complicated logic. For those cases, it’s easier to start following the TDD procedure and build your code step by step rather than spend time thinking about the perfect implementation.

7. Keep Tests Simple and Focused

It’s a pleasure to work in a neatly organized code environment without unnecessary distractions. That’s why it’s important to apply the SOLID, KISS, and DRY principles to tests, and to refactor them when needed.

Sometimes I hear comments like, “I hate working in a heavily tested codebase because every change requires me to fix dozens of tests.” That’s a high-maintenance problem caused by tests that aren’t focused and try to test too much. The principle of “Do one thing well” applies to tests too: “Test one thing well”; each test should be relatively short and test only one concept. “Test one thing well” doesn’t mean that you should be limited to one assertion per test: You can use dozens if you’re testing non-trivial and important data mapping.

This focus is not limited to one specific test or type of test. Imagine dealing with complicated logic that you tested using unit tests, such as mapping data from the ERP system to your structure, and you have an integration test that is accessing mock ERP APIs and returning the result. In that case, it’s important to remember what your unit test already covers so you don’t test the mapping again in integration tests. Usually, it’s enough to ensure the result has the correct identification field.

With code structured like Lego bricks and focused tests, changes to business logic should not be painful. If changes are radical, you simply drop the file and its related tests, and make a new implementation with new tests. In case of minor changes, you typically change one to three tests to meet the new requirements and make changes to the logic. It’s fine to change tests; you can think about this practice as double-entry bookkeeping.

Other ways to achieve simplicity include:

- Coming up with conventions for test file structuring, test content structuring (typically an Arrange-Act-Assert structure), and test naming; then, most importantly, following these rules consistently.

- Extracting big code blocks to methods like “prepare request” and making helper functions for repeated actions.

- Applying the builder pattern for test data configuration.

- Using (in integration tests) the same DI container you use in the main app, so every instantiation is as trivial as TestServices.Get(), without manually creating dependencies.

That way, tests will be easy to read, maintain, and write, because you already have useful helpers in place.
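Here is a minimal Python sketch of two of these conventions: a test-data builder with sensible defaults, and a test laid out as Arrange-Act-Assert. `PurchaseOrder`, `PurchaseOrderBuilder`, and `order_total` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class PurchaseOrder:
    order_id: str
    vendor: str
    erp_price: float
    quantity: int


class PurchaseOrderBuilder:
    """Hypothetical test-data builder: sensible defaults, override only what matters."""

    def __init__(self):
        self._order = PurchaseOrder(order_id="PO-1", vendor="acme",
                                    erp_price=10.0, quantity=1)

    def with_vendor(self, vendor: str) -> "PurchaseOrderBuilder":
        self._order = replace(self._order, vendor=vendor)
        return self

    def with_quantity(self, quantity: int) -> "PurchaseOrderBuilder":
        self._order = replace(self._order, quantity=quantity)
        return self

    def build(self) -> PurchaseOrder:
        return self._order


def order_total(order: PurchaseOrder) -> float:
    # Hypothetical code under test.
    return order.erp_price * order.quantity


def test_order_total_multiplies_price_by_quantity():
    # Arrange: only the field this test cares about is spelled out.
    order = PurchaseOrderBuilder().with_quantity(3).build()
    # Act
    total = order_total(order)
    # Assert
    assert total == 30.0
```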

If you feel a test is becoming too complicated, simply stop and think. Either the module or your test needs to be refactored.

8. Use Tools to Make Your Life Easier

You will face many tedious tasks while testing. For example, setting up test environments or data objects, configuring stubs and mocks for dependencies, and so on. Luckily, every mature tech stack contains several tools to make these tasks much less tedious.

I suggest you write your first hundred tests if you haven’t already, then invest some time to identify repetitive tasks and learn about testing-related tooling for your tech stack.

For inspiration, here are some tools you can use:

- Test runners. Look for concise syntax and ease of use. From my experience, for .NET, I recommend xUnit (though NUnit is a solid choice too). For JavaScript or TypeScript, I go with Jest. Try to find the best match for your tasks and mindset because tools and challenges evolve.

- Mocking libraries. There are low-level mocks for code dependencies, like interfaces, and higher-level mocks for web APIs or databases. For JavaScript and TypeScript, the low-level mocks included in Jest are OK. For .NET, I use Moq, though NSubstitute is great too. As for web API mocks, I enjoy using WireMock.NET. It can be used in place of a real API to troubleshoot and debug response handling, and it’s also very reliable and fast in automated tests. Databases can be mocked using their in-memory counterparts; EF Core in .NET provides such an option.

- Data generation libraries. These utilities fill your data objects with random data. They’re useful when, for example, you only care about a couple of fields from a big data transfer object (if that; maybe you only want to test mapping correctness). You can use them for tests and also as random data to display on a form or to fill your database. For testing purposes, I use AutoFixture in .NET.

- UI automation libraries. These are automated users for automated tests: They can run your app, fill out forms, click on buttons, read labels, and so on. To navigate through all of the elements of your app, you don’t need to deal with clicking by coordinates or image recognition; major platforms have the tooling to find needed elements by type, identifier, or data so you don’t need to change your tests with every redesign. They are robust, so once you’ve made them work for you and CI (sometimes you find out that things work only on your machine), they’ll keep working. I enjoy using FlaUI for .NET and Cypress for JavaScript and TypeScript.

- Assertion libraries. Most test runners include assertion tools, but there are cases in which an independent tool can help you write complex assertions using cleaner and more readable syntax, like Fluent Assertions for .NET. I especially like the function to assert that collections are equal regardless of an item’s order or its address in memory.
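Python’s standard library covers some of these categories, which makes for a compact illustration: `unittest.mock` for mocking and `assertCountEqual` for comparing collections regardless of order. The `random_instance` helper is a hypothetical toy that is far simpler than AutoFixture, and `LineItem` and `skus_to_reorder` are illustrative names.

```python
import random
import unittest
from dataclasses import dataclass, fields, replace
from unittest.mock import Mock


@dataclass(frozen=True)
class LineItem:
    sku: str
    quantity: int
    price: float


def random_instance(cls, rng: random.Random):
    # Hypothetical helper: fill every dataclass field with random data of its type.
    generators = {
        str: lambda: "".join(rng.choices("ABCDEFGH", k=6)),
        int: lambda: rng.randint(1, 100),
        float: lambda: round(rng.uniform(1, 100), 2),
    }
    return cls(**{f.name: generators[f.type]() for f in fields(cls)})


def skus_to_reorder(items, vendor_api) -> list:
    # Hypothetical code under test; vendor_api is an external dependency.
    return [item.sku for item in items if not vendor_api.in_stock(item.sku)]


class ReorderTests(unittest.TestCase):
    def test_returns_out_of_stock_skus(self):
        rng = random.Random(42)  # seeded so any failure is reproducible
        # Random data everywhere except the one field this test cares about.
        items = [replace(random_instance(LineItem, rng), sku=f"SKU-{i}")
                 for i in range(3)]
        vendor_api = Mock()  # stands in for the vendor's API client
        vendor_api.in_stock.side_effect = lambda sku: sku == "SKU-1"

        result = skus_to_reorder(items, vendor_api)

        # Order-insensitive comparison, similar in spirit to Fluent Assertions.
        self.assertCountEqual(result, ["SKU-2", "SKU-0"])
        self.assertEqual(vendor_api.in_stock.call_count, 3)
```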

Boost Happiness Through Testing

Tests, particularly when approached with TDD, can help guide you and instill confidence. They help you to set specific goals, with each passed test being an indicator of your progress.

The right approach to testing can make you happier and more productive, and tests decrease the chances of burnout. The key is to view testing as a tool (or toolset) that can help you in your daily development routine, not as a burdensome step for future-proofing your code.

Testing is a necessary part of programming that allows software engineers to improve the way they work, deliver the best results, and use their time optimally. Perhaps even more importantly, tests can help developers enjoy their work more, thus boosting their morale and motivation.

Understanding the basics

How does automated testing work?

Automated tests execute your production code and ensure it behaves as expected.

What is automated testing used for?

First, automated testing is used to help you write and troubleshoot your code. Second, it ensures your code still works after refactoring, optimization, or other changes.

Is automation testing difficult?

Automated testing isn’t difficult if done properly. Learning how to do it right requires investing some time into mastering new skills and knowing when to use each one for maximum effectiveness.

Why do we need automation testing?

We need automated testing to optimize the time we spend writing a feature. It enables us to troubleshoot and test while writing new code, and it reduces the time needed to support that feature later.

Is automated testing worth it?

Automated testing is definitely worth it. When you master it, the effort invested in testing is worthwhile even when dealing with short-life-span prototype projects.

Antalya, Turkey

Member since April 29, 2020
