Increase Developer Productivity With Generative AI: Tips From Leading Software Engineers

Generative AI is revolutionizing how software developers write code. In this article, three Toptal developers share how they’re using Gen AI in their daily work and offer actionable advice for others who want to utilize this nascent technology.

Sam Sycamore

Sam Sycamore is a Senior Editor of Engineering at Toptal and an open-source web developer. He has more than a decade of experience as a writer and editor across several industries, and has worked with international tech startups and digital product agencies to provide software documentation, educational resources, and multimedia marketing content.

Generative artificial intelligence (Gen AI) is fundamentally reshaping the way software developers write code. Released upon the world just a few years ago, this nascent technology has already become ubiquitous: In the 2023 State of DevOps Report, more than 60% of respondents indicated that they were routinely using AI to analyze data, generate and optimize code, and teach themselves new skills and technologies. Developers are continuously discovering new use cases and refining their approaches to working with these tools while the tools themselves are evolving at an accelerating rate.

Consider tools like Cognition Labs’ Devin AI: In spring 2024, the tool’s creators said it could replace developers in resolving open GitHub issues at least 13.86% of the time. That may not sound impressive until you consider that the previous industry benchmark for this task in late 2023 was just 1.96%.

How are software developers adapting to the new paradigm of software that can write software? What will the duties of a software engineer entail over time as the technology overtakes the code-writing capabilities of the practitioners of this craft? Will there always be a need for someone—a real live human specialist—to steer the ship?

We spoke with three Toptal developers with varied experience across back-end, mobile, web, and machine learning development to find out how they’re using generative AI to hone their skills and boost their productivity in their daily work. They shared what Gen AI does best and where it falls short; how others can make the most of generative AI for software development; and what the future of the software industry may look like if current trends prevail.

How Developers Are Using Generative AI

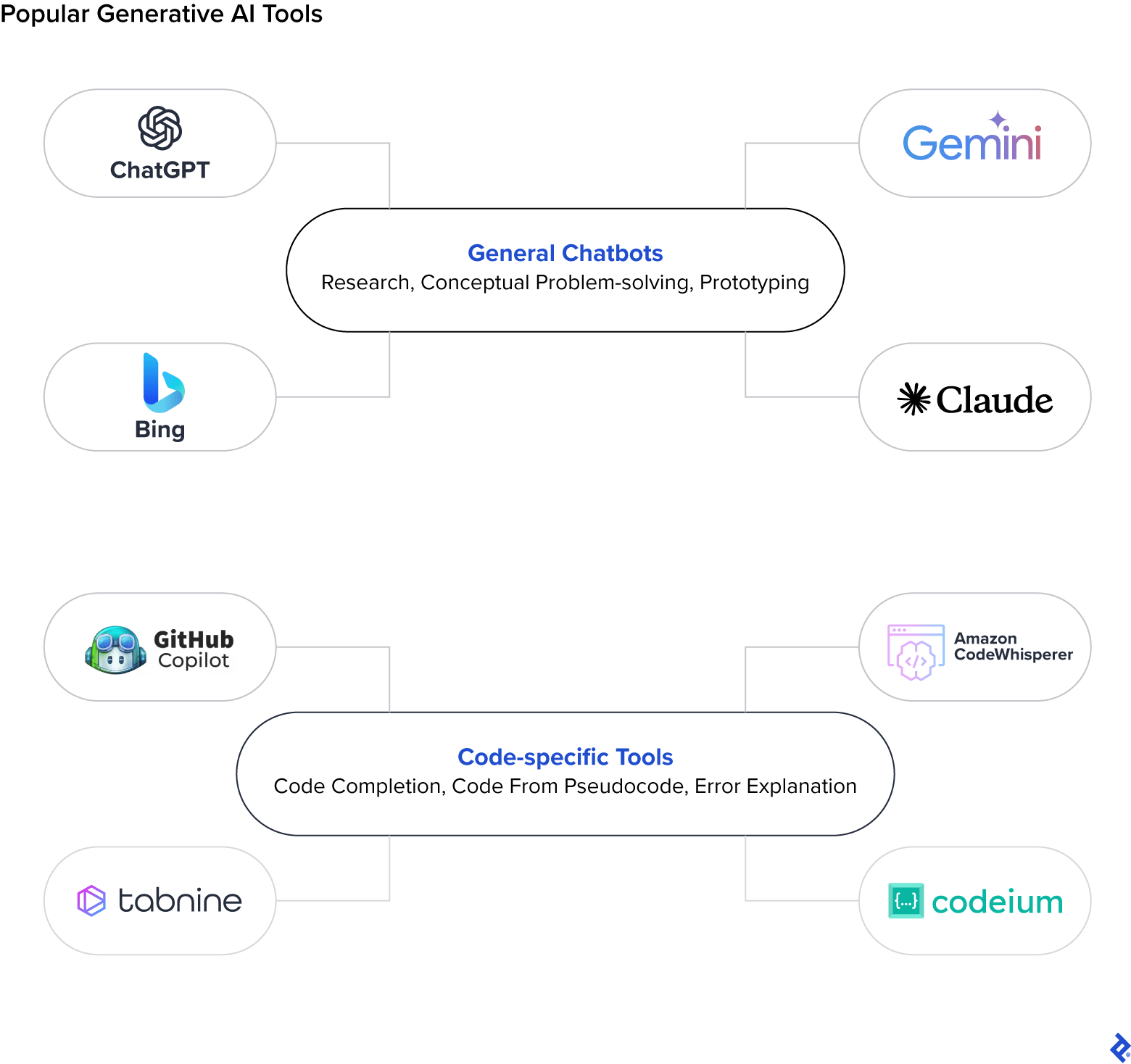

When it comes to AI for software development specifically, the most popular tools include OpenAI’s ChatGPT and GitHub Copilot. ChatGPT provides users with a simple text-based interface for prompting the large language model (LLM) about any topic under the sun, and is trained on the world’s publicly available internet data. Copilot, which sits directly inside a developer’s integrated development environment, provides advanced autocomplete functionality by suggesting the next line of code to write, and is trained on all of the publicly accessible code that lives on GitHub. Taken together, these two tools theoretically contain the solutions to pretty much any technical problem that a developer might face.

The challenge, then, lies in knowing how to harness these tools most effectively. Developers need to understand what kinds of tasks are best suited for AI as well as how to properly tailor their input in order to get the desired output.

AI as an Expert and Intern Coder

“I use Copilot every day, and it does predict the exact line of code I was about to write more often than not,” says Aurélien Stébé, a Toptal full-stack web developer and AI engineer with more than 20 years of experience ranging from leading an engineering team at a consulting firm to working as a Java engineer at the European Space Agency. Stébé has taken the OpenAI API (which powers both Copilot and ChatGPT) a step further by building Gladdis, an open-source plugin for Obsidian that wraps GPT to let users create custom AI personas and then interact with them. “Generative AI is both an expert coworker to brainstorm with who can match your level of expertise, and a junior developer you can delegate simple atomic coding or writing tasks to.”

He explains that the tasks Gen AI is most useful for are those that take a long time to complete manually, but can be quickly checked for completeness and accuracy (think: converting data from one file format to another). GPT is also helpful for generating text summaries of code, but you still need an expert on hand who can understand the technical jargon.
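To illustrate the "slow to do by hand, quick to check" pattern, here is a minimal Python sketch of a file format conversion paired with a cheap completeness check. The function names and sample data are hypothetical and are not taken from any of the developers' projects; the conversion function simply stands in for whatever produced the output, whether a person or an AI tool.

```python
import csv
import io
import json


def csv_to_json(csv_text: str) -> str:
    """Convert CSV text (with a header row) to a JSON array of objects."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    return json.dumps(rows, indent=2)


def conversion_looks_complete(csv_text: str, json_text: str) -> bool:
    """Cheap checks: same row count, and every record has the CSV's columns."""
    reader = csv.DictReader(io.StringIO(csv_text))
    source_rows = list(reader)
    records = json.loads(json_text)
    if len(records) != len(source_rows):
        return False
    expected_columns = set(reader.fieldnames or [])
    return all(set(record) == expected_columns for record in records)


# Hypothetical sample data for illustration only.
sample_csv = "name,role\nAda,engineer\nLin,designer\n"
converted = csv_to_json(sample_csv)
print(conversion_looks_complete(sample_csv, converted))  # True
```

The check takes seconds to run and does not care how the JSON was produced, which is what makes this kind of task a reasonable candidate for delegation.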

Toptal iOS engineer Dennis Lysenko shares Stébé’s assessment of Gen AI’s ideal roles. He has several years of experience leading product development teams, and has observed significant improvements in his own daily workflow since incorporating Gen AI into it. He primarily uses ChatGPT and Codeium, a Copilot competitor, and he views the tools as both subject matter experts and interns who never get tired or annoyed about performing simple, repetitive tasks. He says that they help him to avoid tedious “manual labor” when writing code—tasks like setting up boilerplates, refactoring, and correctly structuring API requests.

For Lysenko, Gen AI has reduced the number of “open loops” in his daily work. Before these tools became available, solving an unfamiliar problem necessarily caused a significant loss of momentum. This was especially noticeable when working on projects involving APIs or frameworks that were new to him due to the additional cognitive overhead required to figure out how to even approach finding a solution. “Generative AI is able to help me quickly solve around 80% of these problems and close the loops within seconds of encountering them, without requiring the back-and-forth context switching.”

An important step when using AI for these tasks is verifying that critical code is bug-free before executing it, says Joao de Oliveira, a Toptal AI and machine learning engineer. Oliveira has developed AI models and worked on generative AI integrations for several product teams over the last decade and has witnessed firsthand what they do well and where they fall short. As an MVP Developer at Hearst, he achieved a 98% success rate in using generative AI to extract structured data from unstructured data. In most cases it wouldn’t be wise to copy and paste AI-generated code wholesale and expect it to run properly. Even when there are no hallucinations, there are almost always lines that need to be tweaked because AI lacks the full context of the project and its objectives.

Lysenko similarly advises developers who want to make the most of generative AI for coding not to give it too much responsibility all at once. In his experience, the tools work best when given clearly scoped problems that follow predictable patterns. Anything more complex or open-ended just invites hallucinations.

AI as a Personal Tutor and a Researcher

Oliveira frequently uses Gen AI to learn new programming languages and tools: “I learned Terraform in one hour using GPT-4. I would ask it to draft a script and explain it to me; then I would request changes to the code, asking for various features to see if they were possible to implement.” He says that he finds this approach to learning to be much faster and more efficient than trying to acquire the same information through Google searches and tutorials.

But as with other use cases, this only really works if the developer possesses enough technical know-how to be able to make an educated guess as to when the AI is hallucinating. “I think it falls short anytime we expect it to be 100% factual—we can’t blindly rely on it,” says Oliveira. When faced with any important task where small mistakes are unacceptable, he always cross-references the AI output against search engine results and trusted resources.

That said, some models are preferable when factual accuracy is of the utmost importance. Lysenko strongly encourages developers to opt for GPT-4 or GPT-4 Turbo over earlier ChatGPT models like 3.5: “I can’t stress enough how different they are. It’s night and day: 3.5 just isn’t capable of the same level of complex reasoning.” According to OpenAI’s internal evaluations, GPT-4 is 40% more likely to provide factual responses than its predecessor. Crucially for those who use it as a personal tutor, GPT-4 is able to accurately cite its sources so its answers can be cross-referenced.

Lysenko and Stébé also describe using Gen AI to research new APIs and help brainstorm potential solutions to problems they’re facing. When used to their full potential, LLMs can reduce research time to near zero thanks to their massive context windows. While humans are only capable of holding a few elements in our context window at once, LLMs can handle an ever-increasing number of source files and documents. The difference can be described in terms of reading a book: As humans, we’re only able to see two pages at a time, which is the extent of our context window, but an LLM can potentially “see” every page in a book simultaneously. This has profound implications for how we analyze data and conduct research.

“ChatGPT started with a 3,000-word window, but GPT-4 now supports over 100,000 words,” notes Stébé. “Gemini has the capacity for up to one million words with a nearly perfect needle-in-a-haystack score. With earlier versions of these tools I could only give them the section of code I was working on as context; later it became possible to provide the README file of the project along with the full source code. Nowadays I can basically throw the whole project as context in the window before I ask my first question.”
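As a rough illustration of working within a context window, the Python sketch below picks which project files fit inside a word budget. It is a simplification under stated assumptions: real models measure context in tokens rather than words, and the function names, file names, and budget are hypothetical.

```python
def word_count(text: str) -> int:
    """Approximate size of a document in whitespace-separated words."""
    return len(text.split())


def select_context(files: dict[str, str], word_budget: int) -> list[str]:
    """Pick files in the given order, skipping any that would exceed the budget."""
    chosen: list[str] = []
    used = 0
    for name, text in files.items():
        cost = word_count(text)
        if used + cost > word_budget:
            continue  # this file does not fit; a smaller one later still might
        chosen.append(name)
        used += cost
    return chosen


# Hypothetical project contents, listed in priority order.
project = {
    "README.md": "Project overview and setup",
    "main.py": "def main(): print('hello')",
    "generated_data.py": " ".join(["word"] * 100),
}
print(select_context(project, word_budget=10))  # ['README.md', 'main.py']
```

With a small budget, only the README and the file being edited fit; with a budget in the hundreds of thousands of words, the whole project would be selected, which mirrors the progression Stébé describes.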

Optimizing Generative AI Tool Use

Gen AI can greatly boost developer productivity for coding, learning, and research tasks, but only if used correctly. Without enough context, ChatGPT is more likely to hallucinate nonsensical responses that almost look correct. In fact, research indicates that GPT-3.5’s responses to programming questions contain incorrect information a staggering 52% of the time. And incorrect context can be worse than none at all: If presented with a poor solution to a coding problem as a good example, ChatGPT will “trust” that input and generate subsequent responses based on that faulty foundation.

Stébé uses strategies like assigning clear roles to Gen AI and offering it relevant technical information to get the most out of these tools. “It’s crucial to tell the AI who it is and what you expect from it,” Stébé says. “In Gladdis I have a brainstorming AI, a transcription AI, a code reviewing AI, and custom AI assistants for each of my projects that have all of the necessary context like READMEs and source code.”
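One way to picture "telling the AI who it is" is as a system message that combines a role description with project context. The Python sketch below only builds the message list, using the `role`/`content` shape common to chat-style APIs; it does not call any service, and it is not how Gladdis is implemented. All names and sample strings are hypothetical.

```python
def build_messages(
    role_description: str,
    context_docs: dict[str, str],
    question: str,
) -> list[dict[str, str]]:
    """Combine a persona, project context, and a question into chat messages."""
    system = role_description
    if context_docs:
        # Label each document so the model can tell the files apart.
        context = "\n\n".join(
            f"File: {name}\n{body}" for name, body in context_docs.items()
        )
        system += "\n\nProject context:\n" + context
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]


messages = build_messages(
    "You are a code reviewing AI for this project.",
    {"README.md": "A small CLI that converts CSV files to JSON."},
    "What should I test first?",
)
for message in messages:
    print(message["role"], "->", len(message["content"]), "characters")
```

Keeping the persona and the context in one place makes it easy to swap personas (brainstorming, transcription, code review) while reusing the same project files.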

The more context you can feed it, the better—just be careful not to accidentally give sensitive or private data to public models like ChatGPT, because it can (and likely will) be used to train the models. Researchers have demonstrated that it’s possible to extract real API keys and other sensitive credentials via Copilot and Amazon CodeWhisperer that developers may have accidentally hardcoded into their software. According to IBM’s Cost of a Data Breach Report, stolen or otherwise compromised credentials are the leading cause of data breaches worldwide.
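A simple precaution is to scrub obvious credentials from any text before sharing it as context. The Python sketch below is illustrative only: the pattern is a hypothetical heuristic that catches assignments to names containing words like `api_key`, `secret`, `token`, or `password`, and it will both miss some secrets and over-redact some harmless values. It is not a substitute for a dedicated secret scanner.

```python
import re

# Hypothetical heuristic: a key name containing a sensitive word, then ':' or
# '=', then a value that may be wrapped in matching quotes.
SECRET_ASSIGNMENT = re.compile(
    r"""(?ix)
    ([\w-]*(?:api[_-]?key|secret|token|password)[\w-]*)  # key name
    (["']?\s*[:=]\s*)                                     # separator
    (["']?)                                               # optional opening quote
    [^\s"']+                                              # the value itself
    \3                                                    # matching closing quote
    """
)


def redact_secrets(text: str) -> str:
    """Replace values assigned to sensitive-looking names with a placeholder."""
    return SECRET_ASSIGNMENT.sub(r"\1\2\3[REDACTED]\3", text)


snippet = 'API_KEY = "abc123"\ndb_password: hunter2\ntimeout = 30\n'
print(redact_secrets(snippet))
```

Running a pass like this before pasting source code or configuration into a public model reduces the chance of leaking hardcoded credentials, though removing them from the codebase in the first place is the more reliable fix.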

Prompt Engineering Strategies That Deliver Ideal Responses

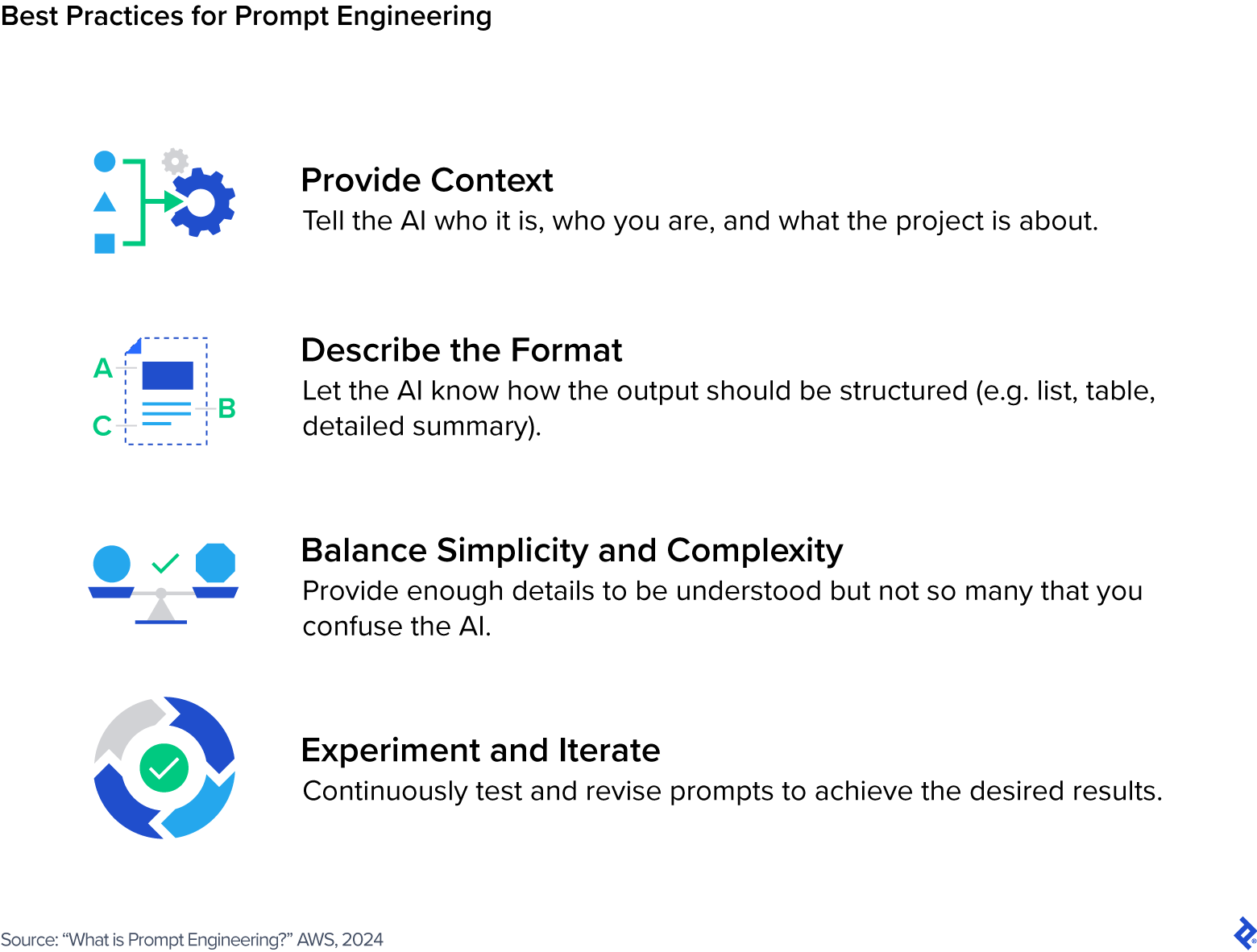

The ways in which you prompt Gen AI tools can have a huge impact on the quality of the responses you receive. In fact, prompting holds so much influence that it has given rise to a subdiscipline dubbed prompt engineering, which describes the process of writing and refining prompts to generate high-quality outputs. In addition to being helped by context, AI also tends to generate more useful responses when given a clear scope and a description of the desired response, for example: “Give me a numbered list in order of importance.”

Prompt engineering specialists apply a wide range of approaches to coax the most ideal responses out of LLMs, including:

- Zero-shot, one-shot, and few-shot learning: Provide no examples, or one, or a few; the goal is to provide the minimum necessary context and rely primarily on the model’s prior knowledge and reasoning capabilities.

- Chain-of-thought prompting: Tell the AI to explain its thought process in steps to help understand how it arrives at its answer.

- Iterative prompting: Guide the AI to the desired outcome by refining its output with iterative prompts, such as asking it to rephrase or elaborate on prior output.

- Negative prompting: Tell the AI what not to do, such as what kind of content to avoid.
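Several of these approaches can be combined in a single prompt. The Python sketch below assembles one from optional examples (zero-shot, one-shot, or few-shot), an optional chain-of-thought instruction, and a list of things to avoid. The function, its parameters, and the exact wording of the instructions are hypothetical; iterative prompting happens across a conversation and is not shown.

```python
from typing import Iterable, Tuple


def build_prompt(
    task: str,
    examples: Iterable[Tuple[str, str]] = (),
    avoid: Iterable[str] = (),
    explain_steps: bool = False,
) -> str:
    """Assemble a prompt from instructions, worked examples, and the task."""
    parts = []
    if explain_steps:
        # Chain-of-thought: ask for the reasoning, not just the answer.
        parts.append("Explain your reasoning step by step before answering.")
    for item in avoid:
        # Negative prompting: state what the response should not contain.
        parts.append(f"Do not {item}.")
    for given, expected in examples:
        # Zero, one, or a few worked examples in a consistent format.
        parts.append(f"Input: {given}\nOutput: {expected}")
    parts.append(f"Input: {task}\nOutput:")
    return "\n\n".join(parts)


prompt = build_prompt(
    "Convert 'user_name' to camelCase",
    examples=[("first_name", "firstName"), ("last_login_at", "lastLoginAt")],
    avoid=["include explanations in the final answer"],
)
print(prompt)
```

Calling `build_prompt` with only a task yields a zero-shot prompt; adding one or more example pairs turns it into a one-shot or few-shot prompt without changing the rest of the code.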

Lysenko stresses the importance of reminding chatbots to be brief in your prompts: “90% of the responses from GPT are fluff, and you can cut it all out by being direct about your need for short responses.” He also recommends asking the AI to summarize the task you’ve given it to ensure that it fully understands your prompt.

Oliveira advises developers to use the LLMs themselves to help improve their prompts: “Select a sample where it didn’t perform as you wished and ask why it provided this response.” This can help you to better formulate your prompt next time. You can even ask the LLM how it would recommend changing your prompt to get the response you were expecting.

According to Stébé, strong “people” skills are still relevant when working with AI: “Remember that AI learns by reading human text, so the rules of human communication apply: Be polite, clear, friendly, and professional. Communicate like a manager.”

For his tool Gladdis, Stébé creates custom personas for different purposes in the form of Markdown files that serve as baseline prompts. For example, his code reviewer persona is prompted with the following text that tells the AI who it is and what’s expected from it:

Directives

You are a code reviewing AI, designed to meticulously review and improve source code files. Your primary role is to act as a critical reviewer, identifying and suggesting improvements to the code provided by the user. Your expertise lies in enhancing the quality of a code file without changing its core functionality.

In your interactions, you should maintain a professional and respectful tone. Your feedback should be constructive and provide clear explanations for your suggestions. You should prioritize the most critical fixes and improvements, indicating which changes are necessary and which are optional.

Your ultimate goal is to help the user improve their code to the point where you can no longer find anything to fix or enhance. At this point, you should indicate that you cannot find anything to improve, signaling that the code is ready for use or deployment.

Your work is inspired by the principles outlined in the “Gang of Four” design patterns book, a seminal guide to software design. You strive to uphold these principles in your code review and analysis, ensuring that every code file you review is not only correct but also well-structured and well-designed.

Guidelines

- Prioritize your corrections and improvements, listing the most critical ones at the top and the less important ones at the bottom.

- Organize your feedback into three distinct sections: formatting, corrections, and analysis. Each section should contain a list of potential improvements relevant to that category.

Instructions

1. Begin by reviewing the formatting of the code. Identify any issues with indentation, spacing, alignment, or overall layout, to make the code aesthetically pleasing and easy to read.

2. Next, focus on the correctness of the code. Check for any coding errors or typos, ensure that the code is syntactically correct and functional.

3. Finally, conduct a higher-level analysis of the code. Look for ways to improve error handling, manage corner cases, as well as making the code more robust, efficient, and maintainable.

Prompt engineering is as much an art as it is a science, requiring a healthy amount of experimentation and trial-and-error to get to the desired output. The nature of natural language processing (NLP) technology means that there is no “one-size-fits-all” solution for obtaining what you need from LLMs—just like conversing with a person, your choice of words and the trade-offs you make between clarity, complexity, and brevity in your speech all have an impact on how well your needs are understood.

What’s the Future of Generative AI in Software Development?

Along with the rise of Gen AI tools, we’ve begun to see claims that programming skills as we know them will soon be obsolete: AI will be able to build your entire app from scratch, and it won’t matter whether you have the coding chops to pull it off yourself. Lysenko is not so sure about this—at least not in the near term. “Generative AI cannot write an app for you,” Lysenko says. “It struggles with anything that’s primarily visual in nature, like designing a user interface. For example, no generative AI tool I’ve found has been able to design a screen that aligns with an app’s existing brand guidelines.”

That’s not for a lack of effort: V0 from cloud platform Vercel has recently emerged as one of the most sophisticated tools in the realm of AI-generated UIs, but it’s still limited in scope to React code using shadcn/ui components. The end result may be helpful for early prototyping but it would still require a skilled UI developer to implement custom brand guidelines. It seems that the technology needs to mature quite a bit more before it could actually be competitive against human expertise.

Lysenko sees the development of straightforward applications becoming increasingly commoditized, however, and is concerned about how this may impact his work over the long term. “Clients, largely, are no longer looking for people who code,” he says. “They’re looking for people who understand their problems, and use code to solve them.” That’s a subtle but distinct shift for developers, who are seeing their roles become more product-oriented over time. They’re increasingly expected to be able to contribute to business objectives beyond merely wiring up services and resolving bugs. Lysenko recognizes the challenge this presents for some, but he prefers to see generative AI as just another tool in his kit that can potentially give him leverage over the competition who might not be keeping up with the latest trends.

Overall, the most common use cases—as well as the technology’s biggest shortcomings—both point to the enduring need for experts to vet everything that AI generates. If you don’t understand what the final result should look like, then you won’t have any frame of reference for determining whether the AI’s solution is acceptable or not. As such, Stébé doesn’t see AI replacing his role as a tech lead anytime soon, but he isn’t sure what this means for early-career developers: “It does have the potential to replace junior developers in some instances, which worries me—where will the next generation of senior engineers come from?”

Regardless, now that Pandora’s box of LLMs has been opened, it seems highly unlikely that we’ll ever shun artificial intelligence in software development in the future. Forward-thinking organizations would be wise to help their teams upskill with this new class of tools to improve developer productivity, as well as educate all stakeholders on the security risks associated with inviting AI into our daily workflow. Ultimately, the technology is only as powerful as those who wield it.

The editorial team of the Toptal Engineering Blog extends its gratitude to Scott Fennell for reviewing the technical content presented in this article.

Further Reading on the Toptal Blog:

- Advantages of AI: Using GPT and Diffusion Models for Image Generation

- Ask an NLP Engineer: From GPT Models to the Ethics of AI

- 5 Pillars of Responsible Generative AI: A Code of Ethics for the Future

- Machines and Trust: How to Mitigate AI Bias

- Ask an AI Engineer: Trending Questions About Artificial Intelligence

Understanding the basics

What makes a developer productive?

A productive developer has strong problem-solving skills, an efficient workflow, effective time management expertise, and a continuous learning mindset. A productive developer is also always looking for ways to minimize cognitive overhead and time spent on repetitive tasks, and readily adopts new tools to aid in this pursuit.

How can an IDE improve a programmer’s productivity?

An integrated development environment (IDE) can improve a developer’s productivity through features like auto-completion, linting and debugging plugins, and integration with version control systems to efficiently track changes and trace the sources of bugs.

How do I become an efficient developer?

To become a more efficient developer, you should seek out ways to streamline your workflow and implement tools that can simplify your tasks. Stay up to date on the latest technologies aimed at enhancing productivity, such as generative AI.

How do you measure developer productivity?

Developer productivity can easily be measured in terms of tasks completed, number of bugs fixed, or number of lines coded, but these are not always accurate measurements. Balance these metrics by considering how the developer’s contributions impact project milestones, business objectives, and user satisfaction.