Don’t Panic: How Toptal Engineers Approach the Debugging Process

Test failures can cause panic as teams rush to unblock an app’s release. At Toptal, however, we view challenges as opportunities to understand and improve projects. Get an inside look at our engineering process with an Apollo Client debugging case study.


Toptal’s Talent Portal (the web application where talent access the network and jobs) is one of the critical software services that delivers business value to the company. Working as an engineer on this surface is a high-stakes and fast-paced proposition. To provide the best user and developer experiences, we replaced the old portal with an updated platform and a new codebase that automatically deploys to production a few times a day.

However, with great power comes great responsibility—and code maintenance. New code yields new bugs, which engineers must address rapidly in order to keep pushing updates to production. This is what happened when I recently encountered a Cypress test failure blocking our entire delivery pipeline and interrupting many engineers’ work. Though the failure was difficult to address, my team supported my suggestion that we turn this challenge into an opportunity; the bug allowed me to understand our testing framework in-depth, improve our project’s infrastructure, and better prepare other teams for similar issues.

An Inside Look at Toptal Engineering Projects: The Portal Site

First, let’s understand how engineering projects work at Toptal—and which technologies, partners, and testing standards are typically involved—using our updated Talent Portal site as our example.

What Technologies Do We Use?

Toptal engineers frequently adapt to new technologies, and the Talent Portal is no exception. While our previous development environment used jQuery and Backbone.js for data management, our updated site leverages GraphQL and Apollo Client for their excellent developer experience. Many other teams across Toptal also use this pairing for data management. GraphQL, a standard query language, is the technology that allows us to access the information necessary to render user interfaces (such as a logged-in user’s name and job engagements displayed in the Talent Portal). GraphQL is popular among developers due to its speed, type-safety features, and flexible data sources. Apollo Client further enhances GraphQL’s data management experience with intelligent data caching.

In addition to updating our data layer, the team also shifted gears for UI development, moving from Ruby and JavaScript to React, whose component model makes our code easier to maintain. React also makes our codebase more appealing to engineers, as there is a growing base of enthusiastic React developers.

Who Do We Partner With?

Because our platforms directly impact the livelihoods of our clients and freelance talent, high product quality is non-negotiable. Engineers at Toptal partner with dedicated quality assurance (QA) engineers (integrated with the product team) and beta testers to guarantee high product quality. We work quickly but cautiously: Our Talent Portal, for example, began as an experiment before launching officially. We shared the new site with a closed group of beta testers and improved the portal based on their feedback.

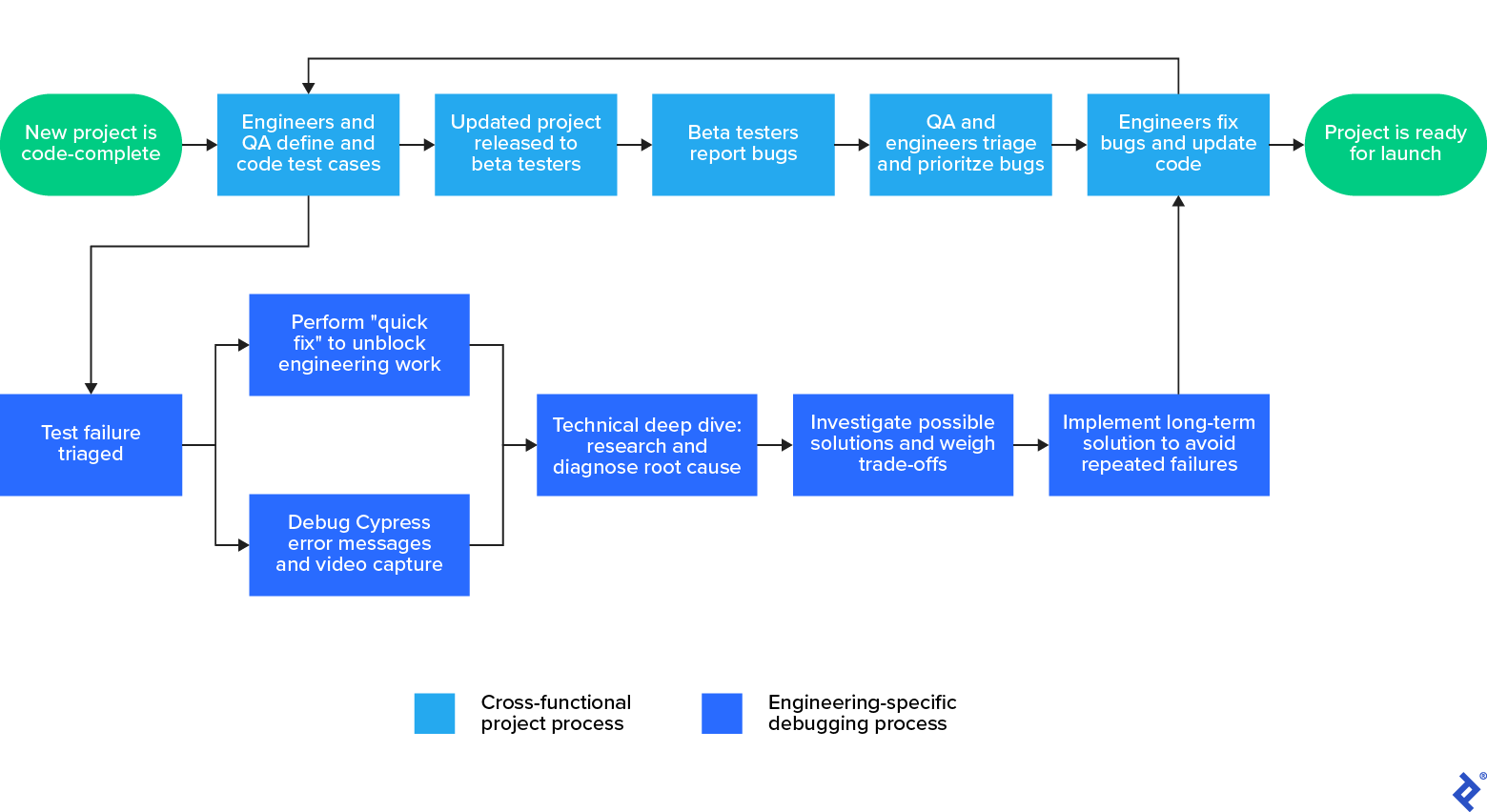

Given our fast-paced cycle for product updates, it is crucial to release stable iterations of the portal so that we use our limited time with beta testers efficiently. We use automated tests to keep application branches stable, and our QA engineers help establish comprehensive test cases and triage bugs or test failures.

What Are Our Testing Standards?

Toptal engineers take testing seriously: It keeps our products reliable! The Talent Portal team, like other teams at Toptal, runs automated end-to-end tests on its projects with the Cypress testing framework. Because Cypress runs in a web browser, it covers certain cases that other environments (like jsdom) cannot test easily.

We run all test cases before finalizing product changes to keep our main code branch stable. When a test that previously passed starts failing on the main branch, that's usually a sign that the test is flaky. A flaky test produces unreliable results: It can pass or fail depending on a nonobvious factor that might be external to the code. Flakiness reduces our confidence in the tests and must be fixed. When a test is failing, there are two methods of triage:

- For isolated tests with a clear owner, an automatic notification will alert the correct team according to code ownership rules.

- When ownership is unclear because one user interaction spans multiple teams (as is often the case for Talent Portal Cypress tests), a QA or project engineer will help identify the responsible team.
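The two triage paths above reduce to a simple rule. If exactly one team owns the code that a failing test exercises, notify that team automatically. Otherwise, hand the failure to a QA or project engineer. Below is a minimal TypeScript sketch of that rule. The rule format (`pathPrefix` → `team`), the `triageFailure` function, and the example paths and team names are all hypothetical; the article does not describe how Toptal stores its ownership rules.

```typescript
// Hypothetical ownership rule: any code path starting with `pathPrefix` belongs to `team`.
interface OwnershipRule {
  pathPrefix: string;
  team: string;
}

type Triage =
  | { kind: "notify"; team: string } // clear owner: alert that team automatically
  | { kind: "manual"; reason: string }; // unclear ownership: a QA or project engineer steps in

function triageFailure(coveredPaths: string[], rules: OwnershipRule[]): Triage {
  const owners = new Set<string>();
  for (const path of coveredPaths) {
    // Prefer the most specific (longest) matching prefix for each path.
    const match = rules
      .filter((rule) => path.startsWith(rule.pathPrefix))
      .sort((a, b) => b.pathPrefix.length - a.pathPrefix.length)[0];
    if (match) owners.add(match.team);
  }
  if (owners.size === 1) return { kind: "notify", team: Array.from(owners)[0] };
  return { kind: "manual", reason: owners.size === 0 ? "no owner found" : "spans multiple teams" };
}

// Illustrative rules only.
const rules: OwnershipRule[] = [
  { pathPrefix: "src/profile/", team: "profile-team" },
  { pathPrefix: "src/jobs/", team: "jobs-team" },
];
```

A test that touches only `src/profile/` code routes straight to `profile-team`. A test whose user flow crosses both areas falls through to manual triage, which mirrors the second bullet.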

Before launch, engineers must collaborate to fix incoming bugs and iterate on test cases to address past bugs until the project reaches stability.

How Toptal Engineers Debug: Diagnosing the Root Cause

You may be wondering: With so many players and tools involved in a given project, how do Toptal engineers address bugs effectively? And what happens after triage? The following case study, which details a Cypress failure I encountered, serves as a good illustration of our debugging process.

A Test Failure Caused by Apollo Client Overfetching

Let’s flash back to the day of the bug. Through the triage system, our team receives a notification that one of our Cypress tests is failing on the main branch. This is a burning issue, and we need to act fast; a broken test blocks our delivery pipeline and causes failure alerts on new code, disrupting the work of engineers.

From our past experiences, we know that we can implement a workaround for the problem: If we wait a bit longer, or disable specific test assertions temporarily, the test will pass. While this is a beneficial tactical move to unblock engineers’ work, we can’t stop there. We must identify the root cause. Understanding the behavior behind the failure allows us to improve our own project and prepare other projects for the same issues.

In our initial investigation of the test failure, we notice an error message declaring that the element selected for interaction (in this case, a button) is not reachable. Our Cypress setup captures a video recording of the test execution, so we can inspect what is happening in the web browser. Surprisingly, the video shows that the element is visible on the page. We’re stumped temporarily before realizing that the failure is occurring because there are two copies of the button element in the page context:

- The instance referenced by Cypress that is currently detached from the page

- A new copy of the button that is visible on the page

So, the page is re-rendering right after Cypress captures a reference to the element. When the test attempts to click the button, that instance is already detached from the page. With this knowledge, we investigate further by examining our operations log. We learn that the additional render is caused by an extra Apollo Client HTTP request (GetProfileSkills). That request is unnecessary because the previous GetProfileSkills request had just finished.
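The sketch below models why a visible button can still fail a click. It is a toy model, not Cypress, React, or DOM code: Plain objects stand in for DOM nodes, and the `ToyPage` class and the "Save" label are hypothetical. Each render swaps in fresh node objects, so a reference captured before a re-render points at a node that is no longer on the page.

```typescript
// Toy model only: plain objects stand in for DOM nodes.
interface ButtonNode {
  label: string;
}

class ToyPage {
  private nodes: ButtonNode[] = [];

  // A re-render replaces every node with a fresh copy, detaching the old ones.
  render(label: string): void {
    this.nodes = [{ label }];
  }

  find(label: string): ButtonNode | undefined {
    return this.nodes.find((node) => node.label === label);
  }

  // Mimics the failure we saw: acting on a node that is no longer on the page fails.
  click(node: ButtonNode): string {
    if (!this.nodes.includes(node)) {
      throw new Error(`"${node.label}" is detached from the page`);
    }
    return `clicked ${node.label}`;
  }
}

const page = new ToyPage();
page.render("Save");
const captured = page.find("Save")!; // reference the test grabs first
page.render("Save"); // extra render caused by the duplicate query
```

Here, `page.click(captured)` throws, while `page.click(page.find("Save")!)` succeeds. Both buttons look identical, but only the fresh one is attached, which matches the video showing a visible button while the test still failed.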

A quick check confirms that the test passes consistently if we block this duplicate GetProfileSkills request. Why would we need to re-fetch a query that came back with data less than a second ago? We don't. This excessive behavior is an example of Apollo Client overfetching, and it is our bug's root cause.
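Spotting this kind of duplicate in a long operations log can be automated. Below is a minimal sketch that assumes a hypothetical log format, where each entry records an operation name plus start and finish times in milliseconds. It flags any operation that starts again within a short window of the same operation finishing. The window is one second here, matching "less than a second ago." The log entries are invented for illustration; only the GetProfileSkills name comes from the incident.

```typescript
// Hypothetical log entry; times are milliseconds since page load.
interface LoggedOperation {
  name: string;
  startedAt: number;
  finishedAt: number;
}

// Returns operations that re-run within `windowMs` of the previous run of the same name
// finishing (a negative gap means the earlier run was still in flight).
function findQuickRefetches(log: LoggedOperation[], windowMs = 1000): LoggedOperation[] {
  const lastFinished = new Map<string, number>();
  const suspicious: LoggedOperation[] = [];
  const ordered = [...log].sort((a, b) => a.startedAt - b.startedAt);
  for (const op of ordered) {
    const previous = lastFinished.get(op.name);
    if (previous !== undefined && op.startedAt - previous < windowMs) {
      suspicious.push(op);
    }
    lastFinished.set(op.name, op.finishedAt);
  }
  return suspicious;
}

const operations: LoggedOperation[] = [
  { name: "GetProfileSkills", startedAt: 0, finishedAt: 320 },
  { name: "GetProfileHeader", startedAt: 10, finishedAt: 250 }, // hypothetical second query
  { name: "GetProfileSkills", startedAt: 700, finishedAt: 980 },
];
```

With these entries, `findQuickRefetches(operations)` flags only the second GetProfileSkills run, which starts 380 ms after the first one finished.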

A Quick Detour: Apollo Client Caching Explained

To remedy our overfetching problem, let’s perform a deep dive into the Apollo Client caching system so we can best evaluate potential solutions.

Apollo Client’s cache is one of its most essential aspects; it provides an intelligent solution for keeping data available and consistent. By saving the results of past queries in a local cache, Apollo Client can instantly access data for previously cached queries. However, certain combinations of queries can lead Apollo Client’s cache to issue duplicate network requests.

I prepared a simplified example to demonstrate this scenario without the unnecessary complexity of our full project queries. In this example, our Content component issues two queries with the useQuery hook, but our debug log shows that we perform three operations: DataForList1, DataForList2, and DataForList1 again. Let’s examine the queries involved:

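```typescript
import { gql } from '@apollo/client';

// Selects tag names and colors.
const query1 = gql`
  query DataForList1 {
    articles {
      id
      title
      tags {
        name
        color
      }
    }
  }
`;

// Selects the same article fields, but tag names and article counts.
const query2 = gql`
  query DataForList2 {
    articles {
      id
      title
      tags {
        name
        articlesCount
      }
    }
  }
`;
```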

The queries are similar, with the same fields selected from the article type, but their selections from the tag type differ. Since neither query selects an id field for tags, Apollo Client cannot normalize them and instead stores the tags in a denormalized way, as properties of the articles. And because the tags field holds an array with no custom merge function, Apollo Client’s default behavior is to replace the cached value with the incoming one. When we receive data for the first query, Apollo Client sets the article’s cached tags to:

```typescript
{
  tags: [{ name: 'HTML', color: 'blue' }, { name: 'JS', color: 'yellow' }, { name: 'PHP', color: 'red' }]
}
```

After we receive data for the second query, the article’s cached tags are overwritten:

```typescript
{
  tags: [{ name: 'HTML', articlesCount: 10 }, { name: 'JS', articlesCount: 12 }, { name: 'PHP', articlesCount: 6 }]
}
```

As a result, Apollo Client’s cache no longer knows the tags’ colors and performs an additional HTTP request to retrieve them. In our example, Apollo Client stops here because the duplicated query’s data is identical to that of the original query. However, if we were to load dynamic values like counters, time stamps, or dates, the client could end up in an infinite loop where the two queries continually trigger each other.
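To make the cycle concrete, here is a small TypeScript simulation. It is a simplified model, not Apollo Client's actual algorithm. Tags are stored inline as one array, each write replaces that array, and any query whose selected field has vanished refetches. Following the behavior described above, the model assumes that a refetch returning exactly what that query last wrote is not written again, which ends the cycle. The `valueFor` callback is a hypothetical stand-in for the server.

```typescript
// Simplified model of the overfetching cycle; not Apollo Client's real implementation.
type Tag = Record<string, string | number>;
type ValueFor = (field: string, call: number) => string | number;

// Each query selects the tag name plus one query-specific field.
const tagFieldByQuery: Record<string, string> = {
  DataForList1: "color",
  DataForList2: "articlesCount",
};

// Hypothetical server: returns two tags carrying only the requested field.
function fetchTags(field: string, call: number, valueFor: ValueFor): Tag[] {
  return ["HTML", "JS"].map((name) => ({ name, [field]: valueFor(field, call) }));
}

// Returns the order of network requests, capped at maxRequests.
function simulate(valueFor: ValueFor, maxRequests = 10): string[] {
  let cachedTags: Tag[] = []; // denormalized: the article's tags live inline as one array
  const lastWrite = new Map<string, string>(); // last response each query wrote
  const log: string[] = [];
  const queue = Object.keys(tagFieldByQuery); // both queries start on an empty cache

  while (queue.length > 0 && log.length < maxRequests) {
    const query = queue.shift()!;
    log.push(query);
    const response = fetchTags(tagFieldByQuery[query], log.length, valueFor);
    const serialized = JSON.stringify(response);
    // Assumption from the text: an identical response is not rewritten, so nothing is triggered.
    if (lastWrite.get(query) === serialized) continue;
    lastWrite.set(query, serialized);
    cachedTags = response; // default behavior: the incoming array replaces the cached one
    // Any query whose field is now missing from the cached tags refetches.
    for (const [other, field] of Object.entries(tagFieldByQuery)) {
      const complete = cachedTags.every((tag) => field in tag);
      if (!complete && !queue.includes(other)) queue.push(other);
    }
  }
  return log;
}
```

With static data, `simulate((field) => (field === "color" ? "blue" : 10))` returns `["DataForList1", "DataForList2", "DataForList1"]`, the three operations from the debug log. With a value that changes on every response, such as `simulate((_field, call) => call)`, the two queries keep evicting each other's field, and only the `maxRequests` cap stops them.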

Thinking Like a Toptal Engineer: Potential Solution Trade-offs

With an understanding of the bug’s root cause and Apollo Client’s caching process, we can explore the potential solutions that prevent additional requests and discuss their pros and cons.

Disable the Cache

In cases where we don’t need cached values, we can disable the cache entirely:

```typescript
// Inside the Content component
const { data: data1 } = useQuery(query1, { fetchPolicy: 'no-cache' });
const { data: data2 } = useQuery(query2, { fetchPolicy: 'no-cache' });
```

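With the no-cache policy, Apollo Client always fetches from the network and neither reads from nor writes to the cache. As a result, the two queries can no longer overwrite each other’s cached tags, and we avoid re-fetching.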
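The performance cost of that choice is easy to see in a toy model of two fetch policies. The `ToyClient` class below is hypothetical and keys its cache by query name. Apollo Client's real cache checks field-level completeness and supports more policies, such as `cache-and-network`. The point is only the request count: Cache-first hits the network once for repeated queries, while no-cache hits it every time.

```typescript
// Toy model of two fetch policies; not Apollo Client's implementation.
type FetchPolicy = "cache-first" | "no-cache";

class ToyClient {
  networkRequests = 0;
  private cache = new Map<string, unknown>();
  private server: (query: string) => unknown;

  constructor(server: (query: string) => unknown) {
    this.server = server;
  }

  query(query: string, fetchPolicy: FetchPolicy): unknown {
    // cache-first answers from the cache whenever it can.
    if (fetchPolicy === "cache-first" && this.cache.has(query)) {
      return this.cache.get(query);
    }
    this.networkRequests++;
    const data = this.server(query);
    // no-cache neither reads from nor writes to the cache.
    if (fetchPolicy === "cache-first") this.cache.set(query, data);
    return data;
  }
}
```

Running the same query three times costs one request under `cache-first` and three under `no-cache`. In a real UI, every remount or repeated query then waits on the network.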

Decision: While this solution is simple to implement, disabling the cache negatively impacts our app’s usability and performance. Providing an excellent user experience is a top priority at Toptal, so this solution is not a viable option.

Use a Custom Merge Policy

We can customize Apollo Client’s merge behavior (which defaults to replacing cached values with new values) in the cache typePolicies configuration. To do this, we define a merge function for the field. It receives two values, the existing cached data and the incoming data, and its return value becomes the new cached value. Ignoring edge cases, we can resolve the example project’s tag overwriting with a basic custom merge policy:

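```typescript
import { InMemoryCache } from '@apollo/client';

new InMemoryCache({
  typePolicies: {
    Article: {
      fields: {
        tags: {
          // existing: the cached array (undefined on the first write); incoming: the new array.
          merge: (existing: any[] = [], incoming: any[]) => {
            const result = [...incoming];
            existing.forEach((tag: any) => {
              const index = result.findIndex((t) => t.name === tag.name);
              if (index > -1) {
                // Keep cached fields, but let incoming values take precedence over stale ones.
                result[index] = { ...tag, ...result[index] };
              }
            });
            return result;
          },
        },
      },
    },
  },
});
```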

However, this solution does not handle cases with arrays of different sizes.
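Because a merge function is plain TypeScript, its behavior can be checked outside Apollo Client. The sketch below restates the merge-by-name idea as a standalone function. Incoming fields take precedence over cached ones, so fresh values are never overwritten by stale ones. The `Tag` interface and the `mergeTagsByName` name are assumptions based on the example queries.

```typescript
// Shape assumed from the example queries; every field except name is optional.
interface Tag {
  name: string;
  color?: string;
  articlesCount?: number;
}

// Merges tags that share a name; incoming values win, and cached values fill the gaps.
function mergeTagsByName(existing: Tag[] = [], incoming: Tag[]): Tag[] {
  const result = [...incoming];
  existing.forEach((tag) => {
    const index = result.findIndex((t) => t.name === tag.name);
    if (index > -1) {
      result[index] = { ...tag, ...result[index] };
    }
  });
  return result;
}

const afterList1 = mergeTagsByName(undefined, [
  { name: "HTML", color: "blue" },
  { name: "JS", color: "yellow" },
]);
const afterList2 = mergeTagsByName(afterList1, [
  { name: "HTML", articlesCount: 10 },
  { name: "JS", articlesCount: 12 },
]);
```

After both writes, the HTML tag carries both `color` and `articlesCount`. The size problem shows up as soon as the arrays differ. For example, `mergeTagsByName([{ name: "PHP", color: "red" }], [{ name: "HTML", articlesCount: 10 }])` returns only the HTML tag, so PHP's cached color is gone. Whether dropping it is right depends on which query is authoritative for the list, and that kind of judgment call is what makes array merging hard.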

Decision: A custom merge policy is powerful but getting it right for arrays is difficult. Adding complexity to a project always makes it harder to maintain, and added configuration on the front end would slow our development speed. (This is because our GraphQL endpoints have multiple front-end applications reading data from them, and we would need to repeat the configuration changes across projects.) Overall, the complexity of the custom merge function needed to address our problem would cause more problems than it would solve.

Configure a Custom Key Field

We can provide a custom typePolicy for setting a key field. In the example above, we assume our tags are unique by name and merge tags with the same name into a single entity. If this remains true, we can set the name field as a key field so that Apollo Client stores tags in a normalized way. This configuration is global per type and will affect how tags are cached in general (not just for articles). Now, article tags will be cached with just their names, and tag objects will be cached separately under those names:

```typescript
// Simplified: the article holds references to separately cached Tag entries.
{ tags: ['HTML', 'JS', 'PHP'] }
```

Using a custom policy prevents data loss since the normalized tag cache will make updates without wiping existing fields. Let’s look at a simple typePolicy that would fix the example project:

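```typescript
import { InMemoryCache } from '@apollo/client';

new InMemoryCache({
  typePolicies: {
    Tag: {
      // Identify tags by name instead of the default id field.
      keyFields: ['name'],
    },
  },
});
```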
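A toy normalized store shows why a name key field fixes the overwrite. Each tag is stored once under an ID built from its key fields, and each write merges fields into that entity instead of replacing it. The `ToyNormalizedCache` class, its `writeTags` method, and the exact ID string are assumptions for illustration. Apollo Client's cache IDs look similar, but its internals are far more involved. The sketch also shows the failure mode discussed next: A tag written without its key field cannot be identified.

```typescript
// Toy normalized cache; not Apollo Client's InMemoryCache.
type Fields = Record<string, unknown>;

class ToyNormalizedCache {
  private entities = new Map<string, Fields>();
  private keyFields: string[];

  constructor(keyFields: string[]) {
    this.keyFields = keyFields;
  }

  // Builds an ID such as Tag:{"name":"HTML"} from the configured key fields.
  identify(typename: string, obj: Fields): string {
    const key: Fields = {};
    for (const field of this.keyFields) {
      if (!(field in obj)) {
        throw new Error(`Missing key field "${field}" on ${typename}`);
      }
      key[field] = obj[field];
    }
    return `${typename}:${JSON.stringify(key)}`;
  }

  // Stores each tag once, merging new fields into what is already cached,
  // and returns the references the parent article would hold.
  writeTags(tags: Fields[]): string[] {
    return tags.map((tag) => {
      const id = this.identify("Tag", tag);
      this.entities.set(id, { ...(this.entities.get(id) ?? {}), ...tag });
      return id;
    });
  }

  read(id: string): Fields | undefined {
    return this.entities.get(id);
  }
}

const cache = new ToyNormalizedCache(["name"]);
cache.writeTags([{ name: "HTML", color: "blue" }]); // from DataForList1
const refs = cache.writeTags([{ name: "HTML", articlesCount: 10 }]); // from DataForList2
```

After both writes, `cache.read(refs[0])` holds `name`, `color`, and `articlesCount`. Writing `{ color: "red" }` without a `name` throws instead.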

Decision: Providing a custom key field policy is an effective and relatively low-cost option. However, with this solution, the name key field must always be selected in our queries in order for Apollo Client to perform caching. Forgetting this step can cause runtime errors. Using a custom key field is impractical because we won’t always need the name to render our user interface. Since there is no candidate better than the name field for the custom key field, this solution is less than optimal.

Implement a Default Key Field

An even simpler solution than configuring the name key field is to rely on a default key field: id. We can add this field to all queries (since an item ID always exists) and normalize our cache to avoid wiping existing data this way. Let’s fix our example project by adding id to the tag type selection:

```graphql
# ...
tags {
  id
  name
  color # query1
}
# ...
tags {
  id
  name
  articlesCount # query2
}
# ...
```

Decision: Using a default key is safe and straightforward. We can use an ESLint rule to require selecting an id field if it exists in the schema, and relying on the default key requires no custom cache configuration in our front-end applications. Plus, the engineering cost to add an id field to types is relatively low. Implementing a default key field would also protect other projects that rely on our GraphQL schema from the same overfetching problem without requiring additional cache configuration.
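The kind of check such an ESLint rule performs can be sketched on a hand-built selection tree instead of parsed GraphQL. The `Selection` shape, the `schemaFields` map, and the `missingIdSelections` function are hypothetical. A real project would use an existing GraphQL ESLint plugin rather than this toy, and the article does not say which rule Toptal uses.

```typescript
// Hypothetical, simplified selection tree: a field maps to `true` (leaf) or a nested selection.
interface Selection {
  typename: string;
  fields: Record<string, Selection | true>;
}

// Returns the paths of selections on id-bearing types that forget to select `id`.
function missingIdSelections(
  selection: Selection,
  schemaFields: Record<string, string[]>,
  path: string = selection.typename,
): string[] {
  const problems: string[] = [];
  const typeHasId = (schemaFields[selection.typename] ?? []).includes("id");
  if (typeHasId && !("id" in selection.fields)) problems.push(path);
  for (const [name, child] of Object.entries(selection.fields)) {
    if (child !== true) {
      problems.push(...missingIdSelections(child, schemaFields, `${path}.${name}`));
    }
  }
  return problems;
}

// Assumed schema: both types expose an id, as the fix requires.
const schemaFields = {
  Article: ["id", "title", "tags"],
  Tag: ["id", "name", "color", "articlesCount"],
};

// DataForList1 before the fix: tags are selected without an id.
const dataForList1: Selection = {
  typename: "Article",
  fields: { id: true, title: true, tags: { typename: "Tag", fields: { name: true, color: true } } },
};
```

For the original DataForList1 selection, `missingIdSelections(dataForList1, schemaFields)` reports `["Article.tags"]`. Once `id` is added to the tag selection, the result is empty.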

The clear benefits and absence of unwanted side effects make this the ideal solution.

Lasting Excellence: The Impact of Thoughtful Engineering Solutions

By updating our queries to use a default key field, we successfully eliminated our initial test failures. The impact of the fix, however, extended far beyond one bug instance. Adding the default key field has protected other projects from production-blocking test failures as well. Moreover, our original failure exposed Apollo Client overfetching as a potential source of performance issues in our front-end projects, allowing us to fortify our user experience. Lastly, obtaining a deep understanding of Cypress and how it handles DOM elements that re-render has helped us debug related issues efficiently.

This case study highlights what I love about engineering at Toptal: We focus on quality above all else. Accepting the challenge the bug presented and figuring out the best possible solution was rewarding. The alternative—allowing subpar performance in our automated tests and failing to understand our tools’ behavior—was unacceptable. Even in a dynamic and fast-paced environment, Toptal engineers are encouraged to take the time to find the proper solution to every problem. The results speak for themselves: better products, and a happier and more engaged team that takes pride in their work.


Understanding the Basics

What is Apollo Client used for?

Apollo Client is a GraphQL client that enhances data management; it also performs intelligent data caching.

How is data stored in GraphQL?

GraphQL does not store data itself. It is a query language that provides access to data based on the types and logic that developers define, and it works on top of existing data sources rather than a specific storage engine or database.

Is Cypress good for testing?

Cypress is a great option for end-to-end testing. It runs in a web browser, so it can test certain cases that are difficult to run using other testing tools.

Why do Cypress tests fail?

Cypress tests fail because of defects in the software or because the tests are “flaky” and produce unreliable results. (A flaky test may have passed when it was committed to the codebase but later starts to fail.)

How do you fix a flaky test in Cypress?

A flaky test’s result often depends on a nonobvious factor that may be external to the code. You can debug the root cause of a flaky test using tools like Cypress video recordings and error logs.

How does the Apollo cache work?

The Apollo cache keeps data available and consistent. It saves the results of past queries in a local cache so that they can be accessed instantly when requested again. Because the cache is normalized, it is more efficient than typical HTTP caching.