AI in QA: A Novel Framework for Delivering Quality Software Quickly

This AI-informed framework is designed to fast-track code reviews, enhance software quality, and foster smart, cost-effective decisions in software development.


Costas is a product and project management expert who specializes in sophisticated technology projects for highly competitive markets around the world. He has a doctorate degree from the National Technical University of Athens, and has held multiple C-suite positions during his 25-year career.


The journey from a code’s inception to its delivery is full of challenges: bugs, security vulnerabilities, and tight delivery timelines. The traditional methods of tackling these challenges, such as manual code reviews or bug tracking systems, now appear sluggish amid the growing demands of today’s fast-paced technological landscape. Product managers and their teams must find a delicate equilibrium between reviewing code, fixing bugs, and adding new features to deploy quality software on time. This is where large language models (LLMs) and artificial intelligence (AI) can help, analyzing more information in less time than even the most expert team of human developers could.

Speeding up code reviews is one of the most effective actions to improve software delivery performance, according to Google’s State of DevOps Report 2023. Teams that have successfully implemented faster code review strategies have 50% higher software delivery performance on average. However, LLMs and AI tools capable of aiding in these tasks are very new, and most companies lack sufficient guidance or frameworks to integrate them into their processes.

In the same report from Google, when companies were asked about the importance of different practices in software development tasks, the average score they assigned to AI was 3.3/10. Tech leaders understand the importance of faster code review, the survey found, but don’t know how to leverage AI to get it.

With this in mind, my team at Code We Trust and I created an AI-driven framework that monitors and enhances the speed of quality assurance (QA) and software development. By harnessing the power of source code analysis, this approach assesses the quality of the code being developed, classifies the maturity level of the development process, and provides product managers and leaders with valuable insights into the potential cost reductions following quality improvements. With this information, stakeholders can make informed decisions regarding resource allocation, and prioritize initiatives that drive quality improvements.

Low-quality Software Is Expensive

Numerous factors impact the cost and ease of resolving bugs and defects, including:

- Bug severity and complexity.

- Stage of the software development life cycle (SDLC) in which they are identified.

- Availability of resources.

- Quality of the code.

- Communication and collaboration within the team.

- Compliance requirements.

- Impact on users and business.

- Testing environment.

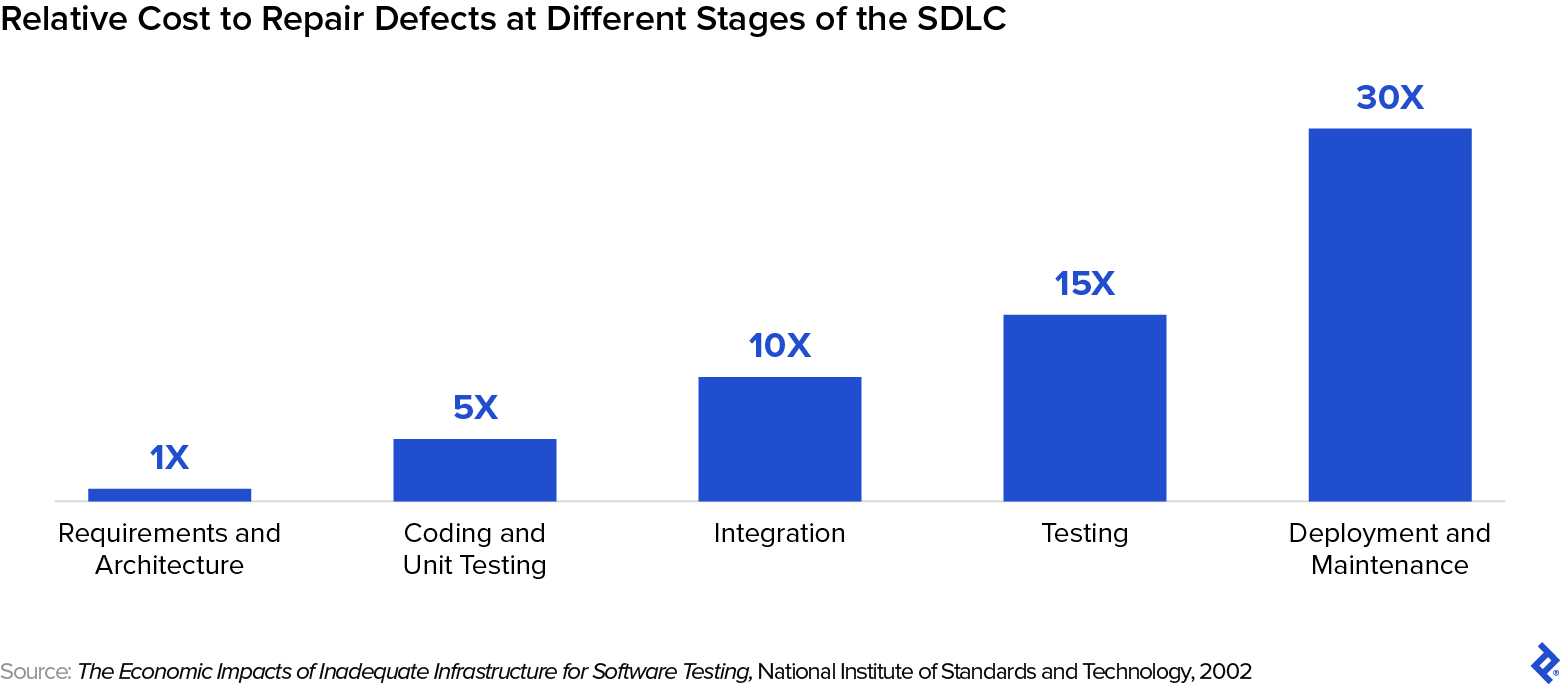

This host of elements makes calculating software development costs directly via algorithms challenging. However, the cost of identifying and rectifying defects in software tends to increase exponentially as the software progresses through the SDLC.

The National Institute of Standards and Technology reported that fixing a software defect found during testing costs five times more than fixing one identified during design, and that fixing a bug found during deployment can cost six times more than that.

Clearly, fixing bugs during the early stages is more cost-effective and efficient than addressing them later. The industrywide acceptance of this principle has further driven the adoption of proactive measures, such as thorough design reviews and robust testing frameworks, to catch and correct software defects at the earliest stages of development.

By fostering a culture of continuous improvement and learning through a rapid adoption of AI, organizations are not merely fixing bugs—they are cultivating a mindset that constantly seeks to push the boundaries of what is achievable in software quality.

Implementing AI in Quality Assurance

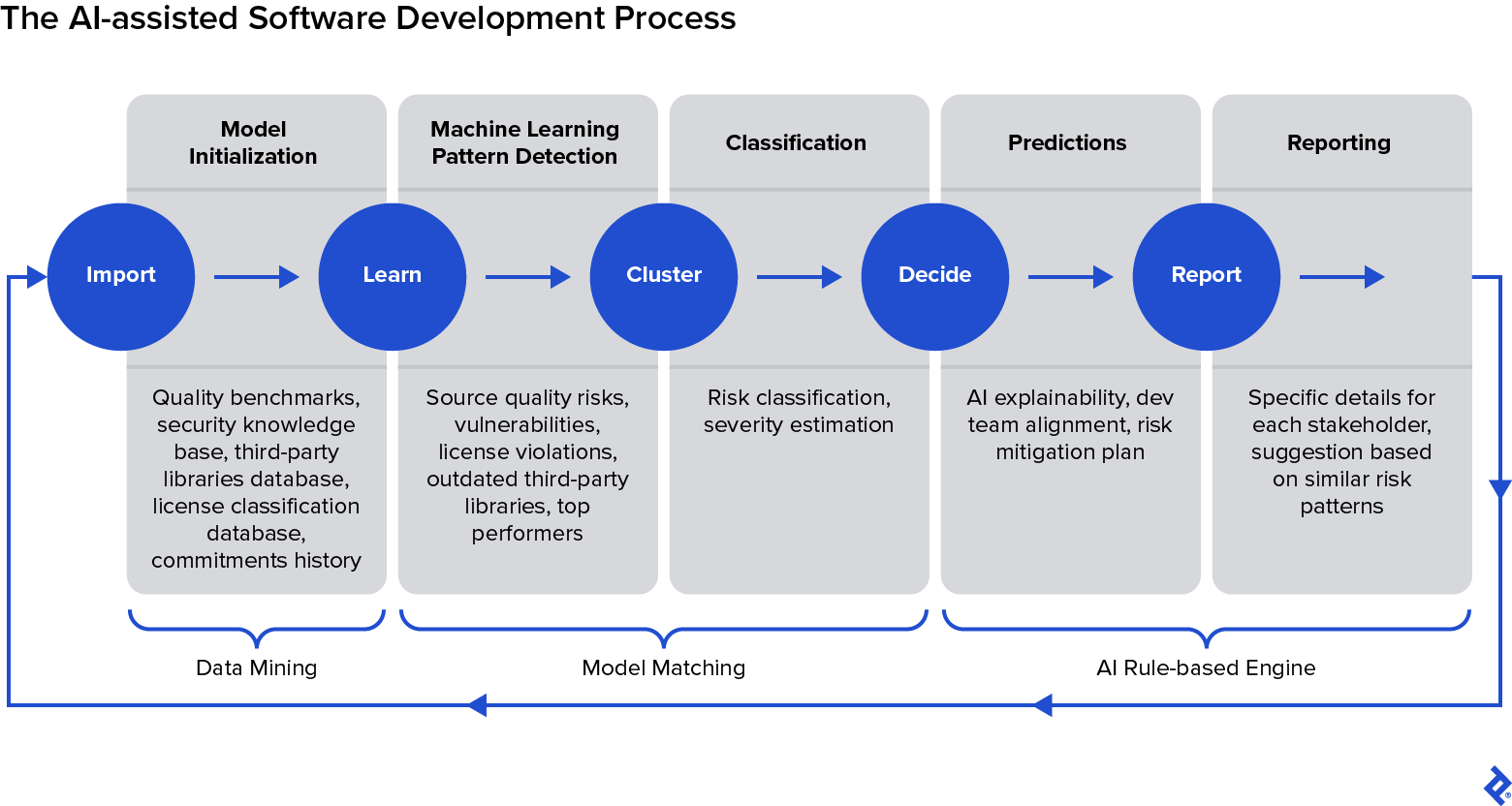

This three-step implementation framework introduces a straightforward set of AI for QA rules driven by extensive code analysis data to evaluate code quality and optimize it using a pattern-matching machine learning (ML) approach. We estimate bug fixing costs by considering developer and tester productivity across SDLC stages, comparing productivity rates to resources allocated for feature development: The higher the percentage of resources invested in feature development, the lower the cost of bad quality code and vice versa.
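One way to read the relationship above is as a simple split of team capacity: whatever share of effort does not go to feature development is treated as the cost of poor quality. The sketch below is an illustrative assumption, not the framework's actual formula; the function name, the `feature_share` parameter, and the sample budget are hypothetical.

```python
def cost_of_poor_quality(total_cost: float, feature_share: float) -> float:
    """Return the portion of total_cost not spent on feature development.

    Assumption: effort that is not invested in features goes to
    bug fixing and rework, so it counts as the cost of poor quality.
    """
    if not 0.0 <= feature_share <= 1.0:
        raise ValueError("feature_share must be between 0 and 1")
    return total_cost * (1.0 - feature_share)


# Hypothetical yearly team budget of 1,000,000 (any currency).
for share in (0.5, 0.7, 0.9):
    cost = cost_of_poor_quality(1_000_000, share)
    print(f"feature share {share:.0%}: poor-quality cost {cost:,.0f}")
```

A real estimate would weight this by developer and tester productivity at each SDLC stage, which this sketch leaves out.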

Define Quality Through Data Mining

The standards for code quality are not easy to determine—quality is relative and depends on various factors. Any QA process compares the actual state of a product with something considered “perfect.” Automakers, for example, match an assembled car with the original design for the car, considering the average number of imperfections detected over all the sample sets. In fintech, quality is usually defined by identifying transactions misaligned with the legal framework.

In software development, we can employ a range of tools to analyze our code: linters for code scanning, static application security testing for spotting security vulnerabilities, software composition analysis for inspecting open-source components, license compliance checks for legal adherence, and productivity analysis tools for gauging development efficiency.

From the many variables our analysis can yield, let’s focus on six key software QA characteristics:

- Defect density: The number of confirmed bugs or defects per size of the software, typically measured per thousand lines of code

- Code duplications: Repetitive occurrences of the same code within a codebase, which can lead to maintenance challenges and inconsistencies

- Hardcoded tokens: Fixed data values embedded directly into the source code, which can pose a security risk if they include sensitive information like passwords

- Security vulnerabilities: Weaknesses or flaws in a system that could be exploited to cause harm or unauthorized access

- Outdated packages: Older versions of software libraries or dependencies that may lack recent bug fixes or security updates

- Nonpermissive open-source libraries: Open-source libraries with restrictive licenses that can impose limitations on how the software can be used or distributed
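Of these characteristics, defect density has the most direct formula. As a minimal sketch, it can be computed as defects per thousand lines of code (KLOC); the function name and the sample numbers are hypothetical.

```python
def defect_density(confirmed_defects: int, lines_of_code: int) -> float:
    """Return confirmed defects per thousand lines of code (KLOC)."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return confirmed_defects / (lines_of_code / 1000)


# Hypothetical codebase: 30 confirmed defects in 20,000 lines of code.
print(defect_density(30, 20_000))  # 1.5 defects per KLOC
```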

Companies should prioritize the most relevant characteristics for their clients to minimize change requests and maintenance costs. While there could be more variables, the framework remains the same.

After completing this internal assessment, it’s time to look for a point of reference for high-quality software. Product managers should curate a collection of source code from products within their same market sector. The code of open-source projects is publicly available and can be accessed from repositories on platforms such as GitHub, GitLab, or the project’s own version control system. Choose the same quality variables previously identified and register the average, maximum, and minimum values. They will be your quality benchmark.
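Registering the average, maximum, and minimum values can be as simple as aggregating each metric across the reference projects. The sketch below assumes the metrics have already been collected into one dictionary per project; the metric names and values are made up for illustration.

```python
from statistics import mean


def build_benchmark(projects: list[dict[str, float]]) -> dict[str, dict[str, float]]:
    """Aggregate each metric across reference projects into avg/max/min."""
    if not projects:
        raise ValueError("at least one reference project is required")
    metric_names = projects[0].keys()
    return {
        name: {
            "avg": mean(p[name] for p in projects),
            "max": max(p[name] for p in projects),
            "min": min(p[name] for p in projects),
        }
        for name in metric_names
    }


# Hypothetical measurements from three open-source projects in the same sector.
reference_projects = [
    {"defect_density": 1.0, "duplication_pct": 4.0},
    {"defect_density": 2.0, "duplication_pct": 6.0},
    {"defect_density": 3.0, "duplication_pct": 8.0},
]
benchmark = build_benchmark(reference_projects)
print(benchmark["defect_density"])
```

Every project dictionary is expected to carry the same metric keys; a missing key raises a `KeyError` rather than silently skewing the benchmark.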

You should not compare apples to oranges, especially in software development. If we were to compare the quality of one codebase to another that utilizes an entirely different tech stack, serves another market sector, or differs significantly in terms of maturity level, the quality assurance conclusions could be misleading.

Train and Run the Model

At this point in the AI-assisted QA framework, we need to train an ML model using the information obtained in the quality assessment. This model should analyze code, filter results, and classify the severity of bugs and issues according to a defined set of rules.

The training data should encompass various sources of information, such as quality benchmarks, security knowledge databases, a third-party libraries database, and a license classification database. The quality and accuracy of the model will depend on the data fed to it, so a meticulous selection process is paramount. I won’t venture into the specifics of training ML models here, as the focus is on outlining the steps of this novel framework. But there are several guides you can consult that discuss ML model training in detail.

Once you are comfortable with your ML model, it’s time to let it analyze the software and compare it to your benchmark and quality variables. ML can explore millions of lines of code in a fraction of the time it would take a human to complete the task. Each analysis can yield valuable insights, directing the focus toward areas that require improvement, such as code cleanup, security issues, or license compliance updates.
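The comparison against the benchmark does not need the ML model to be understood. As a simplified sketch, assuming every metric counts problems (so lower is better), the gap to the benchmark average could be computed as follows; the function and metric names are hypothetical.

```python
def gaps_against_benchmark(
    measured: dict[str, float], benchmark_avg: dict[str, float]
) -> dict[str, float]:
    """Return how far each measured metric exceeds its benchmark average.

    Assumption: every metric counts problems, so lower is better.
    Metrics at or below the benchmark are omitted from the result.
    """
    return {
        name: value - benchmark_avg[name]
        for name, value in measured.items()
        if name in benchmark_avg and value > benchmark_avg[name]
    }


# Hypothetical measurements for the product under review.
measured = {"defect_density": 3.5, "outdated_packages": 12, "security_vulnerabilities": 2}
benchmark_avg = {"defect_density": 2.0, "outdated_packages": 20, "security_vulnerabilities": 1}
print(gaps_against_benchmark(measured, benchmark_avg))
```

Here only defect density and security vulnerabilities are flagged, because the number of outdated packages is already below the benchmark average.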

But before addressing any issue, it’s essential to define which vulnerabilities will yield the best results for the business if fixed, based on the severity detected by the model. Software will always ship with potential vulnerabilities, but the product manager and product team should aim for a balance between features, costs, time, and security.
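One possible way to make this trade-off explicit is to rank issues by severity relative to the effort needed to fix them, then pick fixes until a time budget runs out. This is a sketch under assumptions, not the framework's prescribed method: the severity scale, the `Issue` fields, and the greedy selection are all hypothetical choices.

```python
from dataclasses import dataclass

# Assumed severity scale; a real team would calibrate these weights.
SEVERITY_WEIGHT = {"blocker": 4, "high": 3, "medium": 2, "low": 1}


@dataclass
class Issue:
    name: str
    severity: str
    effort_days: float  # must be positive


def prioritize(issues: list[Issue], budget_days: float) -> list[Issue]:
    """Greedily select issues with the best severity-per-effort ratio
    until the available budget (in days) is used up."""
    ranked = sorted(
        issues,
        key=lambda i: SEVERITY_WEIGHT[i.severity] / i.effort_days,
        reverse=True,
    )
    selected, remaining = [], budget_days
    for issue in ranked:
        if issue.effort_days <= remaining:
            selected.append(issue)
            remaining -= issue.effort_days
    return selected


issues = [
    Issue("SQL injection", "blocker", 2),
    Issue("duplicate block", "low", 1),
    Issue("outdated package", "medium", 4),
    Issue("hardcoded token", "high", 1),
]
for issue in prioritize(issues, budget_days=4):
    print(issue.name)
```

A greedy ratio is easy to explain to stakeholders but is not guaranteed to be optimal; business impact could be added as a further weight.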

Because this framework is iterative, every AI QA cycle will take the code closer to the established quality benchmark, fostering continuous improvement. This systematic approach not only elevates code quality and lets the developers fix critical bugs earlier in the development process, but it also instills a disciplined, quality-centric mindset in them.

Report, Predict, and Iterate

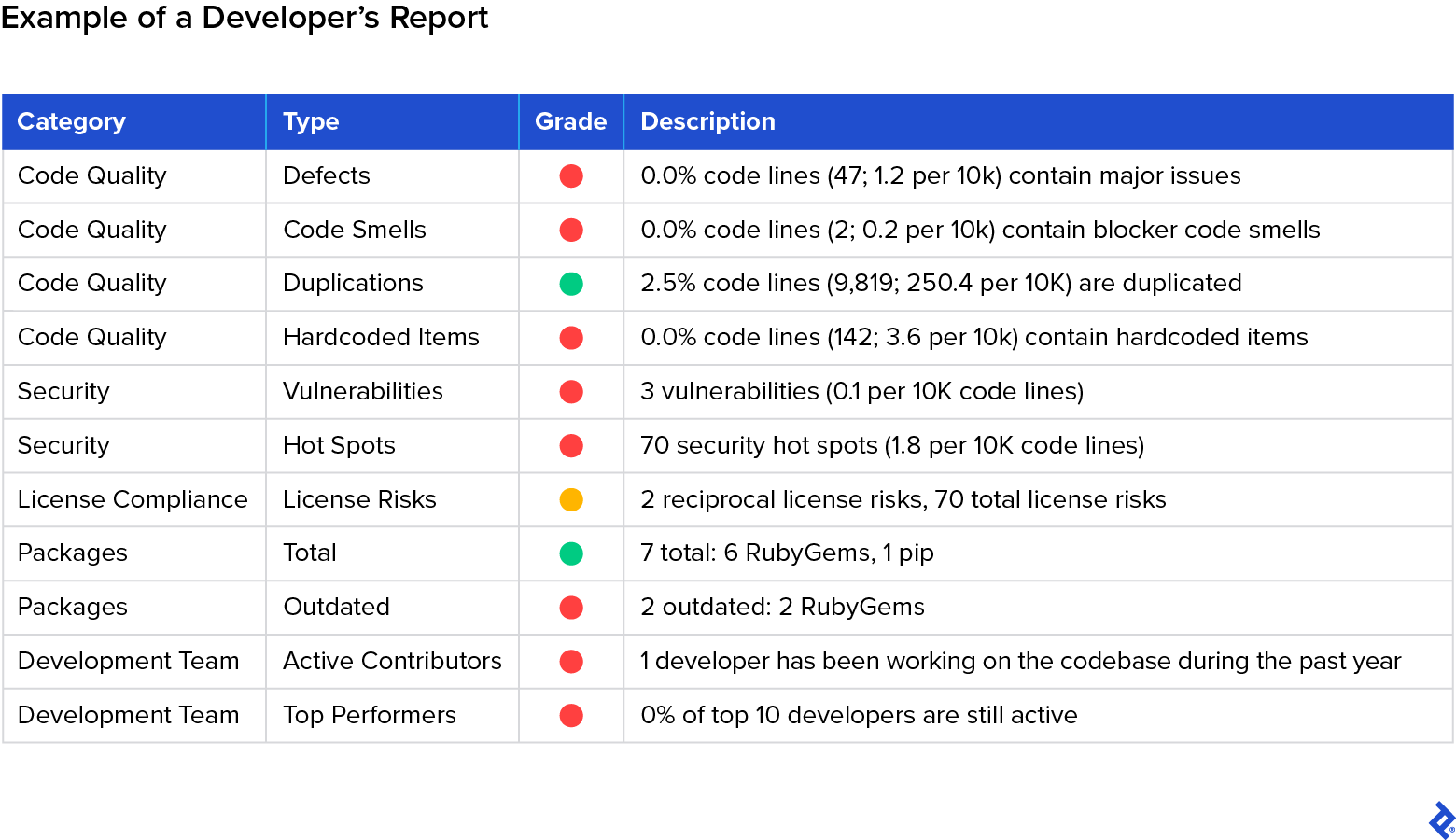

In the previous step, the ML model analyzed the code against the quality benchmark and provided insights into technical debt and other areas in need of improvement. Still, for many stakeholders this data, as in the example presented below, won’t mean much.

| Category | Issues | Debt Estimation | Comment |
|---|---|---|---|
| Quality | 445 bugs, 3,545 code smells | ~500 days | Assuming that only blockers and high-severity issues will be resolved |
| Security | 55 vulnerabilities, 383 security hot spots | ~100 days | Assuming that all vulnerabilities will be resolved and the higher-severity hot spots will be inspected |
| Secrets | 801 hardcoded risks | ~50 days | |
| Outdated Packages | 496 outdated packages (>3 years) | ~300 days | |
| Duplicated Blocks | 40,156 blocks | ~150 days | Assuming that only the bigger blocks will be revised |
| High-risk Licenses | 20 issues in React code | ~20 days | Assuming that all the issues will be resolved |
| Total | | 1,120 days | |
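The debt estimates in the table above can be turned into something easier to discuss by computing each category's share of the total. The short sketch below uses the day counts from the table; the variable names are illustrative.

```python
# Debt estimates in days, taken from the table above.
debt_days = {
    "Quality": 500,
    "Security": 100,
    "Secrets": 50,
    "Outdated Packages": 300,
    "Duplicated Blocks": 150,
    "High-risk Licenses": 20,
}

total_days = sum(debt_days.values())  # 1120, matching the table's total
shares = {category: days / total_days for category, days in debt_days.items()}

# List categories from largest to smallest share of the estimated debt.
for category, share in sorted(shares.items(), key=lambda item: item[1], reverse=True):
    print(f"{category}: {share:.1%}")
```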

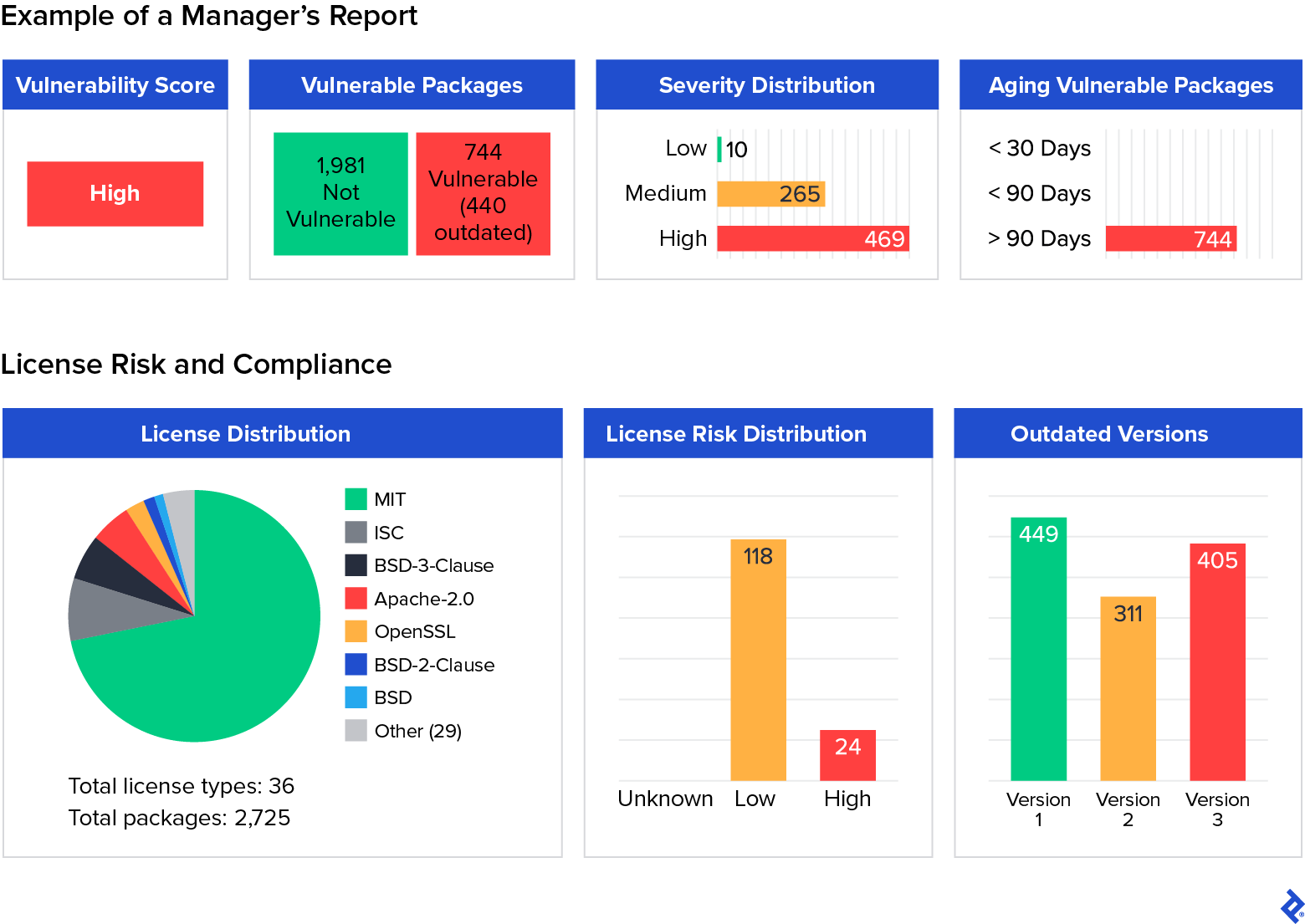

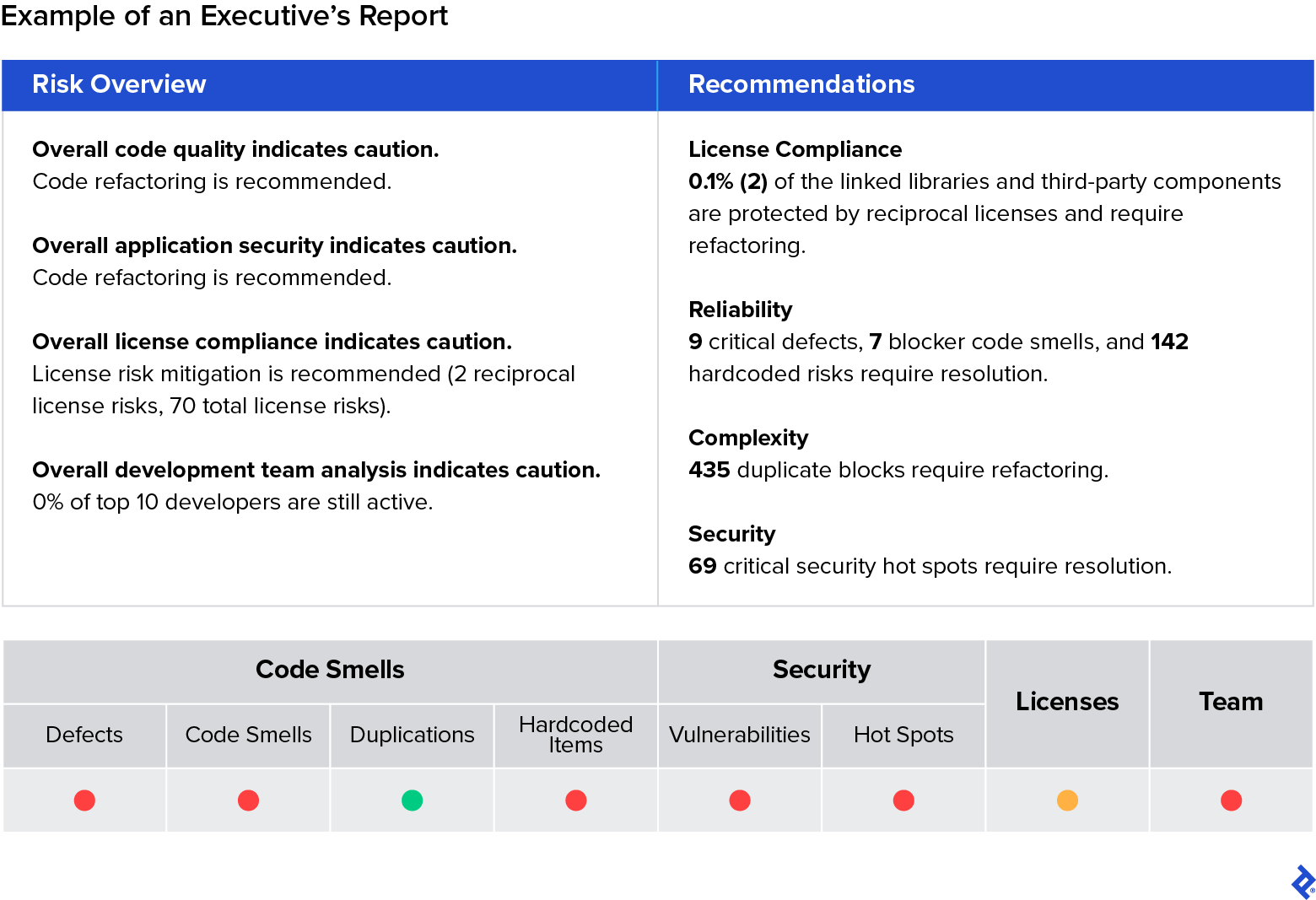

An automated reporting step is therefore crucial to make informed decisions. We achieve this by feeding an AI rule engine with the information obtained from the ML model, data on the development team’s composition and alignment, and the risk mitigation strategies available to the company. This way, each of the three levels of stakeholders (developers, managers, and executives) receives a tailored report highlighting its most salient pain points, as the accompanying examples show.

Additionally, a predictive component is activated when this process iterates multiple times, enabling the detection of quality variation spikes. For instance, a discernible pattern of quality deterioration might emerge under conditions previously faced, such as increased commits during a release phase. This predictive facet aids in anticipating and addressing potential quality issues preemptively, further fortifying the software development process against prospective challenges.
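To illustrate what detecting a quality variation spike could look like, the sketch below flags iterations where a metric jumps well above its recent history. It uses a trailing-window z-score, which is a stand-in technique chosen for simplicity rather than the predictive component described here; the window size, threshold, and sample series are assumptions.

```python
from statistics import mean, stdev


def detect_spikes(values: list[float], window: int = 5, threshold: float = 2.0) -> list[int]:
    """Return indices whose value exceeds the mean of the preceding
    `window` values by more than `threshold` standard deviations."""
    if window < 2:
        raise ValueError("window must be at least 2")
    spikes = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu, sigma = mean(history), stdev(history)
        if sigma > 0 and (values[i] - mu) / sigma > threshold:
            spikes.append(i)
    return spikes


# Hypothetical defect density per iteration; index 6 is a release-phase spike.
defect_density_history = [1.0, 1.1, 0.9, 1.0, 1.1, 1.0, 2.5, 1.0]
print(detect_spikes(defect_density_history))  # [6]
```

Pairing flagged iterations with context such as commit volume or release dates is what would let a team recognize the recurring conditions behind a spike.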

After this step, the process cycles back to the initial data mining phase, starting another round of analysis and insights. Each iteration of the cycle results in more data and refines the ML model, progressively enhancing the accuracy and effectiveness of the process.

In the modern era of software development, striking the right balance between swiftly shipping products and ensuring their quality is a cardinal challenge for product managers. The unrelenting pace of technological evolution mandates a robust, agile, and intelligent approach toward managing software quality. The integration of AI in quality assurance discussed here represents a paradigm shift in how product managers can navigate this delicate balance. By adopting an iterative, data-informed, and AI-enhanced framework, product managers now have a potent tool at their disposal. This framework facilitates a deeper understanding of the codebase, illuminates the technical debt landscape, and prioritizes actions that yield substantial value, all while accelerating the quality assurance review process.


Understanding the basics

Can AI replace quality assurance?

AI can significantly augment quality assurance (QA) by automating mundane testing tasks, identifying patterns, and predicting issues. However, it’s not likely to fully replace human QA testers due to the nuanced understanding and creative problem-solving skills humans bring to the table. A blend of AI and human insight can offer a more robust and efficient QA process.

How is AI used in QA?

AI in QA enhances efficiency by automating repetitive tests, quickly analyzing large data sets, and detecting bugs early. It employs machine learning to predict issues, improve test accuracy over time, and ensure software quality. This tech-savvy approach accelerates the QA process, making it more cost-effective and efficient in delivering a superior product swiftly.

Will QA testers be replaced by AI?

While AI can automate repetitive tasks and analyze data efficiently, it isn’t going to replace QA testers. Human insight is irreplaceable for understanding complex scenarios and providing nuanced evaluations. The future likely entails a collaborative mix of AI and human expertise, enhancing the efficiency and effectiveness of the QA process, while retaining the critical understanding and judgment of human testers.

Athens, Greece

Member since March 22, 2019
