Building an AI Product? Maximize Value With an Implementation Framework

Building and scaling an AI product is an enormous undertaking. This implementation framework will help you organize assets, manage teams, and stay aligned with the product vision.

Mayank is a product manager who has built products for GE, The Emirates Group, KPMG, and Kraft Heinz, and launched two startups in the IoT and AI spaces. Mayank specializes in Agile-based execution of robust go-to-market strategies. He holds a master’s degree in information systems management from Carnegie Mellon University and is the author of The Art of Building Great Products.

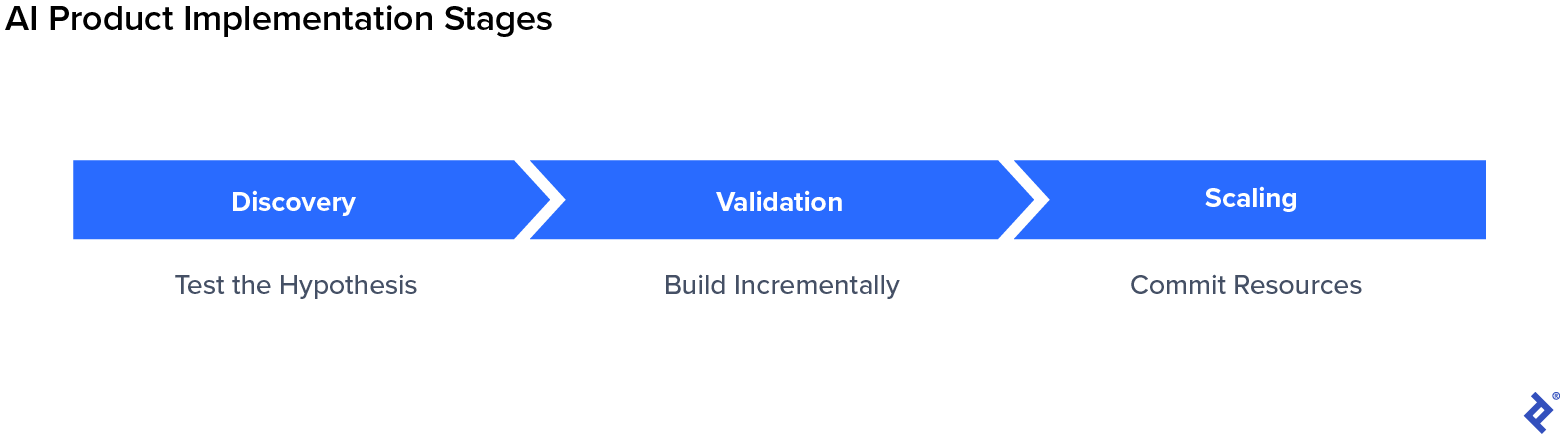

This is part 3 in a three-part series on AI digital product management. In the first two installments, I introduced the basics of machine learning and outlined how to create an AI product strategy. In this article, I discuss how to apply these lessons to build an AI product.

Building an AI product is a complex and iterative process involving multiple disciplines and stakeholders. An implementation framework ensures that your AI product provides maximum value with minimum cost and effort. The one I describe in this article combines Agile and Lean startup product management principles to build customer-centric products and unify teams across disparate fields.

Each section of this article corresponds to a stage of this framework, beginning with discovery.

AI Product Discovery

In part 2 of this series, I described how to plan a product strategy and an AI strategy that supports it. In the strategy stage, we used discovery as a preliminary step to identify customers, problems, and potential solutions without worrying about AI tech requirements. However, discovery is more than a one-time research push at the start of a project; it is an ongoing mandate to seek and evaluate new evidence to ensure that the product is moving in a useful and profitable direction.

In the implementation stage, discovery will help us assess the proposed AI product’s value to customers within the technical limits we established in the AI strategy. Revisiting discovery will also help identify the AI product’s core value, also known as the value proposition.

Structure the Hypothesis

Continuing an example from the previous article in this series, suppose an airline has hired you as a product manager to boost sales of underperforming routes. After researching the problem and evaluating multiple solution hypotheses during strategy planning, you decide to pursue a flight-demand prediction product.

At this stage, deepen your research to add detail to the hypothesis. How will the product function, who is it for, and how will it generate revenue?

Collect information on customers, competitors, and industry trends to expand the hypothesis:

| Research Target | Purpose | Sources |
|---|---|---|
| Customers | Discover what features customers value. | |
| Competitors | Learn about customer perception, funding levels and sources, product launches, and struggles and achievements. | |
| Industry Trends | Keep pace with advancements in technology and business practices. | |

Next, organize your findings to identify patterns in the research. In this example, you determine the product should be marketed to travel agents in tier 2 cities who will promote deals on unsold seats. If all goes well, you plan to scale the product by offering it to competitor airlines.

Structure research findings into actionable and measurable statements:

| Customer | Problem | Customer Goal | Potential Solutions | Riskiest Assumption |
|---|---|---|---|---|
| Travel agents in tier 2 cities | Inability to predict flight costs and availability fluctuations | Maximize profits | | Travel agents will use a flight-demand predictor to make decisions for their business. |

Based on the areas of inquiry you’ve pursued, you can begin structuring MVP statements.

One MVP statement could read:

40% of travel agents will use a flight-demand prediction product if the model’s accuracy exceeds 90%.

Note: Unlike the exploratory MVP statements in the strategy phase, this MVP statement combines the product concept (a flight-demand predictor) with the technology that powers it (an AI model).

Once you have listed all MVP statements, prioritize them based on three factors:

- Desirability: How important is this product to the customer?

- Viability: Will the product fulfill the product vision defined in the strategy?

- Feasibility: Do you have the time, money, and organizational support to build this product?
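One lightweight way to apply these three factors is a simple scoring sheet. The sketch below is only an illustration: the 1-to-5 ratings, the unweighted sum, and the second and third MVP statements are hypothetical, and a real team might weight the factors differently.

```python
from dataclasses import dataclass


@dataclass
class MvpStatement:
    text: str
    desirability: int  # 1 (low) to 5 (high); hypothetical team rating
    viability: int     # 1 (low) to 5 (high); hypothetical team rating
    feasibility: int   # 1 (low) to 5 (high); hypothetical team rating

    def score(self) -> int:
        # Assumption: all three factors carry equal weight.
        return self.desirability + self.viability + self.feasibility


# Hypothetical statements and ratings, for illustration only.
statements = [
    MvpStatement("Flight-demand predictor for travel agents", 5, 4, 3),
    MvpStatement("Discount alert emails for travel agents", 3, 3, 5),
    MvpStatement("Pricing dashboard for airline staff", 4, 4, 1),
]

# Highest combined score first.
ranked = sorted(statements, key=lambda s: s.score(), reverse=True)
for s in ranked:
    print(f"{s.score():>2}  {s.text}")
```

A ranking like this is a conversation starter for the team rather than a verdict; the ratings themselves should come from the discovery research.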

Test the Hypothesis

In hypothesis testing, you’ll market and distribute prototypes of varying fidelity (such as storyboards and static or interactive wireframes) to gauge initial customer interest in this potential AI product.

The hypothesis will determine which testing methods you use. For instance, landing page tests will help measure demand for a new product. Hurdle tests are best if you are adding new features to an existing product, and smoke tests evaluate user responses to a particular selection of features.

| Hypothesis Testing Method | Description |
|---|---|
| Landing Page Test | Build a series of landing pages promoting different versions of your solution. Promote the pages on social media and measure which one gets the most visits or sign-ups. |
| Hurdle Test | Build simple, interactive wireframes but make them difficult to use. Adding UX friction will help gauge how motivated users are to access your product. If you retain a predefined percentage of users, there’s likely healthy demand. |
| UX Smoke Test | Market high-fidelity interactive wireframes and observe how users navigate them. |
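The pass criterion for a hurdle test can be written down before the test runs. The following is a minimal sketch, assuming hypothetical visitor counts and a 40% retention threshold chosen to echo the example MVP statement; the function names are illustrative.

```python
def retention_rate(started: int, completed: int) -> float:
    """Share of users who pushed through the deliberately difficult flow."""
    if started <= 0:
        raise ValueError("started must be a positive number of users")
    return completed / started


def hurdle_test_passes(started: int, completed: int, threshold: float) -> bool:
    """True when retention meets the threshold fixed before the test."""
    return retention_rate(started, completed) >= threshold


# Hypothetical result: 200 users started the wireframe flow, 90 finished it.
rate = retention_rate(started=200, completed=90)
passed = hurdle_test_passes(started=200, completed=90, threshold=0.40)
print(f"retention={rate:.0%}, passed={passed}")
```

Fixing the threshold in advance keeps the team from rationalizing a weak result after the fact.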

Note: Document the hypotheses and results once testing is complete to help determine the product’s value proposition. I like Lean Canvas for its one-page, at-a-glance format.

At the end of AI product discovery, you’ll know which solution to build, who you’re making it for, and its core value. If evidence indicates that customers will buy your AI product, you’ll build a full MVP in the validation phase.

Many sprints must run in parallel to accommodate the AI product’s complexity and the product team’s array of personnel and disciplines. In the AI product discovery phase, the business, marketing, and design teams will work in sprints to quickly identify the customer, problem statement, and hypothesized solution.

AI Product Validation

In the AI product validation stage, you’ll use an Agile experimental format to build your AI product incrementally. That means processing data and expanding the AI model piecemeal, gauging customer interest at every step.

Because your AI product likely involves a large quantity of data and many stakeholders, your build should be highly structured. Here’s how I manage mine:

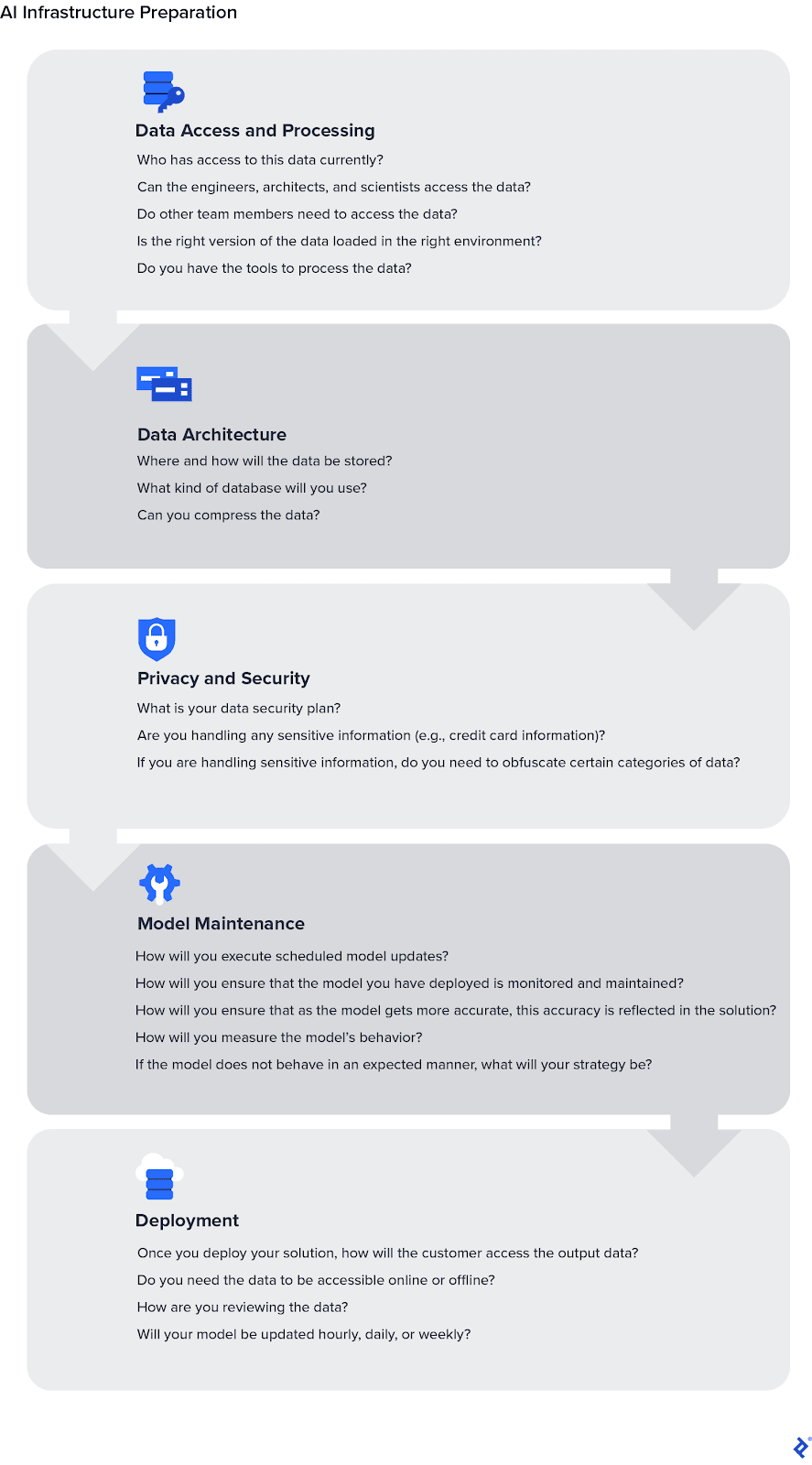

1. Prepare the Infrastructure

The infrastructure encompasses every process required to train, maintain, and launch the AI algorithm. Because you’ll build the model in a controlled environment, a robust infrastructure is the best way to prepare for the unknowns of the real world.

Part 2 of this series covered tech and infrastructure planning. Now it’s time to build that infrastructure before creating the machine learning (ML) model. Building the infrastructure requires finalizing your approach to data collection, storage, processing, and security, as well as creating your plans for the model’s maintenance, improvement, and course correction should it behave unpredictably.

2. Data Processing and Modeling

Work with domain experts and data engineers to target, collect, and preprocess a high-quality development data set. Accessing data in a corporate setting will likely involve a gauntlet of bureaucratic approvals, so budget plenty of time for it. Once you have the development set, the data science team can create the ML model.

Target and collect. The domain expert on your team will help you locate and understand the available data, which should fulfill the Four Cs: correct, current, consistent, and connected. Consult with your domain expert early and often. I have worked on projects in which nonexperts made many false assumptions while identifying data, leading to costly machine learning problems later in the development process.

Next, determine which of the available data belongs in your development set. Weed out discontinuous, irrelevant, or one-off data.

At this point, assess whether the data set mirrors real-world conditions. It may be tempting to speed up the process by training your algorithm on dummy or nonproduction data, but this will waste time in the long run. The functions that result are usually inaccurate and will require extensive work later in the development process.

Preprocess. Once you have identified the right data set, the data engineering team will refine it, convert it into a standardized format, and store it according to the data science team’s specifications. This process has three steps:

- Cleaning: Removes erroneous or duplicative data from the set.

- Wrangling: Converts raw data into accessible formats.

- Sampling: Creates structures that enable the data science team to take samples for an initial assessment.
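The three preprocessing steps above can be sketched in a few lines. This is an illustrative example using only the Python standard library; the booking records, field names, and validity rule (no negative seat counts) are hypothetical, and a production pipeline would typically use dedicated data tooling.

```python
import random
from datetime import date

# Hypothetical raw booking records, as they might arrive from a source system.
raw_rows = [
    {"route": "DEL-JAI", "date": "2023-01-05", "seats_sold": "112"},
    {"route": "DEL-JAI", "date": "2023-01-05", "seats_sold": "112"},  # duplicate
    {"route": "BOM-IXC", "date": "2023-01-06", "seats_sold": "-4"},   # erroneous
    {"route": "BOM-IXC", "date": "2023-01-07", "seats_sold": "87"},
    {"route": "BLR-VTZ", "date": "2023-01-07", "seats_sold": "140"},
]


def clean(rows):
    """Cleaning: drop exact duplicates and rows with impossible seat counts."""
    seen, cleaned_rows = set(), []
    for row in rows:
        key = tuple(sorted(row.items()))
        if key in seen or int(row["seats_sold"]) < 0:
            continue
        seen.add(key)
        cleaned_rows.append(row)
    return cleaned_rows


def wrangle(rows):
    """Wrangling: convert raw strings into typed, analysis-ready values."""
    return [
        {
            "route": r["route"],
            "date": date.fromisoformat(r["date"]),
            "seats_sold": int(r["seats_sold"]),
        }
        for r in rows
    ]


def sample(rows, k, seed=0):
    """Sampling: draw a reproducible random subset for an initial assessment."""
    return random.Random(seed).sample(rows, k)


cleaned = clean(raw_rows)
typed = wrangle(cleaned)
subset = sample(typed, k=2)
print(len(raw_rows), "raw rows ->", len(typed), "clean rows ->", len(subset), "sampled")
```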

Modeling is where the real work of a data scientist starts. In this step, the data scientists will work within the infrastructure’s parameters and select an algorithm that solves the customer’s problem and suits the product features and data.

Before testing these algorithms, the data scientists must know the product’s core features. These features are derived from the problem statement and solution you identified in the AI product discovery phase at the beginning of this article.

Optimize the features. Fine-tune features to boost model performance and determine whether you need different ones.

Train the model. The model’s success depends on the development and training data sets. If you do not select these carefully, complications will arise later on. Ideally, you should choose both data sets randomly from the same data source. In general, the larger and more representative the data set, the better the algorithm will perform.
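Drawing both sets at random from one source can be as simple as shuffling and slicing. The sketch below assumes a hypothetical 80/20 split and a fixed seed for reproducibility; `split_train_dev` is an illustrative helper, not a library function.

```python
import random


def split_train_dev(records, dev_fraction=0.2, seed=42):
    """Shuffle one data source and split it into training and development sets."""
    if not 0 < dev_fraction < 1:
        raise ValueError("dev_fraction must be between 0 and 1")
    shuffled = list(records)  # copy so the caller's data is left untouched
    random.Random(seed).shuffle(shuffled)
    dev_size = max(1, round(len(shuffled) * dev_fraction))
    return shuffled[dev_size:], shuffled[:dev_size]


# Hypothetical data source: 100 record IDs.
records = list(range(100))
train_set, dev_set = split_train_dev(records)
print(len(train_set), "training records,", len(dev_set), "development records")
```

Because both sets come from the same shuffled source, they should follow the same distribution, which is what makes their performance comparable.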

Data scientists apply data to different models in the development environment to test their learning algorithms. This step involves hyperparameter tuning, retraining models, and model management. If the model performs well on the training set, aim for a similar level of performance on the development set. Regularization can help keep the model’s fit balanced so that it neither overfits nor underfits the data. When the model does not perform well, it is usually due to variance, bias, or both. Prejudicial bias in customer data derives from interpretations of factors such as gender, race, and location. Removing human preconceptions from the data and applying techniques such as regularization can mitigate these issues.
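A rough way to tell bias from variance is to compare error rates on the training and development sets. The sketch below uses a common rule of thumb; the function name and both thresholds (a 10% target error, matching the 90% accuracy in the example MVP statement, and a 5-point gap) are assumptions to adjust per project.

```python
def diagnose_fit(train_error, dev_error, target_error=0.10, max_gap=0.05):
    """Label a model's fit using training and development error rates."""
    issues = []
    if train_error > target_error:
        # The model cannot even fit the data it was trained on.
        issues.append("high bias")
    if dev_error - train_error > max_gap:
        # The model fits the training data but does not generalize.
        issues.append("high variance")
    return issues or ["balanced"]


# Hypothetical error rates for four candidate models.
print(diagnose_fit(0.02, 0.15))
print(diagnose_fit(0.20, 0.22))
print(diagnose_fit(0.25, 0.40))
print(diagnose_fit(0.05, 0.07))
```

High variance usually calls for more data or stronger regularization, while high bias usually calls for better features or a more expressive model.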

Evaluate the model. At the start of the project, the data scientists should select evaluation metrics to gauge the quality of the machine learning model. The fewer metrics, the better.

The data scientists will cross-validate results with different models to see whether they selected the best one. The winning model’s algorithm will produce a function that most closely represents the data in the training set. The data scientists will then place the model in test environments to observe its performance. If the model performs well, it’s ready for deployment.
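Cross-validation compares candidate models on data they were not fitted to. The following is a minimal, standard-library sketch of k-fold cross-validation with a single metric (mean absolute error); the two toy models and the synthetic data are hypothetical, and a data science team would normally rely on an ML library for this.

```python
from statistics import mean


def k_fold_indices(n, k):
    """Yield (train_idx, test_idx) pairs for k contiguous folds."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        test_idx = list(range(start, start + size))
        train_idx = [i for i in range(n) if i < start or i >= start + size]
        yield train_idx, test_idx
        start += size


def fit_mean(xs, ys):
    """Baseline model: always predict the average target value."""
    avg = mean(ys)
    return lambda x: avg


def fit_line(xs, ys):
    """Least-squares straight line through the training points."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    slope = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / sxx
    intercept = y_bar - slope * x_bar
    return lambda x: slope * x + intercept


def cross_val_mae(fit, xs, ys, k=5):
    """Average held-out mean absolute error across k folds."""
    fold_errors = []
    for train_idx, test_idx in k_fold_indices(len(xs), k):
        model = fit([xs[i] for i in train_idx], [ys[i] for i in train_idx])
        fold_errors.append(mean(abs(model(xs[i]) - ys[i]) for i in test_idx))
    return mean(fold_errors)


# Synthetic, hypothetical data: a linear trend with small alternating noise.
xs = list(range(10))
ys = [3 * x + 5 + (1 if x % 2 else -1) for x in xs]

candidates = {"mean": fit_mean, "line": fit_line}
scores = {name: cross_val_mae(fit, xs, ys) for name, fit in candidates.items()}
best = min(scores, key=scores.get)
print(scores, "-> best:", best)
```

The folds here are contiguous for simplicity; when record order carries meaning, shuffle the data first or use a time-aware split.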

During the model development phase, the data engineering and data science teams will run dedicated sprints in parallel, with shared sprint reviews to exchange key learnings.

The early sprints of the data engineering team will build domain understanding and identify data sources. The next few sprints can focus on processing the data into a usable format. At the end of each sprint, solicit feedback from the data science team and the broader product development team.

The data science team will have goals for each sprint, including enabling domain understanding, sampling the right data sets, engineering product features, choosing the right algorithm, adjusting training sets, and ensuring performance.

3. Deployment and Customer Validation

It’s time to prepare your model for deployment in the real world.

Finalize the UX. The deployed model must seamlessly interact with the customer. What will that customer journey look like? If the AI product is an app or website, what type of interaction will trigger the machine learning model? Remember that if the end user sees and interacts with the model, you’ll likely need access to web services or APIs.

Plan updates. The data scientists and research scientists must constantly update the deployed model to ensure that its accuracy will improve as it encounters more data. Decide how and when to do this.

Ensure safety and compliance. Enable industry-specific compliance practices and establish a fail-safe mechanism that kicks in when the model does not behave as expected.
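A fail-safe can be as simple as a wrapper that substitutes a safe default whenever the model errors out or returns an implausible value. The sketch below is an assumption-laden illustration: the function names, the valid range of 0 to 1 (for example, a predicted load factor), and the historical-average fallback are all hypothetical.

```python
import math


def predict_with_failsafe(model, features, fallback, lower=0.0, upper=1.0):
    """Return (value, source); use the fallback when the model misbehaves."""
    try:
        prediction = model(features)
    except Exception:
        return fallback, "fallback: model error"
    if not isinstance(prediction, (int, float)) or math.isnan(prediction):
        return fallback, "fallback: invalid output"
    if not lower <= prediction <= upper:
        return fallback, "fallback: out of range"
    return prediction, "model"


# Hypothetical stand-ins for a deployed model in three different states.
def healthy_model(features):
    return 0.72


def crashing_model(features):
    raise RuntimeError("model service unavailable")


def erratic_model(features):
    return 3.5


HISTORICAL_AVERAGE = 0.60  # hypothetical safe default
results = {
    m.__name__: predict_with_failsafe(m, {"route": "DEL-JAI"}, HISTORICAL_AVERAGE)
    for m in (healthy_model, crashing_model, erratic_model)
}
for name, result in results.items():
    print(name, result)
```

Returning the source alongside the value lets the team log how often the fallback fires, which is itself a signal that the model needs attention.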

As for validation, use built-in tracking features to collect customer interactions. Previous customer interactions (interviews, demos, etc.) might have helped you understand what solutions customers want, but observing them in action will tell you whether you’ve delivered successfully. For instance, if you are building a mobile app, you may want to track which button the customer clicks on the most and the navigation journeys they take through the app.
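Once click events are being logged, the two questions in the mobile app example reduce to a count and a grouping. This is a minimal sketch over a hypothetical event log; the user IDs, screen names, and button names are made up for illustration, and a real product would pull these from its analytics tooling.

```python
from collections import Counter

# Hypothetical event log: (user, screen, button clicked), in time order.
events = [
    ("u1", "search", "view_forecast"),
    ("u1", "forecast", "export_report"),
    ("u2", "search", "view_forecast"),
    ("u2", "forecast", "set_alert"),
    ("u3", "search", "view_forecast"),
]

# Which button do customers click the most?
clicks = Counter(button for _, _, button in events)
most_clicked = clicks.most_common(1)[0]

# Which navigation journey does each customer take through the app?
journeys = {}
for user, screen, _ in events:
    journeys.setdefault(user, []).append(screen)

print("Most clicked:", most_clicked)
print("Journeys:", journeys)
```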

The customer validation phase will furnish a data-backed analysis that will tell you whether to invest more time in specific app features.

No product is ever right on the first try, so don’t give up. In my experience, it takes about three iterations to impress customers, so give the product that much runway. Learn from the evidence, go back to the drawing board, and add and modify features.

While preparing to deploy the model, the engineering, marketing, and business teams will run parallel sprints. Once the model is running, the deployment team will handle updates based on user feedback.

Institute a process among the engineering, marketing, data science, and business teams to test and improve the model. Create an iteration structure designed to implement the recommendations from this process. Divide this work into sprints dedicated to launching a new feature, running tests, or collecting user feedback.

AI Product Scaling

At this stage, you will have identified your customer and gathered real-time feedback. Now it’s time to invest in the product by scaling in the following areas:

Business model: At this point, you will have evidence of how much it costs to acquire a new customer and how much each customer is willing to pay for your product. If necessary, pivot your business model to ensure you achieve your profit objectives. Depending on your initial product vision, you can choose one-time payments or SaaS-based models.
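The business model check above comes down to simple arithmetic: what a customer costs to acquire versus what that customer pays. The sketch below uses entirely hypothetical figures and a subscription pricing assumption; it ignores margins and churn, which a real model would include.

```python
def customer_acquisition_cost(marketing_spend: float, new_customers: int) -> float:
    """Average spend needed to win one new customer."""
    if new_customers <= 0:
        raise ValueError("new_customers must be positive")
    return marketing_spend / new_customers


def months_to_recover(cac: float, monthly_price: float) -> float:
    """Months of subscription revenue needed to pay back the acquisition cost."""
    if monthly_price <= 0:
        raise ValueError("monthly_price must be positive")
    return cac / monthly_price


# Hypothetical figures: $12,000 spent to sign 40 travel agencies at $50 per month.
cac = customer_acquisition_cost(marketing_spend=12_000, new_customers=40)
payback = months_to_recover(cac, monthly_price=50)
print(f"CAC = ${cac:.0f}, payback period = {payback:.0f} months")
```

If the payback period is longer than customers typically stay, that is a signal to revisit pricing, acquisition channels, or the business model itself.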

Team structure: How and when do you add more people to the team as you build out your product? Are key players missing?

Product positioning: What positioning and messaging are working well for the customer? How can you capitalize on and attract more customers within your chosen demographic?

Operations: What happens when something goes wrong? Who will the customer call?

Audience: Listen to customer communications and social media posts. Growing your customer base also means growing your product, so keep adjusting and improving in response to customer demands. To do this, return to discovery to research potential new features, test your hypotheses, and create your next product iteration.

AI Product Shortcuts

If building an AI product from scratch is too onerous or expensive, try leaning on third-party AI tools. For example, data platforms such as the open-source Apache Kafka and the commercial Databricks, which is built around open-source Apache Spark, ingest, process, and store data for ML model development. Amazon Mechanical Turk speeds model training by crowdsourcing human labor for tasks such as labeling training data.

If you need to make sense of large quantities of data, as in sentiment analysis, AI as a service (AIaaS) products like MonkeyLearn can tag, analyze, and create visualizations without a single piece of code. For more complex problems, DataRobot offers an all-in-one cloud-based AI platform that handles everything from uploading data to creating and applying AI models.

AI Is Just Getting Started

I’ve covered the what, why, and how of AI implementation, but a wealth of ethical and legal considerations fall outside the scope of this series. Self-driving cars, smart medical devices, and tools such as DALL-E 2 and ChatGPT are poised to challenge long-held assumptions about human thought, labor, and creativity. Whatever your views, this new era has already arrived.

AI has the potential to power exceptional tools and services. Those of us who harness it should do so thoughtfully, with an eye toward how our decisions will affect future users.

Do you have thoughts about AI and the future of product management? Please share them in the comments.

For product management tips, check out Mayank’s book, The Art of Building Great Products.

Understanding the basics

What is an AI framework?

An artificial intelligence implementation framework structures the build of the machine learning model that powers an AI product. By following the framework, product managers can lead parallel sprints across multiple teams to set up an infrastructure, process data, and create, deploy, and validate a model.

How do you implement an AI project?

Building an AI product requires vetting the product concept in the discovery phase, using an Agile experimental approach to validate the AI technology, and scaling in response to customer feedback post-launch.

What are the three main challenges when developing AI products?

AI implementation challenges include correcting bias in the data sets, implementing industry-specific compliance practices, and incorporating fail-safe mechanisms to protect customers and stakeholders should the AI malfunction.

Mayank Mittal

Dubai, United Arab Emirates

Member since March 2, 2021
