Advancing AI Image Labeling and Semantic Metadata Collection

Image labeling can be a tedious, time-consuming task, compounded by the sheer volume of data needed to train deep neural networks. This article breaks down large data set processing and explains how a new SaaS product can help automate image labeling.

Neven is an artificial intelligence engineer with extensive experience in machine learning, computer vision, algorithms, and a range of AI-related technologies. Prior to founding an AI R&D consulting company, Neven helped create and train cutting-edge computer vision models used by healthcare, e-commerce, real estate, and financial services companies across the globe.

Previous Role: Senior Machine Learning Engineer

Deep neural networks are becoming increasingly relevant across various industries, and for good reason. When trained using supervised learning, they can be highly effective at solving a wide range of problems; however, to achieve optimal results, a significant amount of training data is required. The data must be of high quality and representative of the production environment.

While large amounts of data are available online, most of it is unprocessed and not useful for machine learning (ML). Let’s assume we want to build a traffic light detector for autonomous driving. Training images should contain traffic lights and bounding boxes to accurately capture the borders of these traffic lights. But transforming raw data into organized, labeled, and useful data is time-consuming and challenging.

To optimize this process, I developed Cortex: The Biggest AI Dataset, a new SaaS product that focuses on image data labeling and computer vision but can be extended to different types of data and other artificial intelligence (AI) subfields. Cortex has various use cases that benefit many fields and image types:

- Improving model performance for fine-tuning of custom data sets: Pretraining a model on a large and diverse data set like Cortex can significantly improve the model’s performance when it is fine-tuned on a smaller, specialized data set. For instance, in the case of a cat breed identification app, pretraining a model on a diverse collection of cat images helps the model quickly recognize various features across different cat breeds. This improves the app’s accuracy in classifying cat breeds when fine-tuned on a specific data set.

- Training a model for general object detection: Because the data set contains labeled images of various objects, a model can be trained to detect and identify certain objects in images. One common example is the identification of cars, useful for applications such as automated parking systems, traffic management, law enforcement, and security. Besides car detection, the approach for general object detection can be extended to other MS COCO classes (the data set currently handles only MS COCO classes).

- Training a model for extracting object embeddings: Object embeddings refer to the representation of objects in a high-dimensional space. By training a model on Cortex, you can teach it to generate embeddings for objects in images, which can then be used for applications such as similarity search or clustering.

- Generating semantic metadata for images: Cortex can be used to generate semantic metadata for images, such as object labels. This can empower application users with additional insights and interactivity (e.g., clicking on objects in an image to learn more about them or seeing related images in a news portal). This feature is particularly advantageous for interactive learning platforms, in which users can explore objects (animals, vehicles, household items, etc.) in greater detail.
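To make the embedding use case more concrete, here is a minimal sketch of a similarity search over object embeddings. It is not Cortex code: the three-dimensional vectors and the names in `index` are hypothetical, and cosine similarity is only one common choice of distance measure.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def most_similar(query, index):
    """Return (name, score) pairs from index, most similar to query first."""
    scored = [(name, cosine_similarity(query, vec)) for name, vec in index.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)

# Hypothetical 3-dimensional embeddings; real models emit far more dimensions.
index = {
    "tabby_cat": [0.9, 0.1, 0.0],
    "siamese_cat": [0.8, 0.2, 0.1],
    "pickup_truck": [0.0, 0.1, 0.9],
}
ranking = most_similar([1.0, 0.0, 0.0], index)
```

Objects whose embeddings point in a similar direction rank first, which is the property that similarity search and clustering build on.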

Our Cortex walkthrough will focus on the last use case, extracting semantic metadata from website images and creating clickable bounding boxes over these images. When a user clicks on a bounding box, the system initiates a Google search for the MS COCO object class identified within it.

The Importance of High-quality Data for Modern AI

Many subfields of modern AI, including computer vision, natural language processing (NLP), and tabular data analysis, have recently seen significant breakthroughs. All these subfields share a common reliance on high-quality data. AI is only as good as the data it is trained on, and, as such, data-centric AI has become an increasingly important area of research. Techniques like transfer learning and synthetic data generation have been developed to address the issue of data scarcity, while data labeling and cleaning remain important for ensuring data quality.

In particular, labeled data plays a vital role in the development of modern AI models such as fine-tuned LLMs or computer vision models. It is easy to obtain trivial labels for pretraining language models, such as predicting the next word in a sentence. However, collecting labeled data for conversational AI models like ChatGPT is more complicated; these labels must demonstrate the desired behavior of the model to make it appear to create meaningful conversations. The challenges multiply when dealing with image labeling. To create models like DALL-E 2 and Stable Diffusion, a vast data set with labeled images and textual descriptions was necessary to train them to generate images based on user prompts.

Low-quality data for systems like ChatGPT would lead to poor conversational abilities, and low-quality data for image object bounding boxes would lead to inaccurate predictions, such as assigning the wrong classes to the wrong bounding boxes, failing to detect objects, and so on. Low-quality image data can also include noisy and blurry images. Cortex aims to make high-quality data readily available to developers creating or training their image models, making the training process faster, more efficient, and predictable.

An Overview of Large Data Set Processing

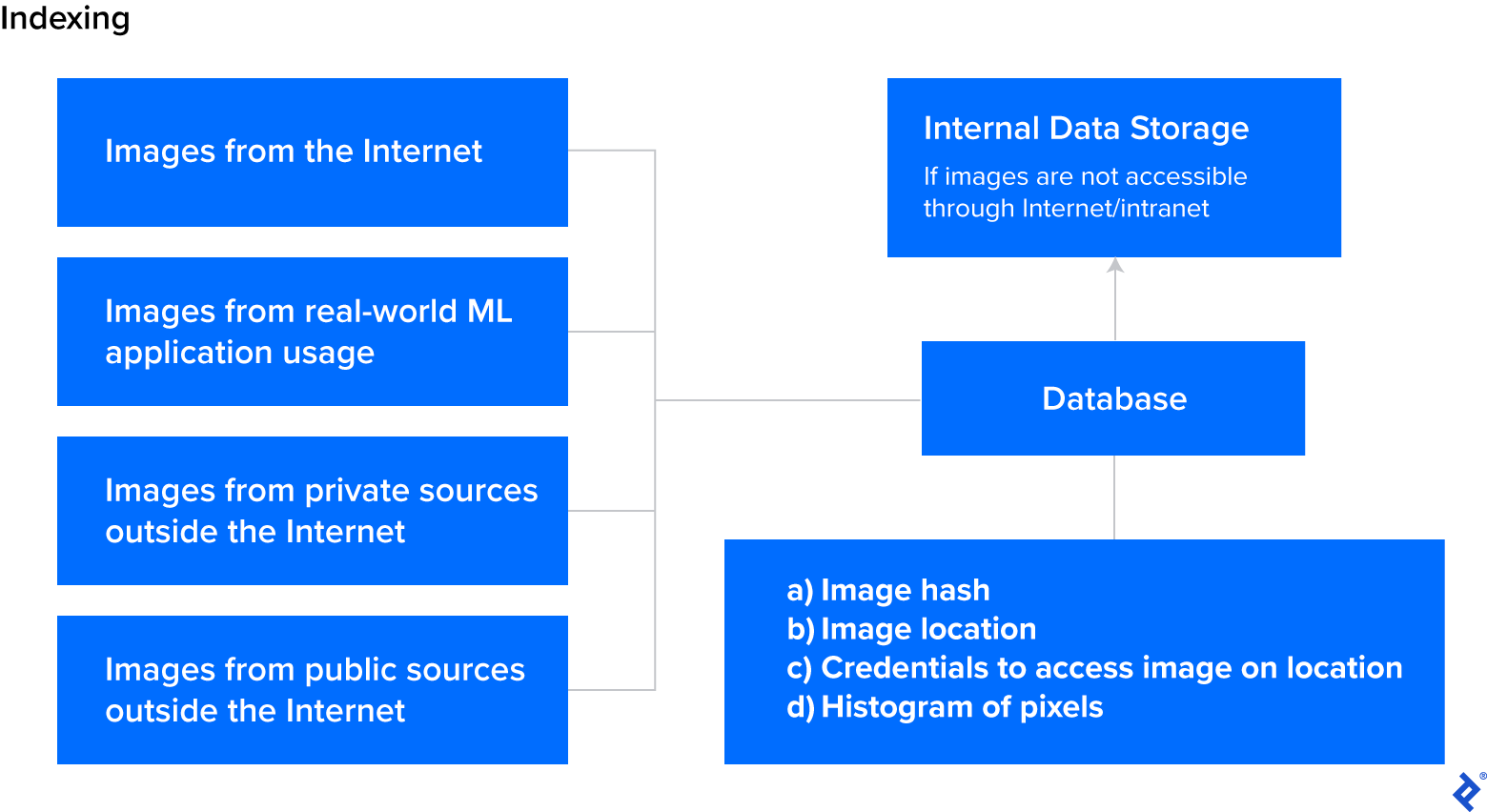

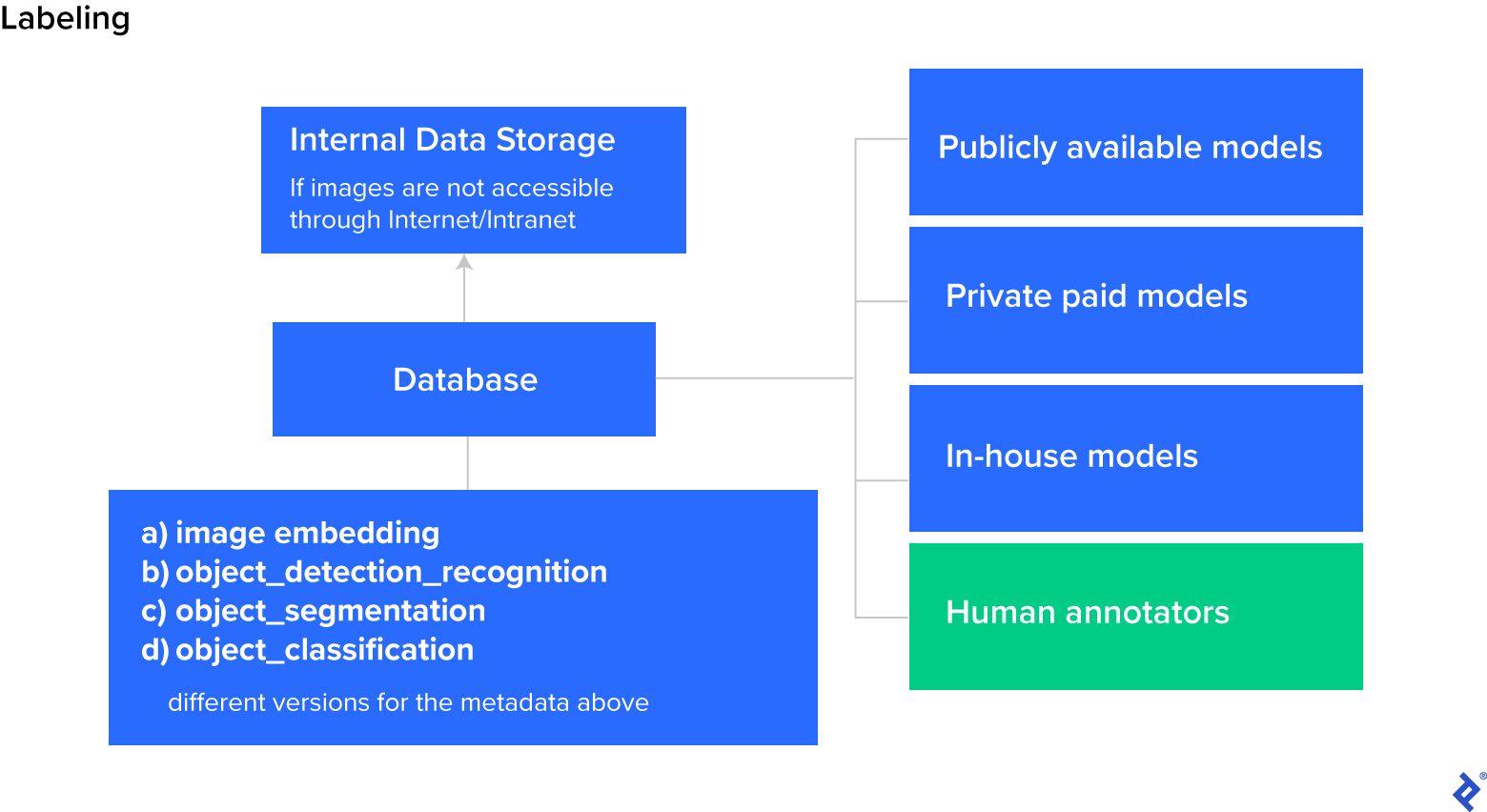

Creating a large AI data set is a robust process that involves several phases. Typically, in the data collection phase, images are scraped from the Internet with stored URLs and structural attributes (e.g., image hash, image width and height, and histogram). Next, models perform automatic image labeling to add semantic metadata (e.g., image embeddings, object detection labels) to images. Finally, quality assurance (QA) efforts verify the accuracy of labels through rule-based and ML-based approaches.
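As a rough illustration of the data collection phase, the sketch below extracts a few structural attributes from raw image bytes using only the standard library. It makes several assumptions: it handles PNG files only, it generates a small in-memory PNG as a stand-in for a scraped image, and it uses SHA-256 for the hash because the article does not say which hash function Cortex uses.

```python
import hashlib
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"

def png_chunk(kind, payload):
    """Build one PNG chunk: length, type, payload, CRC."""
    crc = zlib.crc32(kind + payload) & 0xFFFFFFFF
    return struct.pack(">I", len(payload)) + kind + payload + struct.pack(">I", crc)

def make_gray_png(width, height, value=128):
    """Create a small 8-bit grayscale PNG in memory (stand-in for a scraped image)."""
    ihdr = struct.pack(">IIBBBBB", width, height, 8, 0, 0, 0, 0)
    # Each scanline starts with filter byte 0, followed by `width` pixel bytes.
    raw = b"".join(b"\x00" + bytes([value]) * width for _ in range(height))
    return (PNG_SIGNATURE + png_chunk(b"IHDR", ihdr)
            + png_chunk(b"IDAT", zlib.compress(raw)) + png_chunk(b"IEND", b""))

def structural_attributes(image_bytes):
    """Return hash, width, and height for PNG bytes."""
    if image_bytes[:8] != PNG_SIGNATURE:
        raise ValueError("this sketch only parses PNG files")
    # IHDR is always the first chunk, so width and height sit at bytes 16..24.
    width, height = struct.unpack(">II", image_bytes[16:24])
    return {
        "hash": hashlib.sha256(image_bytes).hexdigest(),  # assumed hash function
        "width": width,
        "height": height,
    }

attrs = structural_attributes(make_gray_png(325, 234))
```

A content hash like this is useful for spotting exact duplicates during scraping, while width and height allow cheap filtering before any model is run.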

Data Collection

There are various methods of obtaining data for AI systems, each with its own set of advantages and disadvantages:

- Labeled data sets: These are created by researchers to solve specific problems. These data sets, such as MNIST and ImageNet, already contain labels for model training. Platforms like Kaggle provide a space for sharing and discovering such data sets, but these are typically intended for research, not commercial use.

- Private data: This type is proprietary to organizations and is usually rich in domain-specific information. However, it often needs additional cleaning, data labeling, and possibly consolidation from different subsystems.

- Public data: This data is freely accessible online and collectible via web crawlers. This approach can be time-consuming, especially if data is stored on high-latency servers.

- Crowdsourced data: This type involves engaging human workers to collect real-world data. The quality and format of the data can be inconsistent due to variations in individual workers’ output.

- Synthetic data: This data is generated by applying controlled modifications to existing data. Synthetic data techniques include generative adversarial networks (GANs) or simple image augmentations, proving especially beneficial when substantial data is already available.

When building AI systems, obtaining the right data is crucial to ensure effectiveness and accuracy.
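The simple image augmentations mentioned under synthetic data can be sketched in a few lines. The example below mirrors an image horizontally and adjusts its bounding boxes to match. The image is a plain list of pixel rows, and the box layout (x1, y1, x2, y2 as edge coordinates, with x2 exclusive) is an assumption chosen for illustration.

```python
def flip_horizontal(image, boxes):
    """Mirror an image (list of pixel rows) and its bounding boxes left to right."""
    width = len(image[0])
    flipped_image = [list(reversed(row)) for row in image]
    flipped_boxes = []
    for box in boxes:
        flipped = dict(box)
        # x coordinates are mirrored and swapped so that x1 stays the left edge.
        flipped["x1"] = width - box["x2"]
        flipped["x2"] = width - box["x1"]
        flipped_boxes.append(flipped)
    return flipped_image, flipped_boxes

image = [[0, 1, 2, 3], [4, 5, 6, 7]]
boxes = [{"classname": "cat", "x1": 0, "y1": 0, "x2": 1, "y2": 2}]
new_image, new_boxes = flip_horizontal(image, boxes)
```

The key point is that labels must be transformed together with the pixels; an augmentation that moves the object but not its box silently produces low-quality training data.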

Data Labeling

Data labeling refers to the process of assigning labels to data samples so that the AI system can learn from them. The most common data labeling methods are the following:

- Manual data labeling: This is the most straightforward approach. A human annotator examines each data sample and manually assigns a label to it. This approach can be time-consuming and expensive, but it is often necessary for data that requires specific domain expertise or is highly subjective.

- Rule-based labeling: This is an alternative to manual labeling that involves creating a set of rules or algorithms to assign labels to data samples. For example, when creating labels for video frames, instead of manually annotating every possible frame, you can annotate the first and last frame and programmatically interpolate for frames in between.

- ML-based labeling: This approach involves using existing machine learning models to produce labels for new data samples. For example, a model might be trained on a large data set of labeled images and then used to automatically label images. While this approach requires a great many labeled images for training, it can be particularly efficient, and a recent paper suggests that ChatGPT is already outperforming crowdworkers for text annotation tasks.

The choice of labeling method depends on the complexity of the data and the available resources. By carefully selecting and implementing the appropriate data labeling method, researchers and practitioners can create high-quality labeled data sets to train increasingly advanced AI models.
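The video-frame example given for rule-based labeling can be sketched as follows. This is a minimal illustration, assuming boxes are (x1, y1, x2, y2) tuples and that the object moves roughly linearly between the two annotated frames; the function name is hypothetical.

```python
def interpolate_boxes(first_box, last_box, num_frames):
    """Linearly interpolate (x1, y1, x2, y2) boxes across num_frames frames.

    The first and last frames keep their manual annotations; frames in
    between get coordinates blended in proportion to their position.
    """
    if num_frames < 2:
        raise ValueError("need at least the first and last frame")
    boxes = []
    for frame in range(num_frames):
        t = frame / (num_frames - 1)  # 0.0 at the first frame, 1.0 at the last
        boxes.append(tuple(round(a + t * (b - a)) for a, b in zip(first_box, last_box)))
    return boxes

labels = interpolate_boxes((0, 0, 10, 10), (10, 20, 30, 50), num_frames=3)
```

Two manual annotations yield a label for every frame in between. When motion is not linear, annotating a few more keyframes and interpolating between each consecutive pair keeps the same idea workable.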

Quality Assurance

Quality assurance ensures that the data and labels used for training are accurate, consistent, and relevant to the task at hand. The most common QA methods mirror data labeling methods:

- Manual QA: This approach involves manually reviewing data and labels to check for accuracy and relevance.

- Rule-based QA: This strategy employs predefined rules to check data and labels for accuracy and consistency.

- ML-based QA: This method uses machine learning algorithms to detect errors or inconsistencies in data and labels automatically.

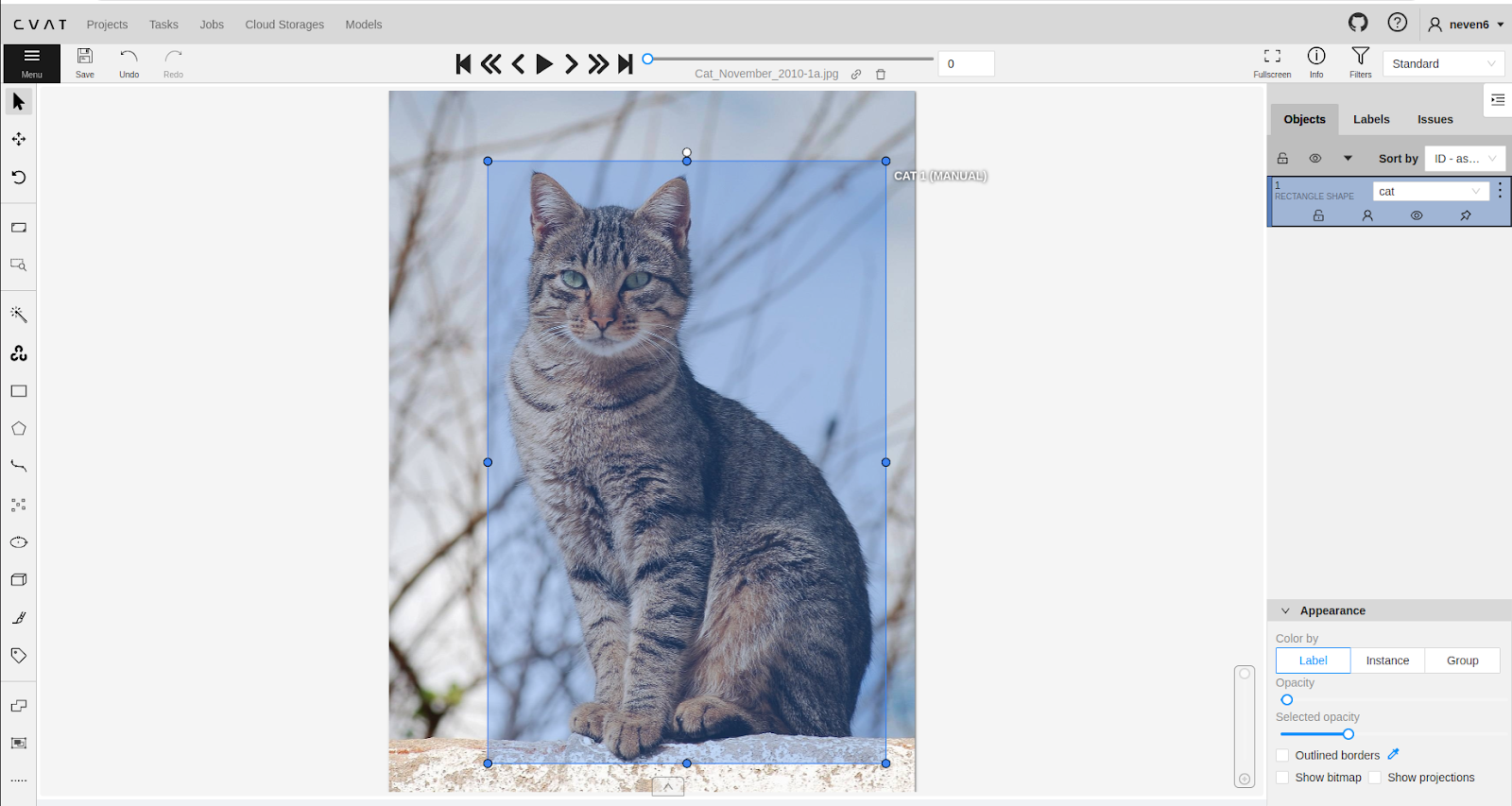

One of the ML-based tools available for QA is FiftyOne, an open-source toolkit for building high-quality data sets and computer vision models. For manual QA, human annotators can use tools like CVAT to improve efficiency. Relying on human annotators is the most expensive and least desirable option, and should only be done if automatic annotators don’t produce high-quality labels.

When validating data processing efforts, the level of detail required for labeling should match the needs of the task at hand. Some applications may require precision down to the pixel level, while others may be more forgiving.

QA is a crucial step in building high-quality neural network models; it verifies that these models are effective and reliable. Whether you use manual, rule-based, or ML-based QA, it is important to be diligent and thorough to ensure the best outcome.
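To illustrate what rule-based QA might look like for bounding-box labels, here is a small sketch. The rules, the function name, and the class subset are assumptions chosen for illustration, not the checks Cortex actually runs; labels are assumed to be dictionaries with classname, conf, x1, y1, x2, and y2 keys.

```python
COCO_SUBSET = {"person", "car", "traffic light", "cat", "dog"}  # illustrative subset

def check_label(label, image_width, image_height, allowed_classes=COCO_SUBSET):
    """Return a list of rule violations for one bounding-box label."""
    problems = []
    if label["classname"] not in allowed_classes:
        problems.append("unknown class")
    if not 0.0 <= label["conf"] <= 1.0:
        problems.append("confidence outside [0, 1]")
    if label["x1"] >= label["x2"] or label["y1"] >= label["y2"]:
        problems.append("empty or inverted box")
    if (label["x1"] < 0 or label["y1"] < 0
            or label["x2"] > image_width or label["y2"] > image_height):
        problems.append("box outside image")
    return problems

good = {"classname": "cat", "conf": 0.98, "x1": 276, "y1": 218, "x2": 1092, "y2": 1539}
bad = {"classname": "cat", "conf": 0.98, "x1": 900, "y1": 218, "x2": 1300, "y2": 100}
good_problems = check_label(good, image_width=1200, image_height=1602)
bad_problems = check_label(bad, image_width=1200, image_height=1602)
```

Checks like these are cheap to run over millions of samples and can route only the failing labels to ML-based or manual review.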

Cortex Walkthrough: From URL to Labeled Image

Cortex uses both manual and automated processes to collect and label the data and perform QA; however, the goal is to reduce manual work by feeding human outputs to rule-based and ML algorithms.

Cortex samples consist of URLs that reference the original images, which are scraped from the Common Crawl database. Data points are labeled with object bounding boxes. Object classes are MS COCO classes, like “person,” “car,” or “traffic light.” To use the data set, users must download the images they are interested in from the given URLs using img2dataset. Labels in the context of Cortex are called semantic metadata as they give the data meaning and expose useful knowledge hidden in every single data sample (e.g., which objects an image contains and where they are located).

The Cortex data set also includes a filtering feature that enables users to search the database to retrieve specific images. Additionally, it offers an interactive image labeling feature that allows users to provide links to images that are not indexed in the database. The system then dynamically annotates the images and presents the semantic metadata and structural attributes for the images at that specific URL.

Code Examples and Implementation

Cortex lives on RapidAPI and allows free semantic metadata and structural attribute extraction for any URL on the Internet. The paid version allows users to get batches of scraped labeled data from the Internet using filters for bulk image labeling.

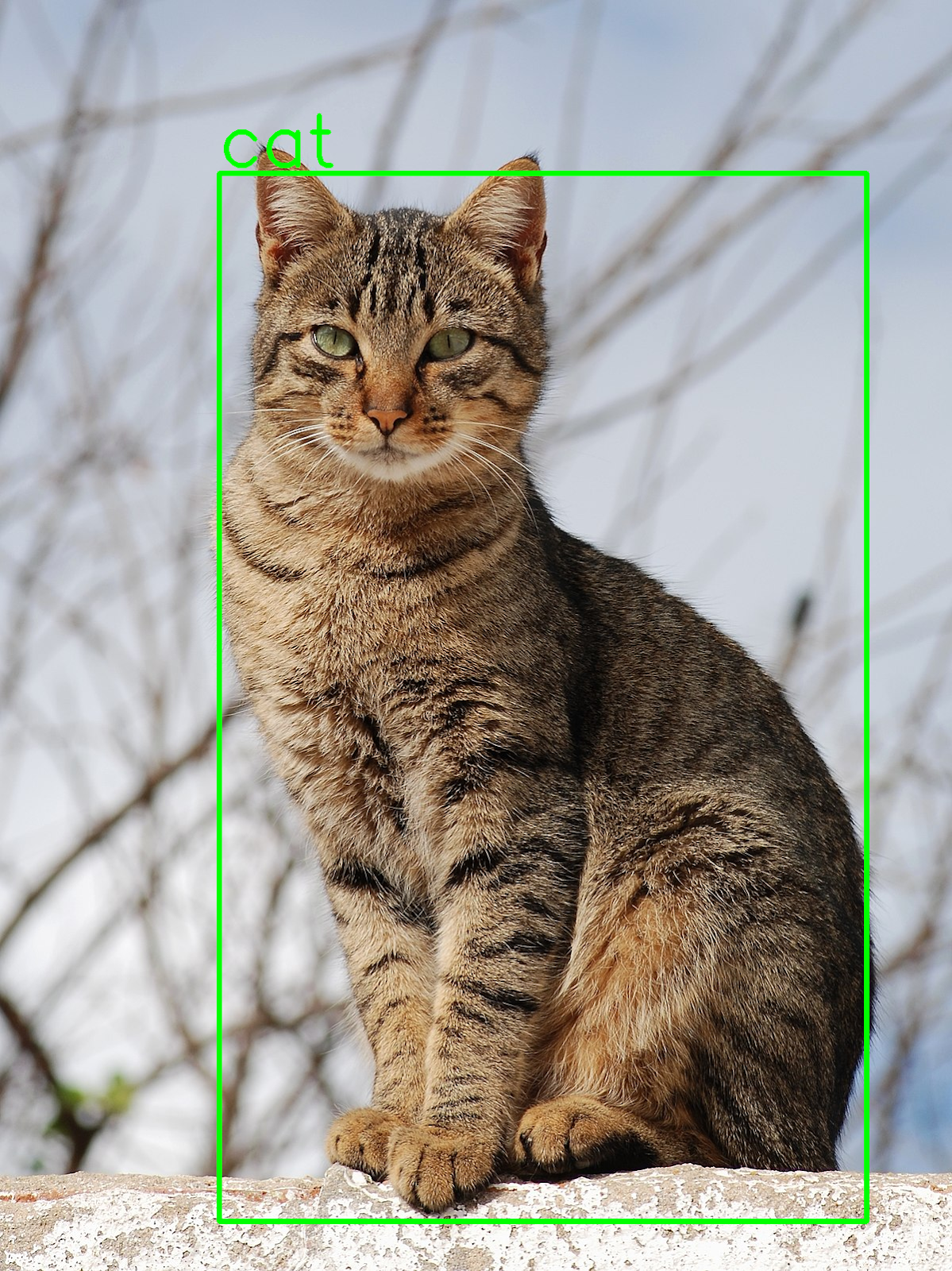

The Python code example presented in this section demonstrates how to use Cortex to get semantic metadata and structural attributes for a given URL and draw bounding boxes for object detection. As the system evolves, functionality will be expanded to include more attributes, such as a histogram, pose estimation, and so on. Every additional attribute adds value to the processed data and makes it suitable for more use cases.

    import cv2
    import json
    import requests
    import numpy as np

    cortex_url = 'https://cortex-api.piculjantechnologies.ai/upload'
    img_url = \
        'https://upload.wikimedia.org/wikipedia/commons/thumb/4/4d/Cat_November_2010-1a.jpg/1200px-Cat_November_2010-1a.jpg'

    # Download the image and decode the raw bytes into an OpenCV array.
    # IMREAD_COLOR forces three channels so the BGR colors used below apply.
    req = requests.get(img_url)
    img_as_np = np.frombuffer(req.content, dtype=np.uint8)
    img = cv2.imdecode(img_as_np, cv2.IMREAD_COLOR)

    # Send the image URL to Cortex and parse the JSON response.
    data = {'url_or_id': img_url}
    response = requests.post(cortex_url, data=json.dumps(data), headers={'Content-Type': 'application/json'})
    content = json.loads(response.content)

    # object_analysis is a list of lists; the first inner list holds the detections.
    object_analysis = content['object_analysis'][0]

    # Draw each bounding box with its class name just above the top-left corner.
    for detection in object_analysis:
        x1 = detection['x1']
        y1 = detection['y1']
        x2 = detection['x2']
        y2 = detection['y2']
        classname = detection['classname']
        cv2.rectangle(img, (x1, y1), (x2, y2), (0, 255, 0), 5)
        cv2.putText(img, classname,
                    (x1, y1 - 10),
                    cv2.FONT_HERSHEY_SIMPLEX, 3, (0, 255, 0), 5)

    cv2.imwrite('visualization.png', img)

The contents of the response look like this:

    {
        "_id": "PT::63b54db5e6ca4c53498bb4e5",
        "url": "https://upload.wikimedia.org/wikipedia/commons/thumb/4/4d/Cat_November_2010-1a.jpg/1200px-Cat_November_2010-1a.jpg",
        "datetime": "2023-01-04 09:58:14.082248",
        "object_analysis_processed": "true",
        "pose_estimation_processed": "false",
        "face_analysis_processed": "false",
        "type": "image",
        "height": 1602,
        "width": 1200,
        "hash": "d0ad50c952a9a153fd7b0f9765dec721f24c814dbe2ca1010d0b28f0f74a2def",
        "object_analysis": [
            [
                {
                    "classname": "cat",
                    "conf": 0.9876543879508972,
                    "x1": 276,
                    "y1": 218,
                    "x2": 1092,
                    "y2": 1539
                }
            ]
        ],
        "label_quality_estimation": 2.561230587616592e-7
    }

Let’s take a closer look and outline what each piece of information can be used for:

- _id is the internal identifier used for indexing the data and is self-explanatory.

- url is the URL of the image, which allows us to see where the image originated and to potentially filter images from certain sources.

- datetime displays the date and time when the image was seen by the process for the first time. This data can be crucial for time-sensitive applications, e.g., when processing images from a real-time source such as a livestream.

- object_analysis_processed, pose_estimation_processed, and face_analysis_processed flags tell if the labels for object analysis, pose estimation, and face analysis have been created.

- type denotes the type of data (e.g., image, audio, video). Since Cortex is currently limited to image data, this flag will be expanded with other types of data in the future.

- height and width are self-explanatory structural attributes and provide the height and width of the sample.

- hash is self-explanatory and displays the hashed key.

- object_analysis contains information about object analysis labels and displays crucial semantic metadata information, such as the class name and level of confidence.

- label_quality_estimation contains the label quality score, ranging in value from 0 (poor quality) to 1 (good quality). The score is calculated using ML-based QA for labels.

This is what the visualization.png image created by the Python code snippet looks like:

The next code snippet shows how to use the paid version of Cortex to filter and get URLs of images scraped from the Internet:

    import json
    import requests

    url = 'https://cortex4.p.rapidapi.com/get-labeled-data'

    # q is a MongoDB query serialized as a JSON string; page selects the results page.
    querystring = {'page': '1',
                   'q': '{"object_analysis": {"$elemMatch": {"$elemMatch": {"classname": "cat"}}}, "width": {"$gt": 100}}'}

    headers = {
        'X-RapidAPI-Key': 'SIGN-UP-FOR-KEY',
        'X-RapidAPI-Host': 'cortex4.p.rapidapi.com'
    }

    response = requests.get(url, headers=headers, params=querystring)
    content = json.loads(response.content)

The endpoint uses a MongoDB Query Language query (q) to filter the database based on semantic metadata and reads the page number from the query-string parameter named page.
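Writing the q string by hand is error-prone once filters grow. One option, sketched below, is to build the filter as a Python dictionary and serialize it with json.dumps. The build_query helper is hypothetical and not part of Cortex; it produces the same query string as the snippet above.

```python
import json

def build_query(classname, min_width):
    """Serialize a MongoDB-style filter for the q parameter."""
    query = {
        # object_analysis is a list of lists, hence the nested $elemMatch.
        "object_analysis": {"$elemMatch": {"$elemMatch": {"classname": classname}}},
        "width": {"$gt": min_width},
    }
    return json.dumps(query)

querystring = {"page": "1", "q": build_query("cat", 100)}
```

The resulting querystring dictionary can be passed to requests through the params argument exactly as in the original snippet.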

The example query returns images containing object analysis semantic metadata with the classname cat and a width greater than 100 pixels. The content of the response looks like this:

    {
        "output": [
            {
                "_id": "PT::639339ad4552ef52aba0b372",
                "url": "https://teamglobalasset.com/rtp/PP/31.png",
                "datetime": "2022-12-09 13:35:41.733010",
                "object_analysis_processed": "true",
                "pose_estimation_processed": "false",
                "face_analysis_processed": "false",
                "source": "commoncrawl",
                "type": "image",
                "height": 234,
                "width": 325,
                "hash": "bf2f1a63ecb221262676c2650de5a9c667ef431c7d2350620e487b029541cf7a",
                "object_analysis": [
                    [
                        {
                            "classname": "cat",
                            "conf": 0.9602264761924744,
                            "x1": 245,
                            "y1": 65,
                            "x2": 323,
                            "y2": 176
                        },
                        {
                            "classname": "dog",
                            "conf": 0.8493766188621521,
                            "x1": 68,
                            "y1": 18,
                            "x2": 255,
                            "y2": 170
                        }
                    ]
                ],
                "label_quality_estimation": 3.492028982676312e-18
            }, … <up to 25 data points in total>
        ],
        "length": 1454
    }

The output contains up to 25 data points on a given page, along with semantic metadata, structural attributes, and information about the source from where the image is scraped (commoncrawl in this case). It also exposes the total query length in the length key.
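Once a page of results is available, a typical next step is to keep only the data points you care about and hand their URLs to a downloader such as img2dataset. The sketch below is one way to do that. The helper names and the confidence threshold are assumptions, and the second data point (with the example.com URL) is made up for illustration.

```python
def collect_urls(response_content, classname, min_conf=0.5):
    """Return URLs of data points with at least one confident detection of classname."""
    urls = []
    for point in response_content.get("output", []):
        # object_analysis is a list of lists; flatten it before filtering.
        detections = [d for group in point.get("object_analysis", []) for d in group]
        if any(d["classname"] == classname and d["conf"] >= min_conf for d in detections):
            urls.append(point["url"])
    return urls

def to_url_list(urls):
    """Format URLs as plain text, one URL per line."""
    return "\n".join(urls) + "\n"

# Trimmed-down stand-in for one page of the response shown above.
page = {
    "output": [
        {"url": "https://teamglobalasset.com/rtp/PP/31.png",
         "object_analysis": [[{"classname": "cat", "conf": 0.96},
                              {"classname": "dog", "conf": 0.85}]]},
        {"url": "https://example.com/blurry.png",  # hypothetical data point
         "object_analysis": [[{"classname": "cat", "conf": 0.31}]]},
    ],
    "length": 2,
}
cat_urls = collect_urls(page, "cat", min_conf=0.9)
url_list_text = to_url_list(cat_urls)
```

Saving url_list_text to a file such as cat_urls.txt gives a plain-text URL list, which img2dataset can take as input (for instance, `img2dataset --url_list=cat_urls.txt --output_folder=images`; check the img2dataset documentation for the options your version supports).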

Foundation Models and ChatGPT Integration

Foundation models, or AI models trained on a large amount of unlabeled data through self-supervised learning, have revolutionized the field of AI since their introduction in 2018. Foundation models can be further fine-tuned for specialized purposes (e.g., mimicking a certain person’s writing style) using small amounts of labeled data, allowing them to be adapted to a variety of different tasks.

Cortex’s labeled data sets can be used as a reliable source of data to make pretrained models an even better starting point for a wide variety of tasks, and those models are one step above foundation models that still use labels for pretraining in a self-supervised manner. By leveraging vast amounts of data labeled by Cortex, AI models can be pretrained more effectively and produce more accurate results when fine-tuned. What sets Cortex apart from other solutions is its scale and diversity—the data set constantly grows, and new data points with diverse labels are added regularly. At the time of publication, the total number of data points was more than 20 million.

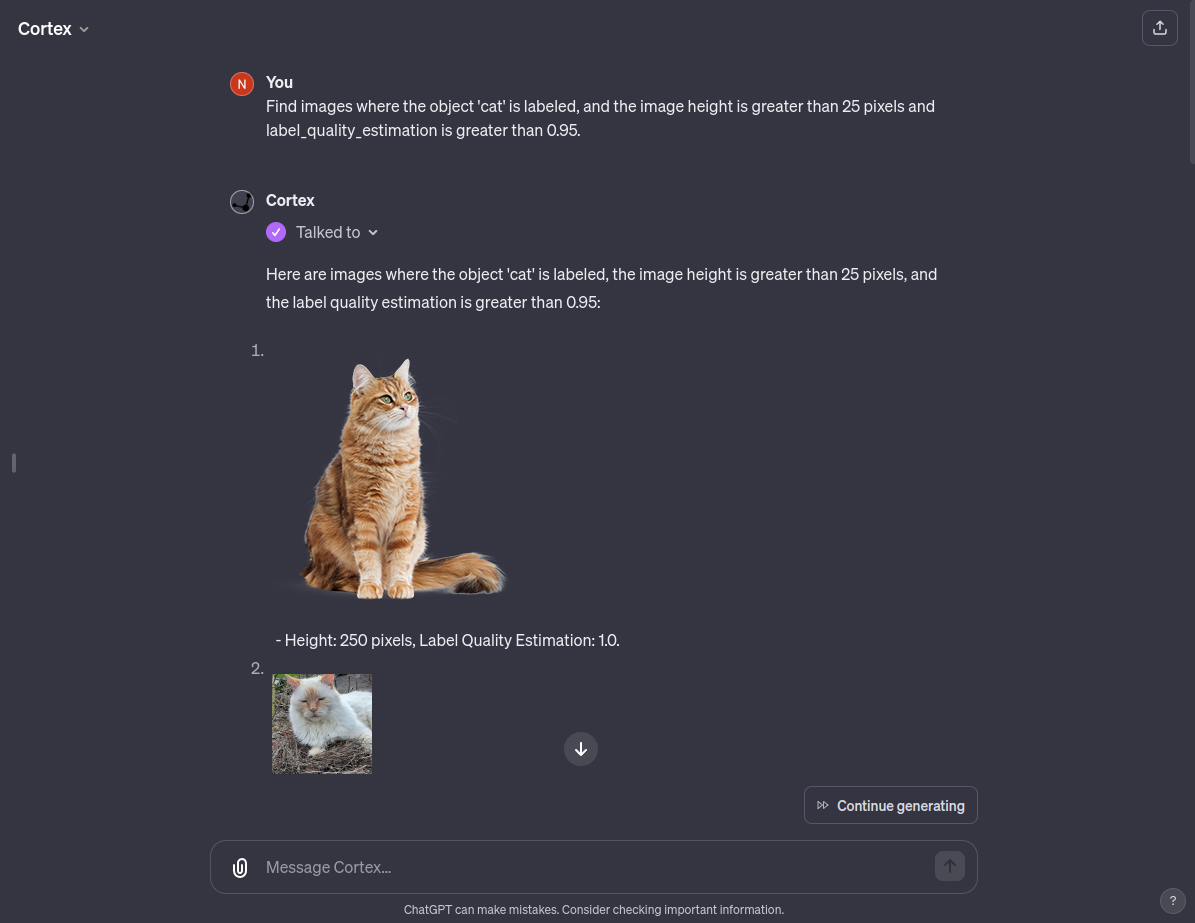

Cortex also offers a customized ChatGPT chatbot, giving users unparalleled access to and utilization of a comprehensive database filled with meticulously labeled data. This user-friendly functionality improves ChatGPT’s capabilities, providing it with deep access to both semantic and structural metadata for images, but we plan to extend it to different data beyond images.

With the current state of Cortex, users can ask this customized ChatGPT to provide a list of images containing certain items that consume most of the image’s space or images containing multiple items. Customized ChatGPT can understand deep semantics and search for specific types of images based on a simple prompt. With future refinements that will introduce diverse object classes to Cortex, the custom GPT could act as a powerful image search chatbot.
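A request such as "images in which one item takes up most of the space" ultimately comes down to arithmetic on the stored metadata. The sketch below shows one way that criterion could be computed from bounding boxes and structural attributes. It illustrates the idea only and does not describe how the custom GPT works internally; the function names and the 0.5 threshold are assumptions.

```python
def box_area_fraction(detection, image_width, image_height):
    """Fraction of the image area covered by one bounding box."""
    box_area = (detection["x2"] - detection["x1"]) * (detection["y2"] - detection["y1"])
    return box_area / (image_width * image_height)

def dominant_objects(data_point, min_fraction=0.5):
    """Class names whose boxes cover at least min_fraction of the image."""
    detections = [d for group in data_point["object_analysis"] for d in group]
    return [
        d["classname"]
        for d in detections
        if box_area_fraction(d, data_point["width"], data_point["height"]) >= min_fraction
    ]

# Values copied from the single-image response earlier in the article.
cat_image = {
    "width": 1200,
    "height": 1602,
    "object_analysis": [[{"classname": "cat", "x1": 276, "y1": 218, "x2": 1092, "y2": 1539}]],
}
fraction = box_area_fraction(cat_image["object_analysis"][0][0], 1200, 1602)
dominant = dominant_objects(cat_image)
```

For the cat photo, the box covers a little over half of the image, so it would qualify under a 0.5 threshold.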

Image Data Labeling as the Backbone of AI Systems

We are surrounded by large amounts of data, but unprocessed raw data is mostly irrelevant from a training perspective, and has to be refined to build successful AI systems. Cortex tackles this challenge by helping transform vast quantities of raw data into valuable data sets. The ability to quickly refine raw data reduces reliance on third-party data and services, speeds up training, and enables the creation of more accurate, customized AI models.

The system currently returns semantic metadata for object analysis along with a quality estimate, but will eventually support face analysis, pose estimation, and visual embeddings. There are also plans to support modalities other than images, such as video, audio, and text data. The system currently returns width and height structural attributes, but it is going to support a histogram of pixels as well.

As AI systems become more commonplace, demand for quality data is bound to go up, and the way we collect and process data will evolve. Current AI solutions are only as good as the data they are trained on, and can be extremely effective and powerful when meticulously trained on large amounts of quality data. The ultimate goal is to use Cortex to index as much publicly available data as possible and assign semantic metadata and structural attributes to it, creating a valuable repository of high-quality labeled data needed to train the AI systems of tomorrow.

The editorial team of the Toptal Engineering Blog extends its gratitude to Shanglun Wang for reviewing the code samples and other technical content presented in this article.

All data set images and sample images courtesy of Pičuljan Technologies.

Understanding the basics

What is image labeling?

Image labeling is the process of identifying and marking specific features within an image to provide semantic meaning. It involves annotating images with labels that describe the presence, location, and characteristics of various objects or elements.

Why do we label images?

Labeling images provides essential data for training machine learning models in computer vision, enabling them to identify and understand various elements within the images.

Why is label quality assurance important in machine learning?

Label quality assurance is vital to ensure the reliability and accuracy of training data. High-quality labels lead to more precise and efficient machine learning models, especially in complex visual tasks.

How does Cortex aid in building foundation models?

Cortex provides a database of high-quality, labeled data, essential for creating pretrained models that function better than foundation models trained on unlabeled data. Diverse training data helps pretrained models gain broad knowledge and skills, and Cortex’s labeled data enhances their learning processes.

Zagreb, Croatia

Member since September 27, 2017
