Agile’s Next Frontier: A Guide to Navigating the AI Project Life Cycle

The dynamism of AI-driven software development requires a specialized project management approach. This overview of the AI project life cycle details the key adaptations needed for success.

Alvaro is an experienced technical project manager and consultant. He has rolled out many SaaS implementation projects and delivered complex cloud and AI-driven projects at numerous companies worldwide.

Previous Role: Technical Project Manager

My first experience managing an artificial intelligence (AI) project was in 2020, when I led the development of a cutting-edge facial recognition platform. It quickly dawned on me that the undertaking called for a highly specialized approach tailored to the intricacies and unique challenges of AI-driven innovation.

Only 54% of AI projects make it from pilot to production, according to a 2022 Gartner survey, and it’s not hard to see why. AI project management is not just about overseeing timelines and resources. It demands an understanding of data dependencies, familiarity with model training, and frequent adjustments. I realized that the sheer dynamism of an AI project calls for a new approach to life cycle management: one that fosters innovation, facilitates continuous learning, anticipates potential roadblocks, and adapts Agile strategies so that complex concepts become functional products that align with business objectives and deliver value.

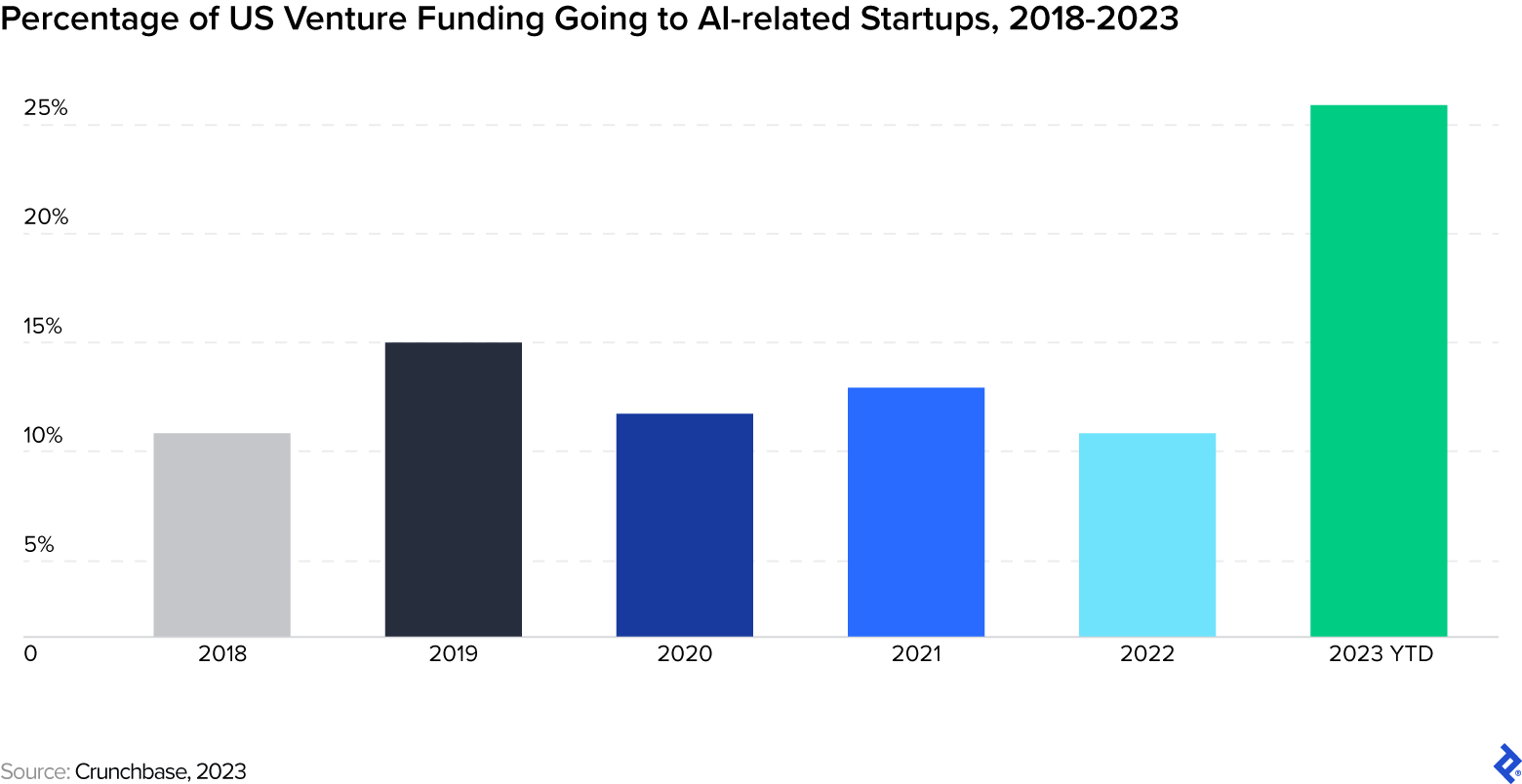

Investment in the development of AI products and services has grown rapidly. Crunchbase data shows that one in four dollars invested in American startups this year went to an AI-related company, more than double the 2022 percentage. The adoption of AI products and services across organizations more than doubled from 2017 to 2022, according to McKinsey & Company’s 2022 Global Survey on AI, and this growth is expected to continue: 63% of survey respondents “expect their organizations’ investment to increase over the next three years.” All this suggests that the demand for technical project managers is likely to increase too.

This guide aims to help you navigate this new frontier. I peel back the layers of AI project management, distilling my experiences into actionable insights. Following an overview of AI project considerations, we traverse the essential stages of the AI project life cycle: business understanding, data discovery, model building and evaluation, and deployment.

AI Project Management: Key Considerations

AI product development differs from traditional software development in several key ways. These are some specific Agile adaptations to consider:

- Iterative model training: AI models may require multiple iterations. Not every sprint will deliver a functional increment of the product; instead, use the sprint to focus on developing a better-performing version of the model.

- Data backlog: The product backlog in AI projects is largely influenced by data availability, quality, and relevance. Prioritizing data acquisition or cleaning can sometimes take precedence over feature development.

- Exploratory data analysis: Prior to model building, AI projects often require a deep dive into data. This helps in understanding distribution, potential outliers, and relationships between variables.

- Extended “definition of done”: The traditional “definition of done” in software projects might need to be extended to include criteria such as model accuracy, data validation, and bias checks for AI projects.

- Model versioning: Traditional software version control systems might not be sufficient for AI projects. Model versioning, which tracks changes to both code and data, is essential.

- Feedback loops: In addition to user feedback, model performance feedback loops are needed for developers to understand how models perform in real-world scenarios and to retrain them accordingly.

- Prototyping and experimentation: AI development often requires experimentation to identify the best models or approaches. Incorporate spikes focused solely on research and prototyping to help you test your assumptions and validate ideas.

These adaptations help Agile frameworks address the unique challenges posed by AI product development, balancing timely delivery with high standards of quality and ethics.
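To make the extended “definition of done” more concrete, here is a minimal sketch of how a team might encode it as an automated check at the end of a sprint. The `ModelReport` fields, the function name, and both thresholds are hypothetical values chosen for illustration; they are not taken from any of the projects described in this guide, and a real team would agree on its own criteria.

```python
from dataclasses import dataclass


@dataclass
class ModelReport:
    """Metrics gathered for one candidate model (hypothetical structure)."""
    accuracy: float        # share of correct predictions on held-out data
    invalid_rows: int      # rows that failed data validation
    group_accuracy: dict   # accuracy per user segment, used as a simple bias check


def definition_of_done(report, min_accuracy=0.90, max_group_gap=0.05):
    """Return the list of unmet criteria; an empty list means the work is done."""
    failures = []
    if report.accuracy < min_accuracy:
        failures.append("accuracy below threshold")
    if report.invalid_rows > 0:
        failures.append("data validation failed")
    # Assumed bias check: the best and worst served groups should stay close.
    scores = report.group_accuracy.values()
    if max(scores) - min(scores) > max_group_gap:
        failures.append("accuracy gap between groups too large")
    return failures


report = ModelReport(
    accuracy=0.93,
    invalid_rows=0,
    group_accuracy={"business": 0.95, "leisure": 0.88},
)
print(definition_of_done(report))  # ['accuracy gap between groups too large']
```

Returning the list of unmet criteria, rather than a single pass or fail flag, gives the team something specific to discuss in the sprint review.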

Now that you have an idea of how certain aspects of Agile may differ, let’s explore the AI project life cycle stages and take an in-depth look at what each one involves.

Business Understanding

A successful AI project life cycle begins with a thorough understanding of the business needs the project aims to address. The key here is to translate project requirements into clear and concise specifications that can guide the development of the AI system.

When I led the development of an AI revenue optimization tool for an airline, we started by identifying the business need: to increase revenue by adjusting pricing and availability based on real-time market demands. This led to the creation of precise specifications—the tool needed to collect data from revenue analysts and process it using AI algorithms to suggest the best pricing strategy. We refined the specifications by conducting workshops and interviews to get clarity on the role that revenue analysts perform and their pain points. This process uncovered insights that meant the solution would be both useful and usable.

The challenge lies in ensuring that the AI is utilized in an appropriate way. AI excels at tasks involving pattern recognition, data analysis, and prediction. As such, it was the perfect fit for some of our project use cases because it could quickly analyze vast amounts of market data, recognize trends, and make accurate pricing suggestions.

The following scenarios demonstrate how AI capabilities can be applied to best effect:

| AI Capabilities | Scenarios |
|---|---|
| Predictive analytics | Stock market trend forecasting based on historical data |
| Natural language processing | Use of voice assistants to understand human commands |
| Image recognition | Identity verification on security systems |
| Task automation | Payroll processing |
| Decision-making | Healthcare diagnoses based on patient records and medical test results |
| Personalization | Recommendation algorithms on streaming platforms |

Conversely, AI solutions would be less effective in areas requiring human judgment or creativity, such as interpreting ambiguous data or generating original ideas.

AI isn’t a magic wand that solves all issues; it’s a tool to be used judiciously. Knowing the strengths and limitations of AI helped us make strategic decisions about the tool’s development. By clearly delineating the areas in which AI could add value and those in which human input was crucial, we were able to design a tool that complemented the analysts’ work rather than trying to replace it.

Data Discovery

Appen’s 2022 State of AI and Machine Learning Report indicated that data management was the greatest hurdle for AI initiatives, with 41% of respondents reporting it to be the biggest bottleneck; hence, the importance of robust data discovery at the beginning of an AI project life cycle cannot be overstated. This was made clear to me during a SaaS project that aimed to assist account analysts in evaluating sales tax nexuses based on cross-state regulations.

A sales tax nexus revolves around the principle that a state can require a business to collect sales tax only if that business has a significant presence, or nexus, in that state. But each state defines the nexus criteria differently. Our obstacle wasn’t just myriad regulations, but the vast, unstructured data sets accompanying them. Every state provided data in varying formats with no universal standard—there were spreadsheets, PDFs, and even handwritten notes. We focused on understanding what data we had, where gaps existed, and how to bridge them following a simple five-step process:

- Data cataloging. Our team cataloged all available data sources for each state, documenting their format, relevance, and accessibility.

- Unification. We designed a framework to unify data inputs, which involved creating a consistent taxonomy for all data fields, even if they were named differently or were nested within diverse structures in their original sources.

- Gap identification. Gaps became more apparent after creating a consolidated data pool. For example, some states lacked information on certain thresholds, while others were missing notes about specific criteria.

- Data enrichment. Collaborating closely with domain experts, we explored ways to enrich our existing data. This meant sometimes extrapolating data from known values or even collaborating with state officials to gather missing pieces.

- Continuous review. Our team implemented a cyclical review process, so that as regulations evolved or states refined their definitions, our data sets adapted too.

The outcome was a highly effective tool that could provide real-time sales tax nexus calculations, allowing businesses to proactively manage their tax obligations and get a clear picture of their exposure. The SaaS platform increased the efficiency of the account analysts and brought a level of precision and speed to the process that was not possible before.

Before any AI or machine learning (ML) model can be effective, there’s often a mountain of data work needed. The work done during the data discovery phase ensures any AI-driven solution’s accuracy, reliability, and effectiveness.
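The unification and gap identification steps can be sketched in a few lines. This is an illustrative example only, not the framework built for the project: the field names, aliases, state labels, and threshold values below are all invented, and real nexus data would need far richer handling.

```python
# Hypothetical mapping from each source's field names to one shared taxonomy.
FIELD_ALIASES = {
    "sales_threshold": {"sales_threshold", "Revenue Limit", "annual_sales_min"},
    "transaction_threshold": {"transaction_threshold", "Txn Count", "min_transactions"},
}
REQUIRED_FIELDS = tuple(FIELD_ALIASES)


def unify(record):
    """Rename a source record's fields to the shared taxonomy."""
    unified = {}
    for canonical, aliases in FIELD_ALIASES.items():
        for name, value in record.items():
            if name in aliases:
                unified[canonical] = value
    return unified


def find_gaps(records_by_state):
    """Return, per state, the required fields that are missing or empty."""
    gaps = {}
    for state, record in records_by_state.items():
        unified = unify(record)
        missing = [field for field in REQUIRED_FIELDS if unified.get(field) is None]
        if missing:
            gaps[state] = missing
    return gaps


# Invented sample data: two sources that name the same concept differently.
raw = {
    "State A": {"Revenue Limit": 100_000, "Txn Count": 200},
    "State B": {"annual_sales_min": 500_000},
}
print(find_gaps(raw))  # {'State B': ['transaction_threshold']}
```

A gap report like this gives the team a concrete list to bring to domain experts during the data enrichment step.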

Model Building and Evaluation

Selecting an appropriate model is not a case of one-size-fits-all. The following factors should inform your decision-making process during the model evaluation phase:

- Accuracy: How well does the model do its job? It’s crucial to measure its predictive performance on data it has not seen before, to confirm that the model is effective in real-world scenarios.

- Interpretability: Especially in highly regulated sectors in which decisions may need explanations (such as finance or healthcare), it’s key that people can understand how the model arrives at its predictions.

- Computational cost: A model that takes a long time to produce results might not be viable. Balance computational efficiency with performance, particularly for real-time applications.
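Two of these factors, accuracy and computational cost, can be measured side by side for each candidate. The sketch below assumes a toy classification task and two hypothetical stand-in "models" that are plain Python functions; a real project would plug in trained models and a proper held-out data set.

```python
import time


def evaluate(predict, examples):
    """Return (accuracy, average seconds per prediction) for one candidate."""
    start = time.perf_counter()
    correct = sum(1 for features, label in examples if predict(features) == label)
    elapsed = time.perf_counter() - start
    return correct / len(examples), elapsed / len(examples)


# Two hypothetical candidates for a toy task: is the number at least 10?
def simple_rule(x):
    return x >= 10


def always_true(x):
    return True


# Held-out examples as (features, expected label) pairs.
examples = [(3, False), (8, False), (12, True), (25, True)]

for name, model in [("simple_rule", simple_rule), ("always_true", always_true)]:
    accuracy, latency = evaluate(model, examples)
    print(f"{name}: accuracy={accuracy:.2f}, latency={latency:.6f}s per prediction")
```

Interpretability has no single number attached to it, so it usually remains a judgment the team makes together with domain experts and compliance stakeholders.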

Once the model is constructed, the real test begins—gauging its efficacy. The evaluation stage is not just a checkpoint, as it can be in a typical software development process, but rather a cyclical process of testing, iteration, and refinement.

You may think that once a model is built and optimized, it remains a static piece of perfection. In reality, the efficacy of a model can be as dynamic as the world around us: Appen’s report indicated that 91% of organizations update their machine learning models at least quarterly.

Take an AI-driven tool we built for the hospitality sector, for example. Its goal was to optimize the use of amenities to increase profit margins. To do this, we used a machine learning model to analyze guest interactions and behaviors across various hotel amenities. Once launched, the system was adept at discerning patterns and projecting revenue based on space utilization. But as time went on, we noticed subtle discrepancies in its performance: The model, once accurate and insightful, began to falter in its predictions. This wasn’t due to any inherent flaw in the model itself, but rather a reflection of the ever-changing nature of the data it was using.

There are several inherent data challenges that make regular model evaluation necessary:

- Data drift: Just as rivers change course over millennia, the data fed into a model can also drift over time. For our hotel project, changes in guest demographics, new travel trends, or even the introduction of a popular nearby attraction could significantly alter guest behaviors. If the model is not recalibrated to this new data, its performance can wane.

- Concept drift: Sometimes, the very fundamentals of what the data represents can evolve. The concept of luxury is a good example. A decade ago, luxury in hotels might have meant opulent décor and private staff. Today, it could mean minimalist design and high-tech automation. If a model trained on older notions of luxury isn’t updated, it’s bound to misinterpret today’s guest expectations and behaviors.

- Training-serving skew: This happens when the data used to train the model differs from the data it encounters in real-world scenarios. Perhaps during training, our model saw more data from business travelers, but in its real-world application, it encountered more data from vacationing families. Such skews can lead to inaccurate predictions and recommendations.

Machine learning models aren’t artifacts set in stone but evolving entities. Regular monitoring, maintenance, and recalibration will help to ensure that the model remains relevant, accurate, and capable of delivering valuable insights.
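One common way to monitor for data drift or training-serving skew is to compare the distribution of a feature at training time with its distribution in production. The sketch below uses the Population Stability Index (PSI), which is a swapped-in technique for illustration and not necessarily what was used on the hotel project. The feature (nights stayed), the sample values, and the bin edges are invented.

```python
import math


def psi(expected, actual, bin_edges):
    """Population Stability Index between two samples of one numeric feature."""
    def proportions(values):
        counts = [0] * (len(bin_edges) + 1)
        for v in values:
            # The bin index is the number of edges at or below the value.
            idx = sum(1 for edge in bin_edges if v >= edge)
            counts[idx] += 1
        # A small floor avoids log(0) and division by zero for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


# Invented samples: nights stayed per guest at training time vs. in production.
training_nights = [1, 1, 2, 2, 3, 3, 4, 5]
serving_nights = [3, 4, 5, 5, 6, 7, 7, 8]
edges = [2, 4, 6]

score = psi(training_nights, serving_nights, edges)
print(f"PSI = {score:.2f}")
```

A frequently cited rule of thumb treats a PSI above roughly 0.25 as a significant shift worth investigating, though teams should calibrate their own thresholds. Note that the floor applied to empty bins inflates the score when a bin has no observations in one of the samples.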

Deployment

The deployment phase is the culmination of all the diligent work that goes into an AI project. It’s where the meticulously crafted model leaves the confines of development and begins solving tangible business challenges.

Successful deployment is not simply about introducing a new capability, but also about integrating it seamlessly into the existing ecosystem, providing value with minimal disruption to current operations.

Here are some effective rollout strategies I’ve witnessed and applied in my own AI project work:

- Phased rollout: Instead of a full-scale launch, introduce the product to a small cohort first. This allows for real-world testing while providing a safety net for unforeseen issues. As confidence in the product grows, it can be rolled out to larger groups incrementally.

- Feature flags: This strategy allows you to release a new feature but keep it hidden from users. You can then selectively enable it for specific users or groups, allowing for controlled testing and gradual release.

- Blue/green deployment: Here, two production environments are maintained. The “blue” environment runs the current application, while the “green” hosts the new version. Once testing in the green environment is successful, traffic is gradually shifted from blue to green, ensuring a smooth transition.
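A phased rollout and a feature flag can be combined in a small percentage-based check. The following is a minimal sketch under stated assumptions: the function name, the feature name, and the user IDs are hypothetical, and production systems typically rely on a dedicated feature flag service rather than hand-rolled code.

```python
import hashlib


def in_rollout(user_id, feature, percent):
    """Deterministically place a user inside or outside a rollout cohort."""
    digest = hashlib.sha256(f"{feature}:{user_id}".encode()).hexdigest()
    bucket = int(digest, 16) % 100  # stable bucket from 0 to 99
    return bucket < percent


# Hypothetical user IDs; widen the cohort as confidence in the model grows.
users = [f"user-{i}" for i in range(1000)]
for percent in (10, 50, 100):
    enabled = sum(in_rollout(u, "new-pricing-model", percent) for u in users)
    print(f"{percent}% rollout -> {enabled} of {len(users)} users enabled")
```

Because the bucket is derived from a hash of the feature name and user ID, each user gets a consistent experience between sessions, and anyone enabled at 10% stays enabled when the rollout widens to 50%.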

An additional hurdle is that people are inherently resistant to change, especially when it impacts their daily tasks and routines. This is where change management strategies come into play:

- Communication: From the inception of the project, keep stakeholders informed. Transparency about why changes are happening, the benefits they’ll bring, and how they will be implemented is key.

- Training: Offer training sessions, workshops, or tutorials. Equip your users with the knowledge and skills they need to navigate and leverage the new features.

- Feedback loops: Establish channels where users can voice concerns, provide feedback, or seek clarification. This not only aids in refining the product but also makes users feel valued and involved in the change process.

- Celebrate milestones: Recognize and celebrate the small wins along the way. This fosters a positive outlook toward change and builds momentum for the journey ahead.

While the technological facets of deployment are vital, the human side should not be overlooked. Marrying the two ensures not just a successful product launch but also a solution that truly adds business value.

Embarking on Your AI Journey

Navigating AI project management is challenging, but it also offers ample opportunities for growth, innovation, and meaningful impact. You’re not just managing projects; you’re facilitating change.

Apply the advice above as you begin to explore the vast new frontier of the AI project life cycle: Implement AI judiciously, align solutions with real needs, prioritize data quality, embrace continuous review, and roll out strategically. Harness the power of Agile—collaboration, flexibility, and adaptability are particularly vital when tackling such complex and intricate development. Remember, though, that AI is always evolving, so your project management approach should always be poised to evolve too.


Understanding the basics

What is the AI project life cycle?

The AI project life cycle encompasses the stages involved in developing AI-driven products and services. These stages have been adapted from the traditional project life cycle stages to ensure that the AI product or service is being fed accurate, organized data and uses a functional model.

How many stages are there in the AI project life cycle?

There are four stages in the AI project life cycle: business understanding, data discovery, model building and evaluation, and deployment. These stages may also incorporate iterative model training, exploratory data analysis, and prototyping and experimentation.

Santiago, Chile

Member since October 16, 2019
