Stork, Part 3: Implementing Expressions and Variables

Developing a new programming language from scratch tends to create unique challenges, followed by unconventional solutions that may go against some programming dogmas.

In Part 3 of our Stork series, Toptal Full-stack Developer Jakisa Tomic elaborates on how C++ macros can help and why expression implementation might not be as straightforward as expected.

Developing a new programming language from scratch tends to create unique challenges, followed by unconventional solutions that may go against some programming dogmas.

In Part 3 of our Stork series, Toptal Full-stack Developer Jakisa Tomic elaborates on how C++ macros can help and why expression implementation might not be as straightforward as expected.

Jakisa has 15+ years of experience developing various apps on multiple platforms. Most of his technical expertise is in C++ development.

Expertise

PREVIOUSLY AT

In Part 3 of our series, our lightweight programming language will finally run. It will not be Turing-complete, it will not be powerful, but it will be able to evaluate expressions and even call external functions written in C++.

I will try to describe the process in as much detail as possible, mainly because it is the purpose of this blog series, but also for my own documentation because, in this part, things got a little complicated.

I started coding for this part before the publication of the second article, but then it turned out that the expression parser should be a standalone component that deserves its own blog post.

That, along with some infamous programming techniques, made it possible for this part not to be monstrously big, and yet, some readers will most likely point at the said programming techniques and wonder why I had to use them.

Why Do We Use Macros?

As I gained programming experience working on different projects and with different people, I learned that developers tend to be quite dogmatic—probably because it is easier that way.

The first dogma of programming is that the goto statement is bad, evil, and horrible. I can understand where that sentiment originates, and I agree with that notion in the vast majority of cases when someone uses the goto statement. It can usually be avoided, and more readable code could be written instead.

However, one cannot deny that breaking from the inner loop in C++ can be easily accomplished with the goto statement. The alternative—which requires a bool variable or a dedicated function—could be less readable than the code that dogmatically falls into the bucket of prohibited programming techniques.

The second dogma, relevant exclusively to C and C++ developers, is that macros are bad, evil, awful, and basically, a disaster waiting to happen. This is almost always accompanied by this example:

#define max(a, b) ((a) > (b) ? (a) : (b))

...

int x = 3;

int z = 2;

int y = max(x++, z);

And then there is a question: What is the value of x after this piece of code, and the answer is 5 because x is incremented twice, one on each side of the ?-operator.

The only problem is that nobody uses macros in this scenario. Macros are evil if they are used in a scenario where ordinary functions work fine, especially if they pretend to be functions, so the user is unaware of their side effects. However, we will not use them as functions, and we will use block letters for their names to make it obvious that they are not functions. We will not be able to properly debug them, and that’s bad, but we will live with that, since the alternative is copy-pasting the same code dozens of times, which is much more error-prone than macros. One solution to that problem is writing the code generator, but why should we write it when we already have one embedded in C++?

Dogmas in programming are almost always bad. I am cautiously using “almost” here just to avoid recursively falling into the dogma trap that I’ve just set up.

You can find the code and all the macros for this part here.

Variables

In the previous part, I mentioned that Stork will not be compiled into binary or anything similar to assembly language, but I also said that it will be a statically typed language. Therefore, it will be compiled, but in a C++ object that will be able to execute. It will become clearer later but for now, let’s just state that all variables will be objects on their own.

Since we want to keep them in the global variable container or on the stack, one convenient approach is to define the base class and inherit from it.

class variable;

using variable_ptr = std::shared_ptr<variable>;

class variable: public std::enable_shared_from_this<variable> {

private:

variable(const variable&) = delete;

void operator=(const variable&) = delete;

protected:

variable() = default;

public:

virtual ~variable() = default;

virtual variable_ptr clone() const = 0;

template <typename T>

T static_pointer_downcast() {

return std::static_pointer_cast<

variable_impl<typename T::element_type::value_type>

>(shared_from_this());

}

};

As you can see, it is fairly simple, and the function clone, which does the deep copy, is its only virtual member function apart from the destructor.

As we will always use objects of this class via shared_ptr, it makes sense to inherit it from std::enable_shared_from_this, so that we can easily get the shared pointer from it. The function static_pointer_downcast is here for convenience because we will frequently have to downcast from this class to its implementation.

The real implementation of this class is variable_impl, parametrized with the type that it holds. It will be instantiated for the four types that we will use:

using number = double;

using string = std::shared_ptr<std::string>;

using array = std::deque<variable_ptr>;

using function = std::function<void(runtime_context&)>;

We will use double as our number type. Strings are reference-counted, as they will be immutable, to enable certain optimizations when passing them by value. Array will be std::deque, as it is stable, and let’s just note that runtime_context is the class that holds all relevant information about program memory during the runtime. We will get to that later.

The following definitions are frequently used as well:

using lvalue = variable_ptr;

using lnumber = std::shared_ptr<variable_impl<number>>;

using lstring = std::shared_ptr<variable_impl<string>>;

using larray = std::shared_ptr<variable_impl<array>>;

using lfunction = std::shared_ptr<variable_impl<function>>;

The “l” used here is shortened for “lvalue.” Whenever we have an lvalue for some type, we will use the shared pointer to variable_impl.

Runtime Context

During runtime, the memory state is kept in the class runtime_context.

class runtime_context{

private:

std::vector<variable_ptr> _globals;

std::deque<variable_ptr> _stack;

std::stack<size_t> _retval_idx;

public:

runtime_context(size_t globals);

variable_ptr& global(int idx);

variable_ptr& retval();

variable_ptr& local(int idx);

void push(variable_ptr v);

void end_scope(size_t scope_vars);

void call();

variable_ptr end_function(size_t params);

};

It is initialized with the count of global variables.

-

_globalskeeps all global variables. They are accessed with the member functionglobalwith the absolute index. -

_stackkeeps local variables and function arguments, and the integer at the top of_retval_idxkeeps the absolute index in_stackof the current return value. -

Return value is accessed with the function

retval, while local variables and function arguments are accessed with the functionlocalby passing the index relative to the current return value. Function arguments have negative indices in this case. - The

pushfunction adds the variable to the stack, whileend_scoperemoves the passed number of variables from the stack. - The

callfunction will resize the stack by one and push the index of the last element in_stackto_retval_idx. -

end_functionremoves the return value and the passed number of arguments from the stack and also returns the removed return value.

As you can see, we will not implement any low-level memory management and we will take advantage of the native (C++) memory management, which we can take for granted. We will not implement any heap allocations, either, at least for now.

With runtime_context, we finally have all building blocks needed for the central and most difficult component of this part.

Expressions

To fully explain the complicated solution that I will present here, I will briefly introduce you to a couple of failed attempts I made before settling on this approach.

The easiest approach is to evaluate every expression as variable_ptr and have this virtual base class:

class expression {

...

public:

variable_ptr evaluate(runtime_context& context) const = 0;

lnumber evaluate_lnumber(runtime_context& context) const {

return evaluate(context)->static_pointer_downcast<lnumber>();

}

lstring evaluate_lstring(runtime_context& context) const {

return evaluate(context)->static_pointer_downcast<lstring>();

}

number evaluate_number(runtime_context& context) const {

return evaluate_lnumber(context)->value;

}

string evaluate_string(runtime_context& context) const {

return evaluate_lstring(context)->value;

}

...

};

using expression_ptr = std::unique_ptr<expression>;

We would then inherit from this class for each operation, such as addition, concatenation, function call, etc. For example, this would be the addition expression implementation:

class add_expression: public expression {

private:

expression_ptr _expr1;

expression_ptr _expr2;

public:

...

variable_ptr evaluate(runtime_context& context) const override{

return std::make_shared<variable_impl<number> >(

_expr1->evaluate_number(context) +

_expr2->evaluate_number(context)

);

}

...

};

So we need to evaluate both sides (_expr1 and _expr2), add them, and then construct variable_impl<number>.

We can safely downcast variables because we checked their type during the compile time, so that’s not the issue here. However, the big issue is the performance penalty that we pay for the heap allocation of the returning object, which—in theory—is not needed. We are doing that to satisfy the virtual function declaration. In the first version of Stork, we will have that penalty when we return numbers from functions. I can live with that but not with the simple pre-increment expression doing heap allocation.

Then, I tried with type-specific expressions inherited from the common base:

class expression {

...

public:

virtual void evaluate(runtime_context& context) const = 0;

...

};

class lvalue_expression: public virtual expression {

...

public:

virtual lvalue evaluate_lvalue(runtime_context& context) const = 0;

void evaluate(runtime_context& context) const override {

evaluate_lvalue(context);

}

...

};

using lvalue_expression_ptr = std::unique_ptr<lvalue_expression>;

class number_expression: public virtual expression {

...

public:

virtual number evaluate_number(runtime_context& context) const = 0;

void evaluate(runtime_context& context) const override {

evaluate_number(context);

}

...

};

using number_expression_ptr = std::unique_ptr<number_expression>;

class lnumber_expression: public lvalue_expression, public number_expression {

...

public:

virtual lnumber evaluate_lnumber(runtime_context& context) const = 0;

lvalue evaluate_lvalue(runtime_context& context) const override {

return evaluate_lnumber(context);

}

number evaluate_number(runtime_context& context) const override {

return evaluate_lnumber(context)->value;

}

void evaluate(runtime_context& context) const override {

return evaluate_lnumber(context);

}

...

};

using lnumber_expression_ptr = std::unique_ptr<lnumber_expression>;

This is just the part of the hierarchy (only for numbers), and we already ran into problems of diamond shape (the class inheriting two classes with the same base class).

Luckily, C++ offers virtual inheritance, which gives the ability to inherit from the base class, by keeping the pointer to it, in the inherited class. Therefore, if classes B and C inherit virtually from A, and class D inherits from B and C, there would be only one copy of A in D.

There are a number of penalties that we have to pay in that case, though—performance and the inability to downcast from A, to name a few—but this still looked like an opportunity for me to use the virtual inheritance for the first time in my life.

Now, the implementation of the addition expression will look more natural:

class add_expression: public number_expression {

private:

number_expression_ptr _expr1;

number_expression_ptr _expr2;

public:

...

number evaluate_number(runtime_context& context) const override{

return _expr1->evaluate_number(context) +

_expr2->evaluate_number(context);

}

...

};

Syntax-wise, there is nothing more to ask for, and this is as natural as it gets. However, if any of the inner expressions is an lvalue number expression, it will require two virtual function calls to evaluate it. Not perfect, but not terrible, either.

Let’s add strings into this mix and see where it gets us:

class string_expression: public virtual expression {

...

public:

virtual string evaluate_string(runtime_context& context) const = 0;

void evaluate(runtime_context& context) const override {

evaluate_string(context);

}

...

};

using string_expression_ptr = std::unique_ptr<string_expression>;

Since we want the numbers to be convertible to strings, we need to inherit number_expression from string_expression.

class number_expression: public string_expression {

...

public:

virtual number evaluate_number(runtime_context& context) const = 0;

string evaluate_string(runtime_context& context) const override {

return tostring(evaluate_number(context));

}

void evaluate(runtime_context& context) const override {

evaluate_number(context);

}

...

};

using number_expression_ptr = std::unique_ptr<number_expression>;

We survived that, but we have to re-override the evaluate virtual method, or we will face serious performance issues due to unnecessary conversion from number to string.

So, things are evidently getting ugly, and our design is barely surviving them because we don’t have two types of expressions that should be converted one to another (both ways). If that was the case, or if we tried to have any kind of circular conversion, our hierarchy couldn’t handle it. After all, hierarchy should reflect is-a relationship, not is-convertible-to relationship, which is weaker.

All these unsuccessful attempts led me to a complicated yet - in my opinion - proper design. First, having a single base class is not crucial for us. We need the expression class that would evaluate to void, but if we can distinguish between void expressions and expressions of another kind in compile time, there is no need to convert between them in a runtime. Therefore, we will parametrize the base class with the return type of the expression.

Here is the full implementation of that class:

template <typename R>

class expression {

expression(const expression&) = delete;

void operator=(const expression&) = delete;

protected:

expression() = default;

public:

using ptr = std::unique_ptr<const expression>;

virtual R evaluate(runtime_context& context) const = 0;

virtual ~expression() = default;

};

We will have only one virtual function call per expression evaluation (of course, we will have to call it recursively), and since we don’t compile to binary code, it is quite a good result. The only thing left to do is the conversion between types, when it is allowed.

To accomplish that, we will parametrize each expression with the return type and inherit it from the corresponding base class. Then, in the evaluate function, we will convert the evaluation result to the return value of that function.

For example, this is our addition expression:

template <typename R>

class add_expression: public expression<R> {

...

R evaluate(runtime_context& context) const override{

return convert<R>(

_expr1->evaluate(context) +

_expr2->evaluate(context)

);

}

...

};

To write the “convert” function, we need some infrastructure:

template<class V, typename T>

struct is_boxed {

static const bool value = false;

};

template<typename T>

struct is_boxed<std::shared_ptr<variable_impl<T> >, T> {

static const bool value = true;

};

string convert_to_string(number n) {

std::string str

if (n == int(n)) {

str = std::to_string(int(n));

} else {

str = std::to_string(n);

}

return std::make_shared<std::string>(std::move(str));

}

string convert_to_string(const lnumber& v) {

return convert_to_string(v->value);

}

The structure is_boxed is a type trait that has an inner constant, value, that evaluates to true if (and only if) the first parameter is a shared pointer to variable_impl parametrized with the second type.

The implementation of the convert function would be possible even in older versions of C++, but there is a very useful statement in C++17 called if constexpr, which evaluates the condition in the compile time. If it evaluates to false, it will drop the block altogether, even if it causes the compile time error. Otherwise, it will drop the else block.

template<typename To, typename From>

auto convert(From&& from) {

if constexpr(std::is_convertible<From, To>::value) {

return std::forward<From>(from);

} else if constexpr(is_boxed<From, To>::value) {

return unbox(std::forward<From>(from));

} else if constexpr(std::is_same<To, string>::value) {

return convert_to_string(from);

} else {

static_assert(std::is_void<To>::value);

}

}

Try to read this function:

- Convert if it is convertible in C++ (this is for

variable_implpointer upcast). - Unbox if it is boxed.

- Convert to string if the target type is string.

- Do nothing and check if the target is void.

In my opinion, this is far more readable than the older syntax based on SFINAE.

I will provide a brief overview of expression types and omit some technical details in order to keep it reasonably brief.

There are three types of leaf expressions in an expression tree:

- Global variable expression

- Local variable expression

- Constant expression

template<typename R, typename T>

class global_variable_expression: public expression<R> {

private:

int _idx;

public:

global_variable_expression(int idx) :

_idx(idx)

{

}

R evaluate(runtime_context& context) const override {

return convert<R>(

context.global(_idx)

->template static_pointer_downcast<T>()

);

}

};

Apart from the return type, it is also parametrized with the variable type. Local variables are treated similarly, and this is the class for constants:

template<typename R, typename T>

class constant_expression: public expression<R> {

private:

T _c;

public:

constant_expression(T c) :

_c(std::move(c))

{

}

R evaluate(runtime_context& context) const override {

return convert<R>(_c);

}

};

In this case, we convert the constant immediately in the constructor.

This is used as the base class for most of our expressions:

template<class O, typename R, typename... Ts>

class generic_expression: public expression<R> {

private:

std::tuple<typename expression<Ts>::ptr...> _exprs;

template<typename... Exprs>

R evaluate_tuple(

runtime_context& context,

const Exprs&... exprs

) const {

return convert<R>(O()(

std::move(exprs->evaluate(context))...)

);

}

public:

generic_expression(typename expression<Ts>::ptr... exprs) :

_exprs(std::move(exprs)...)

{

}

R evaluate(runtime_context& context) const override {

return std::apply(

[&](const auto&... exprs){

return this->evaluate_tuple(context, exprs...);

},

_exprs

);

}

};

The first argument is the functor type that will be instantiated and called for the evaluation. The rest of the types are return types of child expressions.

In order to reduce boilerplate code, we define three macros:

#define UNARY_EXPRESSION(name, code)\

struct name##_op {\

template <typename T1> \

auto operator()(T1 t1) {\

code;\

}\

};\

template<typename R, typename T1>\

using name##_expression = generic_expression<name##_op, R, T1>;

#define BINARY_EXPRESSION(name, code)\

struct name##_op {\

template <typename T1, typename T2>\

auto operator()(T1 t1, T2 t2) {\

code;\

}\

};\

template<typename R, typename T1, typename T2>\

using name##_expression = generic_expression<name##_op, R, T1, T2>;

#define TERNARY_EXPRESSION(name, code)\

struct name##_op {\

template <typename T1, typename T2, typename T3>\

auto operator()(T1 t1, T2 t2, T3 t3) {\

code;\

}\

};\

template<typename R, typename T1, typename T2, typename T3>\

using name##_expression = generic_expression<name##_op, R, T1, T2, T3>;

Notice that operator() is defined as a template, although it usually doesn’t have to be. It is easier to define all expressions the same way instead of supplying argument types as macro arguments.

Now, we can define the majority of the expressions. For example, this is the definition for /=:

BINARY_EXPRESSION(div_assign,

t1->value /= t2;

return t1;

);

We can define almost all of the expressions by using these macros. The exceptions are operators that have defined order of evaluation of arguments (logical && and ||, ternary (?) and comma (,) operator), array index, function call, and param_expression, which clones the parameter to pass it to the function by value.

There is nothing complicated in the implementation of these. Function call implementation is the most complex, so I will explain it here:

template<typename R, typename T>

class call_expression: public expression<R>{

private:

expression<function>::ptr _fexpr;

std::vector<expression<lvalue>::ptr> _exprs;

public:

call_expression(

expression<function>::ptr fexpr,

std::vector<expression<lvalue>::ptr> exprs

):

_fexpr(std::move(fexpr)),

_exprs(std::move(exprs))

{

}

R evaluate(runtime_context& context) const override {

std::vector<variable_ptr> params;

params.reserve(_exprs.size());

for (size_t i = 0; i < _exprs.size(); ++i) {

params.push_back(_exprs[i]->evaluate(context));

}

function f = _fexpr->evaluate(context);

for (size_t i = params.size(); i > 0; --i) {

context.push(std::move(params[i-1]));

}

context.call();

f(context);

if constexpr (std::is_same<R, void>::value) {

context.end_function(_exprs.size());

} else {

return convert<R>(

context.end_function(

_exprs.size()

)->template static_pointer_downcast<T>()

);

}

}

};

It prepares the runtime_context by pushing all evaluated arguments on its stack and calling the call function. It then calls the evaluated first argument (which is the function itself) and returns the return value of the end_function method. We can see the usage of the if constexpr syntax here as well. It saves us from writing the specialization for the whole class for functions that return void.

Now, we have everything related to expressions available during the runtime. The only thing left is the conversion from the parsed expression tree (described in the previous blog post) to the tree of expressions.

Expression Builder

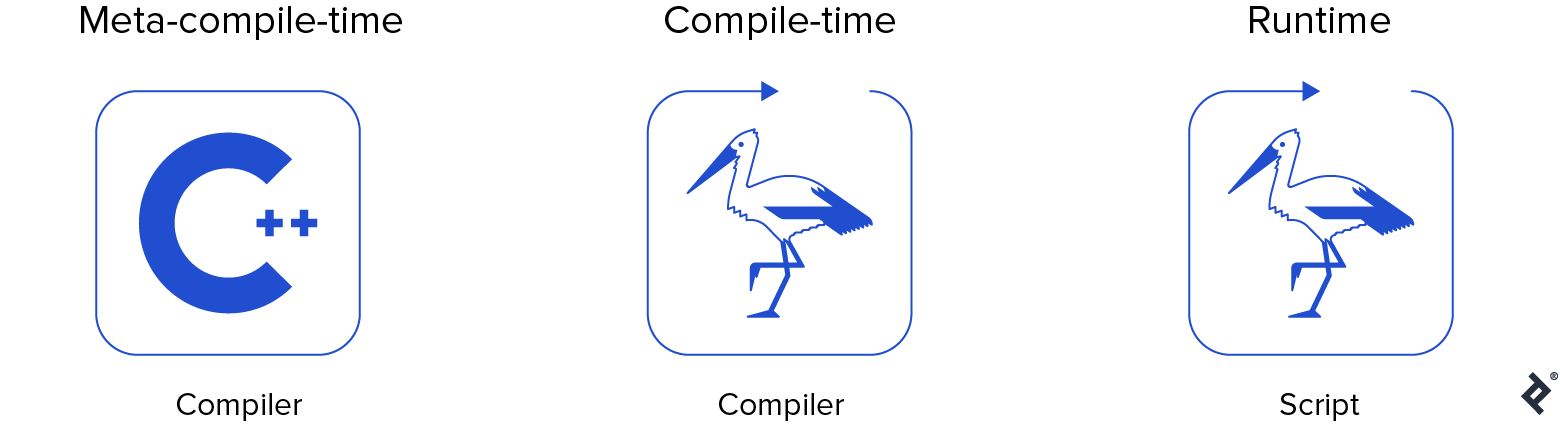

To avoid confusion, let’s name different phases of our language development cycle:

- Meta-compile-time: the phase when C++ compiler runs

- Compile-time: the phase when Stork compiler runs

- Runtime: the phase when Stork script runs

Here is the pseudo-code for the expression builder:

function build_expression(nodeptr n, compiler_context context) {

if (n is constant) {

return constant_expression(n.value);

} else if (n is identifier) {

id_info info = context.find(n.value);

if (context.is_global(info)) {

return global_variable_expression(info.index);

} else {

return local_variable_expression(info.index);

}

} else { //operation

switch (n->value) {

case preinc:

return preinc_expression(

build_expression(n->child[0])

);

...

case add:

return add_expression(

build_expression(n->child[0]),

build_expression(n->child[1])

);

...

case call:

return call_expression(

n->child[0], //function

n->child[1], //arg0

...

n->child[k+1], //argk

);

}

}

}

Apart from having to handle all operations, this seems like a straightforward algorithm.

If it worked, it would be great, but it doesn’t. For starters, we need to specify the return type of the function, and it is obviously not fixed here, because the return type depends on the type of node that we are visiting. Node types are known in compile-time, but return types should be known in meta-compile-time.

In the previous post, I mentioned that I don’t see the advantage of languages that do dynamic type checking. In such languages, the pseudo-code shown above could be implemented almost literally. Now, I am quite aware of the advantages of dynamic-type languages. Instant karma at its finest.

Luckily, we know the type of the top-level expression - it depends on the context of the compilation, but we know its type without parsing the expression tree. For example, if we have the for-loop:

for (expression1; expression2; expression3)

...

The first and the third expressions have a void return type because we don’t do anything with their evaluation result. The second expression, however, has a type number because we are comparing it to zero, in order to decide whether or not to stop the loop.

If we know the type of the expression that is related to the node operation, it will usually determine the type of its child expression.

For example, if the expression (expression1) += (expression2) has the type lnumber, that means that expression1 has that type as well, and expression2 has the type number.

However, the expression (expression1) < (expression2) always has the type number, but their child expressions can have type number or type string. In case of this expression, we will check if both nodes are numbers. If so, we will build expression1 and expression2 as expression<number>. Otherwise, they will be of the type expression<string>.

There is another problem we have to take into account and deal with.

Imagine if we need to build an expression of the type number. Then, we cannot return anything valid if we run into a concatenation operator. We know that it cannot happen, as we already checked the types when we built the expression tree (in the previous part), but that means that we cannot write the template function, parametrized with the return type, because it will have invalid branches depending on that return type.

One approach would split the function by return type, using if constexpr, but it is inefficient because if the same operation exists in multiple branches, we will have to repeat its code. We could write separate functions in that case.

The implemented solution splits the function based on the node type. In each of the branches, we will check if that branch type is convertible to the function return type. If it is not, we will throw the compiler error, because it should never happen, but the code is too complicated for such a strong claim. I may have made an error.

We are using the following self-explanatory, type-trait structure to check the convertibility:

template<typename From, typename To>

struct is_convertible {

static const bool value =

std::is_convertible<From, To>::value ||

is_boxed<From, To>::value ||

(

std::is_same<To, string>::value &&

(

std::is_same<From, number>::value ||

std::is_same<From, lnumber>::value

)

);

};

After that split, the code is almost straightforward. We can semantically cast from the original expression type to the one that we want to build, and there are no errors in meta-compile-time.

There is a lot of boilerplate code, however, so I relied heavily on macros in order to reduce it.

template<typename R>

class expression_builder{

private:

using expression_ptr = typename expression<R>::ptr;

static expression_ptr build_void_expression(

const node_ptr& np,

compiler_context& context

);

static expression_ptr build_number_expression(

const node_ptr& np,

compiler_context& context

);

static expression_ptr build_lnumber_expression(

const node_ptr& np,

compiler_context& context

);

static expression_ptr build_string_expression(

const node_ptr& np,

compiler_context& context

);

static expression_ptr build_lstring_expression(

const node_ptr& np,

compiler_context& context

);

static expression_ptr build_array_expression(

const node_ptr& np,

compiler_context& context

);

static expression_ptr build_larray_expression(

const node_ptr& np,

compiler_context& context

);

static expression_ptr build_function_expression(

const node_ptr& np,

compiler_context& context

);

static expression_ptr build_lfunction_expression(

const node_ptr& np,

compiler_context& context

);

public:

static expression_ptr build_expression(

const node_ptr& np,

compiler_context& context

) {

return std::visit(overloaded{

[&](simple_type st){

switch (st) {

case simple_type::number:

if (np->is_lvalue()) {

RETURN_EXPRESSION_OF_TYPE(lnumber);

} else {

RETURN_EXPRESSION_OF_TYPE(number);

}

case simple_type::string:

if (np->is_lvalue()) {

RETURN_EXPRESSION_OF_TYPE(lstring);

} else {

RETURN_EXPRESSION_OF_TYPE(string);

}

case simple_type::nothing:

RETURN_EXPRESSION_OF_TYPE(void);

}

},

[&](const function_type& ft) {

if (np->is_lvalue()) {

RETURN_EXPRESSION_OF_TYPE(lfunction);

} else {

RETURN_EXPRESSION_OF_TYPE(function);

}

},

[&](const array_type& at) {

if (np->is_lvalue()) {

RETURN_EXPRESSION_OF_TYPE(larray);

} else {

RETURN_EXPRESSION_OF_TYPE(array);

}

}

}, *np->get_type_id());

}

};

The function build_expression is the only public function here. It invokes the function std::visit on the node type. That function applies the passed functor on the variant, decoupling it in the process. You can read more about it and about the functor overloaded here.

The macro RETURN_EXPRESSION_OF_TYPE calls private functions for expression building and throws an exception if the conversion is not possible:

#define RETURN_EXPRESSION_OF_TYPE(T)\

if constexpr(is_convertible<T, R>::value) {\

return build_##T##_expression(np, context);\

} else {\

throw expression_builder_error();\

return expression_ptr();\

}

We have to return the empty pointer in the else-branch, as the compiler cannot know the function return type in case of impossible conversion; otherwise, std::visit requires all overloaded functions to have the same return type.

There is, for example, the function that builds expressions with string as a return type:

static expression_ptr build_string_expression(

const node_ptr& np, compiler_context& context

) {

if (std::holds_alternative<std::string>(np->get_value())) {

return std::make_unique<constant_expression<R, string>>(

std::make_shared<std::string>(

std::get<std::string>(np->get_value())

)

);

}

CHECK_IDENTIFIER(lstring);

switch (std::get<node_operation>(np->get_value())) {

CHECK_BINARY_OPERATION(concat, string, string);

CHECK_BINARY_OPERATION(comma, void, string);

CHECK_TERNARY_OPERATION(ternary, number, string, string);

CHECK_INDEX_OPERATION(lstring);

CHECK_CALL_OPERATION(lstring);

default:

throw expression_builder_error();

}

}

It checks if the node holds string constant and builds constant_expression if that’s the case.

Then, it checks if the node holds an identifier and returns global or local variable expression of lstring type in that case. It can hold an identifier if we implement constant variables. Otherwise, it assumes that the node holds the node operation and tries all of the operations that can return string.

Here are the implementations of the CHECK_IDENTIFIER and CHECK_BINARY_OPERATION macros:

#define CHECK_IDENTIFIER(T1)\

if (std::holds_alternative<identifier>(np->get_value())) {\

const identifier& id = std::get<identifier>(np->get_value());\

const identifier_info* info = context.find(id.name);\

if (info->is_global()) {\

return std::make_unique<\

global_variable_expression<R, T1>\

>(info->index());\

} else {\

return std::make_unique<\

local_variable_expression<R, T1>\

>(info->index());\

}\

}

#define CHECK_BINARY_OPERATION(name, T1, T2)\

case node_operation::name:\

return expression_ptr(\

std::make_unique<name##_expression<R, T1, T2> > (\

expression_builder<T1>::build_expression(\

np->get_children()[0], context\

),\

expression_builder<T2>::build_expression(\

np->get_children()[1], context\

)\

)\

);

The CHECK_IDENTIFIER macro has to consult compiler_context to build a global or a local variable expression with the proper index. That’s the only usage of the compiler_context in this structure.

You can see that CHECK_BINARY_OPERATION recursively calls build_expression for the child nodes.

Wrapping Up

At my GitHub page, you can get the full source code, compile it, and then type in expressions and see the result of evaluated variables.

I imagine that, in all branches of human creativity, there is a moment when the author realizes that their product is alive, in some sense. In the construction of a programming language, it is the moment when you can see that the language “breathes.”

In the next and final part of this series, we will implement the rest of the minimal set of language features to see it running live.

Understanding the basics

What is a macro and why is it useful?

Macro is a rule that explains how an input can be mapped to the output or to certain actions. It can be used to automate some common work.

What are macros in C++?

Macros in C++ are named function-like mappings that describe how their input parameters are translated into C++ code.

What does it take to be Turing-complete?

A Turing machine is an abstract machine that can do everything that a modern computer can. A programming language is Turing-complete if it can emulate the Turing machine.

Are GOTO statements bad?

GOTO statements are considered bad because they abruptly break the normal program flow, making the code less readable.

Why do we use a GOTO statement?

There are certain rare situations when a GOTO statement can make the code more readable: for example, breaking from two or more nested loops.

Jakiša Tomić

Zagreb, Croatia

Member since November 13, 2019

About the author

Jakisa has 15+ years of experience developing various apps on multiple platforms. Most of his technical expertise is in C++ development.

Expertise

PREVIOUSLY AT