Applications of Statistics for Measuring Company Growth

Eyeballing the top-line figures of company growth is unlikely to uncover anything particularly radical. To understand the trends that lead to user growth, retention, and/or engagement, statistical tools should be employed to test the underlying drivers behind performance.

Erik’s a VC GP who has invested into over 50 tech companies and realized notable exits, two of which were sales to Box and Twilio.

Executive Summary

Once you have obtained your top-line growth metrics, the analysis can really begin.

- Many factors, both internal and external, affect a company's top-line figures, such as revenue and user growth.

- The key is to isolate the effects of deliberate actions, such as marketing and PR, so you understand how effective they were and can plan future efforts accordingly.

- Tools commonly applied in financial markets can easily be applied to conventional business practices.

Figure out what aspects of growth you want to measure, then create a benchmark.

- Business growth strategies have three variables that can be deconstructed and measured: top-line user growth, retention, and engagement.

- A simple regression model, most commonly estimated via Ordinary Least Squares (OLS), can then establish the benchmark "normal" growth experienced by the business. The same model can test a range of internal and external forces for their influence on growth performance.

- Don't treat each growth strategy as an isolated event: a series of events may perform differently at each point in time, but their combined impact may be significant.

Start building an analysis process: Weed out red herrings and look towards continual improvement via machine learning.

- If a significant but one-off event (like a C-suite resignation) occurs, mark the data point as a confounded unrelated event, with an indicator variable. These events in themselves can also be tested over time for their isolated effects.

- Take into account the non-linearity of certain events occurring at the same time: their combined positive or negative effect may differ from the sum of their effects in isolation. We see this in public markets, where companies can "take a bath" by releasing a deluge of negative news all at once, leading to an initial "fixed" hit from the fact of bad news, with smaller marginal effects thereafter.

- Also start building a process for your growth metrics—as you garner more data over time, the accuracy of the insights will increase.

- Look at automating data feeds into the process and harvesting data from other areas of the organization (for example, link to GitHub to test for the effect of software updates). Over time, applying iterative machine learning principles to your growth measurement will only make it more valuable for understanding your company's progress.

In part as a follow-up to my previous article on how to identify the drivers of growth in businesses, I now want to go further down the rabbit hole and look into how you can then measure the impact of growth initiatives. I will provide some tools for assessing the impact of actions such as product updates, PR, and marketing campaigns on customer growth, retention metrics, and engagement. This represents reflections from my previous work as a statistician, helping companies to assess the impact on their valuation of internal and external events via the reactions of their traded securities.

I believe that statistical impact tools, more commonplace in the hedge fund and Wall Street world, could be of far more use to technology companies in managing growth than they currently are. Because technology now makes a range of high-frequency information on user or client behavior available, a skilled statistician or data analyst can be a real asset within commercial teams.

There Are Many Ways to Measure the Impact of Growth

As an example of measuring statistical impact on valuation, let’s assume that a publicly-traded company announces a new product and wishes to know the extent to which it impacted its valuation. Estimating the real impact requires accounting for:

- How the market itself performed that day, in the context of the security’s correlation with it.

- The effect of any other company-relevant information released at the same time.

- The simple fact that securities prices and user behavior move on a daily basis from general variance, even in the absence of new information.

- Longer-term impact, in terms of a statistically significant trend in price increase.

For a private company, the same analysis can be done on the change in active users or clients, in both the short and long term, which serves as the counterpart to stock price activity. This also applies to retention and depth-of-engagement metrics.

Establishing this rounded form of analysis allows companies to direct their limited resources based on far stronger information signals, rather than being led astray by what might appear to be a market or user reaction that in fact represents nothing more than random fluctuation. The initial work to set up the statistical model that separates the signal from the noise can yield tremendous dividends via the insights that it brings to a company’s growth efforts. It is also an iterative process that can easily (and often automatically) be updated and refined as new data is received.

Selecting the Target Metric to Test

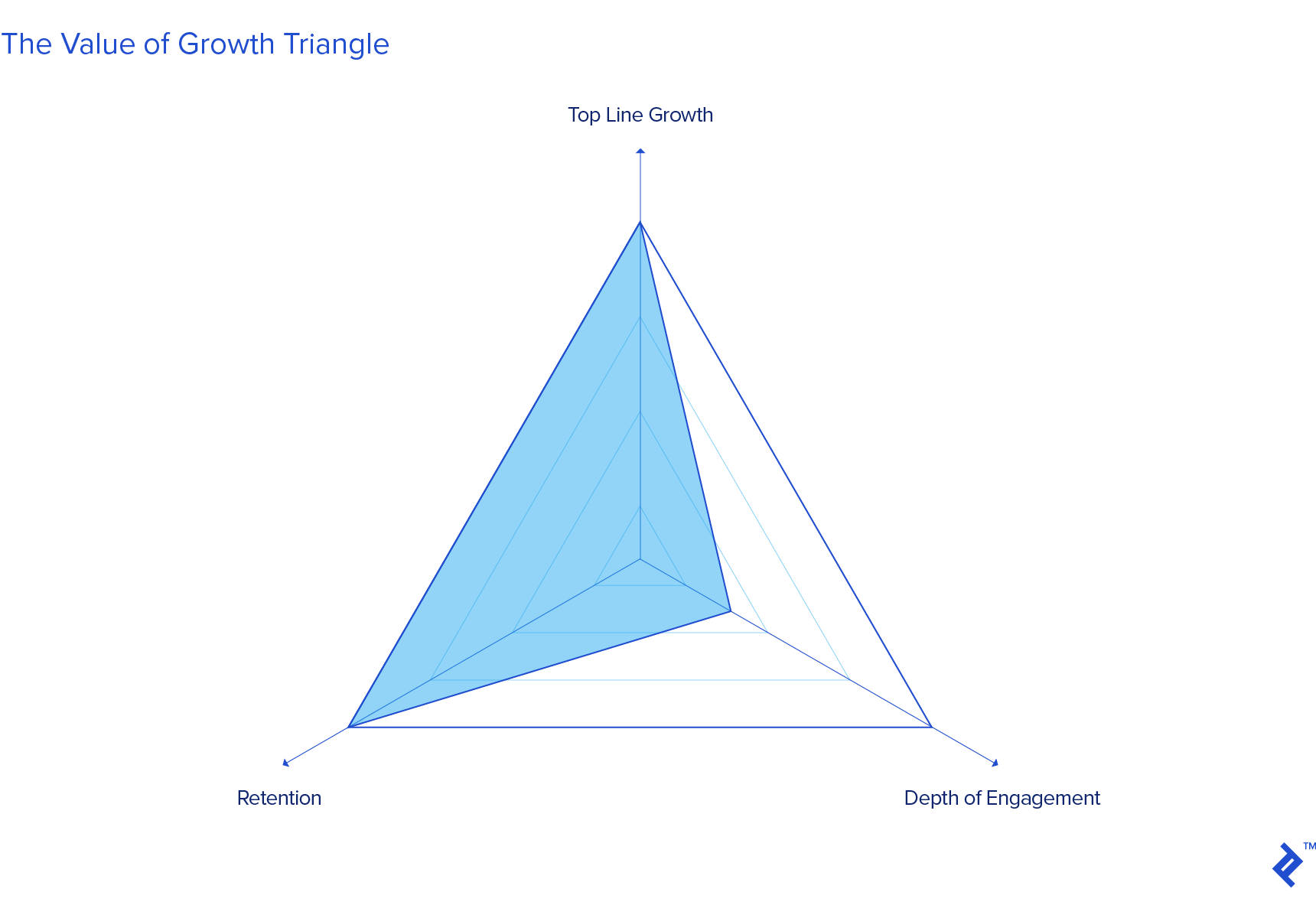

Any measuring effort by a company should target at least one of the following dimensions of growth:

- Top-line growth, defined as the change in total sales or active users/clients over time.

- Retention of users and clients, defined as the average lifetime of any given user or client.

- The depth of engagement of users and clients, defined as either the frequency of the core action taken or volume of transacting via the platform.

All three dimensions are quantifiable, and the company can conceptualize its value as the area of the triangle formed by these three points. If one collapses, then the value potential from the other two is severely constrained. While I certainly agree with many founders and investors that “a few users who love you is better than many who like you,” I do not believe that this contradicts the importance of top-line growth in addition to strong engagement and retention. The trajectory matters much more than the level, and beginning with a smaller group of truly dedicated users best sets the initial conditions for long-term growth in the first place.

The key task for the company is then to establish the analytical framework that allows it to measure the true effects of its actions on one or more of those three key metrics. The company may either test a separate model for each or use tools such as simultaneous equations to link them more directly. Marketing and PR efforts, in my experience, particularly suffer from a lack of rigorous analysis of whether the company is receiving a return on its investment. Certain metrics, such as total views, clicks, and shares, are almost always recorded, but these are all means to an end, and the next question, their effect on customer conversion and engagement, is rarely given serious analysis.

Choosing the Benchmark and Example of a One-time Event

We begin with the simplified case of a one-time event. Let’s assume a company releases a new product update or publishes a big PR story on Day 0 and wishes to know whether it represents a move in the right direction in terms of its effect on growth. Determining whether the company has received a real signal that it should continue with similar efforts requires knowing how much growth actually increased versus how much it would have increased absent the event in question.

The benchmark growth can be estimated via a regression model that predicts the company’s growth, retention, or engagement based on external and internal variables. In certain cases, the ability to isolate those users that are affected by a product update allows for direct A/B testing with a control group. This is not the case, however, for larger-scale product, PR, and business efforts that affect all current and potential users somewhat uniformly. While there are some excellent resources available for such testing, many early-stage companies can find them expensive.

Variables that can be considered for this model include:

- Sector trends

- Target customer trends

- The S&P 500 plus additional sector-relevant sub-indices

- Macro variables such as interest rates and exchange rates

- Internal drivers such as referral rates

- Seasonality/cyclicality

All variables should be specified as a rate of change rather than absolute level, using logarithms rather than percentages.
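A minimal sketch of that conversion, assuming the series is a plain Python list of levels (the `dau` figures are hypothetical). It also illustrates why logarithms are preferred: log changes add up across periods, while percentage changes do not.

```python
import math

def log_changes(levels):
    """Convert a series of levels into period-over-period log rates of change."""
    return [math.log(curr / prev) for prev, curr in zip(levels, levels[1:])]

def pct_changes(levels):
    """Simple percentage changes, shown only for comparison."""
    return [curr / prev - 1 for prev, curr in zip(levels, levels[1:])]

# Hypothetical daily active users: up 10%, then back to the starting level
dau = [1000, 1100, 1000]

logs = log_changes(dau)
pcts = pct_changes(dau)
print([round(c, 4) for c in logs])                  # [0.0953, -0.0953]
print(round(sum(pcts), 4))                          # 0.0091, although users ended where they started
print(math.isclose(sum(logs), 0.0, abs_tol=1e-12))  # True: log changes net out
```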

The time frame for each variable likewise needs to be carefully considered. Some variables are leading (the stock market for example is heavily based on expectations), while others such as user satisfaction ratings are based on past experience but certainly may bear relevance for expected growth.
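One simple way to handle timing is to pair each period's growth with a driver's value from some number of periods earlier. The helper below, `align_with_lag`, is hypothetical, and the right lag for each variable is an analyst's judgment (one option is to try several lags and compare model fit).

```python
def align_with_lag(target, driver, lag):
    """Pair target[t] with driver[t - lag], so the driver can lead the target by `lag` periods.

    Assumes both series have the same length and cover the same periods.
    """
    if lag < 0:
        raise ValueError("lag must be non-negative")
    return list(zip(target[lag:], driver[:len(driver) - lag]))

# Hypothetical weekly series: user growth and a market index that may lead it by one week
user_growth = [0.010, 0.012, 0.008, 0.015]
market_change = [0.004, -0.002, 0.006, 0.001]

print(align_with_lag(user_growth, market_change, lag=1))
# [(0.012, 0.004), (0.008, -0.002), (0.015, 0.006)]
```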

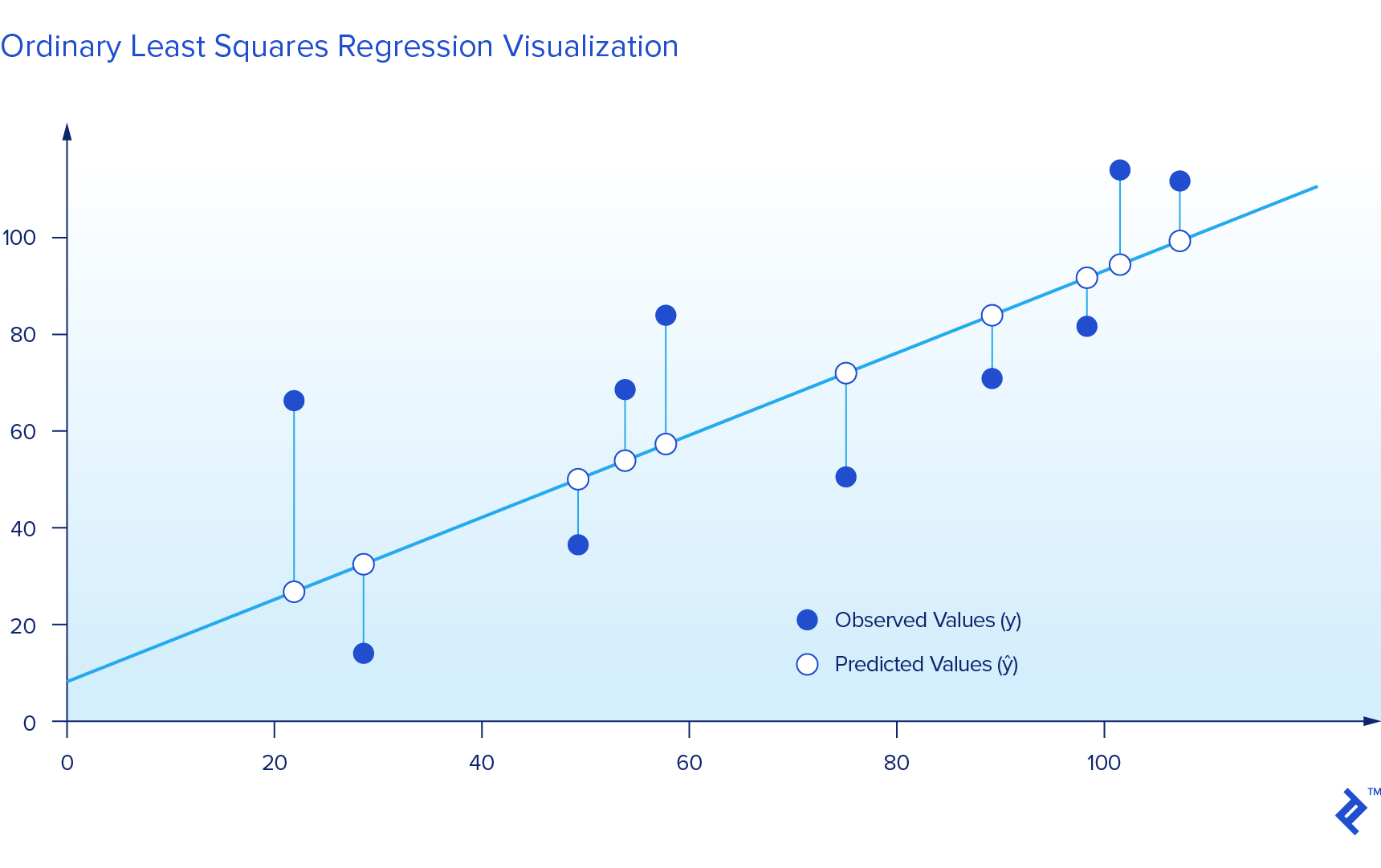

For the regression itself, I recommend beginning with Ordinary Least Squares (OLS) and then only moving on to other functional forms for specific reasons. OLS is versatile and likewise allows for more direct interpretation of the results than other more complex forms. Modifications in the context of OLS would include a logarithmic regression for nonlinear variables, interaction variables (for example, perhaps current customer satisfaction and social media activity), and squaring variables that you believe have disproportionate effects at larger values. Since growth is hopefully exponential, logarithmic regressions could certainly prove a strong fit.
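To make these modifications concrete, here is a dependency-free sketch of OLS with an interaction term and a squared term. The `ols` helper solves the normal equations directly, which is adequate for a handful of variables; a statistics package would additionally report standard errors. The driver names and coefficients are hypothetical, and the growth data are generated without noise so the fit can be checked against the known values.

```python
def ols(X, y):
    """Least-squares coefficients from the normal equations (X'X) b = X'y, via Gauss-Jordan elimination."""
    k = len(X[0])
    # Augmented matrix [X'X | X'y]
    A = [[sum(row[i] * row[j] for row in X) for j in range(k)]
         + [sum(row[i] * yi for row, yi in zip(X, y))] for i in range(k)]
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))  # partial pivoting
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(k):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * p for a, p in zip(A[r], A[col])]
    return [A[i][k] / A[i][i] for i in range(k)]

def design_row(sat, social):
    """Intercept, two drivers, their interaction, and a squared term for disproportionate effects."""
    return [1.0, sat, social, sat * social, sat ** 2]

# Hypothetical drivers: change in customer satisfaction and in social media activity
grid = [(s, m) for s in (-0.2, -0.1, 0.0, 0.1, 0.2) for m in (-0.1, 0.0, 0.1, 0.3)]
true_coefs = [0.01, 0.5, 0.2, 0.3, -0.1]

X = [design_row(s, m) for s, m in grid]
# Noise-free growth built from known coefficients, so the fit can be checked
y = [sum(b * x for b, x in zip(true_coefs, row)) for row in X]

coefs = ols(X, y)
print([round(b, 3) for b in coefs])  # [0.01, 0.5, 0.2, 0.3, -0.1]
```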

Regarding the time horizon of impact of the action, be sure to consider your users’ frequency of actions or purchases to help you determine the proper interval over which to look for the impact. When using timeframes longer than one day, remember that weekly active users is not the sum of the daily active users that week. If I actively use your product every day that week, then I would be counted each day for a daily analysis. If you then change to a weekly analysis, I should only show up once and hence summing the individual days would over-count.
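The over-counting can be seen with a toy activity log. The log below is hypothetical: one user is active every day of the week, another on two days, and a third on one day.

```python
from collections import defaultdict

# Hypothetical activity log of (day_index, user_id) pairs for one week (days 0-6)
activity = [(day, "ann") for day in range(7)] + [(2, "bob"), (5, "bob"), (6, "cat")]

daily_users = defaultdict(set)
for day, user in activity:
    daily_users[day].add(user)

sum_of_dau = sum(len(users) for users in daily_users.values())
weekly_users = {user for day, user in activity if day // 7 == 0}  # week 0 = days 0-6

print(sum_of_dau, len(weekly_users))  # 10 3
```

Counting distinct users per week, rather than summing daily counts, gives the correct weekly active user figure.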

This model then allows you to estimate expected growth, retention, or engagement for any given moment or ongoing time period based on the performance of these explanatory variables. The difference between this expected growth and the actual growth observed after the event is the abnormal portion that may indicate impact. Dividing this abnormal growth by the standard deviation of the model's prediction errors (its residuals over the estimation period) then indicates how likely the abnormal component was to occur by chance. Typically, an absolute value above 1.96 (approximately two standard deviations from the predicted value, corresponding to 95% confidence) is used as the cut-off for deeming that it did not occur by chance.

In the context of cohorts, retention and engagement can either be considered in terms of change across successive cohorts (in other words, holding the values fixed for each cohort), or the change over time of total retention and engagement, without breaking it down by cohort.
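The two views can be sketched with a few hypothetical monthly cohorts, where retention means "still active 30 days after signup" (an assumed definition for illustration).

```python
# Hypothetical cohorts: signup month -> (users who signed up, users still active 30 days later)
cohorts = {"Jan": (200, 120), "Feb": (250, 160), "Mar": (300, 210)}

# View 1: hold retention fixed per cohort and compare successive cohorts
by_cohort = {month: kept / signed for month, (signed, kept) in cohorts.items()}
months = list(by_cohort)
cohort_changes = {b: round(by_cohort[b] - by_cohort[a], 4) for a, b in zip(months, months[1:])}

# View 2: total retention across all users; tracking this figure over time ignores cohort mix
total_retention = sum(k for _, k in cohorts.values()) / sum(s for s, _ in cohorts.values())

print(by_cohort)                  # {'Jan': 0.6, 'Feb': 0.64, 'Mar': 0.7}
print(cohort_changes)             # {'Feb': 0.04, 'Mar': 0.06}
print(round(total_retention, 4))  # 0.6533
```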

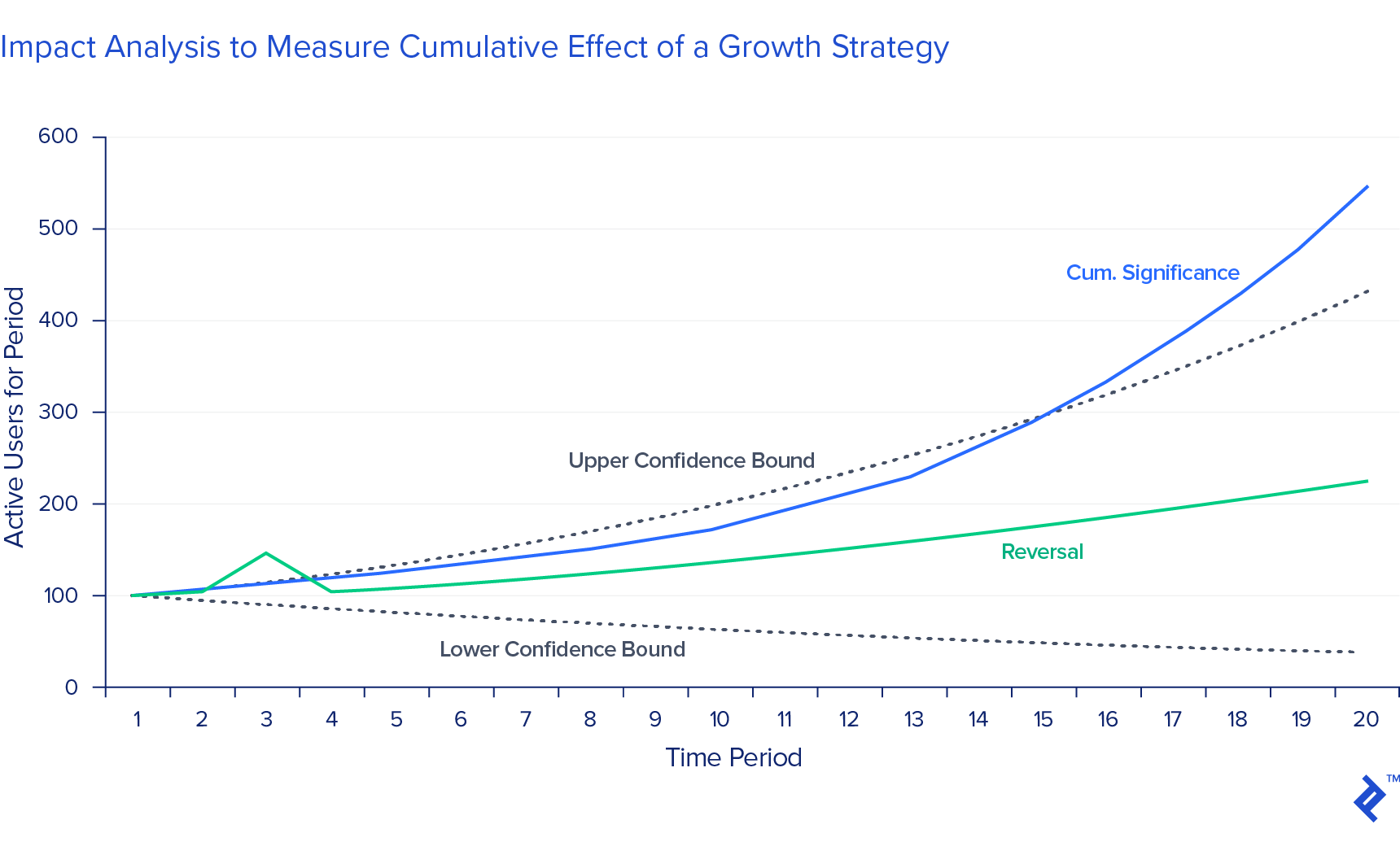

Cumulative Growth Impact from a Series of Events

Growth strategies often make a point of deploying a series of events rather than one-time efforts, both for the more immediate impact of having multiple efforts and the underlying impact of showing customers the pattern itself. Impact analysis can therefore look at cumulative impact as well. A series of events that are individually insignificant can result in a significant cumulative impact, and conversely a series of significant events can net out into insignificance.

The first situation can be thought of as “slow and steady wins the race.” Let’s say your sales grow a fraction of a percent per week faster than your relevant sector. Over a short period, this would mean nothing, as any given company’s growth will differ slightly from the benchmark by chance. If your slight over-performance continues for long enough, however, you can eventually state with confidence that the company’s growth rate truly does exceed the market’s.
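Under the simplifying assumptions of a constant weekly edge and independent weeks, the cumulative excess over n weeks has mean n·μ and standard deviation σ·√n, so its z-score is √n·μ/σ and grows with time. The sketch below (with a hypothetical helper and made-up numbers) finds how many weeks that takes.

```python
import math

def weeks_until_significant(mean_excess, sd_excess, critical=1.96):
    """Smallest n for which z = sqrt(n) * mean_excess / sd_excess exceeds `critical`.

    Assumes a constant weekly edge and independent, equally noisy weeks.
    """
    if mean_excess <= 0:
        raise ValueError("mean_excess must be positive")
    n = 1
    while math.sqrt(n) * mean_excess / sd_excess <= critical:
        n += 1
    return n

# Hypothetical: growth beats the sector by 0.2% per week, with 1% weekly noise around that edge
print(weeks_until_significant(0.002, 0.01))  # 97
# Halving the edge roughly quadruples the wait
print(weeks_until_significant(0.001, 0.01))  # 385
```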

The second situation is essentially any kind of reversal. The increasingly high-frequency means by which people can react to developments before truly processing the information, as well as short-term herd mentality, make it challenging to gauge the true magnitude and duration of a reaction through the more immediate noise. Under certain circumstances (new technologies, currency markets, and often bad news that does not represent a serious threat to a company), users and markets may systematically over-react in the short term but later correct themselves.

The two situations can be illustrated as follows. The confidence interval indicates the bounds within which we can expect 95% of observations to fall, which is typically used as the threshold for deeming something statistically significant.

The absence of a significant reversal can be taken as evidence of lasting impact. One must be cautious with this logic as it runs contrary to the normal rule of empirical skepticism that absence of evidence is not evidence of absence, but it is the best we can do.

Be careful when comparing percent/logarithmic changes over individual time periods. A decrease of 99% followed by an increase of 99% does not net out to zero: 100 falls to 1 and then rises only to 1.99, a cumulative decline of about 98%. Be sure to consider the cumulative change in the end.
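The arithmetic, worked through in a few lines (the starting level of 100 is arbitrary):

```python
import math

start = 100.0
after_drop = start * (1 - 0.99)       # a 99% decrease leaves 1.0
after_rise = after_drop * (1 + 0.99)  # a 99% increase from there gives 1.99

naive_sum = -0.99 + 0.99                              # 0.0, wrongly suggesting no change
cumulative = after_rise / start - 1                   # the true cumulative change
log_total = math.log(1 - 0.99) + math.log(1 + 0.99)   # log changes add up correctly

print(naive_sum, round(cumulative, 4), round(math.exp(log_total) - 1, 4))  # 0.0 -0.9801 -0.9801
```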

If you are measuring the cumulative impact of a series of events such as a specific PR campaign within a limited period of time (e.g., a holiday season), then you may wish to track the growth over all calendar days or weeks included in the timeframe, whether or not each one had a specific action taken. You are still essentially hoping that the 1-2-3 punch yields a knockout within a specific period even if there might be slight delays between hits.

If the events in question are further apart but you still wish to assess cumulative impact, you may consider fitting them together into a single continuous series of days and then running the same analysis. In doing so, you are essentially saying “Day 1 is January 5, Day 2 is March 15, Day 3 is April 10…” and testing their cumulative change against that predicted by the benchmark as if they were in fact consecutive dates. Testing significance then uses the same formula as for singular events, except that the standard deviation is multiplied by the square root of the number of days/weeks that form the cumulative period.
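A sketch of the cumulative test, assuming the abnormal growth on each stacked day has already been computed against the benchmark and that days are independent with a common residual standard deviation. The numbers are hypothetical; they show three individually insignificant days adding up to a significant total.

```python
import math

def cumulative_z(abnormal, sigma):
    """Sum abnormal growth over N periods and scale the per-period residual sd by sqrt(N)."""
    return sum(abnormal) / (sigma * math.sqrt(len(abnormal)))

# Hypothetical abnormal log growth on three event days, stacked as Day 1, Day 2, Day 3
abnormal = [0.004, 0.006, 0.005]
sigma = 0.004  # per-day residual standard deviation from the benchmark model

print([round(a / sigma, 2) for a in abnormal])  # [1.0, 1.5, 1.25]: none exceeds 1.96 alone
print(round(cumulative_z(abnormal, sigma), 2))  # 2.17: jointly significant
```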

Dealing with Contaminated Information When Measuring Company Growth

The world rarely affords us the courtesy of perfect laboratory conditions to test out our ideas, so once the core model is established, it will most likely need to control for other information that affects the expected growth rate at the same time as the actions we’re seeking to measure.

Let’s assume that at the same time as a PR event or product update, a top executive unfortunately decides to leave for a competitor amidst much fanfare from the press, and you become concerned that some users might take this as a signal of the relative merits of the two products. One quick, if unsatisfying, solution is simply to mark the data point as confounded by an unrelated event, using an indicator variable.
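A sketch of the indicator-variable approach, reusing a small normal-equations `ols` helper (hypothetical, dependency-free). The flag is 1 only on the day of the departure announcement, so its coefficient absorbs that day entirely and keeps it from distorting the benchmark, at the cost of not being able to attribute that day's abnormal growth to anything. The data are hypothetical and noise-free so the recovered values can be checked.

```python
def ols(X, y):
    """Least-squares coefficients from the normal equations (X'X) b = X'y, via Gauss-Jordan elimination."""
    k = len(X[0])
    A = [[sum(row[i] * row[j] for row in X) for j in range(k)]
         + [sum(row[i] * yi for row, yi in zip(X, y))] for i in range(k)]
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))  # partial pivoting
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(k):
            if r != col:
                factor = A[r][col] / A[col][col]
                A[r] = [a - factor * p for a, p in zip(A[r], A[col])]
    return [A[i][k] / A[i][i] for i in range(k)]

# Hypothetical daily log growth of the sector, plus a flag for the departure announcement day
sector = [0.004, -0.002, 0.006, 0.001, -0.003, 0.005, 0.002]
departure = [0, 0, 0, 1, 0, 0, 0]
# Our users' growth: sector-driven, with an extra hit on the flagged day (noise-free for illustration)
ours = [0.001 + 0.9 * s - 0.02 * d for s, d in zip(sector, departure)]

X = [[1.0, s, d] for s, d in zip(sector, departure)]
intercept, beta_sector, departure_effect = ols(X, ours)
print(round(beta_sector, 3), round(departure_effect, 3))  # 0.9 -0.02
```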

However, if you can obtain data on previous instances of the “confounding” event, then you can conduct a cross-sectional analysis that allows you to predict how much of an impact that particular event tends to have in similar circumstances, and you can remove that expected impact from the final results. In the above example, data on user activity surrounding high-profile team member departures in other companies would allow you to estimate and separate out the effect of that particular factor in order to isolate the effect of the PR event or product update that you hoped to evaluate.
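In its simplest form, this means averaging the abnormal growth seen around comparable departures elsewhere and subtracting that expected effect from the confounded day. The figures below are hypothetical, and a plain average is the most basic version; a fuller cross-sectional regression could condition on characteristics such as company size or the amount of press coverage.

```python
import statistics

# Hypothetical abnormal user growth observed around high-profile departures at comparable companies
past_departure_effects = [-0.012, -0.008, -0.015, -0.009]
expected_departure_effect = statistics.mean(past_departure_effects)

# Our abnormal growth on Day 0, when the PR event and the departure coincided
abnormal_day0 = 0.004

# Remove the expected departure effect to isolate the PR event
pr_effect = abnormal_day0 - expected_departure_effect
print(round(expected_departure_effect, 4), round(pr_effect, 4))  # -0.011 0.015
```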

Many companies also may face seasonality based on time of year or certain key moments such as holidays. Assign indicator variables to the time of year in question to control for this.
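A sketch of seasonal indicator columns for a regression row, using a hypothetical helper. One month is dropped as the baseline because a full set of twelve month indicators would be perfectly collinear with the intercept; a separate flag marks holiday periods.

```python
def seasonal_dummies(month, holiday_week, baseline_month=1):
    """Indicator variables for calendar month (dropping a baseline month) plus a holiday flag."""
    months = [1 if month == m else 0 for m in range(1, 13) if m != baseline_month]
    return months + [1 if holiday_week else 0]

print(seasonal_dummies(12, holiday_week=True))
# [0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 1, 1]
```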

The Non-Linearities of Certain Impacts

As you consider the results of your analysis and strategies for growth efforts, certain nonlinear effects in how people have been documented to react to positive and negative developments are worth bearing in mind.

Up- versus down-sensitivity can be very different. Data and time permitting, consider estimating the expected effects of both positive and negative events, if both are relevant to you. Unfortunately, downward movements in many cases ranging from user behavior to the financial markets can be far more abrupt and severe than upward movements.

The combined effect of performing multiple actions at once may not equal that of performing them in sequence because the very fact of the ongoing pattern can itself have positive or negative effect. The pattern of a company releasing a product update every month can instill confidence in users while announcing negative events such as layoffs or write-downs more than once can have a vastly disproportionate effect by causing the worry that the company doesn’t fully understand its own situation. Publicly-traded companies will often “take a bath” and release all bad news at once, as there can tend to be an initial “fixed” hit from the fact of bad news itself, with a marginal subsequent effect. The “Torpedo Effect,” for example, describes the empirical phenomenon that the mere presence of bad news can account for a meaningful portion of a price drop. Negative drops can therefore be broken down into an initial fixed effect that gives way to a diminishing marginal effect from the actual content of the news or development. PR campaigns work better as a sequence than one singular mega-event, as the goal is to position the company over time.

Variance can of course only be measured historically, but certain events might change the underlying true variance and probability that the abnormal growth happened by chance. As the new variance is itself the result of the event in question, the prior variance should be used in order to avoid the circular reasoning of dismissing the significance of the event based on the larger variance that comes with it. As always however, there is debate and each situation may be different.

As previously mentioned, growth or a slowdown in growth can both beget similar effects for a while, due to both human psychology and very real market structures. While there are various fancy autocorrelation tests available for measuring momentum effects, I find the more “manual” approach of regressing the growth series on a lagged version of itself to be more transparent and easier to experiment with.

Concluding Thoughts on Approaching Machine Learning in Business

Once the model that allows for such testing has been developed, there is no reason why the company’s platforms for tracking user behavior, sales, etc. cannot be directly linked to its code to continuously update the coefficients as new data is received. My personal preference has always been to have a rolling one-year estimation period when possible, in that it balances the size of the dataset with the higher value of more recent information and also naturally includes all times of the year in case of seasonality.

Assuming no structural breaks in the nature of the business and product, there is no reason not to extend the estimation period beyond one year, but young, rapidly growing companies tend to evolve quickly. Software-driven companies could link directly to their GitHub to create the process by which software updates are automatically tested for impact. By creating this direct link and allowing the functions to evolve automatically, you have taken the first step toward deploying machine learning for your company.

It is often pointed out that information is the world’s most valuable commodity, but it is less often mentioned that data is not information. On the contrary, companies are overwhelmed with so much data that may seem to tell competing narratives, many of which can be just spurious patterns based on randomness. Statistics at its best is a process of reduction: of rapidly homing in on the key variables and relationships and deploying them for practical testing. The spirit of this form of analysis, above all, is to imbue the decision-making process with healthy skepticism by forcing the data to prove itself as real information before you base a decision on it.

Understanding the basics

How growth is measured.

Growth is measured across three variables: top-line growth (change in total sales or active users/clients over time), retention (average lifetime of any given user or client), and depth of engagement (frequency of the core action taken or volume of transacting via the platform).

Why customer retention is so important.

Retaining customers both increases the reliability of revenue and can spill over into new user growth. Happy customers are retained customers, and they are more likely to evangelize the product/service to new prospects.

Erik Stettler

New York, NY, United States

Member since November 21, 2017
