Billing Extraction: A Tale of GraphQL Internal API Optimization

Extracting functional components from a monolithic app into a service can be a daunting task but choosing the right tools and techniques for the job can expedite the process.

In this article, Toptal Back-end Engineer Maciek Rzasa recounts how his team extracted billing functionality from the Toptal platform and how they overcame a series of performance issues.

An engineer, Scrum Master, and knowledge-sharing advocate, Maciek is interested in distributed systems, text processing, and writing software that matters.

One of the main priorities for the Toptal engineering team is migration toward a service-based architecture. A crucial element of the initiative was Billing Extraction, a project in which we isolated billing functionality from the Toptal platform to deploy it as a separate service.

Over the past few months, we extracted the first part of the functionality. To integrate billing with other services, we used both an asynchronous API (Kafka-based) and a synchronous API (HTTP-based).

This article is a record of our efforts toward optimizing and stabilizing the synchronous API.

Incremental Approach

This was the first stage of our initiative. On our journey to full billing extraction, we strive to work incrementally, delivering small and safe changes to production. (See slides from an excellent talk about another aspect of this project: incremental extraction of an engine from a Rails app.)

The starting point was the Toptal platform, a monolithic Ruby on Rails application. We started by identifying the seams between billing and the Toptal platform at the data level. The first approach was to replace Active Record (AR) relations with regular method calls. Next, we needed to implement a REST call to the billing service fetching data returned by the method.

We deployed a small billing service accessing the same database as the platform. We were able to query billing either using HTTP API or with direct calls to the database. This approach allowed us to implement a safe fallback; in case the HTTP request failed for any reason (incorrect implementation, performance issue, deployment problems), we used a direct call and returned the correct result to the caller.
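The fallback described above can be sketched roughly as follows. This is an illustration only, not the code we run: the `BillingClient` class and the two callables passed to it are hypothetical names introduced for the example.

```ruby
require "logger"

# Hypothetical client: tries the billing HTTP API first and falls back to a
# direct database read when the remote call fails for any reason.
class BillingClient
  def initialize(http_fetcher:, direct_fetcher:, logger: Logger.new($stderr))
    @http_fetcher = http_fetcher     # callable performing the HTTP request
    @direct_fetcher = direct_fetcher # callable querying the shared database
    @logger = logger
  end

  def billing_records(product_gid)
    @http_fetcher.call(product_gid)
  rescue StandardError => e
    # The failure is logged for later analysis but hidden from the caller.
    @logger.warn("billing HTTP call failed: #{e.class}: #{e.message}")
    @direct_fetcher.call(product_gid)
  end
end

# Usage with stand-in callables: the HTTP call fails, the direct call answers.
failing_http = ->(_gid) { raise IOError, "502 Bad Gateway" }
direct_call = ->(gid) { [{ product_gid: gid }] }
client = BillingClient.new(http_fetcher: failing_http, direct_fetcher: direct_call)
client.billing_records("gid://platform/Product/1")
```

Rescuing `StandardError` is deliberately broad here, because the fallback is meant to cover implementation bugs as well as network and deployment failures.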

To make the transitions safe and seamless, we used a feature flag to switch between HTTP and direct calls. Unfortunately, the first attempt implemented with REST proved to be unacceptably slow. Simply replacing AR relations with remote requests caused crashes when HTTP was enabled. Even though we enabled it only for a relatively small percentage of calls, the problem persisted.

We knew we needed a radically different approach.

The Billing Internal API (aka B2B)

We decided to replace REST with GraphQL (GQL) to get more flexibility on the client side. We wanted to make data-driven decisions during this transition to be able to predict outcomes this time.

To do that, we instrumented every request from the Toptal platform (monolith) to billing and logged detailed information: response time, parameters, errors, and even the stack trace of each call (to understand which parts of the platform use billing). This allowed us to detect hotspots: places in the code that send many requests or cause slow responses. Then, with the stack trace and parameters, we could reproduce issues locally and have a short feedback loop for many fixes.
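A minimal sketch of this kind of instrumentation is shown below. The module name, the log format, and the `sink` parameter are assumptions made for the example; the real logging went through the platform's own tooling.

```ruby
require "json"

# Hypothetical wrapper: records duration, parameters, error, and the calling
# code location for every request sent to billing.
module BillingInstrumentation
  def self.measure(method_name, params, sink: $stdout)
    started = Process.clock_gettime(Process::CLOCK_MONOTONIC)
    error = nil
    yield
  rescue StandardError => e
    error = e
    raise # the caller still sees the original exception
  ensure
    finished = Process.clock_gettime(Process::CLOCK_MONOTONIC)
    sink.puts(JSON.generate(
      method: method_name,
      params: params,
      duration_ms: ((finished - started) * 1000).round(1),
      error: error && "#{error.class}: #{error.message}",
      # The first few caller frames show which platform code uses billing.
      stack: caller(1, 5)
    ))
  end
end

# Usage: wrap the remote call; the block's value is returned unchanged.
BillingInstrumentation.measure("billing_records", { product_gids: ["gid-1"] }) do
  [] # the real HTTP call to billing would go here
end
```

One JSON line per request keeps the log easy to aggregate by method, by caller, or by response time when looking for hotspots.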

To avoid nasty surprises on production, we added another level of feature flags. We had one flag per method in the API to move from REST to GraphQL. We were enabling HTTP gradually and watching if “something bad” appeared in the logs.
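One way to implement per-method flags with a gradual rollout is sketched below. The `BillingFlags` class and the percentage-based bucketing are assumptions for illustration; they show the idea rather than the feature-flag tooling we actually used.

```ruby
require "zlib"

# Hypothetical flag store: one rollout percentage per billing API method.
class BillingFlags
  def initialize(percentages)
    @percentages = percentages # e.g. { billing_records: 10 } enables ~10% of calls
  end

  # Deterministic bucketing: the same key always lands in the same bucket,
  # so raising the percentage only ever adds callers to the HTTP path.
  def http_enabled?(method_name, key)
    percentage = @percentages.fetch(method_name, 0) # unknown methods stay off
    bucket = Zlib.crc32("#{method_name}:#{key}") % 100
    bucket < percentage
  end
end

# Usage: decide per call which path to take.
flags = BillingFlags.new({ billing_records: 10 })
if flags.http_enabled?(:billing_records, "gid://platform/Product/42")
  # call billing over HTTP
else
  # use the direct database call
end
```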

In most cases, “something bad” was either a long (multi-second) response time, 429 Too Many Requests, or 502 Bad Gateway. We employed several patterns to fix these problems: preloading and caching data, limiting data fetched from the server, adding jitter, and rate-limiting.

Preloading and Caching

The first issue we noticed was a flood of requests sent from a single class/view, similar to the N+1 problem in SQL.

Active Record preloading didn’t work over the service border and, as a result, we had a single page sending ~1,000 requests to billing with every reload. A thousand requests from a single page! The situation in some background jobs wasn’t much better. We preferred to make dozens of requests rather than thousands.

One of the background jobs was fetching job data (let’s call this model Product) and checking if a product should be marked as inactive based on billing data (for this example, we’ll call the model BillingRecord). Even though products were fetched in batches, the billing data was requested every time it was needed. Every product needed billing records, so processing every single product caused a request to the billing service to fetch them. That meant one request per product and resulted in about 1,000 requests sent from a single job execution.

To fix that, we added batch preloading of billing records. For every batch of products fetched from the database, we requested billing records once and then assigned them to respective products:

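    # Fetch all required billing records and assign them to their respective products.
    def cache_billing_records(products)
      # One request returns the billing records for the whole batch of products.
      billing_records = Billing::QueryService
        .billing_records_for_products(*products)
      # Group the records by product GID for fast lookup.
      indexed_records = billing_records.group_by(&:product_gid)
      products.each do |product|
        # Products without billing records get an empty array (nil.to_a is []).
        product.cache_billing_records!(indexed_records[product.gid].to_a)
      end
    end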

With batches of 100 and a single request to the billing service per batch, we went from ~1,000 requests per job to ~10.

Client-side Joins

Batching requests and caching billing records worked well when we had a collection of products and we needed their billing records. But what about the other way around: if we fetched billing records and then tried to use their respective products, fetched from the platform database?

As expected, this caused another N+1 problem, this time on the platform side. When we accessed the product of each of N billing records, we performed N database queries.

The solution was to fetch all needed products at once, store them as a hash indexed by ID, and then assign them to their respective billing records. A simplified implementation is:

    def product_billing_records(products)
      # Index the already-loaded products by GID (the "build" side of the join).
      products_by_gid = products.index_by(&:gid)
      product_gids = products_by_gid.keys.compact
      return [] if product_gids.blank?
      # One request to billing for all products, then an in-memory lookup per record.
      billing_records = fetch_billing_records(product_gids: product_gids)
      billing_records.each do |billing_record|
        billing_record.preload_product!(
          products_by_gid[billing_record.product_gid]
        )
      end
    end

If you think that it’s similar to a hash join, you’re not alone.

Server-side Filtering and Underfetching

We fought off the worst spikes of requests and N+1 issues on the platform side. We still had slow responses, though. We identified that they were caused by loading too much data to the platform and filtering it there (client-side filtering). Loading data to memory, serializing it, sending it over the network, and deserializing just to drop most of it was a colossal waste. It was convenient during implementation because we had generic and reusable endpoints. During operations, it proved unusable. We needed something more specific.

We addressed the issue by adding filtering arguments to GraphQL. Our approach was similar to a well-known optimization that consists of moving filtering from the app level to the DB query (find_all vs. where in Rails). In the database world, this approach is obvious and available as WHERE in the SELECT query. In this case, it required us to implement query handling by ourselves (in Billing).

We deployed the filters and waited to see a performance improvement. Instead, we saw 502 errors on the platform (and our users saw them, too). Not good. Not good at all!

Why did that happen? That change should have improved response time, not broken the service. We had inadvertently introduced a subtle bug. We retained both versions of the API (GQL and REST) on the client side. We switched gradually with a feature flag. The first, unfortunate version we deployed introduced a regression in the legacy REST branch. We focused our testing on the GQL branch, so we missed the performance issue in REST. Lesson learned: If search parameters are missing, return an empty collection, not everything you have in your database.
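That lesson can be illustrated with a small sketch. `BillingRecordsQuery` and the in-memory `BillingRecord` struct below are hypothetical stand-ins, not the query layer of the billing service; the point is the guard clause that refuses to run an unfiltered query.

```ruby
# Hypothetical in-memory stand-in for a billing record.
BillingRecord = Struct.new(:id, :product_gid, :status, keyword_init: true)

# Hypothetical query object with server-side filtering.
class BillingRecordsQuery
  def initialize(records)
    @records = records
  end

  # Filters are applied on the server. A call without any usable filter
  # returns an empty collection instead of every record in the store.
  def call(product_gids: nil, status: nil)
    gids = Array(product_gids).compact
    return [] if gids.empty? && status.nil?

    @records.select do |record|
      (gids.empty? || gids.include?(record.product_gid)) &&
        (status.nil? || record.status == status)
    end
  end
end

# Usage: only matching records leave the service.
query = BillingRecordsQuery.new([
  BillingRecord.new(id: 1, product_gid: "p1", status: "paid"),
  BillingRecord.new(id: 2, product_gid: "p2", status: "overdue")
])
query.call(product_gids: ["p1"]) # only the record for "p1"
query.call                       # empty, because no filter was given
```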

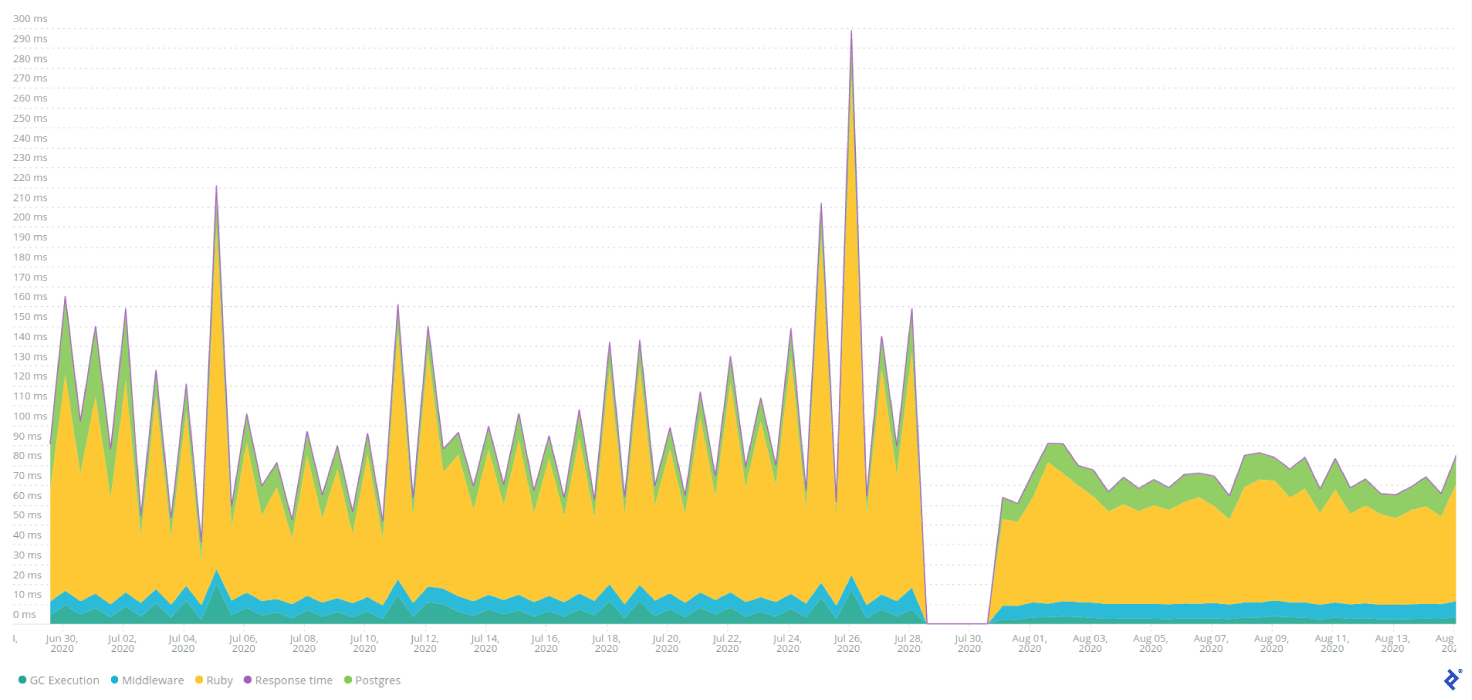

Take a look at the NewRelic data for Billing. We deployed the changes with server-side filtering during a lull in traffic (we switched off billing traffic after encountering platform issues). You can see that responses are faster and more predictable after deployment.

It wasn’t too hard to add filters to a GQL schema. The situations in which GraphQL really shined through were the cases in which we fetched too many fields, not too many objects. With REST, we were sending all the data that was possibly needed. Creating a generic endpoint forced us to pack it with all the data and associations used on the platform.

With GQL, we were able to pick the fields. Instead of fetching 20+ fields that required loading several database tables, we selected just the three to five fields that were needed. That allowed us to remove sudden spikes of billing usage during platform deployments because some of those queries were used by Elasticsearch reindexing jobs run during the deploy. As a positive side effect, it made deployments faster and more reliable.
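To make the field selection concrete, here is a sketch of a helper that builds a query containing only the fields a caller asks for. The query name `billingRecords`, its `productGids` argument, and the field names are invented for the example and do not reflect the real billing schema.

```ruby
# Hypothetical helper: builds a GraphQL query that requests only the listed
# fields, so the server never loads or serializes the rest.
def billing_records_query(fields)
  raise ArgumentError, "select at least one field" if fields.empty?

  <<~GRAPHQL
    query($productGids: [ID!]!) {
      billingRecords(productGids: $productGids) {
        #{fields.join(" ")}
      }
    }
  GRAPHQL
end

# Usage: a reindexing job that needs three fields asks for exactly those three.
puts billing_records_query(%w[id productGid createdAt])
```

With a generic REST endpoint, the equivalent response would carry every field and association any caller might need.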

The Fastest Request Is the One You Don’t Make

We limited the number of fetched objects and the amount of data packed into every object. What else could we do? Maybe not fetch the data at all?

We noticed another area with room for improvement: The platform frequently used the creation date of the last billing record, and every time it did, it called billing to fetch it. We decided that instead of fetching it synchronously every time it was needed, we could cache it based on events sent from billing.

We planned ahead, prepared tasks (four to five of them), and started working to have it done as soon as possible, as those requests were generating a significant load. We had two weeks of work ahead of us.

Fortunately, not long after we started, we took a second look at the problem and realized that we could use data that was already on the platform but in a different form. Instead of adding new tables to cache data from Kafka, we spent a couple of days comparing data from billing and the platform. We also consulted domain experts as to whether we could use platform data.

Finally, we replaced the remote call with a DB query. That was a massive win from both performance and workload standpoints. We also saved more than a week of development time.

Distributing the Load

We were implementing and deploying those optimizations one by one, yet there were still cases when billing responded with 429 Too Many Requests. We could have increased the request limit on Nginx but we wanted to understand the issue better, as it was a hint that the communication was not behaving as expected. As you may recall, we could afford to have those errors on production, as they were not visible to end-users (because of the fallback to a direct call).

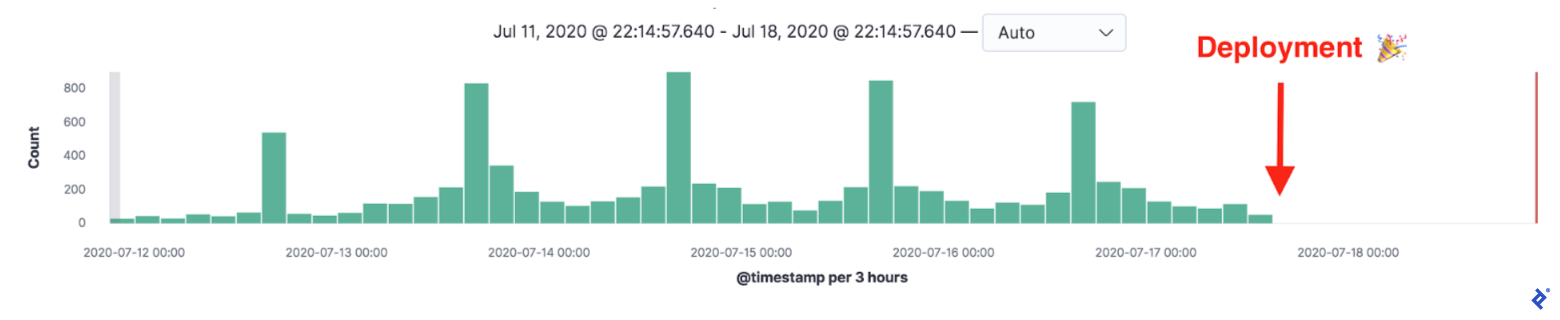

The error occurred every Sunday, when the platform schedules reminders for talent network members regarding overdue timesheets. To send out the reminders, a job fetches billing data for relevant products, which includes thousands of records. The first thing we did to optimize it was batching and preloading billing data, and fetching only the required fields. Both are well-known tricks, so we won’t go into detail here.

We deployed and waited for the following Sunday. We were confident that we’d fixed the problem. However, on Sunday, the error resurfaced.

The billing service was called not only during scheduling but also when a reminder was sent to a network member. The reminders are sent in separate background jobs (using Sidekiq), so preloading was out of the question. Initially, we had assumed it would not be a problem because not every product needed a reminder and because reminders are not all sent at once: They are scheduled for 5 PM in the time zone of the network member. We missed an important detail, though: Our members are not distributed across time zones uniformly.

We were scheduling reminders to thousands of network members, about 25% of which live in one time zone. About 15% live in the second-most-populous time zone. As the clock ticked 5 PM in those time zones, we had to send hundreds of reminders at once. That meant a burst of hundreds of requests to the billing service, which was more than the service could handle.

It wasn’t possible to preload billing data because reminders are scheduled in independent jobs. We couldn’t fetch fewer fields from billing, as we had already optimized that number. Moving network members to less-populous time zones was out of the question, as well. So what did we do? We moved the reminders, just a little bit.

We added jitter to the time when reminders were scheduled to avoid a situation in which all reminders would be sent at the exact same time. Instead of scheduling at 5 PM sharp, we scheduled them within a range of two minutes, between 4:59 PM and 5:01 PM.
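A minimal sketch of such jitter follows. The helper name and its parameters are assumptions for the example. In a Sidekiq application, the resulting time would typically be passed to `perform_at` on the job class.

```ruby
# Spread reminders over a window centered on the target time instead of
# scheduling all of them for the exact same second.
def jittered_time(target_time, window_seconds: 120, random: Random.new)
  half = window_seconds / 2.0
  # Uniform random offset between -half and +half seconds.
  target_time + random.rand(-half..half)
end

# Usage: every reminder gets its own time within one minute of the target.
target = Time.utc(2021, 1, 3, 17, 0, 0) # illustrative date, 5 PM
scheduled_at = jittered_time(target)
# Hypothetical job class: ReminderJob.perform_at(scheduled_at, member_id)
```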

We deployed the service and waited for the following Sunday, confident that we’d finally fixed the problem. Unfortunately, on Sunday, the error appeared again.

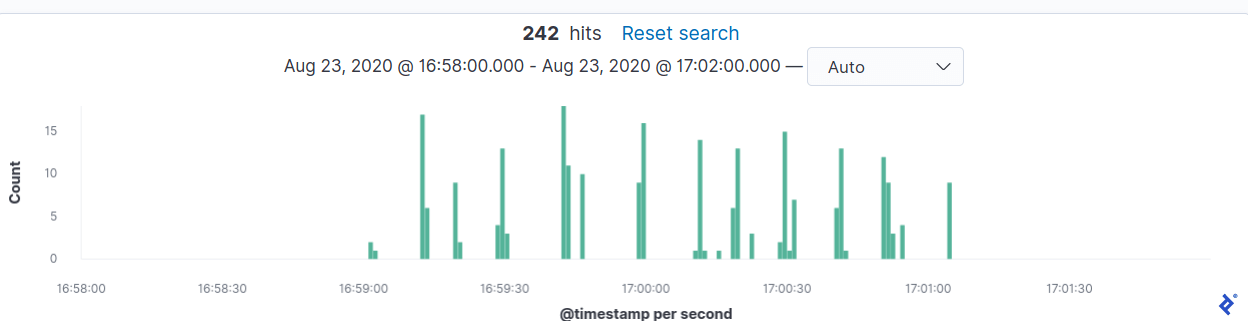

We were puzzled. According to our calculations, the requests should have been spread over a two-minute period, which meant we’d have, at most, two requests per second. The service could easily handle that. We analyzed the logs and timings of billing requests and we realized that our jitter implementation didn’t work, so the requests were still appearing in a tight group.

What caused that behavior? It was the way Sidekiq implements scheduling. It polls Redis every 10–15 seconds and, because of that, it can’t deliver one-second resolution. To achieve a uniform distribution of requests, we used Sidekiq::Limiter, a class provided by Sidekiq Enterprise. We employed the window limiter, which allowed eight requests in a moving one-second window. We chose that value because we had an Nginx limit of 10 requests per second on billing. We kept the jitter code because it provided coarse-grained request dispersion: it distributed Sidekiq jobs over a period of two minutes. Then Sidekiq Limiter was used to ensure that each group of jobs was processed without breaking the defined threshold.
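The idea behind a window limiter can be shown with a small pure-Ruby sketch. This is not the Sidekiq::Limiter API and not how Sidekiq Enterprise implements it (its limiters are coordinated through Redis across processes). The class below is a single-process, non-thread-safe illustration, with an injectable clock added for testing.

```ruby
# Pure-Ruby illustration of a sliding-window rate limiter.
class SlidingWindowLimiter
  MONOTONIC_CLOCK = -> { Process.clock_gettime(Process::CLOCK_MONOTONIC) }

  def initialize(limit:, window_seconds: 1.0, clock: MONOTONIC_CLOCK)
    @limit = limit
    @window_seconds = window_seconds
    @clock = clock
    @timestamps = [] # times of the requests accepted within the window
  end

  # Returns true and records the request if it fits into the current window;
  # returns false when the caller should wait and retry.
  def try_acquire
    now = @clock.call
    # Forget requests that have left the moving window.
    @timestamps.reject! { |t| t <= now - @window_seconds }
    return false if @timestamps.size >= @limit

    @timestamps << now
    true
  end
end

# Usage: eight requests per second leaves headroom below a limit of 10.
limiter = SlidingWindowLimiter.new(limit: 8)
if limiter.try_acquire
  # send the request to billing
else
  # back off briefly and retry
end
```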

Once again, we deployed it and waited for Sunday. We were confident that we’d finally fixed the problem, and this time we had. The error vanished.

API Optimization: Nihil Novi Sub Sole

I believe you weren’t surprised by the solutions we employed. Batching, server-side filtering, sending only required fields, and rate-limiting aren’t novel techniques. Experienced software engineers have undoubtedly used them in different contexts.

Preloading to avoid N+1? We have it in every ORM. Hash joins? Even MySQL has them now. Underfetching? SELECT * vs. SELECT field is a known trick. Spreading the load? It is not a new concept either.

So why did I write this article? Why didn’t we do it right from the beginning? As usual, the context is key. Many of those techniques looked familiar only after we implemented them or only when we noticed a production problem that needed to be solved, not when we stared at the code.

There were several possible explanations for that. Most of the time, we were trying to do the simplest thing that could work to avoid over-engineering. We started with a boring REST solution and only then moved to GQL. We deployed changes behind a feature flag, monitored how everything behaved with a fraction of the traffic, and applied improvements based on real-world data.

One of our discoveries was that performance degradation is easy to overlook when refactoring (and extraction can be treated as a significant refactoring). Adding a strict boundary meant we cut the ties that were added to optimize the code. It wasn’t apparent, though, until we measured performance. Lastly, in some cases, we couldn’t reproduce production traffic in the development environment.

We strived to have a small surface of a universal HTTP API of the billing service. As a result, we got a bunch of universal endpoints/queries that were carrying data needed in different use cases. And that meant that in many use cases, most of the data was useless. It’s a bit of a tradeoff between DRY and YAGNI: Following DRY, we have only one endpoint/query returning billing records, but in violation of YAGNI, that endpoint carries unused data that only harms performance.

We also noticed another tradeoff when discussing jitter with the billing team. From the client (platform) point of view, every request should get a response when the platform needs it. Performance issues and server overload should be hidden behind the abstraction of the billing service. From the billing service point of view, we need to find ways to make clients aware of server performance characteristics to withstand the load.

Again, nothing here is novel or groundbreaking. It’s about identifying known patterns in different contexts and understanding the trade-offs introduced by the changes. We’ve learned that the hard way and we hope that we’ve spared you from repeating our mistakes. Instead of repeating our mistakes, you will no doubt make mistakes of your own and learn from them.

Special thanks to my colleagues and teammates who participated in our efforts:

Understanding the basics

What are internal and external APIs?

An external API is a client- or UI-facing API. An internal API is used for communication between services.

Why is GraphQL used?

GraphQL allows the implementation of a flexible API layer. It supports scoping and grouping data so that the client fetches only the data that is needed and can retrieve it in a single request to the server.

Is GraphQL better than REST?

They have different usage patterns and cannot be compared directly. In our case, GraphQL proved to be more suitable but your mileage may vary.

How do you optimize an API?

It depends on the API. Preloading and caching data, limiting data fetched from the server, adding jitter, and rate-limiting can be used to optimize the internal API.