What Is Kubernetes? A Guide to Containerization and Deployment

Moving to a microservices architecture often raises the question: What is the best environment to stabilize services? Here’s how and why you should use Kubernetes, Docker, and CircleCI to containerize and deploy apps.

Dmitriy is a software engineer with 12 years of experience in web development and DevOps. He specializes in cloud services, DevOps, and Kubernetes, focusing on infrastructure cost reduction and high-volume instance management. Dmitriy has a master’s degree in computer science from Kyrgyz State Technical University.

Not long ago, most of us built monolithic web applications: huge codebases that kept gaining functions and features until they turned into slow-moving, hard-to-manage giants. Now, an increasing number of developers, architects, and DevOps experts are coming to the opinion that microservices are a better choice than a giant monolith. Usually, using a microservices-based architecture means splitting your monolith into at least two applications: a front-end app and a back-end app (the API). Once you decide to use microservices, a question arises: In what environment is it better to run them? What should I choose to make my service stable as well as easy to manage and deploy? The short answer is: Use Docker!

In this article, I’ll be introducing you to containers, explaining Kubernetes, and teaching you how to containerize and deploy an app to a Kubernetes cluster using CircleCI.

Docker? What is Docker?

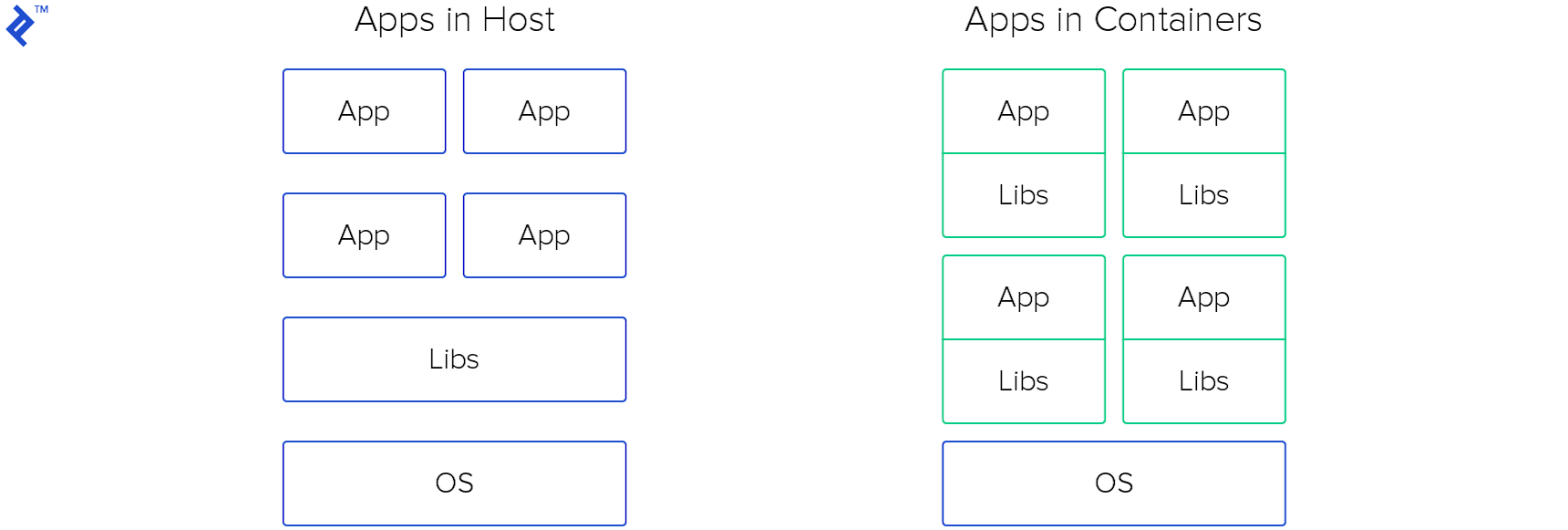

Docker is a tool designed to make DevOps (and your life) easier. With Docker, a developer can create, deploy, and run applications in containers. Containers allow a developer to package up an application with all of the parts it needs, such as libraries and other dependencies, and ship it all out as one package.

Using containers, developers can easily (re)deploy an image to any OS. Just install Docker, execute a command, and your application is up and running. Oh, and don’t worry about any inconsistency with the new version of libraries in the host OS. Additionally, you can launch more containers on the same host. Whether they run the same app or a different one doesn’t matter.

Seems like Docker is an awesome tool. But how and where should I launch containers?

There are a lot of options for how and where to run containers: AWS Elastic Container Service (AWS Fargate or a reserved instance with horizontal and vertical auto-scaling); a cloud instance with a predefined Docker image in Azure or Google Cloud (with templates, instance groups, and auto-scaling); your own server with Docker; or, of course, Kubernetes! Kubernetes was created by Google’s engineers specifically for orchestrating containers and was open-sourced in 2014.

Kubernetes? What is that?

Kubernetes is an open-source system which allows you to run containers, manage them, automate deploys, scale deployments, create and configure ingresses, deploy stateless or stateful applications, and many other things. Basically, you can launch one or more instances and install Kubernetes to operate them as a Kubernetes cluster. Then get the API endpoint of the Kubernetes cluster, configure kubectl (a tool for managing Kubernetes clusters) and Kubernetes is ready to serve.

So why should I use it?

With Kubernetes, you can utilize computational resources to the maximum. With Kubernetes, you will be the captain of your ship (infrastructure) with Kubernetes filling your sails. With Kubernetes, your service will be highly available (HA). And most importantly, with Kubernetes, you can save a good deal of money.

Looks promising! Especially if it will save money! Let’s talk about it more!

Kubernetes is gaining popularity day after day. Let’s go deeper and investigate what is under the hood.

Under the Hood: What Is Kubernetes?

Kubernetes is the name for the whole system, but like your car, there are many small pieces that work together in perfect harmony to make Kubernetes function. Let’s learn what they are.

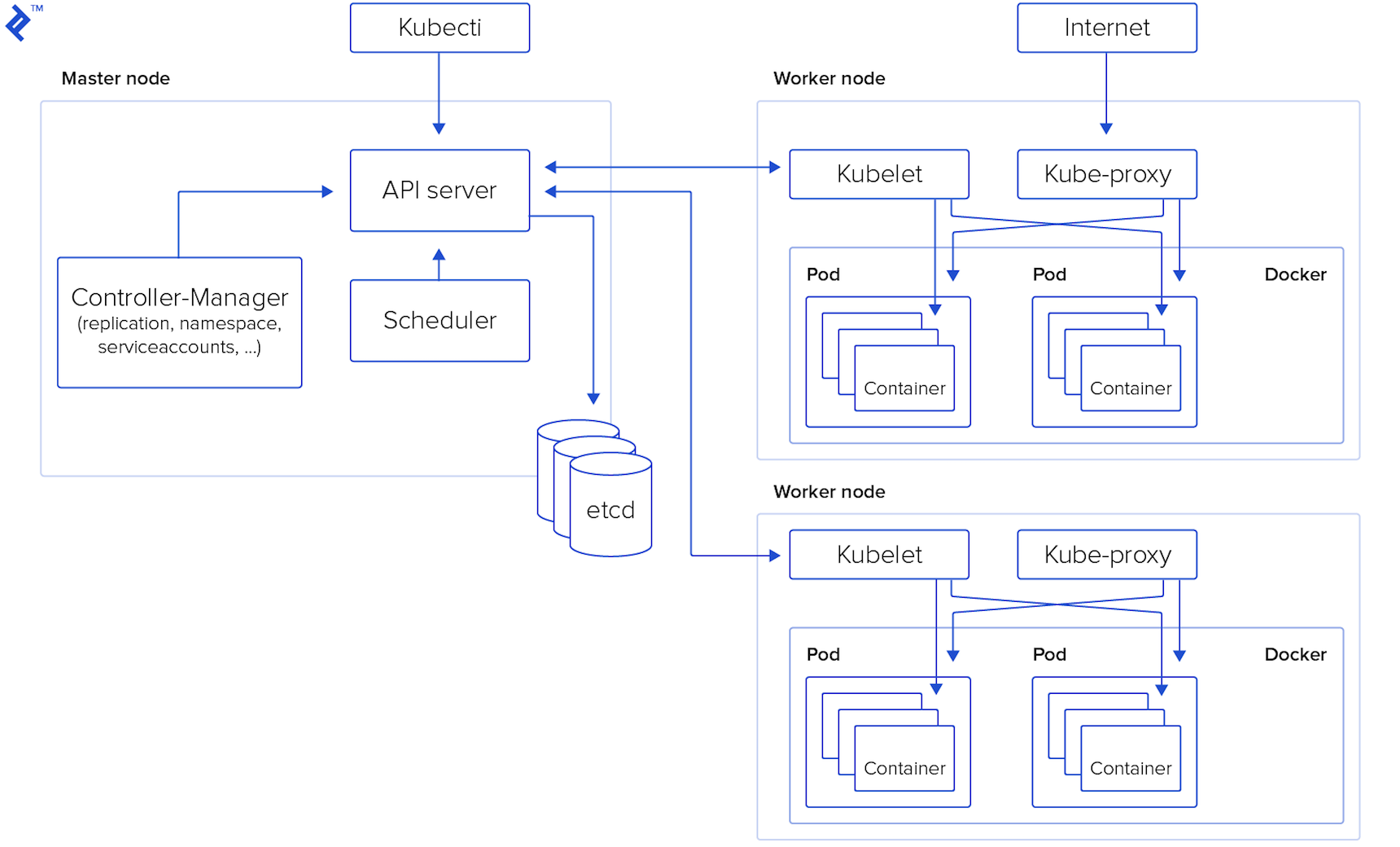

Master Node – The control plane for the whole Kubernetes cluster. The components of the master can be run on any node in the cluster. The key components are:

- API server: The entry point for all REST commands and the only component of the master node that users interact with directly.

- Datastore: Strongly consistent, highly available key-value storage (etcd) used by the Kubernetes cluster.

- Scheduler: Watches for newly created pods and assigns them to nodes. Pods land on a particular node because the scheduler placed them there.

- Controller manager: Runs all the controllers that handle routine tasks in the cluster.

Worker Nodes – The machines where the pods run, also called minion nodes. Worker nodes contain all the necessary services to manage networking between the containers, communicate with the master node, and assign resources to the scheduled containers. The key components are:

- Docker: Runs on each worker node, downloads images, and starts containers.

- Kubelet: The primary node agent. It monitors the state of the pods on its node and ensures that their containers are up and running. It also communicates with the master node through the API server, receiving the pods assigned to its node and reporting their status.

- Kube-proxy: A network proxy and load balancer for a service on a single worker node. It is responsible for traffic routing.

Kubectl – A CLI tool for the users to communicate with the Kubernetes API server.

What are pods and services?

Pods are the smallest unit of a Kubernetes cluster, like one brick in the wall of a huge building. A pod is a set of containers that need to run together and can share resources (Linux namespaces, cgroups, IP addresses). Pods are not intended to live long.

A service is an abstraction on top of a set of pods. It gives them a stable virtual IP address, and a proxy (kube-proxy) routes traffic sent to that address to the pods behind it, so other services do not need to track individual pods.
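To make the two definitions concrete, here is a minimal sketch that writes a two-container pod and a service in front of it. The names `demo-pod` and `demo-service`, the `app: demo` label, and the `nginx` and `busybox` images are illustrative assumptions and are not part of the sample app used later.

```shell
#!/bin/sh
# Sketch: write a two-container Pod and a Service that selects it.
# All names, labels, and images below are illustrative assumptions.
set -eu

cat > pod-and-service.yaml <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: demo-pod
  labels:
    app: demo            # the Service below finds the pod by this label
spec:
  containers:
    - name: web
      image: nginx:1.25
      ports:
        - containerPort: 80
    - name: sidecar
      image: busybox:1.36
      # Containers in one pod share a network namespace, so the sidecar
      # reaches the web container on localhost.
      command: ["sh", "-c", "while true; do wget -qO- http://localhost:80 >/dev/null; sleep 30; done"]
---
apiVersion: v1
kind: Service
metadata:
  name: demo-service
spec:
  selector:
    app: demo            # route to every pod carrying this label
  ports:
    - port: 80           # port on the service's virtual IP
      targetPort: 80     # container port inside the pod
EOF

echo "wrote pod-and-service.yaml"
```

With a cluster configured, `kubectl apply -f pod-and-service.yaml` would create both objects.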

Simple Deployment Example

I’ll use a simple Ruby on Rails application and GKE as a platform for running Kubernetes. Actually, you can use Kubernetes in AWS or Azure or even create a cluster in your own hardware or run Kubernetes locally using minikube—all options that you will find on this page.

The source files for this app can be found in this GitHub repository.

To create a new Rails app, execute:

rails new blog

To configure the MySQL connection for production in the config/database.yml file:

production:
  adapter: mysql2
  encoding: utf8
  pool: 5
  port: 3306
  database: <%= ENV['DATABASE_NAME'] %>
  host: 127.0.0.1
  username: <%= ENV['DATABASE_USERNAME'] %>
  password: <%= ENV['DATABASE_PASSWORD'] %>

To create an Article model, controller, views, and migration, execute:

rails g scaffold Article title:string description:text

To add gems to the Gemfile:

gem 'mysql2', '< 0.6.0', '>= 0.4.4'

gem 'health_check'

To create the Docker image, grab my Dockerfile and execute:

docker build -t REPO_NAME/IMAGE_NAME:TAG . && docker push REPO_NAME/IMAGE_NAME:TAG
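The author's Dockerfile is linked rather than shown. As a rough idea of the shape such a file can take for a Rails app, the sketch below writes a minimal one. It is not the author's file: the `ruby:3.2` base image, the file name `Dockerfile.example`, and the server command are assumptions, and the Ruby version should match whatever your Gemfile targets.

```shell
#!/bin/sh
# Sketch: write a minimal, hypothetical Dockerfile for a Rails app.
set -eu

cat > Dockerfile.example <<'EOF'
# Assumed base image; choose the Ruby version your app needs.
FROM ruby:3.2
ENV RAILS_ENV=production
WORKDIR /app

# Copy the Gemfile first so the gem layer is cached until it changes.
COPY Gemfile Gemfile.lock ./
RUN bundle install

# Copy the application code.
COPY . .

# The service described later forwards port 80 to this port.
EXPOSE 3000
CMD ["bundle", "exec", "rails", "server", "-b", "0.0.0.0", "-p", "3000"]
EOF

echo "wrote Dockerfile.example"
```

To build from it, pass the file explicitly: `docker build -f Dockerfile.example -t REPO_NAME/IMAGE_NAME:TAG .`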

It is time to create a Kubernetes cluster. Open the GKE page and create a Kubernetes cluster. When the cluster is created, click the “Connect” button and copy the command. Be sure you have the gcloud CLI tool (how to) and kubectl installed and configured. Execute the copied command on your PC, then check the connection to the Kubernetes cluster by executing kubectl cluster-info.

The app is ready to deploy to the k8s cluster. Let’s create a MySQL database. Open the SQL page in the gCloud console and create a MySQL DB instance for the application. When the instance is ready, create the user and DB and copy the instance connection name.

Also, we need to create a service-account key in the APIs & Services page for accessing the MySQL DB from a sidecar container. You can find more info on that process here. Rename the downloaded file to service-account.json. We will come back to that file later.

We are ready to deploy our application to Kubernetes, but first, we should create secrets for our application. A secret is a Kubernetes object for storing sensitive data. Upload the previously downloaded service-account.json file:

kubectl create secret generic mysql-instance-credentials \
  --from-file=credentials.json=service-account.json

Create secrets for the application:

kubectl create secret generic simple-app-secrets \
  --from-literal=username=$MYSQL_USERNAME \
  --from-literal=password=$MYSQL_PASSWORD \
  --from-literal=database-name=$MYSQL_DB_NAME \
  --from-literal=secretkey=$SECRET_RAILS_KEY

Don’t forget to replace values or set environment variables with your values.
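It helps to know how those values are kept: in a Secret object, each value sits under `data` as a base64 string, which is an encoding and not encryption. The sketch below shows the round trip; the helper names `encode_secret_value` and `decode_secret_value` and the sample value are hypothetical.

```shell
#!/bin/sh
# Sketch: how a literal becomes the base64 string stored in a Secret.
# Helper names and the sample value are illustrative assumptions.
set -eu

# Encode a value the way it appears under `data:` in a Secret manifest.
encode_secret_value() {
  printf '%s' "$1" | base64 | tr -d '\n'
}

# Decode a value copied from a Secret's `data:` field.
decode_secret_value() {
  printf '%s' "$1" | base64 --decode
}

encoded=$(encode_secret_value "blog_production")
echo "encoded: $encoded"
echo "decoded: $(decode_secret_value "$encoded")"
```

To inspect what the cluster actually stored, `kubectl get secret simple-app-secrets -o yaml` prints the encoded values, which can then be passed to the decode helper.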

Before creating a deployment, let’s take a look at the deployment file. I concatenated three files into one. The first part is a service, which exposes port 80 and forwards all connections coming to port 80 to port 3000. The service has a selector that tells it which pods to forward connections to.
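The author's file is not reproduced here, so the following is only a sketch of what a service with that behavior could look like. The name `sample` matches the deployment name used in the deploy step later in the article; the `app: sample` label, the file name, and the `NodePort` type (which a GKE ingress typically expects) are assumptions.

```shell
#!/bin/sh
# Sketch: a Service that forwards port 80 to container port 3000.
# Label, file name, and service type are illustrative assumptions.
set -eu

cat > service.example.yaml <<'EOF'
apiVersion: v1
kind: Service
metadata:
  name: sample
spec:
  type: NodePort         # assumed; GKE ingress usually needs NodePort or NEGs
  selector:
    app: sample          # forward to pods that carry this label
  ports:
    - port: 80           # port exposed by the service
      targetPort: 3000   # port the Rails server listens on in the pod
EOF

echo "wrote service.example.yaml"
```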

The next part of the file is the deployment, which describes the deployment strategy, the containers that will be launched inside the pod, and, for each container, its environment variables, resources, probes, mounts, and other information.
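As a sketch of those pieces, and not the author's actual manifest, the script below writes a deployment with a rolling-update strategy, environment variables read from the `simple-app-secrets` secret, resource requests, and probes. The probe path `/health_check` (assumed to be the health_check gem's default route), the resource numbers, the `app: sample` label, and the `SECRET_KEY_BASE` variable name are assumptions. The database sidecar container and its mounts are left out to keep the example short.

```shell
#!/bin/sh
# Sketch: a Deployment with strategy, env from a Secret, resources, probes.
# Numbers, labels, and the probe path are illustrative assumptions.
set -eu

cat > deployment.example.yaml <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: sample
spec:
  replicas: 3
  selector:
    matchLabels:
      app: sample
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 1          # start one new pod at a time
      maxUnavailable: 0    # keep full capacity during the rollout
  template:
    metadata:
      labels:
        app: sample
    spec:
      containers:
        - name: sample
          image: REPO_NAME/IMAGE_NAME:TAG
          ports:
            - containerPort: 3000
          env:
            - name: DATABASE_NAME
              valueFrom:
                secretKeyRef: {name: simple-app-secrets, key: database-name}
            - name: DATABASE_USERNAME
              valueFrom:
                secretKeyRef: {name: simple-app-secrets, key: username}
            - name: DATABASE_PASSWORD
              valueFrom:
                secretKeyRef: {name: simple-app-secrets, key: password}
            - name: SECRET_KEY_BASE
              valueFrom:
                secretKeyRef: {name: simple-app-secrets, key: secretkey}
          resources:
            requests: {cpu: 100m, memory: 256Mi}
            limits: {cpu: 500m, memory: 512Mi}
          readinessProbe:      # gate traffic until the app answers
            httpGet: {path: /health_check, port: 3000}
            initialDelaySeconds: 10
          livenessProbe:       # restart the container if it stops answering
            httpGet: {path: /health_check, port: 3000}
            initialDelaySeconds: 30
EOF

echo "wrote deployment.example.yaml"
```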

The last part is the Horizontal Pod Autoscaler (HPA). HPA has a pretty simple config. Keep in mind that if you don’t set resources for the container in the deployment section, HPA will not work.
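The dependency on resources comes from how CPU utilization is measured: it is a percentage of the container's CPU request, so without a request there is nothing to compare against. A minimal sketch of such a config follows; the replica bounds and the 70% target are assumptions, and the `autoscaling/v2` API version assumes a reasonably recent cluster.

```shell
#!/bin/sh
# Sketch: an HPA that scales the "sample" deployment on CPU utilization.
# Replica bounds and the target percentage are illustrative assumptions.
set -eu

cat > hpa.example.yaml <<'EOF'
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: sample
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: sample
  minReplicas: 3
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70   # percent of the container's CPU request
EOF

echo "wrote hpa.example.yaml"
```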

You can configure Vertical Autoscaler for your Kubernetes cluster in the GKE edit page. It also has a pretty simple configuration.

It is time to ship it to the GKE cluster! First of all, we should run migrations via job. Execute:

kubectl apply -f rake-tasks-job.yaml – This job will be useful for the CI/CD process.

kubectl apply -f deployment.yaml – to create service, deployment, and HPA.
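The contents of rake-tasks-job.yaml are not shown in this article. As an assumption about its general shape, a migration job could look like the sketch below: a one-off pod that runs `rake db:migrate` with the same image and secrets as the app. The job name, file name, and retry limit are hypothetical, and the database sidecar is omitted for brevity.

```shell
#!/bin/sh
# Sketch: a one-off Job that runs Rails migrations.
# Job name, file name, and backoffLimit are illustrative assumptions.
set -eu

cat > rake-tasks-job.example.yaml <<'EOF'
apiVersion: batch/v1
kind: Job
metadata:
  name: rake-db-migrate
spec:
  backoffLimit: 2              # retry a failed migration at most twice
  template:
    spec:
      restartPolicy: Never     # let the Job controller handle retries
      containers:
        - name: migrate
          image: REPO_NAME/IMAGE_NAME:TAG
          command: ["bundle", "exec", "rake", "db:migrate"]
          env:
            - name: RAILS_ENV
              value: production
            - name: DATABASE_NAME
              valueFrom:
                secretKeyRef: {name: simple-app-secrets, key: database-name}
            - name: DATABASE_USERNAME
              valueFrom:
                secretKeyRef: {name: simple-app-secrets, key: username}
            - name: DATABASE_PASSWORD
              valueFrom:
                secretKeyRef: {name: simple-app-secrets, key: password}
EOF

echo "wrote rake-tasks-job.example.yaml"
```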

And then check your pod by executing the command: kubectl get pods -w

NAME                      READY   STATUS    RESTARTS   AGE
sample-799bf9fd9c-86cqf   2/2     Running   0          1m
sample-799bf9fd9c-887vv   2/2     Running   0          1m
sample-799bf9fd9c-pkscp   2/2     Running   0          1m

Now let’s create an ingress for the application:

- Create a static IP:

gcloud compute addresses create sample-ip --global

- Create the ingress (file):

kubectl apply -f ingress.yaml

- Check that the ingress has been created and grab the IP:

kubectl get ingress -w

- Create the domain/subdomain for your application.
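The ingress file itself is linked rather than shown. A minimal sketch of an ingress that binds the reserved `sample-ip` address to the app's service might look like this; the ingress name, the backend service name `sample`, and the file name are assumptions, while the annotation is the one GKE uses to attach a global static IP.

```shell
#!/bin/sh
# Sketch: an Ingress that uses the reserved global static IP "sample-ip".
# Ingress name, backend service name, and file name are assumptions.
set -eu

cat > ingress.example.yaml <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: sample
  annotations:
    # Attach the address created with "gcloud compute addresses create".
    kubernetes.io/ingress.global-static-ip-name: sample-ip
spec:
  defaultBackend:
    service:
      name: sample       # send all traffic to the app's service
      port:
        number: 80
EOF

echo "wrote ingress.example.yaml"
```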

CI/CD

Let’s create a CI/CD pipeline using CircleCI. It is easy to do, but keep in mind that a quick-and-dirty, fully automated deploy process without tests, like this one, is only suitable for small projects. Please don’t do this for anything serious: if new code has issues in production, you’re going to lose money. For real projects, design a robust deployment process: launch canary tasks before the full rollout, check the logs for errors after the canary has started, and so on.

Currently, we have a small, simple project, so let’s create a fully automated, no-test, CI/CD deployment process. First, you should integrate CircleCI with your repository (you can find all the instructions here). Then we should create a config file with instructions for the CircleCI system. The config looks pretty simple. The main point is that there are two branches in the GitHub repo: master and production.

- The master branch is for development, for the fresh code. When someone pushes new code to the master branch, CircleCI starts a workflow for the master branch—build and test code.

- The production branch is for deploying a new version to the production environment. The workflow for the production branch is as follows: push new code (or even better, open a PR from the master branch to production) to trigger a new build and deployment process; during the build, CircleCI builds a new Docker image, pushes it to GCR, and creates a new rollout for the deployment; if the rollout fails, CircleCI triggers the rollback process.
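The two-branch setup can be sketched as a CircleCI 2.1 config with one workflow and branch filters. This is not the author's config: the job names, the `cimg/ruby:3.2` and `google/cloud-sdk` images, the test command, and the `deploy.sh` script are assumptions, and the file is written under an example name so that it does not overwrite a real `.circleci/config.yml`.

```shell
#!/bin/sh
# Sketch: a CircleCI config with separate jobs for master and production.
# Job names, images, and deploy.sh are illustrative assumptions.
set -eu

cat > circleci-config.example.yml <<'EOF'
version: 2.1

jobs:
  build_and_test:
    docker:
      - image: cimg/ruby:3.2        # assumed image; match your Ruby version
    steps:
      - checkout
      - run: bundle install
      - run: bundle exec rails test
  deploy:
    docker:
      - image: google/cloud-sdk     # assumed to provide gcloud and kubectl
    steps:
      - checkout
      - setup_remote_docker         # lets the job run docker build and push
      - run: ./deploy.sh            # hypothetical: build, push, roll out

workflows:
  build_and_deploy:
    jobs:
      - build_and_test:
          filters:
            branches:
              only: master          # fresh code: build and test only
      - deploy:
          filters:
            branches:
              only: production      # production branch: build and deploy
EOF

echo "wrote circleci-config.example.yml"
```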

Before running any build, you should configure a project in CircleCI. Create a new service account in the APIs & Services page in GCloud with roles that grant full access to GCR and GKE. Open the downloaded JSON file and copy its contents, then create a new environment variable named GCLOUD_SERVICE_KEY in the project settings in CircleCI and paste the contents of the service-account file as its value. You also need to create the following env vars: GOOGLE_PROJECT_ID (you can find it on the GCloud console homepage), GOOGLE_COMPUTE_ZONE (a zone for your GKE cluster), and GOOGLE_CLUSTER_NAME (GKE cluster name).
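One way a build step might consume those four variables is sketched below. The function names `run` and `gke_login` and the `DRY_RUN` switch are hypothetical helpers added for illustration; the gcloud subcommands are the standard ones for activating a service account and fetching cluster credentials.

```shell
#!/bin/sh
# Sketch: authenticate to GKE from CI using the variables described above.
# run, gke_login, and DRY_RUN are hypothetical helpers for illustration.
set -eu

# Print the command instead of executing it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

gke_login() {
  # gcloud reads the key from a file, so write the variable to a temp file.
  key_file=$(mktemp)
  printf '%s' "$GCLOUD_SERVICE_KEY" > "$key_file"

  run gcloud auth activate-service-account --key-file="$key_file"
  run gcloud config set project "$GOOGLE_PROJECT_ID"
  # Writes kubeconfig credentials so later kubectl calls reach the cluster.
  run gcloud container clusters get-credentials "$GOOGLE_CLUSTER_NAME" \
    --zone "$GOOGLE_COMPUTE_ZONE"

  rm -f "$key_file"
}
```

In a CI step, calling `gke_login` before any kubectl command would be enough. Setting `DRY_RUN=1` first prints the gcloud commands without running them, which is handy for checking the variables locally.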

The last step (deploy) at CircleCI will look like:

kubectl patch deployment sample -p '{"spec":{"template":{"spec":{"containers":[{"name":"sample","image":"gcr.io/test-d6bf8/simple:'"$CIRCLE_SHA1"'"}]}}}}'
if ! kubectl rollout status deploy/sample; then
  echo "DEPLOY FAILED, ROLLING BACK TO PREVIOUS"
  kubectl rollout undo deploy/sample
  # Deploy failed -> notify slack
else
  echo "Deploy succeeded, current version: ${CIRCLE_SHA1}"
  # Deploy succeeded -> notify slack
fi

The output of a successful deploy looks like this:

deployment.extensions/sample patched
Waiting for deployment "sample" rollout to finish: 2 out of 3 new replicas have been updated...
Waiting for deployment "sample" rollout to finish: 2 out of 3 new replicas have been updated...
Waiting for deployment "sample" rollout to finish: 2 out of 3 new replicas have been updated...
Waiting for deployment "sample" rollout to finish: 1 old replicas are pending termination...
Waiting for deployment "sample" rollout to finish: 1 old replicas are pending termination...
Waiting for deployment "sample" rollout to finish: 1 old replicas are pending termination...
Waiting for deployment "sample" rollout to finish: 2 of 3 updated replicas are available...
Waiting for deployment "sample" rollout to finish: 2 of 3 updated replicas are available...
deployment "sample" successfully rolled out
Deploy succeeded, current version: 512eabb11c463c5431a1af4ed0b9ebd23597edd9

Conclusion

Looks like the process of creating a new Kubernetes cluster is not so hard! And the CI/CD process is really awesome!

Yes! Kubernetes is awesome! With Kubernetes, your system will be more stable and easily manageable, and you will be the captain of your system. Not to mention, Kubernetes gamifies the system a little bit and will give +100 points for your marketing!

Now that you have the basics down, you can go further and turn this into a more advanced configuration. I’m planning on covering more in a future article, but in the meantime, here’s a challenge: Create a robust Kubernetes cluster for your application with a stateful DB located inside the cluster (including a sidecar container for making backups), install Jenkins inside the same Kubernetes cluster for the CI/CD pipeline, and let Jenkins use pods as build agents. Use cert-manager for obtaining an SSL certificate for your ingress. Create a monitoring and alerting system for your application using Stackdriver.

Kubernetes is great because it scales easily, there’s no vendor lock-in, and, since you’re paying only for the instances, you save money. However, not everyone is a Kubernetes expert or has the time to set up a new cluster. For an alternative view, fellow Toptaler Amin Shah Gilani makes the case for using Heroku, GitLab CI, and a large amount of automation he’s already figured out in order to write more code and do fewer operations tasks in How to Build an Effective Initial Deployment Pipeline.

Understanding the basics

What is Docker?

Docker is a tool for developers/sysadmins/devops people to build, ship, and run distributed applications, whether on laptops, data center VMs, or the cloud.

What is Kubernetes?

Kubernetes is an open-source system which allows you to manage containerized workloads (pods) and services, automate deploys, scale deployments, create and configure ingresses, deploy stateless or stateful applications, and many other things.

Is Kubernetes written in Go?

Yes, and Docker is written in Go as well.

What are Kubernetes pods/containers?

A pod is a group of containers deployed together on the same host. If the pod has one container, you can generally replace the word “pod” with “container” and accurately understand the concept.

What does containerization mean?

Application containerization is an OS-level virtualization method used to deploy and run distributed applications without launching an entire virtual machine (VM) for each app.

Is it OK to use Kubernetes in production?

Yes. The first release was in 2014, and since then Kubernetes has become a powerful and stable platform.

Bishkek, Kyrgyzstan

Member since May 31, 2018
