NLP With Google Cloud Natural Language API

Natural language processing (NLP) has become one of the most researched subjects in the field of AI. This interest is driven by applications that have been brought to market in recent years.

In this article, Toptal Deep Learning Developer Maximilian Hopf introduces you to the Google Natural Language API and Google AutoML Natural Language.

Max is a data science and machine learning expert. He has helped to build one of Germany’s most highly funded fintechs.


Natural language processing (NLP), which is the combination of machine learning and linguistics, has become one of the most heavily researched subjects in the field of artificial intelligence. In the last few years, many new milestones have been reached, the newest being OpenAI’s GPT-2 model, which is able to produce realistic and coherent articles about any topic from a short input.

This interest is driven by the many commercial applications that have been brought to market in recent years. We speak to home assistants that use NLP to transcribe audio and to understand our questions and commands. More and more companies are shifting a large part of their customer communication to automated chatbots. Online marketplaces use NLP to identify fake reviews, media companies rely on it to write news articles, recruitment companies match CVs to positions, social media giants automatically filter hateful content, and legal firms use NLP to analyze contracts.

Training and deploying machine learning models for tasks like these used to be a complex process that required a team of experts and expensive infrastructure. But the high demand for such applications has driven big cloud providers to develop NLP-related services, which greatly reduce the workload and infrastructure costs. The average cost of cloud services has been going down for years, and this trend is expected to continue.

The products I will introduce in this article are part of Google Cloud Services and are called “Google Natural Language API” and “Google AutoML Natural Language.”

Google Natural Language API

The Google Natural Language API is an easy-to-use interface to a set of powerful NLP models that have been pre-trained by Google to perform various tasks. As these models have been trained on enormous document corpora, their performance is usually quite good, as long as they are used on datasets that do not rely on highly idiosyncratic language.

The biggest advantage of using these pre-trained models via the API is that no training dataset is needed. The API allows the user to start making predictions immediately, which can be very valuable in situations where little labeled data is available.

The Natural Language API comprises five different services:

- Syntax Analysis

- Sentiment Analysis

- Entity Analysis

- Entity Sentiment Analysis

- Text Classification

Syntax Analysis

For a given text, Google’s syntax analysis will return a breakdown of all words with a rich set of linguistic information for each token. The information can be divided into two parts:

Part of speech: This part contains information about the morphology of each token. For each word, a fine-grained analysis is returned containing its type (noun, verb, etc.), gender, grammatical case, tense, grammatical mood, grammatical voice, and much more.

For example, for the input sentence “A computer once beat me at chess, but it was no match for me at kickboxing.” (Emo Philips) the part-of-speech analysis is:

| Token | Part-of-Speech Analysis |
|---|---|
| 'A' | tag: DET |
| 'computer' | tag: NOUN, number: SINGULAR |
| 'once' | tag: ADV |
| 'beat' | tag: VERB, mood: INDICATIVE, tense: PAST |
| 'me' | tag: PRON, case: ACCUSATIVE, number: SINGULAR, person: FIRST |
| 'at' | tag: ADP |
| 'chess' | tag: NOUN, number: SINGULAR |
| ',' | tag: PUNCT |
| 'but' | tag: CONJ |
| 'it' | tag: PRON, case: NOMINATIVE, gender: NEUTER, number: SINGULAR, person: THIRD |
| 'was' | tag: VERB, mood: INDICATIVE, number: SINGULAR, person: THIRD, tense: PAST |
| 'no' | tag: DET |
| 'match' | tag: NOUN, number: SINGULAR |
| 'for' | tag: ADP |
| 'kick' | tag: NOUN, number: SINGULAR |
| 'boxing' | tag: NOUN, number: SINGULAR |
| '.' | tag: PUNCT |

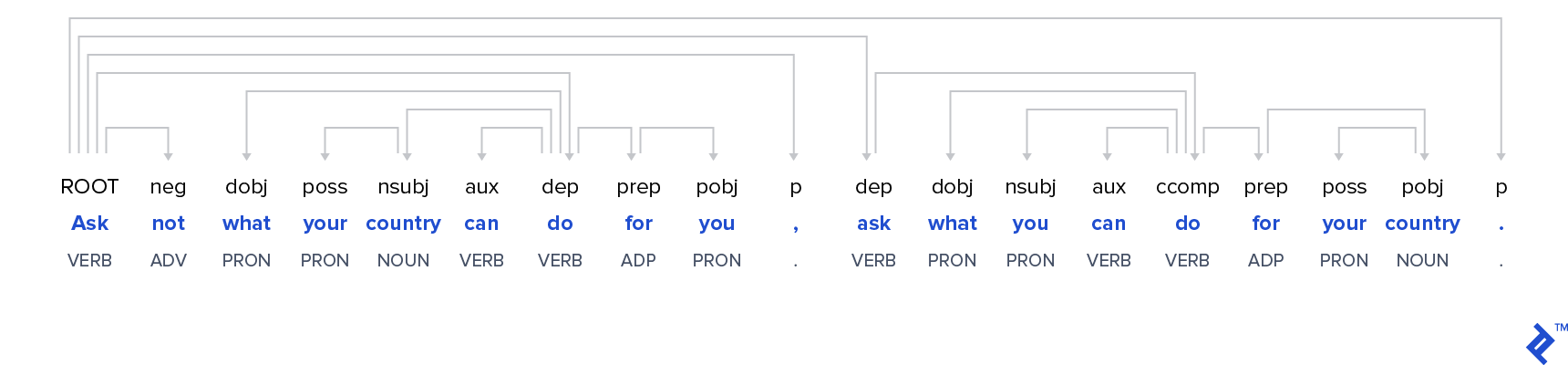

Dependency trees: The second part of the return is called a dependency tree, which describes the syntactic structure of each sentence. The following diagram of a famous Kennedy quote shows such a dependency tree. For each word, the arrows indicate which words are modified by it.

The commonly used Python libraries nltk and spaCy contain similar functionalities. The quality of the analysis is consistently high across all three options, but the Google Natural Language API is easier to use. The above analysis can be obtained with very few lines of code (see example further down). However, while spaCy and nltk are open-source and therefore free, the usage of the Google Natural Language API costs money after a certain number of free requests (see cost section).

Apart from English, the syntactic analysis supports ten additional languages: Chinese (Simplified), Chinese (Traditional), French, German, Italian, Japanese, Korean, Portuguese, Russian, and Spanish.
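A dependency tree can be stored as a list of tokens in which each token records the index of its head token. The minimal sketch below builds and prints such a tree in plain Python. The sentence, its hand-assigned heads and labels, and the convention that the root token points to itself are illustrative assumptions, not output from the API.

```python
from collections import defaultdict

# Hypothetical, hand-written parse of "The cat chased a mouse ."
# Each entry: (word, index of its head token, dependency label).
# By convention in this sketch, the root token points to itself.
TOKENS = [
    ("The", 1, "DET"),
    ("cat", 2, "NSUBJ"),
    ("chased", 2, "ROOT"),
    ("a", 4, "DET"),
    ("mouse", 2, "DOBJ"),
    (".", 2, "P"),
]


def build_children(tokens):
    """Return the root index and a map from head index to dependent indices."""
    children = defaultdict(list)
    root = None
    for i, (_, head, _) in enumerate(tokens):
        if head == i:
            root = i
        else:
            children[head].append(i)
    return root, children


def render(tokens, children, index, depth=0):
    """Return the subtree rooted at `index` as indented text lines."""
    word, _, label = tokens[index]
    lines = ["  " * depth + f"{word} ({label})"]
    for child in children[index]:
        lines.extend(render(tokens, children, child, depth + 1))
    return lines


root, children = build_children(TOKENS)
print("\n".join(render(TOKENS, children, root)))
```

Each indentation level corresponds to one step away from the root verb, which is the same head/dependent structure that the arrows in a dependency diagram encode.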

Sentiment Analysis

The syntax analysis service is mostly used early in a pipeline to create features that are later fed into machine learning models. In contrast, the sentiment analysis service can be used right out of the box.

Google’s sentiment analysis will provide the prevailing emotional opinion within a provided text. The API returns two values: The “score” describes the emotional leaning of the text from -1 (negative) to +1 (positive), with 0 being neutral.

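The “magnitude” measures the overall strength of the emotion, regardless of its direction. Unlike the score, it is not normalized: it ranges from 0 upward and tends to grow with the amount of emotional content, so longer texts often have higher magnitudes.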

Let’s look at some examples:

| Input Sentence | Sentiment Results | Interpretation |
|---|---|---|
| The train to London leaves at four o'clock | Score: 0.0, Magnitude: 0.0 | A completely neutral statement that contains no emotion at all. |
| This blog post is good. | Score: 0.7, Magnitude: 0.7 | A positive sentiment, but not expressed very strongly. |
| This blog post is good. It was very helpful. The author is amazing. | Score: 0.7, Magnitude: 2.3 | The same sentiment, but expressed much more strongly. |
| This blog post is very good. This author is a horrible writer usually, but here he got lucky. | Score: 0.0, Magnitude: 1.6 | The magnitude shows that emotions are expressed in this text, but the score shows that they are mixed rather than clearly positive or negative. |
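Because the score and magnitude carry different information, applications often combine them into a coarse label. The sketch below is one hypothetical way to do that: the function name and both thresholds (0.25 for the score, 0.5 for the magnitude) are assumptions picked by eye to match the four examples in the table, not values published by Google.

```python
def interpret_sentiment(score, magnitude,
                        score_threshold=0.25, magnitude_threshold=0.5):
    """Map a (score, magnitude) pair to a coarse label.

    The thresholds are illustrative assumptions, not official values.
    """
    if magnitude < magnitude_threshold:
        return "neutral"   # little emotion expressed at all
    if score >= score_threshold:
        return "positive"
    if score <= -score_threshold:
        return "negative"
    return "mixed"         # strong emotions that cancel out in the score


examples = [
    ("The train to London leaves at four o'clock", 0.0, 0.0),
    ("This blog post is good.", 0.7, 0.7),
    ("This blog post is good. It was very helpful. The author is amazing.", 0.7, 2.3),
    ("This blog post is very good. This author is a horrible writer usually, "
     "but here he got lucky.", 0.0, 1.6),
]
for text, score, magnitude in examples:
    print(f"{interpret_sentiment(score, magnitude):8} {text}")
```

Since the magnitude grows with text length, a fixed magnitude threshold is a simplification; for long documents it would probably need to scale with the number of sentences.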

Google’s sentiment analysis model is trained on a very large dataset. Unfortunately, no information about its detailed structure is available. I was curious about its real-world performance, so I tested it on part of the Large Movie Review Dataset, which was created by scientists from Stanford University in 2011.

I randomly selected 500 positive and 500 negative movie reviews from the test set and compared the predicted sentiment to the actual review label. The confusion matrix looked like this:

| | Positive Sentiment | Negative Sentiment |
|---|---|---|
| Good Review | 470 | 30 |
| Bad Review | 29 | 471 |

As the table shows, the model is right about 94% of the time for both good and bad movie reviews. This is not a bad performance for an out-of-the-box solution without any fine-tuning to the given problem.
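The 94% figure follows directly from the confusion matrix: accuracy is the share of reviews on the diagonal, (470 + 471) / 1,000. The short sketch below recomputes it along with per-class recall and precision, treating good reviews as the positive class (a framing chosen here for illustration).

```python
# Confusion matrix from the experiment above:
# rows = true review label, columns = predicted sentiment.
confusion = {
    "good": {"positive": 470, "negative": 30},
    "bad": {"positive": 29, "negative": 471},
}

true_positive = confusion["good"]["positive"]   # good reviews predicted positive
false_negative = confusion["good"]["negative"]  # good reviews predicted negative
false_positive = confusion["bad"]["positive"]   # bad reviews predicted positive
true_negative = confusion["bad"]["negative"]    # bad reviews predicted negative

total = true_positive + false_negative + false_positive + true_negative
accuracy = (true_positive + true_negative) / total
recall_good = true_positive / (true_positive + false_negative)
recall_bad = true_negative / (true_negative + false_positive)
precision_good = true_positive / (true_positive + false_positive)

print(f"accuracy:         {accuracy:.3f}")        # 0.941
print(f"recall (good):    {recall_good:.3f}")     # 0.940
print(f"recall (bad):     {recall_bad:.3f}")      # 0.942
print(f"precision (good): {precision_good:.3f}")  # 0.942
```

The nearly identical recall for both classes shows that the model has no strong bias toward either positive or negative predictions on this sample.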

Note: The sentiment analysis is available for the same languages as the syntax analysis except for Russian.

Entity Analysis

Entity Analysis is the process of detecting known entities like public figures or landmarks from a given text. Entity detection is very helpful for all kinds of classification and topic modeling tasks.

The Google Natural Language API provides some basic information about each detected entity and even provides a link to the respective Wikipedia article if it exists. Also, a salience score is calculated. This score for an entity provides information about the importance or centrality of that entity to the entire document text. Scores closer to 0 are less salient, while scores closer to 1.0 are highly salient.

When we send a request to the API with the example sentence “Robert DeNiro spoke to Martin Scorsese in Hollywood on Christmas Eve in December 2011,” we receive the following result:

| Detected Entity | Type | Salience | Wikipedia URL |
|---|---|---|---|
| Robert De Niro | PERSON | 0.5869118 | https://en.wikipedia.org/wiki/Robert_De_Niro |
| Hollywood | LOCATION | 0.17918482 | https://en.wikipedia.org/wiki/Hollywood |
| Martin Scorsese | LOCATION | 0.17712952 | https://en.wikipedia.org/wiki/Martin_Scorsese |
| Christmas Eve | PERSON | 0.056773853 | https://en.wikipedia.org/wiki/Christmas |
| December 2011 | DATE (year: 2011, month: 12) | 0.0 | - |
| 2011 | NUMBER | 0.0 | - |

As you can see, all entities are detected, although 2011 appears twice: once as part of the date “December 2011” and once as a standalone number. In addition to the entity types in the example output, the entity analysis API also detects organizations, works of art, consumer goods, phone numbers, addresses, and prices.
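Salience is handy for filtering: keeping only the entities that are central to a document gives cleaner features for classification or topic modeling. The sketch below ranks the (name, salience) pairs from the example response and keeps those above a cutoff; the function name and the 0.1 cutoff are hypothetical choices.

```python
def most_salient(entities, min_salience=0.1):
    """Return entity names with salience >= min_salience, most salient first."""
    ranked = sorted(entities, key=lambda item: item[1], reverse=True)
    return [name for name, salience in ranked if salience >= min_salience]


# (name, salience) pairs copied from the example response above.
entities = [
    ("Robert De Niro", 0.5869118),
    ("Hollywood", 0.17918482),
    ("Martin Scorsese", 0.17712952),
    ("Christmas Eve", 0.056773853),
    ("December 2011", 0.0),
    ("2011", 0.0),
]
print(most_salient(entities))  # ['Robert De Niro', 'Hollywood', 'Martin Scorsese']
```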

Entity Sentiment Analysis

If there are models for entity detection and sentiment analysis, it’s only natural to go a step further and combine them to detect the prevailing emotions towards the different entities in a text.

While the Sentiment Analysis API finds all displays of emotion in the document and aggregates them, the Entity Sentiment Analysis tries to find the dependencies between different parts of the document and the identified entities and then attributes the emotions in these text segments to the respective entities.

For example, the opinionated text “The author is a horrible writer. The reader is very intelligent on the other hand.” leads to the following results:

| Entity | Salience | Sentiment Magnitude | Sentiment Score |
|---|---|---|---|
| author | 0.8773350715637207 | 1.899999976158142 | -0.8999999761581421 |
| reader | 0.08653714507818222 | 0.8999999761581421 | 0.8999999761581421 |
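The long decimals in this output do not reflect extra precision. They are consistent with the API returning these fields as 32-bit floats, which Python then prints as 64-bit floats: 0.9 stored in single precision prints as 0.8999999761581421. The sketch below reproduces this with the standard `struct` module and shows that rounding to two decimals recovers the intended values.

```python
import struct


def as_float32(value):
    """Round-trip a Python float through IEEE 754 single precision."""
    return struct.unpack("<f", struct.pack("<f", value))[0]


for value in (0.9, -0.9, 1.9):
    stored = as_float32(value)
    print(value, "->", stored, "-> rounded:", round(stored, 2))
# 0.9 -> 0.8999999761581421 -> rounded: 0.9
# -0.9 -> -0.8999999761581421 -> rounded: -0.9
# 1.9 -> 1.899999976158142 -> rounded: 1.9
```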

So far, entity sentiment analysis works only for English, Japanese, and Spanish.

Text Classification

Lastly, the Google Natural Language API comes with a plug-and-play text classification model.

The model is trained to classify input documents into a large set of categories. The categories are structured hierarchically; e.g., the category “Hobbies & Leisure” has several subcategories, one of which is “Hobbies & Leisure/Outdoors,” which itself has subcategories such as “Hobbies & Leisure/Outdoors/Fishing.”
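Because each category name encodes its full path, the hierarchy can be recovered by splitting on “/”. The sketch below splits category paths into levels and groups them by top-level category; the function names are hypothetical, and it also tolerates a leading slash in case the names come back in that form.

```python
from collections import defaultdict


def split_category(path):
    """Split a path like 'A/B/C' (or '/A/B/C') into its hierarchy levels."""
    return [part for part in path.split("/") if part]


def group_by_top_level(paths):
    """Group full category paths under their top-level category."""
    groups = defaultdict(list)
    for path in paths:
        groups[split_category(path)[0]].append(path)
    return dict(groups)


paths = [
    "Hobbies & Leisure",
    "Hobbies & Leisure/Outdoors",
    "Hobbies & Leisure/Outdoors/Fishing",
    "Arts & Entertainment/Visual Art & Design/Photographic & Digital Arts",
]
for top, members in group_by_top_level(paths).items():
    print(top, "->", members)
```

Grouping like this makes it easy to aggregate results at a coarser level when the fine-grained subcategories are more detail than an application needs.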

This is an example text from a Nikon camera ad:

“The D3500’s large 24.2 MP DX-format sensor captures richly detailed photos and Full HD movies—even when you shoot in low light. Combined with the rendering power of your NIKKOR lens, you can start creating artistic portraits with smooth background blur. With ease.”

The Google API returns the result:

| Category | Confidence |
|---|---|
| Arts & Entertainment/Visual Art & Design/Photographic & Digital Arts | 0.95 |
| Hobbies & Leisure | 0.94 |
| Computers & Electronics/Consumer Electronics/Camera & Photo Equipment | 0.85 |

All three of these categories make sense, even though we would intuitively rank the third entry higher than the second one. However, one must consider that this input segment is only a short part of the full camera ad document and the classification model’s performance improves with text length.

After trying it out with a lot of documents, I found the results of the classification model meaningful in most cases. Still, like all other models from the Google Natural Language API, the classifier is a black box that cannot be modified or even fine-tuned by the API user. In text classification in particular, the vast majority of companies will have their own text categories that differ from those of the Google model; therefore, the Natural Language API text classification service might not be applicable for most users.

Another limitation of the classification model is that it only works for English language texts.

How to Use the Natural Language API

The major advantage of the Google Natural Language API is its ease of use. No machine learning skills and almost no coding skills are required. On the Google Cloud website, you can find code snippets for calling the API in many programming languages.

For example, with version 2 or later of the google-cloud-language Python client library, the code to call the sentiment analysis API is as short as:

```python
from google.cloud import language_v1


def sample_analyze_sentiment(content):
    """Print the sentiment score and magnitude of a text document."""
    client = language_v1.LanguageServiceClient()

    # Accept UTF-8 encoded bytes as well as str input.
    if isinstance(content, bytes):
        content = content.decode("utf-8")

    document = language_v1.Document(
        content=content,
        type_=language_v1.Document.Type.PLAIN_TEXT,
    )
    response = client.analyze_sentiment(request={"document": document})
    sentiment = response.document_sentiment

    print("Score: {}".format(sentiment.score))
    print("Magnitude: {}".format(sentiment.magnitude))
```

The other API functionalities are called in a similar way, simply by replacing client.analyze_sentiment with the appropriate method: analyze_syntax, analyze_entities, analyze_entity_sentiment, or classify_text.

Overall Cost of Google Natural Language API

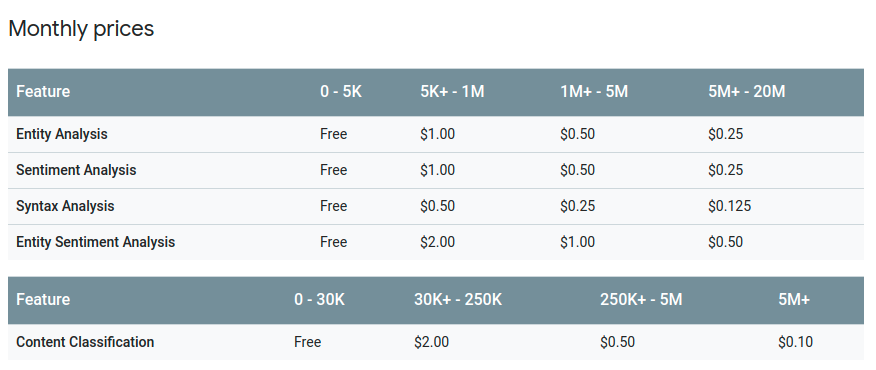

Google charges its users on a per-request basis for all services of the Natural Language API. This has the advantage that there are no fixed costs for any deployment servers. The disadvantage is that it can become pricey for very large datasets.

This table shows the prices (per 1,000 requests) depending on the number of monthly requests:

Each block of 1,000 characters counts as a separate request, so a document with more than 1,000 characters counts as multiple requests. For example, if you want to analyze the sentiment of 10,000 documents with 1,500 characters each, you would be charged for 20,000 requests. As the first 5,000 are free, the total cost would amount to $15. Analyzing one million documents of the same size would cost $1,995.
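The arithmetic behind these numbers fits in a few lines. In the sketch below, the $1 per 1,000 requests rate is inferred from the $15 example (15,000 paid requests) rather than read from the pricing table, and it is applied as a flat rate. If the table offers cheaper rates at higher monthly volumes, the one-million-document figure is an upper bound. The function names are hypothetical.

```python
import math


def billable_units(num_documents, chars_per_document, chars_per_unit=1_000):
    """Each started block of 1,000 characters counts as one request."""
    return num_documents * math.ceil(chars_per_document / chars_per_unit)


def natural_language_api_cost(num_documents, chars_per_document,
                              free_units=5_000, price_per_1000_units=1.0):
    """Flat-rate cost estimate in USD; volume discounts are not modelled."""
    units = billable_units(num_documents, chars_per_document)
    paid_units = max(units - free_units, 0)
    return paid_units / 1_000 * price_per_1000_units


print(natural_language_api_cost(10_000, 1_500))     # 15.0
print(natural_language_api_cost(1_000_000, 1_500))  # 1995.0
```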

Convenient, but Inflexible

The Google Natural Language API is a very convenient option for quick, out-of-the-box solutions. Very little technical knowledge and no understanding of the underlying machine learning models is required.

The main disadvantage is its inflexibility and the lack of access to the models. The models cannot be tuned to a specific task or dataset.

In a real-world environment, most tasks will probably require a more tailored solution than the standardized Natural Language API functions can provide.

For this scenario, Google AutoML Natural Language is more suitable.

Google AutoML Natural Language

If the Natural Language API is not flexible enough for your business purposes, then AutoML Natural Language might be the right service. AutoML is a new Google Cloud Service (still in beta) that enables the user to create customized machine learning models. In contrast to the Natural Language API, the AutoML models will be trained on the user’s data and therefore fit a specific task.

Custom machine learning models for classifying content are useful when the predefined categories that are available from the Natural Language API are too generic or not applicable to your specific use case or knowledge domain.

The AutoML service requires a bit more effort from the user, mainly because you have to provide a dataset to train the model. However, the training and evaluation of the models is completely automated, and no machine learning knowledge is required. The whole process can be done in the Google Cloud console without writing any code. Of course, if you want to automate these steps, there is support for all common programming languages.

What Can Be Done With Google AutoML Natural Language?

The AutoML service covers three use cases. For now, all of them support only English.

1. AutoML Text Classification

While the text classifier of the Natural Language API is pre-trained and therefore has a fixed set of text categories, the AutoML text classification builds customized machine learning models, with the categories that you provide in your training dataset.

2. AutoML Sentiment Analysis

As we have seen, the sentiment analysis of the Natural Language API works great in general use cases like movie reviews. Because the sentiment model is trained on a very general corpus, the performance can deteriorate for documents that use a lot of domain-specific language. In these situations, the AutoML Sentiment Analysis allows you to train a sentiment model that is customized to your domain.

3. AutoML Entity Extraction

In many business contexts, there are domain-specific entities (e.g., in legal contracts or medical documents) that the Natural Language API will not be able to identify. If you have a dataset where the entities are marked, you can train a customized entity extraction model with AutoML. If the dataset is sufficiently big, the trained entity extraction model will also be able to detect previously unseen entities.
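Marking entities usually means recording the character offsets of every occurrence. The sketch below derives start and end offsets from a plain text and a list of (entity text, label) pairs. The function name, the output field names, and the contract-style labels are hypothetical; the exact import schema AutoML expects should be taken from the AutoML documentation.

```python
import json


def annotate(text, entities):
    """Return every occurrence of each entity as a character span.

    `entities` is a list of (surface_text, label) pairs. Offsets are
    0-based and `end` is exclusive, so text[start:end] == surface_text.
    """
    annotations = []
    for surface, label in entities:
        start = text.find(surface)
        while start != -1:
            end = start + len(surface)
            annotations.append({"label": label, "start": start, "end": end})
            start = text.find(surface, end)
    return sorted(annotations, key=lambda a: a["start"])


text = "The lessee shall pay the lessor a deposit of EUR 2,000."
spans = annotate(text, [("lessee", "PARTY"), ("lessor", "PARTY"),
                        ("EUR 2,000", "AMOUNT")])
print(json.dumps({"text": text, "annotations": spans}, indent=2))
```

Plain substring matching is deliberately naive: it would also match “lessee” inside “lessees” and does not handle overlapping entities, so real annotation pipelines usually work from token boundaries or a labeling tool.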

How to Use AutoML Natural Language

Using any of the three AutoML services is a four-step process that is very similar for all three:

1. Dataset Preparation: The dataset has to be in a specific format (CSV or JSON) and needs to be stored in a storage bucket. For classification and sentiment models, the dataset contains just two columns, the text and the label. For the entity extraction model, the dataset needs the text and the locations of all entities in the text.

2. Model Training: The model training is completely automatic. Unless instructed otherwise, AutoML automatically splits the dataset into training, validation, and test sets. The user can also decide this split, but that is the only way to influence the model training. The rest of the training is completely automated in a black-box fashion.

3. Evaluation: When the training is finished, AutoML displays precision and recall scores as well as a confusion matrix. Unfortunately, there is absolutely no information about the model itself, which makes it difficult to identify the reasons for poorly performing models.

4. Prediction: Once you are happy with the model's performance, the model can be conveniently deployed with a couple of clicks. The deployment process takes only a few minutes.
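For classification and sentiment models, dataset preparation amounts to writing a CSV file of text and label pairs. The sketch below does this with Python's `csv` module and can optionally prepend a TRAIN, VALIDATION, or TEST column to fix the split yourself. The function name, the 80/10/10 proportions, and the position of the split column are assumptions for illustration; check the AutoML documentation for the exact import format before uploading the file to a storage bucket.

```python
import csv
import io
import random


def to_automl_csv(rows, assign_split=False, seed=0):
    """Serialize (text, label) pairs as CSV text.

    If assign_split is True, a first column with TRAIN, VALIDATION or
    TEST is added, using an assumed ratio of roughly 80/10/10.
    """
    rng = random.Random(seed)    # fixed seed -> reproducible split
    buffer = io.StringIO()
    writer = csv.writer(buffer)  # quotes fields that contain commas
    for text, label in rows:
        if assign_split:
            r = rng.random()
            split = "TRAIN" if r < 0.8 else "VALIDATION" if r < 0.9 else "TEST"
            writer.writerow([split, text, label])
        else:
            writer.writerow([text, label])
    return buffer.getvalue()


rows = [
    ("The delivery arrived two days late.", "complaint"),
    ("Great service, thanks a lot!", "praise"),
    ("Can I change my billing address?", "question"),
]
print(to_automl_csv(rows))
print(to_automl_csv(rows, assign_split=True))
```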

AutoML Model Performance

The training process is quite slow, probably because the underlying models are very big. I trained a model on a small test classification task with 15,000 samples and 10 categories, and the training took several hours. A real-world example with a much bigger dataset took me several days.

While Google didn’t publish any details about the models used, my guess is that Google’s BERT model is used with small adaptations for each task. Fine-tuning big models like BERT is a computationally expensive process, especially when a lot of cross-validation is performed.

I tested the AutoML classification model in a real-world example against a model that I developed myself, which was based on BERT. Surprisingly, the AutoML model performed significantly worse than my own model when trained on the same data. AutoML achieved an accuracy of 84%, while my own model achieved 89%.

That means while using AutoML might be very convenient, for performance critical tasks it makes sense to invest the time and develop the model yourself.

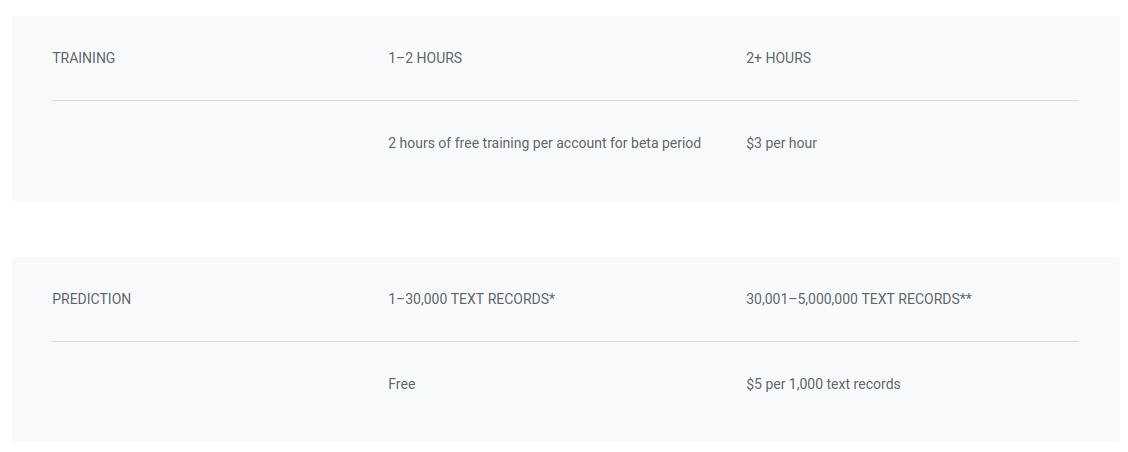

AutoML Pricing

At $5 per 1,000 text records, AutoML predictions are significantly more expensive than the Natural Language API. Additionally, AutoML charges $3 per hour for model training. While this is negligible in the beginning, it can add up to a substantial amount for use cases that require frequent retraining, especially because the training seems to be quite slow.

Let’s use the same example as for the Natural Language API:

You want to analyze the sentiment of 10,000 documents, which have 1,500 characters each, so you would be charged 20,000 requests. Let’s say training the model takes 20 hours, which costs $48. Prediction wouldn’t cost you anything, as the first 30,000 requests are free. For small datasets like this, AutoML is very economical.

However, if your dataset is bigger and you need to predict the sentiment of one million documents of the same size, it would cost $9,850, which is quite expensive. For large datasets like this, it makes sense to develop your own model and deploy it yourself without using AutoML.
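The prediction costs above follow from the quoted rates: $5 per 1,000 text records after the first 30,000 free records, counting each started block of 1,000 characters as one record. The sketch below makes that explicit. The training term is a plain hours-times-rate product ($3 per hour) and is left at zero in the usage lines, so it ignores any free training allowance Google may offer. The function name is hypothetical.

```python
import math


def automl_cost(num_documents, chars_per_document, training_hours=0.0,
                price_per_1000_records=5.0, free_records=30_000,
                price_per_training_hour=3.0):
    """Rough AutoML Natural Language cost estimate in USD.

    Each started block of 1,000 characters counts as one text record.
    """
    records = num_documents * math.ceil(chars_per_document / 1_000)
    paid_records = max(records - free_records, 0)
    prediction = paid_records / 1_000 * price_per_1000_records
    training = training_hours * price_per_training_hour
    return prediction + training


print(automl_cost(10_000, 1_500))     # 0.0 (20,000 records are within the free tier)
print(automl_cost(1_000_000, 1_500))  # 9850.0
```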

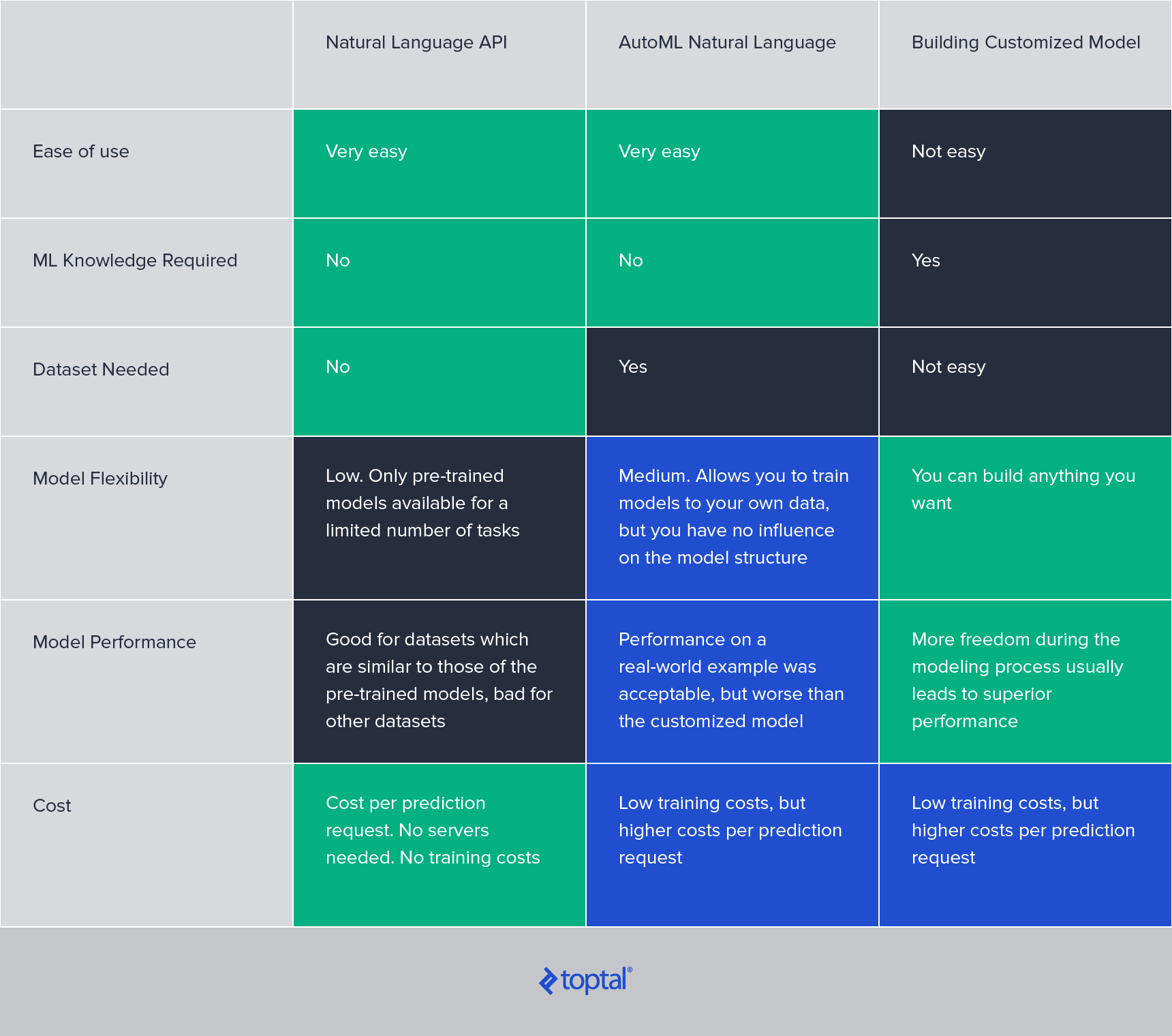

Google Natural Language API vs. AutoML Natural Language

Google AutoML Natural Language is much more powerful than the Natural Language API because it allows the user to train models that are customized for their specific dataset and domain.

It is just as easy to use and doesn’t require machine learning knowledge. The two downsides are the higher costs and the need to provide a high-quality dataset to train models that perform well.

The AutoML beta supports only three NLP tasks for now (classification, sentiment analysis, entity extraction) and supports only English language documents. When this service is released fully, I expect other languages and NLP tasks to be added over time.

Comparison of Natural Language Processors


Understanding the basics

How does sentiment analysis work?

Sentiment analysis is the process of computationally identifying opinions in a text. A machine learning model is trained on a set of texts with known sentiment and learns which expressions correlate with the writer’s attitude toward the topic. The model can then detect the sentiment in unseen texts.
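To illustrate this idea (not Google's model, whose structure is not published), here is a tiny multinomial Naive Bayes sentiment classifier in plain Python. It learns word counts per label from a handful of hypothetical, hand-written training sentences and uses add-one smoothing to score unseen text.

```python
import math
from collections import Counter


def tokenize(text):
    return text.lower().replace(".", " ").replace("!", " ").split()


class NaiveBayesSentiment:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.labels = sorted(set(labels))
        self.doc_counts = Counter(labels)
        self.word_counts = {label: Counter() for label in self.labels}
        for text, label in zip(texts, labels):
            self.word_counts[label].update(tokenize(text))
        self.vocab = set().union(*self.word_counts.values())
        return self

    def log_scores(self, text):
        total_docs = sum(self.doc_counts.values())
        scores = {}
        for label in self.labels:
            counts = self.word_counts[label]
            denominator = sum(counts.values()) + len(self.vocab)
            score = math.log(self.doc_counts[label] / total_docs)  # log prior
            for word in tokenize(text):
                if word in self.vocab:  # ignore words never seen in training
                    score += math.log((counts[word] + 1) / denominator)
            scores[label] = score
        return scores

    def predict(self, text):
        scores = self.log_scores(text)
        return max(scores, key=scores.get)


train_texts = [
    "a great and moving film",
    "wonderful acting and a great story",
    "a boring and predictable plot",
    "terrible acting and a boring story",
]
train_labels = ["positive", "positive", "negative", "negative"]
model = NaiveBayesSentiment().fit(train_texts, train_labels)
print(model.predict("great story"))    # positive
print(model.predict("boring acting"))  # negative
```

Words such as “great” and “boring” end up strongly associated with one label, which is exactly the correlation between expressions and attitude described above, just on a toy scale.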

What is text classification in NLP?

Text classification is the process of automatically categorizing text documents into a limited number of groups. Text classifiers are machine learning models trained to detect similarities between texts. Their features are usually based on the vocabulary and word order of the input text documents.
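Features based on vocabulary and word order are often simple counts of single words (unigrams) and adjacent word pairs (bigrams). The minimal sketch below, with a deliberately naive whitespace tokenizer and hypothetical function names, shows why bigrams matter: two sentences with identical vocabulary get different feature vectors once word order is taken into account.

```python
from collections import Counter


def ngram_features(text, max_n=2):
    """Count word n-grams up to length max_n (1 = words, 2 = word pairs)."""
    tokens = text.lower().split()
    features = Counter()
    for n in range(1, max_n + 1):
        for i in range(len(tokens) - n + 1):
            features[" ".join(tokens[i:i + n])] += 1
    return features


def unigram_counts(features):
    """Keep only single-word features."""
    return {key: count for key, count in features.items() if " " not in key}


a = ngram_features("the dog bites the man")
b = ngram_features("the man bites the dog")
print(a == b)                                  # False: different bigrams
print(unigram_counts(a) == unigram_counts(b))  # True: same vocabulary counts
```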

What is an NLP API?

An NLP API is an interface to an existing natural language processing model. The API is used to send a text document to the model and receive the model output as a return. Google offers an NLP API to several models for different tasks like sentiment analysis and text classification.
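Under the hood, Google's client libraries wrap a REST interface. As a sketch, the snippet below only builds the JSON body for the v1 documents:analyzeSentiment method; actually sending it with credentials (for example via an HTTP library) is left out, so it runs without a Google Cloud account. The helper name is hypothetical.

```python
import json

ENDPOINT = "https://language.googleapis.com/v1/documents:analyzeSentiment"


def sentiment_request_body(text, language=None):
    """Build the JSON body for a documents:analyzeSentiment request."""
    document = {"type": "PLAIN_TEXT", "content": text}
    if language:
        document["language"] = language  # optional; otherwise auto-detected
    return json.dumps({"document": document, "encodingType": "UTF8"})


print("POST", ENDPOINT)
print(sentiment_request_body("This blog post is good."))
```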

What does the cloud Natural Language API do?

The cloud Natural Language API is a Google service that offers an interface to several NLP models which have been trained on large text corpora. The API can be used for entity analysis, syntax analysis, text classification, and sentiment analysis.

Maximilian Hopf

London, United Kingdom

Member since June 4, 2019
