Machine Learning Video Analysis: Identifying Fish

Machine learning, combined with some standard image processing techniques, can result in powerful video analysis tools.

In this article, Toptal Freelance Software Engineer Michael Karchevsky walks through a solution for a machine learning competition that identifies the species and lengths of any fish present in a given video segment.

Michael is an experienced Python, OpenCV, and C++ developer. He’s particularly interested in machine learning and computer vision.

Competitions are a great way to level up machine learning skills. Not only do you get access to quality datasets, you are also given clear goals. This helps you focus on the important part: designing quality solutions for the problem at hand.

A friend and I recently took part in the N+1 fish, N+2 fish competition. This machine learning competition, which involves a lot of image processing, requires you to process video clips of fish being identified, measured, and either kept or thrown back into the sea.

In this article, I will walk you through how we approached the competition's problem using standard image processing techniques and pre-trained neural network models. Submissions were scored with an aggregated performance metric defined by the organizers, and our solution secured 11th place.

For a brief introduction to machine learning, you can refer to this article.

About the Competition

We were provided with video segments, each showing one or more fish. The videos were captured on different boats fishing for groundfish in the Gulf of Maine.

The videos were collected from fixed-position cameras placed to look down on a ruler. A fish is placed on the ruler, the fisherman removes their hands from the ruler, and then the fisherman either discards or keeps the fish based on the species and size.

Performance Metric

There were three tasks in this project. The ultimate goal was to create an algorithm that automatically generates annotations for the video files, consisting of:

- The sequence of fish that appear

- The species of each fish that appears in the video

- The length of each fish that appears in the video

The organizers created an aggregated metric that gave a general sense of performance on all of these tasks: a simple weighted combination of the individual metrics for each task. Although the tasks were weighted, the organizers recommended focusing on a well-rounded algorithm that contributed to all of them!

The official competition web page explains how the overall performance metric is calculated from the metrics of the individual tasks.
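Schematically, the aggregated score had the form below. Each $M$ is the metric for one task, and each $w$ is that task's weight. The actual task metrics and weight values are defined on the competition page and are not reproduced here. The symbol names are our own.

```latex
S = w_{\mathrm{seq}} \, M_{\mathrm{seq}} + w_{\mathrm{species}} \, M_{\mathrm{species}} + w_{\mathrm{length}} \, M_{\mathrm{length}}
```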

Designing a Machine Learning Solution

Machine learning projects that deal with images or video will most likely use convolutional neural networks. Before we could use them, however, we had to preprocess the frames and solve several other subtasks with different strategies.

For training, we used a single NVIDIA GeForce GTX 1080 Ti GPU. We lost a good chunk of our time optimizing our code to stay competitive. As a result, we spent less time where it would have mattered more.

Stage 0: Finding Out the Number of Unique Boats

With silhouette analysis, finding the number of boats was fairly straightforward. The steps relied on very standard techniques:

- Get some random frames from each video.

- Calculate statistics and Speeded Up Robust Features (SURF) for each image.

- Cluster the images with K-means and use silhouette analysis to find the number of boats.

SURF detects points of interest in an image and computes a descriptor for each of them. These descriptors are robust to common image transformations such as scaling and rotation.

Once the features of each image are known, K-means clustering is run for several candidate cluster counts. Silhouette analysis then picks the count that best separates the images, and that count approximates the number of boats.
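The sketch below illustrates the clustering step without any dependencies: it chooses the number of clusters with silhouette analysis. It assumes each sampled frame has already been reduced to a fixed-length feature vector, for example color statistics concatenated with pooled SURF descriptors. The function names and the farthest-point initialization are hypothetical choices for illustration and are not taken from the original solution. In practice, scikit-learn's `KMeans` and `silhouette_score` do the same job much faster.

```python
import math


def kmeans(points, k, iters=100):
    """Lloyd's K-means with deterministic farthest-point initialization.

    points: list of equal-length tuples (hypothetical per-frame feature vectors).
    Returns a cluster label for every point.
    """
    # Start from the first point, then repeatedly add the point that is
    # farthest from all centers chosen so far.
    centers = [points[0]]
    while len(centers) < k:
        centers.append(max(points, key=lambda p: min(math.dist(p, c) for c in centers)))

    labels = []
    for _ in range(iters):
        # Assignment step: attach every point to its nearest center.
        labels = [min(range(k), key=lambda c: math.dist(p, centers[c])) for p in points]
        # Update step: move each center to the mean of its members.
        new_centers = []
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:
                new_centers.append(tuple(sum(dim) / len(members) for dim in zip(*members)))
            else:
                new_centers.append(centers[c])  # keep an empty cluster's old center
        if new_centers == centers:
            break
        centers = new_centers
    return labels


def mean_dist(p, others):
    return sum(math.dist(p, q) for q in others) / len(others)


def silhouette_score(points, labels):
    """Mean silhouette coefficient s(i) = (b - a) / max(a, b) over all points."""
    clusters = {}
    for p, lab in zip(points, labels):
        clusters.setdefault(lab, []).append(p)
    if len(clusters) < 2:
        return -1.0  # undefined for a single cluster; treat it as the worst score
    total = 0.0
    for i, (p, lab) in enumerate(zip(points, labels)):
        same = [q for j, (q, other_lab) in enumerate(zip(points, labels))
                if other_lab == lab and j != i]
        if not same:
            continue  # singleton cluster: s(i) = 0 by convention
        a = mean_dist(p, same)  # cohesion: mean distance inside its own cluster
        # separation: mean distance to the nearest other cluster
        b = min(mean_dist(p, members) for other, members in clusters.items() if other != lab)
        if max(a, b) > 0:
            total += (b - a) / max(a, b)
    return total / len(points)


def estimate_num_boats(features, k_values=range(2, 7)):
    """Pick the cluster count whose K-means labeling has the highest silhouette."""
    return max(k_values, key=lambda k: silhouette_score(features, kmeans(features, k)))
```

Silhouette analysis scores each candidate count by comparing how tight the clusters are with how far apart they are. Well-separated groups of frames, such as frames from different boats, therefore score highest at the right count.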

Stage 1: Identifying Repeated Frames

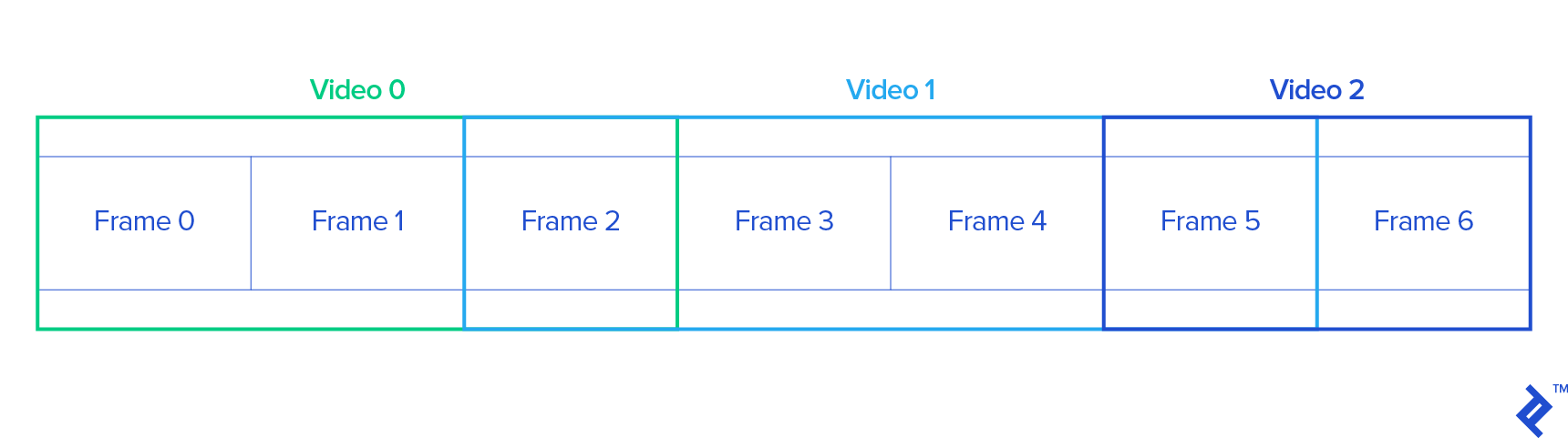

Although the dataset contained separate video files, some videos seemed to overlap with others. This is possibly because the videos were cut from one long recording, which left a few frames shared at the start or end of adjacent clips.

To identify such frames and remove them as necessary, we used some quick hash functions on the frames.

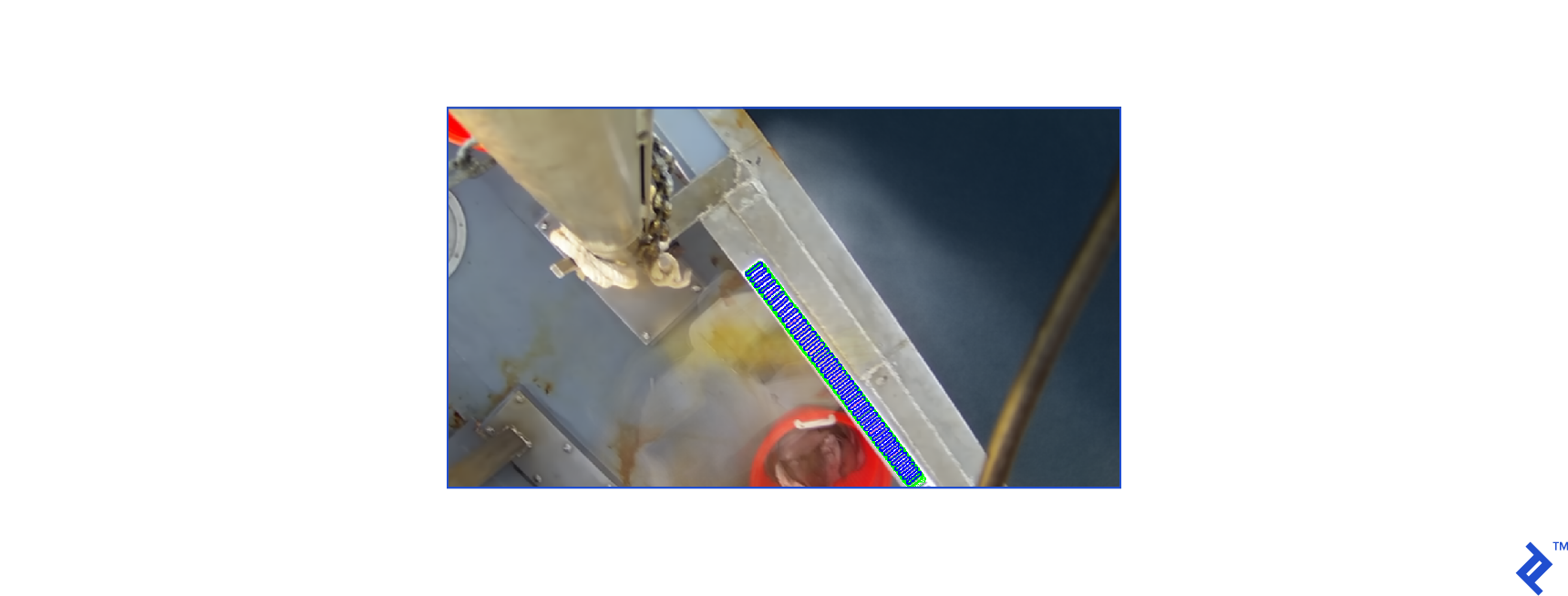
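The exact hash functions aren't named above. As one illustrative possibility, and an assumption rather than a record of what was used, the sketch below uses a simple perceptual "average hash": each frame is shrunk to an 8x8 grid, and each cell is marked as brighter or darker than the frame's mean. Frames are taken to be grayscale 2D lists, and all function names are hypothetical.

```python
def average_hash(gray, size=8):
    """Perceptual 'average hash' of a grayscale frame.

    gray: 2D list of intensities (height x width), both at least `size`.
    Returns an integer with size * size bits.
    """
    h, w = len(gray), len(gray[0])
    cells = []
    # Shrink the frame to size x size by averaging rectangular blocks.
    for r in range(size):
        rows = range(r * h // size, (r + 1) * h // size)
        for c in range(size):
            cols = range(c * w // size, (c + 1) * w // size)
            block = [gray[y][x] for y in rows for x in cols]
            cells.append(sum(block) / len(block))
    mean = sum(cells) / len(cells)
    # One bit per cell: 1 if the cell is brighter than the frame's mean.
    bits = 0
    for value in cells:
        bits = (bits << 1) | int(value > mean)
    return bits


def hamming(a, b):
    """Number of differing bits between two hashes."""
    return bin(a ^ b).count("1")


def find_repeated_frames(video_a, video_b, max_distance=0):
    """Return (i, j) pairs where frame i of video_a matches frame j of video_b."""
    hashes_b = [average_hash(frame) for frame in video_b]
    matches = []
    for i, frame in enumerate(video_a):
        ha = average_hash(frame)
        matches.extend((i, j) for j, hb in enumerate(hashes_b) if hamming(ha, hb) <= max_distance)
    return matches
```

Raising `max_distance` tolerates small compression differences between two copies of the same frame. Because the overlaps described above sit at the start or end of clips, comparing only the boundary frames of neighboring videos would likely be enough.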

Stage 2: Locating the Ruler

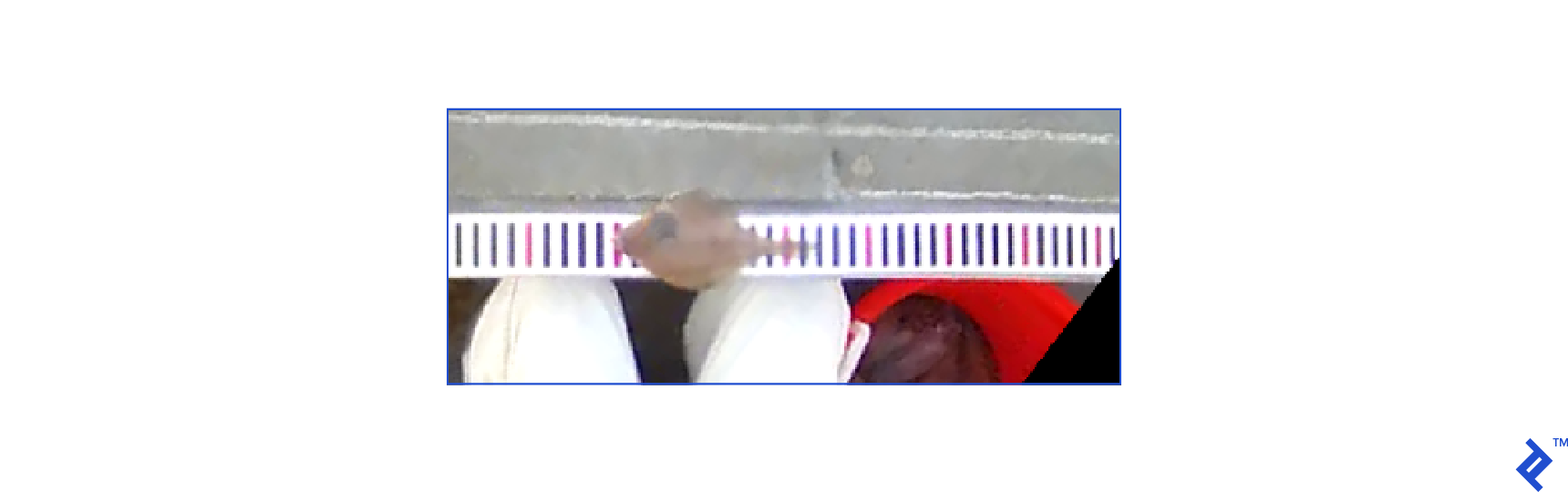

By applying some standard image processing methods, we located the ruler and determined its orientation. We then rotated and cropped each frame so the ruler was positioned consistently across all frames. This also allowed us to reduce the frame size tenfold.
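The specific image processing methods aren't listed above. One standard option, assumed here, works once the ruler pixels have been segmented into a binary mask, for example by thresholding the mean frame. The ruler's orientation can then be estimated from second-order image moments, and any pixel can be mapped into ruler-aligned coordinates. The helper names are hypothetical. With OpenCV, the same rotation would typically be applied to whole frames using `cv2.getRotationMatrix2D` and `cv2.warpAffine`.

```python
import math


def ruler_pose(mask):
    """Estimate the centroid and orientation of the ruler in a binary mask.

    mask: 2D list of 0/1 values where 1 marks a ruler pixel (assumed to come
    from an earlier segmentation step). Returns (cx, cy, angle_degrees), with the
    angle measured from the image x-axis; image y grows downward.
    """
    pts = [(x, y) for y, row in enumerate(mask) for x, v in enumerate(row) if v]
    n = len(pts)
    cx = sum(x for x, _ in pts) / n
    cy = sum(y for _, y in pts) / n
    # Central second-order moments of the ruler pixels.
    mu20 = sum((x - cx) ** 2 for x, _ in pts) / n
    mu02 = sum((y - cy) ** 2 for _, y in pts) / n
    mu11 = sum((x - cx) * (y - cy) for x, y in pts) / n
    # Orientation of the major axis, i.e. the ruler's long side.
    angle = 0.5 * math.atan2(2 * mu11, mu20 - mu02)
    return cx, cy, math.degrees(angle)


def to_ruler_coords(x, y, pose):
    """Map an image point into coordinates aligned with the ruler's long axis."""
    cx, cy, angle_deg = pose
    t = math.radians(angle_deg)
    dx, dy = x - cx, y - cy
    # Rotate by -angle around the centroid so the ruler lies along the new x-axis.
    return dx * math.cos(t) + dy * math.sin(t), -dx * math.sin(t) + dy * math.cos(t)
```

After this mapping, cropping reduces to keeping a fixed band around the new x-axis. That band gives every frame the same ruler-centered layout.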

Detected ruler (plotted on the mean frame):

Cropped and rotated area:

Stage 3: Determining the Sequence of the Fish

Determining the sequence of the fish took most of my time during this competition. Training new convolutional neural networks from scratch seemed too expensive, so we decided to use pre-trained neural networks.

For this, we chose the following neural networks, all pre-trained on the ImageNet dataset:

- VGG16

- VGG19

- ResNet50

- Xception

- InceptionV3

We kept only the convolutional layers of each model and passed the competition dataset through them. The output was a fairly compact array of features.

Next, for each pretrained model, we trained a network made only of fully connected (dense) layers on these features and used it to predict results. We then averaged the predictions, but the results turned out quite poor.

To get better predictions, we added long short-term memory (LSTM) neural networks alongside the dense ones. Their input was a sequence of five frames, each transformed by the pretrained models.
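Below is a minimal sketch of how per-frame features could be grouped into five-frame sequences for the LSTM. Two details are assumptions, since the text only says the input was a sequence of five frames: each window is centered on its frame, and clip edges are padded by repeating the first or last frame. `frame_windows` is a hypothetical name.

```python
def frame_windows(features, length=5):
    """Build one window of `length` feature vectors for every frame.

    Assumptions (not from the original write-up): windows are centered on each
    frame, and clip edges are padded by repeating the first/last frame.
    """
    if length % 2 == 0:
        raise ValueError("length must be odd so each window has a center frame")
    half = length // 2
    last = len(features) - 1
    windows = []
    for i in range(len(features)):
        # Clamp indices at the clip boundaries so edge frames are repeated.
        idx = [min(max(j, 0), last) for j in range(i - half, i + half + 1)]
        windows.append([features[j] for j in idx])
    return windows
```

Stacked into an array, the windows have the shape (frames, 5, feature_dim). That matches the (batch, timesteps, features) layout Keras `LSTM` layers expect.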

To merge the output of all the models, we used the geometric mean.

The fish detection pipeline was:

- Generate features with pretrained models.

- Predict the probability of fish appearance on a dense neural network.

- Build five-frame sequences of pretrained-model features as LSTM input.

- Predict the probability of fish appearance on an LSTM neural network.

- Merge models using the geometric mean.
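The merging step can be sketched in a few lines. The sketch assumes each model produces one fish probability per frame. The function name and the `eps` clamp are illustrative choices.

```python
import math


def merge_geometric(prob_lists, eps=1e-7):
    """Merge per-frame fish probabilities from several models with the geometric mean.

    prob_lists: one list per model, each holding a probability for every frame.
    eps clamps zeros so that log() stays defined.
    """
    n_models = len(prob_lists)
    merged = []
    for frame_probs in zip(*prob_lists):
        # Averaging logs and exponentiating computes (p1 * ... * pn) ** (1 / n)
        # without underflow when many small probabilities are multiplied.
        log_mean = sum(math.log(max(p, eps)) for p in frame_probs) / n_models
        merged.append(math.exp(log_mean))
    return merged
```

A single model's low probability pulls the geometric mean down much more strongly than it pulls the arithmetic mean. As a result, a frame receives a high fish score only when the models broadly agree.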

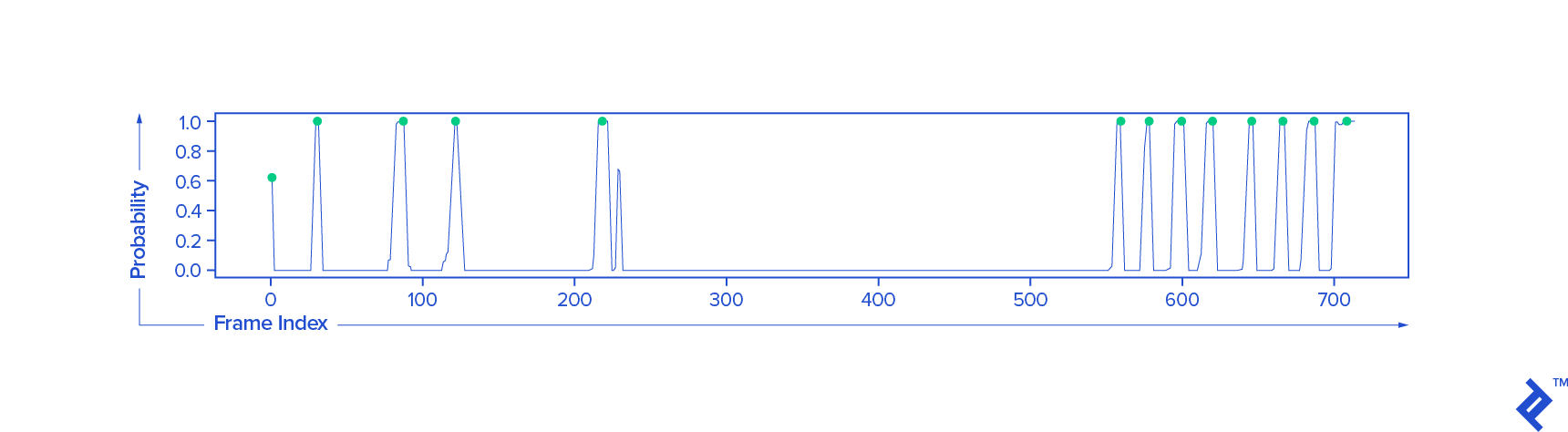

The result for one video looks something like this:

Stage 4: Identifying the Species of the Fish

Because we had spent most of the contest on the previous stage, we tried to make up for lost time by reusing the models from that stage to identify the species of the fish.

Our approach for that was roughly as follows:

- Add dense layers on top of the convolutional layers of the pretrained VGG16, VGG19, ResNet50, Xception, and InceptionV3 models (the convolutional weights were frozen).

- Train the models with light image augmentation.

- Predict species with each model.

- Consolidate the models' predictions by voting.
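One way to sketch the voting step is a plain majority vote over each model's top prediction. The tie-breaking rule, which uses summed probabilities, is a hypothetical choice, as are the species labels in the test. The text only says the models were consolidated by voting.

```python
from collections import Counter


def vote_species(model_probs):
    """Majority vote over models' top-1 species.

    model_probs: list of dicts mapping species label -> probability, one per model.
    Ties (an assumption) are broken by the total probability across all models.
    """
    # Each model votes for its most probable species.
    votes = Counter(max(probs, key=probs.get) for probs in model_probs)
    # Sum every species' probability over all models for tie-breaking.
    totals = Counter()
    for probs in model_probs:
        totals.update(probs)
    return max(votes, key=lambda species: (votes[species], totals[species]))
```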

Stage 5: Detecting the Length of the Fish

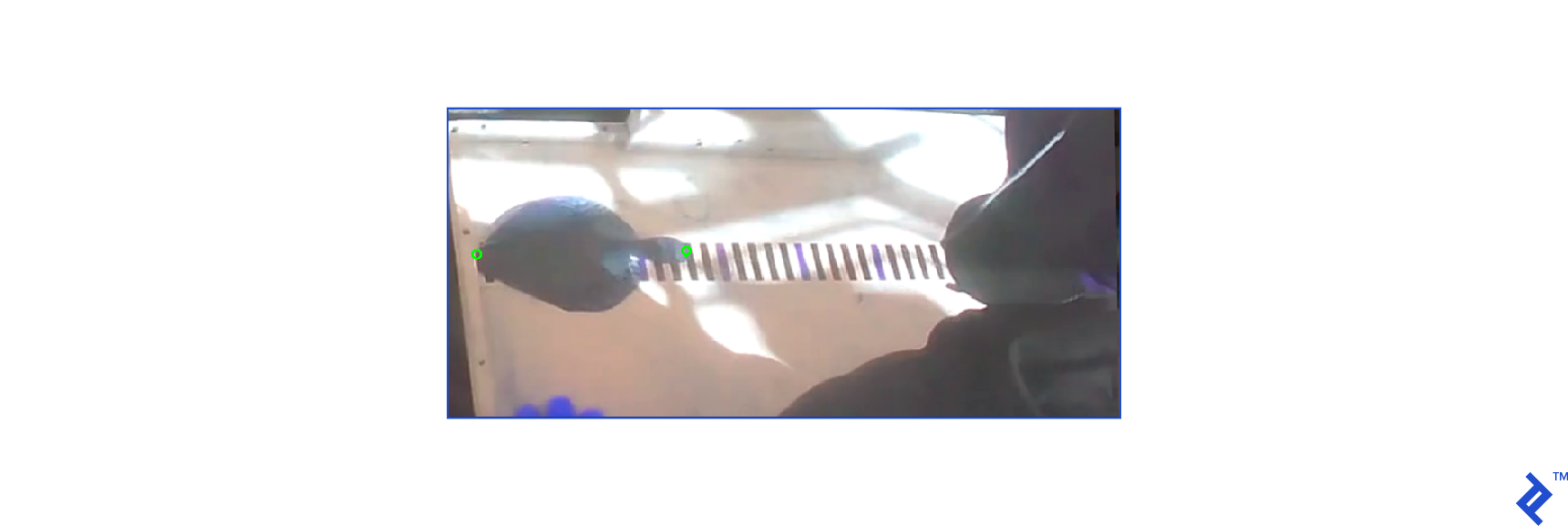

To determine the length of each fish, we used two neural networks: one trained to identify fish heads and the other trained to identify fish tails. Each fish's length was approximated as the distance between the two points the networks identified.

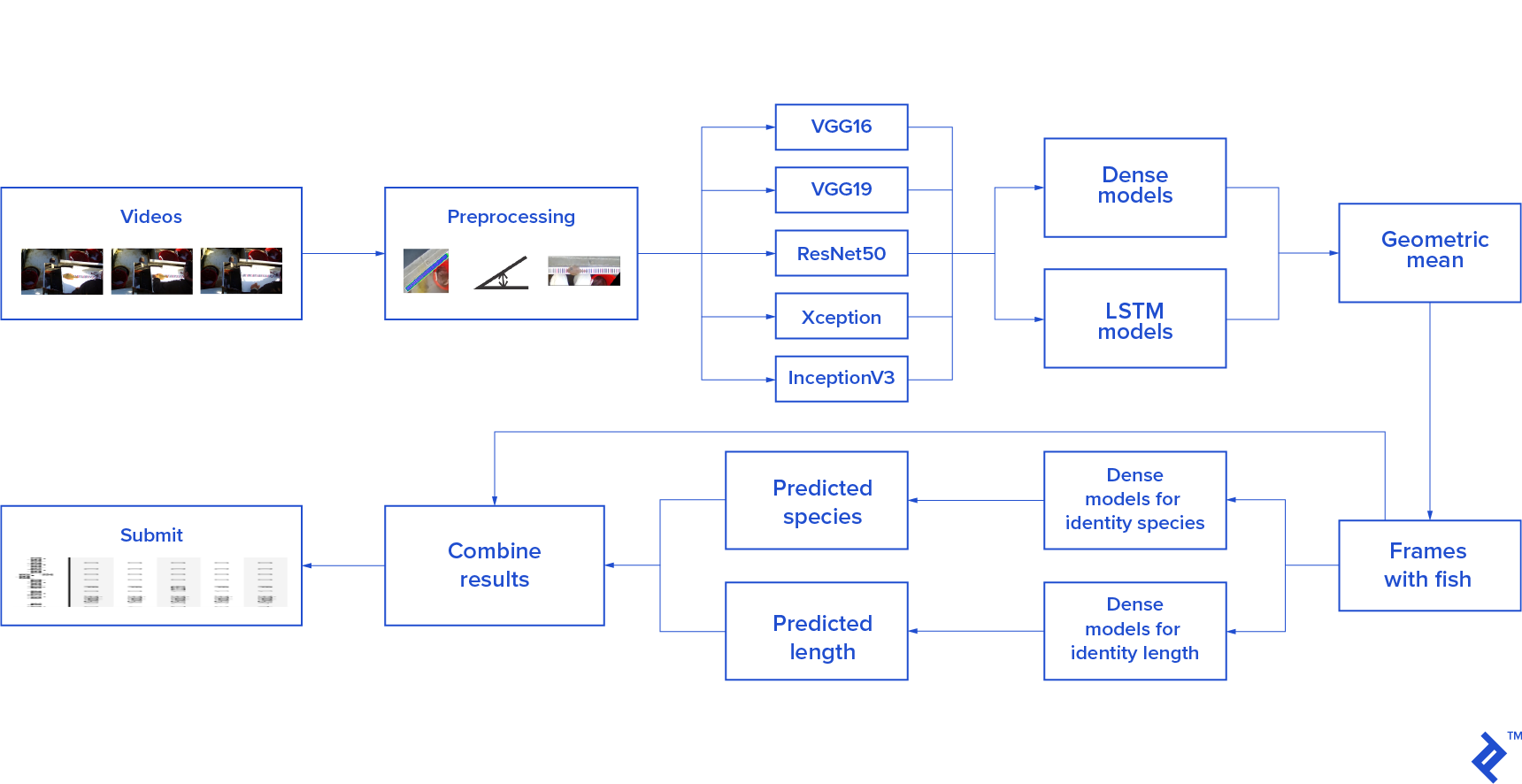
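Once the head and tail points are known, the length estimate is the Euclidean distance between them. The sketch below adds two hypothetical details that the text does not specify. First, each network outputs a 2D heatmap whose highest-scoring cell marks the keypoint. Second, an optional `units_per_pixel` scale, for example one derived from the ruler, can convert pixels to physical units.

```python
import math


def keypoint_from_heatmap(heatmap):
    """Return (x, y) of the highest-scoring cell in a 2D heatmap (list of rows)."""
    _, x, y = max(
        (score, col, row_idx)
        for row_idx, row in enumerate(heatmap)
        for col, score in enumerate(row)
    )
    return x, y


def fish_length(head, tail, units_per_pixel=1.0):
    """Distance between head and tail points, optionally rescaled from pixels."""
    return math.dist(head, tail) * units_per_pixel
```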

Complete Scheme

Here is what the overall scheme of the stages looked like:

The overall design was fairly simple: video frames were passed through the stages outlined above, and the separate results were then combined.

Understanding the basics

What is silhouette analysis?

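Silhouette analysis measures how similar each data point is to its own cluster compared with the nearest other cluster. The average silhouette score indicates how well separated the clusters are, which makes it useful for choosing the number of clusters.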

What is a machine learning model?

A machine learning model is a product of a machine learning algorithm training on data. The model can later be used to produce relevant output for similar inputs.

Michael Karchevsky

Batumi, Adjara, Georgia

Member since September 12, 2016
