iOS ARKit Tutorial: Drawing in the Air with Bare Fingers

With augmented reality on the rise, more and more libraries and tools are developed to tap into that market. Apple recently released ARKit, which shows great promise through power and simplicity of use.


Osama has 8+ years of experience developing iOS/Mac apps, and leading technical teams. He also co-founded a startup with 10m users worldwide


Recently, Apple announced its new augmented reality (AR) library named ARKit. For many, it looked like just another good AR library, not a technology disruptor to care about. However, if you look at AR progress over the past couple of years, you shouldn’t be too quick to draw such conclusions.

In this post, we will create a fun example project using iOS ARKit. The user will place their fingers on a table as if holding a pen, tap on their thumbnail, and start drawing. Once finished, the user will be able to transform their drawing into a 3D object, as shown in the animation below. The full source code for our iOS ARKit example is available on GitHub.

Why Should We Care about iOS ARKit Now?

Every experienced developer is probably aware that AR is an old concept. We can pin down the first serious development of AR to the time developers got access to individual frames from webcams. Apps at that time were mostly used to transform faces. However, humanity didn’t take long to realize that transforming faces into bunnies wasn’t one of its most pressing needs, and soon the hype faded!

I believe AR has always been missing two key technology leaps to make it useful: usability and immersion. If you trace earlier AR hype cycles, you will notice this. For instance, the AR hype took off again when developers got access to individual frames from mobile cameras. Besides the strong return of the great bunny transformers, we saw a wave of apps that drop 3D objects on printed QR codes. But they never took off as a concept. They were not augmented reality, but rather augmented QR codes.

Then Google surprised us with a piece of science fiction, Google Glass. Two years passed, and by the time this amazing product was expected to come to life, it was already dead! Many critics analyzed the reasons for the failure of Google Glass, blaming anything from social aspects to Google’s dull approach to launching the product. In this article, however, we care about one particular reason: immersion in the environment. While Google Glass solved the usability problem, it was still nothing more than a 2D image plotted in the air.

Tech titans like Microsoft, Facebook, and Apple learned this harsh lesson by heart. In June 2017, Apple announced its beautiful iOS ARKit library, making immersion its top priority. Holding a phone is still a big user experience blocker, but Google Glass’s lesson taught us that hardware is not the issue.

I believe we are heading towards a new AR hype peak very soon, and with this significant pivot, AR could eventually find its home market and AR app development could become mainstream. This also means that every augmented reality app development company out there will be able to tap into Apple’s ecosystem and user base.

But enough history, let us get our hands dirty with code and see Apple augmented reality in action!

ARKit Immersion Features

ARKit provides two main features: the first is tracking the camera’s location in 3D space, and the second is horizontal plane detection. For the former, ARKit treats your phone as a camera moving in real 3D space, so a virtual 3D object dropped at any point stays anchored to that point in the real world. For the latter, ARKit detects horizontal surfaces such as tables so you can place objects on them.

So how does ARKit achieve this? Through a technique called Visual Inertial Odometry (VIO). Don’t worry: just as entrepreneurs take pleasure in the giggles you giggle when you figure out the source of their startup’s name, researchers take pleasure in the head scratching you do while trying to decipher the terms they invent. Let’s allow them their fun and move on.

VIO is a technique that fuses camera frames with motion sensor data to track the location of the device in 3D space. Tracking motion from camera frames is done by detecting features, in other words, high-contrast edge points in the image, such as the edge between a blue vase and a white table. By measuring how far these points move from one frame to the next, one can estimate where the device is in 3D space. That is why ARKit won’t work properly when facing a featureless white wall, or when the device moves so fast that the images blur.
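To make the feature-displacement idea concrete, here is a deliberately simplified sketch in plain Swift. It is not VIO: it assumes the feature points are already matched between frames (index i in one frame is the same feature as index i in the next), it only estimates an average 2D image shift, and it ignores the motion sensors entirely. Feature, averageImageShift, and the minimumMatches threshold are hypothetical names chosen for illustration. It does show why a featureless wall is a problem: with too few matched points there is nothing to estimate from.

```swift
struct Feature {
    var x: Double
    var y: Double
}

// Toy illustration only (hypothetical helper): average displacement of matched
// feature points between two frames. Real VIO also recovers 3D rotation and
// translation and fuses IMU data.
func averageImageShift(previous: [Feature], current: [Feature],
                       minimumMatches: Int = 10) -> (dx: Double, dy: Double)? {
    // Assumes index-aligned matches: previous[i] corresponds to current[i]
    let count = min(previous.count, current.count)
    // Too few features (e.g. a blank white wall) means no reliable estimate
    guard count >= minimumMatches else { return nil }
    var sumX = 0.0
    var sumY = 0.0
    for i in 0..<count {
        sumX += current[i].x - previous[i].x
        sumY += current[i].y - previous[i].y
    }
    return (sumX / Double(count), sumY / Double(count))
}

let before = (0..<12).map { Feature(x: Double($0) * 10, y: Double($0) * 5) }
let after = before.map { Feature(x: $0.x + 4, y: $0.y - 1) }
if let shift = averageImageShift(previous: before, current: after) {
    print(shift.dx, shift.dy)  // 4.0 -1.0
}
```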

Getting Started with ARKit in iOS

At the time of writing, ARKit is part of iOS 11, which is still in beta. So, to get started, you need to install the iOS 11 beta on an iPhone 6s or newer, along with the new Xcode beta. We can start a new ARKit project from New > Project > Augmented Reality App. However, I found it more convenient to start this augmented reality tutorial with the official Apple ARKit sample, which provides a few essential code blocks and is especially helpful for plane detection. So, let us start with this example code, explain its main points first, and then modify it for our project.

First, we should determine which engine we are going to use. ARKit can be used with SpriteKit, SceneKit, or Metal. The Apple ARKit example uses SceneKit, a 3D engine provided by Apple. Next, we need to set up a view that will render our 3D objects. This is done by adding a view of type ARSCNView.

ARSCNView is a subclass of the SceneKit main view named SCNView, but it extends the view with a couple of useful features. It renders the live video feed from the device camera as the scene background, while it automatically matches SceneKit space to the real world, assuming the device is a moving camera in this world.

ARSCNView doesn’t do AR processing on its own; it relies on an AR session object (ARSession) that manages the device camera and motion processing. So, to start off, we assign a new session:

```swift
self.session = ARSession()
sceneView.session = session
sceneView.delegate = self
setupFocusSquare()
```

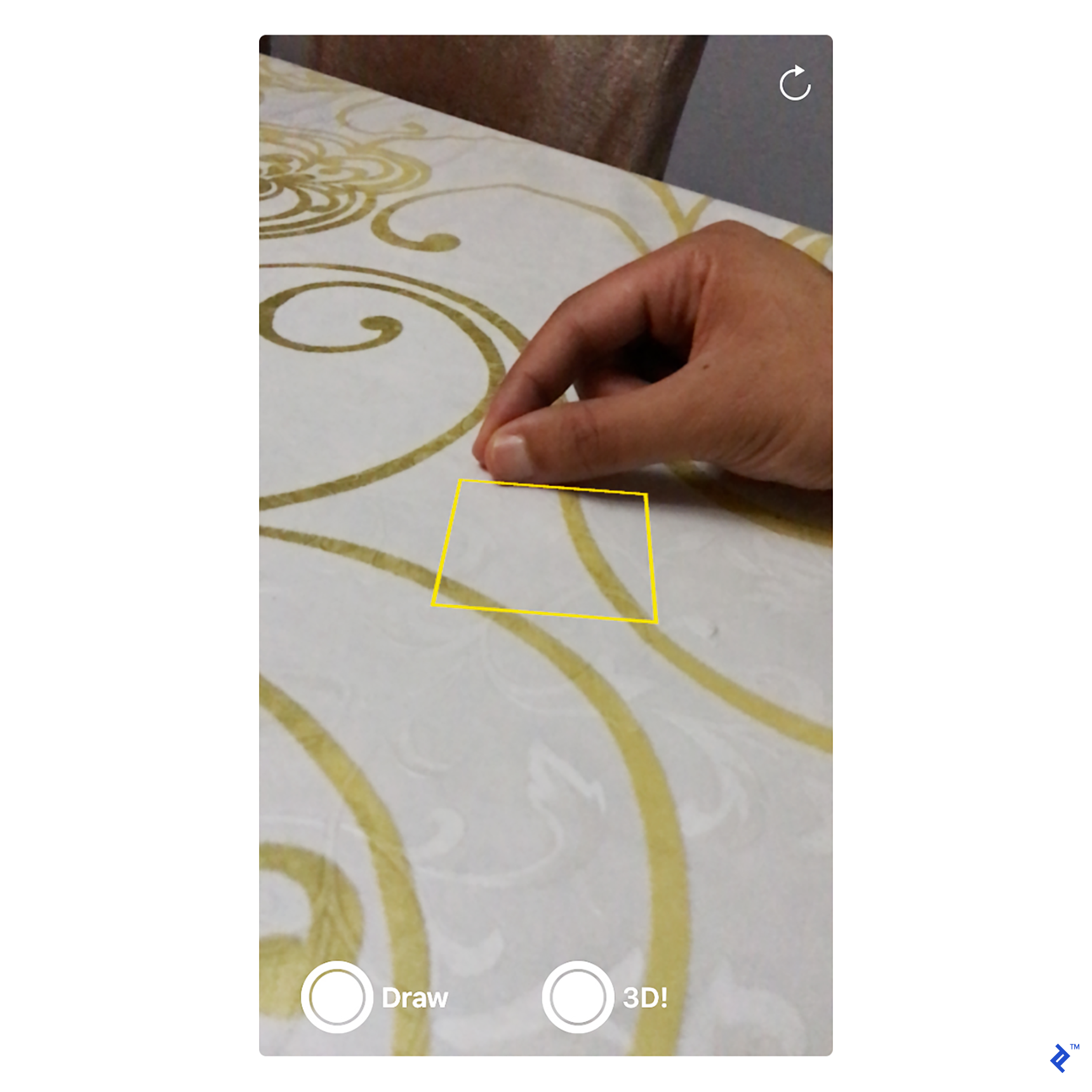

The last line above adds a visual indicator that aids the user visually in describing the status of plane detection. Focus Square is provided by the sample code, not the ARKit library, and it is one of the main reasons we started off with this sample code. You can find more about it in the readme file included in the sample code. The following image shows a focus square projected on a table:

The next step is to start the ARKit session. It makes sense to restart the session every time the view appears because we can make no use of previous session information if we aren’t tracking the user anymore. So, we are going to start the session in viewDidAppear:

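```swift
override func viewDidAppear(_ animated: Bool) {
    super.viewDidAppear(animated)

    // Pre-release iOS 11 SDKs named this class ARWorldTrackingSessionConfiguration
    let configuration = ARWorldTrackingConfiguration()
    configuration.planeDetection = .horizontal
    // Start fresh: forget previous tracking state and any old anchors
    session.run(configuration, options: [.resetTracking, .removeExistingAnchors])
}
```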

In the code above, we start by configuring the ARKit session to detect horizontal planes. At the time of writing, Apple provides no option other than this, though the API hints at detecting more complex objects in the future. Then, we run the session, resetting tracking and removing any existing anchors.

Finally, we need to update the focus square whenever the camera position, i.e., the actual device orientation or position, changes. This can be done in the renderer(_:updateAtTime:) callback of SCNSceneRendererDelegate (which ARSCNViewDelegate inherits), called every time SceneKit is about to render a new frame:

```swift
func renderer(_ renderer: SCNSceneRenderer, updateAtTime time: TimeInterval) {
    updateFocusSquare()
}
```

By this point, if you run the app, you should see the focus square over the camera stream searching for a horizontal plane. In the next section, we will explain how planes are detected, and how we can position the focus square accordingly.

Detecting Planes in ARKit

ARKit can detect new planes, update existing ones, or remove them. To handle planes conveniently, we will create a simple SceneKit node subclass that holds the plane position information and a reference to the focus square. Planes extend along the X and Z axes, and Y is the surface normal. This means we should always keep our drawing nodes at the plane’s Y value if we want them to look as if they were printed on the plane.

Plane detection is reported through callback functions of the ARSCNView delegate. For example, the following callback function is called whenever a new plane is detected:

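```swift
import ARKit
import SceneKit

// Maps each ARKit plane anchor to our own Plane node
var planes = [ARPlaneAnchor: Plane]()

func renderer(_ renderer: SCNSceneRenderer, didAdd node: SCNNode, for anchor: ARAnchor) {
    // Only plane anchors are of interest here
    if let planeAnchor = anchor as? ARPlaneAnchor {
        // serialQueue is a DispatchQueue defined in the sample code
        serialQueue.async {
            self.addPlane(node: node, anchor: planeAnchor)
            self.virtualObjectManager.checkIfObjectShouldMoveOntoPlane(anchor: planeAnchor, planeAnchorNode: node)
        }
    }
}

func addPlane(node: SCNNode, anchor: ARPlaneAnchor) {
    let plane = Plane(anchor)
    planes[anchor] = plane
    // ARKit keeps `node` aligned with the detected plane, so our child node follows it
    node.addChildNode(plane)
}

// ...

class Plane: SCNNode {
    var anchor: ARPlaneAnchor
    var focusSquare: FocusSquare?

    init(_ anchor: ARPlaneAnchor) {
        self.anchor = anchor
        super.init()
    }

    required init?(coder aDecoder: NSCoder) {
        fatalError("init(coder:) has not been implemented")
    }

    // ...
}
```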

The callback function provides us with two parameters, anchor and node. node is a normal SceneKit node placed at the exact position and orientation of the plane. It has no geometry, so it is invisible. We use it to attach our own plane node, which is also invisible but keeps a reference to anchor, which holds the plane’s orientation and position.

So how are position and orientation saved in ARPlaneAnchor? Position, orientation, and scale are all encoded in a 4x4 matrix. If I could choose one math concept for you to learn, it would undoubtedly be matrices. Anyway, we can get around this by describing the 4x4 matrix as follows: a brilliant 2-dimensional array containing 4x4 floating-point numbers. Multiplying a 3D vertex v1, expressed in its local space, by these numbers in a certain way results in a new 3D vertex, v2, that represents v1 in world space. So, if v1 = (1, 0, 0) in its local space, and we want to place its local origin at x = 100 in world space, v2 will be equal to (101, 0, 0) with respect to world space. Of course, the math becomes more complex when we add rotations about axes, but the good news is that we can do without understanding it (I highly recommend checking the relevant section from this excellent article for an in-depth explanation of this concept).
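Here is that x = 100 example worked through in plain Swift. To keep it self-contained, it uses a hypothetical Transform4x4 type instead of simd’s float4x4, but it follows the same column-major layout, in which the fourth column holds the translation. The point (1, 0, 0) is extended to the homogeneous vector (1, 0, 0, 1) so that the translation column takes effect.

```swift
// Hypothetical 4x4 transform, stored column-major like simd's float4x4:
// columns[3] holds the translation (x, y, z, 1).
struct Transform4x4 {
    var columns: [[Float]]  // 4 columns, each with 4 components

    static func translation(x: Float, y: Float, z: Float) -> Transform4x4 {
        return Transform4x4(columns: [
            [1, 0, 0, 0],
            [0, 1, 0, 0],
            [0, 0, 1, 0],
            [x, y, z, 1],
        ])
    }

    // Multiply the matrix by the homogeneous point (p.x, p.y, p.z, 1),
    // then drop the w component.
    func apply(to p: (Float, Float, Float)) -> (Float, Float, Float) {
        let v: [Float] = [p.0, p.1, p.2, 1]
        var result: [Float] = [0, 0, 0, 0]
        for col in 0..<4 {
            for row in 0..<4 {
                result[row] += columns[col][row] * v[col]
            }
        }
        return (result[0], result[1], result[2])
    }

    // The position alone lives in the last column
    var translation: (Float, Float, Float) {
        return (columns[3][0], columns[3][1], columns[3][2])
    }
}

let objectToWorld = Transform4x4.translation(x: 100, y: 0, z: 0)
let v2 = objectToWorld.apply(to: (1, 0, 0))
print(v2)  // (101.0, 0.0, 0.0)
```

Reading the last column directly is the same idea as the translation shortcut used with worldTransform later in this article.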

checkIfObjectShouldMoveOntoPlane checks whether any objects have already been drawn and whether their y-coordinates match that of the newly detected plane.
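The manager’s implementation isn’t shown here, but the idea can be sketched with a hypothetical helper. When a new plane is detected at some height, any drawn point whose y-coordinate is within a small tolerance of that height is moved onto the plane. DrawnPoint, snapPoints, and the 5 cm default tolerance are illustrative assumptions, not the sample code’s API.

```swift
struct DrawnPoint {
    var x: Float
    var y: Float
    var z: Float
}

// Hypothetical helper: moves points lying within `tolerance` (meters) of the
// plane height onto the plane and returns how many points were moved.
func snapPoints(_ points: inout [DrawnPoint], toPlaneAtY planeY: Float,
                tolerance: Float = 0.05) -> Int {
    var moved = 0
    for i in points.indices {
        let gap = abs(points[i].y - planeY)
        // Skip points already on the plane or too far away to belong to it
        if gap == 0 || gap > tolerance { continue }
        points[i].y = planeY
        moved += 1
    }
    return moved
}

var stroke = [DrawnPoint(x: 0, y: 0.02, z: 0),
              DrawnPoint(x: 0.1, y: 0.5, z: 0),
              DrawnPoint(x: 0.2, y: 0, z: 0)]
print(snapPoints(&stroke, toPlaneAtY: 0))  // 1
```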

Now, back to updateFocusSquare(), described in the previous section. We want to keep the focus square at the center of the screen, but projected on the nearest detected plane. The code below demonstrates this:

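```swift
func updateFocusSquare() {
    // Project the screen center onto the nearest detected plane, if there is one
    guard let worldPos = worldPositionFromScreenPosition(screenCenter, in: self.sceneView) else {
        return
    }
    self.focusSquare?.simdPosition = worldPos
}

func worldPositionFromScreenPosition(_ position: CGPoint, in sceneView: ARSCNView) -> float3? {
    // Hit-test against detected planes, taking each plane's estimated extent into account
    let planeHitTestResults = sceneView.hitTest(position, types: .existingPlaneUsingExtent)
    if let result = planeHitTestResults.first {
        // Keep only the position part of the 4x4 transform
        return result.worldTransform.translation
    }
    return nil
}
```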

sceneView.hitTest casts a ray from a 2D point on the screen into the scene and returns a result for each detected plane it intersects, sorted from nearest to farthest; we use the first one. result.worldTransform is a 4x4 matrix holding the position and orientation of the intersection point in world space, while result.worldTransform.translation is a convenience extension on float4x4 (not part of ARKit itself) that returns only the position.
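Conceptually, hit-testing against a horizontal plane comes down to intersecting a ray from the camera with the plane y = planeY. The plain-Swift sketch below shows only that step, under simplifying assumptions: the plane is treated as infinite (the real .existingPlaneUsingExtent option also checks the plane’s estimated extent), and the ray is given directly instead of being derived from a screen point and the camera projection. Vec3 and intersectHorizontalPlane are hypothetical names.

```swift
struct Vec3 {
    var x: Float
    var y: Float
    var z: Float
}

// Intersect a ray with the infinite horizontal plane y = planeY.
// Returns nil if the ray is parallel to the plane or the plane is behind the origin.
func intersectHorizontalPlane(origin: Vec3, direction: Vec3, planeY: Float) -> Vec3? {
    // A ray parallel to the plane never hits it
    guard abs(direction.y) > 1e-6 else { return nil }
    // Solve origin.y + t * direction.y == planeY for t
    let t = (planeY - origin.y) / direction.y
    // Negative t means the intersection lies behind the camera
    guard t >= 0 else { return nil }
    return Vec3(x: origin.x + t * direction.x,
                y: planeY,
                z: origin.z + t * direction.z)
}

// Camera 1.5 m above a table at y = 0, looking forward and down at 45 degrees
if let hit = intersectHorizontalPlane(origin: Vec3(x: 0, y: 1.5, z: 0),
                                      direction: Vec3(x: 0, y: -1, z: -1),
                                      planeY: 0) {
    print(hit.x, hit.y, hit.z)  // 0.0 0.0 -1.5
}
```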

Now, we have all the information we need to drop a 3D object on detected surfaces given a 2D point on the screen. So, let’s start drawing.

Drawing

Let us first explain the general approach to drawing a shape that follows a human finger. Drawing is done by detecting every new location of the moving finger, dropping a vertex at that location, and connecting each vertex with the previous one. Vertices can be connected by a simple line, or through a Bezier curve if we need a smoother output.
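The Bezier option can be sketched in plain Swift. This is one common smoothing technique, not necessarily what the project does (it uses dots, as described next): each sampled finger position becomes the control point of a quadratic Bezier curve running between the midpoints of its neighboring segments, which rounds off the corners of the raw polyline. Point2, quadBezier, midpoint, smoothStroke, and samplesPerSegment are hypothetical names.

```swift
struct Point2 {
    var x: Double
    var y: Double
}

// Quadratic Bezier: B(t) = (1-t)^2 p0 + 2(1-t)t p1 + t^2 p2, for t in [0, 1]
func quadBezier(_ p0: Point2, _ p1: Point2, _ p2: Point2, _ t: Double) -> Point2 {
    let u = 1 - t
    return Point2(x: u * u * p0.x + 2 * u * t * p1.x + t * t * p2.x,
                  y: u * u * p0.y + 2 * u * t * p1.y + t * t * p2.y)
}

func midpoint(_ a: Point2, _ b: Point2) -> Point2 {
    return Point2(x: (a.x + b.x) / 2, y: (a.y + b.y) / 2)
}

// Each interior vertex becomes the control point of a curve that runs
// between the midpoints of its two adjacent segments.
func smoothStroke(_ points: [Point2], samplesPerSegment: Int = 8) -> [Point2] {
    guard points.count >= 3, samplesPerSegment > 0 else { return points }
    var output = [points[0]]
    for i in 1..<(points.count - 1) {
        let start = midpoint(points[i - 1], points[i])
        let end = midpoint(points[i], points[i + 1])
        for s in 0...samplesPerSegment {
            let t = Double(s) / Double(samplesPerSegment)
            output.append(quadBezier(start, points[i], end, t))
        }
    }
    output.append(points[points.count - 1])
    return output
}

let fingerPath = [Point2(x: 0, y: 0), Point2(x: 1, y: 2), Point2(x: 2, y: 0), Point2(x: 3, y: 2)]
let curve = smoothStroke(fingerPath, samplesPerSegment: 4)
print(curve.count)  // 12
```

Only the interior vertices are bent; the first and last finger positions are kept as they are, so the stroke still starts and ends where the finger did.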

For simplicity, we will follow a somewhat naive approach to drawing. For every new location of the finger, we will drop a very small box with rounded corners and almost zero height on the detected plane, so it appears as a dot. Once the user finishes drawing and selects the 3D button, we will change the height of all dropped objects based on the movement of the user’s finger.

The following code shows the PointNode class that represents a point:

```swift
let POINT_SIZE = CGFloat(0.003)      // 3 mm wide (SceneKit units are meters)
let POINT_HEIGHT = CGFloat(0.00001)  // almost flat

class PointNode: SCNNode {
    // Geometry shared by all points, created once
    static var boxGeo: SCNBox?

    override init() {
        super.init()
        if PointNode.boxGeo == nil {
            PointNode.boxGeo = SCNBox(width: POINT_SIZE, height: POINT_HEIGHT, length: POINT_SIZE, chamferRadius: 0.001)
            // Setup the material of the point
            let material = PointNode.boxGeo!.firstMaterial
            material?.lightingModel = SCNMaterial.LightingModel.blinn
            material?.diffuse.contents = UIImage(named: "wood-diffuse.jpg")
            material?.normal.contents = UIImage(named: "wood-normal.png")
            material?.specular.contents = UIImage(named: "wood-specular.jpg")
        }
        let object = SCNNode(geometry: PointNode.boxGeo!)
        // Lift the box by half its height so its bottom sits at y = 0
        object.transform = SCNMatrix4MakeTranslation(0.0, Float(POINT_HEIGHT) / 2.0, 0.0)
        self.addChildNode(object)
    }

    // ...
}
```

You will notice in the above code that we translate the geometry along the y-axis by half its height. Because SCNBox is centered on its node’s origin, this keeps the object’s bottom at y = 0, so that it sits on top of the plane.

Next, in the SceneKit renderer callback, we draw an indicator that acts as the pen tip, using the same PointNode class. We drop a point at that location if drawing is enabled, or raise the drawing into a 3D structure if 3D mode is enabled:

```swift
func renderer(_ renderer: SCNSceneRenderer, updateAtTime time: TimeInterval) {
    updateFocusSquare()

    // Setup a dot that represents the virtual pen's tip point
    if self.virtualPenTip == nil {
        self.virtualPenTip = PointNode(color: UIColor.red)
        self.sceneView.scene.rootNode.addChildNode(self.virtualPenTip!)
    }

    // Draw
    if let screenCenterInWorld = worldPositionFromScreenPosition(self.screenCenter, in: self.sceneView) {
        // Update virtual pen position
        self.virtualPenTip?.isHidden = false
        self.virtualPenTip?.simdPosition = screenCenterInWorld

        // Draw a new point, skipping locations that already have one
        if self.inDrawMode && !self.virtualObjectManager.pointNodeExistAt(pos: screenCenterInWorld) {
            let newPoint = PointNode()
            self.sceneView.scene.rootNode.addChildNode(newPoint)
            self.virtualObjectManager.loadVirtualObject(newPoint, to: screenCenterInWorld)
        }

        // Convert drawing to 3D
        if self.in3DMode {
            if let initialOrigin = self.trackImageInitialOrigin {
                DispatchQueue.main.async {
                    // Scale the displacement since 3D mode started into a new height;
                    // the Float difference is converted to CGFloat to divide by the view height
                    let newH = 0.4 * CGFloat(initialOrigin.y - screenCenterInWorld.y) / self.sceneView.frame.height
                    self.virtualObjectManager.setNewHeight(newHeight: newH)
                }
            } else {
                // Remember where the pen was when 3D mode started
                self.trackImageInitialOrigin = screenCenterInWorld
            }
        }
    }
}
```

virtualObjectManager is the object that manages the drawn points. In 3D mode, we measure how far the pen tip has moved since 3D mode was enabled and scale that displacement into a new height for all points.
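The manager’s code isn’t shown in this post, so here is a hypothetical plain-Swift stand-in for the two responsibilities used above. It skips a new point if one already exists nearby (the role of pointNodeExistAt), and it applies one shared height to every point (the role of setNewHeight). StrokeStore, its methods, and the spacing value are assumptions. Positions are plane coordinates in meters.

```swift
struct StrokePoint {
    var x: Float
    var z: Float
    var height: Float = 0
}

// Hypothetical stand-in for the manager of drawn points
final class StrokeStore {
    private(set) var points: [StrokePoint] = []
    let minSpacing: Float  // minimum distance between neighboring dots, in meters

    init(minSpacing: Float) {
        self.minSpacing = minSpacing
    }

    // True if an existing point lies closer than minSpacing on the plane (x/z)
    func pointExists(x: Float, z: Float) -> Bool {
        return points.contains(where: { p in
            let dx = p.x - x
            let dz = p.z - z
            return dx * dx + dz * dz < minSpacing * minSpacing
        })
    }

    // Adds a dot unless one is already there
    func addPoint(x: Float, z: Float) {
        if !pointExists(x: x, z: z) {
            points.append(StrokePoint(x: x, z: z))
        }
    }

    // 3D mode: every dot gets the same height
    func setHeight(_ newHeight: Float) {
        for i in points.indices {
            points[i].height = newHeight
        }
    }
}

let store = StrokeStore(minSpacing: 0.003)
store.addPoint(x: 0, z: 0)
store.addPoint(x: 0.001, z: 0)  // too close to the first dot, skipped
store.addPoint(x: 0.01, z: 0)
store.setHeight(0.05)
print(store.points.count)  // 2
```

Skipping nearby points keeps the renderer, which runs every frame, from stacking many dots on the same spot while the pen is still.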

Up until now, we have been drawing on the detected surface assuming the virtual pen is at the center of the screen. Now for the fun part: detecting the user’s finger and using it instead of the screen center.

Detecting the User’s Fingertip

One of the cool libraries that Apple introduced in iOS 11 is the Vision framework. It provides several computer vision techniques in a handy and efficient way. In particular, we are going to use its object tracking for this augmented reality tutorial. Object tracking works as follows: first, we describe the object we want to track with a bounding box within the image boundaries, wrapped in an initial observation. Then, for every new frame, we pass Vision the new image, in which the object may have moved, together with the previous observation. Given that, it returns the object’s new location, which becomes the observation for the next frame.

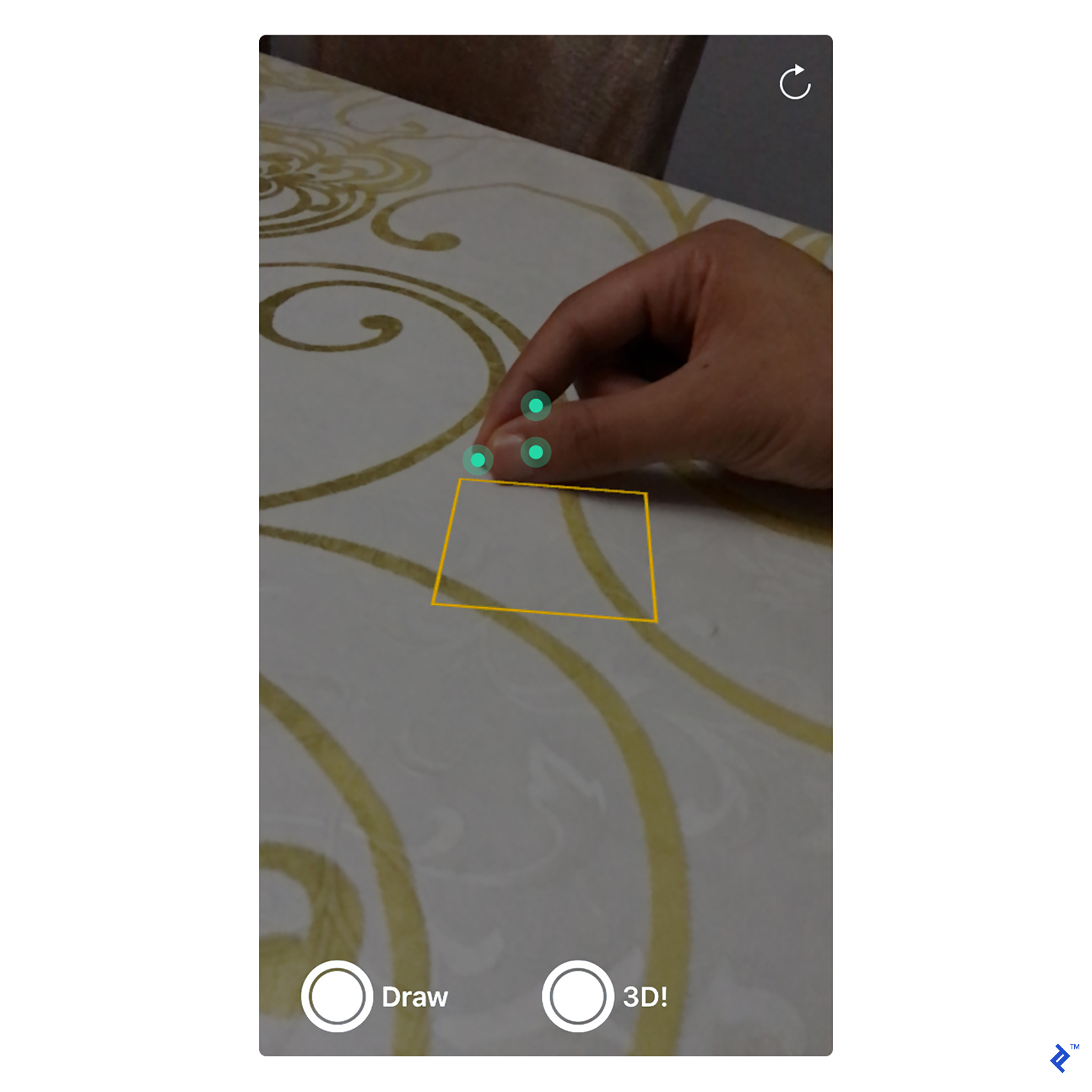

We are going to use a small trick. We will ask the user to rest their hand on the table as if they are holding a pen, and to make sure their thumbnail faces the camera, after which they should tap on their thumbnail on the screen. There are two points that need to be elaborated here. First, the thumbnail should have enough unique features to be traced via the contrast between the white thumbnail, the skin, and the table. This means that darker skin pigment will result in more reliable tracking. Second, since the user is resting their hands on the table, and since we are already detecting the table as a plane, projecting the location of the thumbnail from 2D view to the 3D environment will result in almost the exact location of the finger on the table.

The following image shows feature points that could be detected by the Vision library:

We will initialize thumbnail tracking in a tap gesture as follows:

```swift
// MARK: Object tracking

fileprivate var lastObservation: VNDetectedObjectObservation?
var trackImageBoundingBox: CGRect?
let trackImageSize = CGFloat(20)

@objc private func tapAction(recognizer: UITapGestureRecognizer) {
    lastObservation = nil
    let tapLocation = recognizer.location(in: view)

    // Set up the rect in the image in view coordinate space that we will track
    let trackImageBoundingBoxOrigin = CGPoint(x: tapLocation.x - trackImageSize / 2, y: tapLocation.y - trackImageSize / 2)
    trackImageBoundingBox = CGRect(origin: trackImageBoundingBoxOrigin, size: CGSize(width: trackImageSize, height: trackImageSize))

    // Normalize the rect to [0, 1] relative to the view size
    let t = CGAffineTransform(scaleX: 1.0 / self.view.frame.size.width, y: 1.0 / self.view.frame.size.height)
    let normalizedTrackImageBoundingBox = trackImageBoundingBox!.applying(t)

    // Transform the rect from view space to image space by inverting the display transform.
    // Pre-release iOS 11 SDKs named this method displayTransform(withViewportSize:orientation:)
    guard let fromViewToCameraImageTransform = self.sceneView.session.currentFrame?.displayTransform(for: .portrait, viewportSize: self.sceneView.frame.size).inverted() else {
        return
    }
    var trackImageBoundingBoxInImage = normalizedTrackImageBoundingBox.applying(fromViewToCameraImageTransform)
    // Vision uses a bottom-left origin, while view space uses a top-left origin
    trackImageBoundingBoxInImage.origin.y = 1 - trackImageBoundingBoxInImage.origin.y

    // Seed the tracker with the initial bounding box
    lastObservation = VNDetectedObjectObservation(boundingBox: trackImageBoundingBoxInImage)
}
```

The trickiest part above is converting the tap location from UIView coordinate space to image coordinate space. ARKit provides the displayTransform matrix, which converts from image coordinate space to viewport coordinate space, but not the other way around. So how can we do the inverse? By inverting the matrix. I really tried to minimize the use of math in this post, but it is sometimes unavoidable in the 3D world.
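To see why inverting works, here is a plain-Swift sketch of a 2D affine transform and its inverse. The hypothetical Affine type uses the same six-value layout as CGAffineTransform (x' = a*x + c*y + tx, y' = b*x + d*y + ty), so its inverted() follows the same math as CGAffineTransform’s. The example transform is an arbitrary 90-degree rotation plus a shift, not ARKit’s actual display transform. The sketch also includes a vertical flip for normalized rectangles (NormalizedRect and flipVertically are hypothetical too). When a whole rectangle, rather than a single point, is converted between a top-left and a bottom-left origin, its height must also be subtracted, because the top edge in one system is the bottom edge in the other.

```swift
// Same layout as CGAffineTransform: x' = a*x + c*y + tx, y' = b*x + d*y + ty
struct Affine {
    var a, b, c, d, tx, ty: Double

    func apply(x: Double, y: Double) -> (x: Double, y: Double) {
        return (a * x + c * y + tx, b * x + d * y + ty)
    }

    // Inverse transform, or nil when the 2x2 part is singular (determinant 0)
    func inverted() -> Affine? {
        let det = a * d - b * c
        guard det != 0 else { return nil }
        return Affine(a: d / det, b: -b / det, c: -c / det, d: a / det,
                      tx: (c * ty - d * tx) / det, ty: (b * tx - a * ty) / det)
    }
}

// A rectangle in normalized coordinates, with values in [0, 1]
struct NormalizedRect {
    var x, y, width, height: Double
}

// Flip between a top-left origin and a bottom-left origin; applying it twice is a no-op
func flipVertically(_ r: NormalizedRect) -> NormalizedRect {
    return NormalizedRect(x: r.x, y: 1 - r.y - r.height, width: r.width, height: r.height)
}

let imageToView = Affine(a: 0, b: 1, c: -1, d: 0, tx: 1, ty: 0)
let viewToImage = imageToView.inverted()!
let inView = imageToView.apply(x: 0.25, y: 0.75)
let backInImage = viewToImage.apply(x: inView.x, y: inView.y)
print(inView, backInImage)  // (x: 0.25, y: 0.25) (x: 0.25, y: 0.75)
```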

Next, in the renderer, we are going to feed in a new image to track the finger’s new location:

```swift
func renderer(_ renderer: SCNSceneRenderer, updateAtTime time: TimeInterval) {
    // Track the thumbnail: we need the current camera image and a previous observation
    guard let pixelBuffer = self.sceneView.session.currentFrame?.capturedImage,
        let observation = self.lastObservation else {
        return
    }

    // Ask Vision where the previously observed object is in the new frame
    let request = VNTrackObjectRequest(detectedObjectObservation: observation) { [unowned self] request, error in
        self.handle(request, error: error)
    }
    request.trackingLevel = .accurate

    do {
        // `handler` is a VNSequenceRequestHandler, reused across frames
        try self.handler.perform([request], on: pixelBuffer)
    } catch {
        print(error)
    }

    // ...
}
```

Once object tracking is completed, Vision calls a callback function in which we update the thumbnail location. It is essentially the inverse of the code written in the tap recognizer:

```swift
fileprivate func handle(_ request: VNRequest, error: Error?) {
    DispatchQueue.main.async {
        guard let newObservation = request.results?.first as? VNDetectedObjectObservation else {
            return
        }
        // This result seeds the next tracking request
        self.lastObservation = newObservation
        var trackImageBoundingBoxInImage = newObservation.boundingBox

        // Transform the rect from image space to view space
        trackImageBoundingBoxInImage.origin.y = 1 - trackImageBoundingBoxInImage.origin.y
        // Pre-release iOS 11 SDKs named this method displayTransform(withViewportSize:orientation:)
        guard let fromCameraImageToViewTransform = self.sceneView.session.currentFrame?.displayTransform(for: .portrait, viewportSize: self.sceneView.frame.size) else {
            return
        }
        let normalizedTrackImageBoundingBox = trackImageBoundingBoxInImage.applying(fromCameraImageToViewTransform)
        // Scale from normalized [0, 1] coordinates back to view points
        let t = CGAffineTransform(scaleX: self.view.frame.size.width, y: self.view.frame.size.height)
        let unnormalizedTrackImageBoundingBox = normalizedTrackImageBoundingBox.applying(t)
        self.trackImageBoundingBox = unnormalizedTrackImageBoundingBox

        // Project the tracked location from view space onto the nearest detected plane,
        // using a fixed offset from the tracked box's origin as the pen tip
        if let trackImageOrigin = self.trackImageBoundingBox?.origin {
            self.lastFingerWorldPos = self.virtualObjectManager.worldPositionFromScreenPosition(CGPoint(x: trackImageOrigin.x - 20.0, y: trackImageOrigin.y + 40.0), in: self.sceneView)
        }
    }
}
```

Finally, we will use self.lastFingerWorldPos instead of screen center when drawing, and we are done.

ARKit and the Future

In this post, we have demonstrated how AR could be immersive through interaction with users’ fingers and real-life tables. With more advancements in computer vision, and by adding more AR-friendly hardware to gadgets (like depth cameras), we can get access to the 3D structure of more and more objects around us.

Although it has not yet been released to the masses, it is worth mentioning how serious Microsoft is about winning the AR race with its HoloLens device, which combines AR-tailored hardware with advanced 3D environment recognition techniques. You can wait to see who will win this race, or you can be part of it by developing truly immersive augmented reality apps now! But please, do humankind a favor and don’t change live objects into bunnies.


Understanding the basics

What features does Apple ARKit provide to developers?

ARKit allows developers to build immersive augmented reality apps on iPhone and iPad by analyzing the scene presented by the camera view and finding horizontal planes in the room.

How can we track objects with Apple Vision library?

The Apple Vision library allows developers to track objects in a video stream. Developers provide the coordinates of a rectangle within the initial image frame for the object they want to track, then feed in video frames, and the library returns the new position of that object.

How can we get started with Apple ARKit?

To get started with Apple ARKit, install iOS 11 on an iPhone 6s or newer and create a new ARKit project in Xcode from New > Project > Augmented Reality App. You can also check the AR sample code provided by Apple here: https://developer.apple.com/arkit/

How does augmented reality work?

Augmented reality is the process of overlaying digital video and other CGI elements onto real-world video. Creating a quality CGI overlay on video captured by a camera requires the use of numerous sensors and software solutions.

What is the use of augmented reality?

Augmented reality has numerous potential applications in various industries. AR can be used to provide training in virtual settings that would otherwise be difficult to recreate for training purposes, e.g. spaceflight, medical industries, certain engineering projects.

What is difference between VR and AR?

While AR is based on the concept of overlaying CGI onto real-world video, VR generates a completely synthetic 3D environment, with no real life elements. As a result, VR tends to be more immersive, whereas AR offers a bit more flexibility and freedom to the user.

Alexandria, Alexandria Governorate, Egypt

Member since June 12, 2017
