Brace Yourselves Android Developers, A New Android Compiler Is Coming

With Dalvik out of the picture, many people expected Google’s new 64-bit capable ART runtime to stick around for years, which it probably will, but it will get a major overhaul in the near future. In addition to offering support for 64-bit hardware, ART also introduced ahead-of-time (AOT) compilation, while Dalvik relied on just-in-time (JIT) compilation.

Throw in new 10-core ARM processors and Intel mobile processors based on three different architectures, and you end up with spicy, Google-style hardware gumbo.

Nermin Hajdarbegovic

As a veteran tech writer, Nermin helped create online publications covering everything from the semiconductor industry to cryptocurrency.

Fragmentation has been a source of frustration for Android developers and consumers for years; now it seems things will get worse before they get better. A new Android compiler is coming, again, and there are some noteworthy developments on the hardware front, which could affect developers.

With Dalvik out of the picture, many people expected Google’s new 64-bit capable ART runtime to stick around for years, which it probably will, but it will get a major overhaul in the near future. In addition to offering support for 64-bit hardware, ART also introduced ahead-of-time (AOT) compilation, while Dalvik relied on just-in-time (JIT) compilation. The new “Optimizing” compiler will unlock even more possibilities.

As for hardware developments, there are some new trends and some new and old players in the smartphone System-on-Chip industry, but I will get to that later.

First, let’s take a look at Google’s runtime plans.

Dalvik, ART, ART with new Android Compiler

ART was introduced with Android 5.0 last year, launching on the Nexus 9 and Nexus 6, although the latter used a 32-bit ARMv7-A CPU. However, rather than being designed from scratch, ART was actually an evolution of Dalvik that moved away from JIT compilation.

Dalvik compiles apps on the fly, as necessary. This, obviously, adds more CPU load, increases the time needed to launch applications, and takes its toll on battery life. Since ART compiles everything ahead of time, at installation, it does not have to waste clock cycles on compilation every time the device starts an app. It results in a smoother user experience, while at the same time reducing power consumption and boosting battery life.

So what is Google going to do next?

Since ART was developed to take advantage of new, 64-bit ARMv8 CPU cores, which started coming online late last year, the original compiler appears to have been a stopgap measure. This meant that time-to-market was the priority, not efficiency and optimization. It does not mean ART was just a botched rush job, because it wasn’t; the runtime works well and has been praised by developers and users.

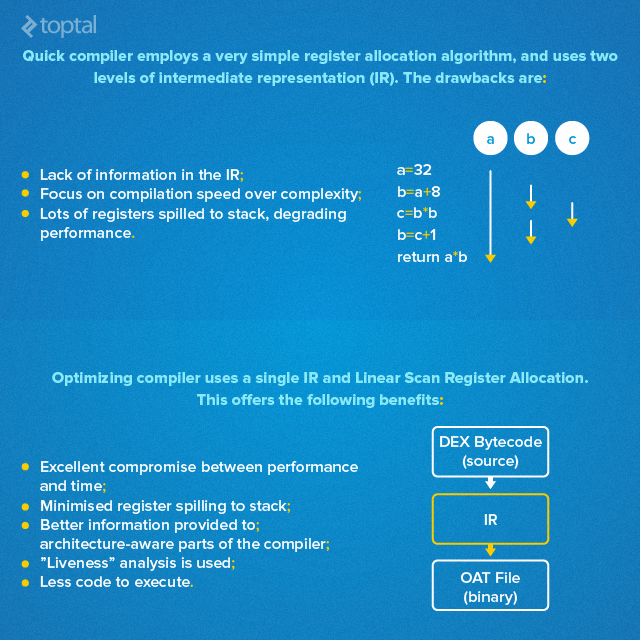

However, there is room for improvement, and it now seems Google has been working on a vastly improved compiler for a while; the effort probably predates the official release of ART. British chip designer ARM recently revealed a few interesting factoids about Google’s runtime plans, pointing to a new “Optimizing” compiler for ART. The new compiler offers intermediate representations (IR) that allow manipulation of the program structure prior to code generation. It uses a single level of intermediate representation, structured as an information-rich graph, which provides better information to the architecture-aware parts of the compiler.

The “Quick” compiler uses two levels of intermediate representation, with simple linked lists of instructions and variables, but it loses important information during IR creation.

ARM claims the new “Optimizing” compiler will offer a number of significant benefits, describing it as a “big leap forward” in terms of compiler tech. The compiler will offer a better infrastructure for future optimisations and will help improve code quality.

Optimizing And Quick Compiler Key Features

ARM outlined the difference between the two compilers in a single slide, claiming that the “Optimizing” compiler enables more efficient register usage, less spilling to the stack, and needs less code to execute.

Here is how ARM puts it:

“Quick has a very simple register allocation algorithm.

- Lack of information in the IR

- Compilation speed over complexity – origins as a JIT

- Poor performance – lots of registers spilled to the stack

Optimizing uses Linear Scan Register Allocation.

- Excellent compromise between performance and time

- Liveness analysis is used

- Minimizing register spilling to stack”
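To make the register allocation point more concrete, here is a minimal sketch of the textbook linear scan idea, written in Python for brevity. It is not ART's actual implementation; the function name, the register names and the example live ranges are all assumptions for illustration. The input is a set of live ranges, which a liveness analysis pass would normally produce.

```python
from typing import Dict, List, Tuple


def linear_scan(intervals: Dict[str, Tuple[int, int]], num_regs: int) -> Dict[str, str]:
    """Map each variable to a register name, or to "stack" if it is spilled.

    `intervals` maps a variable to its live range (start, end). In a real
    compiler these ranges come from liveness analysis; here they are inputs.
    """
    free = [f"r{i}" for i in range(num_regs)]   # registers not in use
    active: List[Tuple[int, str]] = []          # (end, variable) held in registers
    location: Dict[str, str] = {}

    # Visit variables in order of the point where they become live.
    for var, (start, end) in sorted(intervals.items(), key=lambda kv: kv[1][0]):
        # Expire ranges that ended before this one starts and reclaim registers.
        for old_end, old_var in list(active):
            if old_end < start:
                active.remove((old_end, old_var))
                free.append(location[old_var])

        if free:
            location[var] = free.pop(0)
            active.append((end, var))
            continue

        # No register left: spill whichever live range ends last.
        last_end, last_var = max(active) if active else (-1, "")
        if last_end > end:
            location[var] = location[last_var]   # take over its register
            location[last_var] = "stack"
            active.remove((last_end, last_var))
            active.append((end, var))
        else:
            location[var] = "stack"
    return location


# Hypothetical live ranges for four variables.
example = {"a": (0, 10), "b": (1, 3), "c": (2, 8), "d": (4, 6)}
print(linear_scan(example, num_regs=2))
print(linear_scan(example, num_regs=3))
```

With two registers, the long-lived variable `a` is spilled so that the shorter ranges can stay in registers; with three registers nothing is spilled. That is the "compromise between performance and time" in miniature: a single pass over sorted ranges, with spilling only when the registers genuinely run out.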

Although the new compiler is still in development, ARM shared a few performance figures: in synthetic CPU tests, the compiler yields a performance increase in the 15 to 40 percent range, while compilation time increases by about 8 percent. However, the company cautions that the figures “change daily” as the new compiler matures.

The focus is on achieving near parity with the “Quick” compiler, which currently has a clear advantage in compilation speed and file size.

Right now, it looks like a trade-off; the new “Optimizing” compiler delivers impressive performance improvements in CPU-bound applications and synthetic benchmarks, but results in 10 percent bigger files that compile ~8 percent slower. While the last two figures seem to be outweighed by the CPU performance gains, bear in mind that they will apply to every app, regardless of CPU load, using up even more limited resources such as RAM and storage. Remember that compiling in 64 bits already takes up more RAM than compiling in 32 bits.
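As a rough illustration of what those two percentages mean in practice, the sketch below applies them to a single app. The 10 percent and 8 percent overheads are the figures quoted above; the 40 MB compiled size and 25 second compile time are hypothetical inputs chosen only to make the arithmetic visible.

```python
def optimizing_cost(quick_size_mb: float, quick_compile_s: float,
                    size_overhead: float = 0.10, time_overhead: float = 0.08):
    """Project compiled size and compile time relative to the "Quick" compiler."""
    return (quick_size_mb * (1 + size_overhead),
            quick_compile_s * (1 + time_overhead))


# Hypothetical app: 40 MB of compiled code, 25 s to compile with "Quick".
size_mb, compile_s = optimizing_cost(quick_size_mb=40.0, quick_compile_s=25.0)
print(f"{size_mb:.1f} MB, {compile_s:.1f} s")
```

The overhead is small for one app, but it is paid by every installed app, which is why it matters on devices with limited storage.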

Any increase in compilation and launch times is a source of concern as well, due to its effect on device responsiveness and user experience.

The Multicore ARM Race

Another source of concern, regardless of runtime and compiler, is the popularity of multicore processors based on ARMv7-A and ARMv8 architectures. The octa-core craze started in 2013 and was quickly dismissed as a cheap marketing stunt. A Qualcomm executive went so far as to call octa-core processors “silly” and “dumb”, saying that the company wouldn’t make any because its engineers “aren’t dumb”. The same exec also described 64-bit support on the Apple A7 as a “gimmick”.

Fast forward two years, and I have a 64-bit Qualcomm octa-core Cortex-A53 smartphone on my desk, while the executive in question has a different job title on his name plaque.

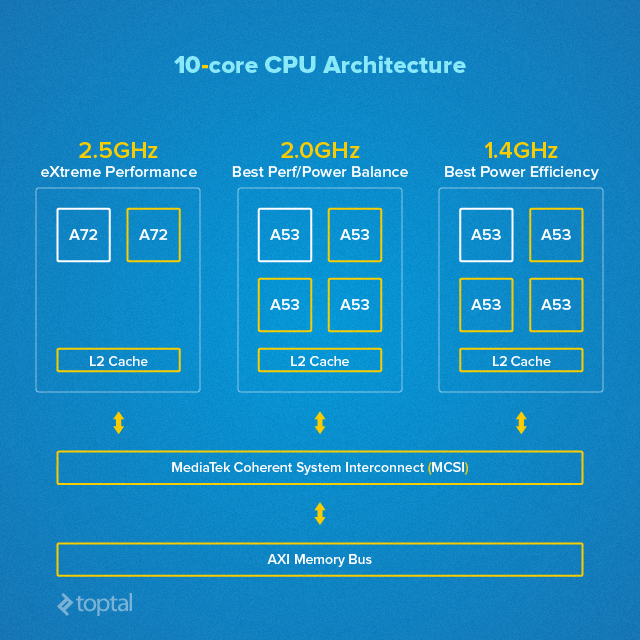

As if 8-core chips weren’t enough, next year we will see the first devices based on 10-core application processors. The first 10-core smartphone chip is coming from MediaTek in the form of the Helio X20, and will feature three clusters of CPU cores, dubbed huge.Medium.TINY. Sounds fun, and it gets better; we will soon start to see the first affordable Android devices based on a new generation of Intel processors.

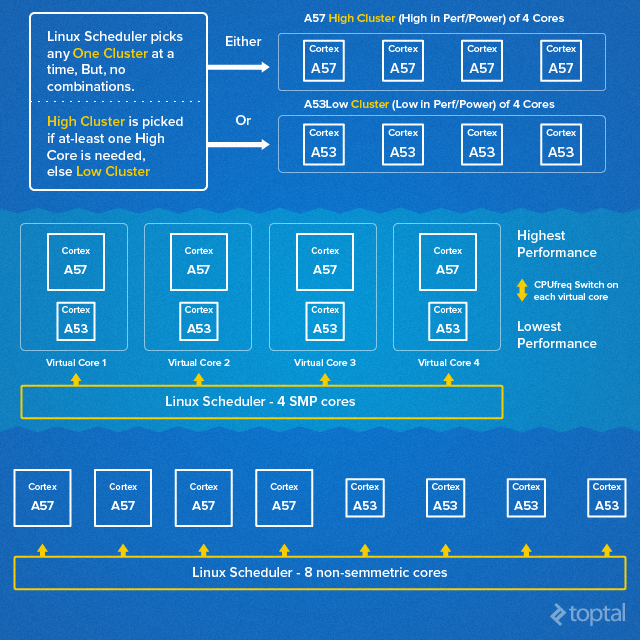

Let’s look at the ARM core wars and what they mean for developers and consumers. There are two distinct versions of ARM SoC octa-core designs. High-end solutions tend to use ARM’s big.LITTLE layout, using four low power cores and four big cores for high load. The second way of sticking eight ARM CPU cores in a chip is to use identical cores, or identical cores in two clusters with different clock speeds.

Leading mobile chipmakers tend to use both approaches, big.LITTLE chips in high-end devices, along with regular octa-cores on mainstream products. Both approaches have their pros and cons, so let’s take a closer look.

ARM big.LITTLE vs. Regular Octa-Core:

Using two clusters of different CPU cores allows for good single thread performance and efficiency on big.LITTLE designs. The trade-off is that a single Cortex-A57 core is roughly the size of four small Cortex-A53 cores, and it’s less efficient.

Using eight identical cores, or eight identical cores in two clusters with different clocks, is cost effective and power efficient. However, single thread performance is low.

The current generation of big.LITTLE designs based on ARMv8 cores cannot use the cheapest 28nm manufacturing node. Even at 20nm, some designs exhibit a lot of throttling, which limits their sustained performance. Standard octa-cores based on Cortex-A53 CPU cores can be effectively implemented in 28nm, so chipmakers don’t have to use cutting-edge manufacturing nodes like 20nm or 16/14nm FinFET, which keeps costs down.

I don’t want to bore you to death with chip design trends, but a few basics are important to keep in mind for 2015 and 2016 mobile processors:

- Most chips will use 28nm manufacturing nodes and Cortex-A53 cores, limiting single-thread performance.

- The big Cortex-A57 core is implemented in two major designs from Samsung and Qualcomm, but other chipmakers appear to be skipping it and waiting for the Cortex-A72 core.

- Multithreaded performance will become increasingly relevant over the next 18 months.

- Big performance gains cannot be expected until 20nm and FinFET nodes become significantly cheaper (2016 and beyond).

- 10-core designs are coming as well.

All of these points have certain implications for Android developers. As long as chipmakers are stuck at 28nm processes for the majority of smartphone and tablet chips, developers will have to do their best to tap multi-threaded performance and focus on efficiency.
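How much an app can gain from extra cores depends on how much of its work can actually run in parallel. Amdahl's law is the standard way to estimate this, and the short sketch below evaluates it for a few core counts. The parallel fractions used here are assumptions for illustration, not measurements of any real app.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Upper bound on speedup when only part of the work can be parallelized."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


# Hypothetical workloads: half parallel vs. 90 percent parallel.
for fraction in (0.5, 0.9):
    for cores in (4, 8, 10):
        print(f"parallel={fraction:.0%} cores={cores}: "
              f"{amdahl_speedup(fraction, cores):.2f}x")
```

If only half of the work is parallel, eight cores deliver less than a 2x speedup, so on chips with weak single-thread performance the serial portion of an app quickly becomes the bottleneck.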

ART and new compilers should go a long way toward improving performance and efficiency, but they won’t be able to break the laws of physics. Old, 32-bit designs won’t be used in many devices moving forward, and even the cheapest devices are starting to ship with 64-bit silicon and Android 5.0.

Although Android 5.x still has a relatively small user base, it is growing rapidly and will expand even faster now that $100 to $150 phones are arriving with 64-bit chips and Android 5.0. The transition to 64-bit Android is going well.

The big question is when ART will get the new “Optimizing” compiler. It could launch later this year, or next year with Android 6.0; it’s still too early to say for sure.

Heterogeneous Computing Coming To Mobiles

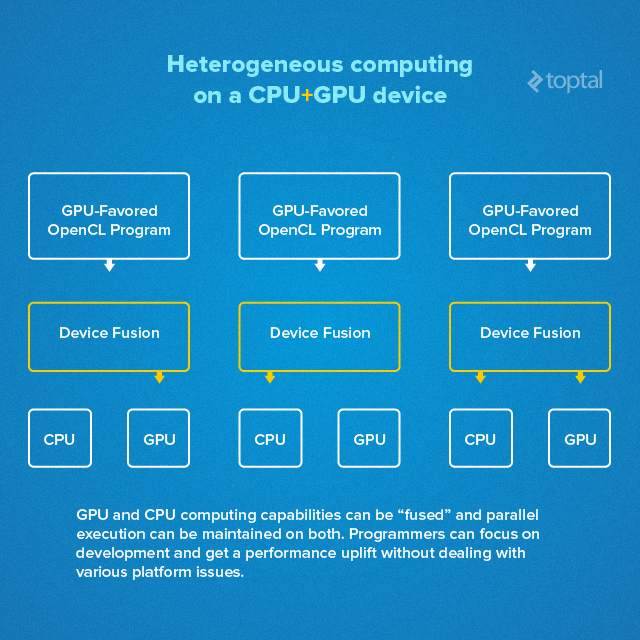

There is another thing to keep in mind; mobile graphics are getting more powerful, especially on high-end processors, so chipmakers are doing a lot of work behind the scenes to tap them for uses other than games and video decoding. Heterogeneous computing has been around for a few years, allowing PCs to offload highly parallelized tasks to the GPU.

The same technology is now coming to mobile processors, effectively fusing CPU and GPU cores. The approach will allow developers to unlock more performance by offloading certain types of programs, namely OpenCL workloads, to the GPU. Developers will be able to focus on throughput, while processors will automatically handle parallel execution on CPUs and GPUs.

Of course, this won’t work in every app or reduce load in all situations, but in some niches, it should unlock more performance and help reduce power consumption. Depending on the load, the SoC will automatically decide how to process code, using the CPU for some tasks while offloading others to the GPU.

Since we are dealing with parallelised applications, the approach is expected to yield the biggest improvements in image processing. For example, if you need to use super-resolution and resample images, the process could be divided into different stages in OpenCL. If the process involves different stages, such as find_neighbor, col_upsample, row_upsample, sub and blur2, the hardware will distribute the load across CPU and GPU cores in the most efficient way, depending on what sort of core will handle the given task in the best way. This will not only improve performance by an order of magnitude, but it will also help reduce power consumption.
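The idea of sending each stage to the core type that handles it best can be sketched as a simple lookup. The stage names come from the example above; everything else is an assumption: the per-stage timings are invented for illustration rather than measured, and a real heterogeneous platform makes this decision in hardware and drivers, not in application code or through any OpenCL call shown here.

```python
# Hypothetical cost estimates in milliseconds per stage and device type.
# These numbers are made up for illustration; they are not benchmark results.
ESTIMATED_MS = {
    "find_neighbor": {"cpu": 12.0, "gpu": 30.0},
    "col_upsample":  {"cpu": 40.0, "gpu": 8.0},
    "row_upsample":  {"cpu": 40.0, "gpu": 8.0},
    "sub":           {"cpu": 5.0,  "gpu": 6.0},
    "blur2":         {"cpu": 55.0, "gpu": 9.0},
}


def assign_devices(costs):
    """Pick, for every stage, the device with the lowest estimated cost."""
    return {stage: min(per_device, key=per_device.get)
            for stage, per_device in costs.items()}


def total_ms(costs, plan):
    """Sum the estimated time of a plan that maps each stage to a device."""
    return sum(costs[stage][device] for stage, device in plan.items())


plan = assign_devices(ESTIMATED_MS)
cpu_only = {stage: "cpu" for stage in ESTIMATED_MS}
print(plan)
print(total_ms(ESTIMATED_MS, plan), "ms mixed vs", total_ms(ESTIMATED_MS, cpu_only), "ms CPU-only")
```

Under these assumed numbers, the branch-heavy stages stay on the CPU while the data-parallel stages move to the GPU, which is the kind of split the approach is meant to automate.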

Intel Is Back From The Dead, And It’s Looking Good For a Corpse

Intel missed the boat on the mobile revolution and practically ceded the market to ARM and its hardware partners. However, the US chipmaker has the money and resources to spend a few years on the bench and make a comeback.

Last year, Intel subsidized sales of its Atom processors for tablets, managing to quadruple shipments in under a year. It is now turning its attention to the smartphone segment with new SoFIA Atom x3 processors. Frankly, I am not entirely sure these chips should even be referred to as Intel processors because they aren’t actually produced by the chip giant. SoFIA processors are designed on a tight budget, in cooperation with Chinese chipmakers. They are manufactured on the 28nm node, and they are slow, tiny and cheap.

This may come as a surprise to some casual observers, but Intel is not bothering with high-end mobile solutions; low-end SoFIA parts will power commoditized Android phones priced between $50 and $150. The first designs should start shipping by the end of the second quarter of 2015, and most of them will be designed for Asian markets, as well as emerging markets in other parts of the world. While it’s possible we will see some of them in North America and Europe, Intel’s focus appears to be on China and India.

Intel is hedging its bets with Atom x5 and x7 processors, which will use a completely new architecture plus the company’s cutting edge 14nm manufacturing node. However, these products are destined for tablets rather than smartphones, at least for the time being.

The big question, the one I don’t have an answer to, is how many design wins Intel can get under its belt. Analysts are divided on the issue and shipment forecasts look like guesstimates at this point.

Last year Intel proved that it is willing to sustain losses and burn billions of dollars to gain a foothold in the tablet market. It is still too early to say whether it will use the same approach with new Atom chips, especially smartphone SoFIA products.

I’ve only seen one actual product based on an Intel SoFIA processor so far – a Chinese $69 tablet with 3G connectivity. It’s essentially an oversized phone, so as you can imagine, an entry-level SoFIA phone could end up costing much less. It must be a tempting proposition for white-box smartphone and tablet makers, since they could easily design $50-$100 devices with an “Intel Inside” sticker on the back, which, from a marketing perspective, sounds good.

Unfortunately, we can only guess how many Intel phones and tablets will ship over the next year or so. We are obviously dealing with millions of units, probably tens of millions, but the question is: how many tens? Most analysts believe Intel will ship between 20 and 50 million Atom x3 processors this year, which is a drop in the bucket considering total smartphone shipments are projected to hit 1.2 billion devices this year. However, Intel is ruthless, has money to burn and doesn’t have to make a profit on any of these chips. It could capture 3 to 4 percent of the market by the end of 2015, and its market share should keep growing in 2016 and beyond.
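For a sense of scale, the analyst range quoted above can be turned into a market share with a few lines of arithmetic. The inputs are the figures from the paragraph above (20 to 50 million chips against a projected 1.2 billion smartphones); the helper function is only an illustration.

```python
def market_share(units_millions: float, total_millions: float) -> float:
    """Return shipments as a percentage of the total market."""
    return 100.0 * units_millions / total_millions


TOTAL_SMARTPHONES_MILLIONS = 1200.0  # projected 1.2 billion devices

low = market_share(20, TOTAL_SMARTPHONES_MILLIONS)
high = market_share(50, TOTAL_SMARTPHONES_MILLIONS)
print(f"{low:.1f}% to {high:.1f}%")
```

In other words, the low end of the forecast is under 2 percent of the market, and only the high end supports a share in the 3 to 4 percent range.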

What Does This Mean For Android Developers?

Intel got a bad reputation among some Android developers due to certain compatibility issues. This was a real problem a couple of years ago because the hardware was much different to standard ARM cores used in most devices.

Luckily, the company has made a lot of progress over the past two years; it offers extensive training programmes, comprehensive documentation and more. In fact, a quick glance at LinkedIn job listings reveals Intel is hiring dozens of Android developers, with a few new vacancies opening each month.

So everything is going well, right? Well, it depends…

Last week I had a chance to test a new Asus phone based on Intel’s Atom Z3560, and I must say I was pleased with the results; it’s a good hardware platform capable of addressing 4GB of RAM on a budget device. Asus thinks it can sell 30 million units this year, which is truly impressive given Intel’s smartphone market share.

The only problem is that some Android apps still misbehave on Intel hardware. Usually, it is nothing too big, but you do get some weird crashes, unrealistic benchmark scores and other compatibility quirks. The bad news is that developers can’t do much to address hardware-related issues, although getting some Intel-based devices for testing would be a good start. The good news: Intel is doing its best to sort everything out on its end, so you don’t have to.

As for ARM hardware, we will see more CPU cores in even more clusters. Single thread performance will remain limited on many mainstream devices, namely inexpensive phones based on quad- and octa-core Cortex-A53 SoCs. It is too early to say whether or not new Google/ARM compilers will be able to boost performance on such devices. They probably will, but by how much? Heterogeneous computing is another trend to watch out for next year.

Wrapping up, here is what Android developers should expect in terms of software and hardware in late 2015 and 2016:

- More Intel x86 processors in entry-level and mainstream market segments.

- Intel’s market share will be negligible in 2015, but may grow in 2016 and beyond.

- More ARMv8 multicore designs coming online.

- New “Optimizing” ART compiler.

- Heterogeneous computing is coming, but it will take a while.

- Transition to FinFET manufacturing nodes and Cortex-A72 will unlock more performance and features.