Case Study: Why I Use AWS Cloud Infrastructure for My Products

As a platform for running complex and demanding software products, AWS offers flexibility by using resources only when needed and scaling on demand.

In this article, Toptal JavaScript Developer Tomislav Capan explains why he uses AWS and what provisioned infrastructure can do for clients.

Tomislav is a veteran software engineer, technical consultant, and architect. He specializes in highly scalable full-stack applications.

As a platform for running a complex and demanding software product, AWS offers flexibility by utilizing resources when needed, at the scale needed. It’s on demand and instant, allowing full control over the running environment. When proposing such a cloud architecture to a client, the provisioned infrastructure and its price depend heavily on requirements that need to be set up front.

This article presents one such AWS cloud infrastructure architecture, proposed and implemented for LEVELS. LEVELS is a social network with an integrated facial payment function that finds and applies all the benefits users are entitled to through the card programs they belong to, the things they own, or the places they live in.

Requirements

The client had two principal requirements that the proposed solution had to meet:

- Security

- Scalability

The security requirement was about protecting users’ data from unauthorized access, both from outside and from inside. The scalability requirement concerned the infrastructure’s ability to support product growth. It had to adapt automatically to increasing traffic and occasional spikes. It also had to provide automatic failover and recovery in case of server failures, minimizing potential downtime.

Overview of AWS Security Concepts

One of the main benefits of setting up your own AWS Cloud infrastructure is full network isolation and full control over your cloud. That’s the main reason why you’d choose the Infrastructure as a Service (IaaS) route, rather than running somewhat simpler Platform as a Service (PaaS) environments, which offer solid security defaults but lack the complete, fine-grained control you get by setting up your own cloud with AWS.

Although LEVELS was a young product when they approached Toptal for AWS consulting services, they were willing to commit to AWS and knew they wanted state-of-the-art security with their infrastructure, as they are very concerned about user data and privacy. On top of that, they are planning to support credit card processing in the future, so they knew they would need to ensure PCI-DSS compliance at some point.

Virtual Private Cloud (VPC)

Security on AWS starts with the creation of your own Amazon Virtual Private Cloud (VPC) - a dedicated virtual network that hosts your AWS resources and is logically isolated from other virtual networks in the AWS Cloud. The VPC gets its own IP address range, fully configurable subnets, routing tables, network access control lists, and security groups (effectively, a firewall).

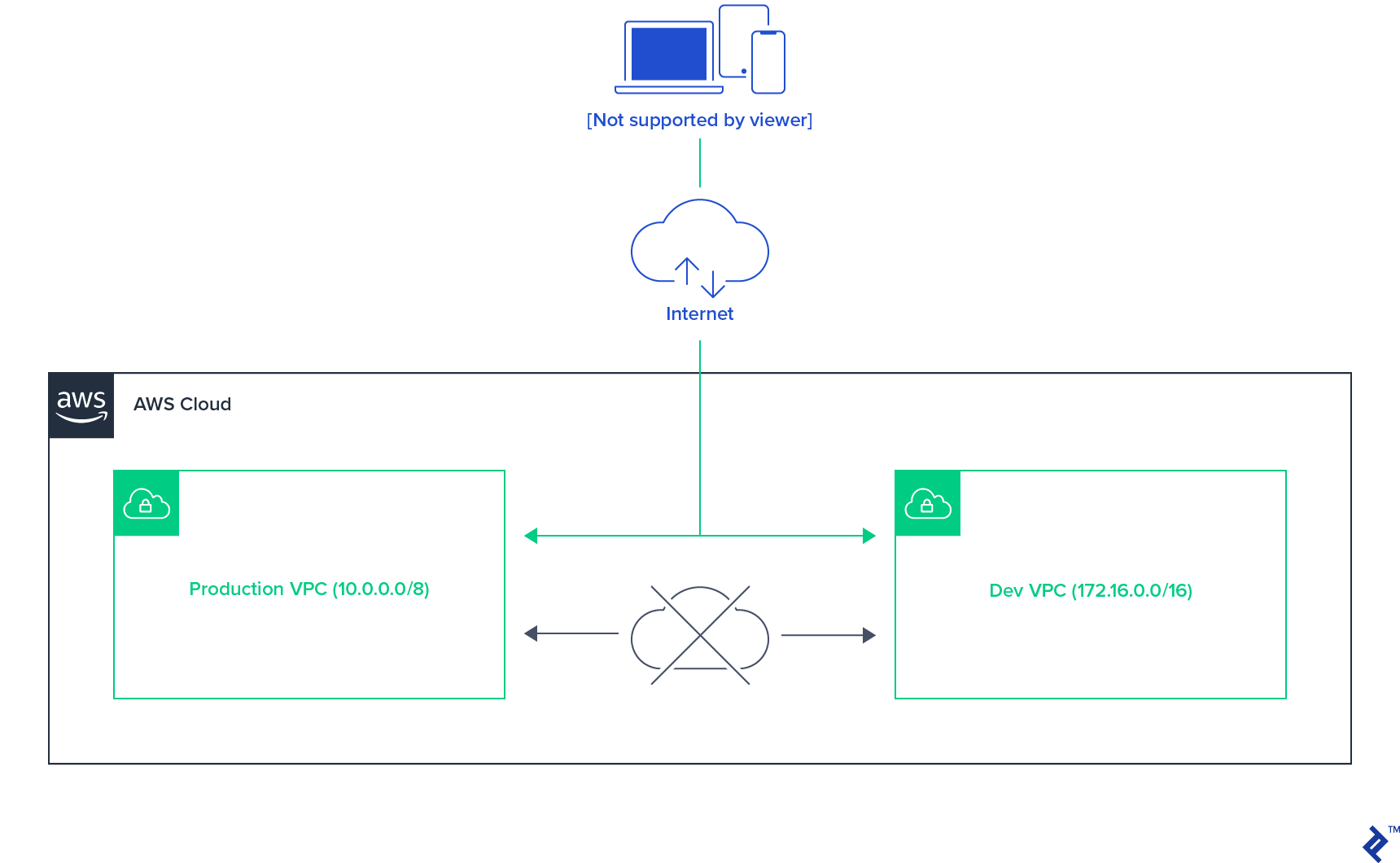

With LEVELS, we started by isolating our production environment from development, testing, and QA environments. The first idea was to run each of them as their own fully isolated VPC, each running all the services as required by the application. After some consideration, it turned out we could allow for some resource-sharing across the three non-production environments, which reduced the cost to some extent.

Thus, we settled on the production environment as one VPC, with development, testing, and QA environments as another VPC.

Access Isolation on a VPC Level

The two-VPC setup isolates the production environment from the remaining three environments at the network level. This ensures that an accidental application misconfiguration cannot cross that boundary. Even if a non-production environment’s configuration erroneously points to production resources, such as databases or message queues, there’s no way to reach them.

With development, testing, and QA environments sharing the same VPC, cross-boundary access is possible in case of misconfiguration, but, as those environments use test data, no real security concern exists with the lack of isolation there.

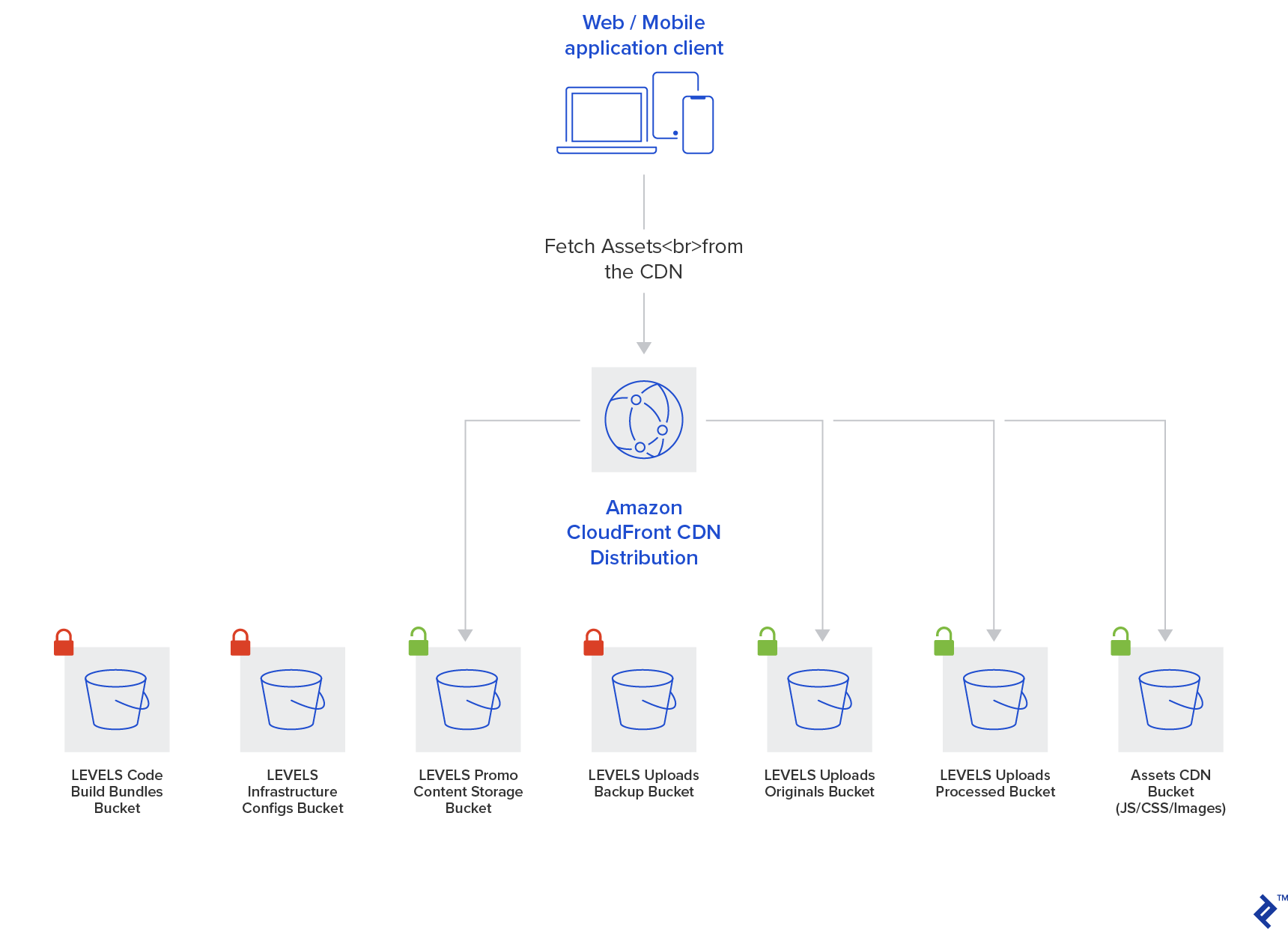
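To make the isolation argument concrete, here is a minimal Python sketch of the routing rule the two-VPC split relies on. It makes three assumptions:

- The two VPCs use non-overlapping private ranges. The CIDRs below are hypothetical, not the ones LEVELS uses.
- There is no VPC peering, VPN, or transit gateway between the two VPCs.
- The model is deliberately simplified. A real route table has more entries, but its local route only covers the VPC’s own range.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Vpc:
    name: str
    cidr: ipaddress.IPv4Network


# Hypothetical, non-overlapping private ranges for the two VPCs.
PRODUCTION = Vpc("production", ipaddress.ip_network("10.0.0.0/16"))
NON_PRODUCTION = Vpc("non-production", ipaddress.ip_network("10.1.0.0/16"))


def can_route(source: Vpc, target_ip: str) -> bool:
    """Simplified model: without peering, VPN, or a transit gateway, private
    traffic from a VPC can only reach addresses inside its own CIDR."""
    return ipaddress.ip_address(target_ip) in source.cidr


# A dev/test/QA service whose config mistakenly points at a production database.
misconfigured_db_host = "10.0.12.34"
print(can_route(NON_PRODUCTION, misconfigured_db_host))  # False: no route across the boundary
print(can_route(PRODUCTION, misconfigured_db_host))      # True
print(PRODUCTION.cidr.overlaps(NON_PRODUCTION.cidr))     # False
```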

Assets Store Security Model

Assets are stored in Amazon Simple Storage Service (S3) object storage. The S3 storage infrastructure is completely independent of VPCs, and its security model is different. Storage is organized into separate S3 buckets for each environment and/or class of assets, and each bucket is protected with the appropriate access rights.

With LEVELS, several classes of assets were identified:

- User uploads
- LEVELS-produced content (promotional videos and similar content)
- Web application and UI assets (JS code, icons, fonts)
- Application and infrastructure configuration
- Server-side deployment bundles

Each of these gets a separate S3 bucket.
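A bucket-per-environment-and-class layout is easy to generate rather than maintain by hand. The following Python sketch does that for the five asset classes listed above. The naming scheme and the environment names are assumptions made for illustration. So is the split between buckets that clients fetch from and buckets that only servers and CI/CD touch.

```python
from enum import Enum
from typing import Dict, List


class AssetClass(Enum):
    # The five classes identified for LEVELS; the slugs are hypothetical.
    USER_UPLOADS = "user-uploads"
    PRODUCED_CONTENT = "produced-content"
    WEB_ASSETS = "web-assets"
    CONFIGURATION = "configuration"
    DEPLOYMENTS = "deployments"


# Assumed split: classes fetched by clients (e.g. through a CDN) vs. classes
# that only servers and CI/CD ever read.
CLIENT_FACING = {AssetClass.USER_UPLOADS, AssetClass.PRODUCED_CONTENT, AssetClass.WEB_ASSETS}


def bucket_name(project: str, environment: str, asset: AssetClass) -> str:
    """One bucket per environment and asset class, e.g. levels-prod-web-assets."""
    return f"{project}-{environment}-{asset.value}"


def bucket_plan(project: str, environments: List[str]) -> Dict[str, bool]:
    """Map every bucket name to whether clients are meant to read from it."""
    return {
        bucket_name(project, env, asset): asset in CLIENT_FACING
        for env in environments
        for asset in AssetClass
    }


plan = bucket_plan("levels", ["prod", "dev"])
for name, client_facing in plan.items():
    print(f"{name:32} {'client-facing' if client_facing else 'private'}")
```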

Application Secrets Management

AWS offers two managed key-value services designed to keep secrets safe: the AWS Systems Manager Parameter Store, with encrypted parameters, and AWS Secrets Manager. You can learn more about both at Linux Academy and 1Strategy.

AWS manages the underlying encryption keys and handles encryption and decryption. Read and write permission policies can be applied to secrets based on their key prefixes. This works both for the infrastructure (servers) and for users (developers).
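As an illustration of prefix-based permissions, the following Python sketch builds an IAM policy that lets a server role read only the Parameter Store entries under its own prefix. It assumes two things:

- The secrets are stored as encrypted SecureString parameters under a hypothetical `/prod/api` path.
- They are encrypted with a customer-managed KMS key.

The region, account ID, and key ARN are placeholders. A developer policy would follow the same pattern, adding write actions such as `ssm:PutParameter`.

```python
import json


def read_secrets_policy(region: str, account_id: str, prefix: str, kms_key_arn: str) -> dict:
    """Build an IAM policy that allows reading only the parameters under `prefix`."""
    path = prefix.strip("/")
    arn_base = f"arn:aws:ssm:{region}:{account_id}:parameter/{path}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["ssm:GetParameter", "ssm:GetParameters", "ssm:GetParametersByPath"],
                # The path itself (used by GetParametersByPath) and every parameter below it.
                "Resource": [arn_base, f"{arn_base}/*"],
            },
            {
                # SecureString values are encrypted with KMS, so reading them
                # also requires permission to decrypt with the key used.
                "Effect": "Allow",
                "Action": ["kms:Decrypt"],
                "Resource": [kms_key_arn],
            },
        ],
    }


# Placeholder region, account ID, and key ARN.
policy = read_secrets_policy(
    "us-east-1",
    "123456789012",
    "/prod/api",
    "arn:aws:kms:us-east-1:123456789012:key/EXAMPLE",
)
print(json.dumps(policy, indent=2))
```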

Server SSH Access

SSH access to servers in a fully automated and immutable environment shouldn’t be needed at all. Logs can be extracted and sent to dedicated logging services, and monitoring is likewise offloaded to a dedicated monitoring service. Still, occasional SSH access might be needed for configuration inspection and debugging.

To limit the servers’ attack surface, the SSH port isn’t exposed to the public network. Instead, servers are reached through a bastion host, a dedicated machine that allows external SSH access. The bastion host is additionally protected by a firewall rule that whitelists only the allowed IP address range. Access is controlled by deploying users’ public SSH keys to the appropriate boxes, and password logins are disabled. Such a setup is a fairly resilient barrier against malicious attackers.
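The two firewall rules involved can be modeled in a few lines of Python. This is a sketch, not AWS configuration. The whitelisted range (a documentation-only address block) and the bastion’s private address are hypothetical. In a real VPC, the app servers’ security group would typically reference the bastion’s security group rather than a fixed IP. Disabling password logins happens on the servers themselves, for example with `PasswordAuthentication no` in `sshd_config`.

```python
import ipaddress

# Hypothetical values: the whitelisted admin range (a documentation-only
# block) and the bastion's private address inside the VPC.
ALLOWED_ADMIN_RANGE = ipaddress.ip_network("203.0.113.0/24")
BASTION_PRIVATE_IP = ipaddress.ip_address("10.0.0.10")


def ssh_allowed(source_ip: str, target: str) -> bool:
    """Model of the port 22 firewall rules for the two kinds of hosts."""
    src = ipaddress.ip_address(source_ip)
    if target == "bastion":
        # Only the whitelisted public range may open SSH to the bastion.
        return src in ALLOWED_ADMIN_RANGE
    if target == "app-server":
        # App servers accept SSH only from the bastion, never from the internet.
        return src == BASTION_PRIVATE_IP
    return False  # anything else: deny by default


print(ssh_allowed("203.0.113.25", "bastion"))     # True
print(ssh_allowed("198.51.100.7", "bastion"))     # False: not whitelisted
print(ssh_allowed("203.0.113.25", "app-server"))  # False: must go through the bastion
print(ssh_allowed("10.0.0.10", "app-server"))     # True
```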

Database Access

The same principles apply to database servers. There might occasionally be a need to connect to the database and manipulate the data directly. This is definitely not a recommended practice, and such access must be protected in the same way as SSH access to the servers.

A similar approach can be used, reusing the same bastion host infrastructure with SSH tunneling. Two chained SSH tunnels are needed: one to the bastion host and, through it, a second one to a server that has database access. The bastion host itself has no access to the database server. Through that second tunnel, the user’s client machine can then connect to the database server.
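With OpenSSH 7.3 or newer, both hops can be set up with a single command:

- `-J` (ProxyJump) routes the connection through the bastion.
- `-L` forwards a local port to the database via the second server.

The Python helper below only assembles that command. The user name, the host names, and the MySQL default port 3306 are illustrative assumptions.

```python
import shlex
from typing import List


def db_tunnel_command(user: str, bastion: str, app_server: str, db_host: str,
                      db_port: int = 3306, local_port: int = 3306) -> List[str]:
    """Assemble an OpenSSH command that hops through the bastion to a server
    with database access and forwards a local port to the database."""
    return [
        "ssh",
        "-N",                                       # no remote command, tunnel only
        "-J", f"{user}@{bastion}",                  # first hop: the bastion host
        "-L", f"{local_port}:{db_host}:{db_port}",  # local port -> database, resolved on the second host
        f"{user}@{app_server}",                     # second hop: a server that can reach the database
    ]


# Hypothetical user and host names.
cmd = db_tunnel_command("deploy", "bastion.example.com", "10.0.1.20", "db.levels.internal")
print(shlex.join(cmd))
# ssh -N -J deploy@bastion.example.com -L 3306:db.levels.internal:3306 deploy@10.0.1.20
```

While the command runs, a MySQL client on the user’s machine can connect to `127.0.0.1:3306`. The traffic then travels through both tunnels to the database.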

AWS Scalability Concepts Overview

When we talk purely about servers, scalability is rather easily achieved with platforms simpler than AWS. The main downside is that certain requirements might need to be covered by the platform’s external services, meaning data traveling across the public network and breaking security boundaries. Sticking with AWS, all data is kept private, while scalability needs to be engineered to achieve a scalable, resilient, and fault-tolerant infrastructure.

With AWS, the best approach to scalability is to leverage managed AWS services, whose monitoring and automation are battle-tested across thousands of clients using the platform. Add data backups and recovery mechanisms into the mix, and relying on the platform itself takes a lot of concerns off the table.

Offloading all that allows for a smaller operations team, which somewhat offsets the higher cost of platform-managed services. The LEVELS team was happy to choose that path wherever possible.

Amazon Elastic Compute Cloud (EC2)

Relying on a proven environment running EC2 instances is still a rather reasonable approach, compared to the additional overhead and complexity of running containers or the still very young and rapidly changing architectural concepts of serverless computing.

EC2 instance provisioning needs to be fully automated. Automation relies on two mechanisms:

- Custom AMIs for the different classes of servers.
- Auto Scaling Groups, which run dynamic server fleets and keep the appropriate number of server instances running according to the current traffic.

Additionally, auto-scaling allows setting lower and upper limits on the number of running EC2 instances. The lower limit aids fault tolerance, potentially eliminating downtime in case of instance failures. The upper limit keeps costs under control, at the price of some risk of downtime under unexpected extreme conditions. Auto-scaling then dynamically adjusts the number of instances within those limits.
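The interplay between demand and the two limits can be sketched as a simplified target-tracking calculation in Python. This is not AWS’s exact algorithm, which adds further safeguards. The CPU target and the group limits in the example are hypothetical.

```python
import math


def desired_capacity(current: int, metric: float, target: float,
                     min_size: int, max_size: int) -> int:
    """Scale the fleet in proportion to how far the average metric (e.g. CPU %)
    is from its target, then clamp the result to the group's limits."""
    if current <= 0:
        return min_size
    proposed = math.ceil(current * metric / target)
    return max(min_size, min(max_size, proposed))


# Hypothetical group: 2 to 10 instances, targeting 60% average CPU.
print(desired_capacity(4, metric=90, target=60, min_size=2, max_size=10))   # 6: scale out
print(desired_capacity(8, metric=100, target=60, min_size=2, max_size=10))  # 10: capped by the upper limit
print(desired_capacity(4, metric=5, target=60, min_size=2, max_size=10))    # 2: held at the lower limit
```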

Amazon Relational Database Service (RDS)

The team was already running on Amazon RDS for MySQL, but an appropriate backup, fault tolerance, and security strategy had yet to be developed. In the first iteration, the database instance was upgraded to a two-instance cluster in each VPC. The cluster was configured as a master and a read-replica, supporting automatic failover.

In the next iteration, once the MySQL 5.7-compatible engine became available, the infrastructure was upgraded to the Amazon Aurora MySQL service. Although fully managed, Aurora is not an automatically scaled solution in every respect. It scales storage automatically, avoiding the issue of capacity planning, but a solutions architect still needs to choose the compute instance size.

Downtime due to scaling can’t be avoided entirely, but the auto-healing capability helps reduce it to a minimum. Thanks to better replication capabilities, Aurora offers seamless failover. Scaling works as follows:

1. A read-replica with the desired computing power is created.
2. The cluster fails over to that instance.
3. All other read-replicas are taken out of the cluster, resized, and brought back into the cluster.

It requires some manual juggling but is more than doable.
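The scaling procedure above can be rehearsed as a small Python simulation. It models only the order of operations on a hypothetical two-instance cluster. In practice, each step is an AWS API call or console action, and the instance class names are merely examples.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Instance:
    name: str
    instance_class: str


@dataclass
class Cluster:
    writer: Instance
    readers: List[Instance] = field(default_factory=list)

    def failover_to(self, target: Instance) -> None:
        """Promote a reader to writer; the old writer rejoins as a reader."""
        self.readers.remove(target)
        self.readers.append(self.writer)
        self.writer = target


def scale_cluster(cluster: Cluster, new_class: str) -> List[str]:
    """Replay the scale-up steps and return a log of what happened."""
    log = []
    # 1. Add a read-replica with the desired computing power.
    replacement = Instance(f"{cluster.writer.name}-scaled", new_class)
    cluster.readers.append(replacement)
    log.append(f"added reader {replacement.name} ({new_class})")
    # 2. Fail over to it; this brief switch is when writes are interrupted.
    cluster.failover_to(replacement)
    log.append(f"failover: {replacement.name} is now the writer")
    # 3. Take the remaining readers (including the former writer) out one by one,
    #    resize them, and bring them back, so reads keep being served.
    for reader in cluster.readers:
        if reader.instance_class != new_class:
            reader.instance_class = new_class
            log.append(f"resized {reader.name} to {new_class}")
    return log


# Hypothetical cluster; db.r5.large -> db.r5.xlarge is just an example.
cluster = Cluster(Instance("db-1", "db.r5.large"), [Instance("db-2", "db.r5.large")])
for line in scale_cluster(cluster, "db.r5.xlarge"):
    print(line)
```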

The new Aurora Serverless offering enables some level of automatic scaling of the computing resources as well, a feature definitely worth looking into in the future.

Managed AWS Services

Aside from those two components, the rest of the system benefits from the auto-scaling mechanisms of the fully managed AWS services. Those are Elastic Load Balancing (ELB), Amazon Simple Queue Service (SQS), Amazon S3 object storage, AWS Lambda functions, and Amazon Simple Notification Service (SNS), where no particular scaling effort is needed from the architect.

Mutable vs. Immutable Infrastructure

We recognize two approaches to handling server infrastructure:

- A mutable infrastructure, where servers are installed and then continually updated and modified in place.
- An immutable infrastructure, where servers are never modified after being provisioned. Any configuration change or server update is handled by provisioning new servers from a common image or installation script to replace the old ones.

With LEVELS, the choice is to run an immutable infrastructure with no in-place upgrades, configuration changes, or management actions. The only exception is application deployments, which do happen in-place.
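The difference between the two approaches shows up clearly in a toy Python model. Servers are frozen dataclasses that cannot be changed after creation, so an update means launching replacements from a new image. The AMI and instance IDs are made up. A real rollout would be driven by newly built images and Auto Scaling, not by this function.

```python
import itertools
from dataclasses import FrozenInstanceError, dataclass
from typing import Tuple

_instance_ids = itertools.count(1)


@dataclass(frozen=True)
class Server:
    instance_id: str
    ami_id: str


def launch(ami_id: str) -> Server:
    """Provision a brand-new server from an image (IDs are made up)."""
    return Server(instance_id=f"i-{next(_instance_ids):04d}", ami_id=ami_id)


def roll_out(fleet: Tuple[Server, ...], new_ami: str) -> Tuple[Server, ...]:
    """Immutable update: replace every server with a fresh one built from the
    new image instead of patching the existing servers in place."""
    return tuple(launch(new_ami) for _ in fleet)


old_fleet = tuple(launch("ami-v1") for _ in range(3))
new_fleet = roll_out(old_fleet, "ami-v2")

try:
    old_fleet[0].ami_id = "ami-v2"  # in-place modification is rejected
except FrozenInstanceError:
    print("servers are immutable; roll out new ones instead")

print([s.instance_id for s in new_fleet])  # ['i-0004', 'i-0005', 'i-0006']
```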

More on mutable vs. immutable infrastructure can be found in HashiCorp’s blog.

Architecture Overview

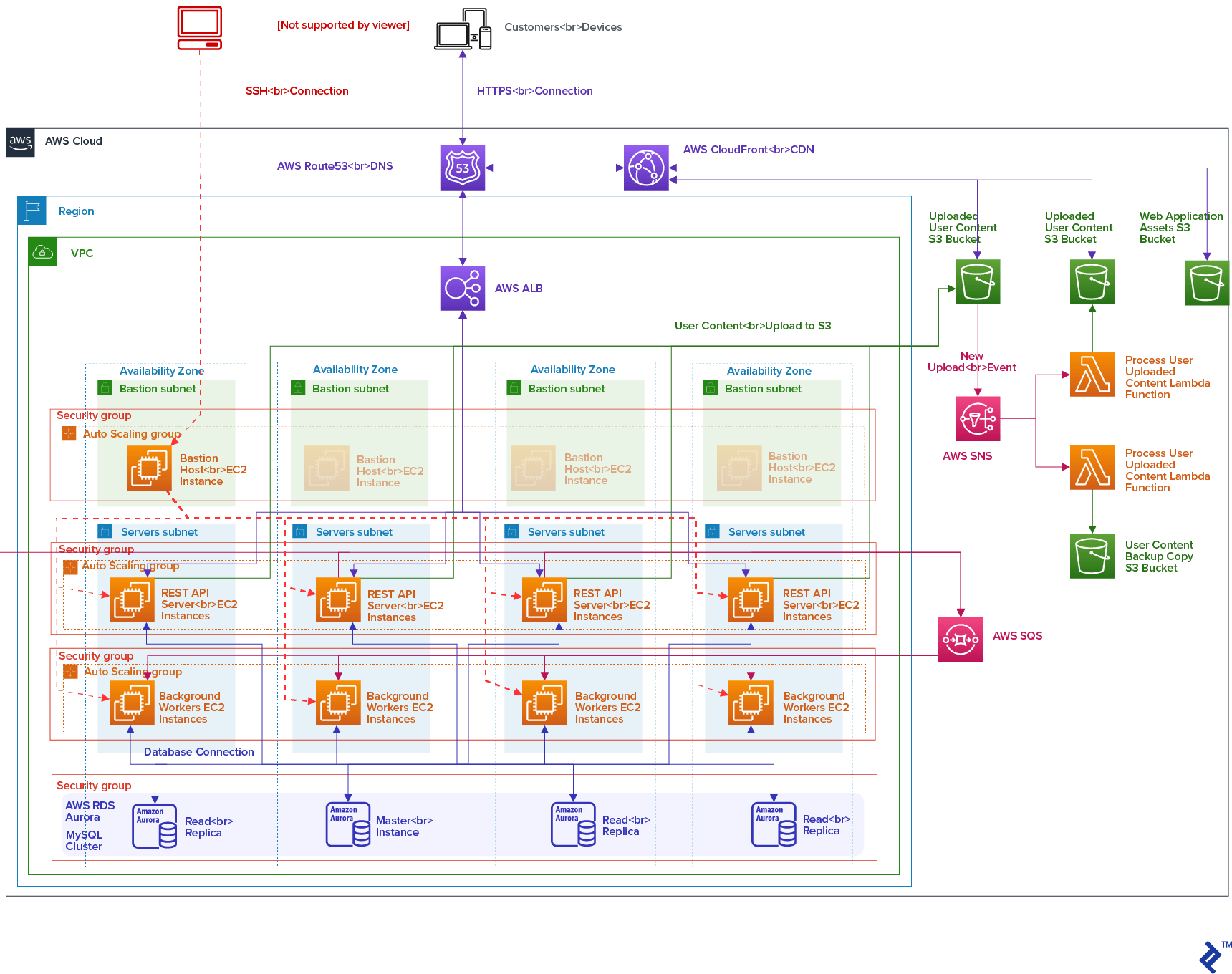

Technically, LEVELS is a mobile app and a web app with a REST API backend and some background services, a rather typical application nowadays. For this application, the following infrastructure was proposed and configured:

Virtual Network Isolation - Amazon VPC

The diagram shows the structure of one environment in its VPC. The other environments follow the same structure, and their networking setup is exactly the same. The one difference is that the non-production environments share the Application Load Balancer (ALB) and the Amazon Aurora cluster in their VPC.

The VPC spans four availability zones within an AWS region for fault tolerance. Subnets, security groups, and network access control lists satisfy the security and access control requirements.
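One way to lay out those subnets is to carve the VPC range into equally sized blocks, with one public and one private subnet per availability zone. The Python sketch below does this with the standard `ipaddress` module. The following are assumptions, not the documented LEVELS layout:

- The VPC range, the /20 subnet size, and the zone names.
- The public/private split, with the load balancer and bastion in public subnets and the application servers and database in private ones.

```python
import ipaddress
from typing import Dict, List


def plan_subnets(vpc_cidr: str, azs: List[str], new_prefix: int = 20) -> Dict[str, Dict[str, str]]:
    """Carve the VPC range into one public and one private subnet per AZ."""
    blocks = list(ipaddress.ip_network(vpc_cidr).subnets(new_prefix=new_prefix))
    if len(blocks) < 2 * len(azs):
        raise ValueError("VPC range too small for the requested subnets")
    return {
        az: {"public": str(blocks[2 * i]), "private": str(blocks[2 * i + 1])}
        for i, az in enumerate(azs)
    }


# Hypothetical VPC range and zone names.
AZS = ["us-east-1a", "us-east-1b", "us-east-1c", "us-east-1d"]
for az, subnets in plan_subnets("10.0.0.0/16", AZS).items():
    print(az, subnets)
```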

Spanning the infrastructure across multiple AWS Regions for additional fault tolerance would have been too complex and unnecessary at this early stage of the business and its product, but it remains an option for the future.

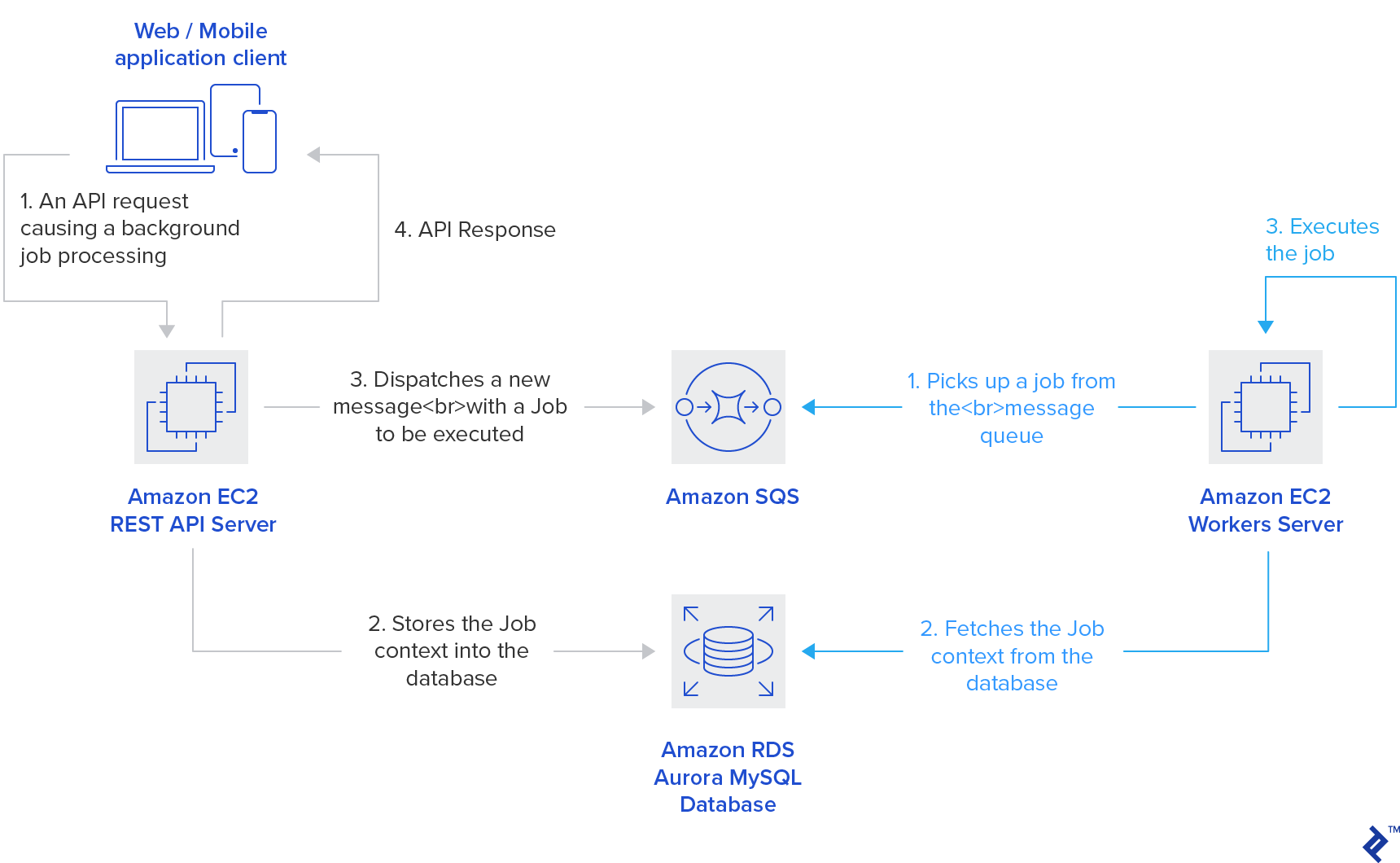

Computing

LEVELS runs a traditional REST API, plus some background workers for application logic that runs in the background. Both run as dynamic fleets of plain EC2 instances, fully automated through Auto Scaling Groups. The managed Amazon SQS service distributes background tasks (jobs), avoiding the need to run, maintain, and scale your own MQ server.
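The job-distribution pattern relies on SQS’s visibility timeout. A received message is hidden from other workers, and it reappears unless the worker deletes it after successful processing. The sketch below shows that contract with a tiny in-memory stand-in for the queue, so it runs without AWS. With the real service, boto3’s `receive_message` and `delete_message` (using the message’s `ReceiptHandle`) play the roles of `receive` and `delete`. The class and function names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Message:
    body: str
    receipt_handle: str
    visible_at: float = 0.0


@dataclass
class InMemoryQueue:
    """Stand-in for SQS: a received message stays hidden for
    `visibility_timeout` seconds and reappears unless it is deleted."""
    visibility_timeout: float = 30.0
    messages: List[Message] = field(default_factory=list)
    sent: int = 0

    def send(self, body: str) -> None:
        self.sent += 1
        self.messages.append(Message(body, f"rh-{self.sent}"))

    def receive(self, now: float) -> Optional[Message]:
        for msg in self.messages:
            if msg.visible_at <= now:
                msg.visible_at = now + self.visibility_timeout  # hide from other workers
                return msg
        return None

    def delete(self, receipt_handle: str) -> None:
        self.messages = [m for m in self.messages if m.receipt_handle != receipt_handle]


def work_once(queue: InMemoryQueue, handler: Callable[[str], None], now: float) -> bool:
    """Process at most one job; return False when nothing was available."""
    msg = queue.receive(now)
    if msg is None:
        return False
    try:
        handler(msg.body)
    except Exception:
        # Not deleted: the job becomes visible again after the timeout and is retried.
        return True
    queue.delete(msg.receipt_handle)  # acknowledge only after successful processing
    return True


queue = InMemoryQueue(visibility_timeout=30)
queue.send("job-1")
done: List[str] = []
work_once(queue, done.append, now=0)
print(done, queue.messages)  # ['job-1'] []
```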

There are also some utility tasks, such as image processing, that share no business logic with the rest of the application. Such tasks run extremely well on AWS Lambda. Lambda functions scale out horizontally on demand and offer far more parallelism than a fleet of server-based workers.

Load Balancing, Auto-scaling, and Fault Tolerance

Load balancing can be implemented manually with Nginx or HAProxy. Choosing Amazon Elastic Load Balancing (ELB) instead has one key benefit: the load balancing infrastructure itself is automatically scalable, highly available, and fault-tolerant.

LEVELS uses the Application Load Balancer (ALB) flavor of ELB, which makes HTTP-layer routing available at the load balancer. The AWS platform automatically registers new instances added to the fleet with the ALB. The ALB continuously monitors instance availability. Unhealthy instances get deregistered and terminated, which triggers Auto Scaling to replace them with fresh ones. This interplay between the two gives the EC2 fleet its auto-healing capability.
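The auto-healing loop can be sketched as a short Python simulation. In each round, instances that fail a number of consecutive health checks are removed, and the fleet is topped back up to its desired size. The threshold of two failures, the instance IDs, and the function names are illustrative assumptions, not the values LEVELS configured.

```python
import itertools
from dataclasses import dataclass
from typing import Callable, List, Tuple

_new_ids = itertools.count(1)


@dataclass
class Target:
    instance_id: str
    consecutive_failures: int = 0


def health_check_round(fleet: List[Target], is_healthy: Callable[[str], bool],
                       desired: int, unhealthy_threshold: int = 2) -> Tuple[List[Target], List[str]]:
    """One round of load balancer health checks plus the Auto Scaling reaction.
    Returns the new fleet and the IDs of the instances that were replaced."""
    survivors, replaced = [], []
    for target in fleet:
        if is_healthy(target.instance_id):
            target.consecutive_failures = 0
        else:
            target.consecutive_failures += 1
        if target.consecutive_failures >= unhealthy_threshold:
            replaced.append(target.instance_id)  # deregistered and terminated
        else:
            survivors.append(target)
    # Auto Scaling launches fresh instances to restore the desired count;
    # new instances are registered with the load balancer automatically.
    while len(survivors) < desired:
        survivors.append(Target(f"i-new-{next(_new_ids)}"))
    return survivors, replaced


fleet = [Target("i-a"), Target("i-b"), Target("i-c")]
failing = {"i-b"}
for round_no in (1, 2):
    fleet, replaced = health_check_round(fleet, lambda i: i not in failing, desired=3)
    print(round_no, [t.instance_id for t in fleet], "replaced:", replaced)
# 1 ['i-a', 'i-b', 'i-c'] replaced: []
# 2 ['i-a', 'i-c', 'i-new-1'] replaced: ['i-b']
```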

Application Data Store

The application data store is an Amazon Aurora MySQL cluster consisting of a master instance and a number of read-replica instances. If the master instance fails, one of the read-replicas is automatically promoted to become the new master. Configured this way, the cluster satisfies the fault-tolerance requirement.

Read-replicas, as their name implies, can also be used for distributing the database cluster load for data read operations.
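A minimal way for the application to take advantage of replicas is to route plain reads to them and everything else to the master. The Python sketch below uses naive round-robin and a crude statement check, and the host names are hypothetical. Aurora also provides a cluster (writer) endpoint and a reader endpoint that load-balances connections across replicas. The reader endpoint can replace the round-robin part.

```python
import itertools
from typing import List


class ReadWriteRouter:
    """Route plain reads to read-replicas (round-robin) and everything else
    to the master. Deliberately naive: it only inspects the statement text."""

    def __init__(self, writer: str, readers: List[str]):
        self.writer = writer
        self._readers = itertools.cycle(readers) if readers else None

    def route(self, sql: str) -> str:
        statement = " ".join(sql.split()).upper()
        is_plain_read = statement.startswith("SELECT") and "FOR UPDATE" not in statement
        if self._readers is None or not is_plain_read:
            return self.writer
        return next(self._readers)


# Hypothetical host names.
router = ReadWriteRouter("db-writer.internal", ["db-reader-1.internal", "db-reader-2.internal"])
for sql in ["SELECT * FROM offers", "UPDATE users SET name = 'x' WHERE id = 1",
            "select id from cards", "SELECT * FROM users WHERE id = 1 FOR UPDATE"]:
    print(router.route(sql), "<-", sql)
```

Replicas apply changes asynchronously, so a read issued right after a write may briefly see stale data. Sending such reads to the master avoids that.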

With Aurora, database storage scales automatically in 10 GB increments. Backups are fully managed by AWS, with point-in-time restore available by default. All that reduces the database administration burden to virtually nothing. The one exception is scaling up the database computing power when the need arises. This is a service well worth paying for to run worry-free.

Storing and Serving Assets

LEVELS accepts user-uploaded content, which needs to be stored. Amazon S3 object storage is, quite predictably, the service that takes care of that task. There are also application assets, such as UI images, icons, and fonts, that need to be made available to the client app, so they get stored in S3 as well. S3 replicates stored data internally, providing data durability by default.

Images uploaded by users vary in dimensions and file size and are often too large to serve directly, putting a burden on mobile connections. Producing several variations in different sizes makes it possible to serve better-optimized content for each use case. AWS Lambda handles that task. It also copies the original uploaded images into a separate backup bucket, just in case.
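The sizing part of such a Lambda function is pure arithmetic and can be sketched in Python. The variant names, the widths, and the object-key naming are hypothetical. The sketch omits two parts: the actual resizing, which would use an image library such as Pillow, and the S3 upload event that would trigger the function.

```python
import posixpath
from dataclasses import dataclass
from typing import List

# Hypothetical variant names and target widths.
VARIANT_WIDTHS = {"thumb": 160, "small": 640, "large": 1280}


@dataclass(frozen=True)
class Variant:
    name: str
    width: int
    height: int
    key: str


def plan_variants(key: str, width: int, height: int) -> List[Variant]:
    """Compute each variant's dimensions (aspect ratio preserved, never
    upscaled) and the object key it would be stored under."""
    stem, ext = posixpath.splitext(key)
    variants = []
    for name, target_width in VARIANT_WIDTHS.items():
        w = min(width, target_width)
        h = max(1, round(height * w / width))
        variants.append(Variant(name, w, h, f"{stem}_{name}{ext}"))
    return variants


for v in plan_variants("uploads/user-42/photo.jpg", 4000, 3000):
    print(v.key, v.width, v.height)
# uploads/user-42/photo_thumb.jpg 160 120
# uploads/user-42/photo_small.jpg 640 480
# uploads/user-42/photo_large.jpg 1280 960
```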

Finally, a web application running in the browser is also a set of static assets. The Continuous Integration build infrastructure compiles the JavaScript code and stores each build in S3 as well.

Once all these assets are safely stored in S3, they can be served directly, since S3 provides a public HTTP interface, or through the Amazon CloudFront CDN. CloudFront distributes the assets geographically, reducing latency for end users, and also enables HTTPS support for serving static assets.

Infrastructure Provisioning and Management

Provisioning the AWS infrastructure is a combination of networking, the AWS managed resources and services, and the bare EC2 computing resources. Managed AWS services are, well, managed. There’s not much to do with them except provisioning and applying the appropriate security, while with EC2 computing resources, we need to take care of the configuration and automation on our own.

Tooling

Managing the Lego-like AWS infrastructure through the web-based AWS Console is anything but trivial and, like any manual work, rather error-prone. That’s why a dedicated tool to automate that work is highly desirable.

One such tool is AWS CloudFormation, developed and maintained by AWS. Another is HashiCorp’s Terraform. Terraform is a slightly more flexible choice because it supports multiple platform providers. It is interesting here mainly because of its immutable infrastructure philosophy. That philosophy matches the way the LEVELS infrastructure is run, so Terraform, together with Packer for building the base AMIs, turned out to be a great fit.

Infrastructure Documentation

An additional benefit of an automation tool is that it removes the need for detailed infrastructure documentation, which gets outdated sooner or later. The provisioning tool’s “Infrastructure as Code” (IaC) paradigm doubles as documentation, with the benefit of always being up to date with the actual infrastructure. A high-level overview documented on the side is then enough, since it rarely changes and is relatively easy to update when it does.

Final Thoughts

The proposed AWS Cloud infrastructure is a scalable solution that can accommodate future product growth mostly automatically. After almost two years in operation, it keeps operating costs low by relying on cloud automation, without a dedicated systems operations team on duty 24/7.

With regard to security, AWS Cloud keeps all the data and all the resources within a private network inside the same cloud. No confidential data ever needs to travel across the public network. External access is granted to trusted support systems (CI/CD, external monitoring, or alerting) with fine-grained permissions. Each system can reach only the resources required for its role.

Architected and set up correctly, such a system is flexible, resilient, secure, and ready to meet future requirements. These include scaling for product growth and implementing advanced security such as PCI-DSS compliance.

It’s not necessarily cheaper than the productized offerings from Heroku or similar platforms, which work well as long as you fit the common usage patterns they cover. By choosing AWS, you gain more control over your infrastructure and an additional level of flexibility from the range of AWS services offered. You also get a customized configuration and the ability to fine-tune the whole infrastructure.

Understanding the basics

What is cloud infrastructure?

Cloud infrastructure is a highly automated offering where computing resources complemented with storage and networking services are provided to the user on demand. In essence, users have an IT infrastructure they can use without ever having to pay for the construction of physical infrastructure.

What is Amazon Web Services (AWS)?

Amazon Web Services is a cloud services provider that owns and maintains the network-connected hardware required for its services. AWS Cloud provides a mix of infrastructure as a service (IaaS), platform as a service (PaaS), and packaged software as a service (SaaS) offerings.

How does AWS Cloud work?

AWS Cloud works as an on-demand cloud-computing resource that allows users to provision and use only what they need. It offers flexible, reliable, scalable, easy-to-use, and cost-effective cloud-computing solutions.

What is Cloud Computing?

Cloud computing is the on-demand delivery of compute power, database, storage, applications, and other IT resources via the internet with pay-as-you-go pricing.

Is AWS a public cloud?

AWS is an API-driven, globally distributed public cloud offering near-instant and practically unlimited capacity through developer self-service. Applications can auto-scale capacity up or down based on demand, reaching global scale quickly.

Tomislav Capan

Zagreb, Croatia

Member since February 20, 2013
