The Missing Article About Qt Multithreading in C++

C++ developers strive to build robust multithreaded applications, but multithreading was never an easy thing to do.

In this article, Toptal Freelance Qt Developer Andrei Smirnov talks about several scenarios exploring concurrent programming with the Qt framework.

Andrei has 15+ years working for the likes of Microsoft, EMC, Motorola, and Deutsche Bank on mobile, desktop, and web using C++, C#, and JS.

C++ developers strive to build robust multithreaded Qt applications, but multithreading was never easy with all those race conditions, synchronization issues, deadlocks, and livelocks. To your credit, you don’t give up and find yourself scouring StackOverflow. Nevertheless, picking a correct, working solution from a dozen different answers is far from trivial, especially given that each solution comes with its own drawbacks.

Multithreading is a widespread programming and execution model that allows multiple threads to exist within the context of one process. These threads share the process’ resources but are able to execute independently. The threaded programming model provides developers with a useful abstraction of concurrent execution. Multithreading can also be applied to one process to enable parallel execution on a multiprocessing system.

The goal of this article is to aggregate the essential knowledge about concurrent programming with the Qt framework, specifically the most misunderstood topics. Readers are expected to have prior experience with Qt and C++ to understand the content.

Choosing between using QThreadPool and QThread

The Qt framework offers many tools for multithreading. Picking the right tool can be challenging at first, but in fact, the decision tree consists of just two options: you either want Qt to manage the threads for you, or you want to manage the threads by yourself. However, there are other important criteria:

- Tasks that don’t need the event loop, specifically tasks that do not use the signal/slot mechanism during their execution. Use: QtConcurrent and QThreadPool + QRunnable.
- Tasks that use signals/slots and therefore need the event loop. Use: worker objects moved to a QThread.

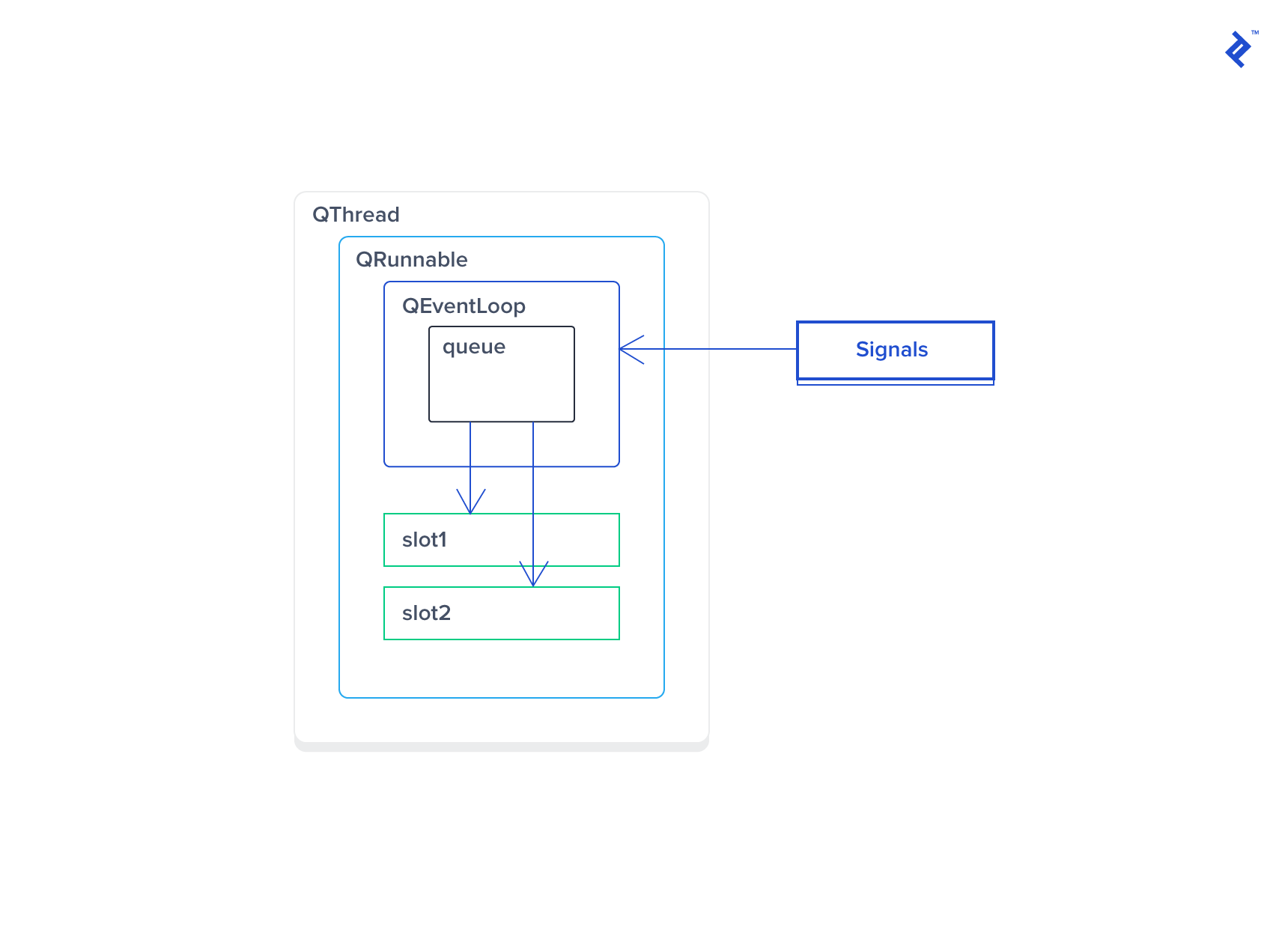

The great flexibility of the Qt framework allows you to work around the “missing event loop” problem and to add one to QRunnable:

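```cpp
#include <QEventLoop>
#include <QObject>
#include <QRunnable>

class MyTask : public QObject, public QRunnable
{
    Q_OBJECT

public:
    void run() override {
        // The loop is created here, in the pool thread that runs it:
        // a QEventLoop is tied to the thread that constructed it, so a loop
        // created as a member in the constructing thread cannot serve this one.
        QEventLoop loop;
        connect(this, &MyTask::finishTask, &loop, &QEventLoop::quit);
        loop.exec();
    }

signals:
    // you need to emit this signal to exit the loop,
    // otherwise the thread running the loop would remain blocked...
    void finishTask();
};
```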

Try to avoid such “workarounds”, though, because they are dangerous and inefficient: if one of the thread pool’s threads (running MyTask) is blocked waiting for a signal, it cannot execute other tasks from the pool.

You can also run a QThread without any event loop by overriding the QThread::run() method, and this is perfectly fine as long as you know what you are doing. For example, do not expect quit() to work in that case.
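Without an event loop, quit() has nothing to stop (the Qt documentation says it does nothing in that case), so such a run() has to poll a flag instead; QThread provides requestInterruption() and isInterruptionRequested() for this purpose. The sketch below shows the same pattern in standard C++ rather than Qt, assuming std::thread as a stand-in for QThread and a std::atomic<bool> as a stand-in for the interruption request; PollingWorker is a hypothetical name.

```cpp
#include <atomic>
#include <thread>

// Hypothetical analog of a QThread subclass whose run() has no event loop:
// the only way to stop it is a flag that the loop polls.
class PollingWorker {
public:
    void start() {
        _thread = std::thread([this] { run(); });
    }

    // Analog of QThread::requestInterruption() followed by QThread::wait().
    void stop() {
        _stopRequested.store(true);
        if (_thread.joinable()) {
            _thread.join();
        }
    }

    ~PollingWorker() { stop(); }

    long iterations() const { return _iterations.load(); }

private:
    // Analog of: while (!isInterruptionRequested()) { doWork(); }
    void run() {
        while (!_stopRequested.load()) {
            _iterations.fetch_add(1);  // stands in for one unit of real work
            std::this_thread::yield();
        }
    }

    std::atomic<bool> _stopRequested{false};
    std::atomic<long> _iterations{0};
    std::thread _thread;
};
```

Calling stop() plays the role of requestInterruption() plus wait(): the loop notices the flag on its next iteration, returns, and the thread is joined.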

Running one task instance at a time

Imagine you need to ensure that only one task instance at a time can be executed and all pending requests to run the same task are waiting on a certain queue. This is often needed when a task is accessing an exclusive resource, such as writing to the same file or sending packets using a TCP socket.

Let’s forget about computer science and the producer-consumer pattern for a moment and consider something trivial, something that can easily be found in real projects.

A naïve solution to this problem could be using a QMutex. Inside the task function, you could simply acquire the mutex, effectively serializing all threads attempting to run the task. This would guarantee that only one thread at a time could be running the function. However, this solution hurts performance by introducing high contention, because all those threads would be blocked (on the mutex) before they can proceed. If you have many threads actively using such a task and doing some useful job in between, then all these threads will be sleeping most of the time.

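```cpp
#include <QMutex>
#include <QString>
#include <QTextStream>

extern QTextStream logStream;  // the shared log stream, assumed to be defined elsewhere

void logEvent(const QString &event) {
    static QMutex lock;
    QMutexLocker locker(&lock);  // high contention!
    logStream << event;          // exclusive resource
}
```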

To avoid contention, we need a queue and a worker that lives in its own thread and processes the queue. This is pretty much the classic producer-consumer pattern. The worker (consumer) picks requests from the queue one by one, and each producer simply adds its requests to the queue. It sounds simple at first, and you may think of using QQueue and QWaitCondition, but hold on and let’s see if we can achieve the goal without these primitives:

- We can use QThreadPool, as it has a queue of pending tasks, or
- We can use the default QThread::run(), because it runs a QEventLoop.
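For comparison, this is roughly the hand-rolled producer-consumer queue that both options spare us, sketched in standard C++ rather than Qt: std::queue, std::mutex, and std::condition_variable stand in for QQueue, QMutex, and QWaitCondition. SerialExecutor and post() are hypothetical names.

```cpp
#include <condition_variable>
#include <functional>
#include <mutex>
#include <queue>
#include <thread>
#include <utility>

// Hypothetical producer-consumer sketch: any thread may post() a request,
// and a single consumer thread executes requests one at a time, in order.
class SerialExecutor {
public:
    SerialExecutor() : _consumer([this] { consume(); }) {}

    ~SerialExecutor() {
        {
            std::lock_guard<std::mutex> lock(_mutex);
            _done = true;
        }
        _wakeUp.notify_one();
        _consumer.join();  // the consumer drains pending requests before exiting
    }

    // Producer side: enqueue a request and return immediately.
    void post(std::function<void()> request) {
        {
            std::lock_guard<std::mutex> lock(_mutex);
            _requests.push(std::move(request));
        }
        _wakeUp.notify_one();
    }

private:
    // Consumer side: runs on the dedicated thread.
    void consume() {
        for (;;) {
            std::function<void()> request;
            {
                std::unique_lock<std::mutex> lock(_mutex);
                _wakeUp.wait(lock, [this] { return _done || !_requests.empty(); });
                if (_requests.empty()) {
                    return;  // _done is set and nothing is left to process
                }
                request = std::move(_requests.front());
                _requests.pop();
            }
            request();  // runs outside the lock, so producers never wait on it
        }
    }

    std::mutex _mutex;
    std::condition_variable _wakeUp;
    std::queue<std::function<void()>> _requests;
    bool _done = false;
    std::thread _consumer;  // declared last, so it starts after the members above exist
};
```

Every request runs on the same dedicated thread and in posting order, which is what a QThread’s event loop gives us without writing any of this.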

The first option is to use QThreadPool. We can create a QThreadPool instance and call QThreadPool::setMaxThreadCount(1). Then we can use QtConcurrent::run() to schedule requests:

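```cpp
#include <QObject>
#include <QString>
#include <QTextStream>
#include <QThreadPool>
#include <QtConcurrent>

extern QTextStream logStream;  // assumed, as in the previous example

class Logger : public QObject
{
public:
    explicit Logger(QObject *parent = nullptr) : QObject(parent) {
        threadPool.setMaxThreadCount(1);
    }

    void logEvent(const QString &event) {
        // with a single pool thread, requests queue up and run one at a time
        QtConcurrent::run(&threadPool, [this, event] {
            logEventCore(event);
        });
    }

private:
    void logEventCore(const QString &event) {
        logStream << event;
    }

    // declared last, so it is destroyed first: QThreadPool's destructor
    // blocks until all runnables (which still use `this`) have completed
    QThreadPool threadPool;
};
```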

This solution has one benefit: QThreadPool::clear() allows you to instantly cancel all pending requests, for example when your application needs to shut down quickly. However, there is also a significant drawback connected to thread affinity: logEventCore will likely execute in different threads from call to call, because an idle pool thread expires after QThreadPool::expiryTimeout() (30 seconds by default), and a later request may then be served by a freshly created thread. And we know Qt has some classes that require thread affinity: QTimer, QTcpSocket, and possibly some others.

According to the Qt documentation on thread affinity, timers started in one thread cannot be stopped from another thread, and only the thread owning a socket instance can use that socket. This implies that you must stop any running timers in the thread that started them, and you must call QTcpSocket::close() in the thread owning the socket. Both are usually done in destructors.

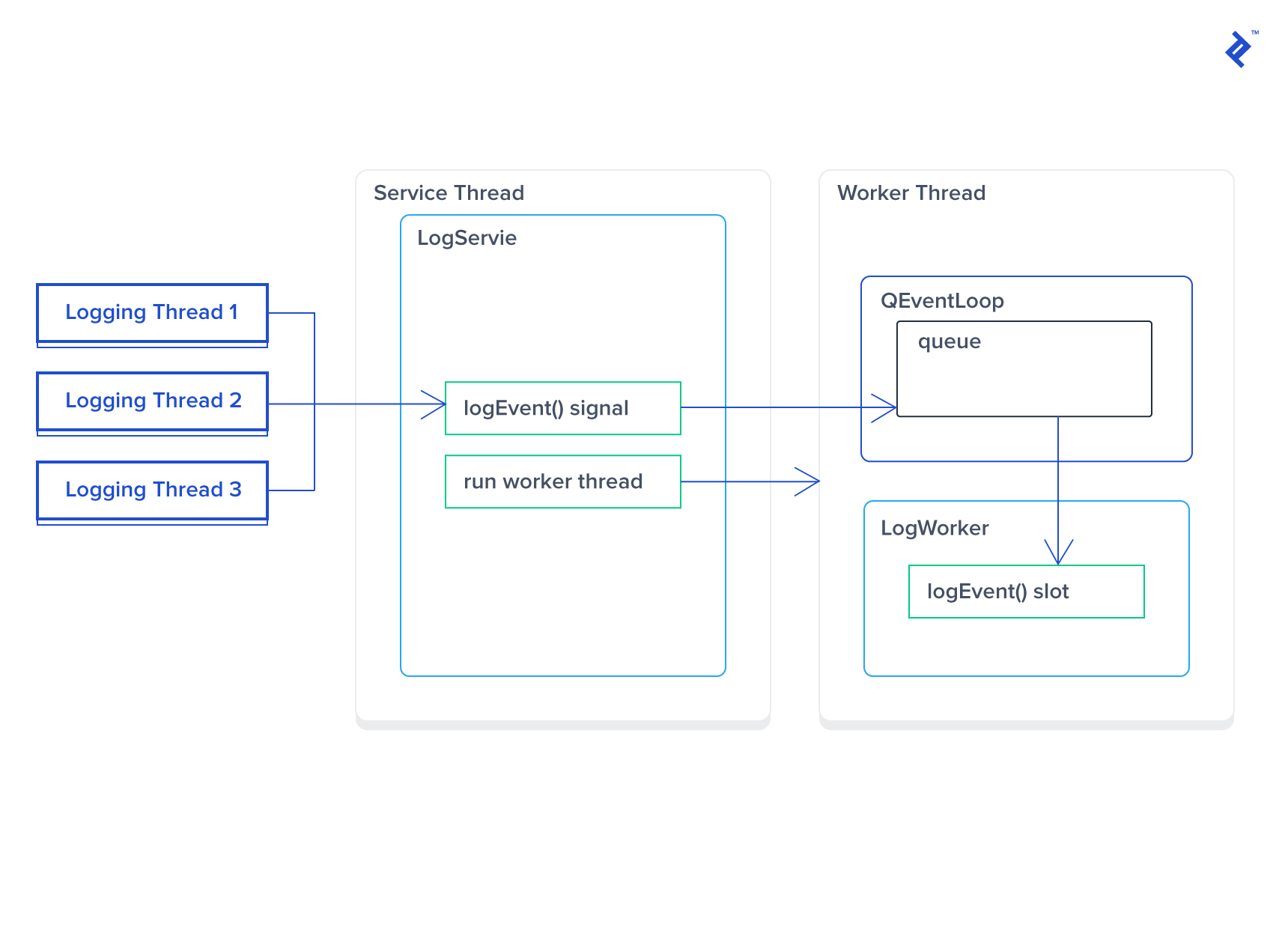

The better solution relies on using QEventLoop provided by QThread. The idea is simple: we use a signal/slot mechanism to issue requests, and the event loop running inside the thread will serve as a queue allowing just one slot at a time to be executed.

```cpp
#include <QObject>
#include <QString>

// the worker that will be moved to a thread
class LogWorker : public QObject
{
    Q_OBJECT

public:
    explicit LogWorker(QObject *parent = nullptr);

public slots:
    // this slot will be executed by the event loop (one call at a time)
    void logEvent(const QString &event);
};
```

The implementation of the LogWorker constructor and logEvent() is straightforward and is therefore not provided here. Now we need a service that will manage the thread and the worker instance:

```cpp
#include <QObject>
#include <QString>
#include <QThread>

// interface
class LogService : public QObject
{
    Q_OBJECT

public:
    explicit LogService(QObject *parent = nullptr);
    ~LogService() override;

signals:
    // to use the service, just call this signal to send a request:
    // logService->logEvent("event");
    void logEvent(const QString &event);

private:
    QThread *thread;
    LogWorker *worker;
};

// implementation
LogService::LogService(QObject *parent) : QObject(parent) {
    thread = new QThread(this);
    worker = new LogWorker;  // no parent: an object with a parent cannot be moved to another thread
    worker->moveToThread(thread);
    connect(this, &LogService::logEvent, worker, &LogWorker::logEvent);
    connect(thread, &QThread::finished, worker, &QObject::deleteLater);
    thread->start();
}

LogService::~LogService() {
    thread->quit();
    thread->wait();
}
```

Let’s discuss how this code works:

- In the constructor, we create a thread and a worker instance. Notice that the worker does not receive a parent, because it will be moved to the new thread, and Qt cannot move an object that has a parent. Because of this, Qt won’t release the worker’s memory automatically, so we do it ourselves by connecting the QThread::finished signal to the deleteLater slot. We also connect the proxy method LogService::logEvent() to LogWorker::logEvent(), which will use Qt::QueuedConnection mode because the two objects live in different threads.
- In the destructor, we ask the thread’s event loop to quit. Note that quit() does not append a quit event behind the pending requests: as the QThread::exit() documentation puts it, it is event processing that stops. So if hundreds of logEvent() calls were made just before the destructor call, Qt does not promise that every one of them is handled before the loop exits, and requests posted after quit() are never processed. Stopping takes time, of course, so we must wait() until the event loop actually exits.
- The logging itself (LogWorker::logEvent) is always done in the same thread, so this approach works well for classes requiring thread affinity. The LogWorker constructor, however, is executed in the main thread (specifically, the thread LogService is running in), so you need to be very careful about what code you run there. The destructor needs the same care: do not stop timers or use sockets in the worker’s destructor unless you are sure it runs in the worker thread. With the finished-to-deleteLater connection above it does (the QThread::finished documentation notes that deferred deletions are still processed in that thread), but nothing enforces this if the worker is ever deleted some other way.

Executing worker’s destructor in the same thread

If your worker is dealing with timers or sockets, you need to be sure the destructor is executed in the same thread (the thread you created for the worker and moved the worker to). The most explicit way to guarantee this is to subclass QThread and delete the worker inside the QThread::run() method. Consider the following template:

```cpp
#include <QObject>
#include <QThread>

template <typename TWorker>
class Thread : public QThread
{
public:
    explicit Thread(TWorker *worker, QObject *parent = nullptr)
        : QThread(parent), _worker(worker) {
        _worker->moveToThread(this);
        start();
    }

    ~Thread() {
        quit();
        wait();
    }

    TWorker *worker() const {
        return _worker;
    }

protected:
    void run() override {
        QThread::run();  // runs the event loop until quit()
        delete _worker;  // executed in this thread, after the loop has exited
    }

private:
    TWorker *_worker;
};
```

Using this template, we redefine LogService from the previous example:

```cpp
// interface
class LogService : public Thread<LogWorker>
{
    Q_OBJECT

public:
    explicit LogService(QObject *parent = nullptr);

signals:
    void logEvent(const QString &event);
};

// implementation
LogService::LogService(QObject *parent)
    : Thread<LogWorker>(new LogWorker, parent) {
    connect(this, &LogService::logEvent, worker(), &LogWorker::logEvent);
}
```

Let’s discuss how this is supposed to work:

- We made LogService the QThread object because we needed to implement a custom run() function. Ideally, we would subclass QThread privately to keep QThread’s functions out of reach, since we want to control the thread’s lifecycle internally. Unfortunately, QObject must be an accessible base of LogService: with private inheritance, the code generated for Q_OBJECT and the connect() call in the constructor would not compile. So Thread inherits QThread publicly, and callers should treat QThread’s own functions (start(), quit(), and so on) as off-limits.
- In Thread::run(), we run the event loop by calling the default QThread::run() implementation and destroy the worker instance right after the event loop has quit. Note that the worker’s destructor is executed in the same thread.
- LogService::logEvent() is the proxy function (signal) that posts the logging event to the thread’s event queue.
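Stripped of Qt, the same ownership rule can be sketched in standard C++: the caller creates the worker, but the dedicated thread destroys it once its work function returns, just as Thread<TWorker>::run() deletes the worker after QThread::run() returns. OwningThread, ProbeWorker, and work() are hypothetical names; work() stands in for the event loop, so there is no quit(): the thread simply finishes when work() returns.

```cpp
#include <memory>
#include <thread>
#include <utility>

// Hypothetical analog of Thread<TWorker>: the worker is destroyed on the
// dedicated thread, never on the thread that created it.
template <typename TWorker>
class OwningThread {
public:
    explicit OwningThread(std::unique_ptr<TWorker> worker)
        : _worker(std::move(worker)),
          _thread([this] { run(); }) {}

    ~OwningThread() { _thread.join(); }

    OwningThread(const OwningThread &) = delete;
    OwningThread &operator=(const OwningThread &) = delete;

private:
    void run() {
        _worker->work();  // stands in for QThread::run(), i.e. the event loop
        _worker.reset();  // like `delete _worker`: the destructor runs on this thread
    }

    std::unique_ptr<TWorker> _worker;
    std::thread _thread;  // declared last, so it starts after _worker is set
};

// Hypothetical worker that records where it worked and where it was destroyed.
struct ProbeWorker {
    std::thread::id *workedOn = nullptr;
    std::thread::id *destroyedOn = nullptr;

    void work() {
        if (workedOn) *workedOn = std::this_thread::get_id();
    }
    ~ProbeWorker() {
        if (destroyedOn) *destroyedOn = std::this_thread::get_id();
    }
};
```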

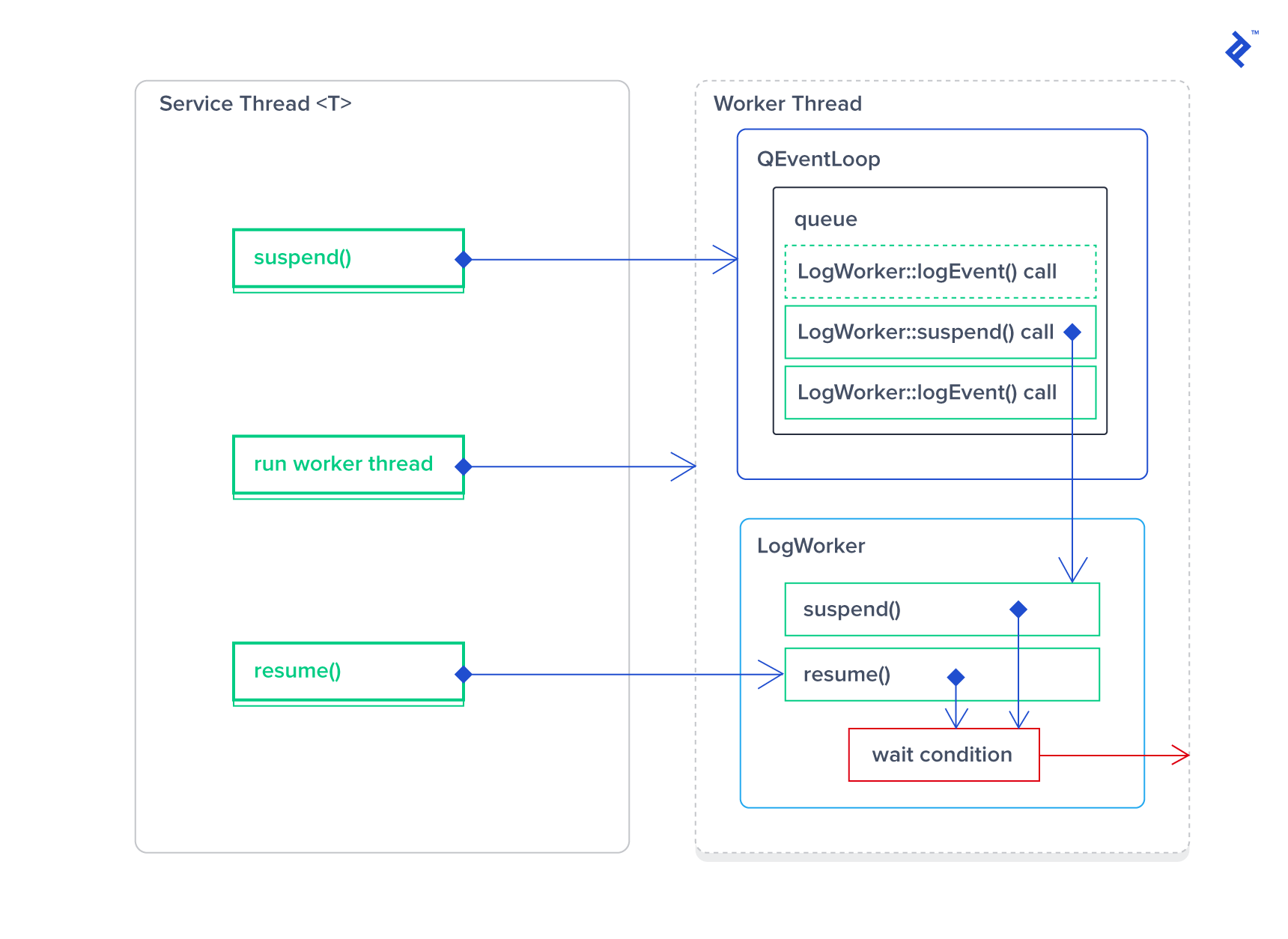

Pausing and resuming the threads

Another interesting opportunity is being able to suspend and resume our custom threads. Imagine your application is doing some processing that needs to be suspended when the application gets minimized or locked, or has just lost the network connection. This can be achieved by building a custom asynchronous queue that will hold all pending requests until the worker is resumed. However, since we are looking for the easiest solutions, we will (again) use the event loop’s queue for the same purpose.

To suspend a thread, we clearly need it to wait on a certain condition. While the thread is blocked this way, its event loop is not handling any events, and Qt has to keep them in the queue. Once resumed, the event loop will process all accumulated requests. For the waiting condition, we simply use a QWaitCondition object, which also requires a QMutex. To design a generic solution that could be reused by any worker, we need to put all suspend/resume logic into a reusable base class. Let’s call it SuspendableWorker. Such a class shall support two methods:

- suspend() is a blocking call that makes the worker thread wait on a wait condition. This is done by posting a suspend request into the queue and waiting until it is handled, pretty much like QThread::quit() + wait().
- resume() signals the wait condition to wake the sleeping thread up so that it continues its execution.

Let’s review the interface and implementation:

```cpp
#include <QMutex>
#include <QObject>
#include <QWaitCondition>

// interface
class SuspendableWorker : public QObject
{
    Q_OBJECT

public:
    explicit SuspendableWorker(QObject *parent = nullptr);

    // resume() must be called from the outer thread.
    void resume();

    // suspend() must be called from the outer thread.
    // the function blocks the caller's thread until
    // the worker thread is suspended.
    void suspend();

private slots:
    void suspendImpl();

private:
    QMutex _waitMutex;
    QWaitCondition _waitCondition;
    bool _suspended = false;  // guarded by _waitMutex
};

// implementation
SuspendableWorker::SuspendableWorker(QObject *parent) : QObject(parent) {}

void SuspendableWorker::resume() {
    QMutexLocker locker(&_waitMutex);
    _suspended = false;
    _waitCondition.wakeAll();
}

void SuspendableWorker::suspend() {
    // queue the suspend request behind the requests already pending
    QMetaObject::invokeMethod(this, &SuspendableWorker::suspendImpl, Qt::QueuedConnection);
    // block the calling thread until the worker thread is parked
    QMutexLocker locker(&_waitMutex);
    while (!_suspended)
        _waitCondition.wait(&_waitMutex);
}

void SuspendableWorker::suspendImpl() {
    // runs in the worker thread; blocking here stalls its event loop
    QMutexLocker locker(&_waitMutex);
    _suspended = true;
    _waitCondition.wakeAll();  // lets suspend() return
    while (_suspended)
        _waitCondition.wait(&_waitMutex);
}
```

Remember that a suspended thread is blocked inside a slot, so its event loop cannot act on a quit() request until the thread is resumed. For this reason, we cannot use this safely with a vanilla QThread unless we resume the thread before asking it to quit. Let’s integrate this into our custom Thread<T> template to make it bulletproof.

```cpp
// public inheritance: Q_OBJECT subclasses such as LogService need
// QObject to be an accessible base
template <typename TWorker>
class Thread : public QThread
{
public:
    explicit Thread(TWorker *worker, QObject *parent = nullptr)
        : QThread(parent), _worker(worker) {
        _worker->moveToThread(this);
        start();
    }

    ~Thread() {
        resume();  // a suspended worker could never act on the quit request
        quit();
        wait();
    }

    void suspend() {
        auto suspendable = qobject_cast<SuspendableWorker *>(_worker);
        if (suspendable != nullptr) {
            suspendable->suspend();
        }
    }

    void resume() {
        auto suspendable = qobject_cast<SuspendableWorker *>(_worker);
        if (suspendable != nullptr) {
            suspendable->resume();
        }
    }

    TWorker *worker() const {
        return _worker;
    }

protected:
    void run() override {
        QThread::run();
        delete _worker;
    }

private:
    TWorker *_worker;
};
```

With these changes, we resume the thread before asking it to quit. Also, Thread<TWorker> still accepts any kind of worker, regardless of whether it is a SuspendableWorker or not.

The usage would be as follows:

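```cpp
// assuming LogWorker now derives from SuspendableWorker;
// otherwise suspend() and resume() do nothing
LogService logService;
logService.logEvent("processed event");
logService.suspend();
logService.logEvent("queued event");
logService.resume();
// "queued event" will now be processed.
```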

volatile vs atomic

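This is a commonly misunderstood topic. Many developers believe that volatile variables can serve as flags shared by multiple threads and that this protects against data races. That is false: volatile only stops the compiler from optimizing accesses away; it provides neither atomicity nor any ordering between threads, so unsynchronized concurrent access is still a data race. QAtomic* classes (or std::atomic) must be used for this purpose.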

Let’s consider a realistic example: a TcpConnection class that works in a dedicated thread, where we want the class to export a thread-safe method: bool isConnected(). Internally, the class listens to the socket’s connected and disconnected events to maintain an internal boolean flag:

```cpp
#include <QObject>

class TcpConnection : public QObject
{
    Q_OBJECT

public:
    // this is not thread-safe!
    bool isConnected() const {
        return _connected;
    }

private slots:
    // connected to the socket's connected() and disconnected() signals (not shown)
    void handleSocketConnected() {
        _connected = true;
    }

    void handleSocketDisconnected() {
        _connected = false;
    }

private:
    bool _connected = false;
};
```

Making the _connected member volatile is not going to solve the problem and is not going to make isConnected() thread-safe. Such code may appear to work 99% of the time, but the remaining 1% will make your life a nightmare. To fix this, we need to protect the variable from concurrent access by multiple threads. Let’s use QReadWriteLock, together with its QReadLocker and QWriteLocker helpers, for this purpose:

```cpp
#include <QObject>
#include <QReadWriteLock>

class TcpConnection : public QObject
{
    Q_OBJECT

public:
    bool isConnected() const {
        QReadLocker locker(&_lock);
        return _connected;
    }

private slots:
    void handleSocketConnected() {
        QWriteLocker locker(&_lock);
        _connected = true;
    }

    void handleSocketDisconnected() {
        QWriteLocker locker(&_lock);
        _connected = false;
    }

private:
    mutable QReadWriteLock _lock;  // mutable so the const isConnected() can lock it
    bool _connected = false;
};
```

This works reliably, but it is not as fast as using “lock-free” atomic operations. The third solution is both fast and thread-safe (the example uses std::atomic instead of QAtomicInt, but semantically these are equivalent):

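```cpp
#include <QObject>
#include <atomic>

class TcpConnection : public QObject
{
    Q_OBJECT

public:
    bool isConnected() const {
        return _connected;  // atomic load
    }

private slots:
    void handleSocketConnected() {
        _connected = true;  // atomic store
    }

    void handleSocketDisconnected() {
        _connected = false;
    }

private:
    // explicitly initialized: before C++20, a default-constructed
    // std::atomic<bool> holds an indeterminate value
    std::atomic<bool> _connected{false};
};
```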

Conclusion

In this article, we discussed several important concerns about concurrent programming with the Qt framework and designed solutions to address specific use cases. We have not covered many of the simpler topics, such as using atomic primitives, read-write locks, and many others, but if you are interested in these, leave a comment below and ask for such a tutorial.

If you’re interested in exploring Qmake, I also recently published The Vital Guide to Qmake. It’s a great read!

Understanding the basics

How is multithreading useful?

This multithreading model provides developers with a useful abstraction for concurrent execution. However, it really shines when applied to a single process: enabling parallel execution on a multiprocessor system.

What is multithreading?

Multithreading is a programming and execution model that allows multiple threads to exist within the context of a single process. These threads share the process’ resources but are able to execute independently.

What is a Qt application?

An application that is built with the Qt framework. Qt applications are often written in C++ because the framework itself is built with C++. However, there are other language bindings, such as Python-Qt.

What concurrent models are supported by Qt?

Task-based concurrency using QThreadPool and QRunnable, and thread-based programming using the QThread class.

What synchronization primitives are available for Qt developers?

The most frequently used are QMutex, QSemaphore, and QReadWriteLock. There are also lock-free atomic operations provided by QAtomic* classes.

Andrei Smirnov

Ankara, Turkey

Member since December 11, 2014
