Beyond Text: Designing the Future of AI Prompting

Generative AI is a potent tool, but text prompting can make it hard for users to get the results they want. Four design experts share ideas for making AI prompting more intuitive and accessible to a wider range of users.

Micah is a digital designer who has worked with clients such as Google, Deloitte, and Autodesk. He is also the Lead Editor of Toptal’s Design Blog. His design expertise has been featured in Fast Company, TNW, and other notable publications.

Generative artificial intelligence (AI) can seem like a magic genie. So perhaps it’s no surprise that people use it like one—by describing their “wishes” in natural language, using text prompts. After all, what user interface could be more flexible and powerful than simply telling software what you want from it?

As it turns out, so-called “natural language” still causes serious usability problems. Renowned UX researcher Jakob Nielsen, co-founder of the Nielsen Norman Group, calls it the articulation barrier: For many users, describing their intent in writing—with enough clarity and specificity to produce useful outputs from generative AI—is just too hard. “Most likely, half the population can’t do it,” Nielsen writes.

In this roundtable discussion, four Toptal designers explain why text prompts are so tricky and share their approaches to solving generative AI’s “blank page” problem. These experts are at the forefront of applying the latest technologies to design, and together they bring a broad range of expertise to this discussion of the future of AI prompting. Damir Kotorić has led design projects for clients like Booking.com and the Australian government, and was the lead UX instructor at General Assembly. Darwin Álvarez currently leads UX projects for Mercado Libre, one of Latin America’s leading e-commerce platforms. Darrell Estabrook has more than 25 years of experience in digital product design for enterprise clients like IBM, CSX, and CarMax. Edward Moore has more than two decades of UX design experience on award-winning projects for Google, Sony, and Electronic Arts.

This conversation has been edited for clarity and length.

To begin, what do you consider to be the biggest weakness of text prompting for generative AI?

Damir Kotorić: Currently, it’s a one-way street. As the prompt creator, you’re almost expected to create an immaculate conception of a prompt to achieve your desired result. This is not how creativity works, especially in the digital age. The enormous benefit of Microsoft Word over a typewriter is that you can easily edit your creation in Word. It’s ping-pong, back-and-forth. You try something, then you get some feedback from your client or colleague, then you pivot again. In this regard, the current AI tools are still primitive.

Darwin Álvarez: Text prompting isn’t flexible. In most cases, I have to know exactly what I want, and it’s not a progressive process where I can iterate and expand an idea I like. I have to go in a linear direction. But when I use generative AI, I often only have a vague idea of what I want.

Edward Moore: The great thing about language prompting is that talking and typing are natural forms of expression for many of us. But one thing that makes it very challenging is that the biases you include in your writing can skew the results. For example, if you ask ChatGPT whether or not assistive robots are an effective treatment for adults with dementia, it will generate answers that assume that the answer is “yes” just because you used the word “effective” in your prompt. You may get wildly different or potentially untrue outputs based on subtle differences in how you’re using language. The requirements for being effective at using generative AI are quite steep.

Darrell Estabrook: Like Damir and Darwin said, the back-and-forth isn’t quite there with text prompts. It can also be hard to translate visual creativity into words. There’s a reason why they say a picture’s worth a thousand words. You almost need that many words to get something interesting from a generative AI tool!

Moore: Right now, the technology is highly driven by data scientists and engineers. The rough edges need to be filed down, and the best way to do that is to democratize the tech and include UX designers in the conversation. There’s a quote from Mark Twain, “History doesn’t repeat itself, but it sure does rhyme.” And I think that’s appropriate here because suddenly, it’s like we’ve returned to the command line era.

Do you think the general public will still be using text prompts as the main way of interacting with generative AI in five years?

Moore: The interfaces for prompting AI will become more visual, in the same way that website-building tools put a GUI layer on top of raw HTML. But I think that the text prompts will always be there. You can always manually write HTML if you want to, but most people don’t have the time for it. Becoming more visual is one possible way interfaces might evolve.

Estabrook: There are different paths for this to go. Text input is limited. One possibility is to incorporate body language, which plays a huge part in communicating our intent. Wouldn’t it be an interesting use of a camera and AI recognition to consider our body language as part of a prompt? This type of tech would also be helpful in all sorts of AI-driven apps. For instance, it could be used in a medical app to assess a patient’s demeanor or mental state.

What are some additional usability limitations around text prompting, and what are specific strategies for addressing them?

Kotorić: The current generation of AI tools is a black box. The machine waits for user input, and once it has produced the output, little to no tweaking can be done. You’ve got to start all over again if you want something a little different. What needs to happen is that these magic algorithms need to be opened up. And we need levers to granularly control each stylistic aspect of the output so that we can iterate to perfection instead of being required to cast the perfect spell first.

Álvarez: As a native Spanish speaker, I’ve seen how these tools are optimized for English, and I think that has the potential to undermine trust among non-native English speakers. Ultimately, users will be more likely to trust and engage with AI tools when they can use a language they are comfortable with. Making generative AI multilingual at scale will probably require putting AI models through extensive training and testing, and adapting their responses to cultural nuances.

Another barrier to trust is that it’s impossible to know how the AI created its output. What source material was it trained on? Why did it organize or compose the output the way it did? How did my prompt affect the result? Users need to know these things to determine whether an outcome is reliable.

AI tools should provide information about the sources used to generate a response, including links or citations to relevant documents or websites. This would help users verify the information independently. Even assigning some confidence scores to its responses would inform users about the level of certainty the tool has in its answer. If the confidence score is low, users may take the response as a starting point for further research.
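As a rough illustration of the sources-and-confidence idea above, the sketch below shows how an interface might present an answer together with its citations and a confidence note. The `AIResponse` structure, the `render_response` function, and the 0.6 threshold are all hypothetical; no existing tool is known to expose data in this form.

```python
from dataclasses import dataclass, field

# Hypothetical cutoff; a real product would tune this with user research.
LOW_CONFIDENCE_THRESHOLD = 0.6


@dataclass
class AIResponse:
    text: str
    confidence: float  # assumed to be in the range 0.0 to 1.0
    sources: list[str] = field(default_factory=list)


def render_response(response: AIResponse) -> str:
    """Return the answer text followed by a confidence note and a source list."""
    lines = [response.text, ""]
    if response.confidence < LOW_CONFIDENCE_THRESHOLD:
        lines.append("Low confidence: treat this as a starting point for further research.")
    else:
        lines.append(f"Confidence: {response.confidence:.0%}")
    if response.sources:
        lines.append("Sources:")
        lines.extend(f"  [{i}] {src}" for i, src in enumerate(response.sources, start=1))
    else:
        lines.append("No sources available for this answer.")
    return "\n".join(lines)
```

The design choice here is to change the wording, not just the number, when confidence is low, so the interface nudges users toward independent verification.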

Estabrook: I’ve had some lousy results with image generation. For instance, I copied the exact prompt for image examples I found online, and the results were drastically different. To overcome that, prompting needs to be even more reliant on a back-and-forth process. As a creative director working with other designers on a team, we always go back and forth. They produce something, then we review it: “This is good. Strengthen that. Remove this.” You need that at an image level.

A UI strategy could be to have the tool explain some of its choices. Maybe enable it to say, “I put this blob here thinking that’s what you meant by this prompt.” And I could say, “Oh, that thing? No, I meant this other thing.” Now I’ve been able to be more descriptive because the AI and I have a common frame of reference. Whereas right now, you’re just randomly throwing out ideas and hoping to land on something.

How can design help increase the accuracy of generative AI responses to text prompts?

Álvarez: If one of the limitations of prompting is that users don’t always know what they want, we can use a heuristic called recognition rather than recall. We don’t have to force users to define or remember exactly what they want; we can give them ideas and clues that can help them get to a specific point.

We can also differentiate and customize the interaction design for someone who is more clear on what they want versus a newbie user who is not very tech-savvy. This could be a more straightforward approach.

Estabrook: Another idea is to “reverse the authority.” Don’t make AI seem so authoritative in your app. It provides suggestions and possibilities, but that doesn’t mitigate the fact that one of those options could be wildly wrong.

Moore: I agree with Darrell. If companies are trying to present AI as this authoritative thing, we must remember, who are the authentic agents in this interaction? It’s the humans. We have the decision-making power. We decide how and when to move things forward.

My dream usability improvement is, “Hey, can I have a button next to the output to instantly flag hallucinations?” AI image generators resolved the hand problem, so I think the hallucination problem will be fixed. But we’re in this intermediate period where there’s no interface for you to say, “Hey, that’s inaccurate.”

We have to look at AI as an assistant that we can train over time, much like you would any real assistant.

What alternative UI features could supplement or replace text prompting?

Álvarez: Instead of forcing users to write or give an instruction, they could answer a survey, form, or multistep questionnaire. This would help when you are in front of a blank text field and don’t know how to write AI prompts.
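A minimal sketch of the questionnaire approach described above might look like the following. The form fields in `QUESTIONS` and the `build_prompt` helper are invented for illustration; a real product would choose its own questions and prompt format.

```python
# Hypothetical questionnaire: each key is a form field, each value is the
# question shown to the user in place of a blank text box.
QUESTIONS = {
    "subject": "What should the image show?",
    "style": "Which visual style do you prefer?",
    "mood": "What mood should it convey?",
}


def build_prompt(answers: dict[str, str]) -> str:
    """Assemble a text prompt from form answers, skipping unanswered fields."""
    parts = []
    for field_name in QUESTIONS:
        value = answers.get(field_name, "").strip()
        if value:
            parts.append(f"{field_name}: {value}")
    return "; ".join(parts)
```

The user only answers concrete questions; the tool writes the prompt, and unanswered questions are simply left out.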

Moore: Yes, some features could provide potential options rather than making the user think about them. I mean, that’s what AI is supposed to do, right? It’s supposed to reduce cognitive load. So the tools should do that instead of demanding more cognitive load.

Kotorić: Creativity is a multiplayer game, but the current generative AI tools are single-player games. It’s just you writing a prompt. There’s no way for a team to collaborate on creating the solution directly in the AI tool. We need ways for AI and other teammates to fork ideas and explore alternative possibilities without losing work. We essentially need to Git-ify this creative process.

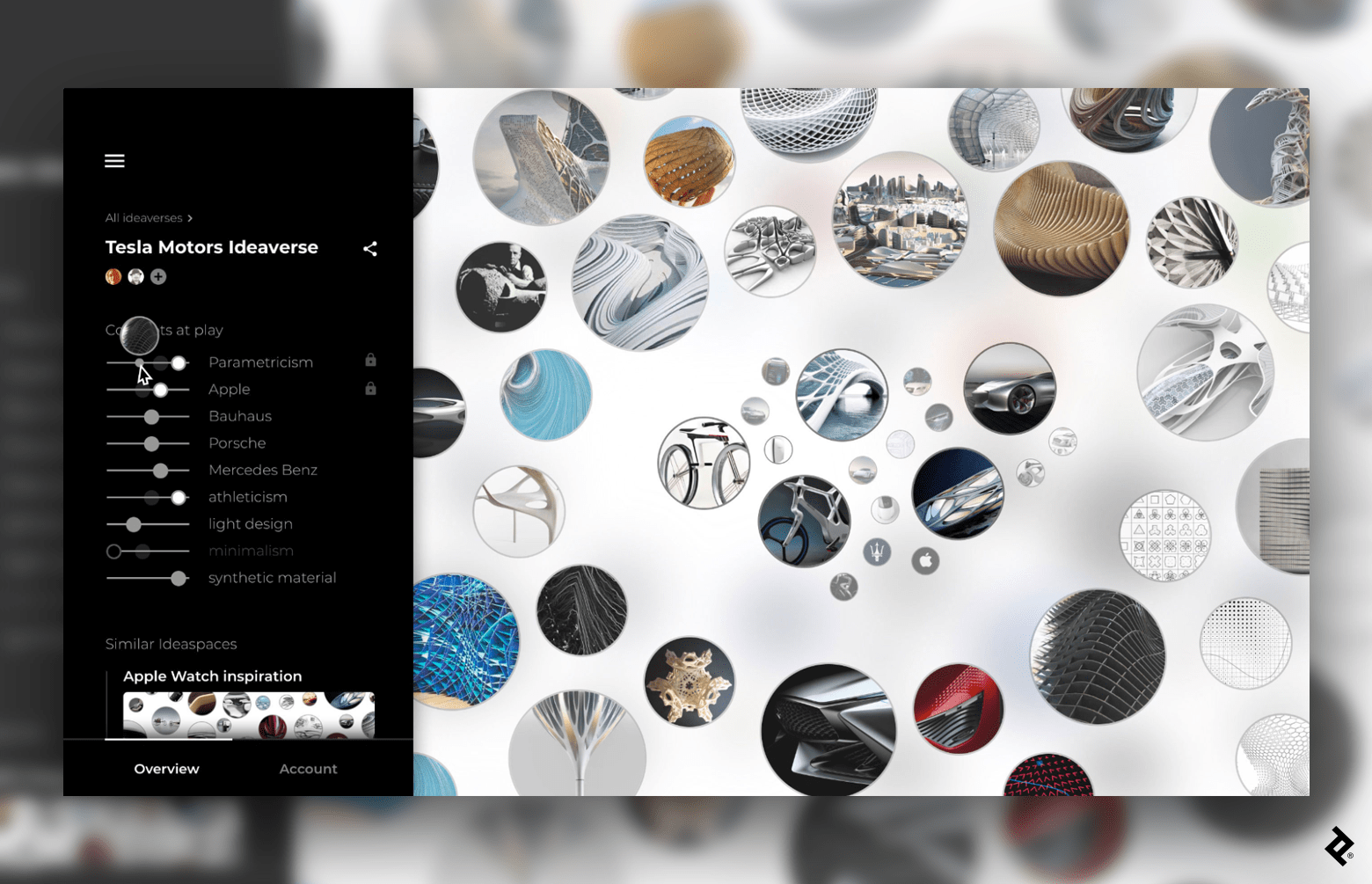

I explored such a solution with a client years ago. We came up with the concept of an “Ideaverse.” When you tweaked the creative parameters on the left sidebar, you’d see the output update to better match what you were after. You could also zoom in on a creative direction and zoom out to see a broader suite of creative options.

Midjourney allows for this kind of specificity using prompt weights, but it’s a slow process: You have to manually create a selection of weights and generate the output, then tweak and generate again, tweak and generate again. It feels like restarting the creative process each time, instead of something you can quickly tweak on the fly as you’re narrowing in on your creative direction.

In the Ideaverse concept I mentioned, we also included a GitHub-like version control feature where you could see a “commit history” similar to Figma’s version history, which shows how a file has changed over time and exactly who made which changes.
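To make the forking and “commit history” idea concrete, here is a small sketch of how prompt versions could be stored so that teammates can branch from any earlier idea without losing work. It is not the Ideaverse implementation, which is not described in detail; the `Version` and `IdeaHistory` names are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Version:
    id: int
    author: str
    prompt: str
    parent: Optional["Version"] = None  # None marks the first idea


class IdeaHistory:
    """Hands out version IDs; every commit keeps a link to its parent."""

    def __init__(self) -> None:
        self._next_id = 1

    def commit(self, author: str, prompt: str, parent: Optional[Version] = None) -> Version:
        version = Version(self._next_id, author, prompt, parent)
        self._next_id += 1
        return version


def history(version: Version) -> list[str]:
    """Walk from a version back to the root and return the trail, oldest first."""
    entries = []
    node: Optional[Version] = version
    while node is not None:
        entries.append(f"{node.id} {node.author}: {node.prompt}")
        node = node.parent
    return list(reversed(entries))
```

Committing twice with the same parent creates a fork: both branches share the early history, and each records who made which change.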

Let’s talk about specific use cases. How would you improve the AI prompt-writing experience for a text-generation task such as creating a document?

Álvarez: If AI can be predictable—like in Gmail, where I see the prediction of the text I’m about to write—then that’s when I would use it because I can see the result that works for me. But a blank document template that AI fills in—I wouldn’t use that because I don’t know what to expect. So if AI could be smart enough to understand what I’m writing in real time and offer me an option that I can see and use instantly, that would be beneficial.

Estabrook: I’d almost like to see it displayed similarly to tracked changes and comments in a document. It’d be neat to see AI comments pop up as I write, maybe in the margin. It takes away that authority as if the AI-generated material will be the final text. It just implies, “Here are some suggestions”; this could be useful if you’re trying to craft something, not just generate something by rote.

Or there could be selectable text sections where you could say, “Give me some alternatives for further content.” Maybe it gives me research if I want to know more about this or that subject I’m writing about.

Moore: It’d be great if you could say, “Hey, I’m going to highlight this paragraph, and now I want you to write it from the point of view of a different character.” Or “I need you to rephrase that in a way that will apply to people of different ages, education levels, backgrounds,” things like that. Just having that sort of nuance would go a long way to improving usability.

If we generate everything, the result loses its authenticity. People crave that human touch. Let’s accelerate that first 90% of the task, but we all know that the last 10% takes 90% of the effort. That’s where we can add our little touch that makes it unique. People like that: They like wordsmithing, they like writing.

Do we want to surrender that completely to AI? Again, it depends on intent and context. You probably want more creative control if you’re writing for pleasure or to tell a story. But if you’re just like, “I want to create a backlog of social media posts for the next three months, and I don’t have the time to do it,” then AI is a good option.

How could text prompting be improved for generating images, graphics, and illustrations?

Estabrook: I want to feed it visual material, not just text. Show it a bunch of examples of the brand style and other inspiration images. We do that already with color: Upload a photo and get a palette. Again, you’ve got to be able to go back and forth to get what you want. It’s like saying, “Go make me a sandwich.” “OK, what kind?” “Roast beef, and you know what extras I like.” That sort of thing.

Álvarez: I was recently involved in a project for a game agency using an AI generator for 3D objects. The challenge was creating textures for a game where it’s not economical to start from scratch every time. So the agency created a backlog, a bank of information related to all the game’s assets. And it will use this backlog—existing textures, existing models—instead of text prompts to generate consistent results for a new model or character.

Kotorić: We made an experiment called AI Design Generator, which allowed for live tweaking of a visual direction using sliders in a GUI.

This allows you to mix different creative directions and have the AI create several intermediate states between those two directions. Again, this is possible with the current AI text-prompting tools, but it’s a slow and mundane manual process. You need to be able to read through Midjourney docs and follow tutorials online, which is difficult for the majority of the general population. If the AI itself starts suggesting ideas, it would open new creative possibilities and democratize the process.
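One simple way to picture the intermediate states described above is as blends of two sets of slider values. The sketch below uses plain linear interpolation as a stand-in; how AI Design Generator actually computes its intermediate states is not stated, and the parameter names are made up.

```python
def blend(direction_a: dict[str, float], direction_b: dict[str, float], t: float) -> dict[str, float]:
    """Linearly mix two creative directions; t=0 returns A and t=1 returns B."""
    if not 0.0 <= t <= 1.0:
        raise ValueError("t must be between 0 and 1")
    if direction_a.keys() != direction_b.keys():
        raise ValueError("both directions must define the same sliders")
    return {k: (1 - t) * direction_a[k] + t * direction_b[k] for k in direction_a}


def intermediate_states(
    direction_a: dict[str, float], direction_b: dict[str, float], steps: int
) -> list[dict[str, float]]:
    """Return `steps` evenly spaced blends from A to B, including both endpoints."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    return [blend(direction_a, direction_b, i / (steps - 1)) for i in range(steps)]
```

For example, calling `intermediate_states({"warmth": 0.0, "detail": 1.0}, {"warmth": 1.0, "detail": 0.0}, 3)` yields the two original directions plus one midpoint where both sliders sit at 0.5.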

Moore: I think the future of this—if it doesn’t exist already—is being able to choose what will get fed into the machine. So you can specify, “These are the things that I like. This is the thing that I’m trying to do.” Much like you would if you were working with an assistant, junior artist, or graphic designer. Maybe some sliders are involved; then it generates the output, and you can flag parts, saying, “OK, I like these things. Regenerate it.”

What would a better generative AI interface look like for video, where you have to control moving images over time?

Moore: Again, I think a lot of it comes down to being able to flag things—“I like this, I don’t like this”—and having the ability to preserve those preferences in the video timeline. For instance, you could click a lock icon on top of the shots you like so they don’t get regenerated in subsequent iterations. I think that would help a lot.
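The lock-icon idea above can be sketched as a timeline in which only unlocked shots are sent back to the generator. The `Shot` structure and `regenerate_timeline` function are hypothetical, and `generate` stands in for whatever video model a tool would call.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Shot:
    description: str
    clip: str  # placeholder for the generated video clip
    locked: bool = False


def regenerate_timeline(timeline: list[Shot], generate: Callable[[str], str]) -> list[Shot]:
    """Return a new timeline where locked shots are kept and the rest get fresh clips."""
    return [
        shot if shot.locked else Shot(shot.description, generate(shot.description))
        for shot in timeline
    ]
```

Because the function returns a new list and leaves the original untouched, a tool could also keep earlier iterations around for comparison.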

Estabrook: Right now, it’s like a hose: You turn it on full blast, and the end of it starts going everywhere. I used Runway to make a scene of an asteroid belt with the sun emerging from behind one of the asteroids as it passes in front of the camera. I tried to describe that in a text prompt and got these very trippy blobs moving in space. So there would have to be a level of sophistication in the locking mechanism that’s as advanced as the AI to get across what you want. Like, “No, keep the asteroid here. Now move the sun a little bit to the right.”

Álvarez: Just because the tool can generate the final result doesn’t mean we have to jump straight from the idea to the final result. There are steps in the middle that AI should consider, like storyboards, that help me make decisions and progressively refine my thoughts so that I’m not surprised by an output I didn’t want. I think with video, considering those middle steps is key.

Looking toward the future, what emerging technologies could improve the AI prompting user experience?

Moore: I do a lot of work in virtual and augmented reality, and those realms deal much more with using human bodies as input mechanisms; for instance, they have eye sensors so you can use your eyeballs as an input mechanism. I also think using photogrammetry or depth-sensing to capture data about people in environments will be used to steer AI interfaces in an exciting way. An example is the “AI pin” device from a startup called Humane. It’s like the little communicators they would tap on Star Trek: The Next Generation, except it is an AI-powered assistant with cameras, sensors, and microphones that can project images onto nearby surfaces like your hand.

I also do a lot of work with accessibility, and we often talk about how AI will expand agency for people. Imagine if you have motor issues and don’t have the use of your hands. You’re cut off from a whole realm of digital experience because you can’t use a keyboard or mouse. Advances in speech recognition have enabled people to speak their prompts into AI art generators like Midjourney to create imagery. Putting aside the ethical considerations of how AI art generators function and how they are trained, they still enable a new digital interaction previously unavailable to users with accessibility needs.

More forms of AI interaction will be possible for users with accessibility limitations once eye tracking, found in higher-end VR headsets like PlayStation VR2, Meta Quest Pro, and Apple Vision Pro, becomes more commonplace. The headset detects where a user’s eyes are looking, which essentially lets users trigger interactions with their gaze.

So those types of input mechanisms, enabled by cameras and sensors, will all emerge. And it’s going to be exciting.

Understanding the basics

What is an AI prompt?

An AI prompt refers to the input or instruction given to a language model to generate a desired response. A prompt can be a sentence, phrase, or set of specific instructions. To optimize future iterations of AI, prompts should also include images and collaboration features.

What is the prompting technique in AI?

The AI prompting technique is a way for users to guide AI models with specific input (often text-based) to generate content such as emails, images, and video. Prompting is vital for various applications such as AI language generation and problem-solving. Although well-designed prompts often require special knowledge to write correctly, they empower users to achieve their desired results with AI.

How do you optimize a prompt?

To optimize a prompt, be clear, brief, and specific. AI generates content according to the parameters you provide, but many users struggle to articulate exactly what they want. Providing feedback on the output in order to generate another iteration can make AI more effective.

Vancouver, WA, United States

Member since January 3, 2016
