The Enterprise Starting Point: Data Science and Artificial Intelligence

An expanding array of artificial intelligence technology options presents enterprise executives with a daunting challenge: where to start? Toptal executives share their perspective on the distinction between AI and Data Science, and why the latter serves as the best starting point for most companies.

Toptal Research

In-depth analysis and industry-leading thought leadership from a panel of Toptal researchers and subject matter experts.

The rapidly expanding field of artificial intelligence and data science presents a daunting list of options for companies hoping to tap its potential. Machine learning, deep learning, natural language processing, neural networks, robotic process automation, and many more esoteric variants fill headlines and white papers.

On the cusp of delivering miraculous computational power, these technologies implore executives to adopt them or find their companies soon outmaneuvered by those that do. For a select few companies with entire divisions devoted to AI, tailoring such technology to use cases is everyday business. But for the vast majority, knowing where to start is less straightforward.

In this article, Toptal executives share their perspective on the practical application of artificial intelligence-related solutions to common business needs.

Pedro Nogueira, a specialist in machine learning and data science, offers refreshing news to newcomer companies: the first solution is often simple, relatively low-cost, and financially accretive. Complementing perspective from Nogueira, the Toptal Enterprise team highlights recent trends in robotic process automation, which helps companies streamline routine workflows.

Robotic Process Automation and AI: Tools for different tasks

To frame the advice shared by Nogueira, it is helpful to understand the difference between Robotic Process Automation (RPA) and Artificial Intelligence (AI) and the types of data each approach is best suited to handle.

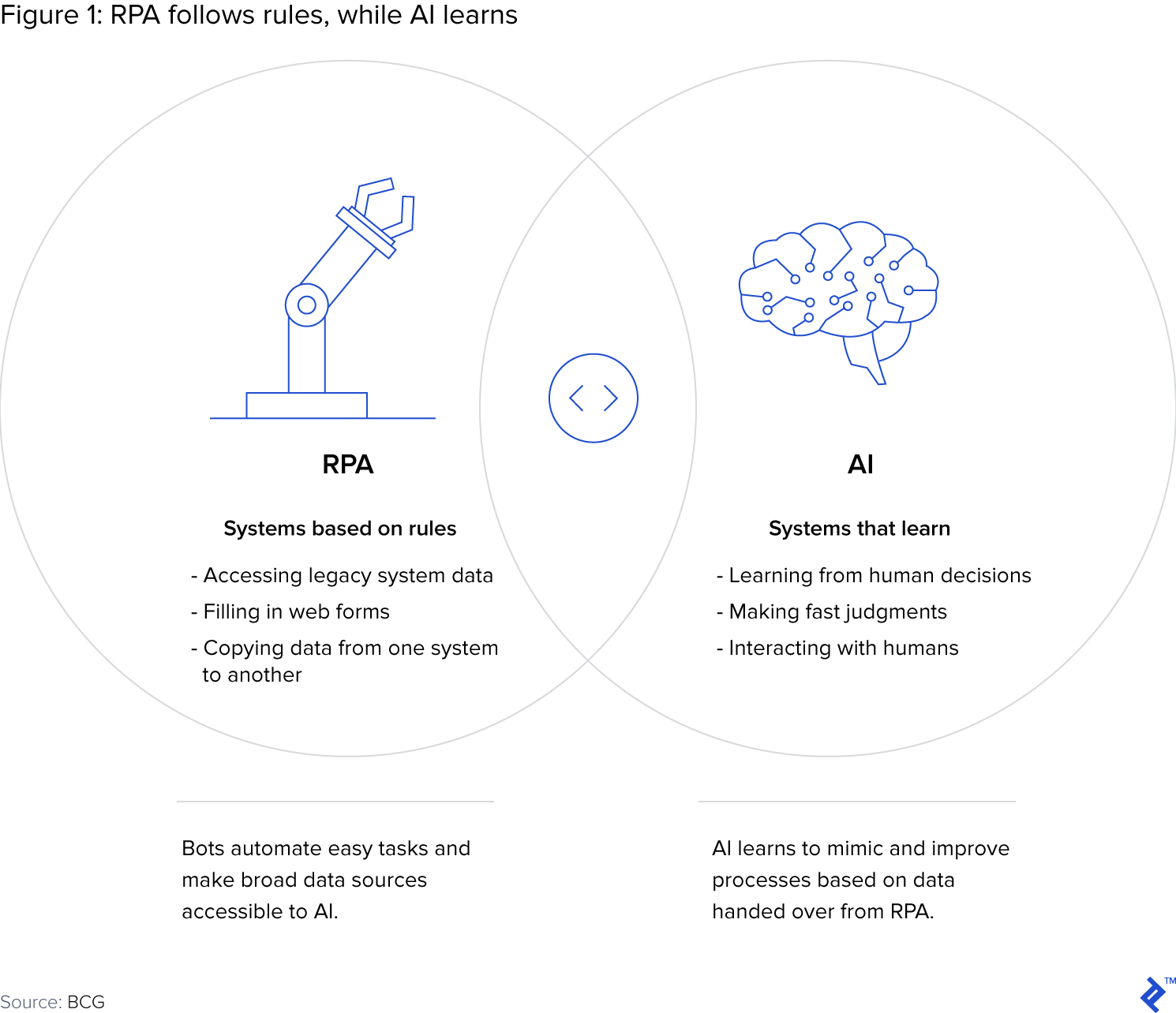

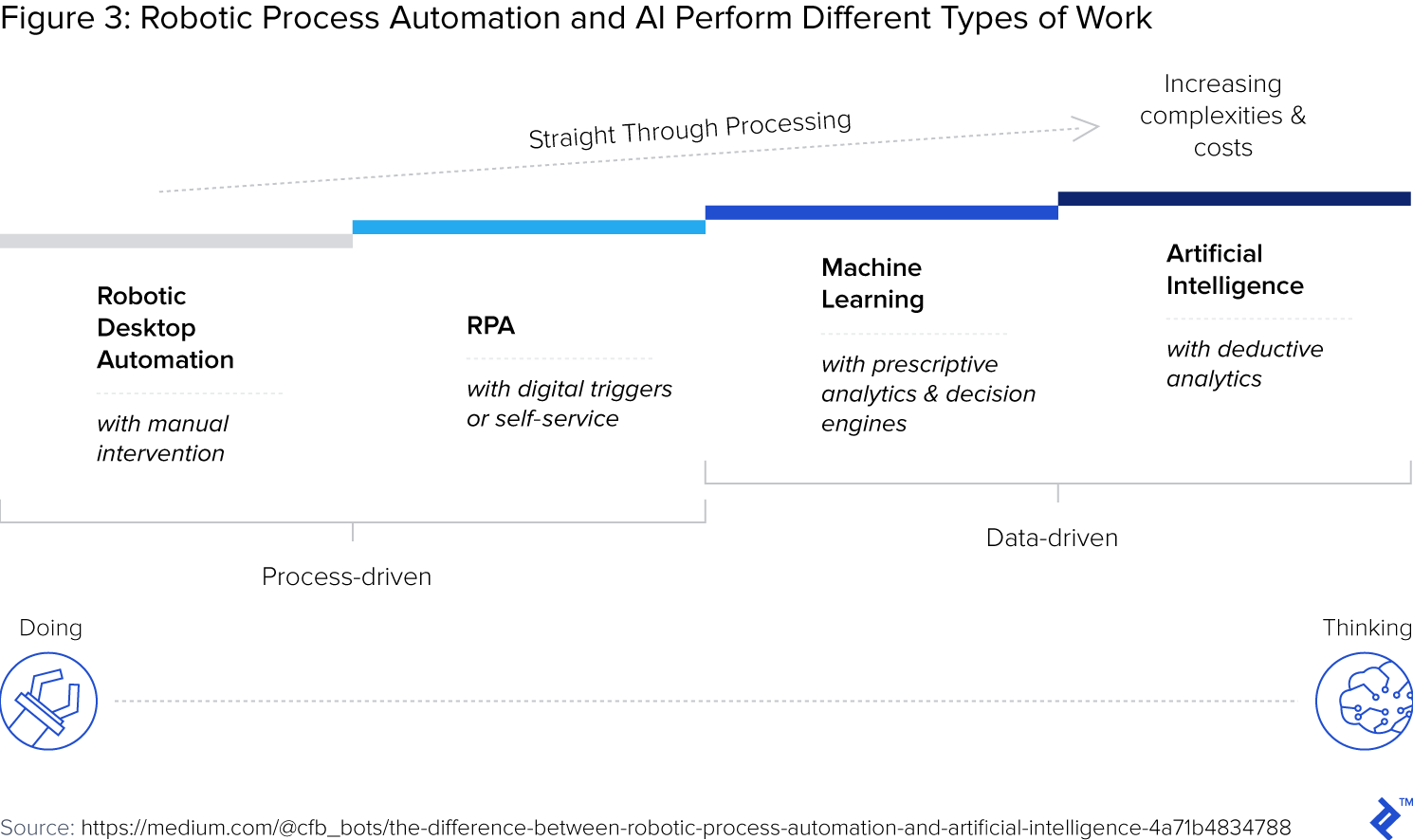

RPA and AI differ based on the jobs they perform. A software robot, RPA excels in repetitive tasks analogous to those performed by an assembly line worker or machine. Conversely, AI is best suited for less structured environments, replicating the analytical ability fundamental to human judgment and decision making.

By definition, the two approaches are also distinct. The IEEE Standards Association, an international organization composed of industry experts, defines them as follows:

RPA: pre-configured software that uses business rules and predefined activity to complete the autonomous execution of a combination of processes, activities, transactions, and tasks.

AI: the combination of cognitive automation, machine learning (ML), reasoning, hypothesis generation and analysis, natural language processing and intentional algorithm mutation producing insights and analytics at or above human capability.

RPA is generally considered a subset of AI, and one that targets repetitive routines. The critical difference is that RPA does not learn, while AI can self-modify, altering its activity in response to varying environmental inputs.

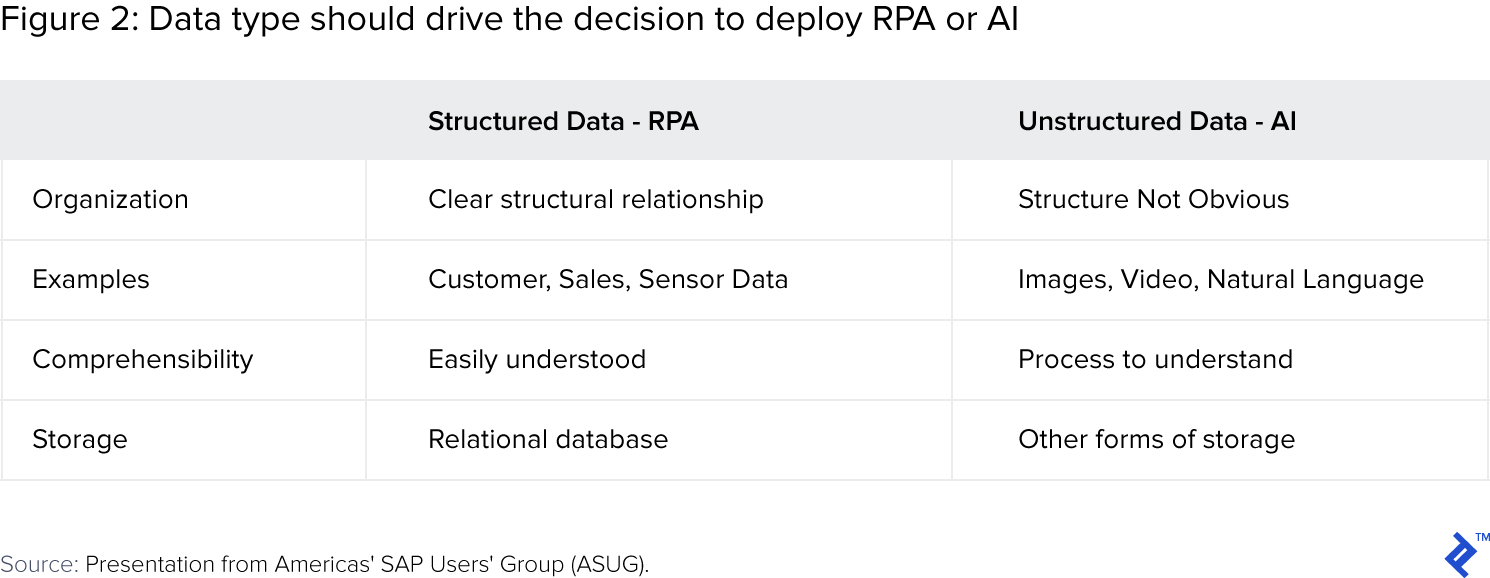

As a result, RPA is best suited for highly structured data, while AI handles unstructured or semi-structured data. The difference between the two types of data, summarized below, is easy to grasp for anyone who has built a database in a spreadsheet.

Data that fits neatly into such a spreadsheet - such as customer contact information - is structured. Data that does not fit - such as natural language - is unstructured. Appreciating the difference between these data types is critical to understanding which forms of AI are appropriate for a given business case.

Blocking and tackling with business process automation

For most companies, the easiest and least risky starting point to leverage AI is business process automation. Composed of mundane tasks that require little intelligence and possibly no human effort, such processes justify investment in technology that eliminates or significantly reduces human involvement. Companies and employees stand to benefit in three distinct ways:

- Employees focus effort on higher-value tasks and problem solving.

- Companies realize positive ROI from minimal ongoing operating cost.

- Process quality improves due to lack of human error.

RPA drives multiple work streams in the insurance industry

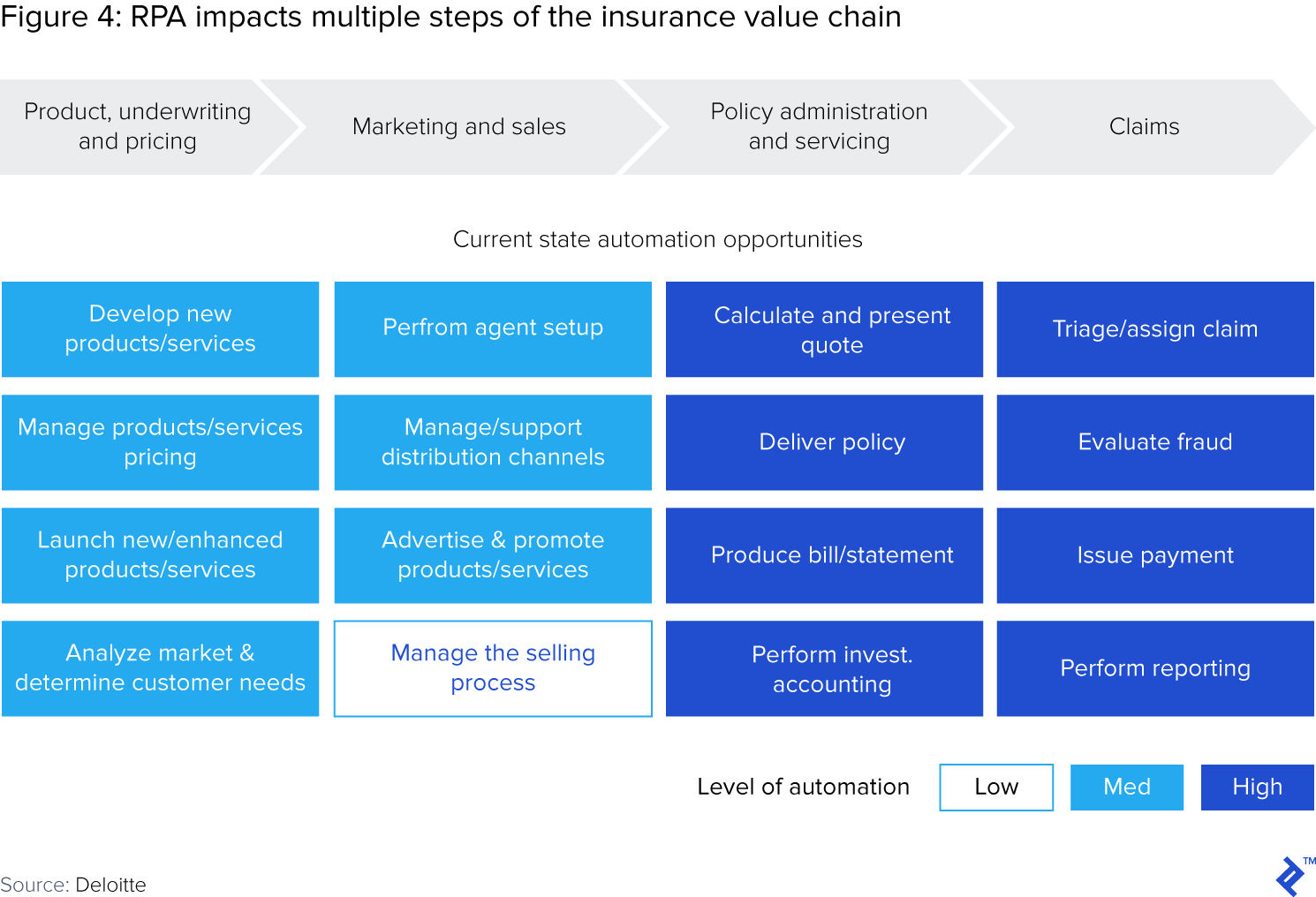

For companies already streamlining simple internal procedures such as expense reimbursement, more complex opportunities hold potential for high ROI. In the insurance industry, for example, generating insurance quotes and processing insurance claims present perfect use cases for RPA.

When underwriting a policy, insurance companies must balance risk and reward. Essentially, on average the net present value of policy premiums must exceed that of the claims. During underwriting, insurance companies estimate the risk component of this equation, helping them predict the timing and magnitude of future liabilities.
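
The balance described above can be sketched in a few lines of Python. This is a minimal illustration rather than an actuarial method: the function names, the 5% discount rate, and all cash-flow figures are assumptions made up for the example.

```python
def net_present_value(cash_flows, annual_rate):
    """Discount yearly cash flows (year 1, 2, ...) back to today's value."""
    return sum(
        cash_flow / (1 + annual_rate) ** year
        for year, cash_flow in enumerate(cash_flows, start=1)
    )


def policy_is_profitable(premiums, expected_claims, annual_rate):
    """True when discounted premiums exceed discounted expected claims."""
    return (
        net_present_value(premiums, annual_rate)
        > net_present_value(expected_claims, annual_rate)
    )


# Hypothetical three-year policy: steady premiums, claims weighted toward year 3.
premiums = [1000.0, 1000.0, 1000.0]
expected_claims = [200.0, 400.0, 2000.0]

print(policy_is_profitable(premiums, expected_claims, annual_rate=0.05))
```

In practice the hard part is estimating `expected_claims`, which is where the risk analysis performed during underwriting comes in.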

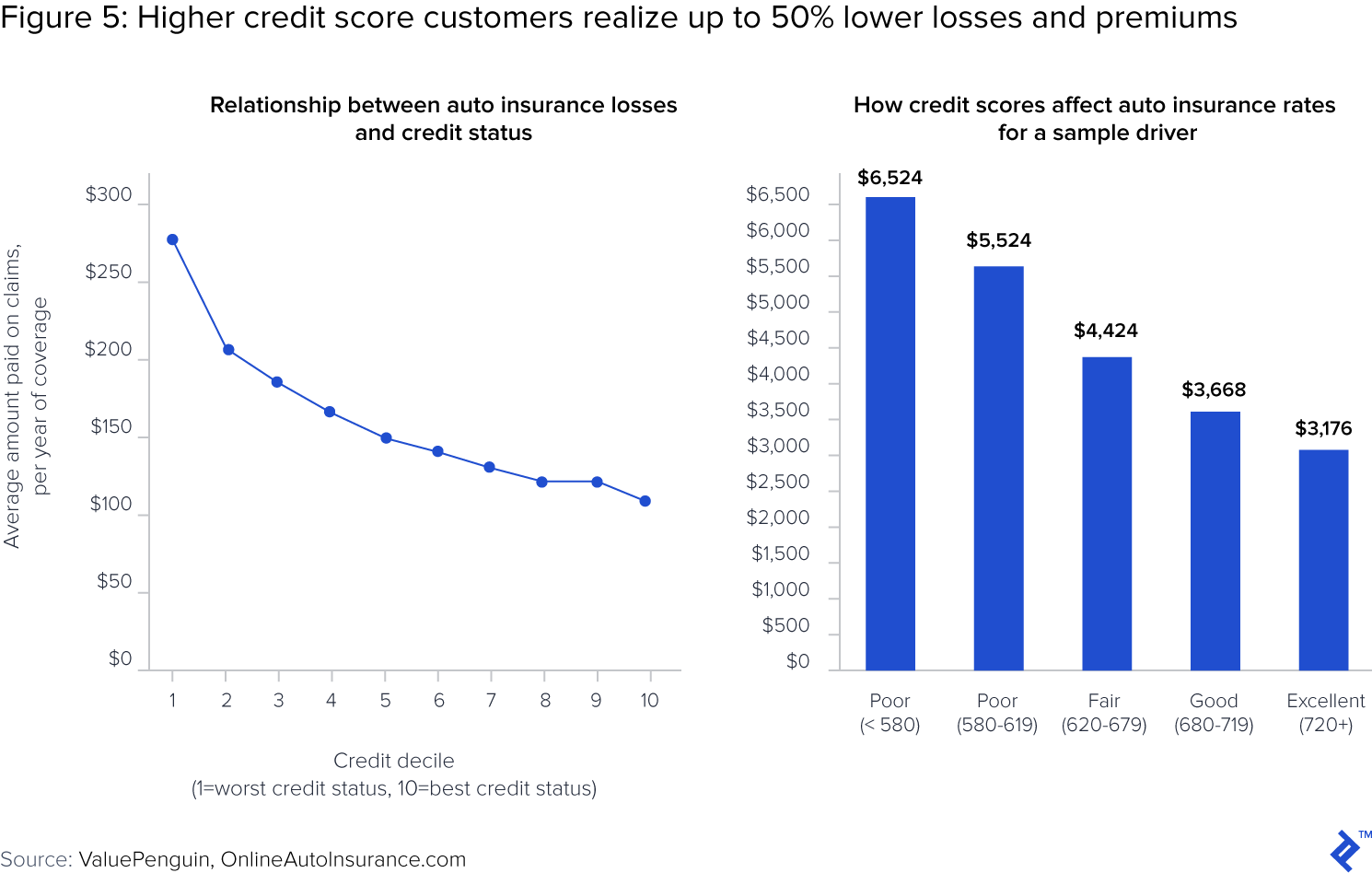

Underwriting has historically been a manual process, whose analytical requirements have been overseen by actuaries. Now such work is increasingly performed automatically and with the oversight of data scientists who draw upon new data sources to better predict risk. For example, in auto insurance, insurers historically evaluated loss histories, which are records of past insurance claims for a given driver. Insurers later began to incorporate driver credit scores into their risk analysis, recognizing that high scores positively correlate with safe driving, and commensurately lower losses.

Reflecting on the underwriting example, Nogueira notes, “when companies think they need AI, they often actually need data scientists.”

For Nogueira, the insurance quote process is quite familiar at both a professional and personal level. A data scientist with project experience in the insurance industry, and a motorcycle enthusiast who recently toured Portugal, he shares an anecdote to which any driver or homeowner can relate: “If I need to change motorcycles, which I like to do frequently, then I go online to a set of insurance companies and share my data through their online questionnaires.”

Once submitted, the data enters “a model that lives somewhere on the backend and analyzes my risk profile according to one or several models and then provides me a quote.” In the seconds it takes to receive such a quote, all analysis is automatic, overridden with human intervention only in the case of data outliers.

Automation also drives downstream workflows in the insurance customer lifecycle, notably during the claims process. When an insurance customer files a claim, the insurance company determines whether to fully pay, partially pay, or deny the claim. The process often involves multiple external parties, including the insurance customer and the service provider, such as a hospital in the case of healthcare or a repair shop in the case of auto.

In auto insurance, claims adjudication depends upon verifying damage to a vehicle, determining repair costs, selecting the repair shop, and paying for the repair. For repair estimates, photos play a critical role in the claims process. The claims adjuster takes photos of the wrecked vehicle, as does the workshop - both before and after repairs are made. These photos provide evidence of damage and repair, and form the basis for reimbursement.

Historically, these photos were interpreted exclusively by people, but now, image recognition software coupled with rules-based automation delivers critical information to the claims adjuster, enabling faster repairs and coverage.

Data science is the workhorse, and Data Scientists are the drivers

Companies must “define what can be easily automated, and what needs to be escalated to human decision makers,” according to Nogueira. With any process under consideration for automation, he continues, “first look at the data and figure out the rules.”
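
As a rough sketch of what "figuring out the rules" might look like for the insurance quote example, the Python below routes routine requests to automation and sends outliers to a person. The `QuoteRequest` fields and every threshold are hypothetical, chosen only to illustrate the pattern.

```python
from dataclasses import dataclass


@dataclass
class QuoteRequest:
    # Hypothetical questionnaire fields; a real form would have many more.
    driver_age: int
    prior_claims: int
    vehicle_value: float


def route(request: QuoteRequest) -> str:
    """Return 'automate' for routine requests and 'escalate' for outliers."""
    # Each entry is an explicit, auditable business rule (thresholds are made up).
    rules = [
        18 <= request.driver_age <= 80,
        request.prior_claims <= 3,
        0 < request.vehicle_value <= 100_000,
    ]
    return "automate" if all(rules) else "escalate"


print(route(QuoteRequest(driver_age=35, prior_claims=0, vehicle_value=12_000.0)))
print(route(QuoteRequest(driver_age=35, prior_claims=7, vehicle_value=12_000.0)))
```

Keeping the rules as a plain list makes it easy for business stakeholders to review what is automated and to tighten or relax a threshold without touching the rest of the workflow.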

While he admits that the fields of data science and AI are merging, for the business setting, Nogueira delineates the two:

“Data science is AI applied to real-world scenarios and common business needs. It has more to do with understanding the data, managing it, making it readily available, easy to process, and ultimately, a guide for decision making by company stakeholders.”

Such work often amounts to cleaning up and collating disparate data sets - no easy task - and then applying statistical analysis, such as logistic regression, to drive better predictions and decisions.
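
To make the logistic regression step concrete, here is a minimal sketch in plain Python that fits a one-variable model by gradient descent. The data set (prior claims versus whether a new claim was filed), the learning rate, and the number of epochs are all assumptions for illustration; a production project would more likely rely on an established statistics library.

```python
import math


def sigmoid(z):
    """Map any real number to a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def fit_logistic(xs, ys, learning_rate=0.1, epochs=2000):
    """Fit p(y=1 | x) = sigmoid(w * x + b) by batch gradient descent."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Prediction error for every example under the current parameters.
        errors = [sigmoid(w * x + b) - y for x, y in zip(xs, ys)]
        w -= learning_rate * sum(e * x for e, x in zip(errors, xs)) / n
        b -= learning_rate * sum(errors) / n
    return w, b


# Hypothetical, already-cleaned data: prior claims and whether a new claim followed.
prior_claims = [0, 0, 1, 1, 2, 3, 3, 4, 5, 5]
filed_claim = [0, 0, 0, 1, 0, 1, 1, 1, 1, 1]

w, b = fit_logistic(prior_claims, filed_claim)


def claim_probability(claims):
    """Estimated probability that a customer with this history files a claim."""
    return sigmoid(w * claims + b)


print(round(claim_probability(0), 2), round(claim_probability(5), 2))
```

The fitted probability rises with the number of prior claims, which is the kind of interpretable signal that can feed a pricing or escalation decision.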

By contrast, AI is much more research-oriented and suited for unstructured data analysis. “Imagine a really complex project, one with a lot of uncertainty, for example trying to build a model that determines how many people might come in to a supermarket based on walking patterns, CCTV video and sensory data.”

Ultimately, this model might predict how people shop, what they seek, and how to position products relative to each other, optimizing the floor plan to maximize profit. While such a “blue sky” project, if successful, would undoubtedly be valuable to retailers, it would also require a team of multiple experts and could easily cost multiples of a data science-based initiative. In the retail case, a company might focus on one or a few of the most critical components of the predictive model – for example, optimizing store hours relative to foot traffic and operating costs.

The critical starting point for building data science capability is bringing the right type and amount of talent on board. Fortunately, according to Nogueira, most companies “don’t need a large team of super expert developers to do many of the common automations, especially if you consider the number of APIs and SDKs available.”

While such off-the-shelf technologies provide potent tools, it is critical that they are wielded by the right hands. Here, Nogueira provides a word of caution: “these tools might actually be a problem, because a lot of people are using them in ways that they shouldn’t, because they don’t understand them.”

The danger, he notes, lies in “overfitting data models,” which results from fitting a model so closely to the data it was trained on that it fails to account for the full spectrum of possibilities. Such over-training, he warns, “can end up being extremely costly to the business, because in situations that you haven’t seen before, the model does not generalize well, which can lead to making wrong decisions on the data.”
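
The effect can be shown with a small, deliberately contrived Python example. It compares a model that memorizes its training data (a 1-nearest-neighbor lookup) with a simple one-parameter threshold rule, then scores both on held-out data. The data points are hand-built for illustration, including a few intentionally noisy labels, and are not drawn from any real project.

```python
def accuracy(predict, data):
    """Share of (x, label) pairs that the model classifies correctly."""
    return sum(predict(x) == y for x, y in data) / len(data)


def fit_memorizer(train):
    """1-nearest-neighbor: reproduces every training label, noise included."""
    def predict(x):
        _, label = min(train, key=lambda point: abs(point[0] - x))
        return label
    return predict


def fit_threshold(train):
    """Simple rule: predict 1 when x >= t, with t chosen on the training set."""
    candidates = [x for x, _ in train]
    best_t = max(
        candidates,
        key=lambda t: accuracy(lambda x: int(x >= t), train),
    )
    return lambda x: int(x >= best_t)


# Contrived training data: labels mostly follow "x is large", with noise at x=2 and x=8.
train = [(1, 0), (2, 1), (3, 0), (4, 0), (5, 0), (6, 1), (7, 1), (8, 0), (9, 1), (10, 1)]
# Held-out data the models have not seen, without label noise.
held_out = [(1.8, 0), (2.3, 0), (4.6, 0), (6.4, 1), (7.7, 1), (8.2, 1)]

memorizer = fit_memorizer(train)
simple_rule = fit_threshold(train)

print("memorizer:  ", accuracy(memorizer, train), accuracy(memorizer, held_out))
print("simple rule:", accuracy(simple_rule, train), accuracy(simple_rule, held_out))
```

The memorizer scores perfectly on the data it has seen and poorly on the data it has not, while the simpler rule gives up some training accuracy and generalizes better. Checking performance on held-out data in this way is a standard guard against the costly mistakes described above.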

To avoid such pitfalls, Nogueira encourages companies to hire experienced data scientists. All companies seeking to unlock the value of customer or operational data “need a person with a good grasp of statistics, and enough business acumen to understand the use cases and where the value resides in the business.” From a credentials standpoint, a solid data scientist usually has at least a BS in mathematics or statistics, a strong ability to code, and the ability to analyze a business use case to determine where data science can deliver the most impact.

Parting thoughts

While data science presents a compelling starting point from a risk/reward perspective, the broader landscape of AI technologies is also worth exploring. Enterprise executives should consider data science as the rallying point around which to start the internal conversation about AI.

As they realize success stories with business process automation, they should consider expanding scope to include more challenging use cases, perhaps better-suited to alternative AI technologies. In subsequent articles, Insights will explore the broader AI landscape, helping executives navigate a field that will undoubtedly deliver strong returns.