What Every Executive Needs to Know About the Day Facebook Disappeared From the Internet

Leading Toptal engineering and finance experts analyze the social network’s epic outage and distill key takeaways for business leaders. Find out what you can do to protect your company from a costly service interruption.

Leading Toptal engineering and finance experts analyze the social network’s epic outage and distill key takeaways for business leaders. Find out what you can do to protect your company from a costly service interruption.

Peter Matuszak is Toptal’s Senior Writer for Business. A former investigative reporter for the Chicago Tribune and policy advisor to multiple governmental agencies and officials, he now writes about tech, finance, and the future of work for Toptal Insights.

Featured Experts

Aside from the CTO, most executives do not have the bandwidth to engage deeply with technical network operations. So it may come as a surprise—and perhaps a concern—for leaders to learn that their companies’ entire network functionality, both internal and external, runs on a single protocol written down on two bar napkins at a tech conference in 1989.

It’s called the border gateway protocol or BGP. It determines the routing of all traffic on the servers we use to navigate everything from social media, email, and cloud drives to scanning entry cards at office security gates. BGP is what every network depends on to function correctly—including the ones at your company. This was the linchpin that brought down all of Facebook’s internal and external networks on Monday, October 4, 2021.

How the Facebook Outage Happened

Facebook is more than just the world’s largest social network. It is a technological behemoth. The site’s 3 billion active users generate millions of gigabytes of data every day, requiring 17 massive global data centers and a sophisticated architecture underpinning its vast digital empire.

The tech giant is in some ways a nation unto itself and has long been a leader in network engineering and innovation. But that doesn’t mean it’s not vulnerable to outages, as was proved on October 4 when the world watched Facebook’s entire network go down for more than seven hours. That’s an eternity in an always-on global economy—and one that may have cost the company an estimated $100 million in revenue.

In the wake of the incident, company leaders need to take a hard look at their own processes, says Alexander Sereda, a Toptal software product development manager and former CTO of Rhino Security Labs. “If this can happen to Facebook, it can happen to you,” he says.

While all the details have yet to emerge, Toptal experts have identified several important lessons senior leaders can learn from the episode, one of which is that even the most cutting-edge engineering can still be undone by human error.

The postmortem released by Facebook in the days following its outage pointed to human error—an engineer’s interaction with its server protocols, specifically BGP—as the central culprit in bringing its network down.

According to the company statement, “a command was issued with the intention to assess the availability of global backbone capacity.” What that command was and what error it contained, we don’t know, and Facebook isn’t saying. But the company did add that its “systems are designed to audit commands like these to prevent mistakes like this, but a bug in that audit tool prevented it from properly stopping the command.”

The mistake produced cascading ramifications because the company was apparently counting on an automated auditing tool to catch such a problem.

The erroneous command, issued during a routine update, severed all of the connections within Facebook’s backbone—the top-level network of fiber-optic connections between its data centers. At that point, the company’s BGP system, which is responsible for mapping all of the available pathways through its network, could no longer locate any valid routes into the company’s global data centers. This effectively cut Facebook off from the internet and the company’s own internal network, which also relies on BGP for routing information. Nobody could navigate the social network, not even Facebook employees inside their own facilities.

Usually, when update information is added to a server configuration, BGP will duplicate all of its previous locations from stored files and add any new ones to the mapping that connects Facebook to the internet. But in this case, all of the locations were lost until engineers could physically restore BGP backups.

“It’s a tough situation. It’s always going to be hard to prevent every command that could lead to a failure,” says James Nurmi, a Toptal cloud architect, developer, and Google alum who has more than two decades of experience helping companies increase network reliability. “The nature of configuring a router, or any complex device, means that a command in one context may be exactly what you want but in a different one could lead to disaster.”

The fact that an individual person’s mistake was at the heart of the Facebook outage should not be dismissed as a problem unique to its organization. Human error is a common reason for network outages.

The Uptime Institute publishes an annual study on the scope and consequences of data outages like the one Facebook experienced. In 2020, a year that saw a huge increase in cloud computing due to the COVID-19 pandemic, the report found that at least 42% of data centers lost server time due to a mistake made by a person interacting with the network, not an infrastructure or other technical shortcoming.

How a single internal user’s error could cause a wholesale collapse of Facebook’s networks offers an interesting view into the advanced level of engineering at the organization. The company’s engineering team focuses on making its networking technology as flexible and scalable as possible by rethinking traditional approaches and designs, according to an academic research paper Facebook contributed to earlier this year. The paper details how the company has expanded the role of BGP beyond just a typical routing protocol into a tool for rapidly deploying new servers and software updates. Almost prophetically, the paper also provides something of a roadmap for how one errant command could shut down a global network.

How Much the Outage Cost Facebook

The majority of outages that made headlines last year did not affect critical systems and mostly inconvenienced consumers and remote workers, such as interruptions or slowdowns of collaboration tools (e.g., Microsoft Teams, Zoom), online betting sites, and fitness trackers. However, to the companies experiencing these outages, the price tag in terms of lost revenue, productivity, and customer trust was significant.

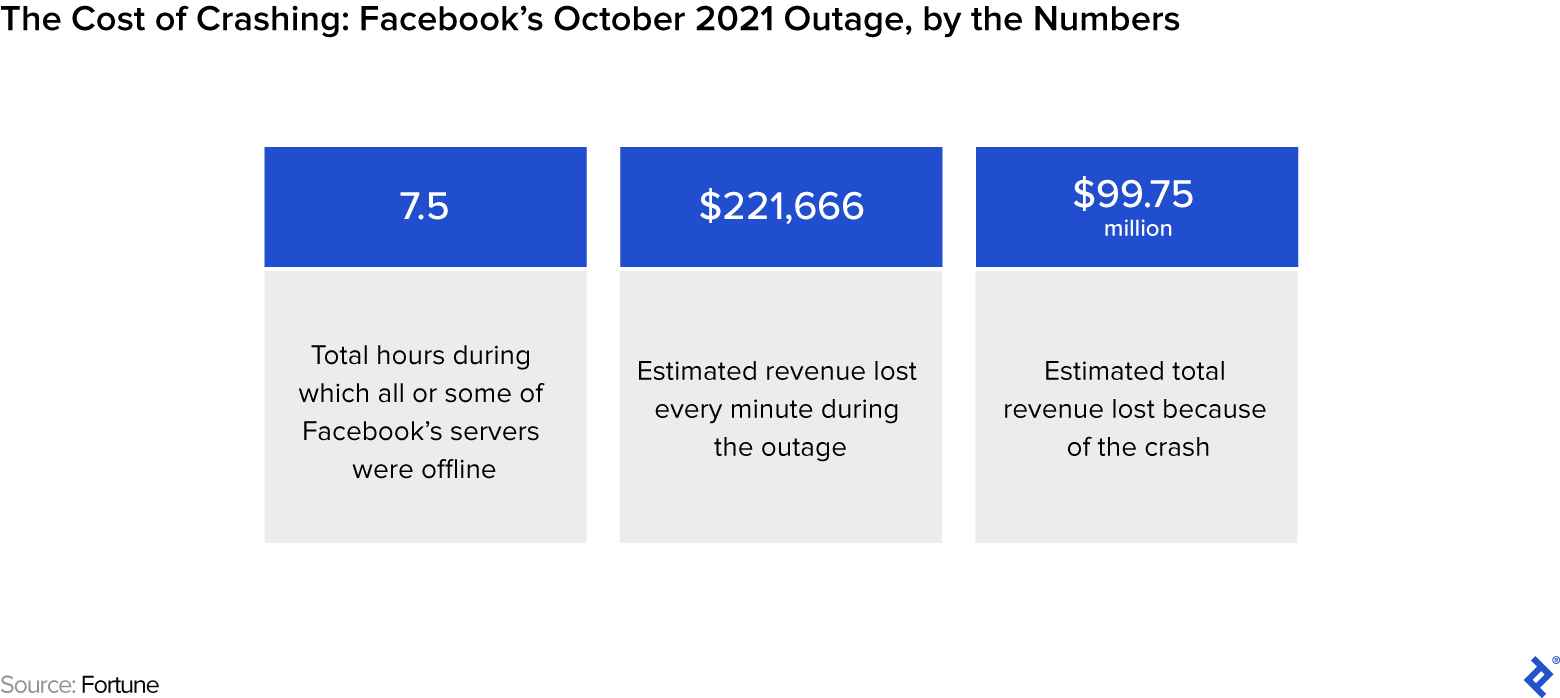

Although generalizing the cost of an outage is difficult due to the variety of businesses included in Uptime’s aforementioned report, the researchers estimate that downtime can cost anywhere from $140,000 per hour on the low end to as much as $540,000 per hour at the higher end. Based on Facebook’s second-quarter earnings, the social network may have lost $99.75 million in revenue due to its outage on October 4, according to estimates by Fortune.

Fortune’s estimates are helpful in understanding the potential effect of the outage on revenue but it’s unclear what the actual losses are, notes data scientist Erik Stettler, Toptal’s Chief Economist and a founding partner of the venture firm Firstrock Capital. “The estimates took a very linear approach. But not all units of time are equally fungible, and Facebook’s revenue is much more complex than saying every second generates the same revenue as every other second,” he says.

What’s more, if traffic spiked after the outage, Facebook may have recouped some of the losses, Stettler says. Conversely, if traffic stayed low, the company may have lost more. What is clear is that a major IT outage has fiscal repercussions for businesses, and preparing for these failures in advance is key. “Any technology is fallible. With risk management it’s not about making sure something never happens but about being ready when it does, and making that preparedness fundamental to your business plan,” he says. “It’s not the 999 days that go right that show your leadership—it’s the one day in a thousand that didn’t go well.”

3 Key Lessons From the Facebook Outage

Security Is Paramount, Even When It Inconveniences Customers

While the Facebook shutdown happened very quickly, it took more than seven hours for all of the company’s servers to come back online, in part because Facebook’s internal network communications were also impaired. The extended timeframe of the outage was also due to stringent security procedures put in place to shield Facebook and its users from hackers and other cybersecurity threats. These policies include a tight bureaucracy with no remote access and only a few individuals who are empowered to access the systems needed to restart the company’s networking operations—in person.

According to Alexander Avanesov, a Toptal developer with more than twenty years of experience building and maintaining secure networks and enterprise platforms, the delay in restarting Facebook’s systems was one thing that actually went right for the company that day.

“Unfortunately, there is no way to have both rapid reaction and complete security,” he says. Facebook has not exposed itself or its customers to a breach and likely will not lose a single user, so in this sense the company did everything right, says Avanesov. “They have more risk in a security breach if they did not install such a complex system.”

This internal negotiation between rapid reaction and security is necessary for any company that depends on networks to connect with its core revenue generators, he says. For smaller companies or businesses in more highly competitive markets, downtime can be a deal breaker with customers. However, a faster response sometimes means a lower security barrier for accessing critical systems.

Custom Workarounds Can Help Your Company Respond More Quickly

Although human error can never be fully eliminated as a risk, there are ways for a smaller-scale operation to reduce the chance that a mistake could sweep away an entire network the way it did at Facebook, says Nurmi. “The best solution I’ve seen for situations like this is to have devices configured with what is essentially a dead man’s switch,” he says. “You activate your changes, but before it gets permanently saved, a timer is set. If the configuration isn’t confirmed in some time period, the configuration gets reverted.”

Even in this circumstance there’s a risk of downtime, but that outage would likely last minutes instead of hours—even if a catastrophic error got through all of the necessary levels of internal review, he says.

Invest time and money in educating your IT team. Having a better-trained staff is the simplest, most cost-effective way to boost your readiness and response to network outages.

There are some additional options for companies looking for security protocols that allow for faster response times to an outage without allowing high-level external access to their infrastructure. Systems that can generate one-time passwords for onsite personnel to avoid the risk of a remote hack of data could prevent the need to wait on the arrival of IT staff with higher levels of server access, says Avanesov. Building these types of workarounds into a network is affordable and not too burdensome to integrate, he says. However, onsite personnel still need the expertise to resolve an error that causes a significant outage.

To Get the Best Outcome, Prepare for the Worst

Running detailed simulations for network problems and other potential catastrophic events is essential for surviving in crisis situations, says Austin Dimmer, a Toptal developer who has built and managed secure networks for the European Commission, Lego, and Publicis Worldwide. Preparedness when responding to a network collapse might be the key to limiting damage and avoiding recurring problems.

The statement made by Facebook on its recovery procedures after the crash shows an important strength within the company’s readiness for operating in a crisis, Dimmer tells Toptal Insights. “They knew exactly what they were doing,” he says. “Bringing it back all online was very risky because of the potential for overload in data centers and even the potential for fires, but because they had practiced the simulations of different disaster situations, the teams at Facebook were quite well prepared to cope with that stressful situation and have the confidence to restore the networks safely and the right way.”

Dimmer points to a client of his that was recently subjected to a ransomware attack. Because Dimmer and the IT team had run through that scenario only a few weeks earlier, he knew the company’s backup data was secure. He recommended that the client not pay the hackers and move on; the client recovered from the breach with no impact to its operations and there was no payday for the cyberthieves.

No matter what security tolerances and disaster preparedness plans are in place, executive leadership must invest time and money in educating company IT teams. Having a better-trained staff is the simplest, most cost-effective way to boost an organization’s readiness and response to network problems, the Uptime Institute found. Human error, a major cause of network outages, is often due to inadequate processes or failure to follow the ones that are already in place.

Network outages are inevitable. To minimize financial and reputational repercussions, company leaders must accept that fact—and prepare for it well in advance. Making intentional decisions about security, readiness, and response helps organizations minimize fallout and pivot from crisis to recovery with confidence.

Toptal Senior Writer Michael McDonald contributed to this report.